Hierarchical Question-Image Co-Attention for Visual Question Answering

Introduction

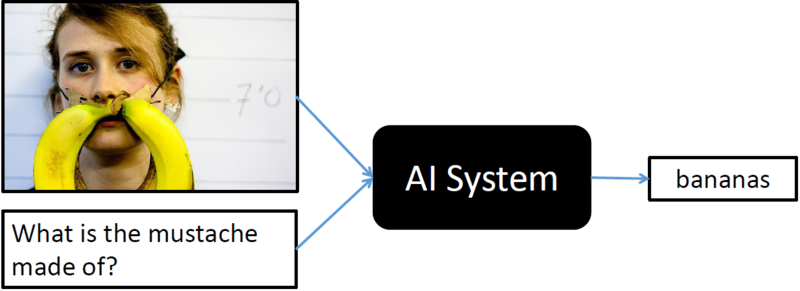

Visual Question Answering (VQA) is a recent problem at the intersection of computer vision and natural language processing that has attracted considerable interest from the deep learning, computer vision, and natural language processing communities. In VQA, an algorithm must answer text-based questions about images in natural language, as illustrated in Figure 1.

Recently, visual-attention-based models have been explored for VQA, where the attention mechanism typically produces a spatial map highlighting the image regions relevant to answering the question. However, to correctly answer a visual question about an image, the machine needs not only to "attend" to the relevant regions of the image but also to "attend" to the relevant parts of the question. In this paper, the authors develop a novel co-attention technique that combines "where to look" (visual attention) with "what words to listen to" (question attention). The co-attention mechanism allows the model to reason jointly about the image and the question, improving on state-of-the-art results.
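
To make the idea of attending to both inputs concrete, here is a minimal sketch in plain Python. It is a simplified illustration and not the authors' formulation: it assumes words and image regions are already embedded as vectors of the same size, uses a plain dot product as the word-region affinity, and takes the maximum affinity as each item's relevance score. The names `co_attention`, `word_feats`, and `region_feats` are hypothetical.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def co_attention(word_feats, region_feats):
    # Assumption: dot-product affinity, with no learned weights.
    # affinity[i][j] is the similarity between word i and region j.
    affinity = [[dot(w, r) for r in region_feats] for w in word_feats]
    # Question attention: score each word by its best-matching region.
    word_scores = [max(row) for row in affinity]
    # Visual attention: score each region by its best-matching word.
    region_scores = [max(col) for col in zip(*affinity)]
    return softmax(word_scores), softmax(region_scores)

# Toy inputs: 2 word vectors and 3 region vectors (made-up values).
words = [[1.0, 0.0], [0.0, 0.1]]
regions = [[0.9, 0.0], [0.0, 0.2], [0.1, 0.1]]
q_attn, v_attn = co_attention(words, regions)
```

Here `q_attn` weights the question words ("what words to listen to") and `v_attn` weights the image regions ("where to look"). Both are derived from the same affinity table, which is what makes the two attentions depend on each other.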