strategies for Training Large Scale Neural Network Language Models

Introduction

Statistical models of natural languages are a key part of many systems today. The most widely used known applications are automatic speech recognition, machine translation, and optical character recognition. In recent years language models, including Recurrent Neural Network and Maximum Entropy-based models have gained a lot of attention and are considered the most successful models. However, the main drawback of these models is their huge computation complexity. This paper introduces a hash-based implementation of a class based maximum entropy model, that allows to easily control the trade-off between memory complexity and computational complexity.

Motivation

As computational complexity is an issue for different types of deep neural network language models, this study briefly presents simple techniques that can be used to reduce computational cost of the training and test phases. The study also mentions that training neural network language models with maximum entropy models leads to better performance in terms of computational complexity. The maximum entropy model can be viewed as a Neural network model with no hidden layer with the input layer directly connected to the output layer.

Model description

The main difference between a neural network language model and Maximum entropy is that the features for the NN LM are automatically learned as a function of the history. Also, the usual features for the ME model are binary, while NN models use continuous-valued features. After the model is trained, similar words have similar low-dimensional representations

Recurrent Neural Network Models

The standard neural network language model has a very similar form to the maximum entropy model. The main difference is that the features for this model are automatically learned as a function of the history. Also, the usual features for the ME model are binary, while NN models use continuous-valued features. The NN LM can be described as:

[math]\displaystyle{ P(w|h)=\frac{e^{\sum_{k=1}^N \lambda_i f_i(s,w)}} {\sum_{w=1} e^{ \sum_{k=1}^N\lambda_i f_i(s,w)}} }[/math]

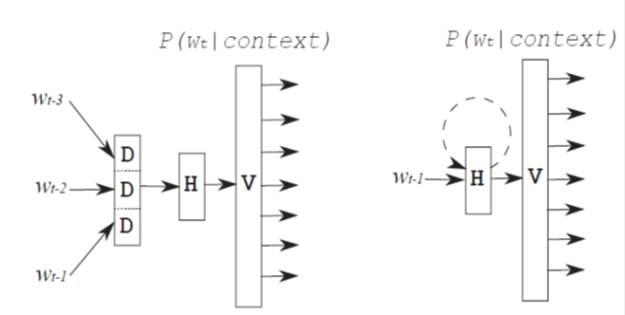

where f is a set of feature, λ is a set of weights, and s is a state of the hidden layer. For the feedforward NN LM architecture, the state of the hidden layer depends on a projection layer, that is formed as a projection of N − 1 recent words into low-dimensional space. After the model is trained, similar words have similar low-dimensional representations. Alternatively, the state of hidden layer can depend on the most recent word and the state in the previous time step. Thus, the time is not represented explicitly. This recurrence allows the hidden layer to represent low-dimensional representation of the entire history (or in other words, it provides the model with a memory). The architecture is called the Recurrent neural network based language model (RNN LM)<ref name=MiT1> Mikolov, Tomas, et al "Extensions of recurrent neural network language model." in Acoustics, Speech and Signal Processing (ICASSP), (2011). </ref> <ref name=MiT2> Mikolov, Tomas, et al "“Empirical evaluation and combination of advanced language modeling techniques" in Proceedings of Interspeech, (2010). </ref>.

Feedforward neural network 4-gram model (on the left) and Recurrent neural network language model (on the right)

Maximum Entropy model

A maximum entropy model has the following form:

[math]\displaystyle{ P(w|h)=\frac{e\sum_{k=1}^N \lambda_i f_i(h,w)} {\sum_{w=1} e \sum_{k=1}^N\lambda_i f_i(h,w)} }[/math]

where h is a history, f is the the set of features, which in maximum entropy case are n grams. The choice of features is usually done manually, and significantly affects the overall performance of the model. Training the maximum entropy model consists of learning the set of weights λ.

Computational complexity

The training time of N-gram neural network language model is proportional to:

[math]\displaystyle{ \,I*W*((N-1) *D*H+H*V) }[/math]

where I is the number of training epochs before convergence is achieved, W is the number of tokens in the training set, N is the N-gram order, D is the dimensionality of words in the low-dimensional space, H is size of the hidden layer and V is the size of the vocabulary.

The recurrent NN LM has computational complexity as:

[math]\displaystyle{ \,I*W*(H*H+H*V) }[/math]

It can be seen that by increasing order N, the complexity of the feedforward architecture increases linearly, while it remains constant for the recurrent one.

The computational complexity in the maximum entropy model is also described as follows:

[math]\displaystyle{ \,I*W*(N*V) }[/math]

The simple techniques used in the present study to reduce the computational complexity are:

A. Reduction of training epochs

Training is usually performed by stochastic gradient descent, and takes 10-50 training epocs to converge. In this study, it has been demonstrated that good performance can be achieved while performing as few as 7 training epochs instead of using thousands of epochs. This is achieved by sorting the training data by complexity.

B. Reduction of number of training tokens

In a vast majority of cases, NN LMs for LVCSR tasks are trained on 5-30M tokens. Although the subsampling trick can be used to claim that the neural network model has seen all training data at least once, simple subsampling techniques lead to severe performance degradation, against a model that is trained on all data

In this study, NN LMs are trained only on a small part of the data (which are in-domain corpora) plus some randomly subsampled part of out-of-domain data.

C. Reduction of vocabulary

One technique is to compute probability distribution only for the top M words in the neural network model and for the rest of the words use backoff n-gram probabilities. The list of top M words is then called a shortlist. However, it was shown in that this technique causes severe degradation of performance for small values of M, and even with M = 2000, the complexity of the H × V term is still significant. Goodman’s trick can be used for speeding up the models in terms of vocabulary. Each word from the vocabulary is assigned to a class and only the probability distribution over classes is computed. As the number of classes can be very small (several hundreds), this is a more effective solution than using shortlists, and the performance degradation is smaller.

Another way to reduce H×V is to choose a small value of H. Some techniques with respect to the combination of NN model with other methods are introduced for choosing the proper size of the hidden layer.

E. Parallelization

As the state of the hidden layer depends on the previous state, the recurrent networks are hard to be parallelized. One can parallelize just the computation between hidden and output layers. The other way is to parallelize the whole network by training from multiple points in the training data at the same time. However, parallelization is a highly architecture-specific optimization problem. In the current study, this problem is dealt with algorithmic approaches for reducing computational complexity.

Automatic data selection and sorting

The full training set is divided into 560 equally-sized chunks, and the perplexity on the development data is computed on each chunk. The data chunks with perplexity above 600 are discarded to obtain the reduced sorted training set.

Experiment with large RNN models

By training the RNN model on the reduced sorted dataset and increasing the hidden layer, better results than baseline backoff model are obtained. However, the performance of RNN models is strongly correlated with the size of the hidden layer. Combining the RNN models with baseline 4-gram model and tuning the weights of individual models on the development set leads to quite impressive reduction of WER.

Hash-based implementation of class-based maximum entropy model

The maximum entropy model can be seen in the context of neural network models as a weight matrix that directly connects the input and output layers. In the present study, direct connections are added to the class-based RNN architecture. Direct parameters are used to connect input and output layers, and input and class layers. This model is denoted as RNNME.

Using direct connections leads to problems in memory complexity. To avoid this problem, a hash function is used to map the huge sparse matrix into one dimensional array. Using the underlying method, the achieved perplexity is better than the baseline perplexity of the KN4 model. Even better results are gained after interpolation of both models, and using rescoring experiment.

Conclusions

Some of the contributions and demonstrations in the paper are as follows:

- Constructing of the largest neural-network-based language models ever trained (to date), including 400M tokens, 640 hidden layer neurons, and 84K word vocabulary

- Showing that removing and sorting parts of the training data can reduce perplexity by about 10% and possibly more

- Dropping relative word error rate (WER) to almost 11%

- Showing that an RNN model with direct connections does not need very large hidden layers in order to perform well

References

<references />