Strategies for Training Large Scale Neural Network Language Models

Introduction

Statistical models of natural language are a key part of many systems today. The best-known applications are automatic speech recognition, machine translation, and optical character recognition. In recent years, language models based on recurrent neural networks and on maximum entropy have gained a lot of attention and are considered the most successful models. Their main drawback, however, is high computational complexity.

Motivation

Because computational complexity is an issue for several types of deep neural network language models, this study briefly presents simple techniques that reduce the computational cost of both the training and test phases. The study also reports that training a neural network language model together with a maximum entropy model lowers the computational cost.

Model description

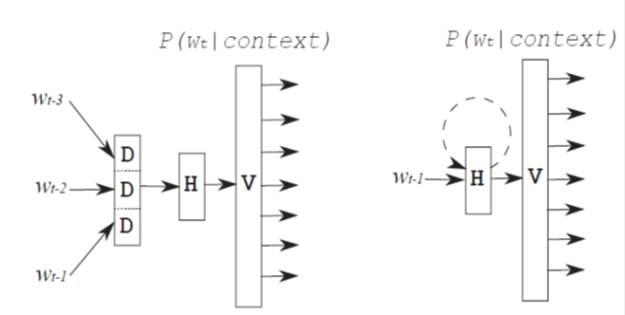

Recurrent Neural Network Models

Features in this model are learned as a function of history. The model is described as:
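A form consistent with the symbols defined next, assuming the usual log-linear (softmax) parameterization over the vocabulary, can be sketched as follows. The feature count $N$, the summation variable $w'$, and the history symbol $h$ are notational assumptions rather than taken from the text.

$$
P(w \mid h) = \frac{\exp\left(\sum_{i=1}^{N} \lambda_i f_i(s, w)\right)}{\sum_{w'} \exp\left(\sum_{i=1}^{N} \lambda_i f_i(s, w')\right)}
$$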

where f is a set of features, λ is a set of weights, and s is the state of the hidden layer. The state of the hidden layer depends on the most recent word and on the state at the previous time step. This recurrence allows the hidden layer to hold a low-dimensional representation of the entire history.
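The recurrence described above can be illustrated with a minimal NumPy sketch. It assumes a simple Elman-style network with a sigmoid hidden layer and a softmax output. The names `U`, `W`, `V`, `rnn_step`, and the toy sizes are hypothetical and chosen only for illustration; they are not taken from the study.

```python
import numpy as np


def sigmoid(x):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    """Numerically stable softmax over a 1-D vector."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def rnn_step(word_id, s_prev, U, W, V):
    """One time step of an assumed Elman-style recurrent language model.

    word_id : index of the most recent word
    s_prev  : hidden state from the previous time step
    U, W, V : input, recurrent, and output weight matrices (hypothetical names)
    """
    # U[:, word_id] equals U multiplied by the one-hot vector of the word.
    s = sigmoid(U[:, word_id] + W @ s_prev)
    # Distribution over the next word, computed from the hidden state only.
    p = softmax(V @ s)
    return s, p


# Toy sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
vocab_size, hidden_size = 10, 4
U = rng.normal(scale=0.1, size=(hidden_size, vocab_size))
W = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
V = rng.normal(scale=0.1, size=(vocab_size, hidden_size))

s = np.zeros(hidden_size)
for word_id in [3, 1, 4]:
    # The state s carries a compressed summary of every word seen so far.
    s, p = rnn_step(word_id, s, U, W, V)
```

In this sketch the hidden state `s` stands in for the learned features and the rows of `V` stand in for the weights λ. Because each new state is computed from the previous one, the final `s` depends on the whole word sequence even though its size stays fixed.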