stat940W25

Notes on Exercises

Exercises are numbered using a two-part system, where the first number represents the lecture number and the second number represents the exercise number. For example:

- 1.1 refers to the first exercise in Lecture 1.

- 2.3 refers to the third exercise in Lecture 2.

Students are encouraged to complete these exercises as they follow the lecture content to deepen their understanding.

Exercise 1.1

Level: ** (Moderate)

Exercise Types: Novel

Each exercise you contribute should fall into one of the following categories:

- Novel: Preferred – An original exercise created by you.

- Modified: Valued – An exercise adapted or significantly altered from an existing source.

- Copied: Permissible – An exercise reproduced exactly as it appears in the source.

References: Source (e.g., a book or other resource; for a webpage, include its URL), Chapter, Page Number.

Question

Prove that the Perceptron Learning Algorithm converges in a finite number of steps if the dataset is linearly separable.

Hint: Assume that the dataset

Solution

Step 1: Linear Separability Assumption

If the dataset is linearly separable, there exists a weight vector

Step 2: Perceptron Update Rule

The Perceptron algorithm updates the weight vector

- Initialize

- For each misclassified point

Define the margin

Step 3: Bounding the Number of Updates

Let

Growth of

After

Lower Bound on

Let

Combining the Results

The Cauchy-Schwarz inequality gives:

Step 4: Conclusion

The Perceptron Learning Algorithm converges after at most
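As a rough empirical check of the convergence claim, the sketch below trains a perceptron on a small synthetic separable dataset and compares the number of updates with the classical bound (R/γ)². The symbols R (largest input norm) and γ (margin of a unit-norm separator), the toy data, and the function name `perceptron_train` are assumptions made for illustration, since the formulas of the solution are not reproduced in the text above.

```python
import numpy as np

def perceptron_train(X, y, max_epochs=1000):
    """Perceptron through the origin; labels y must be in {-1, +1}.
    Returns the final weight vector and the number of updates."""
    w = np.zeros(X.shape[1])
    updates = 0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if yi * np.dot(w, xi) <= 0:  # misclassified or on the boundary
                w += yi * xi             # perceptron update
                updates += 1
                mistakes += 1
        if mistakes == 0:                # a full pass with no mistakes: converged
            break
    return w, updates

# Synthetic separable data (illustrative): keep only points with margin > 0.1
rng = np.random.default_rng(0)
w_star = np.array([1.0, -1.0]) / np.sqrt(2.0)   # unit-norm separator
X = rng.uniform(-1, 1, size=(200, 2))
margins = X @ w_star
keep = np.abs(margins) > 0.1
X, y = X[keep], np.sign(margins[keep])

w, updates = perceptron_train(X, y)
R = np.max(np.linalg.norm(X, axis=1))           # largest input norm
gamma = np.min(y * (X @ w_star))                # margin achieved by w_star
bound = (R / gamma) ** 2
print(f"updates = {updates}, bound = {bound:.1f}")
```

The number of updates should never exceed the bound, whatever order the points are visited in.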

Exercise 1.2

Level: * (Easy)

Exercise Types: Modified

References: Simon J.D. Prince. Understanding Deep Learning. 2024

This problem generalizes Problem 4.10 in this textbook to

Question

(a) Consider a deep neural network with a single input, a single output, and

(b) Now, generalize the problem: if the number of inputs is

Solution

(a) Total number of parameters when there is a single input and output:

For the first layer, the input size is

Number of parameters:

For hidden layers

Number of parameters for all

For the output layer, the number of weights is

Number of parameters:

Therefore, the total number of parameters is

(b) Total number of parameters for

In this case, the number of parameters for the first layer becomes

Therefore, in total, the number of parameters is
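The layer-by-layer count above can be checked with a short script. This is a minimal sketch that assumes a fully connected network in which every hidden layer has the same width and every unit has a bias; the function name `count_parameters` and its argument names are hypothetical.

```python
def count_parameters(n_inputs, n_outputs, n_hidden_layers, width):
    """Count weights and biases of a fully connected network with
    `n_hidden_layers` hidden layers of `width` units each."""
    sizes = [n_inputs] + [width] * n_hidden_layers + [n_outputs]
    # Each layer has fan_in * fan_out weights plus fan_out biases.
    return sum((fan_in + 1) * fan_out
               for fan_in, fan_out in zip(sizes[:-1], sizes[1:]))

# Single input, single output, 10 hidden layers of 10 units each
print(count_parameters(1, 1, 10, 10))
```

Changing `n_inputs` and `n_outputs` covers the generalized case in part (b).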

Exercise 1.3

Level: * (Easy)

Exercise Types: Modified

References: Simon J.D. Prince. Understanding Deep Learning. MIT Press, 2023

This problem is modified from the background mathematics problem chap01, Question 1.

Question

A single linear equation with three inputs associates a value

We add an inverse problem: If

Solution

A single linear equation with three inputs is of the form:

where

We can define the code as follows:

def linear_function_3D(x1, x2, x3, beta, omega1, omega2, omega3):
    y = beta + omega1 * x1 + omega2 * x2 + omega3 * x3
    return y

Given

Thus,

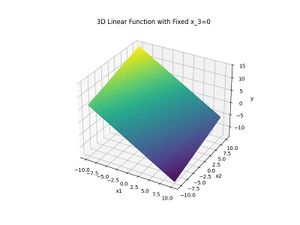

To visualize, we can fix

Here is the code:

import numpy as np
import matplotlib.pyplot as plt

# Generate grid for x1 and x2, fix x3 = 0
x1 = np.linspace(-10, 10, 100)
x2 = np.linspace(-10, 10, 100)
x1, x2 = np.meshgrid(x1, x2)
x3 = 0

# Define coefficients
beta = 0.5
omega1 = -1.0
omega2 = 0.4
omega3 = -0.3

# Compute y-values
y = linear_function_3D(x1, x2, x3, beta, omega1, omega2, omega3)

# Visualization
fig = plt.figure(figsize=(8, 6))
ax = fig.add_subplot(111, projection='3d')
ax.plot_surface(x1, x2, y, cmap='viridis')
ax.set_xlabel('x1')
ax.set_ylabel('x2')
ax.set_zlabel('y')
plt.title('3D Linear Function with Fixed x3=0')
plt.show()

The plot is shown below:

For the inverse problem, given

The problem is solvable if

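import numpy as np

y = 10.0
beta = 1.0
omega = [2, -1, 0.5]
rhs = y - beta

# One equation with three unknowns has infinitely many solutions;
# least squares returns the minimum-norm one
x_vec = np.linalg.lstsq(np.array([omega]), [rhs], rcond=None)[0]
print(f"Solution for x: {x_vec}")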

Exercise 1.4

Level: * (Easy)

Exercise Types: Novel

Question

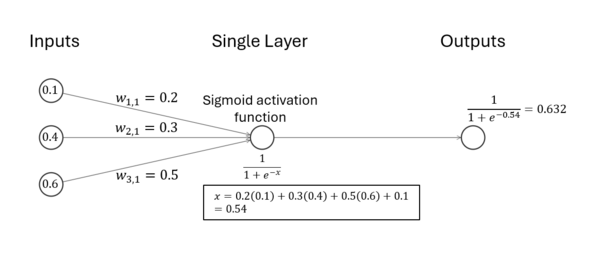

Consider a feedforward model. Compute the output of a single-layer neural network with 3 inputs and 1 output for each of the activation functions listed below.

Assuming:

- Input vector:

- weights:

- Bias:

- a). Sigmoid activation function:

- b). ReLU activation function:

- c). Tanh activation function:

Solution

1. Compute the weighted sum:

Breaking this down step-by-step:

2.

a). Apply the sigmoid activation function:

Substituting

Thus, the final output is 0.632.

b). Similarly, apply the ReLU activation function:

Substituting

c). Finally, apply the Tanh activation function:

Substituting

Exercise 1.5

Level: * (Easy)

Exercise Types: Novel

Question

1. 2012: ________'s ImageNet victory brings mainstream attention.

2. 2016: Google's ________ uses deep learning to defeat a Go world champion.

3. 2017: ________ architecture revolutionizes Natural Language Processing.

Solution

1. AlexNet

2. AlphaGo

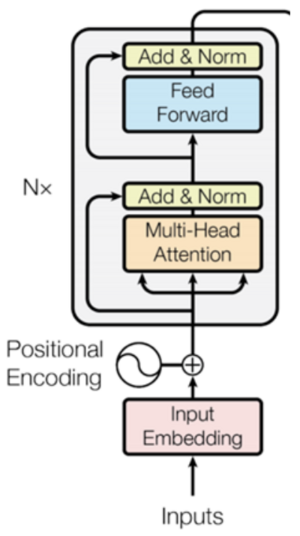

3. Transformer

Key Milestones in Deep Learning

- 2006: Deep Belief Networks – The modern era of deep learning begins.

- 2012: AlexNet's ImageNet victory brings mainstream attention.

- 2014-2015: Introduction of Generative Adversarial Networks (GANs).

- 2016: Google's AlphaGo uses deep learning to defeat a Go world champion.

- 2017: Transformer architecture revolutionizes Natural Language Processing.

- 2018-2019: BERT and GPT-2 set new benchmarks in NLP.

- 2020: GPT-3 demonstrates advanced language understanding and generation.

- 2021: AlphaFold 2 achieves breakthroughs in protein structure prediction.

- 2021-2022: Diffusion Models (e.g., DALL-E 2, Stable Diffusion) achieve state-of-the-art in image and video generation.

- 2022: ChatGPT popularizes conversational AI and large language models (LLMs).

Exercise 1.6

Level: * (Easy)

Exercise Type: Novel

Question

a) What are some common examples of first-order search strategies in neural network optimization, and why are first-order methods generally preferred over second-order methods?

b) What is the difference between a deep neural network and a shallow neural network, and how many hidden layers does each typically have?

c) Prove that a perceptron cannot converge for the XOR problem.

Solution

a)

Common examples of first-order search strategies in neural network optimization include Gradient Descent (GD), Stochastic Gradient Descent (SGD), Momentum, and Adam. These methods rely on gradients (first derivatives) of the loss function to update model parameters, making them computationally efficient and scalable. First-order methods are preferred due to their efficiency, scalability to large datasets, and lower memory requirements compared to second-order methods. While second-order methods can converge faster, first-order methods like Adam balance performance and resource usage well, especially in large-scale networks.

b)

A deep neural network typically has more than 2 hidden layers, allowing it to learn complex, abstract features at each layer. A shallow neural network usually has 1 or 2 hidden layers. Therefore, networks with more than 2 hidden layers are considered deep, while those with fewer layers are considered shallow.

c)

Step 1: XOR Dataset

The XOR problem has the following data points and labels:

| x₁ | x₂ | y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

Step 2: Perceptron Decision Boundary

The perceptron decision boundary is defined as:

z = w₁x₁ + w₂x₂ + b = 0

A point is classified as:

- y = 1 if z > 0

- y = 0 if z < 0

For the XOR dataset, we derive inequalities for each data point.

Step 3: Derive Inequalities

1. For (x₁, x₂) = (0, 0), y = 0:

b < 0

2. For (x₁, x₂) = (0, 1), y = 1:

w₂ + b > 0

3. For (x₁, x₂) = (1, 0), y = 1:

w₁ + b > 0

4. For (x₁, x₂) = (1, 1), y = 0:

w₁ + w₂ + b < 0

Step 4: Attempt to Solve

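From the inequalities:

1. b < 0

2. w₂ + b > 0

3. w₁ + b > 0

4. w₁ + w₂ + b < 0

Now, add inequalities (2) and (3):

w₁ + w₂ + 2b > 0

But compare this with inequality (4):

w₁ + w₂ + b < 0

This leads to a contradiction because b < 0 by inequality (1), so w₁ + w₂ + b > w₁ + w₂ + 2b > 0, which contradicts inequality (4).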

Therefore, the XOR dataset is not linearly separable, and the perceptron cannot converge for the XOR problem.

Exercise 1.7

Level: * (Easy)

Exercise Type: Novel

Question

The sigmoid activation function is defined as:

(a) Derive the derivative of

(b) Use this property to explain why sigmoid activation is suitable for modeling probabilities in binary classification tasks.

Solution

(a) Derivative:

Starting with

By noting that

(b) Why sigmoid for probabilities:

The sigmoid function maps any real

A closely related function is the softmax, which generalizes the same probabilistic interpretation to multi-class settings. For two classes, softmax is essentially the same as the sigmoid function, so it can also be suitable for binary classification problems.
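The derivative identity from part (a), σ'(x) = σ(x)(1 − σ(x)), can be sanity-checked numerically. The sketch below assumes the standard definition σ(x) = 1 / (1 + e^(−x)) and compares the closed form with a central finite difference; the function names and the grid of test points are illustrative choices.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    # Closed-form derivative: sigma(x) * (1 - sigma(x))
    s = sigmoid(x)
    return s * (1.0 - s)

x = np.linspace(-5.0, 5.0, 11)
h = 1e-5
# Central finite-difference approximation of the derivative
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
max_error = np.max(np.abs(numeric - sigmoid_grad(x)))
print(f"largest gap between numeric and closed-form derivative: {max_error:.2e}")
```

The derivative peaks at x = 0, where it equals 0.25, and shrinks toward zero for large |x|.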

Exercise 1.8

Level: * (Easy)

Exercise Types: Novel

Question

In classification, it is possible to minimize the number of misclassifications directly by using:

where

(a) Why is this approach not commonly used in practice?

(b) Name and give formulas for two differentiable loss functions commonly employed in practice for binary classification tasks, explaining why they are more popular.

Solution

(a): The expression

(b): Two alternative loss functions:

Hinge Loss:

The hinge loss is often used in Support Vector Machines (SVMs) and works well when the data is linearly separable.

Logistic (Cross-Entropy) Loss:

The logistic loss (or cross-entropy loss) is commonly used in logistic regression and neural networks. It is differentiable so it works well for gradient-based optimization methods.
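For concreteness, here is a minimal sketch of both losses. It assumes labels in {-1, +1} and a raw real-valued model score; the solution's own formulas may use a different label convention such as {0, 1}, and the function names are hypothetical.

```python
import numpy as np

def hinge_loss(y, score):
    """max(0, 1 - y * score) for a label y in {-1, +1}."""
    return np.maximum(0.0, 1.0 - y * score)

def logistic_loss(y, score):
    """log(1 + exp(-y * score)), computed in a numerically stable way."""
    return np.logaddexp(0.0, -y * score)

scores = np.array([-2.0, 0.0, 0.5, 3.0])
print(hinge_loss(1, scores))     # zero once the margin y * score reaches 1
print(logistic_loss(1, scores))  # strictly positive, decreasing in the margin
```

Unlike the misclassification count, both losses change gradually with the score, so a gradient-based optimizer gets a useful signal.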

Exercise 1.9

Level: ** (Easy)

Exercise Types: Novel

Question

How are neural networks modeled? Use an example to explain it clearly.

Solution

Neural networks are modeled on biological neurons. A neural network consists of layers of interconnected neurons where each connection has an associated weight.

The input layer receives the data features, and each neuron corresponds to one feature from the dataset.

The hidden layer consists of multiple neurons that transform the input data into intermediate representations using a combination of weights, biases, and activation functions (such as the sigmoid function), which allows the network to learn complex patterns.

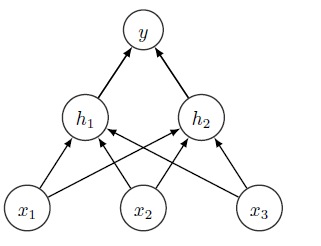

For example, in the lecture note, inputs

The computations yield hidden neuron values of

which are then processed by the output layer to produce the final predictions.

This process demonstrates how neural networks learn and transform input data step by step.

Exercise 1.10

Level: ** (Moderate)

Exercise Types: Novel

Question

Biological neurons in the human brain have the following characteristics:

1. A neuron fires an electrical signal only when its membrane potential exceeds a certain threshold. Otherwise, it remains inactive.

2. Neurons are connected to one another through dendrites (input) and axons (outputs), forming a highly interconnected network.

3. The intensity of the signal passed between neurons depends on the strength of the connection, which can change over time due to learning and adaptation.

Considering the above points, answer the following questions:

Explain how these biological properties of neurons might inspire the design and functionality of nodes in artificial neural networks.

Solution

1. Threshold Behavior: The concept of a neuron firing only when its membrane potential exceeds a threshold is mirrored in neural networks through activation functions. These functions decide whether a node "fires" by producing a significant output.

2. Connectivity: The connections between biological neurons via dendrites and axons inspire the weighted connections in artificial neural networks. Each node receives inputs, processes them, and sends weighted outputs to subsequent nodes, similar to how signals propagate in the brain.

3. Learning and Adaptation: Biological neurons strengthen or weaken their connections based on experience (neuroplasticity). This is similar to how artificial networks adjust weights during training using backpropagation and optimization algorithms. The dynamic modification of weights allows artificial networks to learn from data.

Exercise 1.11

Level: * (Easy)

Exercise Type: Novel

Question

If the pre-activation is 20, what are the outputs of the following activation functions: ReLU, Leaky ReLU, logistic, and hyperbolic tangent (tanh)?

Choose the correct answer:

a) 20, 20, 1, 1

b) 20, 0, 1, 1

c) 20, -20, 1, 1

d) 20, 20, -1, 1

e) 20, -20, 1, -1

Solution

The correct answer is a): 20, 20, 1, 1.

Calculation
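The four values can be verified directly. This is a small sketch; the Leaky ReLU slope `alpha = 0.01` is an assumed value, and it does not affect the result because the pre-activation is positive.

```python
import math

z = 20.0
alpha = 0.01  # assumed Leaky ReLU slope for negative inputs

relu = max(0.0, z)
leaky_relu = z if z > 0 else alpha * z
logistic = 1.0 / (1.0 + math.exp(-z))
tanh = math.tanh(z)

print(relu, leaky_relu, round(logistic, 6), round(tanh, 6))
```

The logistic and tanh outputs are not exactly 1, but both are within about 1e-8 of it, which is why option a) rounds them to 1.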

Exercise 1.12

Level: * (Easy)

Exercise Type: Novel

Question

Imagine a simple feedforward neural network with a single hidden layer. The network structure is as follows:

- All neurons use a linear activation function.

- The input layer has 2 neurons.

- The hidden layer has 2 neurons.

- The output layer has 1 neuron.

- There are no biases in the network.

If the weights from the input layer to the hidden layer are given by:

Calculate the output of the network for the input vector

Hint

- The output of each layer is calculated by multiplying the input of that layer by the layer's weight matrix.

- Use matrix multiplication to compute the outputs step-by-step.

Solution

**Step 1: Calculate Hidden Layer Output**

The input to the hidden layer is the initial input

**Step 2: Calculate Output Layer Output**

The input to the output layer is the output from the hidden layer:

Thus, the output of the network for the input vector

Exercise 1.13

Level: * (Easy)

Exercise Types: Novel

Question

Explain whether this is a classification, regression, or clustering task each time. If the task is either classification or regression, also comment on whether the focus is prediction or explanation.

1. **Stock Market Trends:**

A financial analyst wants to predict the future stock prices of a company based on historical trends, economic indicators, and company performance metrics.

2. **Customer Segmentation:**

A retail company wants to group its customers based on their purchasing behaviour, including transaction frequency, product categories, and total spending, to design targeted marketing campaigns.

3. **Medical Diagnosis:**

A hospital wants to develop a model to determine whether a patient has a specific disease based on symptoms, medical history, and lab test results.

4. **Predicting Car Fuel Efficiency:**

An automotive researcher wants to understand how engine size, weight, and aerodynamics affect a car's fuel efficiency (miles per gallon).

Solution

**1. Stock Market Trends**

- **Task Type:** Regression

- **Focus:** Prediction

- **Reasoning:** Stock prices are continuous numerical values, making this a regression task. The goal is to predict future prices rather than explain past fluctuations.

**2. Customer Segmentation**

- **Task Type:** Clustering

- **Focus:** Not applicable

- **Reasoning:** Customers are grouped based on their purchasing behaviour without predefined labels, making this a clustering task.

**3. Medical Diagnosis**

- **Task Type:** Classification

- **Focus:** Prediction

- **Reasoning:** The disease status is a categorical outcome (Has disease: Yes/No), making this a classification problem. The goal is to predict a diagnosis for future patients.

**4. Predicting Car Fuel Efficiency**

- **Task Type:** Regression

- **Focus:** Explanation

- **Reasoning:** Fuel efficiency (miles per gallon) is a continuous variable. The researcher is interested in understanding how different factors influence efficiency, so the focus is on explanation.

Summary

| Task | Type | Focus | Reasoning |

|---|---|---|---|

| Stock Market Trends | Regression | Prediction | Predict future stock prices (continuous variable). |

| Customer Segmentation | Clustering | — | Group customers based on purchasing behaviour. |

| Medical Diagnosis | Classification | Prediction | Determine if a patient has a disease (Yes/No). |

| Predicting Car Fuel Efficiency | Regression | Explanation | Understand how factors affect fuel efficiency. |

Exercise 1.14

Level: ** (Easy)

Exercise Types: Novel

Question

You are given a set of real-world scenarios. Your task is to identify the most suitable fundamental machine learning approach for each scenario and justify your choice.

**Scenarios:**

1. **Loan Default Prediction:**

A bank wants to predict whether a loan applicant will default on their loan based on their credit history, income, and employment status.

2. **House Price Estimation:**

A real estate company wants to estimate the price of a house based on features such as location, size, and number of bedrooms.

3. **User Grouping for Advertising:**

A social media platform wants to group users with similar interests and online behavior for targeted advertising.

4. **Dimensionality Reduction in Medical Data:**

A medical researcher wants to reduce the number of variables in a dataset containing hundreds of patient health indicators while retaining the most important information.

**Tasks:**

- For each scenario, classify the problem into one of the four fundamental categories: Classification, Regression, Clustering, or Dimensionality Reduction.

- Explain why you selected that category for each scenario.

- Suggest a possible algorithm that could be used to solve each problem.

Solution

**1. Loan Default Prediction**

- **Task Type:** Classification

- **Reasoning:** The target variable (loan default) is categorical (Yes/No), making this a classification problem. The goal is to predict whether an applicant will default based on their financial history.

- **Possible Algorithm:** Logistic Regression, Random Forest, or Gradient Boosting.

**2. House Price Estimation**

- **Task Type:** Regression

- **Reasoning:** House prices are continuous numerical values, making this a regression task. The goal is to estimate a house's price based on features like location and size.

- **Possible Algorithm:** Linear Regression, Decision Trees, or XGBoost.

**3. User Grouping for Advertising**

- **Task Type:** Clustering

- **Reasoning:** The goal is to group users based on their behavior without predefined labels, making this a clustering task.

- **Possible Algorithm:** K-Means, DBSCAN, or Hierarchical Clustering.

**4. Dimensionality Reduction in Medical Data**

- **Task Type:** Dimensionality Reduction

- **Reasoning:** The goal is to reduce the number of variables while preserving essential information, making this a dimensionality reduction task.

- **Possible Algorithm:** Principal Component Analysis (PCA), t-SNE, or Autoencoders.

Exercise 1.15

Level: ** (Easy)

Exercise Types: Novel

Question

Define what machine learning is and how it is different from classical statistics. Provide the three learning methods used in machine learning, briefly define each and give an example of where each of them can be used. Include some common algorithms for each of the learning methods.

Solution

**Machine Learning Definition**

- Machine learning is the ability to teach a computer without explicitly programming it.

- Examples are used to train computers to perform tasks that would be difficult to program.

The main difference between classical statistics and machine learning lies in the size of the data from which they infer information. Classical statistics usually works with small datasets (not enough data), while machine learning usually works with large datasets (too much data).

**Supervised Learning**

Supervised learning is a type of machine learning where the model is trained on a labeled dataset, meaning each training example has input features and a corresponding correct output. The algorithm learns the relationship between inputs and outputs to make predictions on new, unseen data.

Examples: Predicting house prices based on location, size, and other features (Regression). Identifying whether an email is spam or not (Classification).

Common Algorithms: Linear Regression, Logistic Regression, Decision Trees, Random Forest, Support Vector Machines (SVM), Neural Networks.

**Unsupervised Learning**

Unsupervised learning involves training a model on data without labeled outputs. The algorithm attempts to discover patterns, structures, or relationships within the data.

Examples: Grouping customers with similar purchasing behaviors for targeted marketing (Clustering). Identifying important features in a high-dimensional dataset (Dimensionality Reduction).

Common Algorithms: K-Means, Hierarchical Clustering, DBSCAN (Clustering). Principal Component Analysis (PCA), t-SNE, Autoencoders (Dimensionality Reduction).

**Reinforcement Learning**

Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by performing actions in an environment to maximize cumulative rewards. The agent interacts with the environment, receives feedback in the form of rewards or penalties, and improves its strategy over time.

Examples: Training a robot to walk by rewarding successful movements. Teaching an AI to play chess or video games by rewarding wins and penalizing losses.

Common Algorithms: Q-Learning, Deep Q Networks (DQN), Policy Gradient Methods, Proximal Policy Optimization (PPO).

Summary

| Aspect | Supervised Learning | Unsupervised Learning | Reinforcement Learning |

|---|---|---|---|

| Definition | Learning from labeled data where inputs are paired with outputs. | Learning patterns or structures from unlabeled data. | Learning by interacting with an environment to maximize cumulative rewards. |

| Key Characteristics | Trains on known inputs and outputs to predict outcomes for unseen data. | No predefined labels; discovers hidden structures in the data. | Agent learns through trial and error by receiving rewards or penalties for its actions. |

| Examples | Predicting house prices (Regression); classifying emails as spam or not (Classification). | Grouping customers by behavior (Clustering); reducing variables in large datasets (Dimensionality Reduction). | Training robots to walk; teaching AI to play chess or video games. |

| Common Algorithms | Linear Regression, Logistic Regression, Decision Trees, Random Forest, SVM, Neural Networks | K-Means, Hierarchical Clustering, PCA, t-SNE, Autoencoders | Q-Learning, Deep Q Networks (DQN), Policy Gradient Methods, Proximal Policy Optimization (PPO) |

Exercise 1.16

Level: * (Easy)

Exercise Types: Novel

Question

Categorize each of these machine learning scenarios into supervised learning, unsupervised learning, or reinforcement learning. Justify your reasoning for each case.

(a) A neural network is trained to classify handwritten digits using the MNIST dataset, which contains 60 000 images of handwritten digits, along with the correct answer for each image.

(b) A robot is programmed to learn how to play a video game. It does not have access to the game’s rules, but it can observe its current score after each action. Over time, it learns to play better by maximizing its score.

(c) A deep learning model is designed to segment medical images into different sections corresponding to specific organs. The training data consists of medical scans that have been annotated by experts to mark the boundaries of the organs.

(d) A machine learning model is given 100 000 astronomical images of unknown stars and galaxies. Using dimensionality reduction techniques, it groups similar-looking objects based on their features, such as size and shape.

Solution

(a) Supervised learning: The model is trained with labeled data, where each image has a corresponding digit label.

(b) Reinforcement learning: The model learns by interacting with an environment and receiving feedback in the form of rewards or penalties. It explores different actions to maximize cumulative rewards over time.

(c) Supervised learning: The model uses labeled data where professionals annotated each region of the image.

(d) Unsupervised learning: The model works with unlabeled data to find patterns and group similar objects.

Exercise 1.17

Level: * (Easy)

Exercise Types: Novel

Question

How does the introduction of ReLU as an activation function address the vanishing gradient problem observed in early deep learning models using sigmoid or tanh functions?

Solution

The vanishing gradient problem occurs when activation functions like sigmoid or tanh compress their inputs into small ranges, resulting in gradients that become very small during backpropagation. This hinders learning, particularly in deeper networks.

The ReLU (Rectified Linear Unit), defined as $\text{ReLU}(x) = \max(0, x)$, addresses this problem in several ways:

(a) Non-Saturating Gradients: For positive input values, ReLU's gradient remains constant (equal to 1), preventing gradients from vanishing.

(b) Efficient Computation: The simplicity of the ReLU function makes it computationally faster than the sigmoid or tanh functions, which involve more complex exponential calculations.

(c) Sparse Activations: ReLU outputs zero for negative inputs, leading to sparse activations, which can improve computational efficiency and reduce overfitting.

However, ReLU can experience the "dying ReLU" problem, where neurons output zero for all inputs and effectively become inactive. Variants like Leaky ReLU and Parametric ReLU address this by allowing small, non-zero gradients for negative inputs, ensuring neurons remain active.
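To make the vanishing-gradient argument concrete, the sketch below multiplies the activation derivatives along a chain of 20 layers. It is a deliberately simplified illustration: it ignores the weight matrices, and it assumes the same positive pre-activation (0.5) at every layer, so it shows only the contribution of the activation function itself.

```python
import numpy as np

def sigmoid_grad(z):
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)          # never larger than 0.25

def relu_grad(z):
    return (z > 0).astype(float)  # 1 for positive inputs, 0 otherwise

depth = 20
z = np.full(depth, 0.5)           # assumed pre-activation at each layer

sigmoid_chain = np.prod(sigmoid_grad(z))  # product of 20 factors below 0.25
relu_chain = np.prod(relu_grad(z))        # product of 20 ones

print(f"sigmoid chain: {sigmoid_chain:.3e}")
print(f"ReLU chain:    {relu_chain:.1f}")
```

With a negative pre-activation the ReLU chain would be exactly zero instead, which is the "dying ReLU" situation described above.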

Exercise 1.18

Level: * (Easy)

Exercise Types: Novel

Question

What is the general concept of text generation in deep learning, and how does it work?

Solution

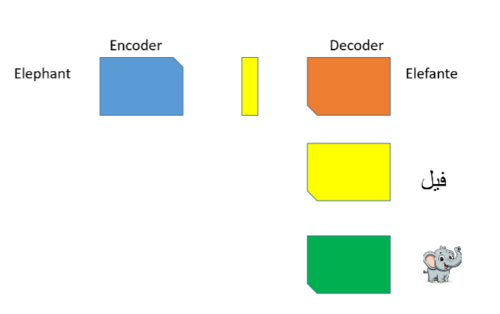

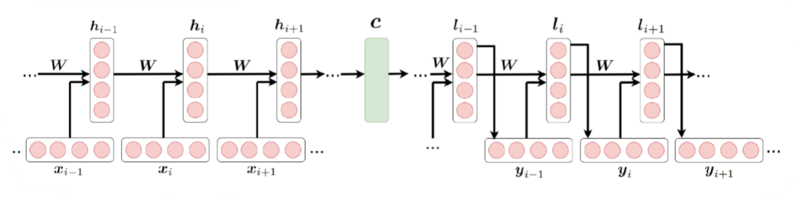

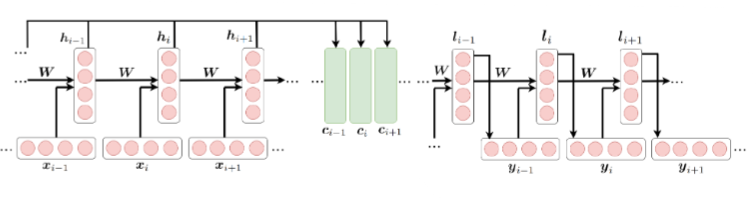

Text generation in deep learning refers to the process of automatically creating coherent and contextually relevant text based on input data or a learned language model. The goal is to produce text that mimics human-written content, maintaining grammatical structure, logical flow, and contextual relevance.

There are five steps.

1. Training on a Language Corpus: A deep learning model, such as a Recurrent Neural Network (RNN), Long Short-Term Memory (LSTM), or Transformer, is trained on a large dataset of text. During training, the model learns patterns, relationships between words, and context within sentences and across paragraphs.

2. Tokenization and Embeddings: Input text is broken into smaller units, such as words or subwords (tokens). These tokens are converted into numerical vectors (embeddings) that capture semantic and syntactic relationships.

3. The model predicts the probability of the next word or token in a sequence based on the context provided by the preceding words. It uses conditional probability, such as:

4. Once the model generates probabilities for the next token, decoding strategies are used to construct text.

5. Generated text is evaluated for coherence, fluency, and relevance. Techniques such as fine-tuning on specific domains or datasets improve the model's performance for targeted applications.
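Steps 3 and 4 can be illustrated with a toy example. The sketch below is not a trained language model: the vocabulary and the logits are hypothetical values standing in for a model's output, and it only shows how scores become next-token probabilities and how greedy decoding and temperature sampling pick a token.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Convert raw scores into a probability distribution."""
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max()               # subtract the max for numerical stability
    p = np.exp(z)
    return p / p.sum()

vocab = ["the", "cat", "sat", "mat"]   # hypothetical vocabulary
logits = [2.0, 0.5, 1.0, -1.0]         # hypothetical next-token scores

probs = softmax(logits)

# Greedy decoding: always take the most probable token
greedy_token = vocab[int(np.argmax(probs))]

# Temperature sampling: draw a token at random from a sharpened distribution
rng = np.random.default_rng(0)
sampled_index = rng.choice(len(vocab), p=softmax(logits, temperature=0.8))
sampled_token = vocab[sampled_index]

print(greedy_token, sampled_token)
```

Lower temperatures push sampling toward the greedy choice, while higher temperatures make the output more varied.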

Exercise 1.19

Level: * (Easy)

Exercise Types: Novel

Question

Supervised learning and unsupervised learning are two of the main types of machine learning, and they differ mainly in how the models are trained and the type of data used. Briefly state their differences.

Solution

Supervised Learning:

Data: Requires labeled data.

Goal: The model learns a mapping from inputs to the correct output.

Example Tasks: Classification and regression.

Training Process: The model is provided with both input data and corresponding labels during training, allowing it to learn from these examples to make predictions on new, unseen data.

Common Algorithms: Linear regression, decision trees, random forests, support vector machines, and neural networks.

Unsupervised Learning:

Data: Does not require labeled data.

Goal: The model tries to find hidden patterns or structure in the data.

Example Tasks: Clustering and dimensionality reduction.

Training Process: The model analyzes the input data without being told the correct answer, and it organizes or structures the data in meaningful ways.

Common Algorithms: K-means clustering, hierarchical clustering, principal component analysis (PCA), and autoencoders.

Exercise 1.20

Level: * (Easy)

Exercise Types: Novel

Question

It was mentioned in lecture that the step function had previously been used as an activation function, but we now commonly use the sigmoid function as an activation function. Highlight the key differences between these functions.

Solution

- The step function takes a single real-valued input and outputs 0 if the input is negative and 1 if it is zero or positive.

- The sigmoid activation function is an S-shaped curve whose output lies strictly between 0 and 1.

- The equation for the sigmoid function is $\sigma(x) = \frac{1}{1 + e^{-x}}$.

- The step activation function produces only two output values, 0 or 1, whereas the sigmoid activation function produces a continuous range of values between 0 and 1.

- The smoothness of the sigmoid activation function makes it more suitable for gradient-based learning in neural networks, allowing for more efficient backpropagation.
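The contrast between the two functions can be seen on a few sample inputs. This is a minimal sketch using the standard definitions; the function names and the sample points are illustrative.

```python
import numpy as np

def step(z):
    # 0 for negative inputs, 1 for zero or positive inputs
    return np.where(z >= 0, 1.0, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.array([-2.0, 0.0, 2.0])
step_out = step(z)        # jumps from 0 to 1 at z = 0
sigmoid_out = sigmoid(z)  # changes smoothly, staying strictly inside (0, 1)

print(step_out)
print(sigmoid_out)
```

Because the step function is flat everywhere except at the jump, its gradient carries no information for learning, whereas the sigmoid has a non-zero gradient at every input.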

Exercise 1.21

Level: * (Easy)

Exercise Types: Novel

Question

Consider a linear regression model where we aim to estimate the weight vector

- Compute the gradient: Derive the gradient of

- Find the optimal

- Interpretation: What is the significance of the solution you obtained in terms of ordinary least squares (OLS)?

Provide your answers with clear derivations and explanations.

Solution

To find the optimal weight vector

Setting the gradient to zero and solving for

These are known as the normal equations, since at the optimal solution,

The corresponding solution

The matrix

To ensure the solution is unique, we examine the Hessian matrix:

If

Since the Hessian is positive definite in this case, the least squares objective has a unique global minimum.
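The derivation can be checked numerically on synthetic data. The sketch below assumes the usual notation (design matrix `X`, targets `y`, weights `w`) and the squared-error objective; the data are randomly generated for illustration. It solves the normal equations, compares the result with NumPy's least-squares routine, and confirms that the residual is orthogonal to the columns of `X`.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))                   # synthetic design matrix
w_true = np.array([1.5, -2.0, 0.5])            # weights used to generate y
y = X @ w_true + 0.01 * rng.normal(size=50)    # targets with small noise

# Solve the normal equations (X^T X) w = X^T y without forming an inverse
w_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Reference solution from NumPy's least-squares solver
w_lstsq = np.linalg.lstsq(X, y, rcond=None)[0]

residual = y - X @ w_hat
hessian_eigs = np.linalg.eigvalsh(X.T @ X)     # all positive if X has full column rank

print(w_hat)
print(X.T @ residual)   # numerically zero: residual is orthogonal to the columns of X
```

Using `np.linalg.solve` instead of explicitly inverting the matrix is a common design choice for numerical stability.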

Exercise 1.22

Level: * (Easy)

Exercise Types: Novel

Question

Which of the following best highlights the key difference between Machine Learning and Deep Learning?

A. Machine Learning is only suitable for small datasets, while Deep Learning can handle datasets of any size.

B. Machine Learning is restricted to regression and classification, whereas Deep Learning is used for image and text processing.

C. Deep Learning can model without any data, while Machine Learning requires large datasets.

D. Machine Learning relies on manually extracted features, while Deep Learning can automatically learn feature representations.

Solution

Answer: D

Explanation: Machine Learning algorithms often require manual feature engineering, whereas Deep Learning can automatically extract features from data through multi-layered neural networks. This is a significant distinction between the two.

Machine learning techniques include decision trees, SVMs, XGBoost, etc. These techniques typically work better on smaller datasets because of their simpler structure, and they often struggle to match the flexibility and scalability of deep neural networks as the data becomes more complex. Deep neural networks work well on large datasets with more complex structure, such as images or sentences (as in LLMs). They often need more data in order to learn effectively and avoid overfitting. Their complex architecture allows them to learn feature representations from raw data, without the need for manual feature engineering.

Exercise 1.23

Level: * (Easy)

Exercise Types: Novel

Question

What are the pros and cons of supervised learning and unsupervised learning?

Solution

Supervised learning learns from labelled data. Its benefits include a clear objective and direct evaluation through performance metrics such as MSE, which compare model predictions with known labels. Its drawbacks include the time-consuming work of obtaining a large labelled dataset and the risk of overfitting. Unsupervised learning detects patterns or structure in unlabelled data. Its benefits include opportunities for data preprocessing; for example, dimension reduction techniques can be used to simplify the data structure. However, its results cannot be controlled or interpreted as directly as those of supervised learning.

Exercise 1.24

Level: ** (Easy)

Exercise Types: Novel

Question

Consider the dataset:

Given the weights and bias:

Solution

The decision function is:

For

For

For

Exercise 2.1

Level: * (Easy)

Exercise Types: Novel

References: Calin, Ovidiu. Deep learning architectures: A mathematical approach. Springer, 2020

This problem is coincidentally similar to Exercise 5.10.1 (page 163) in this textbook, although that exercise was not used as the basis for this question.

Question

This problem is about using perceptrons to implement logic functions. Assume a dataset of the form

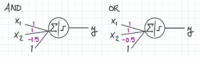

(a)* Find weights

(b)* Find the weights

(c)** Given the truth table for the XOR function:

Show that it cannot be learned by a single perceptron. Find a small neural network of multiple perceptrons that can implement the XOR function. (Hint: a hidden layer with 2 perceptrons).

Solution

(a) A perceptron that implements the AND function:

Here:

(b) A perceptron that implements the OR function:

Here:

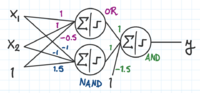

(c) XOR is not linearly separable, so it cannot be implemented by a single perceptron.

The XOR function returns 1 when the following are true:

- Either

To implement this, the outputs of an OR and a NAND perceptron can be taken as inputs to an AND perceptron. (The NAND perceptron was derived by multiplying the weights and bias of the AND perceptron by -1.)
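The construction above can be checked with a short sketch. The specific weights and biases below are illustrative assumptions (the values from the exercise are not reproduced here), and inputs and outputs are assumed to be coded as 0/1 with a step activation.

```python
import numpy as np

def perceptron(x, w, b):
    """Step-activation perceptron: returns 1 if w.x + b > 0, else 0."""
    return int(np.dot(w, x) + b > 0)

# Illustrative (assumed) parameters for the basic gates.
AND_W, AND_B = np.array([1.0, 1.0]), -1.5
OR_W, OR_B = np.array([1.0, 1.0]), -0.5
# NAND: multiply the AND weights and bias by -1.
NAND_W, NAND_B = -AND_W, -AND_B

def xor(x1, x2):
    """XOR = AND(OR(x1, x2), NAND(x1, x2))."""
    x = np.array([x1, x2])
    h_or = perceptron(x, OR_W, OR_B)
    h_nand = perceptron(x, NAND_W, NAND_B)
    return perceptron(np.array([h_or, h_nand]), AND_W, AND_B)

truth_table = {(a, b): xor(a, b) for a in (0, 1) for b in (0, 1)}
print(truth_table)
```

The hidden layer holds the OR and NAND perceptrons, and the output perceptron is the AND gate, so the network uses three perceptrons in total.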

Why can't the perceptron converge in the case of linear non-separability?

In linearly separable data, there exists a weight vector

But in the case of linear non-separability, w and b satisfying this condition do not exist, so the perceptron cannot satisfy the convergence condition.

Exercise 2.2

Level: * (Easy)

Exercise Types: Novel

Question

1. How do feedforward neural networks utilize backpropagation to adjust weights and improve the accuracy of predictions during training?

2. How would the training process be affected if the learning rate in the optimization algorithm were too high or too low?

Solution

1. After a forward pass where inputs are processed to generate an output, the error between the prediction and actual values is calculated. This error is then propagated backward through the network, and the gradients of the loss function with respect to the weights are computed. Using these gradients, the weights are updated with an optimization algorithm like stochastic gradient descent, gradually minimizing the error and improving the network's performance.

2. If the learning rate is too high, the weights might overshoot the optimal values, leading to oscillations or divergence. If it's too low, training might become very slow or get stuck in a local minimum.
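The effect of the learning rate can be illustrated on a toy problem. The sketch below assumes the one-dimensional loss f(w) = w² (not part of the exercise) and compares three hypothetical learning rates.

```python
def gradient_descent(lr, steps=20, w0=1.0):
    """Minimise f(w) = w**2 (gradient 2*w) starting from w0."""
    w = w0
    for _ in range(steps):
        w = w - lr * 2 * w
    return w

too_low = gradient_descent(lr=0.001)    # barely moves away from w0
reasonable = gradient_descent(lr=0.1)   # approaches the minimum at 0
too_high = gradient_descent(lr=1.1)     # overshoots more on every step

print(too_low, reasonable, too_high)
```

On this loss each step multiplies w by (1 - 2·lr), so the iterates shrink only when that factor has magnitude below 1.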

Calculations

Step 1: Forward Propagation

Each neuron computes:

where:

- W = weights, b = bias

- f(z) = activation function (e.g., sigmoid, ReLU)

- a = neuron’s output

Step 2: Compute Loss

The error between predicted

For classification, **Cross-Entropy Loss** is commonly used.

Step 3: Backward Propagation

Using the **chain rule**, gradients are computed:

These gradients guide weight updates to minimize loss.

Step 4: Weight Update using Gradient Descent

Weights are updated using:

where

Exercise 2.3

Level: * (Easy)

Exercise Types: Modified

References: Simon J.D. Prince. Understanding Deep learning. 2024

This problem comes from Problem 3.5 in this textbook. In addition to the proof, I explained why this property is important to learning neural networks.

Question

Prove that the following property holds for

Explain why this property is important in neural networks.

Solution

This is known as the non-negative homogeneity property of the ReLU function.

Recall the definition of the ReLU function:

We prove the property by considering the two possible cases for

Case 1:

If

Therefore:

and:

Hence, in this case:

Case 2:

If

Therefore:

and:

Hence, in this case:

Since the property holds in both cases, this completes the proof.
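A quick numerical check can complement the proof. The sketch below assumes the property has its usual form, ReLU(αz) = α·ReLU(z) for α ≥ 0, and also shows that it fails for a negative α.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

rng = np.random.default_rng(0)
z = rng.normal(size=1000)

# Non-negative scaling factors: the identity should hold for each of them.
alphas = [0.0, 0.5, 2.0, 10.0]
holds = all(np.allclose(relu(a * z), a * relu(z)) for a in alphas)

# A negative factor breaks the identity, which is why alpha >= 0 is required.
fails_for_negative = not np.allclose(relu(-1.0 * z), -1.0 * relu(z))

print(holds, fails_for_negative)
```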

Why is this property important in neural networks?

In a neural network, the input to a neuron is often a linear combination of the weights and inputs.

When training neural networks, scaling the inputs or weights can affect the activations of neurons. However, because ReLU satisfies the homogeneity property, the output of the ReLU function scales proportionally with the input. This means that scaling the inputs by a positive constant (like a learning rate or normalization factor) does not change the overall pattern of activations — it only scales them. This stability in scaling is important during optimization because it makes the network's output more predictable and ensures that scaling transformations don't break the network's functionality.

Additionally, because of the non-negative homogeneity property, the gradients scale proportionally with the input scale. This keeps the optimization process predictable when the inputs are rescaled by a positive constant, which can help when the inputs are scaled by large positive values.

The homogeneity property of ReLU also helps the network to perform well on different types of data. By keeping the scaling of activations consistent, it helps maintain the connection between inputs and outputs during training, even when the data is adjusted or scaled. This makes ReLU useful when input values vary a lot, and it simplifies the network's response to changes in input distributions, which is especially valuable when transferring a trained model to new data or domains.

Exercise 2.4

Level: * (Easy)

Exercise Types: Novel

Question

Train a perceptron on the given dataset using the following initial settings, and ensure it classifies all data points correctly.

- Initial weights:

- Learning rate:

- Training dataset:

(x₁ = 1, x₂ = 2, y = 1) (x₁ = -1, x₂ = -1, y = -1) (x₁ = 2, x₂ = 1, y = 1)

Solution

Iteration 1

1. First data point (x₁ = 1, x₂ = 2) with label 1:

- Weighted sum:

- Predicted label:

- Actual label: 1 → No misclassification

2. Second data point (x₁ = -1, x₂ = -1) with label -1:

- Weighted sum:

- Predicted label:

- Actual label: -1 → Misclassified

3. Third data point (x₁ = 2, x₂ = 1) with label 1:

- Weighted sum:

- Predicted label:

- Actual label: 1 → No misclassification

Update Weights (using the Perceptron rule with the cost as the distance of all misclassified points)

For the misclassified point (x₁ = -1, x₂ = -1):

- Updated weights:

Updated weights after first iteration:

Iteration 2

1. First data point (x₁ = 1, x₂ = 2) with label 1:

- Weighted sum:

- Predicted label:

- Actual label: 1 → No misclassification

2. Second data point (x₁ = -1, x₂ = -1) with label -1:

- Weighted sum:

- Predicted label:

- Actual label: -1 → No misclassification

3. Third data point (x₁ = 2, x₂ = 1) with label 1:

- Weighted sum:

- Predicted label:

- Actual label: 1 → No misclassification

Since there are no misclassifications in the second iteration, the perceptron has converged!

Final Result

- Weights after convergence:

- Total cost after convergence:
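The procedure above can be sketched in code on the same three training points. The initial weights, bias, and learning rate below are assumptions chosen for illustration (zeros and 1.0), as is the convention that a weighted sum of exactly 0 is predicted as +1; they are not taken from the exercise.

```python
import numpy as np

# Training data from the exercise: (x1, x2) and labels y in {-1, +1}.
X = np.array([[1.0, 2.0], [-1.0, -1.0], [2.0, 1.0]])
y = np.array([1, -1, 1])

def train_perceptron(X, y, w, b, lr, max_epochs=100):
    """Batch perceptron: each epoch updates on all misclassified points at once."""
    for epoch in range(1, max_epochs + 1):
        preds = np.where(X @ w + b >= 0, 1, -1)
        wrong = preds != y
        if not wrong.any():
            return w, b, epoch  # converged: no misclassified points
        w = w + lr * (y[wrong][:, None] * X[wrong]).sum(axis=0)
        b = b + lr * y[wrong].sum()
    return w, b, max_epochs

# Assumed starting values (hypothetical, for illustration only).
w, b, epochs = train_perceptron(X, y, w=np.zeros(2), b=0.0, lr=1.0)
print(w, b, epochs)
```

With these assumed settings only the second point is misclassified in the first pass, and the second pass finds no errors.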

Exercise 2.5

Level: * (Moderate)

Exercise Types: Novel

Question

Consider a Feed-Forward Neural Network (FFN) with one or more hidden layers. Answer the following questions:

(a) Describe how a Feed-Forward Neural Network (FFN) works in general. Describe the components of the network.

(b) How does the forward pass work? Provide the relevant formulas for each step.

(c) How does the backward pass (backpropagation) work? Explain and provide the formulas for each step.

Solution

(a): A Feed-Forward Neural Network (FFN) consists of an input layer, one or more hidden layers, and one output layer. Each neuron in a layer is a perceptron, a basic computational unit that contains weights, a bias, and an activation function. The perceptron computes a weighted sum of its inputs, adds a bias term, and passes the result through an activation function. Each layer transforms the data as it passes through, with each neuron's output being fed to the next layer as input. The final output is the network's prediction; the loss function then uses the prediction and the true label of the data to calculate the loss. The backward pass computes the gradients of the loss with respect to each weight and bias in the network, and the weights and biases are then updated to minimize the loss. This process is repeated for each sample (or mini-batch) of data until the loss converges.

(b): The forward pass involves computing the output for each layer in the network. For each layer, the algorithm performs the following steps:

1. Compute the weighted sum of inputs to the layer:

2. Use the activation function to calculate the output of the layer:

3. Repeat these steps for each layer until the output layer is reached.

(c): The backward pass (backpropagation) computes the gradients of the loss function with respect to each weight and bias, and then uses gradient descent to update the weights and biases.

1. Calculate the errors for each layer:

Error at the output layer: The error term at the output layer has this formula:

Error for the hidden layers: The error for each hidden layer is:

2. Gradient of the loss with respect to weights and biases. Compute the gradients for the weights and biases:

The gradient for weights is:

The gradient for biases is:

3. Update the weights and biases using gradient descent:

Where

Repeat these steps for each layer, from the output layer back to the input layer, to update all the weights and biases.

Exercise 2.6

Level: * (Easy)

Exercise Types: Novel

Question

A single neuron takes an input vector

1. Calculate the weighted sum

2. Compute the squared error loss:

3. Find the gradient of the loss with respect to the weights

4. Provide the updated weights and the error after the update.

5. Compare the result of the previous step with the case of a learning rate of

Solution

1.

2.

3. The gradient of the loss with respect to

For

For

For

For

4. Recalculate

Recalculate the error:

5. Compare the result of the previous step with the case of a learning rate of

For

For

Recalculate

Recalculate the error:

Comparison:

- With a learning rate of

- With

The error is much lower when using a larger learning rate.

Exercise 2.7

Level: * (Easy)

Exercise Types: Copied

This problem comes from Exercise 2 : Perceptron Learning.

Question

Given two single perceptrons

- Perceptron

Is perceptron

Solution

To decide if perceptron

- **Perceptron

- **Perceptron

A perceptron

Observe that for any

Hence, perceptron

Additional Subquestion

For a random sample of points, verify empirically that any point classified as positive by perceptron

Additional Solution (Sample Code)

Below is a short Python snippet that generates random points and checks their classification according to each perceptron. (No visual output is included.)

```python
import numpy as np

def perceptron_output(x1, x2, w0, w1, w2):
    # Positive class when the weighted sum is non-negative
    return (w0 + w1*x1 + w2*x2) >= 0

# Weights for a and b:
w_a = (1, 2, 1)  # w0=1, w1=2, w2=1
w_b = (0, 2, 1)  # w0=0, w1=2, w2=1

n_points = 1000
x1_vals = np.random.uniform(-10, 10, n_points)
x2_vals = np.random.uniform(-10, 10, n_points)

count_b_positive_also_positive_in_a = 0
count_b_positive = 0

for x1, x2 in zip(x1_vals, x2_vals):
    output_b = perceptron_output(x1, x2, *w_b)
    output_a = perceptron_output(x1, x2, *w_a)
    if output_b:
        count_b_positive += 1
        if output_a:
            count_b_positive_also_positive_in_a += 1

print("Out of", count_b_positive, "points that B classified as positive,")
print(count_b_positive_also_positive_in_a, "were also positive for A.")
print("Hence, all (or nearly all) B-positive points lie in A's positive region.")
```

Exercise 2.10

Level: * (Easy)

Exercise Type: Novel

Question

In a simple linear regression

a). Derive the vectorized form of the SSE (loss function) in terms of

b). Find the optimal value of

Solution

a).

b).

Note that
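The closed-form result can be sanity-checked numerically. The sketch below assumes the standard setup: a design matrix X with an intercept column, SSE(β) = (y − Xβ)ᵀ(y − Xβ), and the normal-equations solution β̂ = (XᵀX)⁻¹Xᵀy. The synthetic data and coefficient values are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50
x = rng.uniform(-1, 1, size=n)
y = 2.0 + 3.0 * x + rng.normal(scale=0.1, size=n)  # synthetic data (assumed)

# Design matrix with a column of ones for the intercept.
X = np.column_stack([np.ones(n), x])

def sse(beta):
    """Vectorized sum of squared errors: (y - X beta)^T (y - X beta)."""
    r = y - X @ beta
    return float(r @ r)

# Normal equations: solve (X^T X) beta = X^T y.
beta_hat = np.linalg.solve(X.T @ X, X.T @ y)

# Cross-check against NumPy's least-squares solver.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

print(beta_hat, sse(beta_hat))
```

Solving the linear system with `np.linalg.solve` avoids forming the explicit inverse, which is usually the more stable choice.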

Exercise 2.11

Level: Moderate

Exercise Types: Novel

Question

Deep learning models often face challenges during training due to the vanishing gradient problem, especially when using sigmoid or tanh activation functions.

(a) Describe the vanishing gradient problem and its impact on the training of deep networks.

(b) Explain how the introduction of ReLU (Rectified Linear Unit) activation function mitigates this problem.

(c) Discuss one potential downside of using ReLU and propose an alternative activation function that addresses this limitation.

Solution

(a) Vanishing Gradient Problem: The vanishing gradient problem occurs when the gradients of the loss function become extremely small as they are propagated back through the layers of a deep network. This leads to:

(i) Slow or stagnant weight updates in early layers;

(ii) Difficulty in effectively training deep models.

This issue is particularly pronounced with activation functions like sigmoid and tanh, where gradients approach zero as inputs saturate.

(b) Role of ReLU in Mitigation:

ReLU, defined as $f(x) = \max(0, x)$, mitigates the vanishing gradient problem by:

(i) Producing non-zero gradients for positive inputs, maintaining effective weight updates;

(ii) Introducing sparsity, as neurons deactivate (output zero) for negative inputs, which improves model efficiency.

(c) Downside of ReLU and Alternatives: One downside of ReLU is the "dying ReLU" problem, where neurons output zero for all inputs, effectively becoming inactive. This can happen when weights are poorly initialized or during training.

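Alternative: Leaky ReLU allows a small gradient for negative inputs, defined as $f(x) = x$ for $x > 0$ and $f(x) = \alpha x$ otherwise, where $\alpha$ is a small positive constant (for example, 0.01).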
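A small numerical sketch can make the three parts concrete. It multiplies activation derivatives across a stack of layers, ignoring the weight matrices for simplicity (an assumption made only for illustration), and uses a hypothetical Leaky ReLU slope of 0.01.

```python
import numpy as np

def sigmoid_grad(z):
    s = 1.0 / (1.0 + np.exp(-z))
    return s * (1.0 - s)

def relu_grad(z):
    return (z > 0).astype(float)

def leaky_relu_grad(z, alpha=0.01):
    # alpha is an assumed slope for negative inputs
    return np.where(z > 0, 1.0, alpha)

depth = 20
z = np.full(depth, 2.0)  # same positive pre-activation at every layer (illustrative)

# Product of per-layer derivatives, as it appears in the chain rule.
sigmoid_chain = np.prod(sigmoid_grad(z))  # shrinks geometrically with depth
relu_chain = np.prod(relu_grad(z))        # stays at 1 for positive inputs

# For a negative input ReLU passes no gradient, while Leaky ReLU passes a small one.
relu_negative = relu_grad(np.array([-3.0]))[0]
leaky_negative = leaky_relu_grad(np.array([-3.0]))[0]

print(sigmoid_chain, relu_chain, relu_negative, leaky_negative)
```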

Exercise 2.12

Level: * (Easy)

Exercise Type: Novel

Question

Consider the following data:

This data is fitted by a linear regression model with no bias term. What is the loss for the first four data points? Use the L2 loss defined as:

Solution

The correct losses for the first four data points are 0, 0.5, 0, and 0.5.

Calculation

Step 1: Fit the linear regression model.

Since there is no bias term, the model is

Compute

Step 2: Calculate

For the first four data points:

Step 3: Compute the L2 loss for each point.

- For

- For

- For

- For

Thus, the losses are: 0, 0.5, 0, 0.5.

Exercise 2.13

Level: ** (Moderate)

Exercise Types: Novel

References: A. Ghodsi, STAT 940 Deep Learning: Lecture 2, University of Waterloo, Winter 2025.

Question

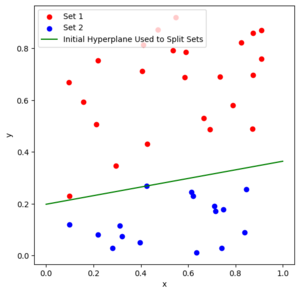

In Lecture 2, we derived a formula for a simple perceptron to determine a hyperplane which splits a set of linearly separable data.

In class, we defined the error as

Where

The function for the simple perceptron was defined as

Where a point belongs to Set 1 if it is above the line, and Set 2 if it is below the line.

In lecture 2, we found the partial derivatives as

and

And defined the formula for gradient descent as

Generate a random set of points and a line in 2D using python, and sort the set of points into 2 sets above and below the line. Then use gradient descent and the formulas above to find a hyperplane which also sorts the set with no prior knowledge of the line used for sorting.

Solution

The code below is a sample implementation of the 2D gradient descent algorithm derived in Lecture 2, applied to a random dataset.

```python
import numpy as np
import matplotlib.pyplot as plt

# Generate a random array of points
random_array = np.random.random((40, 2))

# Linearly separate the points by a random hyperplane (in this case, a 2D line)
# y = ax + b
a = np.random.random()
b = np.random.random()*0.25

# Create the ground truth, classify points into Set 1 or Set 2
# Boolean indices where y is greater than or equal to the line y = ax + b
set_1_indicies = random_array[:, 1] >= (a*random_array[:, 0] + b)
set_2_indicies = random_array[:, 1] < (a*random_array[:, 0] + b)

# Get the (x,y) coordinates of set 1 and set 2 by selecting the rows of the
# random array which are either above or below the hyperplane
set_1 = random_array[set_1_indicies]
set_2 = random_array[set_2_indicies]

plt.figure(1, figsize=(6, 6))
plt.scatter(set_1[:, 0], set_1[:, 1], c='r')
plt.scatter(set_2[:, 0], set_2[:, 1], c='b')
plt.xlabel('x')
plt.ylabel('y')
x = np.linspace(0, 1, 2)
y = x*a + b
plt.plot(x, y, 'g')
plt.legend(['Set 1', 'Set 2', 'Initial Hyperplane Used to Split Sets'])
plt.title("Generated Dataset")
plt.show()

# Now use a simple perceptron and the algorithm developed in class during
# Lecture 2 to have an agent with no prior knowledge of the hyperplane
# determine what it is, or find a hyperplane that can separate the two sets.

# Randomly initialize beta and beta_0
beta = np.random.random()
beta_0 = np.random.random()*0.25

def update_weights(beta, beta_0, random_array, set_1_indicies, set_2_indicies, learning_rate):
    # Find perceptron set 1 and set 2
    perceptron_set_1_indicies = random_array[:, 1] >= (beta*random_array[:, 0] + beta_0)
    perceptron_set_2_indicies = random_array[:, 1] < (beta*random_array[:, 0] + beta_0)

    # Find set M, the misclassified points.
    # A point classified by the agent as class 1 is misclassified if it is
    # actually in set 2, and vice versa.
    M_indicies = np.logical_or(
        np.logical_and(perceptron_set_1_indicies, set_2_indicies),
        np.logical_and(perceptron_set_2_indicies, set_1_indicies))

    # Get an array of the x,y coordinates of the misclassified points
    M = random_array[M_indicies]

    # Class labels y_i of the misclassified points: +1 for Set 1, -1 for Set 2
    labels = np.where(set_1_indicies[M_indicies], 1.0, -1.0)

    # Compute the sums needed to do gradient descent
    sum_yixi = np.sum(labels*M[:, 0])
    sum_yi = np.sum(labels)

    # Update beta and beta_0. With the line written as y = beta*x + beta_0,
    # a misclassified Set 1 point lies below the current line, so the line
    # has to move down (and up for Set 2); hence the minus signs.
    beta = beta - learning_rate*sum_yixi
    beta_0 = beta_0 - learning_rate*sum_yi
    return beta, beta_0

# Run for 1000 epochs
for i in range(0, 1000):
    beta, beta_0 = update_weights(beta, beta_0, random_array, set_1_indicies, set_2_indicies, 0.01)

plt.figure(1, figsize=(6, 6))
plt.scatter(set_1[:, 0], set_1[:, 1], c='r')
plt.scatter(set_2[:, 0], set_2[:, 1], c='b')
plt.xlabel('x')
plt.ylabel('y')
x = np.linspace(0, 1, 2)
y = beta*x + beta_0
plt.plot(x, y, c='g')
plt.legend(['Set 1', 'Set 2', 'Gradient Descent Hyperplane'])
plt.title("Gradient Descent Generated Hyperplane")
plt.show()
```

The figure below shows a sample of the randomly generated linearly separated sets, and the lines used to separate the sets.

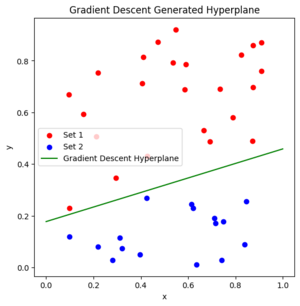

The figure below shows the predicted hyperplane found using gradient descent

Exercise 2.14

Level: Easy

Exercise Types: Novel

Question

Consider a simple neural network model with a single hidden layer to classify input data into two categories based on their features. Address the following points:

- Describe the process of input data transformation through a single hidden layer.

- Identify the role of activation functions in neural networks.

- Explain the importance of the learning rate in the neural network training process.

Solution

- Input Processing:

The network receives input features and feeds them through a hidden layer, where each input is subject to a weighted sum, addition of a bias, and application of an activation function. This series of operations transforms the input data into a representation that captures complex relationships within the data.

Mathematically, the output

- Role of Activation Functions:

Activation functions such as sigmoid, ReLU, or tanh introduce necessary non-linearities into the model, enabling it to learn more complex patterns and relationships in the data. They are applied to each neuron's weighted sum and also shape the range of the neuron's output; for example, sigmoid maps values into (0, 1), which suits a two-category output.

- Importance of Learning Rate:

The learning rate

Exercise 2.15

Level: ** (Moderate)

Exercise Types: Novel

Question

Consider a simple feedforward neural network with one input layer, one hidden layer, and one output layer. The network structure is as follows:

- Input layer: 2 neurons (features

- Hidden layer: 3 neurons with ReLU activation (

- Output layer: 1 neuron with no activation (linear output).

The weights (

Hidden layer:

Output layer:

For input values

- Calculate the output of the hidden layer (

- Calculate the final output of the network (

Solution

Step 1: Calculate

The input vector is:

Step 2: Apply ReLU activation

The ReLU activation is defined as:

Step 3: Calculate

Step 4: Final Output

The final output of the network is:

Exercise 2.16

Level: * (Easy)

Exercise Types: Novel

Question

Consider the following binary classification task, where the goal is to classify points into two categories: +1 or -1.

Training Data:

Task: Train a perceptron model using gradient descent, and update the weights using the perceptron update rule using the first data point (2, 3, 1). Initialize the weights as

Solution

Step 1: Compute the Prediction

The perceptron model predicts the label using the following equation:

Substituting the initial weights and the input values:

Since the predicted label

Step 2: Update the Weights

Exercise 2.17

Level: * (Easy)

Exercise Types: Novel

Question

Consider a binary classification problem where a perceptron is used to separate two linearly separable classes in

where:

Prove that if the data is **linearly separable**, the perceptron algorithm **converges in a finite number of steps**.

Solution

Define the angle between

By repeatedly applying the update rule and using the Cauchy-Schwarz inequality, we can show that:

which grows linearly with

Combining these inequalities leads to the bound on the number of updates:
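For reference, the standard form of this argument can be written out as follows. The symbols are assumptions, since the original formulas are not shown here: labels $y_i \in \{-1, +1\}$, inputs bounded by $\|x_i\| \le R$, a unit vector $w^*$ that separates the data with margin $\gamma > 0$, initial weights $w_0 = 0$, and the update $w_k = w_{k-1} + y_i x_i$ on a misclassified point.

```latex
% Assumed setting: y_i w^{*\top} x_i \ge \gamma for all i, \|w^*\| = 1, \|x_i\| \le R, w_0 = 0.
\begin{align*}
w_k^\top w^* &= w_{k-1}^\top w^* + y_i\, x_i^\top w^* \;\ge\; w_{k-1}^\top w^* + \gamma
  &&\Longrightarrow\quad w_k^\top w^* \ge k\gamma, \\
\|w_k\|^2 &= \|w_{k-1}\|^2 + 2\, y_i\, w_{k-1}^\top x_i + \|x_i\|^2 \;\le\; \|w_{k-1}\|^2 + R^2
  &&\Longrightarrow\quad \|w_k\|^2 \le k R^2, \\
k\gamma &\le w_k^\top w^* \;\le\; \|w_k\|\,\|w^*\| \;\le\; \sqrt{k}\, R
  &&\Longrightarrow\quad k \le \frac{R^2}{\gamma^2}.
\end{align*}
```

The second line uses $y_i\, w_{k-1}^\top x_i \le 0$, which holds because the update is only applied to a misclassified point, and the third line is the Cauchy-Schwarz step.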

Exercise 2.18

Level: * (Easy)

Exercise Types: Novel

Question

In a binary classification problem, assume the input data is a 2-dimensional vector x = [x1, x2]. The Perceptron model is defined with the following parameters:

Weights: w = [2, -1] Bias: b = -0.5

The Perceptron output is given by: y = sign(wx + b), where sign(z) is the sign function that outputs +1 if z > 0, and -1 otherwise.

1. Compute the Perceptron output y for the input sample x = [1, 2].

2. Assume the target label is t = +1. Determine if the Perceptron classifies the sample correctly. If it misclassifies, provide the update rule for the weights w and bias b, and compute their updated values after one step.

Solution

1. Compute the predicted output y and determine if classification is correct:

The target label t = +1, but the Perceptron output y = -1. Hence, the classification is incorrect.

2. Update rule for weights and bias:

Gradient:

Update:

Assume the learning rate here is 0.1,
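The computation can be reproduced with a few lines of code. The sketch below uses the values from the question and assumes the usual perceptron update, w ← w + η·t·x and b ← b + η·t, with η = 0.1.

```python
import numpy as np

def sign(z):
    """Sign function from the question: +1 if z > 0, otherwise -1."""
    return 1 if z > 0 else -1

w = np.array([2.0, -1.0])
b = -0.5
x = np.array([1.0, 2.0])
t = 1        # target label
lr = 0.1     # learning rate

z = float(w @ x + b)   # 2*1 + (-1)*2 - 0.5
y = sign(z)

if y != t:
    # Assumed perceptron update rule for a misclassified sample.
    w_new = w + lr * t * x
    b_new = b + lr * t
else:
    w_new, b_new = w, b

y_new = sign(float(w_new @ x + b_new))
print(z, y, w_new, b_new, y_new)
```

After this single step the updated parameters classify the sample correctly.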

Exercise 2.19

Level: ** (Moderate)

Exercise Types: Novel

Question

Given a simple feedforward neural network with one hidden layer and a softmax output, the network has the following structure:

- Input layer:

- Hidden layer: Linear transformation

- Output layer: Softmax output

Let the true label be

1. Derive the gradient of the loss function (cross-entropy loss) with respect to the output logit

2. Using the chain rule, derive the gradient of the loss function with respect to the hidden layer

Solution

1.

Let the output logits be represented as:

The softmax output

The cross-entropy loss

The gradient of the loss with respect to the output logits

The gradient with respect to the output

2.

Now, we compute the gradient of the loss with respect to the hidden layer

Using the chain rule, we have:

From part 1, we already computed the derivative of the loss with respect to the output logits:

The derivative of the logits with respect to the hidden layer is:

Therefore, the gradient with respect to the hidden layer is:

The ReLU activation introduces a nonlinearity in the gradient computation. Specifically, ReLU is defined as:

The gradient of the ReLU function is:

Therefore, the gradient with respect to the hidden layer becomes:

This means that if an element of
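The result of part 1 can be verified numerically. The sketch below assumes a one-hot label and the standard result that the gradient of the cross-entropy loss with respect to the logits is softmax(z) − y; the logits and class count are arbitrary illustrative values.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def cross_entropy(z, y):
    return -float(np.sum(y * np.log(softmax(z))))

rng = np.random.default_rng(0)
z = rng.normal(size=4)          # illustrative logits
y = np.zeros(4)
y[2] = 1.0                      # one-hot true label (assumed class index)

# Analytic gradient with respect to the logits.
analytic = softmax(z) - y

# Central finite differences as an independent check.
eps = 1e-6
numeric = np.zeros_like(z)
for k in range(z.size):
    dz = np.zeros_like(z)
    dz[k] = eps
    numeric[k] = (cross_entropy(z + dz, y) - cross_entropy(z - dz, y)) / (2 * eps)

print(analytic)
print(numeric)
```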

Exercise 2.20

Level: * (Easy)

Exercise Types: Novel

Question

Compute the distance of the following misclassified points to the hyperplane

The hyperplane parameters are:

Solution

The hyperplane equation is:

For

For

Exercise 2.21

Level: * (Easy)

Exercise Types: Novel

Question

Train a perceptron using gradient descent for the following settings:

- Input Data:

- Labels:

- Initial Weights:

- Learning Rate:

The perceptron update rules are:

Dataset:

Tasks:

- Perform one iteration of gradient descent (update weights and bias).

- Determine whether both points are correctly classified after the update.

- Final weights:

- Final bias:

- For

- For

Solution

Initialization:

Step 1: Update for Point

Compute perceptron output:

Misclassification occurs (

Update weights and bias:

Step 2: Update for Point

Compute perceptron output:

Misclassification occurs (

Update weights and bias:

Results:

Classification Check:

Conclusion:

After one iteration of gradient descent, both points are correctly classified.

Exercise 2.22

Level: * (Moderate)

Exercise Types: Novel

Question

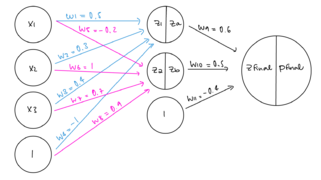

For the following neural network, do one forward pass through the network using the point (x1, x2, x3) = (1, 2, -1). The hidden layer uses the ReLU activation function and the output layer uses the sigmoid activation function. Calculate pfinal.

Solution

1) Hidden layer calculations:

The equations for the hidden layer are

2) Output layer calculations:

The equation for the output layer is

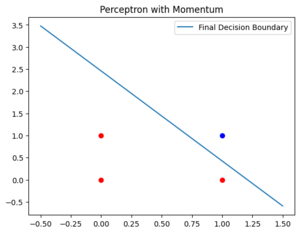

Exercise 3.1

Level: ** (Moderate)

Exercise Type: Novel

Implement the perceptron learning algorithm with momentum for the AND function. Plot the decision boundary after 10 epochs of training with a learning rate of 0.1 and a momentum of 0.9.

Solution

```python
import numpy as np
import matplotlib.pyplot as plt

# Dataset
data = np.array([
    [0, 0, -1],
    [0, 1, -1],
    [1, 0, -1],
    [1, 1, 1]
])

# Initialize weights, learning rate, and momentum
weights = np.random.rand(3)
learning_rate = 0.1
momentum = 0.9
epochs = 10
previous_update = np.zeros(3)

# Add bias term to data
X = np.hstack((np.ones((data.shape[0], 1)), data[:, :2]))
y = data[:, 2]

# Training loop
for epoch in range(epochs):
    for i in range(len(X)):
        prediction = np.sign(np.dot(weights, X[i]))
        if prediction != y[i]:
            update = learning_rate * y[i] * X[i] + momentum * previous_update
            weights += update
            previous_update = update

# Plot final decision boundary
x_vals = np.linspace(-0.5, 1.5, 100)
y_vals = -(weights[1] * x_vals + weights[0]) / weights[2]
plt.plot(x_vals, y_vals, label='Final Decision Boundary')

# Plot dataset
for point in data:
    color = 'blue' if point[2] == 1 else 'red'
    plt.scatter(point[0], point[1], color=color)

plt.title('Perceptron with Momentum')
plt.legend()
plt.show()
```

Exercise 3.2

Level: ** (Moderate)

Exercise Types: Novel

Question

Write a Python program showing how the backpropagation algorithm works for a neural network with 2 inputs, one hidden layer of 2 neurons, and 1 output neuron. Train the network on the XOR problem:

- Input [0,0] -> Output 0

- Input [0,1] -> Output 1

- Input [1,0] -> Output 1

- Input [1,1] -> Output 0

Use the sigmoid activation function. Use Mean Square Error as the loss function.

Solution

```python
import numpy as np

# -------------------------
# 1. Define Activation Functions
# -------------------------
def sigmoid(x):
    """
    Sigmoid activation function.
    """
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(x):
    """
    Derivative of the sigmoid function.
    Here, 'x' is assumed to be sigmoid(x),
    meaning x is already the output of the sigmoid.
    """
    return x * (1 - x)

# -------------------------
# 2. Prepare the Training Data (XOR)
# -------------------------
# Input data (4 samples, each with 2 features)
X = np.array([
    [0, 0],
    [0, 1],
    [1, 0],
    [1, 1]
])

# Target labels (4 samples, each is a single output)
y = np.array([
    [0],
    [1],
    [1],
    [0]
])

# -------------------------
# 3. Initialize Network Parameters
# -------------------------
# Weights for input -> hidden (shape: 2x2)
W1 = np.random.randn(2, 2)
# Bias for hidden layer (shape: 1x2)
b1 = np.random.randn(1, 2)
# Weights for hidden -> output (shape: 2x1)
W2 = np.random.randn(2, 1)
# Bias for output layer (shape: 1x1)
b2 = np.random.randn(1, 1)

# Hyperparameters
learning_rate = 0.1
num_epochs = 10000

# -------------------------
# 4. Training Loop
# -------------------------
for epoch in range(num_epochs):
    # 4.1. Forward Pass
    # - Compute hidden layer output
    hidden_input = np.dot(X, W1) + b1  # shape: (4, 2)
    hidden_output = sigmoid(hidden_input)

    # - Compute final output
    final_input = np.dot(hidden_output, W2) + b2  # shape: (4, 1)
    final_output = sigmoid(final_input)

    # 4.2. Compute Loss (Mean Squared Error)
    error = y - final_output  # shape: (4, 1)
    loss = np.mean(error**2)

    # 4.3. Backpropagation
    # - Error signal at the output pre-activation. Because error = y - output,
    #   this is the negative loss gradient up to a constant factor.
    d_final_output = error * sigmoid_derivative(final_output)  # shape: (4, 1)

    # - Propagate error back to hidden layer
    error_hidden_layer = np.dot(d_final_output, W2.T)  # shape: (4, 2)
    d_hidden_output = error_hidden_layer * sigmoid_derivative(hidden_output)  # shape: (4, 2)

    # 4.4. Gradient Descent Updates
    #      (adding these terms descends the loss, given the sign of 'error')
    # - Update W2, b2
    W2 += learning_rate * np.dot(hidden_output.T, d_final_output)  # shape: (2, 1)
    b2 += learning_rate * np.sum(d_final_output, axis=0, keepdims=True)  # shape: (1, 1)

    # - Update W1, b1
    W1 += learning_rate * np.dot(X.T, d_hidden_output)  # shape: (2, 2)
    b1 += learning_rate * np.sum(d_hidden_output, axis=0, keepdims=True)  # shape: (1, 2)

    # Print loss every 1000 epochs
    if epoch % 1000 == 0:
        print(f"Epoch {epoch}, Loss: {loss:.6f}")

# -------------------------
# 5. Testing / Final Outputs
# -------------------------
print("\nTraining complete.")
print("Final loss:", loss)

# Feedforward one last time to see predictions
hidden_output = sigmoid(np.dot(X, W1) + b1)
final_output = sigmoid(np.dot(hidden_output, W2) + b2)

print("\nOutput after training:")
for i, inp in enumerate(X):
    print(f"Input: {inp} -> Predicted: {final_output[i][0]:.4f} (Target: {y[i][0]})")
```

Output:

Epoch 0, Loss: 0.257193

Epoch 1000, Loss: 0.247720

Epoch 2000, Loss: 0.226962

Epoch 3000, Loss: 0.191367

Epoch 4000, Loss: 0.162169

Epoch 5000, Loss: 0.034894

Epoch 6000, Loss: 0.012459

Epoch 7000, Loss: 0.007127

Epoch 8000, Loss: 0.004890

Epoch 9000, Loss: 0.003687

Training complete.

Final loss: 0.0029435579049382756

Output after training:

Input: [0 0] -> Predicted: 0.0598 (Target: 0)

Input: [0 1] -> Predicted: 0.9461 (Target: 1)

Input: [1 0] -> Predicted: 0.9506 (Target: 1)

Input: [1 1] -> Predicted: 0.0534 (Target: 0)

Exercise 3.3

Level: * (Easy)

Exercise Types: Novel

Question

Implement 4 iterations of gradient descent with and without momentum for the function

Solution

Note that

Without momentum:

Iteration 1:

Iteration 2:

Iteration 3:

Iteration 4:

With momentum:

Iteration 1:

Iteration 2:

Iteration 3:

Iteration 4:

By observation, we know that the minimum of

Benefits for momentum: Momentum is a technique used in optimization to accelerate convergence. Inspired by physical momentum, it helps in navigating the optimization landscape.

By remembering the direction of previous gradients, which are accumulated into a running average (the velocity), momentum helps guide the updates more smoothly, leading to faster progress. This running average allows the optimizer to maintain a consistent direction even if individual gradients fluctuate. Additionally, momentum can help the algorithm escape from shallow local minima by carrying the updates through flat regions. This prevents the optimizer from getting stuck in small, unimportant minima and helps it continue moving toward a better local minimum.
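The difference between the two update rules can be sketched on a toy problem. The function f(w) = w², the starting point, the learning rate, and the momentum coefficient below are all assumptions for illustration; they are not the values from the exercise. The momentum variant uses the common form v ← βv + η·∇f(w), w ← w − v.

```python
def gd(grad, w0, lr, steps):
    """Plain gradient descent; returns the list of iterates."""
    w, path = w0, [w0]
    for _ in range(steps):
        w = w - lr * grad(w)
        path.append(w)
    return path

def gd_momentum(grad, w0, lr, beta, steps):
    """Gradient descent with momentum: v accumulates past gradients."""
    w, v, path = w0, 0.0, [w0]
    for _ in range(steps):
        v = beta * v + lr * grad(w)  # older gradients are down-weighted by beta
        w = w - v
        path.append(w)
    return path

grad = lambda w: 2 * w   # gradient of the assumed function f(w) = w**2

plain = gd(grad, w0=5.0, lr=0.1, steps=4)
with_momentum = gd_momentum(grad, w0=5.0, lr=0.1, beta=0.9, steps=4)

print(plain)
print(with_momentum)
```

In this toy run the momentum iterates move faster and overshoot the minimum at 0 by the fourth step, which illustrates both the acceleration and the oscillation that accumulated velocity can cause.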

Additional Comment:

It is important to note that the running-average description is only there to help with intuition. At time t, the velocity is a linear sum of previous gradients, but the weight of each gradient decreases the further back in time it was computed. That is, the

Exercise 3.4

Level: ** (Moderate)

Exercise Types: Novel

Question

Perform one iteration of forward pass and backward propagation for the following network:

- Input layer: 2 neurons (x₁, x₂)

- Hidden layer: 2 neurons (h₁, h₂)

- Output layer: 1 neuron (ŷ)

- Input-to-Hidden Layer:

Weights: w₁₁¹ = 0.15, w₂₁¹ = 0.20, w₁₂¹ = 0.25, w₂₂¹ = 0.30; Bias: b¹ = 0.35; Activation function: sigmoid

- Hidden-to-Output Layer:

Weights: w₁₁² = 0.40, w₂₁² = 0.45; Bias: b² = 0.60; Activation function: sigmoid

Input:

- x₁ = 0.05, x₂ = 0.10

- Target output: y = 0.01

- Learning rate: η = 0.5

Solution

Step 1: Forward Pass

1. Hidden Layer Outputs

For neuron h₁:

For neuron h₂:

2. Output Layer

Step 2: Compute Error

Step 3: Backpropagation

3.1: Gradients for Output Layer

1. Gradient w.r.t. output neuron:

2. Update weights and bias for hidden-to-output layer:

3.2: Gradients for Hidden Layer

1. Gradients for hidden layer neurons:

For h₁:

For h₂:

2. Update weights and bias for input-to-hidden layer:

For w₁₁¹:

For w₂₁¹:

For b¹:

This completes one iteration of forward and backward propagation.
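The steps of Exercise 3.4 can be sketched in a few lines of Python. Several conventions here are assumptions rather than facts stated in the exercise: wᵢⱼ¹ is read as the weight from input xᵢ to hidden neuron hⱼ, the single bias b¹ is shared by both hidden neurons, the loss is the squared error ½(y − ŷ)², and the hidden-layer gradients use the output weights from before their update.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Values from the exercise statement.
x1, x2, y, eta = 0.05, 0.10, 0.01, 0.5
w11, w21, w12, w22, b1 = 0.15, 0.20, 0.25, 0.30, 0.35
v1, v2, b2 = 0.40, 0.45, 0.60   # v1, v2 stand for w11^2, w21^2

# Step 1: forward pass (assumed convention: w_ij connects x_i to h_j).
h1 = sigmoid(w11 * x1 + w21 * x2 + b1)
h2 = sigmoid(w12 * x1 + w22 * x2 + b1)
y_hat = sigmoid(v1 * h1 + v2 * h2 + b2)

# Step 2: error (assumed loss: 0.5 * (y - y_hat)**2).
loss = 0.5 * (y - y_hat) ** 2

# Step 3.1: output-layer gradient and updates.
delta_o = (y_hat - y) * y_hat * (1.0 - y_hat)
v1_new = v1 - eta * delta_o * h1
v2_new = v2 - eta * delta_o * h2
b2_new = b2 - eta * delta_o

# Step 3.2: hidden-layer gradients (using the pre-update v1, v2) and updates.
delta_h1 = delta_o * v1 * h1 * (1.0 - h1)
delta_h2 = delta_o * v2 * h2 * (1.0 - h2)
w11_new = w11 - eta * delta_h1 * x1
w21_new = w21 - eta * delta_h1 * x2
w12_new = w12 - eta * delta_h2 * x1
w22_new = w22 - eta * delta_h2 * x2
b1_new = b1 - eta * (delta_h1 + delta_h2)  # shared bias: sum both contributions

print(f"h1={h1:.6f} h2={h2:.6f} y_hat={y_hat:.6f} loss={loss:.6f}")
print(f"v1_new={v1_new:.6f} v2_new={v2_new:.6f} b2_new={b2_new:.6f}")
print(f"w11_new={w11_new:.6f} w21_new={w21_new:.6f} b1_new={b1_new:.6f}")
```

If the intended convention gives each hidden neuron its own bias, or indexes the weights the other way round, only the corresponding lines need to change.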

Exercise 3.5

Level: * (Easy)

Exercise Types: Novel

Question

Consider the loss function

Solution

Compute the gradient at

Update the weight using SGD:

Compute the gradient at

Update the weight again:

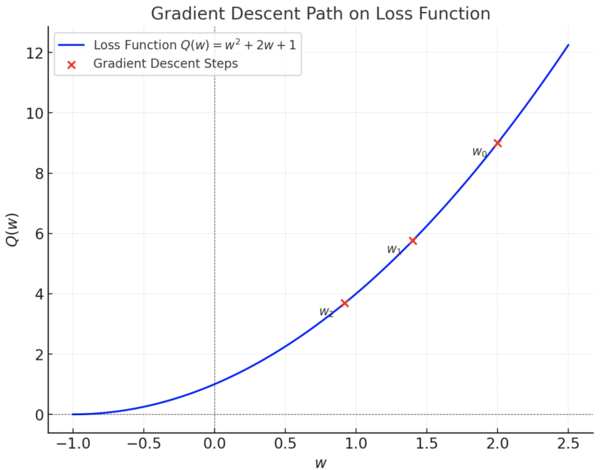

Gradient Path Figure:

Exercise 3.6

Level: * (Easy)

Exercise Types: Novel

Question

What is the prediction of the following MLP for

Both layers use the sigmoid activation function. The weight matrices connecting the input layer to the hidden layer, and the hidden layer to the output, are respectively:

Choose the correct answer:

a)

b)

c)

d)

Solution

The correct answer is b):

Calculation

Step 1: Compute the hidden layer output

Step 2: Compute the output layer prediction

Thus, the prediction of the MLP is

Exercise 3.7

Level: * (Easy)

Exercise Types: Novel

Question

Feedforward Neural Networks (FNNs) are among the most commonly used models in deep learning. When training such networks, there are several important design components and techniques to consider.

(a) Give three commonly used activation functions. For each function, provide the formula and comment on its usage.

(b) Write down a widely used loss function for classification and explain why it is popular.

(c) Explain what adaptive learning methods are and how they help optimize and speed up neural network training.

Solution

(a)

- Sigmoid Activation Function

The output of the sigmoid function ranges from 0 to 1, making it suitable for estimating probabilities. For example, it can be used in the final layer of a binary classification task.

- ReLU (Rectified Linear Unit) Activation Function

ReLU outputs zero or a positive number, so some activations are exactly 0, which promotes sparse representations. Since it only requires a comparison, and possibly setting a number to zero, it is more computationally efficient than most other activation functions. A similar activation function is the Gaussian error linear unit (GELU), which has a very similar shape except that it is smooth at

- Tanh (Hyperbolic Tangent)

Advantages: Its output is zero-centered, which helps optimization by producing more balanced gradient updates. It is smooth, with a well-behaved gradient that works well in many cases. It also squashes extreme values into the range (-1, 1), reducing the influence of outlying data points.

Disadvantages: Outputs close to -1 or 1 have near-zero gradients, leading to slower learning (the vanishing gradient issue).
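To make the comparison in part (a) concrete, here is a small sketch of the three activation functions and the derivatives of the two saturating ones, written with the standard library only. The function names and the sample inputs are illustrative choices.

```python
import math

def sigmoid(z):
    # sigma(z) = 1 / (1 + exp(-z)), output in (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    # ReLU(z) = max(0, z), output in [0, inf)
    return max(0.0, z)

def tanh(z):
    # tanh(z) = (exp(z) - exp(-z)) / (exp(z) + exp(-z)), output in (-1, 1)
    return math.tanh(z)

def d_sigmoid(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def d_tanh(z):
    return 1.0 - math.tanh(z) ** 2

for z in (-5.0, 0.0, 5.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  relu={relu(z):.1f}  "
          f"tanh={tanh(z):+.4f}  d_sigmoid={d_sigmoid(z):.4f}  d_tanh={d_tanh(z):.4f}")
```

At z = ±5 both sigmoid and tanh are saturated and their derivatives are close to zero, which is the behaviour described under the disadvantages of tanh.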

(b)

Cross-Entropy Loss

It provides a smooth and continuous gradient, and it penalizes incorrect predictions more heavily when they are made with high confidence. It is well suited to multi-class classification, especially when used together with the softmax function.
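The following sketch illustrates the point about confident mistakes, using softmax followed by cross-entropy on a three-class example. The logits and the target index are made-up values for illustration.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability; the result is unchanged.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target):
    # Loss for one example: negative log-probability of the true class.
    return -math.log(probs[target])

target = 1  # hypothetical true class

mild_wrong = cross_entropy(softmax([1.0, 0.0, 0.0]), target)       # slightly favours class 0
confident_wrong = cross_entropy(softmax([4.0, 0.0, 0.0]), target)  # strongly favours class 0
confident_right = cross_entropy(softmax([0.0, 4.0, 0.0]), target)  # strongly favours class 1

print(f"mildly wrong:      {mild_wrong:.4f}")
print(f"confidently wrong: {confident_wrong:.4f}")
print(f"confidently right: {confident_right:.4f}")
```

The confidently wrong prediction receives the largest loss, and the confidently correct one the smallest.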

(c)

Adaptive learning-rate methods are variants of SGD that adjust the learning rate during training based on the magnitudes of the gradients, often separately for each parameter. This helps accelerate convergence and handle gradients of very different scales more effectively.
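As one example of such a method, the sketch below implements an AdaGrad-style update, in which each parameter's step is divided by the square root of its accumulated squared gradients. The badly scaled quadratic objective, the starting point, and the hyperparameters are assumptions chosen for illustration; the solution above does not name a specific method.

```python
import math

def loss(w):
    # Hypothetical objective with very different curvature per coordinate.
    return 0.5 * (100.0 * w[0] ** 2 + w[1] ** 2)

def grad(w):
    return [100.0 * w[0], w[1]]

def adagrad(w0, lr=0.5, steps=20, eps=1e-8):
    w = list(w0)
    accum = [0.0] * len(w)          # running sum of squared gradients
    history = [list(w)]
    for _ in range(steps):
        g = grad(w)
        for i in range(len(w)):
            accum[i] += g[i] ** 2
            # Per-parameter step: large past gradients shrink the step size.
            w[i] -= lr * g[i] / (math.sqrt(accum[i]) + eps)
        history.append(list(w))
    return history

history = adagrad([1.0, 1.0])
first_step = history[1]
print("after 1 step:", first_step)
print("initial loss:", loss(history[0]), "final loss:", loss(history[-1]))
```

Although the gradient along the first coordinate is 100 times larger, the per-parameter scaling makes both coordinates move by almost the same amount, so a single global learning rate does not have to be tuned to the steepest direction.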

Exercise 3.8

Level: * (Easy)

Exercise Types: Novel

Question

Consider a Feedforward Neural Network (FNN) with a single neuron, where the loss function is given by:

Solution

Gradient of

So, after 2 iterations,

Additional Expanded Question

What if the loss function were

Additional Solution

For

1. **Iteration 1** (

2. **Iteration 2** (

Clearly,

Exercise 3.9

Level: ** (Moderate)

Exercise Types: Modified

References: Source: Schonlau, M., Applied Statistical Learning. With Case Studies in Stata, Springer. ISBN 978-3-031-33389-7 (Chapter 14, page 318).

Question

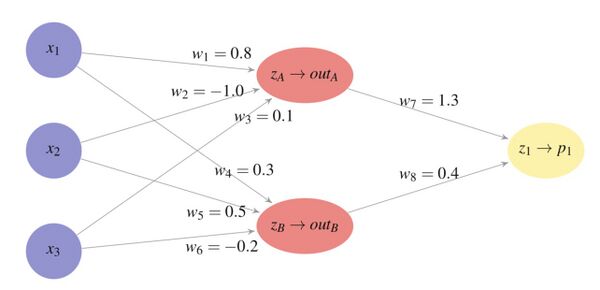

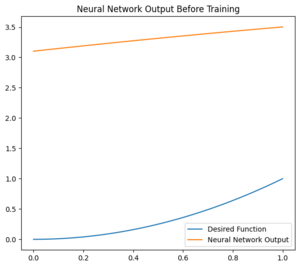

Consider the feedforward neural network with initial weights shown in Figure 3.9 (For simplicity, there are no biases). Use sigmoid activation functions (

(a): Compute a forward pass through the network for the observation (y,x1,x2,x3) = (1,3,-2,5). That is, compute the predicted probability

(b): Using the result from (a), make a backward pass to compute the revised w7 and w1. Use squared error loss:

Solution

(a)

(b)

Using

Exercise 3.10

Level: * (Easy)

Exercise Types: Novel

Question

What is the vanishing gradient problem, and what causes it? What is a common solution?

Answer

The vanishing gradient problem occurs when the gradients used to update the weights of a neural network become extremely small as they propagate backward through the layers. It is caused by saturating activation functions such as sigmoid and tanh, which compress their inputs into a narrow output range. Their derivatives are small (at most 0.25 for sigmoid) and close to zero for inputs far from zero. Backpropagation multiplies these derivatives layer by layer, so the gradient shrinks exponentially with depth. In finite-precision arithmetic, the product of many tiny factors can make the update for the early layers effectively 0, so those layers stop learning.

A common fix is to use the ReLU activation function, whose gradient is either 0 or 1. ReLU does not squash its outputs into a narrow range: for positive inputs its gradient is constant and equal to 1, so the chain of derivatives does not compound into an exponentially small value. Because gradients stay significant during backpropagation, weight updates are not hindered, which makes ReLU an effective remedy for vanishing gradients, especially in deep networks.
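A quick numerical illustration of the compounding effect: the sketch below multiplies the activation derivative across a chain of layers, ignoring the weight factors for simplicity (an assumption made to isolate the contribution of the activation function). The depth of 20 and the chosen inputs are illustrative.

```python
import math

def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0

def chained_grad(local_grad, z, depth):
    # Product of `depth` identical local derivatives (weights ignored).
    return local_grad(z) ** depth

# Best case for sigmoid: z = 0, where its derivative peaks at 0.25.
sig_20 = chained_grad(sigmoid_grad, 0.0, 20)
# ReLU with a positive pre-activation: derivative is exactly 1.
relu_20 = chained_grad(relu_grad, 1.0, 20)

print(f"sigmoid, 20 layers: {sig_20:.3e}")
print(f"ReLU,    20 layers: {relu_20:.3e}")
```

Even in its most favourable case the sigmoid chain shrinks by a factor of 4 per layer, while the ReLU chain is unchanged for active units.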

Exercise 3.11

Level: ** (Moderate)

Exercise Types: Novel

Question

Given a two-layer neural network with the following specifications:

Inputs: Represented as

Weights: First layer weight matrix:

Activations: The activation function for all layers is the sigmoid function, defined as:

Loss Function: The cross-entropy loss, given by

(a). Write out the forward propagation steps.

(b). Calculate the derivative of the loss with respect to weights in

Solution

(a). Forward Propagation Steps:

For the first layer pre-activation:

For the first layer activation:

For the second layer pre-activation:

For the second layer activation (output):

For the loss calculation:

(b). Derivative of the Loss

Gradients for the Second Layer

For the loss gradient w.r.t. output:

For the gradient of sigmoid output w.r.t. pre-activation:

For the gradient of pre-activation w.r.t. weights:

Combining the terms:

Gradients for the First Layer

For the gradient of loss w.r.t. first layer activation:

For the gradient of activation w.r.t. pre-activation:

For the gradient of pre-activation w.r.t. first layer weights:

Combining the terms:
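The chain-rule steps of Exercise 3.11 can be checked numerically. The sketch below assumes a concrete instance that is not taken from the exercise: 2 inputs, 2 hidden units, 1 output, no bias terms, and made-up weights and data. It compares the analytic gradients with central finite differences.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(W1, W2, x, y):
    z1 = [sum(w * xk for w, xk in zip(row, x)) for row in W1]  # first pre-activation
    a1 = [sigmoid(z) for z in z1]                              # first activation
    z2 = sum(w * a for w, a in zip(W2, a1))                    # second pre-activation
    y_hat = sigmoid(z2)                                        # output
    loss = -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))
    return a1, y_hat, loss

def analytic_grads(W1, W2, x, y):
    a1, y_hat, _ = forward(W1, W2, x, y)
    dz2 = y_hat - y                      # dL/dy_hat * dy_hat/dz2 simplifies to this
    gW2 = [dz2 * a for a in a1]          # dL/dW2
    dz1 = [dz2 * w * a * (1 - a) for w, a in zip(W2, a1)]
    gW1 = [[d * xk for xk in x] for d in dz1]  # dL/dW1
    return gW1, gW2

def numeric_grad_W1(W1, W2, x, y, j, k, h=1e-6):
    Wp = [row[:] for row in W1]
    Wm = [row[:] for row in W1]
    Wp[j][k] += h
    Wm[j][k] -= h
    return (forward(Wp, W2, x, y)[2] - forward(Wm, W2, x, y)[2]) / (2 * h)

def numeric_grad_W2(W1, W2, x, y, j, h=1e-6):
    Wp, Wm = W2[:], W2[:]
    Wp[j] += h
    Wm[j] -= h
    return (forward(W1, Wp, x, y)[2] - forward(W1, Wm, x, y)[2]) / (2 * h)

# Hypothetical values, for illustration only.
W1 = [[0.1, -0.2], [0.4, 0.3]]
W2 = [0.5, -0.6]
x, y = [1.0, 2.0], 1.0

gW1, gW2 = analytic_grads(W1, W2, x, y)
err_W1 = max(abs(gW1[j][k] - numeric_grad_W1(W1, W2, x, y, j, k))
             for j in range(2) for k in range(2))
err_W2 = max(abs(gW2[j] - numeric_grad_W2(W1, W2, x, y, j)) for j in range(2))
print("max |analytic - numeric| for W1:", err_W1)
print("max |analytic - numeric| for W2:", err_W2)
```

For a sigmoid output with cross-entropy loss, the product of the first two factors collapses to ŷ − y, which is why `dz2` needs no explicit division by ŷ(1 − ŷ).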

Exercise 3.12

Level: ** (Moderate)

Exercise Types: Novel

Question

Consider the **Bent Identity loss function**, a smooth approximation of the absolute loss, defined as:

The following identities may be useful:

where

Part (a): Compute the Gradient

Find the gradient of the loss function with respect to

Part (b): Implement Gradient Descent

Using your result from Part (a), write the update rules for **gradient descent** and implement the iterative optimization process.

Solution

Step 1: Computing the Gradient

We differentiate the loss function:

Differentiating with respect to

Step 2: Gradient Descent Update Rules

We update the parameters using gradient descent:

where

Step 3: Algorithm Implementation

Initialize w, b randomly
Set learning_rate η
For t = 1 to max_iterations:
    Compute predicted value: y_hat = w^T * x + b
    Compute gradients:
        grad_w = ((y_hat - y) / sqrt(1 + (y_hat - y)^2)) * x
        grad_b = (y_hat - y) / sqrt(1 + (y_hat - y)^2)
    Update parameters:
        w = w - η * grad_w
        b = b - η * grad_b
    Check for convergence
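A runnable version of the pseudocode might look like the sketch below. Several details are assumptions: the loss is taken to be sqrt(1 + (y_hat − y)²) − 1, which is consistent with the gradients above; the input is scalar; the gradients are averaged over a small made-up dataset; and the parameters start at zero instead of random values so that the run is reproducible.

```python
import math

def bent_identity_loss(r):
    # Assumed form of the loss for residual r = y_hat - y.
    return math.sqrt(1.0 + r * r) - 1.0

def fit(xs, ys, lr=0.1, max_iterations=5000, tol=1e-10):
    w, b = 0.0, 0.0  # deterministic start (the pseudocode initializes randomly)
    n = len(xs)
    for _ in range(max_iterations):
        grad_w, grad_b = 0.0, 0.0
        for x, y in zip(xs, ys):
            r = (w * x + b) - y                 # y_hat - y
            scale = r / math.sqrt(1.0 + r * r)  # derivative of the loss w.r.t. y_hat
            grad_w += scale * x / n
            grad_b += scale / n
        w -= lr * grad_w
        b -= lr * grad_b
        # Convergence check: stop once the gradient is negligible.
        if math.hypot(grad_w, grad_b) < tol:
            break
    return w, b

# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = fit(xs, ys)
mean_loss = sum(bent_identity_loss(w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
print(f"w={w:.4f} b={b:.4f} mean loss={mean_loss:.2e}")
```

Because the factor r / sqrt(1 + r²) is bounded by 1 in magnitude, a single large residual cannot dominate the update, which is the practical appeal of this loss over the squared error.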

Exercise 3.13

Level: *** (Difficult)

Exercise Types: Novel

Question

Consider training a deep neural network with momentum-based SGD on a quadratic approximation of the loss near a local minimum,

Solution

Let

where

In the eigenbasis of

Projecting the iteration onto the direction

Because

Small

When

Large

If

Overall, momentum alters the eigenvalues of

Exercise 3.14

Level: * (Easy)

Exercise Types: Novel

Question

Perform one step of backpropagation for the following network:

- Input layer: 2 neurons