DETECTING STATISTICAL INTERACTIONS FROM NEURAL NETWORK WEIGHTS

Introduction

Regression analysis is essential in many fields. However, because of computational complexity, practitioners were long left with only simple tools based on linear regression. With the growth in available computational power, practitioners can now use complicated models. The problem is then no longer complexity but interpretability. Neural networks often exhibit superior predictive power compared to traditional statistical regression methods, but their highly complicated structure prevents users from understanding the results. In this paper, we present one way of adding interpretability to neural networks.

Note that in this paper we consider only one specific type of neural network, the feed-forward neural network. Based on the methodology discussed here, interpretation methods can also be built for other types of networks.

Notations

Before we dive into the methodology, we define a few notations. Most of them are standard.

1. Vector: Vectors are denoted by bold lowercase letters, e.g. v, w

2. Matrix: Matrices are denoted by bold uppercase letters, e.g. V, W

3. Integer set: For a positive integer p [math]\displaystyle{ \in }[/math] Z, we define [p] := {1,2,3,...,p}

Interaction

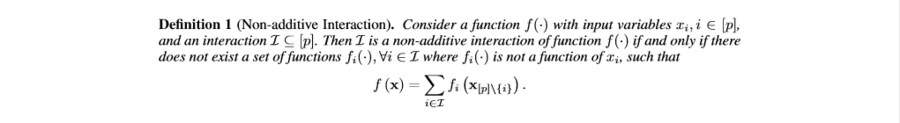

First of all, in order to explain the model, we need to be able to explain the interactions between variables and their effects on the output. Therefore, we define an 'interaction' between variables as below.

From the definition above, a function such as [math]\displaystyle{ x_1x_2 + \sin(x_3 + x_4 + x_5) }[/math] has the interactions [math]\displaystyle{ {[x_1, x_2]} }[/math] and [math]\displaystyle{ {[x_3, x_4, x_5]} }[/math]. We call the latter a 3-way interaction.

Note that, from the definition above, a d-way interaction can exist only if all of its (d-1)-way sub-interactions exist. For example, the 3-way interaction above implies the 2-way interactions [math]\displaystyle{ {[3,4]} }[/math], [math]\displaystyle{ {[4,5]} }[/math] and [math]\displaystyle{ {[3,5]} }[/math].
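The relationship between a d-way interaction and its (d-1)-way sub-interactions can be illustrated with a short sketch. The function name `sub_interactions` is hypothetical and only serves this example; features are represented by their indices in [p].

```python
from itertools import combinations


def sub_interactions(interaction):
    """Return all (d-1)-way subsets of a d-way interaction as sorted tuples."""
    features = sorted(interaction)
    return list(combinations(features, len(features) - 1))


# The 3-way interaction among x_3, x_4, x_5 from the example above.
three_way = {3, 4, 5}
print(sub_interactions(three_way))  # [(3, 4), (3, 5), (4, 5)]
```

If any one of these pairs were absent as an interaction, the 3-way interaction could not exist either.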

One thing to keep in mind is that, for models like neural networks, most interactions are formed within the hidden layers. This means that we need a proper way of measuring interaction strength.

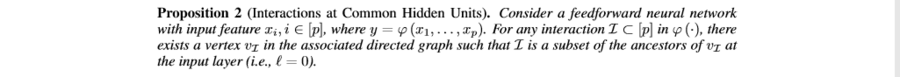

The key observation is that, for any kind of interaction, the interacting features are all ancestors of some hidden unit in some hidden layer. In graph-theoretical language, the network can be viewed as an associated directed graph, and for any interaction [math]\displaystyle{ \Gamma \subseteq [p] }[/math], there exists at least one vertex that has all of the features of [math]\displaystyle{ \Gamma }[/math] as ancestors. The statement can be made rigorous as follows:

The statement above guarantees that we can measure interaction strengths at any hidden layer. For example, if we want to study interactions at a specific hidden layer, we now know that the corresponding vertices exist between that hidden layer and the output layer. Therefore, all we need to do is find an appropriate measure that summarizes the information between those two layers.
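The ancestor property can be made concrete with a minimal sketch. It assumes the network is given as a list of weight matrices (nested lists) and that an exactly-zero weight means the edge is absent; both are simplifications made for illustration, and the names `input_ancestors` and `units_covering` are hypothetical.

```python
def input_ancestors(weights):
    """Return, per layer, the set of input features reaching each unit.

    weights[l][j][k] is the (hypothetical) weight from unit k of the previous
    layer to unit j of layer l + 1. Features are numbered 1..p, as in [p].
    """
    n_inputs = len(weights[0][0])
    prev = [{i + 1} for i in range(n_inputs)]  # each input reaches itself
    per_layer = []
    for W in weights:
        current = []
        for row in W:
            reached = set()
            for k, w in enumerate(row):
                if w != 0:  # assumption: an exactly-zero weight means "no edge"
                    reached |= prev[k]
            current.append(reached)
        per_layer.append(current)
        prev = current
    return per_layer


def units_covering(interaction, per_layer):
    """List (layer, unit) pairs, both 1-indexed, whose ancestors include the interaction."""
    return [
        (l + 1, j + 1)
        for l, layer in enumerate(per_layer)
        for j, ancestors in enumerate(layer)
        if set(interaction) <= ancestors
    ]


# Toy network: 4 inputs -> 2 hidden units -> 1 hidden unit.
toy_weights = [
    [[1.0, 0.5, 0.0, 0.0], [0.0, 0.0, 2.0, -1.0]],
    [[1.0, 1.0]],
]
ancestors = input_ancestors(toy_weights)
print(units_covering({1, 2}, ancestors))  # [(1, 1), (2, 1)]
print(units_covering({1, 3}, ancestors))  # [(2, 1)]
```

In this toy network, features 1 and 3 first share a common descendant in the second layer, so an interaction between them could only be formed from that unit onward.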

Before doing so, consider a neural network with a single hidden layer. A single hidden unit i can model up to [math]\displaystyle{ 2^{\|\mathbf{W}_{i,:}\|_0} }[/math] interactions, where [math]\displaystyle{ \|\mathbf{W}_{i,:}\|_0 }[/math] is the number of nonzero weights entering that unit. This means that the search space may be far too large for multi-layered networks. Therefore, we need a reasonable way of approximating our search space.
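To get a feeling for how quickly this search space grows, the following sketch counts the candidate feature subsets at one hidden unit. It assumes the norm in the exponent counts the nonzero incoming weights of the unit; the function names and the example row are hypothetical.

```python
def count_feature_subsets(row):
    """Number of subsets of the features with nonzero weight into one unit."""
    k = sum(1 for w in row if w != 0)
    return 2 ** k


def count_proper_interactions(row):
    """Same count, excluding the empty set and the k single-feature subsets."""
    k = sum(1 for w in row if w != 0)
    return 2 ** k - k - 1


# One row of a first-layer weight matrix: three of four weights are nonzero.
row = [0.3, 0.0, -1.2, 0.7]
print(count_feature_subsets(row))      # 8
print(count_proper_interactions(row))  # 4

# A unit with 20 nonzero incoming weights already has over a million subsets.
print(count_feature_subsets([1.0] * 20))  # 1048576
```

The exponential growth in the number of nonzero weights is what makes an exhaustive search impractical.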