MULTI-VIEW DATA GENERATION WITHOUT VIEW SUPERVISION

This page contains a summary of the paper "Multi-View Data Generation without Supervision" by Mickael Chen, Ludovic Denoyer, Thierry Artieres. It was published at the International Conference on Learning Representations (ICLR) in 2018 in Poster Category.

Introduction

Motivation

High Dimensional Generative models have seen a surge of interest off late with introduction of Variational auto-encoders and generative adversarial networks. This paper focuses on a particular problem where one aims at generating samples corresponding to a number of objects under various views. The distribution of the data is assumed to be driven by two independent latent factors: the content, which represents the intrinsic features of an object, and the view, which stands for the settings of a particular observation of that object (for example, the different angles of the same object). The paper proposes two models using this disentanglement of latent space - a generative model and a conditional variant of the same.

Related Work

The problem of handling multi-view inputs has mainly been studied from the predictive point of view where one wants, for example, to learn a model able to predict/classify over multiple views of the same object (Su et al. (2015); Qi et al. (2016)). These approaches generally involve (early or late) fusion of the different views at a particular level of a deep architecture. Recent studies have focused on identifying factors of variations from multiview datasets. The underlying idea is to consider that a particular data sample may be thought as the mix of a content information (e.g. related to its class label like a given person in a face dataset) and of a side information, the view, which accounts for factors of variability (e.g. exposure, viewpoint, with/wo glasses...). So, all the samples of same class contain the same content but different view. A number of approaches have been proposed to disentangle the content from the view, also referred as the style in some papers (Mathieu et al. (2016); Denton & Birodkar (2017)). The two common limitations the earlier approaches pose - as claimed by the paper - are that (i) they usually consider discrete views that are characterized by a domain or a set of discrete (binary/categorical) attributes (e.g. face with/wo glasses, the color of the hair, etc.) and could not easily scale to a large number of attributes or to continuous views. (ii) most models are trained using view supervision (e.g. the view attributes), which of course greatly helps learning such model, yet prevents their use on many datasets where this information is not available.

Contributions

The contributions that authors claim are the following: (i) A new generative model able to generate data with various content and high view diversity using a supervision on the content information only. (ii) Extend the generative model to a conditional model that allows generating new views over any input sample.

Paper Overview

Background

The paper uses concept of the poplar GAN (Generative Adverserial Networks) proposed by Goodfellow et al.(2014).

GENERATIVE ADVERSARIAL NETWORK:

Generative adversarial networks (GANs) are deep neural net architectures comprised of two nets, pitting one against the other (thus the “adversarial”). GANs were introduced in a paper by Ian Goodfellow and other researchers at the University of Montreal, including Yoshua Bengio, in 2014. Referring to GANs, Facebook’s AI research director Yann LeCun called adversarial training “the most interesting idea in the last 10 years in ML.”

Let us denote [math]\displaystyle{ X }[/math] an input space composed of multidimensional samples x e.g. vector, matrix or tensor. Given a latent space [math]\displaystyle{ R^n }[/math] and a prior distribution [math]\displaystyle{ p_z(z) }[/math] over this latent space, any generator function [math]\displaystyle{ G : R^n → X }[/math] defines a distribution [math]\displaystyle{ p_G }[/math] on [math]\displaystyle{ X }[/math] which is the distribution of samples G(z) where [math]\displaystyle{ z ∼ p_z }[/math]. A GAN defines, in addition to G, a discriminator function D : X → [0; 1] which aims at differentiating between real inputs sampled from the training set and fake inputs sampled following [math]\displaystyle{ p_G }[/math], while the generator is learned to fool the discriminator D. Usually both G and D are implemented with neural networks. The objective function is based on the following adversarial criterion:

where px is the empirical data distribution on X . It has been shown in Goodfellow et al. (2014) that if G∗ and D∗ are optimal for the above criterion, the Jensen-Shannon divergence between [math]\displaystyle{ p_{G∗} }[/math] and the empirical distribution of the data [math]\displaystyle{ p_x }[/math] in the dataset is minimized, making GAN able to estimate complex continuous data distributions.

CONDITIONAL GENERATIVE ADVERSARIAL NETWORK:

In the Conditional GAN (CGAN), the generator learns to generate a fake sample with a specific condition or characteristics (such as a label associated with an image or more detailed tag) rather than a generic sample from unknown noise distribution. Now, to add such a condition to both generator and discriminator, we will simply feed some vector y, into both networks. Hence, both the discriminator D(X,y) and generator G(z,y) are jointly conditioned to two variables, z or X and y.

Now, the objective function of CGAN is:

The paper also suggests that, many studies have reported that on when dealing with high-dimensional input spaces, CGAN tends to collapse the modes of the data distribution,mostly ignoring the latent factor z and generating x only based on the condition y, exhibiting an almost deterministic behaviour.

Generative Multi-View Model

Objective and Notations: The distribution of the data x ∈ X is assumed to be driven by two latent factors: a content factor denoted c which corresponds to the invariant proprieties of the object,and a view factor denoted v which corresponds to the factor of variations. Typically, if X is the space of people’s faces, c stands for the intrinsic features of a person’s face while v stands for the transient features and the viewpoint of a particular photo of the face, including the photo exposure and additional elements like a hat, glasses, etc.... These two factors c and v are assumed to be independent and these are the factors needed to learn.

The paper defines two tasks here to be done: (i) Multi View Generation: we want to be able to sample over X by controlling the two factors c and v. Given two priors, p(c) and p(v), this sampling will be possible if we are able to estimate p(x|c, v) from a training set. (ii) Conditional Multi-View Generation: the second objective is to be able to sample different views of a given object. Given a prior p(v), this sampling will be achieved by learning the probability p(c|x), in addition to p(x|c, v). Ability to learn generative models able to generate from a disentangled latent space would allow controlling the sampling on the two different axis, the content and the view. The authors claim the originality of work is to learn such generative models without using any view labelling information.

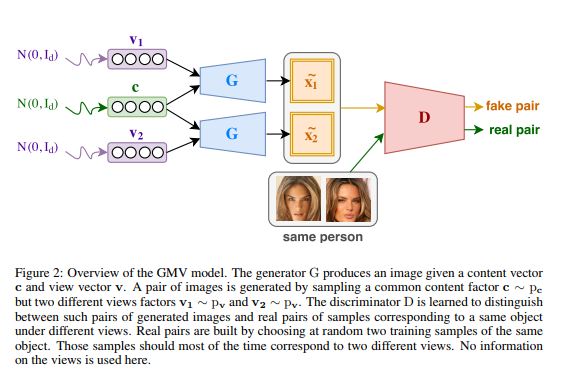

Generative Multi-view Model:

Let us consider two prior distributions over the content and view factors denoted as [math]\displaystyle{ p_c }[/math] and [math]\displaystyle{ pv }[/math], corresponding to the prior distribution over content and latent factors. Moreover, we consider a generator G that implements a distribution over samples x, denoted as [math]\displaystyle{ p_G }[/math] by computing G(c, v) with [math]\displaystyle{ c ∼ p_c }[/math] and [math]\displaystyle{ v ∼ p_v }[/math]. Objective is to learn this generator so that its first input c corresponds to the content of the generated sample while its second input v, captures the underlying view of the sample. Doing so would allow one to control the output sample of the generator by tuning its content or its view (i.e. c and v).