Zero-Shot Visual Imitation

This page contains a summary of the paper "Zero-Shot Visual Imitation" by Pathak, D., Mahmoudieh, P., Luo, G., Agrawal, P. et al. It was published at the International Conference on Learning Representations (ICLR) in 2018.

Introduction

The dominant paradigm for imitation learning relies on strong supervision of expert actions to learn both what and how to imitate for a given task. For example, in robotics, Learning from Demonstration (LfD) (Argall et al., 2009; Ng & Russell, 2000; Pomerleau, 1989; Schaal, 1999) requires an expert to manually move robot joints (kinesthetic teaching) or teleoperate the robot to teach a desired task. The expert generally provides multiple demonstrations of a specific task at training time, which the agent converts into observation-action pairs and then distills into a policy for performing the task. For robots, this heavily supervised process is tedious and does not scale, because every new task requires a new set of demonstrations.

Observational Learning (Bandura & Walters, 1977), a term from psychology, suggests a more general formulation in which the expert communicates what needs to be done (as opposed to how it is to be done) by providing observations of the desired world states as video or a sequence of images. This is the setting the paper proposes. Although it is a harder learning problem, it is potentially more useful because the expert can communicate a large number of tasks to the agent quickly and easily.

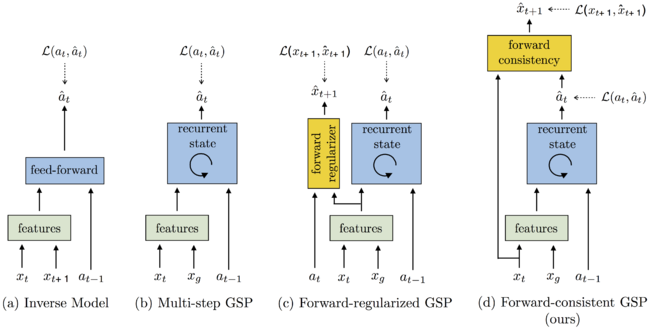

This paper follows prior work (Agrawal et al., 2016; Levine et al., 2016; Pinto & Gupta, 2016) in which an agent first explores the environment independently and then distills its observations into goal-directed skills. The word 'skill' denotes a function that predicts the sequence of actions that takes the agent from the current observation to the goal. This function is called a "goal-conditioned skill policy" (GSP). It is learned by re-labeling states that the agent has visited as goals and using the actions it took as prediction targets. At inference time, the GSP recreates the task step by step, given the goal observations from the demonstration.
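The re-labeling idea described above can be illustrated with a minimal sketch. It assumes a trajectory stored as a list of observations and the list of actions taken between them. The function name `relabel_trajectory` and the `max_horizon` parameter are hypothetical and are not taken from the paper.

```python
from typing import Any, List, Sequence, Tuple

# (current observation, goal observation, actions that led from one to the other)
Sample = Tuple[Any, Any, List[Any]]


def relabel_trajectory(
    observations: Sequence[Any],
    actions: Sequence[Any],
    max_horizon: int,
) -> List[Sample]:
    """Turn one exploration trajectory into goal-conditioned training samples.

    Every later state reached within `max_horizon` steps is treated as a goal,
    and the actions actually taken in between become the prediction target.
    This is an illustrative assumption, not the paper's exact procedure.
    """
    if len(observations) != len(actions) + 1:
        raise ValueError("expected one more observation than actions")

    samples: List[Sample] = []
    for i in range(len(actions)):
        # Last index that can serve as a goal for start index i.
        last = min(i + max_horizon, len(actions))
        for g in range(i + 1, last + 1):
            samples.append((observations[i], observations[g], list(actions[i:g])))
    return samples


if __name__ == "__main__":
    for sample in relabel_trajectory(["x1", "x2", "x3"], ["a1", "a2"], max_horizon=2):
        print(sample)
```

No expert labels are needed in this sketch: the supervision comes entirely from the agent's own exploration data.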

Learning the Goal-Conditioned Skill Policy (GSP)

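Let [math]\displaystyle{ S : \{x_1, a_1, x_2, a_2, ..., x_T\} }[/math] be the sequence of observations and actions generated by the agent while it explores the environment using an exploration policy [math]\displaystyle{ a = π_E(s) }[/math]. The GSP takes a pair of observations [math]\displaystyle{ (x_i, x_g) }[/math] as input and outputs the sequence of actions [math]\displaystyle{ (\overrightarrow{a}_τ : a_1, a_2, ..., a_K) }[/math] required to reach the goal observation [math]\displaystyle{ (x_g) }[/math] from the current observation [math]\displaystyle{ (x_i) }[/math]:

[math]\displaystyle{ \overrightarrow{a}_τ =π (x_i, x_g; θ_π) }[/math]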
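To make the step-by-step use of the GSP at inference time concrete, here is a toy sketch. It assumes a one-dimensional world where an observation is an integer position and an action is a step of +1 or -1. The hand-written `toy_policy` stands in for a learned GSP, and all names here are hypothetical rather than the paper's implementation.

```python
from typing import Callable, List, Sequence, Tuple


def toy_policy(current: int, goal: int) -> List[int]:
    """Stand-in for a learned GSP: returns the unit steps from current to goal."""
    step = 1 if goal > current else -1
    return [step] * abs(goal - current)


def follow_demonstration(
    start: int,
    goal_observations: Sequence[int],
    policy: Callable[[int, int], List[int]],
    step_fn: Callable[[int, int], int],
) -> Tuple[int, List[int]]:
    """Visit each demonstrated goal observation in order using the policy."""
    current = start
    executed: List[int] = []
    for goal in goal_observations:
        # Ask the policy for the actions from the current observation to this goal.
        for action in policy(current, goal):
            current = step_fn(current, action)
            executed.append(action)
    return current, executed


if __name__ == "__main__":
    final, executed = follow_demonstration(0, [2, 5, 3], toy_policy, lambda x, a: x + a)
    print(final, executed)
```

The demonstration supplies only the goal observations (here 2, 5, 3). The actions are produced entirely by the policy.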