stat946W25

Record your contributions here [1]

Use the following notations:

C: You have written a summary/critique on the paper.

Your feedback on presentations

Topic 12: State Space Models

Introduction

State Space Models (SSMs) have been introduced as powerful alternatives to traditional sequence modeling approaches. They perform well across various modalities, including time series analysis, audio generation, and image processing, and they can capture long-range dependencies more efficiently than attention-based models. However, SSMs initially struggled to match the performance of Transformers on language modeling tasks. To address these challenges, architectural advances such as the Structured State Space Model (S4) were introduced. S4 succeeded on long-range reasoning tasks and enabled more efficient computation while preserving the theoretical strengths of SSMs. Its implementation, however, remains complex and computationally demanding, so further research led to simplified variants such as the Diagonal State Space Model (DSS), which achieves comparable performance with a more straightforward formulation. In parallel, hybrid approaches such as the H3 model combine SSMs with attention mechanisms to close the remaining gap with Transformers. "Hybrid" here means that, for example, the H3 authors replace almost all of the attention layers in a Transformer with SSM-based layers while keeping only a few attention layers. More recently, models such as Mamba have pushed the boundaries of SSMs by making the state-space parameters functions of the input, which allows more flexible and adaptive information propagation. As research continues to resolve the remaining challenges, the case for substituting attention-based architectures with SSMs grows stronger, and SSMs will likely play a crucial role in the next generation of sequence modeling frameworks.

Core concepts

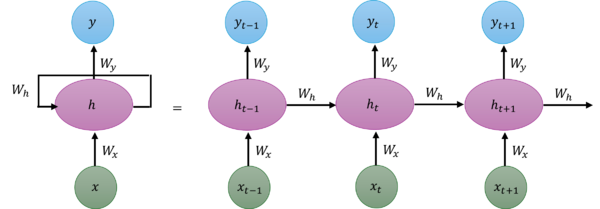

To understand State Space Models better, let's first recall how Recurrent Neural Networks (RNNs) work. A simple RNN updates its hidden state and produces an output using the following formulas:

[math]\displaystyle{ h(t) = \sigma(W_h h(t-1) + W_x x(t)) }[/math]

[math]\displaystyle{ y(t) = W_y h(t) }[/math]

Where:

- [math]\displaystyle{ h(t) }[/math] is the hidden state at time t

- [math]\displaystyle{ x(t) }[/math] is the input

- [math]\displaystyle{ y(t) }[/math] is the output

- [math]\displaystyle{ W_h }[/math], [math]\displaystyle{ W_x }[/math], and [math]\displaystyle{ W_y }[/math] are weight matrices

- [math]\displaystyle{ \sigma }[/math] is a non-linear activation function
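To make the RNN update concrete, here is a minimal NumPy sketch of the two equations above. It assumes a zero initial hidden state and [math]\displaystyle{ \sigma = \tanh }[/math]; the function name `rnn_forward` and the random weights are hypothetical and only for illustration.

```python
import numpy as np

def rnn_forward(x_seq, W_h, W_x, W_y, sigma=np.tanh):
    """Simple RNN: h(t) = sigma(W_h h(t-1) + W_x x(t)), y(t) = W_y h(t).

    x_seq has shape (T, input_dim). The initial hidden state is assumed
    to be zero. Returns outputs of shape (T, output_dim).
    """
    h = np.zeros(W_h.shape[0])
    outputs = []
    for x_t in x_seq:
        h = sigma(W_h @ h + W_x @ x_t)  # non-linear hidden-state update
        outputs.append(W_y @ h)         # linear read-out of the hidden state
    return np.stack(outputs)

# Usage with small random (hypothetical) weights
rng = np.random.default_rng(0)
T, d_in, d_h, d_out = 5, 3, 4, 2
W_h = 0.5 * rng.normal(size=(d_h, d_h))
W_x = rng.normal(size=(d_h, d_in))
W_y = rng.normal(size=(d_out, d_h))
x_seq = rng.normal(size=(T, d_in))
y = rnn_forward(x_seq, W_h, W_x, W_y)
print(y.shape)  # (5, 2)
```

The activation is applied inside the loop at every step, which is exactly what makes the hidden-state update non-linear.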

State Space Models come from control theory. They define a linear mapping from an input signal u(t) to an output signal y(t) through a state variable x(t), and in discrete time they are formulated as:

[math]\displaystyle{ x(t) = A x(t-1) + B u(t) }[/math]

[math]\displaystyle{ y(t) = C x(t) + D u(t) }[/math]

Where:

- [math]\displaystyle{ x(t) }[/math] represents the hidden state (equivalent to h(t) in RNN notation)

- [math]\displaystyle{ u(t) }[/math] is the input (equivalent to x(t) in RNN notation)

- [math]\displaystyle{ y(t) }[/math] is the output

- [math]\displaystyle{ A, B, C }[/math], and [math]\displaystyle{ D }[/math] are parameter matrices
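Because the SSM update has no activation function, the recurrence can be unrolled. Assuming a zero initial state, [math]\displaystyle{ x(t) = \sum_{k=0}^{t} A^k B u(t-k) }[/math], and therefore [math]\displaystyle{ y(t) = \sum_{k=0}^{t} C A^k B u(t-k) + D u(t) }[/math]: the output is a convolution of the input with the kernel [math]\displaystyle{ C A^k B }[/math], plus the [math]\displaystyle{ D u(t) }[/math] term. The sketch below computes the outputs both ways and checks that they agree; the function names `ssm_forward` and `ssm_unrolled` and the random parameters are hypothetical.

```python
import numpy as np

def ssm_forward(u_seq, A, B, C, D):
    """Linear SSM recurrence: x(t) = A x(t-1) + B u(t), y(t) = C x(t) + D u(t).

    u_seq has shape (T, input_dim); the initial state is assumed to be zero.
    """
    x = np.zeros(A.shape[0])
    outputs = []
    for u_t in u_seq:
        x = A @ x + B @ u_t             # linear state update, no activation
        outputs.append(C @ x + D @ u_t)
    return np.stack(outputs)

def ssm_unrolled(u_seq, A, B, C, D):
    """Same outputs via y(t) = sum_{k=0}^{t} C A^k B u(t-k) + D u(t)."""
    T = len(u_seq)
    # Precompute the kernel K_k = C A^k B for k = 0, ..., T-1
    kernels = []
    A_pow = np.eye(A.shape[0])
    for _ in range(T):
        kernels.append(C @ A_pow @ B)
        A_pow = A @ A_pow
    outputs = []
    for t in range(T):
        y_t = D @ u_seq[t]                      # direct feedthrough term
        for k in range(t + 1):
            y_t = y_t + kernels[k] @ u_seq[t - k]  # convolution sum
        outputs.append(y_t)
    return np.stack(outputs)

# Usage with small random (hypothetical) parameters
rng = np.random.default_rng(1)
T, d_in, n, d_out = 8, 2, 3, 1
A = 0.4 * rng.normal(size=(n, n))
B = rng.normal(size=(n, d_in))
C = rng.normal(size=(d_out, n))
D = rng.normal(size=(d_out, d_in))
u_seq = rng.normal(size=(T, d_in))
y_rec = ssm_forward(u_seq, A, B, C, D)
y_conv = ssm_unrolled(u_seq, A, B, C, D)
print(np.allclose(y_rec, y_conv))  # True
```

The recurrent form processes one step at a time, while the unrolled form exposes all outputs as a single convolution; this equivalence holds only because the state update is linear.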

Comparing the two formulations reveals their similarity: an RNN is essentially a non-linear extension of a state space model. The main differences are:

- SSMs use a linear transition between states, while RNNs apply a non-linearity through the activation function [math]\displaystyle{ \sigma }[/math]

- SSMs originate in control theory, where the matrices are typically derived from the physical equations of the system, while in machine learning these matrices are learned from data

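- The SSM output equation includes a direct term [math]\displaystyle{ D u(t) }[/math], which acts as a skip connection from input to output; the RNN output has no such term, and it is also commonly left out in control problems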
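The first difference, linearity, can be checked numerically with the superposition principle: for a linear system with zero initial state, the response to [math]\displaystyle{ a u_1 + b u_2 }[/math] equals [math]\displaystyle{ a }[/math] times the response to [math]\displaystyle{ u_1 }[/math] plus [math]\displaystyle{ b }[/math] times the response to [math]\displaystyle{ u_2 }[/math]. This is a minimal NumPy sketch under stated assumptions: the parameters are random and hypothetical, `run` and `superposition_holds` are illustrative helpers, and the RNN-style variant simply wraps the state update in tanh while keeping the same output equation, so the activation is the only difference between the two.

```python
import numpy as np

rng = np.random.default_rng(0)
n, T = 4, 6
# Hypothetical random parameters shared by both variants
A = 0.3 * rng.normal(size=(n, n))
B = rng.normal(size=(n, 1))
C = rng.normal(size=(1, n))
D = rng.normal(size=(1, 1))

def run(u_seq, nonlinear):
    """x(t) = f(A x(t-1) + B u(t)), y(t) = C x(t) + D u(t), zero initial state.

    f = tanh gives an RNN-style update; f = identity gives the linear SSM.
    """
    x = np.zeros(n)
    outputs = []
    for u_t in u_seq:
        pre = A @ x + B @ u_t
        x = np.tanh(pre) if nonlinear else pre
        outputs.append(C @ x + D @ u_t)
    return np.stack(outputs)

def superposition_holds(nonlinear, a=2.0, b=-0.5):
    """Check whether response(a*u1 + b*u2) == a*response(u1) + b*response(u2)."""
    u1 = rng.normal(size=(T, 1))
    u2 = rng.normal(size=(T, 1))
    lhs = run(a * u1 + b * u2, nonlinear)
    rhs = a * run(u1, nonlinear) + b * run(u2, nonlinear)
    return np.allclose(lhs, rhs)

print("linear SSM:", superposition_holds(nonlinear=False))
print("RNN-style (tanh):", superposition_holds(nonlinear=True))
```

The linear variant should satisfy superposition up to floating-point error, while the tanh variant should not, which is the property that lets SSMs be unrolled into a convolution and RNNs cannot.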