a neural representation of sketch drawings

Introduction


Motivation

The authors offer two motivations for their work:

- To translate between languages for which large parallel corpora do not exist

- To provide a strong lower bound on the performance that any semi-supervised machine translation system should achieve

Note: What is a corpus (plural corpora)?

In linguistics, a corpus (plural corpora), or text corpus, is a structured set of texts (nowadays usually stored and processed electronically). Corpora are used for statistical analysis and hypothesis testing, for checking occurrences, and for validating linguistic rules within a specific language territory. A corpus may contain texts in a single language (monolingual corpus) or in multiple languages (multilingual corpus).

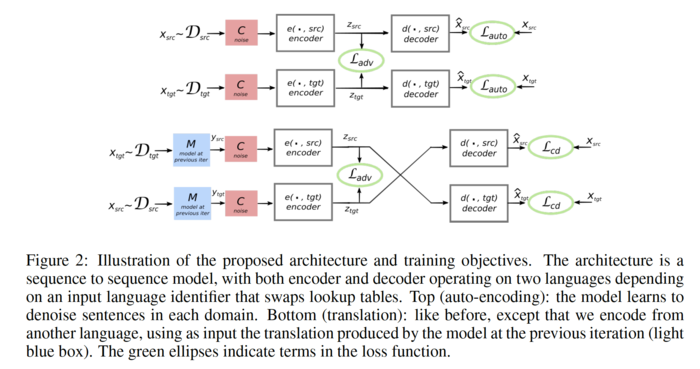

Overview of unsupervised translation system

The unsupervised translation scheme has the following outline:

- The word-vector embeddings of the source and target languages are aligned in an unsupervised manner.

- Sentences from the source and target language are mapped to a common latent vector space by an encoder, and then mapped to probability distributions over

Encoder

The encoder [math]\displaystyle{ E }[/math] reads a sequence of word vectors [math]\displaystyle{ (z_1,\ldots, z_m) \in \mathcal{Z}' }[/math] and outputs a

Decoder

The decoder is a mono-directional LSTM that accepts a sequence of hidden states [math]\displaystyle{ h=(h_1,\ldots, h_m) \in H' }[/math] from the latent space and a

Overview of objective

The objective function is the sum of:

- The de-noising auto-encoder loss,

- The translation loss, and

- The adversarial loss.

I shall describe these in the following sections.
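As a minimal sketch of how such a summed objective might be assembled, the snippet below combines three scalar loss values, one for each of the loss sections that follow. The function name `total_objective` and the weights `w_auto`, `w_trans`, and `w_adv` are illustrative assumptions, not values taken from the paper.

```python
def total_objective(auto_loss, translation_loss, adversarial_loss,
                    w_auto=1.0, w_trans=1.0, w_adv=1.0):
    """Weighted sum of the three loss terms.

    The weights are hypothetical hyper-parameters; with all weights set to 1
    this reduces to a plain sum of the terms.
    """
    return (w_auto * auto_loss
            + w_trans * translation_loss
            + w_adv * adversarial_loss)


# Example: equal weights give the plain sum of the three terms.
combined = total_objective(1.0, 2.0, 3.0)
```

Keeping the weights as explicit arguments makes it easy to switch a term off (weight 0) when checking how much each term contributes.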

De-noising Auto-encoder Loss

A de-noising auto-encoder is a function optimized to map a corrupted sample from some dataset to the original un-corrupted sample. De-noising auto-encoders were
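To make the idea of a "corrupted sample" concrete, here is a minimal sketch of one possible noise function for sentences. It assumes the corruption consists of randomly dropping words and lightly shuffling word order. The function name `corrupt` and the default values of `p_drop` and `k` are assumptions chosen for illustration.

```python
import random


def corrupt(words, p_drop=0.1, k=3, rng=random):
    """Return a noisy copy of `words` (a list of tokens).

    p_drop and k are illustrative hyper-parameters (assumptions).
    """
    # Step 1: drop each word independently with probability p_drop.
    kept = [w for w in words if rng.random() >= p_drop]
    if not kept and words:
        # Keep at least one token so the input is never empty.
        kept = [rng.choice(words)]

    # Step 2: local shuffle. Adding a random offset in [0, k + 1) to each
    # position and sorting by the result moves every word at most k places.
    keys = [i + rng.random() * (k + 1) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=keys.__getitem__)
    return [kept[i] for i in order]


noisy = corrupt("the cat sat on the mat".split(), rng=random.Random(0))
```

A de-noising auto-encoder would then be trained to recover the original word list from `noisy`.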

Translation Loss

To compute the translation loss, we sample a sentence from one of the languages, translate it with the encoder and decoder of the previous epoch, and then corrupt its
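The data flow for this loss can be sketched as follows. The sketch assumes that the reconstruction target is the originally sampled sentence. The helpers `translate_prev` (standing in for the previous epoch's encoder and decoder) and `corrupt_fn` (standing in for the noise function) are hypothetical placeholders, not part of any real library.

```python
def make_translation_pair(sentence, translate_prev, corrupt_fn):
    """Build one (input, target) training pair for the translation loss.

    sentence       : list of tokens sampled from one language
    translate_prev : hypothetical callable wrapping the previous epoch's model
    corrupt_fn     : hypothetical noise function (tokens -> tokens)
    """
    rough_translation = translate_prev(sentence)  # tokens in the other language
    noisy_input = corrupt_fn(rough_translation)
    # Assumption: the current model is trained to map noisy_input back to
    # the original sentence.
    return noisy_input, sentence


# Toy usage: a word-by-word dictionary stands in for the previous model,
# and dropping the last token stands in for random noise.
toy_dict = {"the": "le", "cat": "chat", "sleeps": "dort"}


def toy_translate(tokens):
    return [toy_dict.get(t, t) for t in tokens]


def drop_last(tokens):
    return tokens[:-1]


noisy_input, target = make_translation_pair(
    ["the", "cat", "sleeps"], toy_translate, drop_last)
```

Because the pair is built from the model's own earlier output, no parallel sentences are needed at any point.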

Adversarial Loss

The intuition underlying the latent space is that it should encode the meaning of a sentence in a language-independent way. Accordingly, the authors introduce an
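One way to picture an adversarial term of this kind is as a two-player game: a discriminator tries to guess which language a latent vector came from, while the encoder is rewarded when the discriminator guesses wrong. The sketch below assumes a binary discriminator that outputs the probability `p_source` that a latent vector came from the source language. Both function names are illustrative assumptions.

```python
import math


def discriminator_loss(p_source, is_source):
    """Cross-entropy for the discriminator: low when it assigns high
    probability to the true language of the encoded sentence."""
    p_true = p_source if is_source else 1.0 - p_source
    return -math.log(p_true)


def encoder_adversarial_loss(p_source, is_source):
    """Loss for the encoder: low when the discriminator assigns high
    probability to the wrong language, i.e. when it is fooled."""
    p_wrong = 1.0 - p_source if is_source else p_source
    return -math.log(p_wrong)


# A source-language sentence that the discriminator identifies confidently:
d_loss = discriminator_loss(0.9, is_source=True)        # small
e_loss = encoder_adversarial_loss(0.9, is_source=True)  # large
```

When the discriminator can do no better than `p_source = 0.5`, both losses equal `log 2`, which corresponds to latent vectors that carry no usable language signal.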

Objective Function

Combining the above-described terms, we can write the overall objective function. Let [math]\displaystyle{ Q_S }[/math] denote the monolingual dataset for the

Validation

The authors' aim is for their method to be completely unsupervised, so they do not use parallel corpora even for the selection of hyper-parameters. Instead, they validate

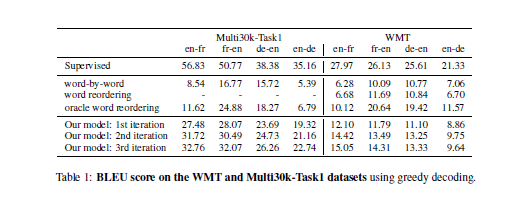

Experimental Procedure and Results

The authors test their method on four data sets. The first is from the English-French translation task of the Workshop on Machine Translation 2014 (WMT14). This data set

Result Figures

Commentary

This paper's results are impressive: that it is even possible to translate between languages without parallel data suggests that languages are more similar than we might

Future Work

The principle of performing unsupervised translation by starting with a rough but reasonable guess, and then improving it using knowledge of the structure of target

References

- Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. "Neural machine translation by jointly learning to align and translate." arXiv preprint arXiv:1409.0473 (2014).