
Presented by

Yan Yu Chen, Qisi Deng, Hengxin Li, Bochao Zhang

Introduction

In the past two decades, due to their surprising classification capability, the support vector machine (SVM) [1] and its variants [2]–[4] have been extensively used in classification applications. The least square support vector machine (LS-SVM) and the proximal support vector machine (PSVM) have been widely used in binary classification applications. The conventional LS-SVM and PSVM cannot be used in regression and multiclass classification applications directly, although variants of LS-SVM and PSVM have been proposed to handle such cases.

Motivation

Back-propagation (BP) learning algorithms have several known issues:

(1) When the learning rate η is too small, the learning algorithm converges very slowly. However, when η is too large, the algorithm becomes unstable and diverges.

(2) Another peculiarity of the error surface that affects the performance of the BP learning algorithm is the presence of local minima [6]. It is undesirable for the learning algorithm to stop at a local minimum that lies far above the global minimum.

(3) A neural network may be over-trained by BP algorithms and end up with worse generalization performance. Thus, validation and suitable stopping methods are required in the cost function minimization procedure.

(4) Gradient-based learning is very time-consuming in most applications.
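The learning-rate sensitivity in issue (1) can be illustrated on a toy problem. The sketch below is not taken from the paper: the objective f(w) = w², the function name `gradient_descent`, and the three learning rates are all assumptions chosen only to make the effect visible.

```python
def gradient_descent(eta, steps=50, w0=1.0):
    """Minimise the toy objective f(w) = w**2 with a fixed learning rate eta."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w       # derivative of w**2
        w = w - eta * grad   # each step multiplies w by (1 - 2 * eta)
    return w

# Too small: w shrinks by only 0.2% per step, so it is still close to w0.
slow = gradient_descent(eta=0.001)

# Moderate: w shrinks by a factor of about 5 per step and reaches ~0 quickly.
good = gradient_descent(eta=0.4)

# Too large: |1 - 2 * eta| > 1, so |w| grows at every step (divergence).
diverged = gradient_descent(eta=1.1)

print(abs(slow), abs(good), abs(diverged))
```

On this quadratic the iterate is scaled by (1 - 2η) at each step, so convergence requires 0 < η < 1; real error surfaces are not this simple, but the same trade-off applies.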

Due to the simplicity of their implementations, LS-SVM and PSVM have been widely used in binary classification applications. This paper shows that both LS-SVM and PSVM can be simplified further, and that a unified learning framework covering LS-SVM, PSVM, and other regularization algorithms, referred to as the extreme learning machine (ELM), can be built.

Previous Work

As the training of SVMs involves a quadratic programming problem, the computational complexity of SVM training algorithms is usually high: it is at least quadratic with respect to the number of training examples.

Least square SVM (LS-SVM) [2] and proximal SVM (PSVM) [3] provide fast implementations of the traditional SVM. Both LS-SVM and PSVM use equality constraints in the optimization problem instead of the inequality constraints of the traditional SVM, which avoids quadratic programming and yields a direct least-squares solution.
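To make the difference between inequality and equality constraints concrete, the standard textbook formulations can be written side by side. The notation here (weights w, bias b, slacks ξ_i, feature map φ, kernel K, regularization constant C, multipliers α) is an assumption for illustration and may differ from the symbols used in the paper; only the LS-SVM case is shown.

```latex
% Traditional SVM: inequality constraints, solved by quadratic programming
\min_{w,b,\xi}\ \tfrac{1}{2}\|w\|^2 + C\sum_{i=1}^{N}\xi_i
\quad\text{s.t.}\quad y_i\bigl(w^\top\phi(x_i)+b\bigr) \ge 1-\xi_i,\quad \xi_i \ge 0

% LS-SVM: equality constraints with squared slack
\min_{w,b,\xi}\ \tfrac{1}{2}\|w\|^2 + \tfrac{C}{2}\sum_{i=1}^{N}\xi_i^2
\quad\text{s.t.}\quad y_i\bigl(w^\top\phi(x_i)+b\bigr) = 1-\xi_i

% Eliminating w and \xi from the LS-SVM optimality conditions
% (w = \sum_i \alpha_i y_i \phi(x_i),\ \sum_i \alpha_i y_i = 0,\ \alpha_i = C\xi_i)
% leaves a single linear system in (b, \alpha):
\begin{bmatrix} 0 & y^\top \\ y & \Omega + I/C \end{bmatrix}
\begin{bmatrix} b \\ \alpha \end{bmatrix}
=
\begin{bmatrix} 0 \\ \mathbf{1} \end{bmatrix},
\qquad \Omega_{ij} = y_i\,y_j\,K(x_i,x_j)
```

Because the constraints are equalities, no multiplier needs a sign restriction, and training reduces to solving one (N+1)×(N+1) linear system.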

SVM, LS-SVM, and PSVM were originally proposed for binary classification. Different methods have been proposed so that they can be applied to multiclass classification problems. One-against-all (OAA) and one-against-one (OAO) methods are mainly used in the implementation of SVM in multiclass classification applications [8].
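The two decompositions differ in how many binary classifiers they require. The following sketch only enumerates the binary subproblems and trains nothing; the helper names `oaa_tasks` and `oao_tasks` are hypothetical, and the 26 class labels are an assumed example of a letter-recognition task.

```python
from itertools import combinations
from string import ascii_uppercase

def oaa_tasks(classes):
    """One-against-all: one binary problem per class (that class vs. the rest)."""
    return [(c, [other for other in classes if other != c]) for c in classes]

def oao_tasks(classes):
    """One-against-one: one binary problem per unordered pair of classes."""
    return list(combinations(classes, 2))

classes = list(ascii_uppercase)   # 26 labels, 'A' to 'Z'
n_oaa = len(oaa_tasks(classes))   # m classifiers
n_oao = len(oao_tasks(classes))   # m * (m - 1) / 2 classifiers
print(n_oaa, n_oao)
```

For m classes, OAA needs m classifiers, each trained on all the data, while OAO needs m(m-1)/2 classifiers, each trained only on the samples of two classes.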

The extreme learning machine (ELM) was proposed for single-hidden-layer feedforward neural networks (SLFNs). It randomly chooses the input weights and analytically determines the output weights of the SLFN. In theory, this algorithm tends to provide good generalization performance at extremely fast learning speed. Experimental results on real-world benchmark function approximation and classification problems, including large and complex applications, show that the algorithm can produce the best generalization performance in some cases and can learn much faster than traditional learning algorithms for feedforward neural networks.
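The two steps described above (random input weights, then an analytic solve for the output weights) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `elm_train` and `elm_predict`, the Gaussian random weights, the sigmoid activation, the hidden-layer size, and the toy sine-regression data are all choices made here. The output weights are obtained with the Moore-Penrose pseudoinverse as the least-squares solver.

```python
import numpy as np

def elm_train(X, T, n_hidden, rng):
    """Fit a single-hidden-layer network the ELM way (illustrative sketch)."""
    # Step 1: draw input weights and biases at random; they are never trained.
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    # Step 2: compute the hidden-layer output matrix H (sigmoid activation).
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    # Step 3: solve H @ beta = T in the least-squares sense, in closed form.
    beta = np.linalg.pinv(H) @ T
    return W, b, beta

def elm_predict(X, W, b, beta):
    """Apply the fixed random hidden layer, then the learned output weights."""
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    return H @ beta

# Toy regression problem (assumed for illustration): learn sin(x) on [-3, 3].
rng = np.random.default_rng(0)
X = np.linspace(-3.0, 3.0, 200).reshape(-1, 1)
T = np.sin(X)

W, b, beta = elm_train(X, T, n_hidden=30, rng=rng)
mse = float(np.mean((elm_predict(X, W, b, beta) - T) ** 2))
print("training MSE:", mse)
```

No iterative gradient updates appear anywhere: the only training cost is one matrix pseudoinverse, which is where the speed advantage over BP comes from.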

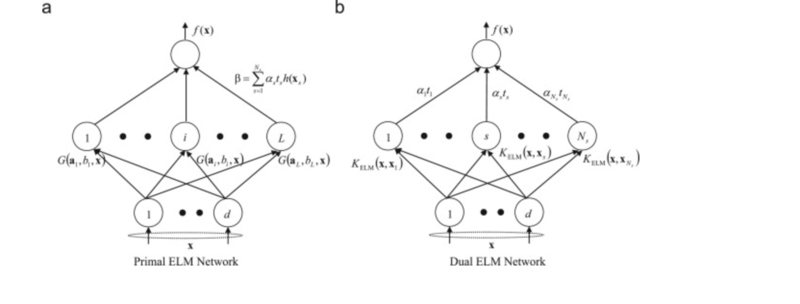

Model Architecture

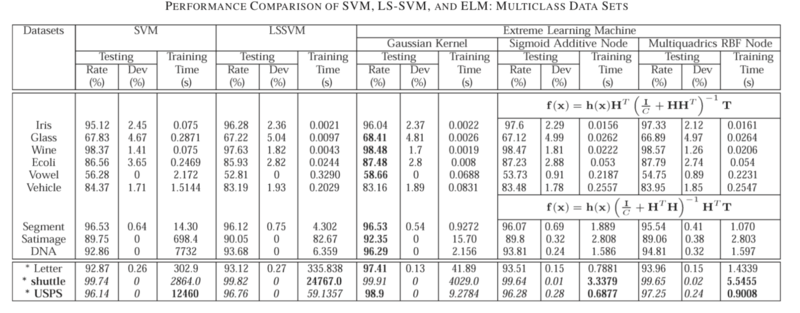

Performance Verification

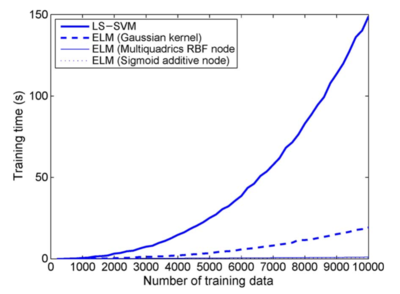

Fig. 1 shows the scalability of different classifiers on the letter data set. The training time spent by LS-SVM and ELM (Gaussian kernel) increases sharply as the number of training data increases. However, the training time spent by ELM with sigmoid additive nodes and multiquadric function nodes increases very slowly as the number of training data increases.

Conclusion

Critiques

References

- [1] G.-B. Huang, Q.-Y. Zhu, and C.-K. Siew, “Extreme learning machine: A new learning scheme of feedforward neural networks,” in Proc. IJCNN, Budapest, Hungary, Jul. 25–29, 2004, vol. 2, pp. 985–990.

- [2] G.-B. Huang, X. Ding, and H. Zhou, “Optimization method based extreme learning machine for classification,” Neurocomputing, vol. 74, no. 1–3, pp. 155–163, Dec. 2010.