MULTI-VIEW DATA GENERATION WITHOUT VIEW SUPERVISION

This page contains a summary of the paper "Multi-View Data Generation Without View Supervision" by Mickael Chen, Ludovic Denoyer and Thierry Artières. It was published as a poster at the International Conference on Learning Representations (ICLR) 2018.

Introduction

Motivation

High-dimensional generative models have seen a surge of interest of late with the introduction of variational auto-encoders (VAEs) and generative adversarial networks (GANs). This paper focuses on a particular problem: generating samples of a number of objects under various views. The data distribution is assumed to be driven by two independent latent factors: the content, which represents the intrinsic features of an object, and the view, which stands for the settings of a particular observation of that object (for example, the different angles under which the same object is seen). The paper proposes two models that exploit this disentanglement of the latent space: a generative model and a conditional variant of it.

Related Work

The problem of handling multi-view inputs has mainly been studied from the predictive point of view, where one wants, for example, to learn a model that can predict or classify over multiple views of the same object (Su et al. (2015); Qi et al. (2016)). These approaches generally fuse the different views (early or late) at a particular level of a deep architecture. More recent studies have focused on identifying factors of variation in multi-view datasets. The underlying idea is that a data sample can be thought of as a mix of content information (e.g. related to its class label, such as a given person in a face dataset) and side information, the view, which accounts for factors of variability (e.g. exposure, viewpoint, with or without glasses). Thus all samples of the same class share the same content but differ in their view. A number of approaches have been proposed to disentangle the content from the view, also referred to as the style in some papers (Mathieu et al. (2016); Denton & Birodkar (2017)). According to the paper, these earlier approaches share two limitations: (i) they usually consider discrete views characterized by a domain or a set of discrete (binary or categorical) attributes (e.g. face with or without glasses, hair color) and cannot easily scale to a large number of attributes or to continuous views; (ii) most models are trained with view supervision (e.g. the view attributes), which greatly helps learning but prevents their use on the many datasets where this information is not available.

Contributions

The authors claim the following contributions: (i) a new generative model able to generate data with varied content and high view diversity, using supervision on the content information only; (ii) an extension of this generative model to a conditional model that can generate new views of any input sample.

Paper Overview

Background

The paper builds on the popular generative adversarial network (GAN) framework proposed by Goodfellow et al. (2014).

GENERATIVE ADVERSARIAL NETWORK:

Generative adversarial networks (GANs) are deep neural network architectures composed of two networks pitted against each other (hence "adversarial"). GANs were introduced in 2014 in a paper by Ian Goodfellow and other researchers at the University of Montreal, including Yoshua Bengio. Referring to GANs, Facebook's AI research director Yann LeCun called adversarial training "the most interesting idea in the last 10 years in ML."

Let [math]\displaystyle{ X }[/math] denote an input space composed of multidimensional samples x (e.g. vectors, matrices or tensors). Given a latent space [math]\displaystyle{ R^n }[/math] and a prior distribution [math]\displaystyle{ p_z(z) }[/math] over this latent space, any generator function [math]\displaystyle{ G : R^n → X }[/math] defines a distribution [math]\displaystyle{ p_G }[/math] on [math]\displaystyle{ X }[/math], namely the distribution of samples G(z) with [math]\displaystyle{ z ∼ p_z }[/math]. In addition to G, a GAN defines a discriminator function D : X → [0, 1] that aims to distinguish real inputs sampled from the training set (distribution [math]\displaystyle{ p_x }[/math]) from fake inputs sampled from [math]\displaystyle{ p_G }[/math], while the generator is trained to fool the discriminator D. Usually both G and D are implemented as neural networks. The objective function is based on the following adversarial criterion:

[math]\displaystyle{ \min_{G} \max_{D} \; \mathbb{E}_{x \sim p_x}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))] }[/math]
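As a rough illustration of this criterion, the sketch below estimates the value E[log D(x)] + E[log(1 - D(G(z)))] by Monte Carlo sampling in a toy one-dimensional setting. The data distribution, prior, generator and both discriminators are hypothetical choices for illustration only, not the paper's models. In Goodfellow et al.'s analysis, once p_G matches the data distribution the optimal discriminator outputs 1/2 everywhere and the criterion equals -log 4; the "blind" discriminator below reproduces that value.

```python
import math
import random


def gan_value(D, G, sample_data, sample_z, n=10_000, seed=0):
    """Monte Carlo estimate of E_{x~p_x}[log D(x)] + E_{z~p_z}[log(1 - D(G(z)))]."""
    rng = random.Random(seed)
    real_term = sum(math.log(D(sample_data(rng))) for _ in range(n)) / n
    fake_term = sum(math.log(1.0 - D(G(sample_z(rng)))) for _ in range(n)) / n
    return real_term + fake_term


# Hypothetical 1-D toy setup (not from the paper).
def sample_data(rng):
    return rng.gauss(2.0, 1.0)  # "real" data: N(2, 1)


def sample_z(rng):
    return rng.gauss(0.0, 1.0)  # prior p_z: N(0, 1)


def G(z):
    return z - 2.0  # generator output: N(-2, 1), far from the data


def blind_D(x):
    return 0.5  # cannot tell real from fake


def sigmoid_D(x):
    return 1.0 / (1.0 + math.exp(-x))  # larger x is judged "more real"


v_blind = gan_value(blind_D, G, sample_data, sample_z)
v_sigmoid = gan_value(sigmoid_D, G, sample_data, sample_z)
print(v_blind, v_sigmoid)
```

Because D maximizes the criterion, the sigmoid discriminator scoring higher than the blind one is exactly what the inner max rewards; the outer min over G would then push the generated samples toward the data.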

Observational learning (Bandura & Walters, 1977), a term from psychology, suggests a more general formulation in which the expert communicates what needs to be done (as opposed to how it is to be done) by providing observations of the desired world states via video or sequential images. This is the setting the paper adopts. While it is a harder learning problem, it is potentially more useful, because the expert can now convey a large number of tasks to the agent easily and quickly.

This paper follows (Agrawal et al., 2016; Levine et al., 2016; Pinto & Gupta, 2016), in which an agent first explores the environment independently and then distills its observations into goal-directed skills. The word 'skill' denotes a function that predicts the sequence of actions that takes the agent from the current observation to the goal. This function is called a goal-conditioned skill policy (GSP), and it is learned by relabeling states that the agent has visited as goals and the actions it took as prediction targets. During inference, the GSP recreates the task step by step, given the goal observations from the demonstration.

A challenge in learning the GSP is that the distribution of trajectories from one state to another is multi-modal: there are many possible ways of traversing from one state to another. This issue is addressed by the main contribution of the paper, the forward-consistent loss, which essentially says that reaching the goal matters more than how it is reached. First, a forward model is learned that predicts the next observation from the current observation and an action. The difference between the forward model's prediction for the GSP-selected action and the ground-truth next state is then used as the training signal. As a result, the loss does not penalize actions that differ from the ground-truth action but lead to the same outcome.

As a simple example, imagine a robot that must grab an object some distance ahead, with an obstacle along the way. Suppose that during the demonstration the obstacle is avoided by going to the right before grabbing the object, while the agent during training goes left and then grabs the object. The forward-consistent loss would treat the robot's action as consistent with the demonstrator's and would not penalize it for going left instead of right.
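The same idea can be shown in a tiny discrete world. In the sketch below, which is a hypothetical toy and not the paper's setup, the agent sits on a circular track of four positions, and two different actions ("cw2" and "ccw2") reach the same next state. An action-matching loss penalizes the non-demonstrated action, while a forward-consistent loss computed through a (here exact) forward model does not.

```python
# Hypothetical toy world: a circular track with 4 positions; each action
# moves the agent a fixed number of positions around the track.
TRACK_LENGTH = 4
ACTION_OFFSETS = {"cw2": +2, "ccw2": -2, "cw1": +1}


def forward_model(x, a):
    """Exact dynamics of the toy world (stands in for a learned forward model)."""
    return (x + ACTION_OFFSETS[a]) % TRACK_LENGTH


def action_matching_loss(a_true, a_pred):
    """0-1 loss: any deviation from the demonstrated action is penalized."""
    return 0.0 if a_pred == a_true else 1.0


def forward_consistent_loss(x_t, x_next, a_pred):
    """Squared error between the state a_pred leads to and the observed next state.

    Plain squared difference of positions; wrap-around distance is ignored in this toy.
    """
    return float((forward_model(x_t, a_pred) - x_next) ** 2)


x_t, a_true = 0, "cw2"
x_next = forward_model(x_t, a_true)  # position 2
for a_pred in ("cw2", "ccw2", "cw1"):
    print(a_pred, action_matching_loss(a_true, a_pred),
          forward_consistent_loss(x_t, x_next, a_pred))
```

"ccw2" is penalized by action matching but not by forward consistency, whereas "cw1", which really ends up elsewhere, is penalized by both.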

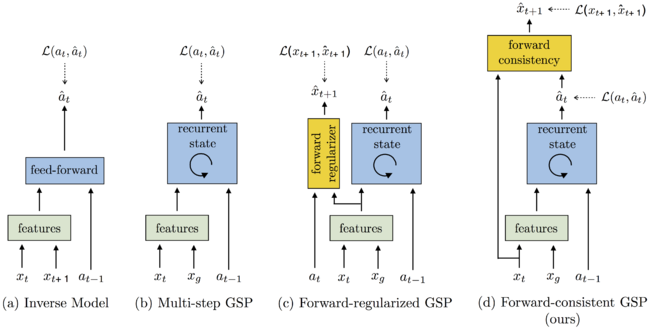

Of course, a loss like this raises the question of how many steps are needed to reach a given goal. To address this, the paper pairs the GSP with a goal recognizer that determines whether the goal has been reached. Figure 1 shows various GSP architectures, with diagram d) showing the forward-consistent loss proposed in this paper.

The zero-shot imitator is tested on a Baxter robot performing rope manipulation tasks, a TurtleBot performing office navigation, and navigation experiments in VizDoom. Positive results are shown for all three experiments, leading to the conclusion that the forward-consistent GSP can be used to imitate a variety of tasks without making environment- or task-specific assumptions.

Related Work

Key ideas related to this paper include imitation learning, visual demonstration, forward/inverse dynamics and consistency, and goal conditioning. The paper discusses each of these topics in more detail, with citations to related work. The approach is related to imitation learning, but the problem addressed differs in that there is less supervision and the model must generalize across tasks at inference time.

Learning to Imitate Without Expert Supervision

In this section (and the included subsections) the methods for learning the GSP, forward consistency loss and goal recognizer network are described.

Let [math]\displaystyle{ S : \{x_1, a_1, x_2, a_2, ..., x_T\} }[/math] be the sequence of observation-action pairs generated by the agent as it explores the environment using the policy [math]\displaystyle{ a = π_E(s) }[/math]. This exploration data is used to learn the GSP.

The learned GSP is [math]\displaystyle{ \overrightarrow{a}_τ = π(x_i, x_g; θ_π) }[/math]: [math]\displaystyle{ π }[/math] takes as input a pair of observations [math]\displaystyle{ (x_i, x_g) }[/math] and outputs the sequence of actions [math]\displaystyle{ (\overrightarrow{a}_τ : a_1, a_2, ..., a_K) }[/math] required to reach the goal observation [math]\displaystyle{ x_g }[/math] from the current observation [math]\displaystyle{ x_i }[/math]. The states [math]\displaystyle{ x_i }[/math] and [math]\displaystyle{ x_g }[/math] are sampled from [math]\displaystyle{ S }[/math], and the number of actions [math]\displaystyle{ K }[/math] is inferred by the model. [math]\displaystyle{ π }[/math] can be thought of as a general deep network with parameters [math]\displaystyle{ θ_π }[/math]. Note that [math]\displaystyle{ x_g }[/math] may be an intermediate sub-goal of the overall task, so sub-goals can be strung together to achieve an overall goal (e.g. go to position 1, then to position 2, then to the final destination).
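The GSP's training examples come from relabeling the exploration data: any later state the agent visited can serve as a goal, and the actions taken in between become the prediction targets. The sketch below builds such (x_i, x_g, action sequence) triples from one trajectory. The function name and the max_horizon cap on the gap between start and goal are assumptions for illustration, not details from the paper.

```python
def relabel_trajectory(observations, actions, max_horizon):
    """Turn one exploration trajectory into (start, goal, action-sequence) examples.

    observations: x_1..x_T (length T); actions: a_1..a_{T-1}, where a_t
    takes x_t to x_{t+1}. Goals are later visited states at most
    max_horizon steps ahead (a hypothetical cap for illustration).
    """
    examples = []
    for i in range(len(observations)):
        last_goal = min(i + max_horizon, len(observations) - 1)
        for g in range(i + 1, last_goal + 1):
            examples.append((observations[i], observations[g], tuple(actions[i:g])))
    return examples


for example in relabel_trajectory(["x1", "x2", "x3"], ["a1", "a2"], max_horizon=2):
    print(example)
```

Each triple pairs a start and goal observation with the variable-length action sequence that connected them, which is the kind of target a multi-step GSP is trained to predict.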

Let the sequence of images [math]\displaystyle{ D: \{x_1^d, x_2^d, ..., x_N^d\} }[/math] be the task to be imitated which is captured when the expert demonstrates the task. The sequence has at least one entry and can be as temporally dense as needed (i.e. the expert can show as many goals or sub-goals as needed to the agent). The agent then uses the learned GSP [math]\displaystyle{ π }[/math] to start from initial state [math]\displaystyle{ x_0 }[/math] and follow the actions predicted by [math]\displaystyle{ π(x_0, x_1^d; θ_π) }[/math] to imitate the observations in [math]\displaystyle{ D }[/math].

A separate goal recognizer network is needed to determine whether the current observation is close to the goal, because multiple actions might be required to get close to [math]\displaystyle{ x_1^d }[/math]. Let [math]\displaystyle{ x_0^\prime }[/math] be the observation after executing the predicted action. The goal recognizer evaluates whether [math]\displaystyle{ x_0^\prime }[/math] is sufficiently close to the goal; if not, the agent executes [math]\displaystyle{ a = π(x_0^\prime, x_1^d; θ_π) }[/math]. This process is repeated for each image in [math]\displaystyle{ D }[/math] until the final goal is reached. At no point does the expert need to convey to the agent which actions it performed.
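The inference procedure described above can be sketched as a simple loop. Here policy, goal_reached and env_step are hypothetical stand-ins for the learned GSP, the goal recognizer and the environment, and max_steps_per_goal is an assumed safety cap that the summary does not specify. The toy usage runs in a 1-D integer world.

```python
def imitate(x0, demo, policy, goal_reached, env_step, max_steps_per_goal=20):
    """Follow a demonstration given only as a sequence of goal observations.

    For each goal, keep querying the policy until the goal recognizer says
    the goal is reached, or give up after max_steps_per_goal actions.
    Returns the visited observations and whether every goal was reached.
    """
    x = x0
    visited = [x]
    for goal in demo:
        steps = 0
        while not goal_reached(x, goal):
            if steps == max_steps_per_goal:
                return visited, False  # gave up on this sub-goal
            x = env_step(x, policy(x, goal))
            visited.append(x)
            steps += 1
    return visited, True


# Hypothetical 1-D world: states are integers, actions are +1 or -1.
def policy(x, goal):
    return 1 if goal > x else -1


def goal_reached(x, goal):
    return x == goal


def env_step(x, a):
    return x + a


print(imitate(0, [3, 1], policy, goal_reached, env_step))
```

Note that the demonstration only lists goal states (3, then 1); the actions are chosen entirely by the policy, and the recognizer decides when to move on to the next goal.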

Learning the Goal-Conditioned Skill Policy (GSP)

It is easiest to first describe the one-step version of the GSP and then extend it to the multi-step version. A one-step trajectory has the form [math]\displaystyle{ (x_t, a_t, x_{t+1}) }[/math], the GSP predicts [math]\displaystyle{ \hat{a}_t = π(x_t, x_{t+1}; θ_π) }[/math], and it is trained with the standard cross-entropy loss with respect to the GSP parameters [math]\displaystyle{ θ_π }[/math]:

[math]\displaystyle{ \min_{θ_π} \mathcal{L}(a_t, \hat{a}_t), \quad \mathcal{L}(a_t, \hat{a}_t) = -\sum_{a} p(a \mid x_t, x_{t+1}) \log \hat{a}_t(a) }[/math]

Here [math]\displaystyle{ p }[/math] and [math]\displaystyle{ \hat{a}_t }[/math] are the ground-truth and predicted action distributions, respectively. Since [math]\displaystyle{ p }[/math] is not readily available, it is approximated empirically using the sampled actions [math]\displaystyle{ a_t }[/math]. In standard deep learning problems it is common to assume that [math]\displaystyle{ p }[/math] is a delta function at [math]\displaystyle{ a_t }[/math], but this assumption is violated when [math]\displaystyle{ p }[/math] is multi-modal and high-dimensional: the same inputs are presented with different targets, which leads to high variance in the gradients. This makes learning challenging and motivates the further developments presented in Sections 2.2, 2.3 and 2.4 of the paper.
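To see why delta-function targets cause high-variance gradients, consider the gradient of softmax cross-entropy with respect to the logits, which is softmax(logits) minus the one-hot target. The sketch below uses a hypothetical three-action example in which the same input appears twice with different demonstrated actions. The two per-sample gradients point in opposite directions, and only their average, softmax minus the empirical (multi-modal) distribution, reflects the true target.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_grad(logits, target):
    """Gradient of -log softmax(logits)[target] w.r.t. the logits: softmax - one_hot."""
    probs = softmax(logits)
    return [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]


# Same input (x_t, x_{t+1}) seen twice: once the demonstrator went "left"
# (action 0), once "right" (action 1). Action 2 is never demonstrated.
logits = [0.0, 0.0, 0.0]
g_left = cross_entropy_grad(logits, 0)
g_right = cross_entropy_grad(logits, 1)
mean = [(a + b) / 2 for a, b in zip(g_left, g_right)]
print(g_left)
print(g_right)
print(mean)  # equals softmax(logits) - [0.5, 0.5, 0.0]
```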

Forward Consistency Loss

To deal with multi-modality, the paper proposes the forward consistency loss: instead of penalizing the GSP whenever its predicted action differs from the ground-truth action, the GSP parameters are learned so as to minimize the distance between the observation [math]\displaystyle{ \hat{x}_{t+1} }[/math] (the predicted outcome of executing [math]\displaystyle{ \hat{a}_t = π(x_t, x_{t+1}; θ_π) }[/math]) and the ground-truth observation [math]\displaystyle{ x_{t+1} }[/math]. The predicted action is therefore not penalized if it leads to the same next state as the ground-truth action, which reduces the variance of the gradients and aids learning. This is what is called the forward consistency loss.

To operationalize the forward consistency loss, the forward dynamics [math]\displaystyle{ f }[/math] are learned from data as [math]\displaystyle{ \widetilde{x}_{t+1} = f(x_t, a_t; θ_f) }[/math], and the same model predicts the outcome of the GSP's action, [math]\displaystyle{ \hat{x}_{t+1} = f(x_t, \hat{a}_t; θ_f) }[/math]. Since [math]\displaystyle{ f }[/math] is learned rather than known analytically, it must be trained together with the GSP: the parameters [math]\displaystyle{ θ_π }[/math] and [math]\displaystyle{ θ_f }[/math] are inferred by minimizing [math]\displaystyle{ ||x_{t+1} - \widetilde{x}_{t+1}||_2^2 + λ||x_{t+1} - \hat{x}_{t+1}||_2^2 }[/math], where λ is a scalar hyper-parameter. The first term ensures that the learned forward model explains the ground-truth transitions, while the second term enforces consistency between the outcome of the predicted action and the observed next state. In summary, the loss function is:

[math]\displaystyle{ \min_{θ_π, θ_f} \; \|x_{t+1} - \widetilde{x}_{t+1}\|_2^2 + λ\|x_{t+1} - \hat{x}_{t+1}\|_2^2 \quad \text{s.t.} \quad \widetilde{x}_{t+1} = f(x_t, a_t; θ_f), \; \hat{x}_{t+1} = f(x_t, \hat{a}_t; θ_f), \; \hat{a}_t = π(x_t, x_{t+1}; θ_π) }[/math]
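The one-step objective described in this section can be evaluated for a single transition as below. This is a minimal sketch assuming plain Python vectors and a hypothetical forward model f(x, a) = x + a. In the paper both f and π are neural networks and the objective is minimized by gradient descent, which this sketch does not attempt; it only computes the loss value.

```python
def sq_dist(u, v):
    """Squared Euclidean distance ||u - v||_2^2 between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def forward_consistent_objective(x_t, a_t, x_next, a_hat, f, lam):
    """||x_{t+1} - x~_{t+1}||^2 + lam * ||x_{t+1} - x^_{t+1}||^2 for one transition."""
    x_tilde = f(x_t, a_t)  # forward model applied to the ground-truth action
    x_hat = f(x_t, a_hat)  # forward model applied to the GSP's predicted action
    return sq_dist(x_next, x_tilde) + lam * sq_dist(x_next, x_hat)


def f(x, a):
    """Hypothetical forward model: the action is a displacement added to the state."""
    return [xi + ai for xi, ai in zip(x, a)]


x_t, a_t, x_next = [0.0, 0.0], [1.0, 0.0], [1.0, 0.0]
print(forward_consistent_objective(x_t, a_t, x_next, [1.0, 0.0], f, lam=0.5))  # 0.0
print(forward_consistent_objective(x_t, a_t, x_next, [0.0, 1.0], f, lam=0.5))  # 1.0
```

With a perfect forward model the first term vanishes, and only actions whose predicted outcome misses x_{t+1} contribute, scaled by λ.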

Prior work has shown that learning forward dynamics in a feature space, rather than in the raw observation space, is more robust, so the paper extends the GSP to make predictions on feature representations [math]\displaystyle{ \phi(x_t), \phi(x_{t+1}) }[/math], and the forward consistency loss is computed in the feature space [math]\displaystyle{ \phi }[/math]. Learning [math]\displaystyle{ θ_π,θ_f }[/math] jointly from scratch can produce noisier gradient updates for [math]\displaystyle{ π }[/math]. This is addressed by first pre-training the forward model using only the first term, and the GSP separately, with gradient flow between them blocked; [math]\displaystyle{ θ_π,θ_f }[/math] are then fine-tuned jointly.

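The multi-step GSP [math]\displaystyle{ π_m }[/math] generalizes this objective to a whole trajectory. Here [math]\displaystyle{ \phi }[/math] maps observations to the feature space, in contrast to the single-step case, which worked in the observation space:

[math]\displaystyle{ \min_{θ_π, θ_f, θ_\phi} \sum_{t=i}^{T-1} \|\phi(x_{t+1}) - \phi(\widetilde{x}_{t+1})\|_2^2 + λ\|\phi(x_{t+1}) - \phi(\hat{x}_{t+1})\|_2^2 + \mathcal{L}(a_t, \hat{a}_t) }[/math]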

The forward consistency loss is computed at each time step t and jointly optimized with the action prediction loss over the whole trajectory. [math]\displaystyle{ \phi(.) }[/math] is represented by a CNN with parameters [math]\displaystyle{ θ_{\phi} }[/math]. The multi-step forward-consistent GSP [math]\displaystyle{ \pi_m }[/math] is implemented as a recurrent network whose inputs are the current state, the goal state, the action at the previous time step and the internal hidden representation [math]\displaystyle{ h_{t-1} }[/math], and whose output is the action to take.
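A per-trajectory version of the loss, summing feature-space forward consistency and cross-entropy action prediction over time steps, could be evaluated as in the sketch below. Several choices here are assumptions for illustration, not details from the summary: the predicted action is taken greedily (argmax) from the GSP's distribution, φ is applied to the forward model's predicted observation to mirror the notation (a feature-space forward model would instead predict φ(x_{t+1}) directly), and f and phi are hypothetical 1-D toys. A training implementation would need a differentiable path from the predicted action to the loss.

```python
import math


def sq_dist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def multistep_objective(xs, actions, action_probs, f, phi, lam):
    """Sum over t of feature-space forward consistency plus action cross-entropy.

    xs: observations x_i..x_T; actions: ground-truth action indices a_i..a_{T-1};
    action_probs: the GSP's predicted action distribution at each step.
    """
    total = 0.0
    for t, (a_t, probs) in enumerate(zip(actions, action_probs)):
        a_hat = max(range(len(probs)), key=probs.__getitem__)  # greedy predicted action
        target = phi(xs[t + 1])
        x_tilde = f(xs[t], a_t)  # forward model on the ground-truth action
        x_hat = f(xs[t], a_hat)  # forward model on the predicted action
        total += sq_dist(target, phi(x_tilde)) + lam * sq_dist(target, phi(x_hat))
        total -= math.log(probs[a_t])  # cross-entropy with a one-hot target
    return total


def f(x, a):
    """Hypothetical 1-D forward model: action 1 moves right, action 0 moves left."""
    return x + (1 if a == 1 else -1)


def phi(x):
    """Hypothetical feature map: the identity, as a 1-element feature vector."""
    return [float(x)]


xs, actions = [0, 1, 2], [1, 1]
print(multistep_objective(xs, actions, [[0.2, 0.8], [0.2, 0.8]], f, phi, lam=0.1))
print(multistep_objective(xs, actions, [[0.6, 0.4], [0.2, 0.8]], f, phi, lam=0.1))
```

In the second call the GSP's greedy first action goes the wrong way, so the forward-consistency term adds λ times the squared feature error on top of a larger cross-entropy.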

Goal Recognizer

The goal recognizer network was introduced to determine whether the current goal has been reached, which lets the agent take multiple steps between goals without being penalized. In the paper, goal recognition is framed as a binary classification problem: given an observation and the goal, is the observation close to the goal or not?

The goal recognizer was trained on data from the agent's random exploration. Pseudo-goal states were sampled from the visited states, and all observations within a few timesteps of a pseudo-goal were treated as positive examples (close to the goal). The goal classifier was trained with the standard cross-entropy loss.
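The data construction for the goal recognizer can be sketched as follows. This assumes a symmetric window of pos_window timesteps on either side of the pseudo-goal (the summary only says "within a few timesteps"), and the function names are hypothetical. The binary cross-entropy helper shows the per-example loss the classifier would minimize.

```python
import math
import random


def goal_recognizer_examples(trajectory, num_goals, pos_window, rng):
    """Build (observation, goal, label) triples from one exploration trajectory.

    A pseudo-goal is a randomly chosen visited state; observations within
    pos_window timesteps of it are positives (label 1), all others negatives (0).
    """
    examples = []
    for _ in range(num_goals):
        g = rng.randrange(len(trajectory))
        goal = trajectory[g]
        for t, obs in enumerate(trajectory):
            label = 1 if abs(t - g) <= pos_window else 0
            examples.append((obs, goal, label))
    return examples


def binary_cross_entropy(p, label):
    """Loss for a predicted probability p that the observation is close to the goal."""
    return -math.log(p) if label == 1 else -math.log(1.0 - p)


# Toy trajectory whose "observations" are just the timestep indices.
examples = goal_recognizer_examples(list(range(10)), num_goals=2, pos_window=2,
                                    rng=random.Random(0))
print(sum(label for _, _, label in examples), "positives out of", len(examples))
```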

The authors found that training a separate goal recognition network outperformed simply adding a 'stop' action to the action space of the policy network.

Ablations and Baselines

To summarize, the GSP formulation is composed of (a) a recurrent variable-length skill policy network, (b) explicit encoding of the previous action in the recurrence, (c) a goal recognizer, (d) the forward consistency loss function, and (e) learning forward dynamics in the feature space instead of the raw observation space.

To show the importance of each component, a systematic ablation (removal) of components is carried out in each experiment, measuring the impact on visual imitation. The following methods are evaluated in the experiments section:

- Classical methods: In visual navigation, the paper attempts to compare against the state-of-the-art ORB-SLAM2 and Open-SFM.

- Inverse model: Nair et al. (2017) leverage vanilla inverse dynamics to follow demonstration in rope manipulation setup.

- GSP-NoPrevAction-NoFwdConst is the paper's recurrent GSP without previous-action history and without the forward consistency loss.

- GSP-NoFwdConst refers to the recurrent GSP with previous-action history but without the forward consistency objective.

- GSP-FwdRegularizer refers to the model in which forward prediction is only used to regularize the features of the GSP and plays no role in the loss on the predicted actions.

- GSP refers to the complete method with all the components.

Experiments

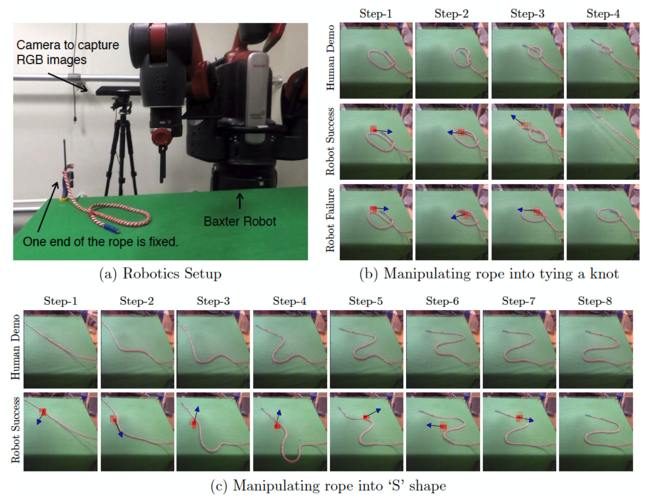

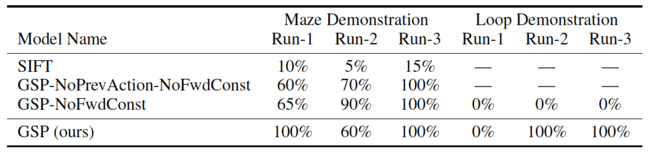

The model is evaluated by testing performance on a rope manipulation task using a Baxter Robot, navigation of a TurtleBot in cluttered office environments and simulated 3D navigation in VizDoom. A good skill policy will generalize to unseen environments and new goals while staying robust to irrelevant distractors and observations. For the rope manipulation task this is tested by making the robot tie a knot, a task it did not observe during training. For the navigation tasks, generalization is checked by getting the agents to traverse new buildings and floors.

Rope Manipulation

Rope manipulation is an interesting task because even humans learn complex rope manipulation, such as tying knots, by observing an expert perform it.

In this paper, rope manipulation data collected by Nair et al. (2017) is used, where a Baxter robot manipulated a rope kept on a table in front of it. During this exploration, the robot picked up the rope at a random point and displaced it randomly on the table. 60K interaction pairs were collected of the form [math]\displaystyle{ (x_t, a_t, x_{t+1}) }[/math]. These were used to train the GSP proposed in this paper.

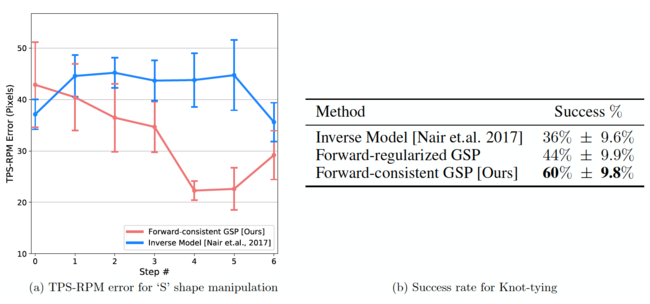

For this experiment, the Baxter robot is set up exactly as in Nair et al. (2017). The robot is tasked with manipulating the rope into an 'S' shape and with tying a knot, as shown in Figure 2. At test time, the robot was only provided with images of intermediate states of the rope, not the actions taken by the human trainer. The thin plate spline robust point matching technique (TPS-RPM) (Chui & Rangarajan, 2003) is used to measure performance on constructing the 'S' shape, as shown in Figure 3. Successful knot tying was assessed by visual verification (by a human).

The base architecture consisted of a pre-trained AlexNet whose features were fed into a skill policy network that predicts the grasp location, the direction of displacement and the magnitude of displacement. All models were optimized using Adam with a learning rate of 1e-4. For the first 40K iterations the AlexNet weights were frozen; afterwards they were fine-tuned jointly with the later layers. More details are provided in the appendix of the paper.

The approach is compared with that of Nair et al. (2017), who performed similar experiments using an inverse model. The results in Figure 3 show that, for the 'S' shape construction, zero-shot visual imitation achieves a success rate of 60%, versus 36% for the inverse-model baseline.

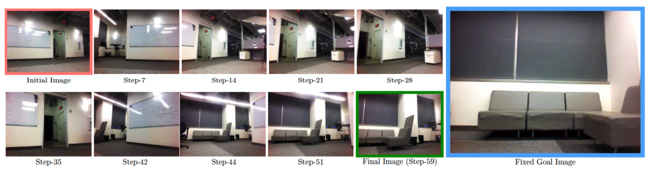

Navigation in Indoor Office Environments

In this experiment, the robot, a TurtleBot2, was shown a single image or multiple images leading it to the goal, and it moved to the goal autonomously. To learn the GSP, an automated self-supervised data collection method that requires no human supervision was devised. The robot explored two floors of an academic building and collected 230K interactions [math]\displaystyle{ (x_t, a_t, x_{t+1}) }[/math] (more detail is provided in the appendix of the paper). At test time, the robot was placed on an unseen floor of the building, with different textures and furniture layout, to perform visual imitation.

The collected data was used to train a recurrent forward-consistent GSP. The base architecture for the model was an ImageNet pre-trained ResNet-50 network. The loss weight of the forward model is 0.1 and the objective is minimized using Adam with a learning rate of 5e-4. More details on the implementation are given in the appendix of the paper.

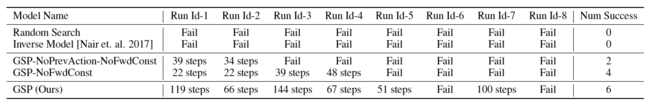

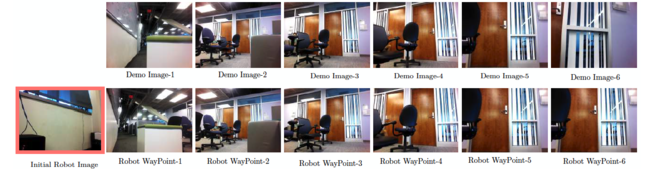

Figure 4 shows the robot's observations during testing. Table 1 shows the results of this experiment: GSP fares much better than all the baselines.

Figure 5 and Table 1 show the results for the robot performing a task with multiple waypoints, i.e. the robot was shown multiple sub-goals instead of just one final goal state. This was required when the end goal was far away from the robot, such as in another room. Notably, zero-shot visual imitation is robust to a changing environment in which not every frame matches the demonstrated frame; this is achieved by providing sparse landmarks.

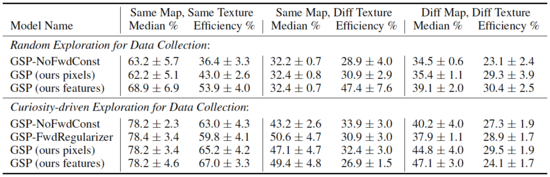

3D Navigation in VizDoom

To round off the experiments, the GSP was tested in the VizDoom simulation environment, a popular Doom-based reinforcement learning testbed that lets agents play Doom using only the screen buffer. As a 3D environment, it is traditionally considered harder than 2D domains such as Atari. The goal was to measure the robustness of each method with proper error bars, the role of the initial self-supervised data collection, and the quantitative difference between modeling the forward consistency loss in feature space and in raw visual space.

Data were collected using two methods: random exploration and curiosity-driven exploration (Pathak et al., 2017). The hypothesis is that better exploration data, rather than purely random exploration, leads to a better learned GSP. More implementation details are given in the appendix of the paper.

Table 3 shows the results of the VizDoom experiments with the key takeaway that the data collected via curiosity seems to improve the final imitation performance across all methods.

Discussion

This work presented a method for imitating expert demonstrations from visual observations alone. The key idea is to learn a GSP from data collected through self-supervision. One limitation is that the quality of the learned GSP is restricted by the exploration data. For instance, moving between rooms to reach a goal would not be possible without an intermediate sub-goal. Future research in zero-shot imitation could therefore aim to generalize the exploration, for example so that the agent explores across different rooms.

Another limitation is that the method requires first-person view demonstrations; extending it to third-person demonstrations could yield a more general framework. The current framework also assumes that the visual observations of the expert and the agent are similar: if the expert performs a demonstration in one setting, such as daylight, and the agent performs the task in the evening, results may worsen.

Moreover, the agent only imitates the expert demonstrations; it does not learn from them. Future work could use the demonstrations to enrich the agent's exploration.

This work used a sequence of images to provide a demonstration but the work, in general, does not make image-specific assumptions. Thus the work could be extended to using formal language to communicate goals, an idea left for future work.

References

[1] D. Pathak, P. Mahmoudieh, G. Luo, P. Agrawal, D. Chen, Y. Shentu, E. Shelhamer, J. Malik, A. A. Efros, and T. Darrell. Zero-shot Visual Imitation. In ICLR, 2018.

[2] Brenna D. Argall, Sonia Chernova, Manuela Veloso, and Brett Browning. A survey of robot learning from demonstration. Robotics and Autonomous Systems, 2009.

[3] Albert Bandura and Richard H. Walters. Social Learning Theory, volume 1. Prentice-Hall, Englewood Cliffs, NJ, 1977.

[4] Pulkit Agrawal, Ashvin Nair, Pieter Abbeel, Jitendra Malik, and Sergey Levine. Learning to poke by poking: Experiential learning of intuitive physics. NIPS, 2016.

[5] Sergey Levine, Peter Pastor, Alex Krizhevsky, and Deirdre Quillen. Learning hand-eye coordination for robotic grasping with large-scale data collection. In ISER, 2016.

[6] Lerrel Pinto and Abhinav Gupta. Supersizing self-supervision: Learning to grasp from 50k tries and 700 robot hours. ICRA, 2016.

[7] Ashvin Nair, Dian Chen, Pulkit Agrawal, Phillip Isola, Pieter Abbeel, Jitendra Malik, and Sergey Levine. Combining self-supervised learning and imitation for vision-based rope manipulation. ICRA, 2017.

[8] Deepak Pathak, Pulkit Agrawal, Alexei A. Efros, and Trevor Darrell. Curiosity-driven exploration by self-supervised prediction. In ICML, 2017.