F21-STAT 940-Proposal: Difference between revisions

(Updated proposal) |

m (updating image name) |

||

| Line 114: | Line 114: | ||

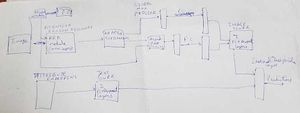

Description: Attention Region Discovery and Adaptive Thresholding module are taken from the idea of “Attentive Region Embedding Network for Zero-shot Learning” (https://openaccess.thecvf.com/content_CVPR_2019/papers/Xie_Attentive_Region_Embedding_Network_for_Zero-Shot_Learning_CVPR_2019_paper.pdf) whereas the idea for projecting image and text embeddings into a shared space was taken by “HUSE: Hierarchical Universal Semantic Embeddings” (https://arxiv.org/pdf/1911.05978.pdf). The motivation is that the attribute embedding can provide some complementary information to the model which can be learned to represent into a shared space and hence a better prediction to the zero-shot learning can be made. Also, the Squeeze and Excitation layer showed some impressive results when applied to the feature extraction part of the model, therefore we thought of re-weighting the channels first before applying the self-attention module so that the model can give even better attention to the image. The paper “Attentive Region Embedding Network for Zero-shot Learning” does not make use of class attributes of the classes present in the dataset, therefore we wanted to make use of these attributes and see if the model can make use of this new information as well. | Description: Attention Region Discovery and Adaptive Thresholding module are taken from the idea of “Attentive Region Embedding Network for Zero-shot Learning” (https://openaccess.thecvf.com/content_CVPR_2019/papers/Xie_Attentive_Region_Embedding_Network_for_Zero-Shot_Learning_CVPR_2019_paper.pdf) whereas the idea for projecting image and text embeddings into a shared space was taken by “HUSE: Hierarchical Universal Semantic Embeddings” (https://arxiv.org/pdf/1911.05978.pdf). The motivation is that the attribute embedding can provide some complementary information to the model which can be learned to represent into a shared space and hence a better prediction to the zero-shot learning can be made. 
Also, the Squeeze and Excitation layer showed some impressive results when applied to the feature extraction part of the model, therefore we thought of re-weighting the channels first before applying the self-attention module so that the model can give even better attention to the image. The paper “Attentive Region Embedding Network for Zero-shot Learning” does not make use of class attributes of the classes present in the dataset, therefore we wanted to make use of these attributes and see if the model can make use of this new information as well. | ||

[[File:a227jain-proposal. | [[File:a227jain-proposal.jpeg | 300px | Architecture diagram]] | ||

Revision as of 11:28, 11 December 2020

Use this format (Don’t remove Project 0)

Project # 0 Group members:

Last name, First name

Last name, First name

Last name, First name

Last name, First name

Title: Making a String Telephone

Description: We use paper cups to make a string phone and talk with friends while learning about sound waves with this science project. (Explain your project in one or two paragraphs).

Project # 1 Group members:

McWhannel, Pierre

Yan, Nicole

Hussein Salamah, Ahmed

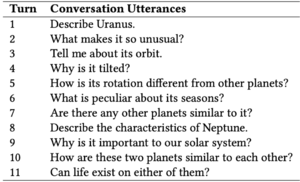

Title: Dense Retrieval for Conversational Information Seeking

Description: Conversational search is a recognized problem in Information Retrieval (IR) and has attracted much attention through conversational assistants such as Alexa, Siri, and Cortana. Meeting the user's information need is the ultimate goal of a conversational search system. In this setting, questions are asked sequentially, which imposes the multi-turn format of the Conversational Information Seeking (CIS) task. The TREC Conversational Assistance Track (CAsT) [3] is a multi-turn conversational search task that provides a large-scale reusable test collection for sequences of conversational queries. The response of the conversational model is not a list of relevant documents; it is limited to brief response passages of 1 to 3 sentences.

In [4], the authors focus on improving open-domain question answering by using dense representations for retrieval instead of traditional methods. They adopt a simple dual-encoder framework to build a learnable retriever over large collections. We want to adopt this dense representation for the conversational model in the CAsT task and compare its performance with that of other approaches in the literature. Performance will be measured using graded relevance on a five-point scale: Fails to meet, Slightly meets, Moderately meets, Highly meets, and Fully meets.
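As a rough illustration of the dual-encoder idea, the sketch below scores passages against a query by inner product and computes an in-batch negative log-likelihood loss of the kind used to train such retrievers. The vectors are assumed to come from separate query and passage encoders (for example BERT); here they are plain NumPy arrays, and the function names `rank_passages` and `in_batch_nll` are hypothetical.

```python
import numpy as np

def rank_passages(query_vec, passage_vecs, k=3):
    """Return the indices and scores of the top-k passages by inner product."""
    scores = passage_vecs @ query_vec
    top = np.argsort(-scores)[:k]
    return top, scores[top]

def in_batch_nll(query_vecs, passage_vecs):
    """Mean negative log-likelihood where passage i is the positive for
    query i and the other passages in the batch act as negatives."""
    logits = query_vecs @ passage_vecs.T
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))
```

In a real system the passage vectors would be precomputed and searched with an approximate nearest-neighbour index rather than a dense matrix product.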

We aim to further improve our system performance by integrating the following techniques:

• Paragraph-level pre-training tasks: ICT, BFS, and WLP [1]

• ANCE training: periodically using checkpoints to encode documents, from which the strong negatives close to the relevant document would be used as next training negatives [5]

In summary, this project is exploratory in nature: we will apply state-of-the-art Dense Passage Retrieval (DPR) techniques based on BERT [4, 6] to a question answering (QA) problem. Current first-stage retrieval approaches mainly rely on bag-of-words models. In this project, we hope to explore the feasibility of using state-of-the-art methods such as BERT instead. We will first compare how these perform on the TREC CAsT datasets [3] against the results retrieved using BM25. After these first points of comparison, we will explore one or more techniques designed to improve the performance of DPR [1, 5].
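For context, the BM25 baseline mentioned above is a bag-of-words scoring function. The sketch below is a minimal version operating on pre-tokenized text; the parameter defaults `k1=1.2` and `b=0.75` and the smoothed IDF variant are common choices assumed here, not values taken from the proposal, and `bm25_scores` is a hypothetical name.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score each tokenized document in `docs` against the tokenized `query`."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Number of documents containing each term.
    doc_freq = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            n_t = doc_freq[term]
            idf = math.log(1.0 + (n_docs - n_t + 0.5) / (n_t + 0.5))
            # Term frequency saturates via k1; b controls length normalization.
            norm = tf[term] + k1 * (1.0 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1.0) / norm
        scores.append(score)
    return scores
```

A document that never mentions a query term receives a score of zero, which is exactly the vocabulary-mismatch limitation that dense retrievers aim to address.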

References

[1] Wei-Cheng Chang et al. Pre-training Tasks for Embedding-based Large-scale Retrieval. 2020. arXiv: 2002.03932 [cs.LG].

[2] Zhuyun Dai and Jamie Callan. Context-Aware Sentence/Passage Term Importance Estimation For First Stage Retrieval. 2019. arXiv: 1910.10687 [cs.IR].

[3] Jeffrey Dalton, Chenyan Xiong, and Jamie Callan. TREC CAsT 2019: The Conversational Assistance Track Overview. 2020. arXiv: 2003.13624 [cs.IR].

[4] Vladimir Karpukhin et al. Dense Passage Retrieval for Open-Domain Question Answering. 2020. arXiv: 2004.04906 [cs.CL].

[5] Lee Xiong et al. Approximate Nearest Neighbor Negative Contrastive Learning for Dense Text Retrieval. 2020. arXiv: 2007.00808 [cs.IR].

[6] Jingtao Zhan et al. RepBERT: Contextualized Text Embeddings for First-Stage Retrieval. 2020. arXiv: 2006.15498 [cs.IR].

Project # 2 Group members:

Singh, Gursimran

Sharma, Govind

Chanana, Abhinav

Title: Quick Text Description using Headline Generation and Text To Image Conversion

Description: An automatic tool that generates a short description from long textual data is a useful mechanism for sharing information quickly. Most current approaches summarize the text using deep learning models ranging from Transformers to various RNNs. For this project, instead of building a standard text summarizer, we aim to provide two separate utilities for generating a quick description of the text. First, we plan to develop a model that produces a headline for the long textual data, and second, we intend to generate an image describing the text.

Headline Generation - Headline generation is a specific case of text summarization where the output is generally a few words that convey the overall point of the text. In most cases, text summarization is an unsupervised learning problem. For headline generation, however, the original headlines are available in our training dataset, which makes it a supervised learning task. We plan to experiment with different Recurrent Neural Networks, such as LSTMs and GRUs, with varied architectures. For model evaluation, we are considering BERTScore, which lets us compare the reference headline with the headline generated by the model. We also aim to explore Attention and Transformer networks for headline generation. We will make use of currently available techniques from the research literature, but will also try to develop our own architecture if the previous methods do not give reliable results on our dataset. Therefore, this task primarily fits under the category of applying deep learning to a particular domain, but could also include some components of new algorithm design.
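To make the evaluation idea concrete, the sketch below shows the greedy-matching computation at the core of BERTScore: each reference token is matched to its most similar generated token (recall), each generated token to its most similar reference token (precision), and the two are combined into an F1 score. It assumes the contextual token embeddings have already been produced by a model such as BERT; the function name `greedy_match_f1` is hypothetical, and refinements such as IDF weighting and baseline rescaling are omitted.

```python
import numpy as np

def greedy_match_f1(ref_emb, cand_emb):
    """ref_emb: (R, D) reference token embeddings.
    cand_emb: (C, D) candidate (generated) token embeddings.
    Returns (precision, recall, f1) from cosine-similarity greedy matching."""
    ref = ref_emb / np.linalg.norm(ref_emb, axis=1, keepdims=True)
    cand = cand_emb / np.linalg.norm(cand_emb, axis=1, keepdims=True)
    sim = ref @ cand.T                  # (R, C) cosine similarities
    recall = sim.max(axis=1).mean()     # best match for each reference token
    precision = sim.max(axis=0).mean()  # best match for each candidate token
    f1 = 2.0 * precision * recall / (precision + recall)
    return float(precision), float(recall), float(f1)
```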

Text to Image Conversion - Generation or synthesis of images from a short text description is another very interesting application domain in deep learning. One approach to image generation maps image pixels to specific features described by a discriminative feature representation of the text. Recurrent Neural Networks have been used successfully to learn such feature representations of text. This approach is difficult to generalize because recognizing discriminative features for texts in different domains is not an easy task and requires domain expertise. Different generative methods have been used, including Variational Recurrent Auto-Encoders and their extension, the Deep Recurrent Attention Writer (DRAW). We plan to experiment with Generative Adversarial Networks (GANs). GANs have been applied to domain-specific datasets, but we aim to apply different GAN variants to the Microsoft COCO dataset, which has been used with other architectures. The analysis will focus on how well GANs generalize compared to other alternatives on this dataset.

Scope - The above models will be trained independently on different datasets. Therefore, for a particular text, only one of the two functionalities will be available.

Project # 3 Group members:

Sikri, Gaurav

Bhatia, Jaskirat

Title: Malware Prediction

Description: The malware industry continues to be a well-organized, well-funded market dedicated to evading traditional security measures. Once a computer is infected by malware, criminals can hurt consumers and enterprises in many ways. With more than one billion enterprise and consumer customers, Microsoft takes this problem very seriously and is deeply invested in improving security.

In this project, we plan to predict how likely a machine is to be infected by malware given its current specifications (82 in total), such as company name, firewall status, and physical RAM.

Project # 4 Group members:

Maleki, Danial

Rasoolijaberi, Maral

Title: Binary Deep Neural Network for the domain of Pathology

Description: Binary neural networks, which greatly reduce storage and computation, are a promising technique for deploying deep models on resource-limited devices. However, binarization inevitably causes severe information loss and, even worse, its discontinuity makes the deep network difficult to optimize. We want to investigate the possibility of using these types of networks in the domain of histopathology, where gigapixel images make such efficient networks especially useful.
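The optimization difficulty mentioned above can be seen in a small sketch. The sign function used for binarization has zero gradient almost everywhere, so a common workaround (assumed here, not stated in the proposal) is the straight-through estimator, which passes the upstream gradient through unchanged wherever the real-valued weight lies within a clipping range. The names `binarize` and `ste_grad` and the clipping threshold are illustrative.

```python
import numpy as np

def binarize(w):
    """Forward pass: map real-valued weights to {-1, +1}."""
    return np.where(w >= 0, 1.0, -1.0)

def ste_grad(w, grad_out, clip=1.0):
    """Backward pass with a straight-through estimator: the gradient with
    respect to the binarized weights is passed to the real-valued weights,
    but zeroed where |w| exceeds the clipping range."""
    return grad_out * (np.abs(w) <= clip)
```

The real-valued weights are kept during training and updated with these surrogate gradients; only the binarized copy is used at inference time.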

Project # 5 Group members:

Jain, Abhinav

Bathla, Gautam

Title: Zero-shot learning with AREN and HUSE

Description: The Attention Region Discovery and Adaptive Thresholding modules are taken from “Attentive Region Embedding Network for Zero-shot Learning” (https://openaccess.thecvf.com/content_CVPR_2019/papers/Xie_Attentive_Region_Embedding_Network_for_Zero-Shot_Learning_CVPR_2019_paper.pdf), whereas the idea of projecting image and text embeddings into a shared space is taken from “HUSE: Hierarchical Universal Semantic Embeddings” (https://arxiv.org/pdf/1911.05978.pdf). The motivation is that the attribute embedding can provide complementary information to the model; if the model learns to represent it in a shared space, it can make better zero-shot predictions. Also, the Squeeze and Excitation layer showed impressive results when applied to the feature extraction part of the model, so we plan to re-weight the channels before applying the self-attention module so that the model can attend to the image even more effectively. The paper “Attentive Region Embedding Network for Zero-shot Learning” does not use the class attributes available in the dataset, so we want to include these attributes and see whether the model can exploit this new information as well.
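The channel re-weighting step can be sketched as follows. This is a minimal NumPy version of a Squeeze and Excitation block for a single feature map, assuming two bias-free fully connected layers with weights `w1` and `w2`; these names and the function `squeeze_excite` are hypothetical and do not come from the proposal.

```python
import numpy as np

def squeeze_excite(x, w1, w2):
    """x:  (C, H, W) feature map.
    w1: (C // r, C) weights of the reduction layer (r = reduction ratio).
    w2: (C, C // r) weights of the expansion layer.
    Returns the feature map with each channel scaled by a gate in (0, 1)."""
    z = x.mean(axis=(1, 2))                  # squeeze: global average pooling
    s = np.maximum(w1 @ z, 0.0)              # reduce, then ReLU
    gate = 1.0 / (1.0 + np.exp(-(w2 @ s)))   # expand, then sigmoid
    return x * gate[:, None, None]           # excite: per-channel rescaling
```

The output has the same shape as the input, so the block can sit between the feature extractor and the self-attention module without other changes.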

Project # 6 Group members:

You, Bowen

Avilez, Jose

Mahmoud, Mohammad

Wu, Mohan

Title: Deep Learning Models in Volatility Forecasting

Description: Price forecasting has become a very hot topic in the financial industry in recent years. We are however very interested in the volatility of such financial instruments. We propose a new deep learning architecture or model to predict volatility and apply our model to real life datasets of various financial products. We will analyze our results and compare them to more traditional methods.

Project # 7 Group members:

Chen, Meixi

Shen, Wenyu

Title: Through the Lens of Probability Theory: A Comparison Study of Bayesian Deep Learning Methods

Description: Deep neural networks have been known as black-box models, but they can be made less mysterious by adopting a Bayesian approach. From a Bayesian perspective, one can assign uncertainty to the weights instead of using single point estimates, which allows for better interpretability of deep learning models. However, Bayesian deep learning (BDL) methods are often intractable due to the increased number of parameters and often do not perform as well. In this project, we will study different BDL methods, such as Bayesian CNNs using variational inference and the Laplace approximation, with applications to image classification, and we will try to propose improvements where possible.

Project # 8 Group members:

Avilez, Jose

Title: A functional universal approximation theorem

Description: In the seminal paper "Approximation by superpositions of a sigmoidal function", Cybenko gave a simple proof using elementary functional analysis that a certain class of functions, called discriminatory functions, serve as valid activation functions for universal neural approximators. The objective of our project is three-fold:

1) Prove a converse of Cybenko's Universal Approximation Theorem by means of the Stone-Weierstrass theorem

2) Provide examples and non-examples of Cybenko's discriminatory functions

3) Construct a neural network for functional data (i.e. data arising in function spaces) and prove a universal approximation theorem for Lp spaces.
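For reference, the objects named in the objectives above can be written out as follows. This restates the setup of Cybenko's paper [1], with $I_n = [0,1]^n$ and $M(I_n)$ the space of finite signed regular Borel measures on $I_n$; the symbol names are chosen here for illustration.

```latex
% Finite sums realised by a one-hidden-layer network with activation \sigma
G(x) = \sum_{j=1}^{N} \alpha_j \, \sigma\!\left( w_j^{\top} x + \theta_j \right),
\qquad w_j \in \mathbb{R}^n,\ \alpha_j, \theta_j \in \mathbb{R}.

% \sigma is discriminatory if, for \mu \in M(I_n),
\int_{I_n} \sigma\!\left( w^{\top} x + \theta \right) d\mu(x) = 0
\ \ \text{for all } w \in \mathbb{R}^n,\ \theta \in \mathbb{R}
\quad \Longrightarrow \quad \mu = 0.

% Cybenko's theorem: if \sigma is continuous and discriminatory, then for every
% f \in C(I_n) and \varepsilon > 0 there is a G of the above form with
\sup_{x \in I_n} \left| G(x) - f(x) \right| < \varepsilon.
```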

References:

[1] Cybenko, G. (1989). Approximation by superpositions of a sigmoidal function. Mathematics of control, signals and systems, 2(4), 303-314.

[2] Folland, Gerald B. Real analysis: modern techniques and their applications. Vol. 40. John Wiley & Sons, 1999.

[3] Ramsay, J. O. (2004). Functional data analysis. Encyclopedia of Statistical Sciences, 4.

Project # 9 Group members:

Sikaroudi, Milad

Ashrafi Fashi, Parsa

Title: Magnification Generalization with Model-Agnostic Semantic Features in Histopathology Images

Many embedding methods learn the subspace for only one specific magnification. However, one of the main challenges in histopathology image embedding is handling the different magnification levels when indexing a Whole Slide Image (WSI) [1]. It is well known that significantly different patterns may exist at different magnification levels of a WSI [2]. It is useful to train an embedding space that discriminates histopathology patches regardless of their magnification, since this would lead to more compact WSI representations. This has been an arduous task because of the significant domain shifts between magnification levels with noticeably different patterns. The performance of conventional deep neural networks tends to degrade in the presence of a domain shift, such as when data is gathered from different centers. In this study, for the first time, we treat different magnification levels as a domain shift and test whether we can generalize to features shared across magnification levels by means of a domain generalization technique known as Model Agnostic Learning of Semantic Features. The hypothesis is that the retrieval statistics for the model trained using episodic domain generalization will not degrade as much as those of the baseline when there is a domain shift.

[1] Sellaro, Tiffany L., et al. "Relationship between magnification and resolution in digital pathology systems." Journal of pathology informatics 4 (2013).

[2] Zaveri, Manit, et al. "Recognizing Magnification Levels in Microscopic Snapshots." arXiv preprint arXiv:2005.03748 (2020).

Project # 10 Group members:

Torabian, Parsa

Ebrahimi Farsangi, Sina

Moayyedi, Arash

Title: Meta-Learning Regularizers for Few-Shot Classification Models

Our project aims at exploring the effects of self-supervised pre-training on few-shot classification. We draw inspiration from the paper “When Does Self-supervision Improve Few-shot Learning?”[1] where the authors analyse the effects of using the Jigsaw puzzle[2] and rotation tasks as regularizers for training Prototypical Networks[3] and Model-Agnostic Meta-Learning (MAML)[4] networks.

The paper introduced above analyzes the effects of regularizing meta-learning models with a self-supervised loss based on rotation and Jigsaw tasks. It is conventionally thought that one of the reasons MAML and other optimization-based meta-learning algorithms work well is that they initialize a network into a task-generalizable state [5]. In this project, we will look at the effects of self-supervised pre-training, as presumably it will initialize the network into a better state than random and potentially improve subsequent meta-learning. We will compare the effects of using self-supervised methods as pre-training, as regularization, and as a combination of both. The effects of other self-supervised learning tasks, such as discoloration and flipping, will be studied as well. We will also look at which combination of tasks, whether interlaced or applied sequentially, works better and how the tasks complement one another. We will evaluate our final results on the Omniglot and Mini-Imagenet datasets. These improvements will later be compared with their application to other few-shot learning methods, including first-order MAML and Matching Networks.
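As an illustration of the rotation task, the sketch below builds a self-supervised batch by rotating each image by 0, 90, 180, and 270 degrees and labelling it with the rotation index. A network trained to predict these labels provides the auxiliary loss used for pre-training or regularization. The function name `make_rotation_batch` is hypothetical, and square single-channel images are assumed so that all rotations share one shape.

```python
import numpy as np

def make_rotation_batch(images):
    """images: (N, H, W) array of square images (H == W).
    Returns (4N, H, W) rotated images and (4N,) labels in {0, 1, 2, 3},
    where label k means the image was rotated by k * 90 degrees."""
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(4)]
    labels = np.repeat(np.arange(4), len(images))
    return np.concatenate(rotated), labels
```

When used as a regularizer, the cross-entropy on these labels would be added to the few-shot classification loss; when used for pre-training, it would be minimized on its own first.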

References:

[1] https://arxiv.org/abs/1910.03560

[2] https://arxiv.org/abs/1603.09246

[3] https://arxiv.org/abs/1703.05175

[4] https://arxiv.org/abs/1703.03400

[5] https://arxiv.org/abs/2003.11539

Project # 11 Group Members:

Shikhar Sakhuja: s2sakhuj@uwaterloo.ca

Introduction:

Controller Area Network (CAN bus) is a vehicle bus standard that allows Electronic Control Units (ECUs) within an automobile to communicate with each other without the need for a host computer. Modern automobiles might have up to 70 ECUs for various subsystems such as the engine, transmission, and braking. The ECUs exchange messages on the CAN bus and enable many modern vehicle capabilities such as automatic start/stop, electric park brakes, lane detection, collision avoidance, and more. Each message exchanged on the bus carries a 29-bit identifier. These 29 bits consist of a combination of the message priority, the Parameter Group Number (PGN), and the source address of the message. A parameter group can be, for example, engine temperature, which could include coolant temperature, fuel temperature, etc. The PGN itself includes information such as reserved status, data page, and PDU format. Lastly, the source address maps the message to the ECU it originates from.
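The fields described above can be unpacked with a few bit operations. The sketch below assumes the SAE J1939 layout of the 29-bit identifier (3-bit priority, 1 reserved bit, 1 data page bit, 8-bit PDU format, 8-bit PDU specific field, 8-bit source address); the proposal does not name the standard explicitly, so this layout and the function name `decode_can_id` are assumptions.

```python
def decode_can_id(can_id):
    """Split a 29-bit identifier into its fields (J1939 layout assumed)."""
    priority = (can_id >> 26) & 0x7
    reserved = (can_id >> 25) & 0x1
    data_page = (can_id >> 24) & 0x1
    pdu_format = (can_id >> 16) & 0xFF
    pdu_specific = (can_id >> 8) & 0xFF
    source_address = can_id & 0xFF
    # For PDU format values below 240 the PDU specific byte is a destination
    # address and is not part of the PGN; otherwise it extends the PGN.
    pgn = (reserved << 17) | (data_page << 16) | (pdu_format << 8)
    if pdu_format >= 240:
        pgn |= pdu_specific
    return {
        "priority": priority,
        "pgn": pgn,
        "pdu_format": pdu_format,
        "pdu_specific": pdu_specific,
        "source_address": source_address,
    }
```

The `pgn` and `source_address` values returned here correspond to the prediction targets of the first goal.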

Goals:

This project aims to use messages exchanged on the CAN bus of a Challenger Truck, collected by the Embedded Systems Group at the University of Waterloo. The data is temporal, with a new message exchanged periodically. The goals of this project are twofold:

(1) Predicting the PGN and source address of message N exchanged on the bus, given messages 1 to N-1. We might also explore predicting attributes within the PGN.

(2) Predicting the delay between messages N-1 and N, given the delay between each pair of consecutive messages leading up to message N-1.

Potential Approach:

For the first goal, we intend to experiment with RNN models along with Attention modules since they have shown promising results in text generation/prediction.

The second goal is more of an investigative problem where we intend to use regression techniques powered by Neural Networks to predict delays between messages N-1 and N.

Project # 12 Group members:

Hemati, Sobhan

Meaney, Cameron

Title: Representation learning of gigapixel histopathology images using PointNet, a permutation-invariant neural network

Description:

In recent years, there has been significant growth in the amount of information available in digital pathology archives. This data is valuable because of its potential uses in research, education, and pathologic diagnosis. As a result, representation learning of histopathology whole slide images (WSIs) has attracted significant attention and become an active area of research. Unfortunately, scientific progress with these data has been difficult because of challenges inherent to the data itself. These challenges include highly complex textures of different tissue types, color variations caused by different stainings, and, most notably, the size of the images, which are often larger than 50,000x50,000 pixels. Additionally, these images are multi-resolution, meaning that each WSI may contain images from different zoom levels, primarily 5X, 10X, 20X, and 40X. With the advent of deep learning, there is optimism that these challenges can be overcome. The main challenge in this approach is that the sheer size of the images makes it infeasible (or impossible) to obtain a vector representation for a WSI, which is a necessary step in order to leverage deep learning algorithms. In practice, this is often bypassed by considering smaller ‘patches’ of the WSI, a set of which is meant to represent the full WSI. This approach leads to a set representation for a WSI. However, unlike traditional image or sequence models, deep networks that process and learn permutation-invariant representations from sets are still a developing area of research. Recent attempts include Multi-instance Learning Schemes, Deep Set, and Set Transformers. A particularly successful deep neural network for set representation is PointNet, which was developed for classification and segmentation of 3D objects and point clouds. In PointNet, each set is represented using a set of (x,y,z) coordinates, and the network is designed to learn a permutation-invariant global representation for each set and then use this representation for classification or segmentation.

In this project, we first attempt to extend the PointNet network to a convolutional PointNet network that uses a set of image patches rather than (x,y,z) coordinates to learn the universal permutation-invariant representation. Then, we attempt to improve the representational power of PointNet as a permutation-invariant neural network. For the first part, the main challenge is that while PointNet has been designed for processing sets of the same size, in WSIs the size of the image, and therefore the number of patches, is not fixed. For this reason, we will need to develop an idea that enables CNN-PointNet to process sets of different sizes. One possible solution is to use fake members to standardize the set size and then remove the effect of these fake members in backpropagation using a masking scheme. For the second part, the PointNet network can be improved in many ways. For example, the rotation matrix used is not a real rotation matrix, as orthogonality is only encouraged through a regularization term. However, using a projected gradient technique and the closed-form solution for the nearest orthogonal matrix to a given matrix (the Orthogonal Procrustes Problem), we can keep the exact orthogonality constraint and obtain a real rotation matrix. This exact orthogonality is geometrically important because, otherwise, the transformation will likely corrupt the neighborhood structure of the points in each set. Furthermore, PointNet uses a very simple symmetric function (max pooling) as a set approximator, whereas more powerful symmetric functions, such as statistical moments, a power-sum with a trainable parameter, and other set approximators, can be used. It would be interesting to see how more complicated symmetric functions can improve the representational power of PointNet to achieve more discriminative permutation-invariant representations for each set (in this case WSIs).
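Two of the ideas above admit short sketches. The first function computes the nearest orthogonal matrix to a given matrix in the Frobenius norm using the closed-form SVD solution of the Orthogonal Procrustes Problem, which could serve as the projection step in a projected gradient scheme. The second shows one possible masking scheme: padded (fake) set members are excluded from max pooling, so they can never be selected and therefore receive no gradient. The function names and the boolean-mask convention are assumptions made for illustration.

```python
import numpy as np

def nearest_orthogonal(a):
    """Closest orthogonal matrix to the square matrix `a` (Frobenius norm).
    With a = U S V^T, the minimizer is U V^T. The result may have
    determinant -1; a proper rotation needs an extra sign correction."""
    u, _, vt = np.linalg.svd(a)
    return u @ vt

def masked_max_pool(features, mask):
    """features: (N, D) per-patch feature vectors, including padded rows.
    mask: (N,) boolean array, True for real patches, False for padding.
    Returns the (D,) permutation-invariant set representation."""
    masked = np.where(mask[:, None], features, -np.inf)
    return masked.max(axis=0)
```

Because the maximum is taken over the set dimension, reordering the real patches leaves the output unchanged. At least one entry of `mask` must be True, otherwise the result is negative infinity in every dimension.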

Project # 13 Group Members:

Syed Saad Naseem ssnaseem@uwaterloo.ca

Title: Text classification of topics related to COVID-19 on social media using deep learning

The COVID-19 pandemic has become a public health emergency and a critical socioeconomic issue worldwide. It is changing the way we live and do business. Social media is a rich source of data about public opinion on many topics, including COVID-19. I plan to use Reddit to build a dataset of user posts and comments related to COVID-19. Since Reddit is divided into communities, the posts and comments are already clustered by community topic; for example, posts from the political subreddit will be about politics.

I plan to build a classifier that takes a given text and tells what the text is talking about, for example politics, studies, or relationships. The goals of this project are to:

• Scrape a dataset from Reddit from different communities

• Train a deep learning model (CNN or RNN model) to classify a given text into the possible categories

• Test the model on social media posts about COVID-19

Project # 14 Group members

Edwards, John

Title: Click-through Rate Prediction Using Historical User Data

Click-through Rate (CTR) prediction consists of forecasting a user's probability of clicking on a specified target. CTR is used largely by online advertising systems, which sell ad space on a cost-per-click pricing model, to assess the likelihood of a user clicking on a targeted ad.

User session logs provide firms with an assortment of individual-specific features, a large number of which are categorical. Additionally, advertisers possess multiple ad candidates, each with its own features. The challenge of CTR prediction is to design a model that captures the interacting effects of these features to produce high-quality forecasts and pair users with advertisements that have high potential for click conversion. Additionally, computational efficiency must be balanced with model complexity so that predictions can be made in an online setting throughout the progression of a user's session.

This project's primary objective is to create a new Deep Neural Network (DNN) architecture that produces high-quality CTR forecasts while also addressing the aforementioned challenges.

While many variants of DNNs for CTR prediction exist, they can differ greatly in application setting. Specifically, the vast majority of models evaluate each user-ad interaction independently. They fail to utilise the information contained in each user's historical ad impressions. Only a small subset of models [1,2,4] have tried to address this by adapting architectures to utilise historical information. This project's focus will be within this application setting, exploring new architectures that can better utilise the information contained within a user's historical behaviour.

This project's implementation will consist of the following action plan: Develop a new model architecture inspired by innovations of previous CTR network designs which lacked the ability to utilise a user's historical data [4,5]. Use the public benchmark Avito advertising dataset to empirically evaluate the new model's performance and compare it against previous state-of-the-art models for this dataset.

References:

[1] Ouyang, Wentao & Zhang, Xiuwu & Ren, Shukui & Li, Li & Liu, Zhaojie & Du, Yanlong. (2019). Click-Through Rate Prediction with the User Memory Network.

[2] Ouyang, Wentao & Zhang, Xiuwu & Li, Li & Zou, Heng & Xing, Xin & Liu, Zhaojie & Du, Yanlong. (2019). Deep Spatio-Temporal Neural Networks for Click-Through Rate Prediction. 2078-2086. 10.1145/3292500.3330655.

[3] Ouyang, Wentao & Zhang, Xiuwu & Ren, Shukui & Qi, Chao & Liu, Zhaojie & Du, Yanlong. (2019). Representation Learning-Assisted Click-Through Rate Prediction. 4561-4567. 10.24963/ijcai.2019/634.

[4] Li, Zeyu, Wei Cheng, Yang Chen, H. Chen and W. Wang. “Interpretable Click-Through Rate Prediction through Hierarchical Attention.” Proceedings of the 13th International Conference on Web Search and Data Mining (2020)

[5] Zhou, Guorui & Gai, Kun & Zhu, Xiaoqiang & Song, Chenru & Fan, Ying & Zhu, Han & Ma, Xiao & Yan, Yanghui & Jin, Junqi & Li, Han. (2018). Deep Interest Network for Click-Through Rate Prediction. 1059-1068. 10.1145/3219819.3219823.