User:X93ma: Difference between revisions

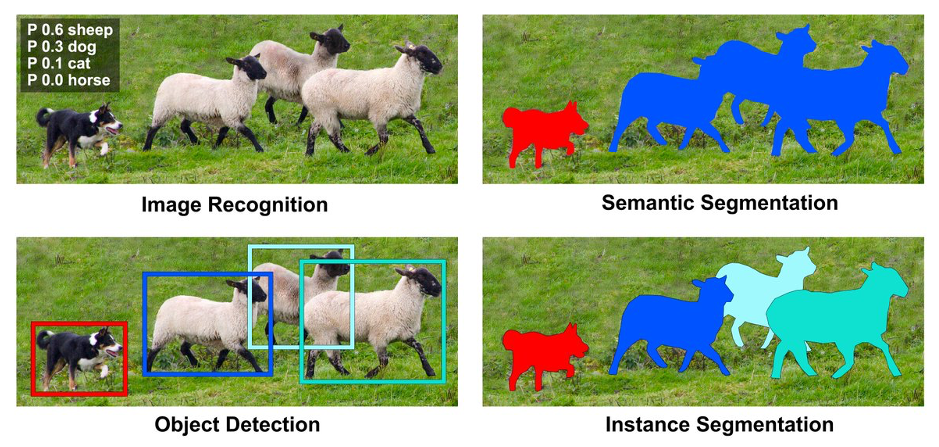

<div align="center">Figure 1: Visual Perception tasks</div>

Revision as of 14:15, 27 November 2020

Presented by

Qing Guo, Xueguang Ma, James Ni, Yuanxin Wang

Introduction

Mask R-CNN [1] is a deep neural network architecture that aims to solve instance segmentation problems in computer vision. It extends Faster R-CNN [2] by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition. Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at 5 fps. Moreover, Mask R-CNN is easy to generalize to other tasks, e.g., allowing us to estimate human poses in the same framework. Mask R-CNN achieved top results in all three tracks of the COCO suite of challenges [3], including instance segmentation, bounding-box object detection, and person keypoint detection.

Visual Perception tasks

- Image Classification: Predict a set of labels to characterize the contents of an input image

- Object Detection: Build on image classification but localize each object in an image

- Semantic Segmentation: Associate every pixel in an input image with a class label

- Instance Segmentation: Associate every pixel in an input image to a specific object

Mask R-CNN is a deep neural network architecture for Instance Segmentation.

Related Work

Region Proposal Network: A Region Proposal Network (RPN) takes an image (of any size) as input and outputs a set of rectangular object proposals, each with an objectness score.

ROI Pooling: ROI Pooling converts proposals of different sizes into feature maps of a uniform size, which makes them easier for the subsequent network to process. It maps each proposal to the corresponding position on the feature map, divides the mapped area into sections of the same size, and performs max pooling or average pooling on each section.
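The ROI Pooling steps described above can be sketched in plain Python. This is a minimal illustration, not the reference implementation: the function name `roi_max_pool`, the single-channel list-of-lists feature map, and the default stride of 16 are assumptions made for the example.

```python
import math

def roi_max_pool(feature_map, roi, out_size=2, stride=16):
    """Max-pool one RoI (x1, y1, x2, y2 in image pixels) to out_size x out_size.

    feature_map is a single-channel 2D list (hypothetical layout for this sketch).
    """
    h, w = len(feature_map), len(feature_map[0])
    # Quantization 1: snap image coordinates onto the integer feature-map grid.
    x1, y1, x2, y2 = (int(round(c / stride)) for c in roi)
    x2, y2 = max(x2, x1 + 1), max(y2, y1 + 1)  # keep at least one cell
    roi_w, roi_h = x2 - x1, y2 - y1
    pooled = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            # Quantization 2: bin boundaries are snapped to integers as well.
            ys = y1 + math.floor(i * roi_h / out_size)
            ye = y1 + math.ceil((i + 1) * roi_h / out_size)
            xs = x1 + math.floor(j * roi_w / out_size)
            xe = x1 + math.ceil((j + 1) * roi_w / out_size)
            cells = [feature_map[y][x]
                     for y in range(max(ys, 0), min(ye, h))
                     for x in range(max(xs, 0), min(xe, w))]
            row.append(max(cells) if cells else 0.0)
        pooled.append(row)
    return pooled

fmap = [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
pooled = roi_max_pool(fmap, (0, 0, 64, 64))
```

Both rounding steps are marked in the comments; they are the source of the misalignment that RoIAlign later removes.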

Faster R-CNN: Faster R-CNN consists of two stages. The first stage, called a Region Proposal Network, proposes candidate object bounding boxes. The second stage, which is in essence Fast R-CNN, extracts features using RoIPool from each candidate box and performs classification and bounding-box regression. The features used by both stages can be shared for faster inference.

ResNet-FPN: FPN uses a top-down architecture with lateral connections to build an in-network feature pyramid from a single-scale input. FPN is a general architecture that can be used in conjunction with various networks, such as VGG, ResNet, etc. Faster R-CNN with an FPN backbone extracts RoI features from different levels of the feature pyramid according to their scale, but otherwise the rest of the approach is similar to vanilla ResNet. Using a ResNet-FPN backbone for feature extraction with Mask R-CNN gives excellent gains in both accuracy and speed.
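The summary above does not state how an RoI is matched to a pyramid level. As an illustration, the sketch below uses the assignment rule given in the FPN paper, k = floor(k0 + log2(sqrt(w*h) / 224)) with k0 = 4, clamped to levels 2 through 5. The function name `fpn_level` and its parameter names are assumptions for this example.

```python
import math

def fpn_level(width, height, k0=4, canonical=224, k_min=2, k_max=5):
    """Pick the pyramid level for an RoI of the given size (in image pixels).

    Rule taken from the FPN paper, not from this summary: larger RoIs are
    assigned to coarser (higher) levels, smaller RoIs to finer levels.
    """
    k = math.floor(k0 + math.log2(math.sqrt(width * height) / canonical))
    return min(max(k, k_min), k_max)  # clamp to the levels that exist

levels = [fpn_level(s, s) for s in (28, 112, 224, 448)]
```

An RoI of the canonical 224 x 224 size lands on level 4, and each halving of the side length moves it one level finer until the clamp applies.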

Model Architecture

The structure of Mask R-CNN is quite similar to the structure of Faster R-CNN. Faster R-CNN has two stages. First, the RPN (Region Proposal Network) proposes candidate object bounding boxes. Then RoIPool extracts features from these boxes, and the extracted features are used for classification and bounding-box regression. Mask R-CNN shares the identical first stage. In the second stage, instead of only performing classification and bounding-box regression, it also outputs a binary mask for each RoI in parallel.

The important concept here is that in most recent systems classification depends on mask predictions, so the two must be performed in a fixed order. Mask R-CNN, on the other hand, predicts the mask in parallel with bounding-box classification and regression, following the spirit of Fast R-CNN, which simplified the multi-stage pipeline of the original R-CNN. For comparison, the complete R-CNN pipeline involves: 1. making region proposals; 2. extracting features from the region proposals; 3. classifying objects with an SVM; 4. bounding-box regression. Stages 3 and 4 are the ones that are merged into parallel branches. Training uses a multi-task loss on each sampled RoI, L = Lcls + Lbox + Lmask, where Lcls is the classification loss, Lbox is the bounding-box loss, and Lmask is the average binary cross-entropy loss. The mask branch outputs one m-by-m mask for each of the K classes, and for an RoI with ground-truth class k, Lmask is defined only on the k-th mask. In systems that apply a per-pixel softmax and a multinomial cross-entropy loss, masks across classes compete with each other; with a per-pixel sigmoid and a binary loss they no longer compete, which is key for good instance segmentation results.
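To make the mask term of the multi-task loss concrete, here is a minimal sketch of the average binary cross-entropy with a per-pixel sigmoid. It assumes the loss is evaluated only on the mask of the ground-truth class; the names `mask_loss` and `total_loss`, and the nested-list layout of the logits, are hypothetical.

```python
import math

def mask_loss(mask_logits, gt_mask, gt_class):
    """Average binary cross-entropy over the mask of class gt_class only.

    mask_logits: K masks, each an m x m list of raw scores (pre-sigmoid).
    gt_mask: m x m list of 0/1 labels. Masks of other classes are ignored,
    so they do not compete with the selected one.
    """
    total, count = 0.0, 0
    for logit_row, label_row in zip(mask_logits[gt_class], gt_mask):
        for z, y in zip(logit_row, label_row):
            # Numerically stable form of -[y*log(s(z)) + (1-y)*log(1-s(z))].
            total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
            count += 1
    return total / count

def total_loss(l_cls, l_box, l_mask):
    """Multi-task loss: classification + bounding-box + mask terms."""
    return l_cls + l_box + l_mask

logits = [[[0.0, 0.0], [0.0, 0.0]],      # class 0: undecided everywhere
          [[5.0, -5.0], [5.0, -5.0]]]    # class 1: confident, matches gt
gt = [[1, 0], [1, 0]]
loss_k0 = mask_loss(logits, gt, gt_class=0)
loss_k1 = mask_loss(logits, gt, gt_class=1)
```

Changing the logits of class 1 leaves `loss_k0` untouched, which is the decoupling between classes that the per-pixel sigmoid provides.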

Another important concept is RoIAlign, which replaces RoIPool in stage 2, where features are extracted from each RoI. The mask branch is a small FCN (Fully Convolutional Network) applied to each RoI; it preserves the spatial layout of the object, which requires the RoI features to stay faithfully aligned with the input pixels (pixel-to-pixel correspondence). RoIPool breaks this alignment because it quantizes the RoI coordinates to the grid of the feature map and then quantizes the bins again. These roundings shift the extracted features relative to the RoI, which has little effect on classification but a large negative effect on pixel-accurate masks. RoIAlign therefore performs no quantization on any coordinates involved in the RoI, its bins, or the sampling points. Instead, the feature values at regularly sampled locations in each bin are computed using bilinear interpolation, and the results are aggregated with max or average pooling.
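The idea of replacing quantization with bilinear interpolation can be sketched as follows. This is a simplified single-channel illustration under stated assumptions: feature values are treated as sitting at integer coordinates, each bin is sampled on a 2 x 2 grid and averaged, and the names `bilinear` and `roi_align` are made up for the example.

```python
import math

def bilinear(feature_map, y, x):
    """Interpolate the feature map at a real-valued location (y, x)."""
    h, w = len(feature_map), len(feature_map[0])
    y = min(max(y, 0.0), h - 1.0)  # clamp to the valid range
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feature_map[y0][x0] * (1 - dx) + feature_map[y0][x1] * dx
    bottom = feature_map[y1][x0] * (1 - dx) + feature_map[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def roi_align(feature_map, roi, out_size=2, stride=16, samples=2):
    """Average-pool one RoI without rounding any coordinate."""
    x1, y1, x2, y2 = (c / stride for c in roi)  # kept as floats
    bin_h, bin_w = (y2 - y1) / out_size, (x2 - x1) / out_size
    pooled = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            values = []
            for sy in range(samples):
                for sx in range(samples):
                    # Regularly spaced sampling points inside the bin.
                    y = y1 + (i + (sy + 0.5) / samples) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples) * bin_w
                    values.append(bilinear(feature_map, y, x))
            row.append(sum(values) / len(values))
        pooled.append(row)
    return pooled

ramp = [[0.0, 1.0, 2.0, 3.0] for _ in range(4)]  # value equals the x index
aligned = roi_align(ramp, (8, 8, 40, 40))
```

On the ramp feature map the RoI starts at x = 0.5 on the feature grid, and the pooled values reflect that half-cell offset exactly instead of being snapped to a cell boundary.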

The backbone networks used are ResNet and ResNeXt, with a depth of either 50 or 101 layers. ResNet-FPN (Feature Pyramid Network) is used for feature extraction.

There are some implementation details that should be mentioned. First, an RoI is considered positive if it has IoU with a ground-truth box of at least 0.5 and negative otherwise. This matters because the mask loss Lmask is defined only on positive RoIs. Second, training is image-centric: images are resized so that their shorter edge is 800 pixels. At inference, the proposal number is 1000 for FPN, and the box prediction branch is run on these proposals, followed by non-maximum suppression. The mask branch is then applied to the 100 highest-scoring detection boxes. The mask branch can predict K masks per RoI, but only the k-th mask is used, where k is the class predicted by the classification branch. The m-by-m floating-number mask output is then resized to the RoI size and binarized at a threshold of 0.5.
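Two of these details are easy to show in code: labelling an RoI by its IoU with the ground-truth boxes, and selecting and binarizing the predicted mask at inference. The sketch below is illustrative only. The function names are hypothetical, boxes are assumed to be (x1, y1, x2, y2) tuples, the resizing step is left out, and treating a value of exactly 0.5 as foreground is an assumption.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_positive(roi, gt_boxes, threshold=0.5):
    """An RoI is positive if it overlaps some ground-truth box enough."""
    return any(iou(roi, gt) >= threshold for gt in gt_boxes)

def select_and_binarize(mask_probs, predicted_class, threshold=0.5):
    """Keep only the mask of the predicted class and threshold it.

    mask_probs: K masks of per-pixel probabilities (after the sigmoid).
    Resizing to the RoI size is omitted in this sketch.
    """
    chosen = mask_probs[predicted_class]
    return [[1 if p >= threshold else 0 for p in row] for row in chosen]

gt_boxes = [(0, 0, 10, 10)]
pos = is_positive((0, 0, 10, 5), gt_boxes)     # IoU = 0.5
neg = is_positive((5, 5, 15, 15), gt_boxes)    # IoU = 25 / 175
binary = select_and_binarize([[[0.9, 0.1]], [[0.2, 0.7]]], predicted_class=1)
```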

Results

Instance Segmentation: On the COCO dataset, Mask R-CNN outperforms MNC and FCIS, the previous state-of-the-art models, in all categories.

Bounding Box Detection: Mask R-CNN outperforms the base variants of all previous state-of-the-art models, including the winner of the COCO 2016 Detection Challenge.

Ablation Experiments

(a) Backbone Architecture: Better backbones bring expected gains: deeper networks do better, FPN outperforms C4 features, and ResNeXt improves on ResNet.

(b) Multinomial vs. Independent Masks (ResNet-50-C4): Decoupling via per-class binary masks (sigmoid) gives large gains over multinomial masks (softmax).

(c) RoIAlign (ResNet-50-C4): Mask results with various RoI layers. The RoIAlign layer improves AP by ∼3 points and AP75 by ∼5 points. Using proper alignment is the only factor that contributes to the large gap between RoI layers.

(d) RoIAlign (ResNet-50-C5, stride 32): Mask-level and box-level AP using large-stride features. Misalignments are more severe than with stride-16 features, resulting in big accuracy gaps.

(e) Mask Branch (ResNet-50-FPN): Fully convolutional networks (FCN) vs. multi-layer perceptrons (MLP, fully-connected) for mask prediction. FCNs improve results as they take advantage of explicitly encoding spatial layout.

References

[1] Kaiming He, Georgia Gkioxari, Piotr Dollár, Ross Girshick. Mask R-CNN. arXiv:1703.06870, 2017.

[2] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv:1506.01497, 2015.

[3] Tsung-Yi Lin, Michael Maire, Serge Belongie, Lubomir Bourdev, Ross Girshick, James Hays, Pietro Perona, Deva Ramanan, C. Lawrence Zitnick, Piotr Dollár. Microsoft COCO: Common Objects in Context. arXiv:1405.0312, 2015.