Speech2Face: Learning the Face Behind a Voice

Revision as of 13:39, 24 November 2020

Presented by

Ian Cheung, Russell Parco, Scholar Sun, Jacky Yao, Daniel Zhang

Introduction

Previous Work

Motivation

Often, when we listen to a person speaking without seeing their face, on the phone or on the radio, we build a mental model of how the person looks. There is a strong connection between speech and appearance, part of which is a direct result of the mechanics of speech production: age, gender (which affects the pitch of our voice), the shape of the mouth, facial bone structure, and thin or full lips can all affect the sound we generate. In addition, other voice-appearance correlations stem from the way in which we talk: language, accent, speed, and pronunciation. Such properties of speech are often shared among nationalities and cultures, which can, in turn, translate to common physical features.

Our goal in this work is to study to what extent we can infer how a person looks from the way they talk. Specifically, from a short input audio segment of a person speaking, our method directly reconstructs an image of the person's face in a canonical form (i.e., frontal-facing, neutral expression). Obviously, there is no one-to-one matching between faces and voices. Thus, our goal is not to predict a recognizable image of the exact face, but rather to capture dominant facial traits of the person that are correlated with the input speech.

Model Architecture

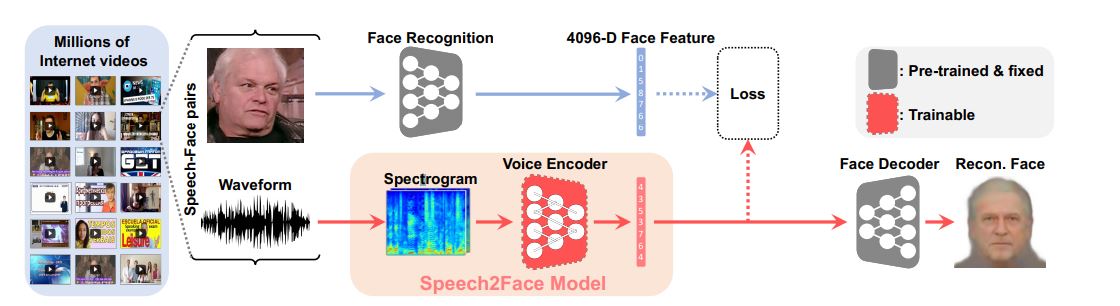

Speech2Face model and training pipeline

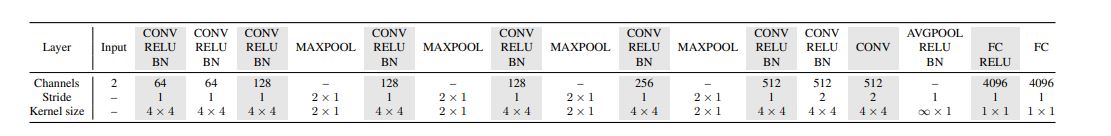

Voice Encoder Architecture