F21-STAT 940-Proposal

Revision as of 13:47, 9 October 2020

Use this format (Don’t remove Project 0)

Project # 0 Group members:

Last name, First name

Last name, First name

Last name, First name

Last name, First name

Title: Making a String Telephone

Description: We use paper cups to make a string phone and talk with friends while learning about sound waves with this science project. (Explain your project in one or two paragraphs).

Project # 1 Group members:

McWhannel, Pierre

Yan, Nicole

Hussein Salamah, Ahmed

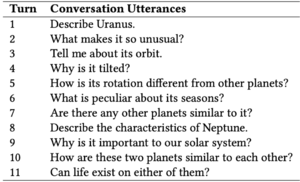

Title: Dense Retrieval for Conversational Information Seeking

Description: Conversational search is a recognized problem in Information Retrieval (IR) that has attracted much attention through conversational assistants such as Alexa, Siri, and Cortana. The ultimate goal of a conversational search system is to satisfy the user's information need. In this setting, questions are asked sequentially, which imposes a multi-turn format known as the Conversational Information Seeking (CIS) task. The TREC Conversational Assistance Track (CAsT) [3] is a multi-turn conversational search task that provides a large-scale reusable test collection of conversational query sequences. The response of the conversational model is not a list of relevant documents; instead, it is limited to brief passages of 1 to 3 sentences.

In [4], the authors focus on improving open-domain question answering by using dense representations for retrieval instead of the traditional methods. They adopt a simple dual-encoder framework to build a learnable retriever over large collections. We want to adopt this dense representation for the conversational model in the CAsT task and compare its performance with that of other approaches in the literature. Performance will be measured using graded relevance on a five-point scale: Fails to meet, Slightly meets, Moderately meets, Highly meets, and Fully meets.
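As a rough illustration of the dual-encoder idea, the sketch below scores passages by the inner product between a query embedding and passage embeddings. It is only a toy under stated assumptions: `token_vector`, `encode`, and `retrieve` are hypothetical names, and the encoder averages reproducible random token vectors where a real system would use trained BERT encoders.

```python
import zlib
import numpy as np

DIM = 64  # embedding size, chosen arbitrarily for this sketch

def token_vector(token):
    # Hypothetical stand-in for learned embeddings: a random vector
    # seeded by the token's CRC32 so that runs are reproducible.
    rng = np.random.default_rng(zlib.crc32(token.encode("utf-8")))
    return rng.standard_normal(DIM)

def encode(text):
    # Toy encoder: mean of token vectors (a trained BERT encoder in practice).
    tokens = text.lower().split()
    return np.mean([token_vector(t) for t in tokens], axis=0)

def retrieve(query, passages, k=2):
    # Dual-encoder retrieval: embed query and passages separately,
    # then rank passages by inner product with the query.
    q = encode(query)
    P = np.stack([encode(p) for p in passages])
    scores = P @ q
    top = np.argsort(-scores)[:k]
    return [(int(i), float(scores[i])) for i in top]

if __name__ == "__main__":
    passages = [
        "dense retrieval uses learned embeddings",
        "the stock market fell today",
        "conversational assistants answer questions",
    ]
    for idx, score in retrieve("dense retrieval with embeddings", passages):
        print(idx, round(score, 3), passages[idx])
```

Because passages are encoded independently of the query, their embeddings can be computed once and indexed ahead of time, which is what makes this design practical on large collections.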

We aim to further improve our system performance by integrating the following techniques:

• Paragraph-level pre-training tasks: ICT, BFS, and WLP [1]

• ANCE training: periodically using checkpoints to encode documents, from which the strong negatives close to the relevant document would be used as next training negatives [5]

In summary, this project is exploratory in nature: we will apply state-of-the-art Dense Passage Retrieval (DPR) techniques based on BERT [4, 6] to a question answering (QA) problem. Current first-stage retrieval approaches mainly rely on bag-of-words models. We will first compare how these dense methods perform on the TREC CAsT datasets [3] against results retrieved using BM25. After these first points of comparison, we will explore one or more techniques designed to improve the performance of DPR [1, 5].
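For reference, the BM25 baseline mentioned above can be sketched in a few lines. This is a minimal version under assumptions: whitespace tokenization, the common defaults k1 = 1.2 and b = 0.75, and the smoothed IDF variant log((N - n + 0.5) / (n + 0.5) + 1). The function name `bm25_scores` is hypothetical, and a real experiment would more likely rely on an established search toolkit.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Return one BM25 score per document for a whitespace-tokenized query."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n_docs  # average document length

    # Document frequency: number of documents containing each term.
    df = Counter()
    for d in tokenized:
        df.update(set(d))

    scores = []
    for d in tokenized:
        tf = Counter(d)
        s = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            # Term-frequency saturation with document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            s += idf * tf[term] * (k1 + 1) / norm
        scores.append(s)
    return scores

if __name__ == "__main__":
    docs = ["the cat sat on the mat", "dogs chase the ball", "stock prices fell"]
    print(bm25_scores("cat mat", docs))
```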

References

[1] Wei-Cheng Chang et al. Pre-training Tasks for Embedding-based Large-scale Retrieval. 2020. arXiv: 2002.03932 [cs.LG].

[2] Zhuyun Dai and Jamie Callan. Context-Aware Sentence/Passage Term Importance Estimation For First Stage Retrieval. 2019. arXiv: 1910.10687 [cs.IR].

[3] Jeffrey Dalton, Chenyan Xiong, and Jamie Callan. TREC CAsT 2019: The Conversational Assistance Track Overview. 2020. arXiv: 2003.13624 [cs.IR].

[4] Vladimir Karpukhin et al. Dense Passage Retrieval for Open-Domain Question Answering. 2020. arXiv: 2004.04906 [cs.CL].

[5] Lee Xiong et al. Approximate Nearest Neighbor Negative Contrastive Learning for Dense Text Retrieval. 2020. arXiv: 2007.00808 [cs.IR].

[6] Jingtao Zhan et al. RepBERT: Contextualized Text Embeddings for First-Stage Retrieval. 2020. arXiv: 2006.15498 [cs.IR].

Project # 2 Group members:

Singh, Gursimran

Sharma, Govind

Chanana, Abhinav

Title: Quick Text Description using Headline Generation and Text To Image Conversion

Description: An automatic tool that generates a short description from long textual data is a useful mechanism for sharing information quickly. Most current approaches summarize the text using various deep learning methods, from Transformers to different RNNs. For this project, instead of building a standard text summarizer, we aim to provide two separate utilities for generating a quick description of the text. First, we plan to develop a model that produces a headline for the long textual data, and second, we intend to generate an image describing the text.

Headline Generation - Headline generation is a specific case of text summarization where the output is generally a few words that convey the overall gist of the text. In most cases, text summarization is an unsupervised learning problem. For headline generation, however, the original headlines are available in our training dataset, which makes it a supervised learning task. We plan to experiment with different Recurrent Neural Networks, such as LSTMs and GRUs, with varied architectures. For model evaluation, we are considering BERTScore, with which we can compare the reference headline against the headline generated by the model. We also aim to explore attention models for headline generation. We will make use of currently available techniques from the research literature, but will also try to develop our own architecture if the previous methods do not give reliable results on our dataset. Therefore, this task primarily fits under the category of applying deep learning to a particular domain, but could also include some components of new algorithm design.

Text to Image Conversion - Generating or synthesizing images from a short text description is another very interesting application domain in deep learning. One approach to image generation is based on mapping image pixels to specific features as described by the discriminative feature representation of the text. Recurrent Neural Networks have been successfully used to learn such feature representations of text. This approach is difficult to generalize because recognizing discriminative features for texts in different domains is not an easy task and requires domain expertise. Different generative methods have been used, including Variational Recurrent Auto-Encoders and their extension, the Deep Recurrent Attention Writer (DRAW). We plan to experiment with Generative Adversarial Networks (GANs). GANs have been applied to domain-specific datasets, but we aim to apply different GAN variants to the Microsoft COCO dataset, which has been used with other architectures. The analysis will focus on how well GANs generalize compared to other alternatives on this dataset.

Scope - The above models will be trained independently on different datasets. Therefore, for a particular text, only one of the two functionalities will be available.

Project # 3 Group members:

Sikri, Gaurav

Bhatia, Jaskirat

Title: Not decided yet (Placeholder)

Description: Not decided yet :)

Project # 4 Group members:

Maleki, Danial

Rasoolijaberi, Maral

Title: Binary Deep Neural Network for the domain of Pathology

Description: Binary neural networks, which greatly reduce storage and computation, are a promising technique for deploying deep models on resource-limited devices. However, binarization inevitably causes severe information loss and, even worse, its discontinuity makes the deep network difficult to optimize. We want to investigate the possibility of using these networks in histopathology, where gigapixel images make such savings especially useful.
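To make the optimization difficulty concrete, the sketch below binarizes weights with a sign function and pairs it with a straight-through estimator (STE) for the backward pass. The STE is a common workaround assumed here for illustration; the proposal does not say which method will be used, and the names `binarize` and `ste_grad` are hypothetical.

```python
import numpy as np

def binarize(w):
    # Forward pass: map each real-valued weight to -1 or +1.
    # The sign function is piecewise constant, so its true gradient
    # is zero almost everywhere, which is the optimization problem.
    return np.where(w >= 0, 1.0, -1.0)

def ste_grad(w, grad_out, clip=1.0):
    # Backward pass (straight-through estimator, an assumption of this sketch):
    # pass the upstream gradient through unchanged where |w| <= clip,
    # and block it elsewhere.
    return grad_out * (np.abs(w) <= clip)

if __name__ == "__main__":
    w = np.array([-0.3, 0.0, 2.0])
    print(binarize(w))
    print(ste_grad(w, np.ones_like(w)))
```

Each binarized weight needs only one bit instead of a 32-bit float, which is the source of the storage saving described above.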

Project # 5 Group members:

Jain, Abhinav

Bathla, Gautam

Title: lyft-motion-prediction-autonomous-vehicles (Kaggle) (Tentative)

Description: Autonomous vehicles (AVs) are expected to dramatically redefine the future of transportation. However, there are still significant engineering challenges to be solved before one can fully realize the benefits of self-driving cars. One such challenge is building models that reliably predict the movement of traffic agents around the AV, such as cars, cyclists, and pedestrians.

Comments: We are more inclined towards a 3-D object detection project. We are in the process of finding the right problem statement for it and if we are not successful, we will continue with the above Kaggle competition.

Project # 6 Group members:

You, Bowen

Avilez, Jose

Mahmoud, Mohammad

Wu, Mohan

Title: Deep Learning Models in Volatility Forecasting

Description: Price forecasting has become a very hot topic in the financial industry in recent years. We, however, are interested in the volatility of financial instruments. We propose a new deep learning architecture or model to predict volatility and will apply it to real-life datasets of various financial products. We will analyze our results and compare them to more traditional methods.
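As one example of what a traditional point of comparison could look like, the sketch below computes rolling historical volatility from a price series. It is an assumption for illustration only: the proposal does not name its baselines, and the function name `rolling_volatility`, the window length, and the annualization factor of 252 trading days are all choices made for this sketch.

```python
import numpy as np

def rolling_volatility(prices, window=5, periods_per_year=252):
    """Annualized rolling standard deviation of log returns."""
    prices = np.asarray(prices, dtype=float)
    log_returns = np.diff(np.log(prices))
    # One estimate per full window of consecutive returns.
    vols = [
        log_returns[i - window:i].std(ddof=1)
        for i in range(window, len(log_returns) + 1)
    ]
    return np.array(vols) * np.sqrt(periods_per_year)

if __name__ == "__main__":
    prices = [100, 101, 99, 102, 103, 101, 104, 106, 105, 107]
    print(rolling_volatility(prices))
```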

Project # 7 Group members:

Chen, Meixi

Shen, Wenyu

Title: Through the Lens of Probability Theory: A Comparison Study of Bayesian Deep Learning Methods

Description: Deep neural networks are known as black-box models, but they can be made less mysterious by adopting a Bayesian approach. From a Bayesian perspective, one can assign uncertainty to the weights instead of using single point estimates, which improves the interpretability of deep learning models. However, Bayesian deep learning (BDL) methods are often intractable due to the increased number of parameters, and they often perform worse than their non-Bayesian counterparts. In this project, we will study different BDL methods, such as Bayesian CNNs using variational inference and the Laplace approximation, with applications to image classification, and we will try to propose improvements where possible.
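For orientation, the two approximations named above are usually written as follows. This is the standard textbook formulation, not necessarily the exact variant the project will use. The notation is assumed: $w$ denotes the network weights, $\mathcal{D}$ the data, $q_\phi$ the variational family, and $w_{\mathrm{MAP}}$ the posterior mode.

```latex
% Variational inference: minimizing the KL divergence to the posterior
% is equivalent to maximizing the evidence lower bound (ELBO).
\[
\mathrm{KL}\big(q_\phi(w)\,\|\,p(w \mid \mathcal{D})\big)
= \log p(\mathcal{D})
- \underbrace{\Big(\mathbb{E}_{q_\phi(w)}\big[\log p(\mathcal{D} \mid w)\big]
- \mathrm{KL}\big(q_\phi(w)\,\|\,p(w)\big)\Big)}_{\mathrm{ELBO}(\phi)}
\]

% Laplace approximation: a Gaussian centered at the posterior mode,
% with covariance given by the inverse Hessian of the negative log posterior.
\[
p(w \mid \mathcal{D}) \approx \mathcal{N}\big(w_{\mathrm{MAP}},\, H^{-1}\big),
\qquad
H = -\nabla_w^2 \log p(w \mid \mathcal{D})\Big|_{w = w_{\mathrm{MAP}}}
\]
```

Both replace the intractable posterior with a tractable distribution; the cost of storing or inverting $H$ for large networks is one reason these methods scale poorly with the number of parameters.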

Project # 8 Group members:

Avilez, Jose

Title: A functional universal approximation theorem

Description: In the seminal paper "Approximation by superpositions of a sigmoidal function", Cybenko gave a simple proof using elementary functional analysis that a certain class of functions, called discriminatory functions, serve as valid activation functions for universal neural approximators. The objective of our project is three-fold:

1) Prove a converse of Cybenko's Universal Approximation Theorem by means of the Stone-Weierstrass theorem

2) Provide examples and non-examples of Cybenko's discriminatory functions

3) Construct a neural network for functional data (i.e. data arising in function spaces) and prove a universal approximation theorem for Lp spaces.
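For context, the definition and theorem from [1] that these objectives build on can be stated as follows. The notation is assumed to follow Cybenko's paper: $I_n = [0,1]^n$, and $M(I_n)$ is the space of finite signed regular Borel measures on $I_n$.

```latex
\textbf{Definition.} A function $\sigma$ is \emph{discriminatory} if,
for a measure $\mu \in M(I_n)$,
\[
\int_{I_n} \sigma(y^{\mathsf T} x + \theta)\, d\mu(x) = 0
\quad \text{for all } y \in \mathbb{R}^n,\ \theta \in \mathbb{R}
\quad \Longrightarrow \quad \mu = 0 .
\]

\textbf{Theorem (Cybenko, 1989).} If $\sigma$ is continuous and
discriminatory, then finite sums of the form
\[
G(x) = \sum_{j=1}^{N} \alpha_j\, \sigma(y_j^{\mathsf T} x + \theta_j)
\]
are dense in $C(I_n)$: for every $f \in C(I_n)$ and $\varepsilon > 0$
there is such a $G$ with $|G(x) - f(x)| < \varepsilon$ for all $x \in I_n$.
```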

References:

[1] Cybenko, G. (1989). Approximation by superpositions of a sigmoidal function. Mathematics of control, signals and systems, 2(4), 303-314.

[2] Folland, Gerald B. Real analysis: modern techniques and their applications. Vol. 40. John Wiley & Sons, 1999.

[3] Ramsay, J. O. (2004). Functional data analysis. Encyclopedia of Statistical Sciences, 4.