stat946w18/IMPROVING GANS USING OPTIMAL TRANSPORT


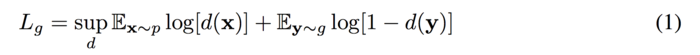


Revision as of 16:44, 12 March 2018

Introduction

Generative Adversarial Networks (GANs) are powerful generative models. A GAN consists of a generator and a discriminator (or critic). The generator is a neural network that learns to generate data as similar to the real data distribution as possible. The critic measures the distance between the generated data distribution and the real data distribution, and uses this distance to distinguish generated data from training data.

Optimal transport theory offers a related way to measure the distance between the generated data distribution and the training data distribution. The idea is that by optimally transporting data points between the training data and the generated data, the two distributions become approximately the same. An advantage of this method is that it produces a closed-form solution rather than an approximated one. However, the primal form of optimal transport can give biased gradients when mini-batches are used.
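To make the idea of transporting points between two batches concrete, here is a minimal sketch in Python. It rests on several assumptions that are not from the paper: both batches have the same size, every point carries equal weight, and the transport cost is plain Euclidean distance. Under those assumptions an optimal plan is a one-to-one matching, so a brute-force search over permutations finds it. The function name `minibatch_ot_cost` is hypothetical, and this is not the method used in OT-GAN itself.

```python
from itertools import permutations
from math import dist


def minibatch_ot_cost(xs, ys):
    """Exact optimal transport cost between two equal-size batches.

    Assumes uniform weights on every point, so an optimal transport
    plan is a one-to-one matching and we can search over permutations.
    Brute force: only feasible for very small batches.
    """
    if len(xs) != len(ys):
        raise ValueError("batches must have the same size")
    n = len(xs)
    best_total = min(
        sum(dist(xs[i], ys[perm[i]]) for i in range(n))
        for perm in permutations(range(n))
    )
    return best_total / n  # average Euclidean distance moved per point


real = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
fake = [(1.0, 0.0), (0.0, 1.0), (0.0, 0.0)]  # same points, different order
shifted = [(x + 2.0, y) for x, y in real]    # every point moved 2 units in x

print(minibatch_ot_cost(real, fake))     # identical point sets
print(minibatch_ot_cost(real, shifted))  # translated point set
```

Two batches containing the same points have zero transport cost regardless of ordering, while a batch translated by 2 units costs 2 per point on average. The search is factorial in the batch size, so it only serves as an illustration.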

This paper presents a GAN variant named OT-GAN, which incorporates a Mini-batch Energy Distance into its critic. This newly defined metric is unbiased when mini-batches are used.
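As a sketch of how this metric is built, the OT-GAN paper defines the Mini-batch Energy Distance as a combination of optimal transport costs between mini-batches. The notation below is an assumption of this summary: X and X' are independent mini-batches sampled from the real data distribution p, Y and Y' are independent mini-batches sampled from the generator distribution g, and W_c is an (entropy-regularized) optimal transport distance between two mini-batches under a cost function c.

```latex
D^2_{\mathrm{MED}}(p, g)
  = 2\,\mathbb{E}\left[ W_c(X, Y) \right]
  - \mathbb{E}\left[ W_c(X, X') \right]
  - \mathbb{E}\left[ W_c(Y, Y') \right]
```

The two subtracted terms compare mini-batches drawn from the same distribution, which is what distinguishes this quantity from a plain transport cost between one real and one generated mini-batch.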

GANs AND OPTIMAL TRANSPORT

The objective function of the GAN:
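For reference, the standard GAN minimax objective is commonly written as follows. The symbols are assumptions of this sketch: p is the real data distribution, g is the distribution of generated samples, and d(x) is the probability the discriminator assigns to x being real.

```latex
\min_{g} \max_{d} \;
  \mathbb{E}_{x \sim p}\left[ \log d(x) \right]
  + \mathbb{E}_{y \sim g}\left[ \log\left( 1 - d(y) \right) \right]
```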

The aim is to improve the stability of learning and to avoid problems such as mode collapse.