STAT946F17/ Dance Dance Convolution: Difference between revisions


| Line 1: | Line 1: | ||

=Introduction= | =Introduction= | ||

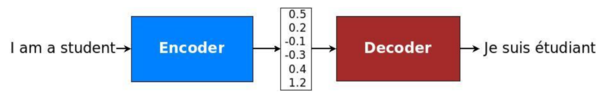

Neural Machine Translation (NMT), which is based on deep neural networks and provides an end- to-end solution to machine translation, uses an RNN-based encoder-decoder architecture to model the entire translation process. Specifically, an NMT system first reads the source sentence using an encoder to build a "thought" vector, a sequence of numbers that represents the sentence meaning; a decoder, then, processes the "meaning" vector to emit a translation. | Neural Machine Translation (NMT), which is based on deep neural networks and provides an end- to-end solution to machine translation, uses an RNN-based encoder-decoder architecture to model the entire translation process. Specifically, an NMT system first reads the source sentence using an encoder to build a "thought" vector, a sequence of numbers that represents the sentence meaning; a decoder, then, processes the "meaning" vector to emit a translation. [[File:VNFigure1.png|thumb|600px|center|Figure 1: Encoder-decoder architecture – example of a general approach for NMT.]] | ||

[[File:VNFigure1.png|thumb|600px|center|Figure 1: Encoder-decoder architecture – example of a general approach for NMT.]] | (Figure 1)<sup>[[#References|[1]]]</sup> | ||

=References= | =References= | ||

1. https://github.com/tensorflow/nmt | 1. https://github.com/tensorflow/nmt | ||

Revision as of 13:22, 24 November 2017

Introduction

Neural Machine Translation (NMT) is based on deep neural networks and provides an end-to-end solution to machine translation. It uses an RNN-based encoder-decoder architecture to model the entire translation process. Specifically, an NMT system first reads the source sentence with an encoder to build a "thought" vector, a sequence of numbers that represents the sentence meaning; a decoder then processes this "thought" vector to emit a translation.

(Figure 1)[1]
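The encoder-decoder flow described above can be illustrated with a minimal sketch. It is not the implementation from [1]: it assumes a plain tanh RNN cell, one-hot token inputs, greedy decoding, and random untrained weights, so the emitted token ids carry no meaning. All names (`init_params`, `encode`, `decode`, the toy vocabulary size, and the `bos_id`/`eos_id` values) are hypothetical and chosen only for illustration.

```python
import math
import random

def make_matrix(rows, cols, rng):
    # Small random weights; a real system would learn these by training.
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def one_hot(index, size):
    return [1.0 if i == index else 0.0 for i in range(size)]

def rnn_step(w_in, w_rec, x, h):
    # Plain RNN update: h_new = tanh(W_in x + W_rec h)
    return [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]

def init_params(vocab_size, hidden_size, seed=0):
    rng = random.Random(seed)
    return {
        "enc_in": make_matrix(hidden_size, vocab_size, rng),
        "enc_rec": make_matrix(hidden_size, hidden_size, rng),
        "dec_in": make_matrix(hidden_size, vocab_size, rng),
        "dec_rec": make_matrix(hidden_size, hidden_size, rng),
        "dec_out": make_matrix(vocab_size, hidden_size, rng),
    }

def encode(source_ids, params, vocab_size, hidden_size):
    # Read the source sentence token by token; the final hidden state
    # plays the role of the "thought" vector.
    h = [0.0] * hidden_size
    for tok in source_ids:
        h = rnn_step(params["enc_in"], params["enc_rec"], one_hot(tok, vocab_size), h)
    return h

def decode(thought, params, vocab_size, bos_id, eos_id, max_len=10):
    # Start from the "thought" vector and greedily emit one token per step
    # until the end-of-sentence id appears or max_len is reached.
    h, tok, output = thought, bos_id, []
    for _ in range(max_len):
        h = rnn_step(params["dec_in"], params["dec_rec"], one_hot(tok, vocab_size), h)
        scores = matvec(params["dec_out"], h)
        tok = max(range(vocab_size), key=lambda i: scores[i])
        if tok == eos_id:
            break
        output.append(tok)
    return output

VOCAB_SIZE, HIDDEN_SIZE = 6, 4          # toy sizes (assumed)
params = init_params(VOCAB_SIZE, HIDDEN_SIZE)
thought = encode([2, 3, 4], params, VOCAB_SIZE, HIDDEN_SIZE)
translation = decode(thought, params, VOCAB_SIZE, bos_id=0, eos_id=1)
print("thought vector:", thought)
print("emitted token ids:", translation)
```

The point of the sketch is the data flow: the decoder sees the source sentence only through the fixed-size `thought` vector, which is the bottleneck of this basic architecture.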