FeUdal Networks for Hierarchical Reinforcement Learning: Difference between revisions

(Created page with "== Introduction == Even though deep reinforcement learning has been hugely successful in a variety of domains, it has not been able to succeed in environments which have spar...") |

No edit summary |

||

| Line 17: | Line 17: | ||

== Model == | == Model == | ||

[[File:feudal_network_model_diagram.png|frame]] | |||

Manager | A high-level explanation of the model is as follows: | ||

The Manager computes a latent state representation <math>s_t</math> and outputs a goal vector <math>g_t</math> . The Worker outputs actions based on the environment observation, its own state, and the Manager’s goal. A perceptual module computes intermediate representation, <math>z_t</math> of the environment observation <math>x_t</math>. The Manager’s goals <math>g_t</math> are trained using an approximate transition policy gradient. The Worker is then trained via intrinsic reward which stimulates it to output actions that will achieve the goals set by the Manager. | |||

<center> | <center> | ||

[[File: | [[File:model_definition.png|500px]] | ||

</center> | </center> | ||

Manager and Worker are recurrent networks (<math>{h^M}</math> and <math>{h^W}</math> being their internal states). <math>\phi</math> is a linear transform that maps a goal <math>g_t</math> into an embedding vector <math>w_t \in {R^k}</math> , which is then combined with matrix <math>U_t</math> (Worker's output) via a matrix-vector product to produce policy <math>\pi</math> – vector of probabilities over primitive actions. The projection <math>\phi</math> is linear, with no biases, and is learnt with gradients coming from the Worker’s actions.Since <math>\phi</math> has no biases it can never produce a constant non-zero vector – which is the only way the setup could ignore the Manager’s input. This makes sure that the goal output by the Manager always influences the final policy. | |||

===Learning=== | |||

Since FuN is fully differentiable, the authors could have trained it end-to-end using a policy gradient algorithm operating on the actions taken by the Worker such the outputs <math>g</math> of the Manager would be trained by gradients coming from the Worker. This, however would deprive Manager’s goals <math>g</math> of any semantic meaning, making them just internal latent variables of the model. So instead, Manager is independently trained to predict advantageous directions (transitions) in state space and to intrinsically reward the Worker to follow these directions. | |||

Update rule for manager: | |||

<Equation> | |||

The intrinsic reward that encourages the Worker to follow | |||

the goals is defined as: | |||

<Equation> | |||

Compared to FRL (ref Hinton) , which advocated concealing the reward from lower levels of the | |||

hierarchy, the Worker in FuN network is trained using an advantage actor critic (ref Mnih et al., 2016) to maximise a weighted sum <math>R_t + α R_t^I</math> , where <math>α</math> is a hyper-parameter that regulates the influence of the intrinsic reward. | |||

The Advantage function <Equation> is calculated using an internal critic, which estimates the value functions for both rewards. | |||

===Transition Policy Gradient=== | |||

The update rule for the Manager given above is a novel form of policy gradient with respect to a ''model'' of the Worker’s behaviour. The Worker can follow a complex trajectory it is not necessarily required to learn from these samples. If the trajectories can be predicted, by modelling the transitions, then the policy gradient of the predicted transition can be followed instead of the Worker's actual path. FuN assumes a particular form for the transition model: that the direction in state-space, <math>s_{t+c} − s_t</math>, follows a von Mises-Fisher distribution. | |||

==Architecture== | |||

The perceptual module <math>f^{percept}</math> is a convolutional network (CNN) followed by a fully connected layer. Each convolutional and fully-connected layer is followed by a rectifier non-linearity. <math>f_Mspace</math>, which is another fully connected layer followed by a rectifier non-linearity, is used to compute the state space, which the Manager uses to formulate goals. The Worker’s recurrent network <math>f^{Wrnn}</math> is a standard LSTM (ref Hochreiter & Schmidhuber, 1997). | |||

The Manager uses a novel architecture called a dilated LSTM (dLSTM), which operates at lower temporal resolution than the data stream. It is similar to dilated convolutional networks (ref Yu & Koltun, 2016) and clockwork RNN. For a dilation radius r,the network is composed of r separate groups of sub-states or ‘cores’. At time <math>t</math>, the network is governed by the following equations: <Equation>, where % denotes the modulo operation and allows us to indicate which group of cores is currently being updated. At each time step only the corresponding part of the state is updated and the output is pooled across the previous c outputs. This allows the r groups of cores inside the dLSTM to preserve the memories for long periods, yet the dLSTM as a whole is still able to process and learn from every input experience, and is also able to update its output at every step. | |||

==Experiments== | |||

The baseline the authors are using is a recurrent LSTM (ref Hochreiter & Schmidhuber, 1997) network on top of a representation learned by a CNN. The A3C method (ref Mnih et al.,2016) for all reinforcement learning experiments. The trajectory is cut and run backpropagation through time (BPTT) (ref Mozer, 1989) after K forward passes of a network or if a terminal signal is received. For each method, 100 experiments were run. A training epoch is defined as as one million observations. | |||

===Montezuma’s Revenge=== | |||

Montezuma’s revenge is a prime example of an environment with sparse rewards. FuN starts learning much earlier and achieves much higher scores. It takes > 300 epochs for LSTM to reach the score 400, which corresponds to solving the first room (take the key, open a door). FuN solves the first room in less than 200 epochs and immediately moves on to explore further, eventually visiting several other rooms and scoring up to 2600 points. | |||

===ATARI=== | |||

The experiment was run on a diverse set of ATARI games, some of which involve long term credit assignment and some which are more reactive. Enduro stands out as all the LSTM agents completely fail at it. Frostbite is a hard game (ref Vezhnevets et al., 2016; Lake et al., 2016) that requires both long-term credit assignment and good exploration. The best-performing frostbite agent is FuN with 0.95 Manager discount, which outperforms the rest by a factor of 7. The other resuls can be seen in the figure. | |||

===Comparing the option-critic architecture=== | |||

FuN network was run on the same games as Option-Critic (Asterix, Ms. Pacman, Seaquest and Zaxxon) and after 200 epochs it achieves a similar score on Seaquest, doubles it on Ms. Pacman, more than triples it on Zaxxon and gets more than 20x improvement on Asterix. | |||

===Memory in Labyrinth=== | |||

DeepMind Lab (Beattie et al., 2016) is a first-person 3D game platform extended from OpenArena. The games on which the experiments were run on include a Water maze, T-maze and Non-match (which is a visual memorisation task). FuN consitently outperforms the LSTM baseline – it learns faster and also reaches a higher final reward. Interestingly, the LSTM agent doesn’t appear to use its memory for water maze task at all, always circling the maze at the roughly the same radius. | |||

Revision as of 06:08, 3 November 2017

Introduction

Even though deep reinforcement learning has been hugely successful in a variety of domains, it has not been able to succeed in environments which have sparsely spaced reward signals and encounters the major challenge of long-term credit assignment, where the agent is not able to attribute a reward to an action taken several timesteps back.

This paper proposes a hierarchical reinforcement learning architecture (HRL), called FeUdal Networks (FuN), which has been inspired from Feudal Reinforcement Learning (FRL)(ref Hinton). It is a fully-differentiable neural network with two levels of hierarchy – a Manager module at the top level and a Worker module below. The Manager sets abstract goals, which are learned, at a lower temporal resolution in a latent state-space. The Worker operates at a higher temporal resolution and produces primitive actions at every tick of the environment, motivated to follow the goals received from Manager, by an intrinsic reward.

The key contributions of the authors in this paper are: (1) A consistent, end-to-end differentiable FRL inspired HRL. (2) A novel, approximate transition policy gradient update for training the Manager (3) The use of goals that are directional rather than absolute in nature. (4) Dilated LSTM – a novel RNN design for the Manager that allows gradients to flow through large hops in time.

The experiments conducted on several tasks which involve sparse rewards show that FuN significantly improves long-term credit assignment and memorization.

Related Work

Several hierarchical reinforcement learning models were proposed to solve this problem. The options framework (ref Sutton et al., 1999; Precup, 2000) considers the problem with a two-level hierarchy, with options being typically learned using sub-goals and ‘pseudo-rewards’ that are provided explicitly. Whereas, the option-critic architecture(Bacon et al., 2017) uses the policy gradient theorem for learning options in an end-to-end fashion. A problem with learning options end-to-end is that they tend to a trivial solution where: (i) only one option is active, which solves the whole task; (ii) a policy-over-options changes options at every step, micro-managing the behavior. The authors state that the option-criric architectire is the only other end-to-end trainable system with sub-policies.

Non-hierarchical deep RL (non-HRL) methods using auxiliary losses and rewards such as pseudo count for exploration. (ref Bellemare et al., 2016a) have significantly improved results by stimulating agents to explore new parts of the state space. The UNREAL agent (Jaderberget al., 2016) is another non-HRL method that showed a strong improvement using unsupervised auxiliary tasks.

Model

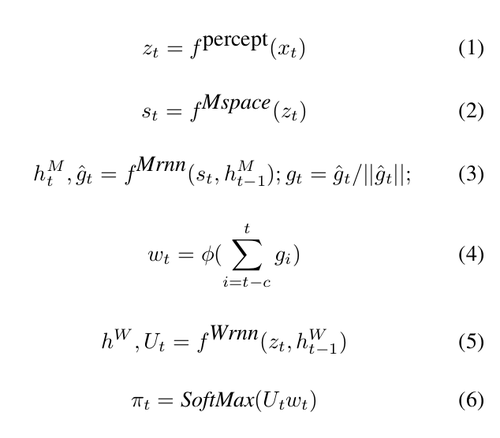

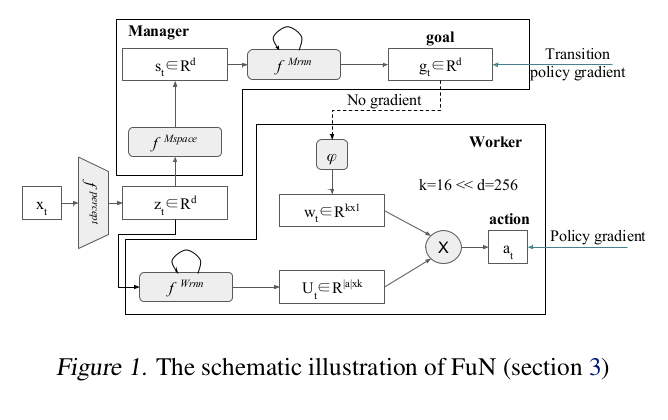

A high-level explanation of the model is as follows:

The Manager computes a latent state representation [math]\displaystyle{ s_t }[/math] and outputs a goal vector [math]\displaystyle{ g_t }[/math] . The Worker outputs actions based on the environment observation, its own state, and the Manager’s goal. A perceptual module computes intermediate representation, [math]\displaystyle{ z_t }[/math] of the environment observation [math]\displaystyle{ x_t }[/math]. The Manager’s goals [math]\displaystyle{ g_t }[/math] are trained using an approximate transition policy gradient. The Worker is then trained via intrinsic reward which stimulates it to output actions that will achieve the goals set by the Manager.

Manager and Worker are recurrent networks ([math]\displaystyle{ {h^M} }[/math] and [math]\displaystyle{ {h^W} }[/math] being their internal states). [math]\displaystyle{ \phi }[/math] is a linear transform that maps a goal [math]\displaystyle{ g_t }[/math] into an embedding vector [math]\displaystyle{ w_t \in {R^k} }[/math] , which is then combined with matrix [math]\displaystyle{ U_t }[/math] (Worker's output) via a matrix-vector product to produce policy [math]\displaystyle{ \pi }[/math] – vector of probabilities over primitive actions. The projection [math]\displaystyle{ \phi }[/math] is linear, with no biases, and is learnt with gradients coming from the Worker’s actions.Since [math]\displaystyle{ \phi }[/math] has no biases it can never produce a constant non-zero vector – which is the only way the setup could ignore the Manager’s input. This makes sure that the goal output by the Manager always influences the final policy.

Learning

Since FuN is fully differentiable, the authors could have trained it end-to-end using a policy gradient algorithm operating on the actions taken by the Worker such the outputs [math]\displaystyle{ g }[/math] of the Manager would be trained by gradients coming from the Worker. This, however would deprive Manager’s goals [math]\displaystyle{ g }[/math] of any semantic meaning, making them just internal latent variables of the model. So instead, Manager is independently trained to predict advantageous directions (transitions) in state space and to intrinsically reward the Worker to follow these directions.

Update rule for manager:

<Equation>

The intrinsic reward that encourages the Worker to follow the goals is defined as:

<Equation>

Compared to FRL (ref Hinton) , which advocated concealing the reward from lower levels of the hierarchy, the Worker in FuN network is trained using an advantage actor critic (ref Mnih et al., 2016) to maximise a weighted sum [math]\displaystyle{ R_t + α R_t^I }[/math] , where [math]\displaystyle{ α }[/math] is a hyper-parameter that regulates the influence of the intrinsic reward.

The Advantage function <Equation> is calculated using an internal critic, which estimates the value functions for both rewards.

Transition Policy Gradient

The update rule for the Manager given above is a novel form of policy gradient with respect to a model of the Worker’s behaviour. The Worker can follow a complex trajectory it is not necessarily required to learn from these samples. If the trajectories can be predicted, by modelling the transitions, then the policy gradient of the predicted transition can be followed instead of the Worker's actual path. FuN assumes a particular form for the transition model: that the direction in state-space, [math]\displaystyle{ s_{t+c} − s_t }[/math], follows a von Mises-Fisher distribution.

Architecture

The perceptual module [math]\displaystyle{ f^{percept} }[/math] is a convolutional network (CNN) followed by a fully connected layer. Each convolutional and fully-connected layer is followed by a rectifier non-linearity. [math]\displaystyle{ f_Mspace }[/math], which is another fully connected layer followed by a rectifier non-linearity, is used to compute the state space, which the Manager uses to formulate goals. The Worker’s recurrent network [math]\displaystyle{ f^{Wrnn} }[/math] is a standard LSTM (ref Hochreiter & Schmidhuber, 1997).

The Manager uses a novel architecture called a dilated LSTM (dLSTM), which operates at lower temporal resolution than the data stream. It is similar to dilated convolutional networks (ref Yu & Koltun, 2016) and clockwork RNN. For a dilation radius r,the network is composed of r separate groups of sub-states or ‘cores’. At time [math]\displaystyle{ t }[/math], the network is governed by the following equations: <Equation>, where % denotes the modulo operation and allows us to indicate which group of cores is currently being updated. At each time step only the corresponding part of the state is updated and the output is pooled across the previous c outputs. This allows the r groups of cores inside the dLSTM to preserve the memories for long periods, yet the dLSTM as a whole is still able to process and learn from every input experience, and is also able to update its output at every step.

Experiments

The baseline the authors are using is a recurrent LSTM (ref Hochreiter & Schmidhuber, 1997) network on top of a representation learned by a CNN. The A3C method (ref Mnih et al.,2016) for all reinforcement learning experiments. The trajectory is cut and run backpropagation through time (BPTT) (ref Mozer, 1989) after K forward passes of a network or if a terminal signal is received. For each method, 100 experiments were run. A training epoch is defined as as one million observations.

Montezuma’s Revenge

Montezuma’s revenge is a prime example of an environment with sparse rewards. FuN starts learning much earlier and achieves much higher scores. It takes > 300 epochs for LSTM to reach the score 400, which corresponds to solving the first room (take the key, open a door). FuN solves the first room in less than 200 epochs and immediately moves on to explore further, eventually visiting several other rooms and scoring up to 2600 points.

ATARI

The experiment was run on a diverse set of ATARI games, some of which involve long term credit assignment and some which are more reactive. Enduro stands out as all the LSTM agents completely fail at it. Frostbite is a hard game (ref Vezhnevets et al., 2016; Lake et al., 2016) that requires both long-term credit assignment and good exploration. The best-performing frostbite agent is FuN with 0.95 Manager discount, which outperforms the rest by a factor of 7. The other resuls can be seen in the figure.

Comparing the option-critic architecture

FuN network was run on the same games as Option-Critic (Asterix, Ms. Pacman, Seaquest and Zaxxon) and after 200 epochs it achieves a similar score on Seaquest, doubles it on Ms. Pacman, more than triples it on Zaxxon and gets more than 20x improvement on Asterix.

Memory in Labyrinth

DeepMind Lab (Beattie et al., 2016) is a first-person 3D game platform extended from OpenArena. The games on which the experiments were run on include a Water maze, T-maze and Non-match (which is a visual memorisation task). FuN consitently outperforms the LSTM baseline – it learns faster and also reaches a higher final reward. Interestingly, the LSTM agent doesn’t appear to use its memory for water maze task at all, always circling the maze at the roughly the same radius.