Convolutional Sequence to Sequence Learning

Rahulniyer (talk | contribs) |

Revision as of 19:38, 30 October 2017

Introduction

Sequence to sequence learning has been used to solve many tasks such as machine translation, speech recognition and text summarization. Most past models employ RNNs for this problem, with bidirectional RNNs with soft attention being the dominant approach. In contrast, CNNs have rarely been used for these tasks even though they have several advantages:

- Compared to recurrent layers, convolutions create representations for fixed-size contexts. However, the effective context size of the network can easily be made larger by stacking several layers on top of each other. This allows precise control of the maximum length of the dependencies to be modeled.

- Convolutional networks do not depend on the computations of the previous time step and therefore allow parallelization over every element in a sequence. This contrasts with RNNs which maintain a hidden state of the entire past that prevents parallel computation within a sequence.

- Multi-layer convolutional neural networks create hierarchical representations over the input sequence in which nearby input elements interact at lower layers while distant elements interact at higher layers.

In this paper the authors introduce an architecture for sequence learning based entirely on convolutional neural networks. Compared to recurrent models, computations over all elements can be fully parallelized during training to better exploit GPU hardware, and optimization is easier since the number of non-linearities is fixed and independent of the input length. The model uses gated linear units, which ease gradient propagation, and equips each decoder layer with a separate attention module. It outperforms the deep LSTM setup of Wu et al. (2016) in accuracy and is now the state-of-the-art model for neural machine translation.

Related Work

Bradbury et al. (2016) introduce quasi-recurrent neural networks (QRNNs), an approach to neural sequence modelling that alternates convolutional layers, which apply in parallel across timesteps, with a minimalist recurrent pooling function that applies in parallel across channels. They use QRNNs for sentiment classification and language modelling, and also briefly describe a QRNN-based architecture for sequence to sequence learning.

Kalchbrenner et al. (2016) introduce an architecture called ByteNet. ByteNet is a one-dimensional convolutional neural network composed of two parts, one to encode the source sequence and the other to decode the target sequence. This network has two core properties: it runs in time that is linear in the length of the sequences, and it sidesteps the need for excessive memorization.

However, none of the above approaches has demonstrated improvements over state-of-the-art results on large benchmark datasets. Gated convolutions have previously been explored for machine translation by Meng et al. (2015), but their evaluation was restricted to a small dataset. The authors themselves had earlier explored an architecture that used a CNN only in the encoder, while the decoder was still recurrent (Gehring et al., 2016).

Convolutional Architecture

Position Embeddings

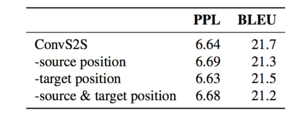

The architecture feeds both word embeddings and position embeddings into the convolutional layers. The position embeddings give the model a sense of order: they tell it which part of the input or output sequence it is currently dealing with.

For input words [math]\displaystyle{ x = (x_1, \ldots, x_m) }[/math] we get the word vector representation [math]\displaystyle{ w = (w_1, \ldots, w_m) }[/math] and the position vectors [math]\displaystyle{ p = (p_1, \ldots, p_m) }[/math], where [math]\displaystyle{ p_i }[/math] embeds the absolute position of the i-th word in the input sequence.

Both vectors are summed to get the input element representation [math]\displaystyle{ e = (w_1 + p_1, \ldots, w_m + p_m) }[/math].

The same is done for the output elements already generated by the decoder network, which yields the output element representations [math]\displaystyle{ g = (g_1, \ldots, g_n) }[/math] that are fed back into the decoder network.
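The element-wise sum of word and position embeddings can be illustrated with a minimal sketch. The lookup tables `word_table` and `pos_table` and their toy values are assumptions made for illustration only; in the model both tables are learned.

```python
def embed(tokens, word_table, pos_table):
    """Return e_i = w_i + p_i for every token, with p_i looked up by absolute position i."""
    out = []
    for i, tok in enumerate(tokens):
        w = word_table[tok]   # word embedding w_i
        p = pos_table[i]      # position embedding p_i
        out.append([wj + pj for wj, pj in zip(w, p)])
    return out


# Toy 2-dimensional tables (hypothetical values).
word_table = {"the": [0.1, 0.2], "cat": [0.3, -0.1]}
pos_table = [[0.0, 1.0], [1.0, 0.0]]

e = embed(["the", "cat"], word_table, pos_table)
```

The same word placed at a different position receives a different representation, which is what lets a purely convolutional model distinguish orderings.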

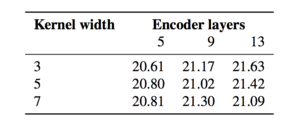

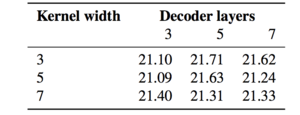

Convolutional Block Structure

Both encoder and decoder networks share a simple structure of blocks/layers that compute intermediate states based on a fixed number of input elements. The output of the l-th block is denoted by [math]\displaystyle{ h^l = (h_1^l, \ldots, h_n^l) }[/math] for the decoder and by [math]\displaystyle{ z^l = (z_1^l, \ldots, z_m^l) }[/math] for the encoder. Each block contains a one-dimensional convolution followed by a non-linearity. For a decoder network with a single block and kernel width k, each resulting state [math]\displaystyle{ h_i^1 }[/math] contains information over k input elements. Stacking several blocks on top of each other increases the number of input elements represented in a state. For instance, stacking 6 blocks with k = 5 results in an input field of 25 elements, i.e. each output depends on 25 input elements.
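The figure of 25 elements follows from the fact that the first position contributes one element and every block widens the input field by k - 1. A small sketch of that arithmetic, assuming stride 1 and no dilation (the function name is hypothetical):

```python
def receptive_field(num_blocks, kernel_width):
    """Number of input elements a single output state depends on."""
    return 1 + num_blocks * (kernel_width - 1)


single_block = receptive_field(1, 5)   # one block sees exactly k elements
six_blocks = receptive_field(6, 5)     # the example from the text
```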

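Each convolution kernel is parameterized as [math]\displaystyle{ W \in \mathbb{R}^{2d \times kd}, b_w \in \mathbb{R}^{2d} }[/math]. It takes as input [math]\displaystyle{ X \in \mathbb{R}^{k \times d} }[/math], a concatenation of k input elements embedded in d dimensions, and maps it to a single output element [math]\displaystyle{ Y \in \mathbb{R}^{2d} }[/math]. The non-linearity chosen is the Gated Linear Unit (GLU), mainly because it was shown to perform better for language modelling. With [math]\displaystyle{ Y = [A\ B] \in \mathbb{R}^{2d} }[/math] and [math]\displaystyle{ A, B \in \mathbb{R}^{d} }[/math], a GLU produces the output [math]\displaystyle{ v([A\ B]) = A \otimes \sigma(B) \in \mathbb{R}^{d} }[/math], where [math]\displaystyle{ \otimes }[/math] is element-wise multiplication and the gates [math]\displaystyle{ \sigma(B) }[/math] control which parts of A are relevant.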
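A minimal plain-Python sketch of the GLU: the 2d-dimensional convolution output is split into halves A and B, and A is scaled element-wise by the sigmoid of B. The function names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def glu(y):
    """v([A B]) = A * sigmoid(B); y has length 2d, the result has length d."""
    d = len(y) // 2
    a, b = y[:d], y[d:]
    return [ai * sigmoid(bi) for ai, bi in zip(a, b)]


# With B = 0 every gate equals sigmoid(0) = 0.5, so A is simply halved.
out = glu([2.0, -4.0, 0.0, 0.0])
```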

A residual connection is added from the input of each block to its output, which makes it possible to train deep models. He et al. (Deep Residual Learning for Image Recognition) showed that residual connections allow deeper networks to be trained and prevent the degradation of training accuracy that otherwise appears as depth grows. The block output is given by [math]\displaystyle{ h_i^l = v(W^l [h_{i-k/2}^{l-1}, \ldots, h_{i+k/2}^{l-1}] + b_w^l) + h_i^{l-1} }[/math]
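The block equation can be traced with a small, unoptimized sketch: gather the k neighbouring states, apply the linear map, pass the result through the GLU and add the block input. It assumes an odd kernel width and zero padding of k // 2 on each side (the encoder-style case); the names `conv_block` and `glu` and the toy values are illustrative.

```python
import math


def glu(y):
    d = len(y) // 2
    return [a / (1.0 + math.exp(-b)) for a, b in zip(y[:d], y[d:])]


def conv_block(h, W, b, k):
    """h: n vectors of size d. W: 2d rows of length k*d. b: length 2d.
    Returns n vectors of size d."""
    n, d = len(h), len(h[0])
    zero = [0.0] * d
    padded = [zero] * (k // 2) + h + [zero] * (k // 2)
    out = []
    for i in range(n):
        # Concatenate the k states centred on position i into one vector of size k*d.
        window = [x for vec in padded[i:i + k] for x in vec]
        y = [sum(w * x for w, x in zip(row, window)) + bj for row, bj in zip(W, b)]
        # GLU output plus the residual connection from the block input.
        out.append([v + r for v, r in zip(glu(y), h[i])])
    return out


# d = 1, k = 3: A sums the window, B = 0 gives a gate of 0.5.
h = [[1.0], [2.0], [3.0]]
W = [[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]]
b = [0.0, 0.0]
h_next = conv_block(h, W, b, k=3)
```

Because of the residual term, a block whose weights are all zero simply passes its input through unchanged.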

In the encoder, the input of each convolutional layer is padded so that the output matches the length of the input. The same scheme cannot be applied to the decoder, because no future information may be available when predicting the next element. Instead, the input of the decoder is padded with k-1 zero vectors on both the left and the right side, and the last k elements are then pruned from the convolutional output. The authors also add linear mappings to project between the embedding size [math]\displaystyle{ f }[/math] and the convolutional outputs of size 2d. Such a transform is applied to w when feeding embeddings to the encoder network, to the encoder output [math]\displaystyle{ z_j^u }[/math], to the final layer of the decoder just before the softmax [math]\displaystyle{ h^L }[/math], and to all decoder layers [math]\displaystyle{ h^l }[/math] before computing attention scores.

Finally, a probability distribution over the T possible next target elements [math]\displaystyle{ y_{i+1} }[/math] is computed from the top decoder output: [math]\displaystyle{ p(y_{i+1} | y_1, \ldots, y_i, x) = \mathrm{softmax}(W_o h_i^L + b_o) \in \mathbb{R}^T }[/math]

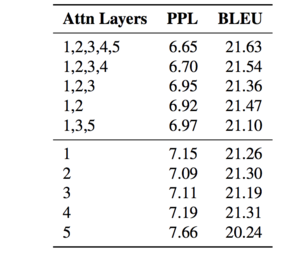

Multi-step Attention

A separate attention mechanism is used for each decoder block. To compute the attention, the decoder state of the current layer [math]\displaystyle{ h_i^l }[/math] is combined with the embedding of the previous target element [math]\displaystyle{ g_i }[/math], which gives the decoder state summary [math]\displaystyle{ d_i^l = W_d^l h_i^l + b_d^l + g_i }[/math]. For decoder layer l, the attention [math]\displaystyle{ a_{ij}^l }[/math] of state i and source element j is computed as [math]\displaystyle{ a_{ij}^l = \frac{\exp(d_i^l \cdot z_j^u)}{\sum_{t=1}^m \exp(d_i^l \cdot z_t^u)} }[/math], where [math]\displaystyle{ z_j^u }[/math] is the output of the last encoder block u. The conditional input to the decoder layer is a weighted sum of the encoder outputs and the input element embeddings: [math]\displaystyle{ c_i^l = \sum_{j=1}^m a_{ij}^l (z_j^u + e_j) }[/math]. This conditional input is then added to the decoder state [math]\displaystyle{ h_i^l }[/math].

The attention of the first layer determines a useful source context, which is then fed to the next layer; that layer takes this information into account when computing its own attention. Because the conditional input is added to the decoder state, [math]\displaystyle{ h_i^l \leftarrow h_i^l + c_i^l }[/math], the decoder also has access to the attention history of previous time steps.
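The three attention equations can be followed step by step in a small sketch for a single decoder position and a single layer. It assumes that all vectors already share the same dimension (the linear projections between embedding and hidden sizes are left out), and the function names and toy values are illustrative.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [x / total for x in exps]


def attend(h_i, g_i, W_d, b_d, z, e):
    """Return (a_i, updated h_i) for one decoder state h_i.
    z: encoder outputs z_j^u, e: input element embeddings e_j."""
    # d_i = W_d h_i + b_d + g_i
    d_i = [dot(row, h_i) + bj + gj for row, bj, gj in zip(W_d, b_d, g_i)]
    # a_ij = softmax over source positions of d_i . z_j
    a_i = softmax([dot(d_i, z_j) for z_j in z])
    # c_i = sum_j a_ij (z_j + e_j)
    c_i = [sum(a * (zj[t] + ej[t]) for a, zj, ej in zip(a_i, z, e))
           for t in range(len(h_i))]
    # The conditional input is added to the decoder state.
    return a_i, [hv + cv for hv, cv in zip(h_i, c_i)]


# Toy case: a zero state summary gives uniform attention over two source elements.
W_d = [[1.0, 0.0], [0.0, 1.0]]
a, h_new = attend([0.0, 0.0], [0.0, 0.0], W_d, [0.0, 0.0],
                  z=[[1.0, 0.0], [0.0, 1.0]], e=[[1.0, 1.0], [1.0, 1.0]])
```

Adding e_j to z_j^u means the conditional input carries both the contextualized encoder output and the representation of the specific source element.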

Normalization Strategy and Initialization

Refer to the appendix of the paper

Experimental Setup

Datasets

- WMT'16 English-Romanian - sentences longer than 175 words are removed; 2.8M sentence pairs for training.

- WMT'14 English-German - 4.5M sentence pairs.

- WMT'14 English-French - 36M sentence pairs; sentences longer than 175 words and pairs with a source/target length ratio exceeding 1.5 are removed.

- Abstractive summarization - trained on the Gigaword corpus; 3.8M examples for training.
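The length and ratio filters used for English-French could be sketched as below. Whitespace tokenization and a symmetric ratio (longer side divided by shorter side) are assumptions of this sketch; the summary does not specify either, and `keep_pair` is a hypothetical helper name.

```python
def keep_pair(source, target, max_len=175, max_ratio=1.5):
    """Return True if the sentence pair passes the length and ratio filters."""
    ns, nt = len(source.split()), len(target.split())
    if ns == 0 or nt == 0:
        return False
    if ns > max_len or nt > max_len:
        return False
    # Assumption: the ratio is taken as longer / shorter, so it is symmetric.
    return max(ns, nt) / min(ns, nt) <= max_ratio


kept = keep_pair("a b c", "x y")        # ratio exactly 1.5 is still allowed
dropped = keep_pair("a b c d", "x y")   # ratio 2.0 exceeds the limit
```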

Model Parameters and Optimization

- Used 512 hidden units for both encoder and decoder with output embeddings also of the same size.

- Optimizer - Nesterov's accelerated gradient method with a momentum of 0.99. Gradients are renormalized if their norm exceeds 0.1.

- Learning rate - 0.25. Once the validation perplexity stops improving, the learning rate is reduced by an order of magnitude after each epoch until it falls below [math]\displaystyle{ 10^{-4} }[/math].

- Mini-batches of 64 sentences.

- Dropout is applied to the embeddings, the decoder output and the input of the convolutional blocks.
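The gradient renormalization and the learning rate schedule from the list above can be sketched in plain Python. Treating a validation perplexity that is not strictly lower than the best value so far as "stopped improving", and ending training once the rate drops below the threshold, are assumptions of this sketch; the function names are hypothetical.

```python
import math


def clip_by_norm(grad, max_norm=0.1):
    """Rescale the gradient so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    scale = max_norm / norm
    return [g * scale for g in grad]


def lr_schedule(val_perplexities, lr=0.25, min_lr=1e-4, factor=0.1):
    """Return the learning rate used in each epoch, given the validation
    perplexity measured after that epoch."""
    rates, best, annealing = [], float("inf"), False
    for ppl in val_perplexities:
        if lr < min_lr:
            break                 # assumption: training ends here
        rates.append(lr)
        if ppl < best:
            best = ppl
        else:
            annealing = True      # perplexity stopped improving
        if annealing:
            lr *= factor          # reduce by an order of magnitude after each epoch
    return rates


clipped = clip_by_norm([0.3, 0.4])                              # norm 0.5 is rescaled to 0.1
rates = lr_schedule([10.0, 8.0, 8.5, 8.4, 8.3, 8.2, 8.1])
```

In this toy run the rate stays at 0.25 for three epochs and then drops to 0.025, 0.0025 and 0.00025 before training ends.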

Evaluation

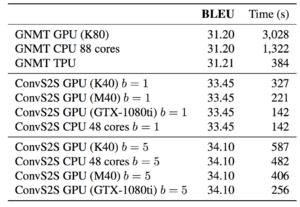

Translations are generated by beam search with a beam of width 5, and the log-likelihood scores are normalized by sentence length. For word-based models, unknown words are replaced after generation based on the attention scores: the source word with the highest attention is looked up in a pre-computed dictionary, and if the dictionary contains no translation, the source word is simply copied. The dictionaries were extracted from the word-aligned training data obtained with fast_align (Dyer et al., 2013), where each source word is mapped to the target word it is most frequently aligned to. Since the model has one attention per decoder layer, the attention scores are averaged over all layers. Finally, case-sensitive tokenized BLEU is reported for all tasks except WMT'16 English-Romanian, where detokenized BLEU is used.
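The length normalization and the unknown-word replacement described above can be sketched as follows. The placeholder token `UNK`, the nested-list attention layout and the toy sentences are assumptions chosen for illustration; this is not the authors' implementation.

```python
def length_normalized_score(log_probs):
    """Average per-token log-likelihood of one hypothesis."""
    return sum(log_probs) / len(log_probs)


def replace_unknowns(target, source, attention_per_layer, dictionary, unk="UNK"):
    """attention_per_layer[l][i][j]: attention of decoder layer l for
    target position i over source position j."""
    num_layers = len(attention_per_layer)
    out = []
    for i, tok in enumerate(target):
        if tok != unk:
            out.append(tok)
            continue
        # Average the attention scores over all decoder layers.
        avg = [sum(layer[i][j] for layer in attention_per_layer) / num_layers
               for j in range(len(source))]
        src_word = source[max(range(len(source)), key=avg.__getitem__)]
        # Use the dictionary translation if there is one, else copy the source word.
        out.append(dictionary.get(src_word, src_word))
    return out


source = ["das", "Haus", "Zugspitze"]
target = ["the", "UNK", "UNK"]
attention = [
    [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6]],   # layer 1
    [[0.7, 0.2, 0.1], [0.3, 0.6, 0.1], [0.1, 0.1, 0.8]],   # layer 2
]
fixed = replace_unknowns(target, source, attention, {"Haus": "house"})
```

Here the first unknown word is resolved through the dictionary, while the second has no entry and is copied from the source.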

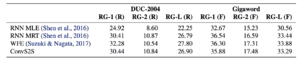

Results

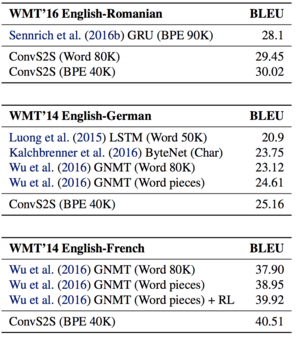

- ConvS2S outperforms the WMT’16 winning entry for English-Romanian by 1.9 BLEU with a BPE encoding and by 1.3 BLEU with a word factored vocabulary.

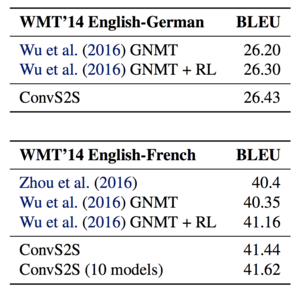

- The results (Result 1) show that the convolutional model outperforms GNMT by 0.5 BLEU on WMT'14 English to German.

- Finally, the model is evaluated on WMT'14 English to French, where it improves over GNMT in the same setting by 1.6 BLEU on average. It also outperforms GNMT's reinforcement learning (RL) models by 0.5 BLEU.

The authors also ensemble eight likelihood-trained models for both WMT'14 English-German and WMT'14 English-French and compare them to previous work that reported ensemble results. The ensembles outperform all of these models.

References

- Cho, Kyunghyun, Van Merrienboer, Bart, Gulcehre, Caglar, Bahdanau, Dzmitry, Bougares, Fethi, Schwenk, Holger, and Bengio, Yoshua. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. In Proc. of EMNLP, 2014.

- Bradbury, James, Merity, Stephen, Xiong, Caiming, and Socher, Richard. Quasi-Recurrent Neural Networks. arXiv preprint arXiv:1611.01576, 2016.

- He, Kaiming, Zhang, Xiangyu, Ren, Shaoqing, and Sun, Jian. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, pp. 1026–1034, 2015b.

- Meng, Fandong, Lu, Zhengdong, Wang, Mingxuan, Li, Hang, Jiang, Wenbin, and Liu, Qun. Encoding Source Language with Convolutional Neural Network for Machine Translation. In Proc. of ACL, 2015.

- Bahdanau, Dzmitry, Cho, Kyunghyun, and Bengio, Yoshua. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473, 2014.

- Gehring, Jonas, Auli, Michael, Grangier, David, and Dauphin, Yann N. A Convolutional Encoder Model for Neural Machine Translation. arXiv preprint arXiv:1611.02344, 2016.

- Dyer, Chris, Chahuneau, Victor, and Smith, Noah A. A Simple, Fast, and Effective Reparameterization of IBM Model 2. In Proc. of ACL, 2013.