Memory Networks

Revision as of 23:33, 16 November 2015

Content in progress

Introduction

Most supervised machine learning models are designed to approximate a function that maps input data to a desired output (e.g. a class label for an image or a translation of a sentence from one language to another). In this sense, such models perform inference using a 'fixed' memory in the form of a set of parameters learned during training. For example, the memory of a recurrent neural network consists largely of the weights on the recurrent connections to its hidden layer (along with the layer's activities). This form of memory is inherently limited, because the dimensionality of the weights in question is fixed. It is largely for this reason that recurrent nets have difficulty learning long-range dependencies in sequential data: learning such dependencies requires remembering items in a sequence over a large number of time steps.

For an interesting class of problems, it is essential for a model to be able to learn long-term dependencies, and to more generally be able to learn to perform inferences using an arbitrarily large memory. Question-answering tasks are paradigmatic of this class of problems, since performing well on such tasks requires remembering all of the information that constitutes a possible answer to the questions being posed. In principle, a recurrent network such as an LSTM could learn to perform QA tasks, but in practice, the amount of information that can be retained by the weights and the hidden states in the LSTM is simply insufficient.

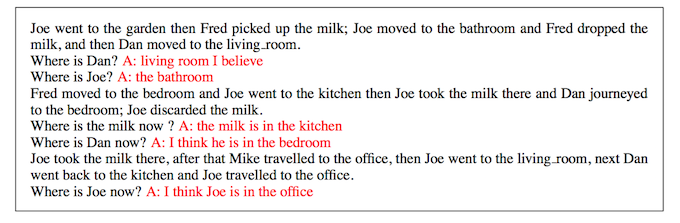

Given this need for a model architecture that combines inference and memory in a sophisticated manner, the authors of this paper propose what they refer to as a "Memory Network". In brief, a memory network is a model that learns to read and write data to an arbitrarily large long-term memory, while also using the data in this memory to perform inferences. The rest of this summary describes the components of a memory network in greater detail, along with some experiments describing its application to a question answering task involving short stories. The accompanying figure gives an example of the model's ability to answer simple questions after being presented with short, multi-sentence stories.

Model Architecture

A memory network is composed of a memory [math]\displaystyle{ \ m }[/math] (in the form of a collection of vectors or strings, indexed individually as [math]\displaystyle{ \ m_i }[/math]), and four possibly learned functions [math]\displaystyle{ \ I }[/math], [math]\displaystyle{ \ G }[/math], [math]\displaystyle{ \ O }[/math], and [math]\displaystyle{ \ R }[/math]. The functions are defined as follows:

- [math]\displaystyle{ \ I }[/math] maps a natural language expression onto an 'input' feature representation (e.g., a real-valued vector). The input can either be a fact to be added to the memory [math]\displaystyle{ \ m }[/math] (e.g. 'John is at the university'), or a question for which an answer is being sought (e.g. 'Where is John?').

- [math]\displaystyle{ \ G }[/math] updates the contents of the memory [math]\displaystyle{ \ m }[/math] on the basis of an input. The update can involve simply writing the input to a new memory location, or it can involve modifying or compressing existing memories to perform a kind of generalization on the state of the memory.

- [math]\displaystyle{ \ O }[/math] produces an 'output' feature representation given a new input and the current state of the memory. The input and output feature representations reside in the same embedding space.

- [math]\displaystyle{ \ R }[/math] produces a response given an output feature representation. This response is usually a word or a sentence, but in principle it could also be an action of some kind (e.g. the movement of a robot).

In overview, the model operates as follows. An input x is first mapped to a feature representation [math]\displaystyle{ \ I(x) }[/math]. Then, for every memory index i, the following update is applied: [math]\displaystyle{ \ m_i = G(m_i, I(x), m) }[/math]. This means that each memory is updated on the basis of the input x and the current state of the memory [math]\displaystyle{ \ m }[/math]. In the case where each input is simply written to memory, [math]\displaystyle{ \ G }[/math] might just select an index that is currently empty and write [math]\displaystyle{ \ I(x) }[/math] to the corresponding memory location. Next, an output feature representation is computed as [math]\displaystyle{ \ o=O(I(x), m) }[/math], and a response, [math]\displaystyle{ \ r }[/math], is computed directly from this feature representation as [math]\displaystyle{ \ r=R(o) }[/math]. [math]\displaystyle{ \ O }[/math] can be interpreted as retrieving a small selection of memories that are relevant to producing a good response, while [math]\displaystyle{ \ R }[/math] produces the response itself from the feature representation that [math]\displaystyle{ \ O }[/math] builds out of those memories.
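The flow just described can be sketched in Python. This is only an illustration under stated assumptions, not the authors' implementation: the class `MemoryNetwork` and the four toy components (`identity_input`, `append_to_memory`, `overlap_output`, `last_word_response`) are hypothetical names, the components are hand-written rather than learned, and `G` is simplified to take and return the whole memory instead of updating each slot separately.

```python
import re
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class MemoryNetwork:
    """Wires together the four components I, G, O and R (all supplied by the caller)."""
    I: Callable[[str], Any]                    # input feature map
    G: Callable[[List[Any], Any], List[Any]]   # memory update (simplified: whole memory in, whole memory out)
    O: Callable[[Any, List[Any]], Any]         # output feature map
    R: Callable[[Any], Any]                    # response
    memory: List[Any] = field(default_factory=list)

    def write(self, x: str) -> None:
        """Store a fact: m <- G(m, I(x))."""
        self.memory = self.G(self.memory, self.I(x))

    def answer(self, x: str) -> Any:
        """Answer a question: r = R(O(I(x), m))."""
        return self.R(self.O(self.I(x), self.memory))


def words(text: str) -> set:
    """Lower-cased word set of a string (toy tokenizer, an assumption)."""
    return set(re.findall(r"\w+", text.lower()))


def identity_input(x: str) -> str:
    return x  # I(x) = x


def append_to_memory(memory: List[str], features: str) -> List[str]:
    return memory + [features]  # write to a new slot, leave old slots untouched


def overlap_output(x: str, memory: List[str]) -> List[str]:
    # Toy stand-in for a learned O: pick the memory sharing the most words with x.
    best = max(memory, key=lambda m: len(words(x) & words(m)))
    return [x, best]


def last_word_response(o: List[str]) -> str:
    # Toy stand-in for a learned R: return the last word of the supporting memory.
    return re.findall(r"\w+", o[-1])[-1]


net = MemoryNetwork(identity_input, append_to_memory, overlap_output, last_word_response)
net.write("John is at the university")
net.write("Mary went to the kitchen")
print(net.answer("Where is John?"))  # prints: university
```

Passing the components in as plain callables keeps the control flow separate from the choice of `I`, `G`, `O` and `R`, so learned versions could be substituted without changing `write` or `answer`.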

A Basic Implementation

In a simple version of the memory network, input text is written to memory in unaltered form. In other words, [math]\displaystyle{ \ I(x) }[/math] simply returns x, and [math]\displaystyle{ \ G }[/math] writes this text to a new memory slot [math]\displaystyle{ \ m_{N+1} }[/math], where [math]\displaystyle{ \ N }[/math] is the number of currently filled slots. The memory is accordingly an array of strings, and adding a new string does nothing to modify existing strings.

Given this, most of the work done by the model is performed by the functions [math]\displaystyle{ \ O }[/math] and [math]\displaystyle{ \ R }[/math]. The job of [math]\displaystyle{ \ O }[/math] is to produce an output feature representation by selecting [math]\displaystyle{ \ k }[/math] supporting memories from [math]\displaystyle{ \ m }[/math] on the basis of the input x. In the experiments described in this paper, k is set to either 1 or 2. In the case that k=1, [math]\displaystyle{ \ O }[/math] behaves as follows:

- [math]\displaystyle{ \ o_1 = O_1(x, m) = \arg\max_{i = 1, \ldots, N} S_O(x, m_i) }[/math]

where [math]\displaystyle{ \ S_O }[/math] is a function that scores a candidate memory for its compatibility with x. Essentially, one 'supporting' memory is selected from [math]\displaystyle{ \ m }[/math] as being most likely to contain the information needed to answer the question posed in [math]\displaystyle{ \ x }[/math]. Note that [math]\displaystyle{ \ o_1 }[/math] is the index of the selected memory; the output in this case is [math]\displaystyle{ \ o = [x, m_{o_1}] }[/math], a list containing the input question and one supporting memory. Alternatively, in the case that k=2, a second supporting memory is selected on the basis of the input and the first supporting memory as follows:

- [math]\displaystyle{ \ o_2 = O_2(x, m) = \arg\max_{i = 1, \ldots, N} S_O([x, m_{o_1}], m_i) }[/math]

Now, the overall output is [math]\displaystyle{ \ o = [x, m_{o_1}, m_{o_2}] }[/math], where [math]\displaystyle{ \ o_1 }[/math] and [math]\displaystyle{ \ o_2 }[/math] are the indices of the two selected memories. (These lists are translated into feature representations as described below.) Finally, the result of [math]\displaystyle{ \ O }[/math] is used to produce a response in the form of a single word via [math]\displaystyle{ \ R }[/math] as follows:

- [math]\displaystyle{ \ r = \arg\max_{w \in W} S_R([x, m_{o_1}, m_{o_2}], w) }[/math]
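The selection and response steps above can be sketched as two small functions that take the scoring functions as arguments. This is a minimal sketch, not the authors' code: the names `select_supporting`, `respond`, `toy_s_o` and `toy_s_r` are hypothetical, and the toy scorers are hand-written word-overlap heuristics standing in for the learned [math]\displaystyle{ \ S_O }[/math] and [math]\displaystyle{ \ S_R }[/math]. One further assumption: the toy scorer gives already-selected memories a score of negative infinity so that the second pick differs from the first, which the formulas as written do not require.

```python
import re
from typing import Callable, List, Sequence

Scorer = Callable[[List[str], str], float]


def select_supporting(x: str, memory: Sequence[str], s_o: Scorer, k: int = 2) -> List[str]:
    """Greedily pick k supporting memories; each pick is scored against x plus earlier picks."""
    context = [x]
    for _ in range(k):
        best = max(memory, key=lambda m: s_o(context, m))  # argmax over memory slots
        context.append(best)
    return context  # [x, m_o1, ..., m_ok]


def respond(output: List[str], candidates: Sequence[str], s_r: Scorer) -> str:
    """Return the candidate word with the highest score against the output list."""
    return max(candidates, key=lambda w: s_r(output, w))


def words(text: str) -> set:
    """Lower-cased word set of a string (toy tokenizer, an assumption)."""
    return set(re.findall(r"\w+", text.lower()))


def toy_s_o(context: List[str], candidate: str) -> float:
    # Hypothetical scorer: word overlap with everything in the context so far.
    if candidate in context[1:]:
        return float("-inf")  # assumption: do not pick the same memory twice
    seen = set().union(*(words(c) for c in context))
    return float(len(seen & words(candidate)))


def toy_s_r(output: List[str], word: str) -> float:
    # Hypothetical scorer: prefer words that occur in the last supporting memory.
    return 1.0 if word.lower() in words(output[-1]) else 0.0


memory = [
    "John picked up the milk",
    "John went to the office",
    "Mary went to the kitchen",
]
output = select_supporting("Where is the milk?", memory, toy_s_o, k=2)
print(output[1:])                                            # the two supporting memories
print(respond(output, ["kitchen", "office", "milk"], toy_s_r))  # prints: office
```

The second selection depends on the first: only after "John picked up the milk" has been added to the context does "John went to the office" outscore the sentence about Mary.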

In short, a response is produced by scoring each word in a set of candidate words against the representation produced by combining the input with the two supporting memories. The highest-scoring candidate word is then chosen as the model's output. The learned portions of [math]\displaystyle{ \ O }[/math] and [math]\displaystyle{ \ R }[/math] are the parameters of the functions [math]\displaystyle{ \ S_O }[/math] and [math]\displaystyle{ \ S_R }[/math], which embed the raw text constituting each function argument and then return the dot product of the two embeddings as a score. Formally, the function [math]\displaystyle{ \ S_O }[/math] can be defined as follows; [math]\displaystyle{ \ S_R }[/math] is defined analogously:

- [math]\displaystyle{ \ S_O(x, y) = \Phi_x(x)^T U^T U \Phi_y(y) }[/math]
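As a rough illustration of this scoring function, the NumPy sketch below embeds both arguments and takes the dot product of the embeddings. Several things here are assumptions made for the example rather than details from the text: the feature maps [math]\displaystyle{ \ \Phi_x }[/math] and [math]\displaystyle{ \ \Phi_y }[/math] are both replaced by a single bag-of-words count vector (`bag_of_words`), the vocabulary is a tiny hand-made dictionary, and [math]\displaystyle{ \ U }[/math] is filled with random values instead of being learned. The names `bag_of_words` and `score_o` are hypothetical.

```python
import re
import numpy as np


def bag_of_words(text: str, vocab: dict) -> np.ndarray:
    """Count vector over a fixed vocabulary; words outside the vocabulary are ignored."""
    phi = np.zeros(len(vocab))
    for token in re.findall(r"\w+", text.lower()):
        if token in vocab:
            phi[vocab[token]] += 1.0
    return phi


def score_o(x: str, y: str, U: np.ndarray, vocab: dict) -> float:
    """S_O(x, y) = Phi(x)^T U^T U Phi(y), computed as a dot product of two embeddings."""
    embed_x = U @ bag_of_words(x, vocab)  # shape (n,)
    embed_y = U @ bag_of_words(y, vocab)  # shape (n,)
    return float(embed_x @ embed_y)


# Hypothetical vocabulary and an untrained (random) embedding matrix U of shape (n, D).
vocab = {"john": 0, "is": 1, "at": 2, "the": 3, "university": 4, "where": 5}
rng = np.random.default_rng(0)
U = rng.normal(size=(3, len(vocab)))

print(score_o("Where is John?", "John is at the university", U, vocab))
```

Computing `U @ phi` for each argument first and then taking a dot product gives the same value as the matrix product in the formula, while avoiding forming the D-by-D matrix [math]\displaystyle{ \ U^T U }[/math] explicitly.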