goingDeeperWithConvolutions

Revision as of 20:44, 26 October 2015

Introduction

In the last three years, owing to advances in deep learning, and more concretely in convolutional networks [an introduction of CNN], the quality of image recognition has improved dramatically. The error rates in the ILSVRC competition dropped significantly year by year.[LSVRC] This paper<ref name=gl> Szegedy, Christian, et al. "Going deeper with convolutions." arXiv preprint arXiv:1409.4842 (2014). </ref> proposes a new deep convolutional neural network architecture codenamed Inception. Using the Inception module and a carefully crafted design, the researchers built a 22-layer-deep network called GoogLeNet, which uses 12 times fewer parameters than the winning architecture of ILSVRC 2012 while being significantly more accurate.<ref name=im> Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "Imagenet classification with deep convolutional neural networks." Advances in Neural Information Processing Systems. 2012. </ref>

Motivation

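The authors' main motivations for their "Inception module" approach to CNN architecture are:

- Large CNNs may have more expressive power, but they are also prone to overfitting because of their large number of parameters.

- Uniformly increasing the network size dramatically increases the computational resources required: if two convolutional layers are chained, any uniform increase in their number of filters results in a quadratic increase in computation.

- Sparse networks are theoretically attractive, but computation on non-uniform sparse data structures is very inefficient on current hardware.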
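The cost of uniformly enlarging a network can be made concrete with a little arithmetic. The sketch below counts multiply-accumulate (MAC) operations of a plain k x k convolution, assuming stride 1, "same" padding and no biases; the layer sizes (28 x 28 maps, 64 input channels, 3 x 3 kernels) are illustrative assumptions, not GoogLeNet's. Doubling the filters of two chained layers quadruples the second layer's cost, because both its input depth and its output depth double.

```python
def conv_macs(height: int, width: int, k: int, c_in: int, c_out: int) -> int:
    """Multiply-accumulates of a k x k convolution (stride 1, 'same' padding, no bias)."""
    return height * width * k * k * c_in * c_out

def chained_cost(filters: int, c_in: int = 64, size: int = 28, k: int = 3) -> int:
    """Two chained k x k conv layers, each with `filters` filters (illustrative sizes)."""
    first = conv_macs(size, size, k, c_in, filters)      # linear in `filters`
    second = conv_macs(size, size, k, filters, filters)  # quadratic in `filters`
    return first + second

# Doubling both the input and output depth of the second layer quadruples its cost.
print(conv_macs(28, 28, 3, 256, 256) / conv_macs(28, 28, 3, 128, 128))  # 4.0
# The two-layer total grows by a factor between 2 and 4.
print(chained_cost(256) / chained_cost(128))
```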

Why Sparse Matrices are inefficient

Sparse matrices stored in Compressed Row Storage (CRS) format can be expensive to access at specific indices, because finding a single entry requires searching the stored column indices of its row. Matrix-vector products are also costly, because CRS requires an indirect addressing step for every single scalar operation in a matrix-vector product. (source: netlib.org manual)

For example, consider a non-symmetric sparse matrix A. The matrix and its CRS format, specified by the arrays `{val, col_ind, row_ptr}`, are given below:

File:crs sparse matrix details 1.gif

File:crs sparse matrix details 2.gif
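The access pattern described above can be seen in a minimal pure-Python sketch of CRS. It uses 0-based indices (the netlib manual uses 1-based ones) and a small illustrative matrix of our own, not the matrix in the figures. Note that `crs_matvec` reads `x[col_ind[k]]`, an indirect access for every stored entry, and that `crs_get` must scan a row to find a single element; the function names are hypothetical.

```python
from typing import List, Tuple

def dense_to_crs(a: List[List[float]]) -> Tuple[List[float], List[int], List[int]]:
    """Convert a dense row-major matrix to CRS arrays (val, col_ind, row_ptr), 0-based."""
    val: List[float] = []
    col_ind: List[int] = []
    row_ptr: List[int] = [0]
    for row in a:
        for j, x in enumerate(row):
            if x != 0:
                val.append(x)
                col_ind.append(j)
        row_ptr.append(len(val))  # row i occupies val[row_ptr[i]:row_ptr[i + 1]]
    return val, col_ind, row_ptr

def crs_get(val, col_ind, row_ptr, i: int, j: int) -> float:
    """Random access to A[i][j]: scan the stored column indices of row i."""
    for k in range(row_ptr[i], row_ptr[i + 1]):
        if col_ind[k] == j:
            return val[k]
    return 0  # entry not stored, so it is zero

def crs_matvec(val, col_ind, row_ptr, x: List[float]) -> List[float]:
    """y = A x; every multiply needs the indirect lookup x[col_ind[k]]."""
    n_rows = len(row_ptr) - 1
    y = [0.0] * n_rows
    for i in range(n_rows):
        for k in range(row_ptr[i], row_ptr[i + 1]):
            y[i] += val[k] * x[col_ind[k]]
    return y

A = [[10, 0, 0, -2],
     [3, 9, 0, 0],
     [0, 7, 8, 7],
     [0, 0, 0, 5]]
val, col_ind, row_ptr = dense_to_crs(A)
# val     -> [10, -2, 3, 9, 7, 8, 7, 5]
# col_ind -> [0, 3, 0, 1, 1, 2, 3, 3]
# row_ptr -> [0, 2, 4, 7, 8]
print(crs_matvec(val, col_ind, row_ptr, [1, 1, 1, 1]))  # [8.0, 12.0, 22.0, 5.0]
```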

Related work

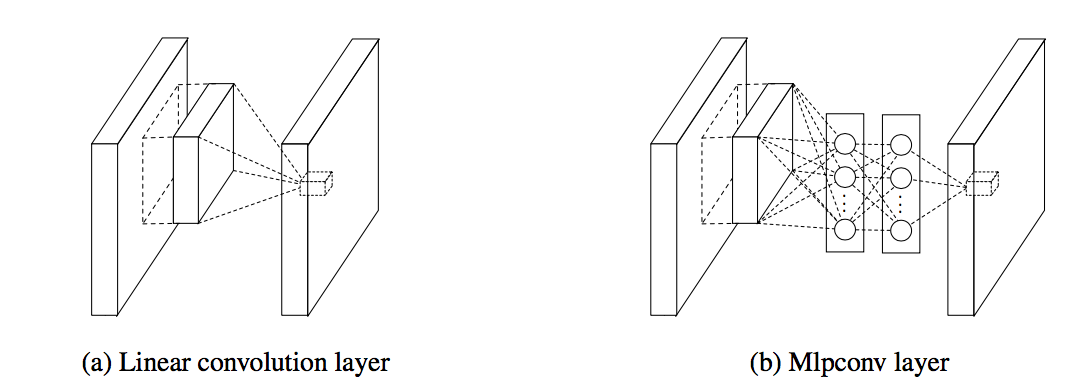

In 2013, Lin et al.<ref name=nin> Lin, Min, Qiang Chen, and Shuicheng Yan. "Network in network." arXiv preprint arXiv:1312.4400 (2013). </ref> pointed out that the convolution filter in a CNN is a generalized linear model (GLM) for the underlying data patch, and that the level of abstraction achieved by a GLM is low. They suggested replacing the GLM with a "micro network" structure, a general nonlinear function approximator; a convolutional layer built this way is called an mlpconv layer.
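The difference between a GLM filter bank and a "micro network" can be sketched at a single spatial position. A conventional layer applies a linear map to the flattened patch followed by a nonlinearity; an mlpconv layer feeds that result through further fully connected layers, which, applied at every position, are equivalent to stacked 1 x 1 convolutions. This is a pure-Python sketch with made-up weights and sizes; it illustrates the structure only, not Lin et al.'s configuration.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # shape: (out_dim, in_dim)

def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]

def linear(w: Matrix, b: Vector, v: Vector) -> Vector:
    """Affine map w @ v + b."""
    return [sum(wi * vi for wi, vi in zip(row, v)) + bi for row, bi in zip(w, b)]

def glm_unit(w: Matrix, b: Vector, patch: Vector) -> Vector:
    """Conventional conv filter bank on one flattened patch: linear map, then ReLU."""
    return relu(linear(w, b, patch))

def mlpconv_unit(layers, patch: Vector) -> Vector:
    """mlpconv on one flattened patch: a small MLP (linear + ReLU per layer).
    Layers after the first act like 1 x 1 convolutions when slid over the image."""
    h = patch
    for w, b in layers:
        h = relu(linear(w, b, h))
    return h

patch = [1.0, -2.0, 0.5, 3.0]  # a flattened 2 x 2 single-channel patch (toy values)
w1, b1 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1]], [0.0, 0.0, 0.0]  # 3 filters
w2, b2 = [[1, -1, 1]], [0.5]   # one extra layer: a 1 x 1 conv over 3 channels
print(glm_unit(w1, b1, patch))                    # [1.0, 0.0, 3.5]
print(mlpconv_unit([(w1, b1), (w2, b2)], patch))  # [5.0]
```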

In the same paper<ref name=nin></ref>, Lin et al. also proposed a new output layer to improve performance. Traditionally, the feature maps of the last convolutional layer are vectorized and fed into fully connected layers followed by a softmax logistic regression layer<ref name=im></ref>. Lin et al. argued that this structure is prone to overfitting, which hampers the generalization ability of the overall network. To address this, they proposed an alternative strategy called global average pooling: the last mlpconv layer generates one feature map for each category of the classification task, each feature map is averaged, and the resulting vector is fed directly into the softmax layer. Lin et al. claim that this strategy has the following advantages over fully connected layers. First, it is more native to the convolution structure, since it enforces correspondences between feature maps and categories; the feature maps can therefore be interpreted as category confidence maps. Second, global average pooling has no parameters to optimize, so overfitting is avoided at this layer. Third, global average pooling sums out the spatial information, which makes it more robust to spatial translations of the input.
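Global average pooling as described above can be sketched in a few lines of pure Python, assuming the last layer already produces one H x W confidence map per class (the toy maps below are made up). The pooling step has no parameters, and moving activity within a map leaves its average unchanged.

```python
import math
from typing import List

FeatureMap = List[List[float]]  # one H x W map

def global_average_pool(maps: List[FeatureMap]) -> List[float]:
    """Average each per-class feature map over all spatial positions (no parameters)."""
    return [sum(map(sum, fm)) / (len(fm) * len(fm[0])) for fm in maps]

def softmax(z: List[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

maps = [
    [[1.0, 3.0], [2.0, 2.0]],      # class 0 confidence map
    [[0.0, 0.0], [4.0, 0.0]],      # class 1 confidence map
    [[-1.0, -1.0], [-1.0, -1.0]],  # class 2 confidence map
]
scores = global_average_pool(maps)  # [2.0, 1.0, -1.0]
probs = softmax(scores)             # fed directly to the softmax, no FC layer
```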

Inception module

In "Going deeper with convolutions", Szegedy, Christian, et al. argued that convolutional filters of different sizes can cover different clusters of information. As a matter of convenience rather than necessity, they restricted the filter sizes to 1 x 1, 3 x 3 and 5 x 5. Additionally, since pooling operations have been essential to the success of other state-of-the-art convolutional networks, they also added a parallel pooling path to the module. The outputs of all branches are concatenated along the filter (channel) dimension; together, these make up the naive Inception module.
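In the naive module every branch sees the same input and the branch outputs are stacked along the channel axis. The sketch below tracks only tensor shapes (channels, height, width), assuming stride 1 and "same" padding so all branches keep the input's spatial size; the filter counts are hypothetical. It also illustrates a drawback the paper points out: since max pooling passes the input's channel count through unchanged, the output depth can only grow from module to module.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)

def naive_inception_shape(inp: Shape, n1x1: int, n3x3: int, n5x5: int) -> Shape:
    """Output shape of a naive Inception module (stride 1, 'same' padding).

    Branches: 1x1 conv, 3x3 conv, 5x5 conv and 3x3 max pooling, concatenated
    along the channel axis. Max pooling keeps the input's channel count."""
    c, h, w = inp
    branch_channels = [n1x1, n3x3, n5x5, c]  # pooling branch passes c channels through
    return (sum(branch_channels), h, w)

shape = (192, 28, 28)
for _ in range(3):  # stack three identical naive modules (hypothetical filter counts)
    shape = naive_inception_shape(shape, 64, 128, 32)
    print(shape)  # (416, 28, 28), then (640, 28, 28), then (864, 28, 28)
```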

Inspired by "Network in Network", Szegedy, Christian, et al. chose to use 1 x 1 convolutional layers as the "micro network" suggested by Lin et al. The 1 x 1 convolutions also serve as dimension-reduction modules that remove computational bottlenecks: before the expensive 3 x 3 and 5 x 5 convolutions, 1 x 1 convolutions reduce the number of input feature maps.
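The saving from the 1 x 1 reductions can be estimated by counting multiply-accumulate (MAC) operations. The sizes below (a 28 x 28 input with 192 channels, a 5 x 5 branch with 32 filters, and a 16-filter reduction) are illustrative assumptions; stride 1, "same" padding and no biases are assumed.

```python
def conv_macs(h: int, w: int, k: int, c_in: int, c_out: int) -> int:
    """MACs of a k x k convolution, stride 1, 'same' padding, no bias."""
    return h * w * k * k * c_in * c_out

H = W = 28
C_IN, C_OUT, REDUCE = 192, 32, 16  # illustrative sizes (assumption)

direct = conv_macs(H, W, 5, C_IN, C_OUT)               # 5x5 applied to all 192 maps
reduced = (conv_macs(H, W, 1, C_IN, REDUCE)            # 1x1 reduction to 16 maps
           + conv_macs(H, W, 5, REDUCE, C_OUT))        # 5x5 on the reduced maps

print(f"direct 5x5:    {direct:,} MACs")          # 120,422,400
print(f"1x1 then 5x5:  {reduced:,} MACs")         # 12,443,648
print(f"saving factor: {direct / reduced:.1f}x")  # 9.7x
```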

GoogLeNet

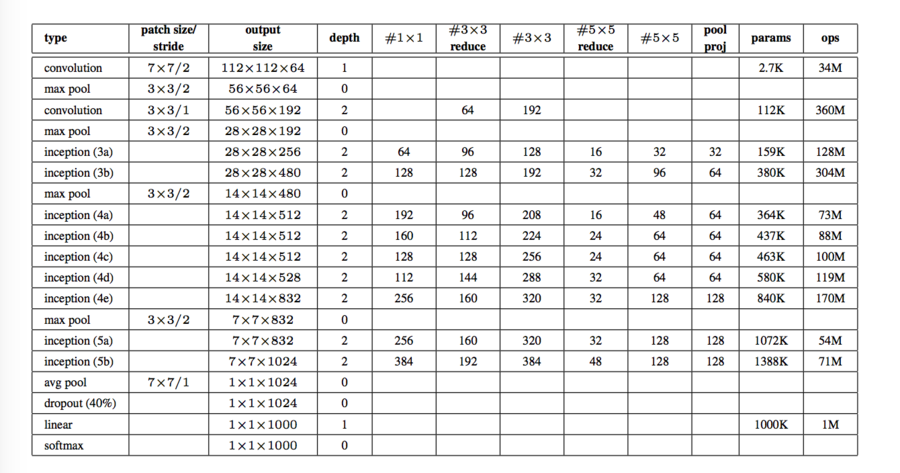

The structure of GoogLeNet can be seen in this figure.

"#3 x 3 reduce" and "#5 x 5 reduce" stand for the number of 1 x 1 filters in the reduction layers used before the 3 x 3 and 5 x 5 convolutions, respectively.
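The columns of such a table determine each module's output depth and weight count. A hedged sketch: the dataclass below mirrors the per-module columns (#1 x 1, #3 x 3 reduce, #3 x 3, #5 x 5 reduce, #5 x 5, and "pool proj", the 1 x 1 filters applied after the module's max pooling), counts convolution weights only (no biases), and is fed hypothetical numbers rather than values read from the figure. The field names are our own labels.

```python
from dataclasses import dataclass

@dataclass
class InceptionSpec:
    """One row of the architecture table (field names are hypothetical labels)."""
    n1x1: int       # "#1 x 1": 1x1 filters in the direct branch
    reduce3x3: int  # "#3 x 3 reduce": 1x1 filters before the 3x3 conv
    n3x3: int       # "#3 x 3"
    reduce5x5: int  # "#5 x 5 reduce": 1x1 filters before the 5x5 conv
    n5x5: int       # "#5 x 5"
    pool_proj: int  # 1x1 filters after the 3x3 max pooling

def output_channels(s: InceptionSpec) -> int:
    """Depth of the concatenated output; reduce layers only feed other convs."""
    return s.n1x1 + s.n3x3 + s.n5x5 + s.pool_proj

def conv_weights(c_in: int, s: InceptionSpec) -> int:
    """Convolution weights of the module (biases ignored)."""
    return (c_in * s.n1x1
            + c_in * s.reduce3x3 + 3 * 3 * s.reduce3x3 * s.n3x3
            + c_in * s.reduce5x5 + 5 * 5 * s.reduce5x5 * s.n5x5
            + c_in * s.pool_proj)

spec = InceptionSpec(n1x1=32, reduce3x3=48, n3x3=64, reduce5x5=8, n5x5=16, pool_proj=16)
print(output_channels(spec), conv_weights(100, spec))  # 128 41248
```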

To encourage discrimination in the lower stages of the classifier and to increase the gradient signal that gets propagated back, the authors added auxiliary classifiers connected to the outputs of the Inception modules (4a) and (4d). During training, their losses are added to the total loss of the network with a discount weight of 0.3. At inference time, these auxiliary networks are discarded.

The networks were trained using the DistBelief<ref> Dean, Jeffrey, et al. "Large scale distributed deep networks." Advances in Neural Information Processing Systems. 2012. </ref> distributed machine learning system, with a modest amount of model and data parallelism.
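The discounted auxiliary losses amount to a weighted sum. A minimal sketch, assuming each head's loss is the softmax cross-entropy of its logits for a single example; the function names and toy logits are hypothetical. At inference, only the main head's scores are used.

```python
import math
from typing import List

AUX_WEIGHT = 0.3  # discount weight for the auxiliary classifiers

def cross_entropy(logits: List[float], label: int) -> float:
    """Softmax cross-entropy for one example, computed with log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]

def training_loss(main: List[float], aux_4a: List[float],
                  aux_4d: List[float], label: int) -> float:
    """Total training loss: main loss plus 0.3 x each auxiliary loss."""
    return (cross_entropy(main, label)
            + AUX_WEIGHT * cross_entropy(aux_4a, label)
            + AUX_WEIGHT * cross_entropy(aux_4d, label))

def predict(main: List[float]) -> int:
    """Inference: auxiliary heads are discarded; only the main head is used."""
    return max(range(len(main)), key=main.__getitem__)
```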

ILSVRC 2014 Classification Challenge Results

The ILSVRC 2014 classification challenge involves classifying each image into one of 1000 leaf-node categories in the ImageNet hierarchy.

Szegedy, Christian, et al. independently trained 7 versions of the same GoogLeNet model (they only differ in sampling methodologies and the random order in which they see input images), and performed ensemble prediction with them.
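One simple way to combine such an ensemble, and the one the paper reports for its final predictions, is to average the softmax probabilities of the individual models (the paper also averages over several crops per image, which is omitted here). A minimal sketch with three made-up probability vectors over three classes:

```python
from typing import List

def average_probs(per_model: List[List[float]]) -> List[float]:
    """Average class-probability vectors from several models (equal weights)."""
    n_models = len(per_model)
    return [sum(p[c] for p in per_model) / n_models for c in range(len(per_model[0]))]

def top_k(probs: List[float], k: int) -> List[int]:
    """Indices of the k most probable classes, best first."""
    return sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)[:k]

models = [
    [0.7, 0.2, 0.1],  # model 1 prefers class 0
    [0.2, 0.5, 0.3],  # model 2 prefers class 1
    [0.3, 0.3, 0.4],  # model 3 prefers class 2
]
avg = average_probs(models)  # roughly [0.40, 0.33, 0.27]
print(top_k(avg, 1))         # [0]
```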

| Team | Year | Place | Error (top-5) | Uses external data |
|---|---|---|---|---|
| SuperVision | 2012 | 1st | 16.4% | no |
| SuperVision | 2012 | 1st | 15.3% | ImageNet 22k |
| Clarifai | 2013 | 1st | 11.7% | no |
| Clarifai | 2013 | 1st | 11.2% | ImageNet 22k |
| MSRA | 2014 | 3rd | 7.35% | no |
| VGG | 2014 | 2nd | 7.32% | no |
| GoogLeNet | 2014 | 1st | 6.67% | no |
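The "Error (top-5)" column counts an image as correctly classified if its true label is among the five classes the model ranks highest. A minimal sketch of that metric; the toy scores are made up and use 6 classes instead of 1000.

```python
from typing import Sequence

def top5_error(scores: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of examples whose true label is NOT among the 5 highest-scoring classes."""
    misses = 0
    for s, y in zip(scores, labels):
        top5 = sorted(range(len(s)), key=lambda c: s[c], reverse=True)[:5]
        if y not in top5:
            misses += 1
    return misses / len(labels)

scores = [
    [0.9, 0.5, 0.4, 0.3, 0.2, 0.1],  # label 0 ranked 1st -> hit
    [0.9, 0.5, 0.4, 0.3, 0.2, 0.1],  # label 5 ranked 6th -> miss
    [0.1, 0.2, 0.3, 0.4, 0.5, 0.9],  # label 1 ranked 5th -> hit
]
labels = [0, 5, 1]
print(top5_error(scores, labels))  # 0.3333333333333333
```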

References

<references />