large-Scale Supervised Sparse Principal Component Analysis: Difference between revisions

| Line 48: | Line 48: | ||

Since <math> \beta </math> is very small, and we want to avoid large value of <math> z </math>, we could change variable <math>r=\beta z</math>, then the optimization problem become | Since <math> \beta </math> is very small, and we want to avoid large value of <math> z </math>, we could change variable <math>r=\beta z</math>, then the optimization problem become | ||

<math> {\phi}^' - \beta (log \beta) = min_{u,r} {\frac 1 r} u^T Yu - \beta (log r) + {\frac 1 2} (c+r)^2 : r>0, \| u-s \|_\infty \leq \lambda </math> | <math> {\phi}^' - \beta (log \beta) = min_{u,r} {\frac 1 r} u^T Yu - \beta (log r) + {\frac 1 2} (c+r)^2 : r>0, \| u-s \|_\infty \leq \lambda \qquad (5)</math> | ||

We can solve the sub-problem (5) by first the box constraint QP | |||

<math>R^2 := min_u u^T Yu : \| u - s \|_\infty \leq \lambda</math> | |||

and then set <math>r</math> by solving | |||

<math> min_{r>0} {\frac {R^2} r} - \beta (log r) + {\frac 1 2} (c+r)^2 </math> | |||

Revision as of 01:17, 14 August 2013

Introduction

The sparse PCA is a variant of the classical PCA, which assumes sparsity in the feature space. It has several advantages such as easy to interpret, and works for really high-dimensional data. The main issue about sparse PCA is that it is computationally expensive. Many algorithms have been proposed to solve the sparse PCA problem, and the authors introduced a fast block coordinate ascent algorithm with much better computational complexity.

1 Drawbacks of Existing techniques

Existing techniques include ad-hoc methods(e.g. factor rotation techniques, simple thresholding), greedy algorithms, SCoTLASS, the regularized SVD method, SPCA, the generalized power method. These methods are based on non-convex optimization and they don't guarantee global optimum.

A semi-definite relaxation method called DSPCA can guarantee global convergence and has better performance than above algorithms, however, it is computationally expensive.

2 Contribution of this paper

This paper solves DSPCA in a computationally easier way, and hence it is a good solution for large scale data sets. This paper applies a block coordinate ascent algorithm with computational complexity [math]\displaystyle{ O(\hat{n^3}) }[/math], where [math]\displaystyle{ \hat{n} }[/math] is the intrinsic dimension of the data. Since [math]\displaystyle{ \hat{n} }[/math] could be very small compared to the dimension [math]\displaystyle{ n }[/math] of the data, this algorithm is computationally easy.

Primal problem

The sparse PCA problem can be formulated as [math]\displaystyle{ max_x \ x^T \Sigma x - \lambda \| x \|_0 : \| x \|_2=1 }[/math].

This is equivalent to [math]\displaystyle{ max_z \ Tr(\Sigma Z) - \lambda \sqrt{\| Z \|_0} : Z \succeq 0, Tr Z=1, Rank(Z)=1 }[/math].

Replacing the [math]\displaystyle{ \sqrt{\| Z \|_0} }[/math] with [math]\displaystyle{ \| Z \|_1 }[/math] and dropping the rank constraint gives a relaxation of the original non-convex problem:

[math]\displaystyle{ \phi = max_z Tr (\Sigma Z) - \lambda \| Z \|_1 : Z \succeq 0 }[/math], [math]\displaystyle{ Tr(Z)=1 \qquad (1) }[/math] .

Fortunately, this relaxation approximates the original non-convex problem to a convex problem.

Here is an important theorem used by this paper:

Theorem(2.1) Let [math]\displaystyle{ \Sigma=A^T A }[/math] where [math]\displaystyle{ A=(a_1,a_2,......,a_n) \in {\mathbb R}^{m \times n} }[/math], we have [math]\displaystyle{ \psi = max_{\| \xi \|_2=1} }[/math] [math]\displaystyle{ \sum_{i=1}^{n} (({a_i}^T \xi)^2 - \lambda)_+ }[/math]. An optimal non-zero pattern corresponds to the indices [math]\displaystyle{ i }[/math] with [math]\displaystyle{ \lambda \lt (({a_i}^T \xi)^2-\lambda)_+ }[/math]

Block Coordinate Ascent Algorithm

There is a row-by-row algorithm applied to the problems of the form [math]\displaystyle{ min_X \ f(X)-\beta \ log(det X): \ L \leq X \leq U, X \succ 0 }[/math].

Problem (1) can be written as [math]\displaystyle{ {\frac 1 2} {\phi}^2 = max_X \ Tr \Sigma X - \lambda \| X \|_1 - \frac 1 2 (Tr X)^2: X \succeq 0 \qquad (2) }[/math] .

In order to apply the row by row algorithm, we need to add one more term [math]\displaystyle{ \beta \ log(det X) }[/math] to (2) where [math]\displaystyle{ \beta\gt 0 }[/math] is a penalty parameter.

That is to say, we address the problem [math]\displaystyle{ \ max_X \ Tr \Sigma X - \lambda \| X \|_1 - \frac 1 2 (Tr X)^2 + \beta \ log(det X): X \succeq 0 \qquad (3) }[/math]

By matrix partitioning, we could obtain the sub-problem:

[math]\displaystyle{ \phi = max_{x,y} \ 2(y^T s- \lambda \| y \|_1) +(\sigma - \lambda)x - {\frac 1 2}(t+x)^2 + \beta \ log(x-y^T Y^{\dagger} y ):y \in R(Y) \qquad (4) }[/math].

By taking the dual of (4), the sub-problem can be simplified to be

[math]\displaystyle{ {\phi}^' = min_{u,z} {\frac 1 {\beta z}} u^T Yu - \beta (log z) + {\frac 1 2} (c+ \beta z)^2 : z\gt 0, \| u-s \|_\infty \leq \lambda }[/math]

Since [math]\displaystyle{ \beta }[/math] is very small, and we want to avoid large value of [math]\displaystyle{ z }[/math], we could change variable [math]\displaystyle{ r=\beta z }[/math], then the optimization problem become

[math]\displaystyle{ {\phi}^' - \beta (log \beta) = min_{u,r} {\frac 1 r} u^T Yu - \beta (log r) + {\frac 1 2} (c+r)^2 : r\gt 0, \| u-s \|_\infty \leq \lambda \qquad (5) }[/math]

We can solve the sub-problem (5) by first the box constraint QP

[math]\displaystyle{ R^2 := min_u u^T Yu : \| u - s \|_\infty \leq \lambda }[/math]

and then set [math]\displaystyle{ r }[/math] by solving

[math]\displaystyle{ min_{r\gt 0} {\frac {R^2} r} - \beta (log r) + {\frac 1 2} (c+r)^2 }[/math]

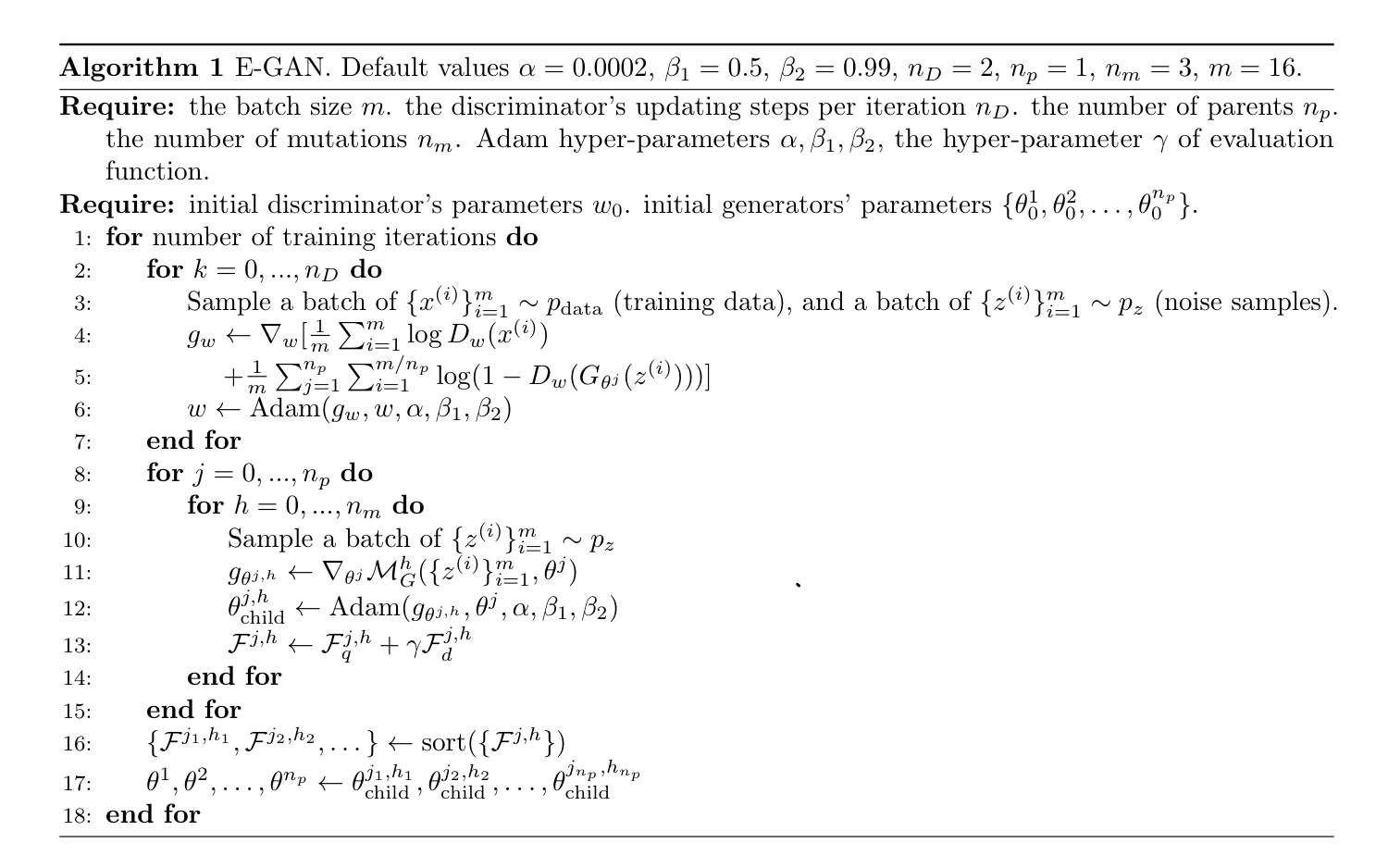

Here is the algorithm:

Convergence and complexity

1. The algorithm is guaranteed to converge to the global optimizer.

2. The complexity for the algorithm is [math]\displaystyle{ O(K \hat{n^3}) }[/math], where [math]\displaystyle{ K }[/math] is the number of sweeps through columns (fixed, typically [math]\displaystyle{ K=5 }[/math]), and [math]\displaystyle{ (\hat{n^3}) }[/math] is the intrinsic dimension of the data points.