stat946f11: Difference between revisions

Nohaelprince


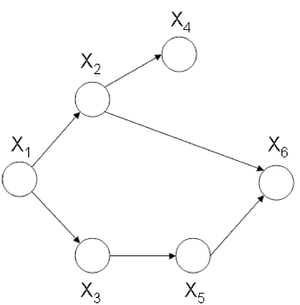

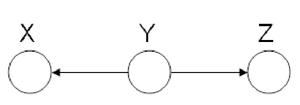

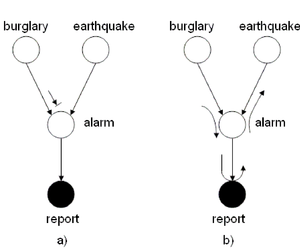

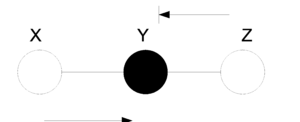

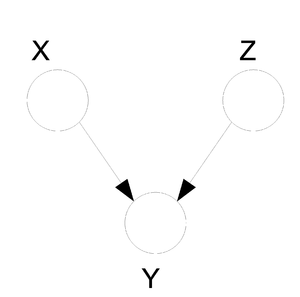

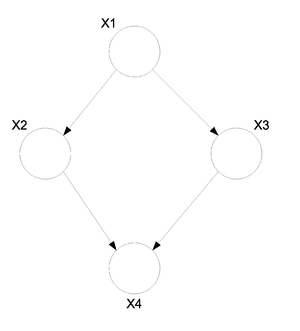

It is easy to see that no directed graph satisfies both conditional independence properties. Because directed graphs are acyclic, any way of orienting the edges of the undirected graph produces at least one node with two inward-pointing arrows (a v-structure). Without loss of generality, assume that node <math>Z</math> has two inward-pointing arrows. By the conditional independence semantics of directed graphs we then have <math> X \perp Y|W</math>, yet the property <math>X \perp Y|\{W,Z\}</math> does not hold. On the other hand, (Fig. 24) depicts a directed graph that is characterized by the single independence statement <math>X \perp Y </math>, and no undirected graph on three nodes is characterized by this statement alone.

More generally, consider the set of all distributions over <math>n</math> random variables. One subset of these distributions can be represented by directed graphical models, and another subset by undirected graphical models. The two subsets overlap only in a narrow region, in which a distribution can be represented by either a directed or an undirected graph.

===Undirected Graphical Models===

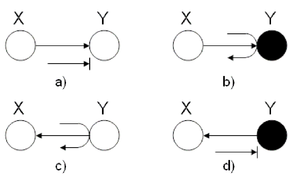

In the previous sections we discussed the Bayes Ball algorithm and how it can be used to determine whether two nodes in a graph are conditionally independent. The algorithm can easily be modified to answer the same question for an undirected graph. An undirected graph that encodes the relationships between random variables is also called a "Markov Random Field".

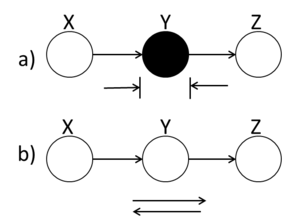

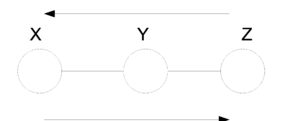

As before, we must define a set of canonical graphs. Conveniently, for undirected graphs there is only one type of canonical graph: <br />

[[File:UnDirGraphCanon.png|thumb|right|Fig.20 The only way to connect 3 nodes in an undirected graph.]]

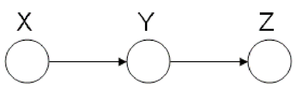

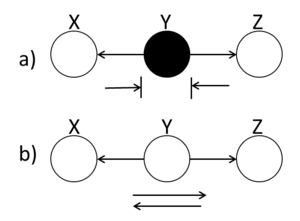

In Fig. 21 the node Y is not observed, so the ball can pass from X to Z and from Z to X; we therefore cannot say that X and Z are independent. In Fig. 22, on the other hand, the value of Y is known, so the ball cannot pass from X to Z or from Z to X. In this case X and Z are independent given Y:

<center><math>X \amalg Z | Y</math></center>

[[File:UnDirGraphCase1.png|thumb|right|Fig.21 The ball can pass through the middle node.]]

[[File:UnDirGraphCase2.png|thumb|right|Fig.22 The ball cannot pass through the middle node.]]

Now that we have a version of the Bayes Ball algorithm for both directed and undirected graphs, we can ask: is there an algorithm or method that converts between directed and undirected graphs?

In general: '''NO'''. <br />

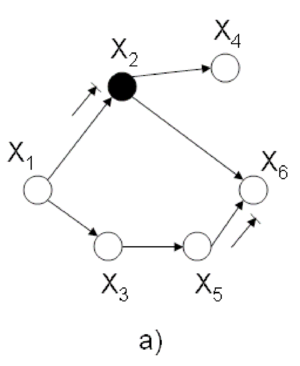

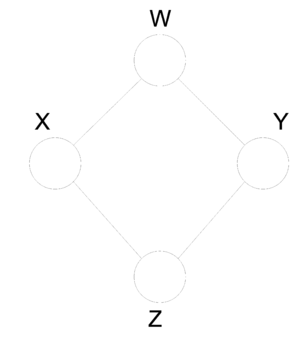

In fact, not only is there no general conversion method, but some graphs have no equivalent in the other form and can exist only as an undirected graph or only as a directed graph. Take the following undirected graph (Fig. 23). The random variables represented in this graph have the following properties:

<center><math>X \amalg Y | \lbrace W, Z \rbrace</math></center>

<center><math>W \amalg Z | \lbrace X, Y \rbrace</math></center>

[[File:UnDirGraphUnconvert.png|thumb|right|Fig.23 There is no directed equivalent to this graph.]]

Now try to build a directed graph with the same properties, keeping in mind that a directed graph cannot contain a cycle. Under this restriction it is impossible to find a directed graph that satisfies all of the above properties.

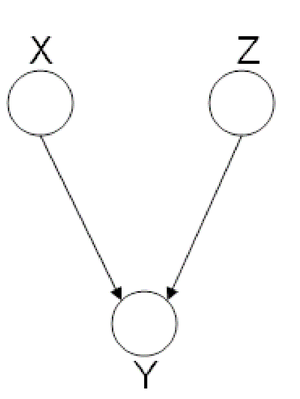

Similarly, consider the following directed graph (Fig. 24). It cannot be represented by any undirected graph with 3 nodes.

[[File:DirGraphUnconvert.png|thumb|right|Fig.24 There is no undirected equivalent to this graph.]]

When we model the relationships between a set of random variables, it is important to consider both graph types, since some sets of relationships can only be represented by one type of graph. Undirected graphs are therefore just as important as directed ones. For directed graphs we have an expression for <math>P(x_V)</math>; we now develop a similar expression for undirected graphs. <br />

To develop this expression we need to introduce some more terminology.

* Clique -

A subset of nodes of a graph G that is fully connected: every node in the clique C is directly connected to every other node in C.

* Maximal Clique -

A clique to which no other node of G can be added without the resulting set ceasing to be a clique.

Let <math>C</math> be the set of all maximal cliques of G. <br />

Let <math>\psi_{c_i}</math> be a non-negative real-valued function (a potential function). <br />

Associating one <math>\psi_{c_i}</math> with each maximal clique <math>c_i</math>, we define

<center><math> P(x_{V}) = \frac{1}{Z(\Psi)} \prod_{c_i \in C} \psi_{c_i} (x_{c_i}) </math></center>

where

<center><math> Z(\Psi) = \sum_{x_V} \prod_{c_i \in C} \psi_{c_i} (x_{c_i}) </math></center>
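As an illustration (not part of the original notes), the following Python sketch evaluates this factorization by brute force. It assumes binary variables, the four-node cycle X - W - Y - Z - X whose maximal cliques are its four edges (this matches the independence statements listed for Fig. 23), and a made-up potential `psi`. It computes <math>Z(\Psi)</math> by summing over all configurations and then checks the two conditional independence statements numerically.

```python
import itertools
import math

# Hypothetical example: the undirected 4-cycle X - W - Y - Z - X with binary variables.
# Its maximal cliques are the four edges, so each potential psi_c takes two arguments.
names = ["X", "W", "Y", "Z"]
cliques = [("X", "W"), ("W", "Y"), ("Y", "Z"), ("Z", "X")]

def psi(a, b):
    """Hypothetical non-negative clique potential that favours agreeing neighbours."""
    return 2.0 if a == b else 0.5

def unnormalized(cfg):
    """Product of psi_c(x_c) over all maximal cliques for one configuration (a dict)."""
    return math.prod(psi(cfg[a], cfg[b]) for a, b in cliques)

configs = [dict(zip(names, vals)) for vals in itertools.product([0, 1], repeat=len(names))]
Z = sum(unnormalized(c) for c in configs)            # partition function Z(Psi)
P = {tuple(c[n] for n in names): unnormalized(c) / Z for c in configs}

def marginal(keep):
    """Sum P over the variables not listed in `keep`."""
    out = {}
    for key, p in P.items():
        sub = tuple(key[names.index(n)] for n in keep)
        out[sub] = out.get(sub, 0.0) + p
    return out

def cond_indep(a, b, given):
    """Numerically check a independent of b given `given`: P(a,b,g) P(g) == P(a,g) P(b,g)."""
    pabg = marginal([a, b] + given)
    pag, pbg, pg = marginal([a] + given), marginal([b] + given), marginal(given)
    for key, p in pabg.items():
        va, vb, g = key[0], key[1], key[2:]
        if not math.isclose(p * pg[g], pag[(va,) + g] * pbg[(vb,) + g]):
            return False
    return True

print(cond_indep("X", "Y", ["W", "Z"]), cond_indep("W", "Z", ["X", "Y"]), cond_indep("X", "Y", []))
# True True False
```

The last check fails because X and Y are coupled through both W and Z when nothing is observed; the first two hold for any non-negative choice of `psi`, since they follow from the factorization itself.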

==== Conditional independence ====

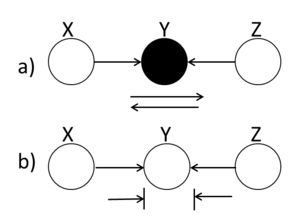

For directed graphs, the Bayes ball method was defined to determine the conditional independence properties of a given graph. We can also use the Bayes ball algorithm to examine conditional independence in undirected graphs, where the rule is simpler and more intuitive. Considering Figure...., a ball can travel from x to z, or from z to x, if y is not observed. On the contrary, if y is observed (shaded), it blocks the ball and makes x and z conditionally independent. It follows that in an undirected graph a node is conditionally independent of its non-neighbours given its neighbours. More formally, <math>X_A</math> is independent of <math>X_C</math> given <math>X_B</math> if the set of nodes <math>X_B</math> separates the nodes <math>X_A</math> from the nodes <math>X_C</math>: if every path from a node in <math>X_A</math> to a node in <math>X_C</math> passes through at least one node in <math>X_B</math>, then <math> X_A \perp X_C | X_B </math>.
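The separation criterion amounts to a graph search. The hypothetical helper `separates` below (a sketch, not code from the course) runs a breadth-first search from <math>X_A</math> that is not allowed to enter the observed nodes in <math>X_B</math>; if the search reaches <math>X_C</math>, the ball got through and the independence cannot be concluded.

```python
from collections import deque

def separates(adj, A, B, C):
    """Return True if every path from a node in A to a node in C passes through B.

    adj maps each node to the set of its neighbours in an undirected graph.
    Observed nodes (those in B) block the ball, as in the undirected Bayes ball rule.
    """
    B = set(B)
    C = set(C)
    start = set(A) - B
    seen = set(start)
    queue = deque(start)
    while queue:
        node = queue.popleft()
        if node in C:
            return False          # the ball reached C without passing through B
        for nb in adj[node]:
            if nb not in seen and nb not in B:
                seen.add(nb)
                queue.append(nb)
    return True

chain = {"X": {"Y"}, "Y": {"X", "Z"}, "Z": {"Y"}}
print(separates(chain, {"X"}, {"Y"}, {"Z"}))   # True:  X is independent of Z given Y
print(separates(chain, {"X"}, set(), {"Z"}))   # False: nothing blocks the ball
```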

==Graphical Algorithms==

In the previous chapter we used two kinds of graphical models to represent dependencies between variables: directed graphical models and undirected graphical models. For directed graphs, the joint probability distribution is the product of conditional probabilities in which each node is conditioned on the value(s) of its parent(s). For undirected graphs, the joint probability distribution is the normalized product of <math> \psi </math> functions defined on the maximal cliques of the graph. A maximal clique is a clique to which no additional node can be added while keeping it fully connected. <br />

In the previous chapter we also developed the following two expressions for <math>P(x_V)</math>:

====For Directed Graphs:====

<math> P(x_V) = \prod_{i=1}^{n} P(x_i | x_{\pi_i})</math>

====For Undirected Graphs:====

<math> P(x_{V}) = \frac{1}{Z(\Psi)} \prod_{c_i \in C} \psi_{c_i} (x_{c_i})</math>

====Theorem: Hammersley - Clifford====

Let <math> U_1 </math> be the set of all distributions <math> P(x_{V}) </math> that factorize according to a given undirected graph, and let <math> U_2 </math> be the set of all distributions that satisfy every conditional independence statement implied by that graph. Then, for strictly positive distributions, <math> U_1 </math> and <math> U_2 </math> are the same set.

<math> U_{1} = \left \{ P(x_{V}) = \frac{1}{Z(\psi)} \prod_{c_i \in C} \psi_{c_i} (x_{c_i}) \right \} </math><br />

<math> U_{2} = \left \{ P(x_{V}) | P(x_{V}) \mbox{ satisfies all conditional independencies implied by the graph} \right \} </math><br />

Then: <math> U_{1} = U_{2} </math>

The joint probability distribution <math> P(x_{V}) </math> contains a lot of information. We define six tasks (listed below) that we would like to accomplish for a given distribution <math> P(x_{V}) </math>; each of the algorithms that follow can perform some subset of these tasks.

===Tasks:===

* Marginalization <br />

Given <math> P(x_{V}) </math> find <math> P(x_{A}) </math> <br />

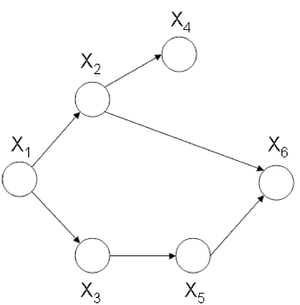

'''Example:''' Given <math> P(x_1, x_2, ... , x_6) </math> find <math> P(x_2, x_6) </math>

* Conditioning <br />

Given <math> P(x_V) </math> find <math>P(x_A|x_B) = \frac{P(x_A, x_B)}{P(x_B)}</math>.

* Evaluation <br />

Evaluate the probability of a given configuration.

* Completion <br />

Compute the most probable configuration; in other words, find the value of <math> x_A </math> for which <math> P(x_A|x_B) </math> is largest, for specific choices of <math> A </math> and <math> B </math>.

* Simulation <br />

Generate a random configuration distributed according to <math> P(x_V) </math>.

* Learning <br />

Estimate the parameters of <math> P(x_V) </math> from data.
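To make the first five tasks concrete, here is a brute-force Python sketch on a tiny joint table. The table values and the helper names (`marginalize`, `condition`) are hypothetical; storing the full joint like this costs <math>k^n</math> entries, which is exactly what the algorithms below try to avoid.

```python
import itertools
import random

names = ("x1", "x2", "x3")
# A hypothetical joint P(x1, x2, x3) over binary variables, stored as a full table.
weights = [1, 2, 3, 4, 5, 6, 7, 8]
joint = {cfg: w / sum(weights)
         for cfg, w in zip(itertools.product([0, 1], repeat=3), weights)}

def marginalize(joint, names, keep):
    """Marginalization: P(x_A) = sum of P(x_V) over the variables not in A."""
    idx = [names.index(n) for n in keep]
    out = {}
    for cfg, p in joint.items():
        key = tuple(cfg[i] for i in idx)
        out[key] = out.get(key, 0.0) + p
    return out

def condition(joint, names, A, B, b_vals):
    """Conditioning: P(x_A | x_B = b_vals) = P(x_A, x_B) / P(x_B)."""
    p_ab = marginalize(joint, names, list(A) + list(B))
    p_b = marginalize(joint, names, list(B))[tuple(b_vals)]
    return {k[:len(A)]: p / p_b for k, p in p_ab.items() if k[len(A):] == tuple(b_vals)}

p_011 = joint[(0, 1, 1)]                      # Evaluation: look up one configuration
mode = max(joint, key=joint.get)              # Completion: most probable configuration
sample = random.choices(list(joint), weights=list(joint.values()))[0]  # Simulation
print(marginalize(joint, names, ["x2"]))
print(condition(joint, names, ["x1"], ["x3"], [1]))
print(mode)                                   # (1, 1, 1)
```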

===Exact Algorithms:===

We will look at three exact algorithms. An exact algorithm finds the exact answer to one of the above tasks. The main disadvantage of exact algorithms is that on large graphs with many nodes they can take a long time to produce a result. In such cases we can use inexact (approximate) algorithms to obtain a useful estimate more efficiently.

* Elimination

* Sum-Product

* Junction Tree

===General Inference:===

Let us first define a set of nodes called evidence nodes, denoted <math>x_E</math>. These represent the random variables about which we have information. Similarly, let us define the set of nodes <math>x_F</math> as query nodes: the nodes about which we seek information.

By the definition of conditional probability we know that:

<center><math> P(x_F|x_E) = \frac{P(x_F,x_E)}{P(x_E)}</math></center>

Let <math> G(V, \epsilon) </math> be a graph with vertices <math> V </math> and edges <math> \epsilon </math>.

The set of nodes <math>V</math> consists of the evidence nodes <math>E</math>, the query nodes <math>F</math>, and the nodes that are neither query nor evidence nodes, <math>R</math>, which we call the remainder nodes. These sets are mutually disjoint, so <br />

<math> V = E \cup F \cup R </math> and <math> R = V \setminus (E \cup F) </math> <br />

<math>P(x_F, x_E) = \sum_{x_R} P(x_V) = \sum_{x_R} P(x_E, x_F, x_R)</math>

'''Example:'''<br />

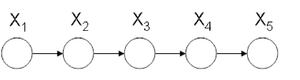

Consider once again the example from Figure \ref{fig:ClassicExample1}. Suppose we want to calculate <math>P(x_1|\bar{x}_6) </math>, where <math>\bar{x}_6</math> refers to a fixed value of <math>x_6</math>.

If we work directly with the full joint distribution, we have

<center><math> P(x_1, x_2, ..., x_5) = \sum_{x_6}P(x_1, x_2, ..., x_6) </math></center>

which requires a table of <math>2^6</math> probabilities. In general this table has <math>k^n</math> entries, where <math>k</math> is the number of values each variable can take and <math>n</math> is the number of vertices, so an algorithm that works with it has exponential cost: <math>O(k^n)</math>.

We can reduce the complexity by representing the probabilities in factored form:

<center><math>\begin{matrix}
P(x_1, x_2, ..., x_5) &= \sum_{x_6} P(x_1)P(x_2|x_1)P(x_3|x_1)P(x_4|x_2)P(x_5|x_3)P(x_6|x_2, x_5) \\
&= P(x_1)P(x_2|x_1)P(x_3|x_1)P(x_4|x_2)P(x_5|x_3) \sum_{x_6} P(x_6|x_2, x_5)
\end{matrix}</math></center>

Now the computational complexity is only <math>O(nk^r)</math>, where <math>r</math> is the largest number of variables appearing in a single factor (a node together with its parents). In our example the largest table has been reduced from <math>2^6</math> to <math>2^3</math> entries.

Let <math> m_i(x_{s_i})</math> denote the expression that arises when we sum over <math>x_i</math> (for example <math>\sum_{x_i} P(x_i|x_{s_i})</math>), where <math>x_{s_i}</math> is the set of variables other than <math>x_i</math> on which the result depends. <br />

For instance, in our example <math>m_6(x_2, x_5) = \sum_{x_6} P(x_6|x_2, x_5)</math>, which equals 1.

By the definition of conditional probability, we can calculate <math> P(x_1, \bar{x}_6) </math> and <math> P(\bar{x}_6) </math> separately and combine them to find the desired conditional probability:

<center><math>P(x_1|\bar{x}_6) = \frac{P(x_1, \bar{x}_6)}{P(\bar{x}_6)}</math></center>

Let us begin by calculating <math> P(x_1, \bar{x}_6) </math>:

<center><math>\begin{matrix}
P(x_1, \bar{x}_6) &= \sum_{x_2}\sum_{x_3}\sum_{x_4}\sum_{x_5}P(x_1)P(x_2|x_1)P(x_3|x_1)P(x_4|x_2)P(x_5|x_3)P(\bar{x}_6|x_2, x_5) \\
&= P(x_1)\sum_{x_2}P(x_2|x_1)\sum_{x_3}P(x_3|x_1)\sum_{x_4}P(x_4|x_2)\sum_{x_5}P(x_5|x_3)P(\bar{x}_6|x_2, x_5) \\
&= P(x_1)\sum_{x_2}P(x_2|x_1)\sum_{x_3}P(x_3|x_1)\sum_{x_4}P(x_4|x_2)m_5(x_2, x_3, \bar{x}_6) \\
&= P(x_1)\sum_{x_2}P(x_2|x_1)\sum_{x_3}P(x_3|x_1)m_5(x_2, x_3, \bar{x}_6)\sum_{x_4}P(x_4|x_2) \\
&= P(x_1)\sum_{x_2}P(x_2|x_1)\sum_{x_3}P(x_3|x_1)m_5(x_2, x_3, \bar{x}_6)m_4(x_2) \\
&= P(x_1)\sum_{x_2}P(x_2|x_1)m_4(x_2)m_3(x_1, x_2, \bar{x}_6) \\
&= P(x_1)m_2(x_1,\bar{x}_6)
\end{matrix}</math></center>

We can then use this result to calculate the normalizing constant <math> P(\bar{x}_6) = \sum_{x_1}P(x_1, \bar{x}_6) </math>.

Finally, using these two results we can calculate <math> P(x_1|\bar{x}_6) = \frac{P(x_1, \bar{x}_6)}{P(\bar{x}_6)} </math>.

===Evaluation===

Let <math>X_i</math> be an evidence node whose observed value is <math>\overline{x_i}</math>. To express that <math>X_i</math> is fixed at the value <math>\overline{x_i}</math>, we define an evidence potential <math>\delta{(x_i,\overline{x_i})}</math>, whose value is 1 if <math>x_i = \overline{x_i}</math> and 0 otherwise.<br />

Then, for any function <math>g</math>,

<center><math> g(\overline{x_i}) =\sum_{x_i}{g(x_i)\delta{(x_i,\overline{x_i})}}</math></center>

<br />

When there is more than one evidence variable, as in <math>p(x_F|\overline{x}_E)</math>, the total evidence potential is:

<center><math> \delta(x_E,\overline{x}_E)= \prod_{i\in E}\delta{(x_i,\overline{x_i})} </math></center>

=== Elimination and Directed Graphs===

Given a graph <math>G = (V, \mathcal{E})</math>, an evidence set <math>E</math>, and a query node <math>F</math>, we first choose an elimination ordering <math>I</math> in which <math>F</math> appears last.

'''Example:''' <br />

For the graph in (Fig. \ref{fig:ClassicExample1}), consider once again that node <math>x_1</math> is the query node and <math>x_6</math> is the evidence node. <br />

<math>I = \left\{6,5,4,3,2,1\right\}</math> (1 must be the last node; the ordering is crucial)<br />

We must now create an active list. Two rules are followed to create this list:

# For each <math>i\in V</math>, put <math>p(x_i|x_{\pi_i})</math> in the active list.
# For each <math>i\in E</math>, put the evidence potential <math>\delta(x_i,\overline{x_i})</math> in the active list.

Here, our active list is:

<math> p(x_1), p(x_2|x_1), p(x_3|x_1), p(x_4|x_2), p(x_5|x_3),\underbrace{p(x_6|x_2, x_5)\delta{(x_6,\overline{x_6})}}_{\phi_6(x_2,x_5, x_6),\ \sum_{x_6}{\phi_6}=m_{6}(x_2,x_5) }</math>

We first eliminate node <math>X_6</math>: the factors involving <math>X_6</math> are removed from the active list and <math>m_{6}(x_2,x_5)</math> is placed on it. We then eliminate <math>X_5</math>:

<center><math> \underbrace{\sum_{x_5} p(x_5|x_3)\, m_6(x_2,x_5)}_{m_5(x_2,x_3)} </math></center>

Likewise, we eliminate <math>X_4</math>, <math>X_3</math> and <math>X_2</math>. After <math>X_2</math> has been eliminated, the remaining factor <math>\phi_1(x_1) = p(x_1,\overline{x_6})</math> is the unnormalized conditional probability <math>p(x_1|\overline{x_6})</math>. Finally, eliminating <math>X_1</math> yields <math>m_1 = \sum_{x_1}{\phi_1(x_1)}</math>, which is the normalization factor <math>p(\overline{x_6})</math>.
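The active-list procedure can be sketched in Python as follows. This is an illustrative implementation of variable elimination over binary variables, not the course's own code; the factor representation, the helper names and the random conditional probability tables are assumptions. It uses the elimination order <math>I = \{6,5,4,3,2,1\}</math> from the example and checks the resulting <math>p(x_1|\overline{x_6})</math> against a brute-force sum over the full joint.

```python
import itertools
import random

K = (0, 1)  # every variable is binary in this sketch

def make_factor(vars_, fn):
    """Tabulate fn over all joint values of vars_; a factor is (vars, {values: number})."""
    return (tuple(vars_), {vals: fn(*vals) for vals in itertools.product(K, repeat=len(vars_))})

def multiply(f, g):
    """Pointwise product of two factors over the union of their variables."""
    vs = tuple(dict.fromkeys(f[0] + g[0]))
    def val(*vals):
        a = dict(zip(vs, vals))
        return f[1][tuple(a[v] for v in f[0])] * g[1][tuple(a[v] for v in g[0])]
    return make_factor(vs, val)

def sum_out(f, var):
    """Sum a factor over one variable, producing the message m_var."""
    table = {}
    for vals, p in f[1].items():
        key = tuple(x for v, x in zip(f[0], vals) if v != var)
        table[key] = table.get(key, 0.0) + p
    return (tuple(v for v in f[0] if v != var), table)

def eliminate(active, order):
    """For each variable: take the factors mentioning it off the active list,
    multiply them, sum the variable out, and put the message back on the list."""
    active = list(active)
    for var in order:
        touching = [f for f in active if var in f[0]]
        if not touching:
            continue
        active = [f for f in active if var not in f[0]]
        prod = touching[0]
        for f in touching[1:]:
            prod = multiply(prod, f)
        active.append(sum_out(prod, var))
    return active

# The six-node example: parents of each node, with hypothetical random CPTs.
parents = {"x1": (), "x2": ("x1",), "x3": ("x1",), "x4": ("x2",),
           "x5": ("x3",), "x6": ("x2", "x5")}
random.seed(0)
def random_cpt(n_parents):
    """Hypothetical CPT: returns f(x, *pa) = P(x | pa) for a binary x."""
    p1 = {pa: random.random() for pa in itertools.product(K, repeat=n_parents)}
    return lambda x, *pa: p1[pa] if x == 1 else 1.0 - p1[pa]
cpts = {v: random_cpt(len(pa)) for v, pa in parents.items()}
x6_bar = 1                                        # observed value of the evidence node

# Rule 1: p(x_i | x_pi_i) for every node.  Rule 2: delta(x_6, x6_bar) for the evidence node.
active = [make_factor((v,) + pa, cpts[v]) for v, pa in parents.items()]
active.append(make_factor(("x6",), lambda x6: 1.0 if x6 == x6_bar else 0.0))

remaining = eliminate(active, ["x6", "x5", "x4", "x3", "x2"])
phi1 = remaining[0]
for f in remaining[1:]:
    phi1 = multiply(phi1, f)                      # phi_1(x1) = p(x1, x6_bar)
p_evidence = sum(phi1[1].values())                # m_1 = p(x6_bar)
posterior = {x1: phi1[1][(x1,)] / p_evidence for x1 in K}

def brute_force_posterior():
    """Sum the full joint directly (exponential cost) for comparison."""
    num = {x1: 0.0 for x1 in K}
    for vals in itertools.product(K, repeat=len(parents)):
        a = dict(zip(parents, vals))
        if a["x6"] == x6_bar:
            p = 1.0
            for v, pa in parents.items():
                p *= cpts[v](a[v], *(a[u] for u in pa))
            num[a["x1"]] += p
    return {x1: v / sum(num.values()) for x1, v in num.items()}

print(posterior, brute_force_posterior())
```

The largest factor built here has three variables (when <math>X_6</math> or <math>X_5</math> is eliminated), which is the <math>2^3</math> table size mentioned earlier.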

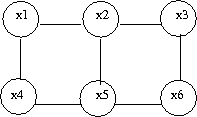

====Elimination and Undirected Graphs====

We would also like to perform elimination on undirected graphs such as G'.<br />

[[File:graph.png|thumb|right|Fig.XX Undirected graph G']]

The first task is to find the maximal cliques and their associated potential functions. <br />

Maximal cliques: <math>\left\{x_1, x_2\right\}</math>, <math>\left\{x_1, x_3\right\}</math>, <math>\left\{x_2, x_4\right\}</math>, <math>\left\{x_3, x_5\right\}</math>, <math>\left\{x_2,x_5,x_6\right\}</math> <br />

Potential functions: <math>\varphi{(x_1,x_2)},\varphi{(x_1,x_3)},\varphi{(x_2,x_4)}, \varphi{(x_3,x_5)}</math> and <math>\varphi{(x_2,x_5,x_6)}</math>

<math> p(x_1|\overline{x_6})=p(x_1,\overline{x_6})/p(\overline{x_6})\qquad(*) </math>

<math>p(x_1,\overline{x_6})=\frac{1}{Z}\sum_{x_2,x_3,x_4,x_5,x_6}\varphi{(x_1,x_2)}\varphi{(x_1,x_3)}\varphi{(x_2,x_4)}\varphi{(x_3,x_5)}\varphi{(x_2,x_5,x_6)}\delta{(x_6,\overline{x_6})}</math>

The factor <math>\frac{1}{Z}</math> looks important, but it has no effect here: in (*) both the numerator and the denominator contain <math>\frac{1}{Z}</math>, so it cancels. <br />

The general rule for elimination in an undirected graph is that we can remove a node as long as we connect all of its neighbours together; effectively, the neighbours of the removed node form a clique.

'''Example: ''' <br />

For the graph G in (Fig. \ref{fig:Ex1Lab}): <br />

when we remove x1, G becomes (Fig. \ref{fig:Ex2Lab}); <br />

if we remove x2, G becomes (Fig. \ref{fig:Ex3Lab}).

[[File:ex.png|thumb|right|Fig.XX ]]

[[File:ex2.png|thumb|right|Fig.XX ]]

[[File:ex3.png|thumb|right|Fig.XX ]]

The order of elimination matters a great deal. Compare the two results: removing one node slightly reduces the complexity of the graph (Fig. \ref{fig:Ex2Lab}), but removing another node increases it significantly (Fig. \ref{fig:Ex3Lab}). We care about the complexity of the graph because it determines the number of calculations required to answer questions about that graph. For a huge graph with thousands of nodes, the order of node removal is key to the complexity of the algorithm. Unfortunately, there is no known efficient algorithm that produces the optimal elimination order.
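The effect of the order can be reproduced by simulating elimination on the graph alone. The sketch below (an illustration using a hypothetical star-shaped graph, not one of the figures above) removes nodes in a given order, connects the remaining neighbours of each removed node, and reports the largest neighbour set encountered (the factor created at that step involves those neighbours plus the eliminated node) and the number of fill-in edges added.

```python
def eliminate_order(adj, order):
    """Simulate undirected elimination: removing a node connects all of its remaining neighbours.

    adj maps each (integer) node to its set of neighbours and is not modified.
    Returns (largest neighbour set seen, number of fill-in edges added).
    """
    g = {v: set(nbs) for v, nbs in adj.items()}
    largest, fill = 0, 0
    for v in order:
        nbs = g.pop(v)
        largest = max(largest, len(nbs))
        for u in nbs:
            g[u].discard(v)
        for u in nbs:                      # the neighbours of v now form a clique
            for w in nbs:
                if u < w and w not in g[u]:
                    g[u].add(w)
                    g[w].add(u)
                    fill += 1
    return largest, fill

# Hypothetical star graph: hub 0 joined to leaves 1..5.
star = {0: {1, 2, 3, 4, 5}, **{leaf: {0} for leaf in range(1, 6)}}
print(eliminate_order(star, [0, 1, 2, 3, 4, 5]))   # (5, 10): hub first joins all leaves
print(eliminate_order(star, [1, 2, 3, 4, 5, 0]))   # (1, 0): leaves first adds no edges
```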

===Moralization===

So far we have shown how to use elimination to successively remove nodes from an undirected graph, which is useful for marginalization. We now turn to directed graphs. It would be convenient to reduce a directed graph to an undirected form and then apply the elimination algorithm above. This reduction is called moralization, and the graph it produces is called a moral graph.

To moralize a graph, we first connect the parents of each node to one another. This makes sense intuitively: the factor <math>P(x_i|x_{\pi_i})</math> involves a node and all of its parents together, so in the undirected graph these nodes must belong to a common clique. Connecting the parents creates that clique.

After the parents have been connected, we drop the orientation of every edge of the directed graph, which makes the graph undirected.

The elimination algorithm can now be applied to the moral graph by treating each conditional probability <math> P(x_i|x_{\pi_i}) </math> of the directed graph as a potential function <math> \psi_{c_i}(x_{c_i}) </math> on the clique <math>c_i = \{i\} \cup \pi_i</math> of the undirected graph.

'''Example:'''<br />

I = <math>\left\{x_6,x_5,x_4,x_3,x_2,x_1\right\}</math><br />

When we moralize the directed graph (Fig. \ref{fig:Moral1}), it becomes the undirected graph (Fig. \ref{fig:Moral2}).

[[File:moral.png|thumb|right|Fig.XX Original Directed Graph]]

[[File:moral3.png|thumb|right|Fig.XX Moral Undirected Graph]]
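Moralization is straightforward to implement. The sketch below assumes the directed graph is the six-node network from the elimination examples above (<math>x_6</math> has parents <math>x_2</math> and <math>x_5</math>); the figures are not reproduced here, so that choice is an assumption, and the function name `moralize` is hypothetical.

```python
def moralize(parents):
    """Moralize a DAG given as {node: tuple_of_parents}; return {node: set_of_neighbours}."""
    adj = {v: set() for v in parents}
    for child, pas in parents.items():
        for p in pas:                            # keep every edge, dropping its direction
            adj[child].add(p)
            adj[p].add(child)
        for i, p in enumerate(pas):              # "marry" each pair of co-parents
            for q in pas[i + 1:]:
                adj[p].add(q)
                adj[q].add(p)
    return adj

parents = {"x1": (), "x2": ("x1",), "x3": ("x1",), "x4": ("x2",),
           "x5": ("x3",), "x6": ("x2", "x5")}
moral = moralize(parents)
print(sorted(moral["x2"]))   # ['x1', 'x4', 'x5', 'x6']: the new edge x2 - x5 joins x6's parents
```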

===Sum Product Algorithm===

One of the main disadvantages of the elimination algorithm is that the ordering of the nodes determines the number of calculations required to produce a result. The optimal ordering is difficult to compute, and without a good ordering the algorithm may become very slow. In response, we introduce the sum-product algorithm. Its major advantage over the elimination algorithm is speed: computing the marginals of all the nodes in the graph has the same complexity, up to a constant factor, as computing the marginal of a single node. Unfortunately, the sum-product algorithm also has a disadvantage: unlike the elimination algorithm, it cannot be used on arbitrary graphs. The sum-product algorithm works only on trees.

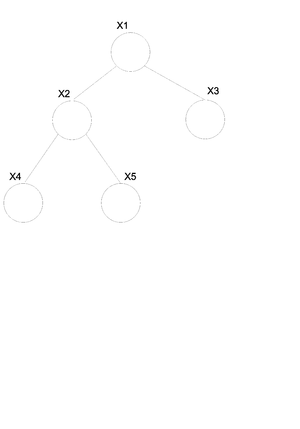

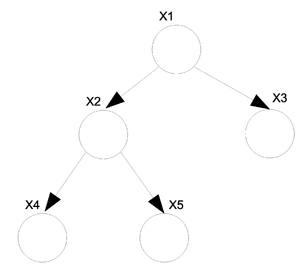

For an undirected graph, if there is exactly one path between every pair of nodes, the graph is a tree (Fig. \ref{fig:UnDirTree}). A directed graph must be moralized first: if its moral graph is a tree, the directed graph is also considered a tree (Fig. \ref{fig:DirTree}).

[[File:UnDirTree.png|thumb|right|Fig.XX Undirected tree]]

[[File:Dir_Tree.png|thumb|right|Fig.XX Directed tree]]
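The tree test can be written directly from these definitions: a graph on <math>n</math> nodes is a tree exactly when it is connected and has <math>n-1</math> edges, and a directed graph is checked through its moral graph. The helper names below are hypothetical; the two example networks are the directed tree whose factorization is given in this section and the six-node elimination example.

```python
def is_tree(nodes, edges):
    """An undirected graph is a tree iff it is connected and has exactly |V| - 1 edges."""
    edges = {frozenset(e) for e in edges}
    if len(edges) != len(nodes) - 1:
        return False
    adj = {v: set() for v in nodes}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:                               # depth-first search for connectivity
        for nb in adj[stack.pop()]:
            if nb not in seen:
                seen.add(nb)
                stack.append(nb)
    return len(seen) == len(nodes)

def moral_edges(parents):
    """Edges of the moral graph: each child-parent edge plus one edge per pair of co-parents."""
    edges = set()
    for child, pas in parents.items():
        edges |= {frozenset((child, p)) for p in pas}
        edges |= {frozenset((p, q)) for i, p in enumerate(pas) for q in pas[i + 1:]}
    return edges

dir_tree = {"x1": (), "x2": ("x1",), "x3": ("x1",), "x4": ("x2",), "x5": ("x2",)}
six_node = {"x1": (), "x2": ("x1",), "x3": ("x1",), "x4": ("x2",),
            "x5": ("x3",), "x6": ("x2", "x5")}
print(is_tree(list(dir_tree), moral_edges(dir_tree)))   # True
print(is_tree(list(six_node), moral_edges(six_node)))   # False: the moral graph has a cycle
```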

For the undirected graph <math>G(V, \varepsilon)</math> (Fig. \ref{fig:UnDirTree}) we can write the joint probability distribution in the following way:

<center><math> P(x_V) = \frac{1}{Z(\psi)}\prod_{i \in V}\psi(x_i)\prod_{(i,j) \in \varepsilon}\psi(x_i, x_j)</math></center>

We know that in general we cannot convert a directed graph into an undirected graph. Trees are an exception: a directed tree can be converted into an undirected tree with the same properties. <br />

Take the directed tree above (Fig. \ref{fig:DirTree}). We can write its joint probability distribution as:

<center><math> P(x_V) = P(x_1)P(x_2|x_1)P(x_3|x_1)P(x_4|x_2)P(x_5|x_2) </math></center>

To convert this graph to the undirected form shown in (Fig. \ref{fig:UnDirTree}) we can use the following rules:

* If <math>\gamma</math> is the root then: <math> \psi(x_\gamma) = P(x_\gamma) </math>.

* If <math>\gamma</math> is NOT the root then: <math> \psi(x_\gamma) = 1 </math>.

* If <math>\left\lbrace i \right\rbrace</math> = <math>\pi_j</math> then: <math> \psi(x_i, x_j) = P(x_j | x_i) </math>.

So we can rewrite the above equation for (Fig. \ref{fig:DirTree}) as:

<center><math> P(x_V) = \frac{1}{Z(\psi)}\psi(x_1)\cdots\psi(x_5)\psi(x_1, x_2)\psi(x_1, x_3)\psi(x_2, x_4)\psi(x_2, x_5) </math></center>

<center><math> = \frac{1}{Z(\psi)}P(x_1)P(x_2|x_1)P(x_3|x_1)P(x_4|x_2)P(x_5|x_2) </math></center>

Since this product of conditional probabilities is already normalized, <math>Z(\psi) = 1</math>.
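A quick numerical check of these conversion rules, under hypothetical conditional probability values (the same table `p_child` is reused on every edge for brevity): the resulting potentials give <math>Z(\psi) = 1</math>, and the undirected product reproduces the directed factorization.

```python
import itertools
import math

K = (0, 1)
nodes = ["x1", "x2", "x3", "x4", "x5"]
parent = {"x2": "x1", "x3": "x1", "x4": "x2", "x5": "x2"}   # x1 is the root
p_root = {0: 0.3, 1: 0.7}                                  # hypothetical P(x1)
p_child = {0: {0: 0.9, 1: 0.1}, 1: {0: 0.2, 1: 0.8}}       # hypothetical P(x_j | x_i) = p_child[x_i][x_j]

def psi_node(v, x):
    """Rules 1 and 2: psi(x_root) = P(x_root); psi(x_v) = 1 for every other node."""
    return p_root[x] if v == "x1" else 1.0

def psi_edge(xi, xj):
    """Rule 3: psi(x_i, x_j) = P(x_j | x_i) when i is the parent of j."""
    return p_child[xi][xj]

def unnormalized(a):
    """Product of all node and edge potentials for one configuration (a dict)."""
    return (math.prod(psi_node(v, a[v]) for v in nodes)
            * math.prod(psi_edge(a[i], a[j]) for j, i in parent.items()))

Z = sum(unnormalized(dict(zip(nodes, vals))) for vals in itertools.product(K, repeat=len(nodes)))
print(round(Z, 12))                                        # 1.0
```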

====Elimination Algorithm on a Tree====

[[File:fig1.png|thumb|right|Fig.XX Message-passing in Elimination Algorithm]]

We will derive the Sum-Product algorithm from the point of view of the Eliminate algorithm. To marginalize <math>x_1</math> in (Fig. \ref{fig:TreeStdEx}),

<center><math>\begin{matrix}
p(x_1)&=&\sum_{x_2}\sum_{x_3}\sum_{x_4}\sum_{x_5}p(x_1)p(x_2|x_1)p(x_3|x_2)p(x_4|x_2)p(x_5|x_3) \\
&=&p(x_1)\sum_{x_2}p(x_2|x_1)\sum_{x_3}p(x_3|x_2)\sum_{x_4}p(x_4|x_2)\underbrace{\sum_{x_5}p(x_5|x_3)}_{m_5(x_3)} \\
&=&p(x_1)\sum_{x_2}p(x_2|x_1)\underbrace{\sum_{x_3}p(x_3|x_2)m_5(x_3)}_{m_3(x_2)}\underbrace{\sum_{x_4}p(x_4|x_2)}_{m_4(x_2)} \\
&=&p(x_1)\underbrace{\sum_{x_2}p(x_2|x_1)m_3(x_2)m_4(x_2)}_{m_2(x_1)} \\
&=&p(x_1)m_2(x_1)
\end{matrix}</math></center>

where

<center><math>\begin{matrix}
m_5(x_3)=\sum_{x_5}p(x_5|x_3)=\sum_{x_5}\psi(x_5)\psi(x_5,x_3)=\mathbf{m_{53}(x_3)} \\
m_4(x_2)=\sum_{x_4}p(x_4|x_2)=\sum_{x_4}\psi(x_4)\psi(x_4,x_2)=\mathbf{m_{42}(x_2)} \\
m_3(x_2)=\sum_{x_3}p(x_3|x_2)m_5(x_3)=\sum_{x_3}\psi(x_3)\psi(x_3,x_2)m_5(x_3)=\mathbf{m_{32}(x_2)}
\end{matrix}</math></center>

Each message is thus (potential of the node)<math>\times</math>(potential of the edge)<math>\times</math>(messages from the node's children), summed over the values of the node being eliminated.

The term "<math>m_{ji}(x_i)</math>" denotes the intermediate factor produced when the variable ''j'' is eliminated; it is a function of the remaining neighbour ''i''. Thus, in the above case, we use <math>m_{53}(x_3)</math> to denote <math>m_5(x_3)</math>, <math>m_{42}(x_2)</math> to denote <math>m_4(x_2)</math>, and <math>m_{32}(x_2)</math> to denote <math>m_3(x_2)</math>. We refer to the intermediate factor <math>m_{ji}(x_i)</math> as a "message" that ''j'' sends to ''i'' (Fig. \ref{fig:TreeStdEx}).

In general,

<center><math>m_{ji}(x_i)=\sum_{x_j}\psi(x_j)\psi(x_j,x_i)\prod_{k\in\mathcal{N}(j)\setminus i}m_{kj}(x_j)</math></center>

====Elimination To Sum Product Algorithm====

[[File:fig2.png|thumb|right|Fig.XX All of the messages needed to compute all singleton marginals]]

The Sum-Product algorithm computes all marginals in the tree by passing messages inward from the leaves to an (arbitrarily chosen) root, and then outward from the root to the leaves, using (\ref{equ:MsgEquation}) at each step. The net effect is that exactly one message flows in each direction along every edge (see Figure \ref{fig:SumProdEx}). Once all such messages have been computed using (\ref{equ:MsgEquation}), we can compute any desired marginal.

As shown in Figure \ref{fig:SumProdEx}, to compute the marginal of <math>X_1</math> using elimination, we eliminate <math>X_5</math>, which involves computing the message <math>m_{53}(x_3)</math>; then we eliminate <math>X_4</math> and <math>X_3</math>, which involves the messages <math>m_{42}(x_2)</math> and <math>m_{32}(x_2)</math>. We subsequently eliminate <math>X_2</math>, which creates the message <math>m_{21}(x_1)</math>.

Suppose instead that we want to compute the marginal of <math>X_2</math>. As shown in Figure \ref{fig:MsgsFormed}, we first eliminate <math>X_5</math>, which creates <math>m_{53}(x_3)</math>, and then eliminate <math>X_3</math>, <math>X_4</math>, and <math>X_1</math>, passing the messages <math>m_{32}(x_2)</math>, <math>m_{42}(x_2)</math> and <math>m_{12}(x_2)</math> to <math>X_2</math>.

[[File:fig3.png|thumb|right|Fig.XX The messages formed when computing the marginal of <math>X_2</math>]]

Since messages can be reused, the marginals of all nodes can be obtained by computing every possible message (two per edge), which is a small number compared to the number of possible elimination orderings.

The Sum-Product algorithm is based not only on equation (\ref{equ:MsgEquation}) but also on a ''Message-Passing Protocol''.

The '''Message-Passing Protocol''' states that ''a node can send a message to a neighbouring node when (and only when) it has received messages from all of its other neighbours''.
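The following sketch implements the message equation on the five-node tree (edges 1-2, 2-3, 2-4, 3-5) with hypothetical potentials. Rather than an explicit inward-then-outward schedule, it computes messages by memoized recursion: each message <math>m_{ji}</math> is computed once, and only after the messages from <math>j</math>'s other neighbours, which respects the message-passing protocol. It then compares every singleton marginal with brute-force summation and confirms that exactly two messages per edge were needed.

```python
import itertools
import math
from functools import lru_cache

K = (0, 1)
edges = [(1, 2), (2, 3), (2, 4), (3, 5)]            # the five-node tree used above
nbrs = {i: set() for i in range(1, 6)}
for a, b in edges:
    nbrs[a].add(b)
    nbrs[b].add(a)

psi_node = {i: (1.0, 1.0 + 0.5 * i) for i in nbrs}  # hypothetical psi(x_i) = psi_node[i][x_i]

def psi_edge(xi, xj):
    return 3.0 if xi == xj else 1.0                 # hypothetical symmetric edge potential

@lru_cache(maxsize=None)
def message(j, i):
    """m_ji(x_i) = sum over x_j of psi(x_j) psi(x_j, x_i) times m_kj(x_j) for k in N(j) other than i.

    Memoization means each message is computed once, and only after the messages
    it depends on, as the message-passing protocol requires.
    """
    return tuple(
        sum(psi_node[j][xj] * psi_edge(xj, xi)
            * math.prod(message(k, j)[xj] for k in nbrs[j] - {i})
            for xj in K)
        for xi in K)

def marginal(i):
    """p(x_i) is proportional to psi(x_i) times every incoming message m_ki(x_i)."""
    belief = [psi_node[i][xi] * math.prod(message(k, i)[xi] for k in nbrs[i]) for xi in K]
    return [v / sum(belief) for v in belief]

def brute_marginal(i):
    """Reference answer: sum the full (unnormalized) joint over all 2^5 configurations."""
    acc = [0.0, 0.0]
    for vals in itertools.product(K, repeat=5):
        x = dict(zip(range(1, 6), vals))
        p = math.prod(psi_node[v][x[v]] for v in nbrs)
        p *= math.prod(psi_edge(x[u], x[w]) for u, w in edges)
        acc[x[i]] += p
    return [v / sum(acc) for v in acc]

all_marginals = {i: marginal(i) for i in nbrs}
print(message.cache_info().currsize)                # 8 messages: one per edge direction
```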

====For Directed Graphs====

Previously we stated that:

<center><math>p(x_F,\bar{x}_E)=\sum_{x_E}p(x_F,x_E)\delta(x_E,\bar{x}_E),</math></center>

Using the above equation (\ref{eqn:Marginal}), we find the probability of the evidence, <math>p(\bar{x}_E)</math>:

<center><math>\begin{matrix}
p(\bar{x}_E)&=&\sum_{x_F}\sum_{x_E}p(x_F,x_E)\delta(x_E,\bar{x}_E) \\
&=&\sum_{x_V}p(x_F,x_E)\delta (x_E,\bar{x}_E)
\end{matrix}</math></center>

Now we define:

<center><math>p^E(x_V) = p(x_V) \delta (x_E,\bar{x}_E)</math></center>

Since the sets ''F'' and ''E'' together make up <math>\mathcal{V}</math>, <math>p(x_V)</math> is equal to <math>p(x_F,x_E)</math>. Thus we can substitute equation (\ref{eqn:Dir8}) into (\ref{eqn:Marginal}) and (\ref{eqn:Dir7}), and they become:

<center><math>\begin{matrix}
p(x_F,\bar{x}_E) = \sum_{x_E} p^E(x_V), \\
p(\bar{x}_E) = \sum_{x_V}p^E(x_V)
\end{matrix}</math></center>

We are interested in the conditional probability, so we substitute the previous results, (\ref{eqn:Dir9}) and (\ref{eqn:Dir10}), into the conditional probability equation:

<center><math>\begin{matrix}
p(x_F|\bar{x}_E)&=&\frac{p(x_F,\bar{x}_E)}{p(\bar{x}_E)} \\
&=&\frac{\sum_{x_E}p^E(x_V)}{\sum_{x_V}p^E(x_V)}
\end{matrix}</math></center>

Viewed as a function of <math>x_F</math>, <math>p^E(x_V)</math> is an unnormalized version of the conditional probability <math>p(x_F|\bar{x}_E)</math>.

====For Undirected Graphs====

We define <math>\psi^E</math> as:

<center><math>\begin{matrix}
\psi^E(x_i) = \psi(x_i)\delta(x_i,\bar{x}_i), & \mbox{if } i\in E \\
\psi^E(x_i) = \psi(x_i), & \mbox{otherwise}
\end{matrix}</math></center>
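A small sketch of how <math>\psi^E</math> plugs into sum-product: the chain <math>x_1 - x_2 - x_3</math>, its potentials, and the observation <math>\bar{x}_3 = 1</math> are all hypothetical. Normalizing the product of <math>\psi^E(x_i)</math> and the incoming messages gives <math>p(x_i|\bar{x}_E)</math> directly, because the constant <math>1/Z</math> cancels, and a brute-force sum of the unnormalized <math>p^E</math> gives the same answer.

```python
import itertools
import math

K = (0, 1)
nbrs = {1: {2}, 2: {1, 3}, 3: {2}}                    # hypothetical chain x1 - x2 - x3
psi = {1: (1.0, 2.0), 2: (1.0, 1.0), 3: (3.0, 1.0)}   # hypothetical psi(x_i) = psi[i][x_i]
evidence = {3: 1}                                     # observed: x3 = 1

def psi_edge(a, b):
    return 4.0 if a == b else 1.0                     # hypothetical edge potential

def psi_E(i, x):
    """psi^E(x_i) = psi(x_i) delta(x_i, xbar_i) if i is an evidence node, else psi(x_i)."""
    if i in evidence:
        return psi[i][x] * (1.0 if x == evidence[i] else 0.0)
    return psi[i][x]

def message(j, i):
    """Sum-product message m_ji(x_i), with psi^E used in place of psi."""
    return [sum(psi_E(j, xj) * psi_edge(xj, xi)
                * math.prod(message(k, j)[xj] for k in nbrs[j] - {i})
                for xj in K)
            for xi in K]

def conditional(i):
    """p(x_i | xbar_E): normalize psi^E(x_i) times all incoming messages (1/Z cancels)."""
    belief = [psi_E(i, x) * math.prod(message(k, i)[x] for k in nbrs[i]) for x in K]
    return [v / sum(belief) for v in belief]

def brute_conditional(i):
    """Sum the unnormalized p^E over every configuration, then normalize."""
    acc = [0.0, 0.0]
    for vals in itertools.product(K, repeat=3):
        x = dict(zip((1, 2, 3), vals))
        acc[x[i]] += (math.prod(psi_E(v, x[v]) for v in nbrs)
                      * psi_edge(x[1], x[2]) * psi_edge(x[2], x[3]))
    return [v / sum(acc) for v in acc]

print(conditional(1), brute_conditional(1))
```

For the evidence node itself the result is the point mass `[0.0, 1.0]`, since <math>\psi^E</math> zeroes out every other value.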

===Max-Product=== | |||

We would like to find the Maximum probability that can be achieved by some set of random variables given a set of configurations. The algorithm is similar to the sum product except we replace the sum with max. <br /> | |||

[[File:suks.png|thumb|right|Fig.XX Max Product Example]] | |||

<center><math>\begin{matrix} | |||

\max_{x_1}{P(x_i)} & = & \max_{x_1}\max_{x_2}\max_{x_3}\max_{x_4}\max_{x_5}{P(x_1)P(x_2|x_1)P(x_3|x_2)P(x_4|x_2)P(x_5|x_3)} \\ | |||

& = & \max_{x_1}{P(x_1)}\max_{x_2}{P(x_2|x_1)}\max_{x_3}{P(x_3|x_4)}\max_{x_4}{P(x_4|x_2)}\max_{x_5}{P(x_5|x_3)} | |||

<math>p(x_F|\bar{x}_E)</math> | |||

<center><math>m_{ji}(x_i)=\sum_{x_j}{\psi^{E}{(x_j)}\psi{(x_i,x_j)}\prod_{k\in{N(j)\backslash{i}}}m_{kj}}</math></center> | |||

<center><math>m^{max}_{ji}(x_i)=\max_{x_j}{\psi^{E}{(x_j)}\psi{(x_i,x_j)}\prod_{k\in{N(j)\backslash{i}}}m_{kj}}</math></center> | |||

'''Example:'''

Consider the graph in the figure ''Max Product Example''. Passing messages toward node 1, the first two messages are:

<center><math> m^{max}_{53}(x_3)=\max_{x_5}\psi^{E}(x_5)\psi(x_3,x_5) </math></center>

<center><math> m^{max}_{32}(x_2)=\max_{x_3}\psi^{E}(x_3)\psi(x_2,x_3)m^{max}_{53}(x_3) </math></center>
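The sketch below checks, on a hypothetical instance of the example graph, that passing max-product messages toward node 1 gives the same value as brute-force maximization over all configurations. It assumes binary variables, randomly generated (seeded) conditional tables, potentials <math>\psi(x_i,x_j)=P(x_j|x_i)</math>, and no evidence, so <math>\psi^E=\psi</math>; none of these numbers come from the notes.

```python
import itertools
import math
import random

random.seed(0)
K = 2  # states per variable


def random_cpt():
    """Random table t[x_parent][x_child] with each row normalised to sum to 1."""
    rows = []
    for _ in range(K):
        r = [random.random() + 0.01 for _ in range(K)]
        s = sum(r)
        rows.append([v / s for v in r])
    return rows


prior1 = [0.6, 0.4]                          # P(x1), hypothetical
edges = [(1, 2), (2, 3), (2, 4), (3, 5)]     # (parent, child), as in the factorisation
cpt = {e: random_cpt() for e in edges}       # psi(x_parent, x_child) = P(x_child | x_parent)
children = {n: [c for (p, c) in edges if p == n] for n in range(1, 6)}


def m_max(child, parent):
    """m^max_{child,parent}(x_parent) as a list over x_parent (no evidence)."""
    incoming = [m_max(g, child) for g in children[child]]
    return [max(cpt[(parent, child)][xp][xc] * math.prod(m[xc] for m in incoming)
                for xc in range(K))
            for xp in range(K)]


def joint(x):
    """P(x1)P(x2|x1)P(x3|x2)P(x4|x2)P(x5|x3) for a dict mapping node -> state."""
    return prior1[x[1]] * math.prod(cpt[(p, c)][x[p]][x[c]] for p, c in edges)


root_in = [m_max(c, 1) for c in children[1]]
max_prob = max(prior1[x1] * math.prod(m[x1] for m in root_in) for x1 in range(K))
brute = max(joint(dict(zip(range(1, 6), xs)))
            for xs in itertools.product(range(K), repeat=5))
print(max_prob, brute)
```

Brute force costs <math>K^5</math> evaluations here, while the messages only ever maximize over one variable at a time, which is what makes max-product practical on larger trees.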

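===Maximum configuration===

We would also like to find the values of the <math>x_i</math>s that produce this largest value. To do this we replace the max from the previous section with argmax, recording, for each value of the receiving variable, which value of the sending variable attains the maximum; for example,<br />

<math>\hat{x}_5(x_3)= \arg\max_{x_5}\psi^E(x_5)\psi(x_3,x_5)</math><br />

Once the maximizing value of the root variable has been found, these stored arguments are read off in reverse order (backtracking) to recover the maximizing configuration.

In many cases we work with the logarithm of the messages, because products of many probabilities become very small numbers that can underflow; since the logarithm is monotonic, the maximizing arguments are unchanged:<br />

<math>\log m^{max}_{ji}(x_i)=\max_{x_j}\left[\log\psi^{E}(x_j)+\log\psi(x_i,x_j)+\sum_{k\in N(j)\backslash i}\log m^{max}_{kj}(x_j)\right]</math><br />

The same message-passing scheme also applies to continuous variables, with the summations of the sum-product algorithm replaced by integrals.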
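A minimal sketch of the maximum-configuration step in the log domain: each message stores, for every value of the receiving variable, the maximizing value of the sender, and the configuration is recovered by backtracking from the root. The three-state variables and the random conditional tables are assumptions made for illustration; the result is checked against brute force.

```python
import itertools
import math
import random

random.seed(1)
K = 3  # states per variable


def random_log_cpt():
    """Random log P(x_child | x_parent) table, indexed [x_parent][x_child]."""
    rows = []
    for _ in range(K):
        r = [random.random() + 0.01 for _ in range(K)]
        s = sum(r)
        rows.append([math.log(v / s) for v in r])
    return rows


log_prior = [math.log(p) for p in (0.5, 0.3, 0.2)]     # log P(x1), hypothetical
edges = [(1, 2), (2, 3), (2, 4), (3, 5)]                 # (parent, child), parents listed first
log_cpt = {e: random_log_cpt() for e in edges}
children = {n: [c for (p, c) in edges if p == n] for n in range(1, 6)}
best_state = {}   # child -> list giving the maximising x_child for each x_parent


def log_msg(child, parent):
    """log m^max_{child,parent}(x_parent); also stores the argmax for backtracking."""
    incoming = [log_msg(g, child) for g in children[child]]
    msg, arg = [], []
    for xp in range(K):
        scores = [log_cpt[(parent, child)][xp][xc] + sum(m[xc] for m in incoming)
                  for xc in range(K)]
        xc_best = max(range(K), key=scores.__getitem__)
        msg.append(scores[xc_best])
        arg.append(xc_best)
    best_state[child] = arg
    return msg


root_in = [log_msg(c, 1) for c in children[1]]
root_scores = [log_prior[x1] + sum(m[x1] for m in root_in) for x1 in range(K)]
config = {1: max(range(K), key=root_scores.__getitem__)}
for parent, child in edges:          # backtrack from the root outwards
    config[child] = best_state[child][config[parent]]


def log_joint(x):
    return log_prior[x[1]] + sum(log_cpt[(p, c)][x[p]][x[c]] for p, c in edges)


brute_best = max(log_joint(dict(zip(range(1, 6), xs)))
                 for xs in itertools.product(range(K), repeat=5))
print(config, log_joint(config), brute_best)
```

Backtracking relies on visiting each parent before its children, which is why the edge list is ordered from the root outwards.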

==Basic Statistical Problems==

In statistics there are a number of 'standard' problems that appear again and again in one form or another. They are as follows:

* Regression
* Classification
* Clustering
* Density Estimation

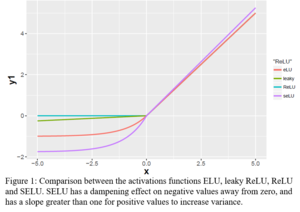

===Regression===

In regression we have a set of data points <math> (x_i, y_i) </math> for <math> i = 1,\ldots,n </math> and we would like to determine how the variables x and y are related. In certain cases, such as the one in the figure ''Regression'', we try to fit a line (or another type of function) through the points so that it describes the relationship between the two variables.

[[File:regression.png|thumb|right|Fig.XX Regression]]

Once the relationship has been determined, we can evaluate the following conditional distribution. In this way we can determine the value (or distribution) of y given the value of x.

<center><math>P(y|x)=\frac{P(y,x)}{P(x)} = \frac{P(y,x)}{\int_{y}P(y,x)\,dy}</math></center>
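One common way to "fit a line through the points" is ordinary least squares; the notes do not specify a fitting criterion, so least squares is an assumption here (under Gaussian noise it estimates the mean of P(y|x) as a linear function of x). The data points are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y ≈ a + b x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx                 # slope
    a = mean_y - b * mean_x       # intercept: the line passes through the means
    return a, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]    # hypothetical noisy points near y = 1 + 2x
a, b = fit_line(xs, ys)
print(f"y ≈ {a:.2f} + {b:.2f} x")
```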

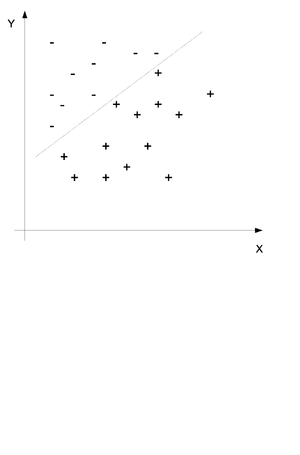

===Classification===

In classification we also have a set of points <math> (x_i, y_i) </math> for <math> i = 1,\ldots,n </math>, but we would like to use the x and y values to determine whether a point belongs to group A or group B. Consider the example in the figure ''Classify Points into Two Sets'', where two sets of points, the set + and the set -, are separated by a line. The purpose of classification is to find this line and then assign any new point to one group or the other.

[[File:Classification.png|thumb|right|Fig.XX Classify Points into Two Sets]]

We would like to obtain the probability distribution in the following equation, where c is the class and x and y are the coordinates of a data point. In simple terms, we would like to find the probability that a point is in class c given that the values of X and Y are x and y.

<center><math> P(c|x,y)=\frac{P(c,x,y)}{P(x,y)} = \frac{P(c,x,y)}{\sum_{c}P(c,x,y)} </math></center>

===Clustering===

Clustering is somewhat like classification, except that we do not know the groups before we gather and examine the data. We would like to find the probability distribution in the following equation without ever observing the value of y.

<center><math> P(y|x)=\frac{P(y,x)}{P(x)}, \quad y \text{ unknown} </math></center>

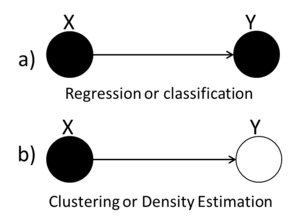

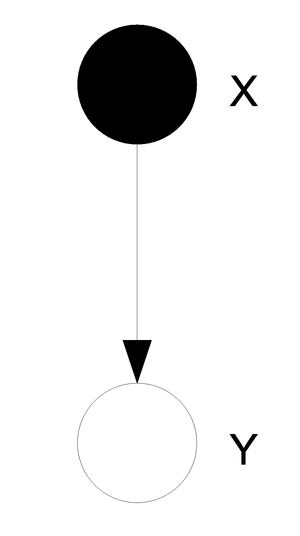

We can use graphs to represent the three types of statistical problems introduced so far. The first graph (figure ''Regression or classification'') can represent either the regression or the classification problem, because both the X and the Y variables are observed. In the second graph (figure ''Clustering'') the value of the Y variable is unknown, so this graph represents the clustering situation.

[[File:RegClass.png|thumb|right|Fig.XX Regression or classification]]

[[File:Clustering.png|thumb|right|Fig.XX Clustering]]

'''Classification example: Naive Bayes classifier''' <br />

First define a set of boolean random variables <math>X_i</math>, for <math>i = 1,\ldots,n</math>, and <math>Y</math>:

<center><math>Y \in \left\{0,1\right\}, \quad X_i \in \left\{0,1\right\} </math></center>

A given pattern of Xs is classified as either 1 or 0, and the result of this classification is represented by the variable Y. The graphical representation is shown in the figure ''Two Types of Graphical Representation''. Note that the two diagrams represent the same graph: the one on the right uses plate notation to simplify the representation of indexed variables. Plate notation will also be used later in these notes.

{| class="wikitable"
! <math>x = (x_1,\ldots,x_n)</math> !! <math>Y</math>
|-
| 01110 || 1
|-
| 01110 || 0
|}

[[File:classifi.png|thumb|right|Fig.XX Two Types of Graphical Representation]]

We are interested in finding the following:

<center><math>P(y|x_1,\ldots,x_n)=\frac{P(x_1,\ldots,x_n|y)P(y)}{P(x_1,\ldots,x_n)} = \frac{P(x_1,\ldots,x_n,y)}{P(x_1,\ldots,x_n)} = \frac{P(y)\prod_{i=1}^{n}P(x_i|y)}{P(x_1,\ldots,x_n)}</math></center>

where the last step uses the naive Bayes assumption that the <math>X_i</math> are conditionally independent given <math>Y</math>.

The classification is very intuitive in this case. We calculate the probability that the point is in class 1 and the probability that it is in class 0, and the higher probability decides the class. For example, if the probability of class 1 is higher, we place this set of Xs in class 1. Because the denominator <math>P(x_1,\ldots,x_n)</math> is the same for both classes, it cancels in the ratio:

<center><math>\begin{matrix}
\hat{y}=1 & \Leftrightarrow & P(y=1|x_1,\ldots,x_n) > P(y=0|x_1,\ldots,x_n) \\
& \Leftrightarrow & \frac{P(y=1|x_1,\ldots,x_n)}{P(y=0|x_1,\ldots,x_n)} >1 \\
& \Leftrightarrow & \log\frac{P(y=1)}{P(y=0)} + \sum_{i=1}^{n}\log\frac{P(x_i|y=1)}{P(x_i|y=0)}>0
\end{matrix}</math></center>

Now define: <br />

<math>P(y=1) =p</math> <br />

<math>P(x_i=1|y=1)=P_{i1}</math><br />

<math>P(x_i=1|y=0)=P_{i0}</math>

Since <math>x_i \in \{0,1\}</math>, we can write <math>P(x_i|y=1) = P_{i1}^{x_i}(1-P_{i1})^{1-x_i}</math>, and similarly for <math>y=0</math>. Continuing the simplification above, we arrive at: <br />

<center><math>\begin{matrix}
\hat{y}=1 & \Leftrightarrow & \log\frac{p}{1-p} + \sum_{i=1}^{n}\left[x_i\log\frac{P_{i1}}{P_{i0}}+ (1-x_i)\log\frac{1-P_{i1}}{1-P_{i0}}\right] > 0 \\
& \Leftrightarrow & \sum_{i=1}^{n} x_i\underbrace{\log\frac{P_{i1}(1-P_{i0})}{P_{i0}(1-P_{i1})}}_{\text{slope } w_i} + \underbrace{\log\frac{p}{1-p} + \sum_{i=1}^{n}\log\frac{1-P_{i1}}{1-P_{i0}}}_{\text{intercept}} > 0
\end{matrix}</math></center>

The decision rule is therefore linear in <math>x</math>.
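To check the algebra, the sketch below computes the log posterior odds in two ways: term by term from the factorized model, and in the linear "slope plus intercept" form derived above. The parameters <math>p</math>, <math>P_{i1}</math>, <math>P_{i0}</math> are hypothetical random values, not estimates from data.

```python
import math
import random


def log_odds_direct(x, p, P1, P0):
    """log P(y=1|x) - log P(y=0|x), computed term by term from the factorised model."""
    s = math.log(p / (1 - p))
    for xi, a, b in zip(x, P1, P0):
        s += math.log(a if xi else 1 - a) - math.log(b if xi else 1 - b)
    return s


def log_odds_linear(x, p, P1, P0):
    """The same quantity written as sum_i w_i x_i + intercept."""
    w = [math.log(a * (1 - b) / (b * (1 - a))) for a, b in zip(P1, P0)]
    intercept = math.log(p / (1 - p)) + sum(math.log((1 - a) / (1 - b))
                                            for a, b in zip(P1, P0))
    return sum(wi * xi for wi, xi in zip(w, x)) + intercept


random.seed(0)
n = 5
p = 0.4                                                # P(y=1), hypothetical
P1 = [random.uniform(0.05, 0.95) for _ in range(n)]    # P(x_i=1 | y=1), hypothetical
P0 = [random.uniform(0.05, 0.95) for _ in range(n)]    # P(x_i=1 | y=0), hypothetical
x = [0, 1, 1, 1, 0]
y_hat = 1 if log_odds_linear(x, p, P1, P0) > 0 else 0
print(y_hat)
```

The linear form is convenient because the weights can be computed once and each new pattern is then classified with a single dot product.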

==Example from last class==

John is not a professional trader; however, he trades in the copper market. The copper stock price increases if demand for copper exceeds supply, and decreases if supply exceeds demand. Given supply and demand, the price of copper stock is not completely determined, because unknown factors, such as predictions about the political stability of copper-supplying countries or news about potential new uses of copper, may affect the market.

If the copper stock price increases and John uses the right strategy, he will win; otherwise he will lose. Since John is not a professional trader, he sometimes uses a bad trading strategy and loses in spite of an increase in the stock price.

S: A discrete variable which represents an increase or decrease in copper supply.

D: A discrete variable which represents an increase or decrease in copper demand.

C: A discrete variable which represents an increase or decrease in the stock price.

P: A discrete variable that shows whether John wins or loses in his trade.

J: A discrete variable which is 1 when John makes the right choice of trading strategy and 0 otherwise.

[[File:graphJan30.png|thumb|right|Fig.XX ]]

<math>p(S=1)=0.6,\ p(D=1)=0.7,\ p(J=1)=0.4</math><br />

{| class="wikitable"
! S !! D !! <math>p(C=1\mid S,D)</math>
|-
| 1 || 1 || 0.5
|-
| 1 || 0 || 0.1
|-
| 0 || 1 || 0.85
|-
| 0 || 0 || 0.5
|}

{| class="wikitable"
! J !! C !! <math>p(P=1\mid J,C)</math>
|-
| 1 || 1 || 0.85
|-
| 1 || 0 || 0.5
|-
| 0 || 1 || 0.2
|-
| 0 || 0 || 0.1
|}

<center><math>p(S,D,C,J,P) = p(S)p(D)p(J)p(C|S,D)p(P|J,C)</math></center>

\end{comment}
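With the tables of the copper example, any marginal or conditional probability can be obtained by summing the factorized joint over all <math>2^5</math> assignments. The sketch below does this by brute-force enumeration; the queries chosen (the probability that John wins, and the probability that he used the right strategy given that he won) are illustrative, and the variable P is renamed <code>w</code> in the code to avoid a clash with "probability".

```python
from itertools import product

p_S, p_D, p_J = 0.6, 0.7, 0.4                                   # P(S=1), P(D=1), P(J=1)
p_C = {(1, 1): 0.5, (1, 0): 0.1, (0, 1): 0.85, (0, 0): 0.5}     # P(C=1 | S, D)
p_W = {(1, 1): 0.85, (1, 0): 0.5, (0, 1): 0.2, (0, 0): 0.1}     # P(P=1 | J, C)


def bern(p1, v):
    """P(V = v) for a binary variable with P(V = 1) = p1."""
    return p1 if v == 1 else 1 - p1


def joint(s, d, c, j, w):
    """p(S,D,C,J,P) = p(S)p(D)p(J)p(C|S,D)p(P|J,C); w stands for the value of P."""
    return (bern(p_S, s) * bern(p_D, d) * bern(p_J, j)
            * bern(p_C[(s, d)], c) * bern(p_W[(j, c)], w))


def prob(event):
    """Sum the joint over all assignments (s, d, c, j, w) for which event(...) holds."""
    return sum(joint(*v) for v in product((0, 1), repeat=5) if event(*v))


p_win = prob(lambda s, d, c, j, w: w == 1)                                  # P(P=1)
p_right_given_win = prob(lambda s, d, c, j, w: j == 1 and w == 1) / p_win   # P(J=1 | P=1)
print(round(p_win, 4), round(p_right_given_win, 4))
```

Enumeration is exponential in the number of variables; it is used here only because the network is tiny, and the elimination and message-passing algorithms in these notes avoid that cost.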

===Bayesian and Frequentist Statistics===

There are two approaches to parameter estimation: the Bayesian and the Frequentist. This section focuses on the distinctions between them. We begin with a simple example.<br />

'''Example:''' <br />

Consider the following table of 1s and 2s. We would like to teach the computer to distinguish between the two sets of numbers, so that when a person writes down a digit the computer can use a statistical tool to decide whether the written digit is a 1 or a 2.

{| class="wikitable"
! <math>\theta</math> !! ''1'' !! 2
|-
| X || ''1'' || 2
|-
| X || 1 || ''2''
|-
| X || ''1'' || ''2''
|}

The question that arises is: given a written digit, what is the probability that it belongs to the group of ones, and what is the probability that it belongs to the group of twos?

In the Frequentist approach we work with the model <math>p(x|\theta)</math>, in which <math>\theta</math> is a fixed (non-random) parameter and X is the random quantity. The Bayesian approach, by contrast, conditions on the observed X and treats the unknown <math>\theta</math> as random, which gives

<center><math>p(\theta|x) = \frac {p(x|\theta)p(\theta)}{p(x)}</math></center>

where <math>p(\theta|x)</math> is the ''posterior probability'', <math>p(x|\theta)</math> is the ''likelihood'', and <math>p(\theta)</math> is the ''prior probability'' of the parameter. Several points about this equation are worth noting. First, we view <math>\theta</math> as a random variable; this is characteristic of the Bayesian approach, in which all unknown quantities are treated as random variables. Second, we view the data x as a quantity to be conditioned on: our inference is conditional on the event <math>\lbrace X=x \rbrace</math>. Third, in order to calculate <math>p(\theta|x)</math> we need <math>p(\theta)</math>. Finally, note that Bayes' rule yields a distribution over <math>\theta</math>, not a single estimate of <math>\theta</math>.

The Frequentist approach tries to avoid the use of prior probabilities. The goal of Frequentist methodology is to develop an "objective" statistical theory, in which two statisticians employing the methodology must necessarily draw the same conclusions from a particular set of data.

Consider a coin-tossing experiment as an example. The model is the Bernoulli distribution, <math>p(x|\theta) = \theta^x(1-\theta)^{1-x} </math>. The Bayesian approach requires us to assign a prior probability to <math>\theta</math> before observing the outcome of tossing the coin, and different conclusions may be drawn from the experiment if different priors are assigned to <math>\theta</math>. The Frequentist statistician wishes to avoid such "subjectivity". From another point of view, a Frequentist may claim that <math>\theta</math> is a fixed property of the coin, and that it makes no sense to assign a probability to it. A Bayesian would say that <math>p(\theta|x)</math> represents the ''statistician's uncertainty'' about the value of <math>\theta</math>. Bayesian statistics views both the posterior probability and the prior probability as subjective.
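To make the point about priors concrete, the sketch below computes the posterior <math>p(\theta|x)</math> for the coin on a grid of <math>\theta</math> values under two different priors and reports the posterior means. The toss data and both priors (a flat prior and a hypothetical prior proportional to <math>\theta^5(1-\theta)^5</math>, which favours a fair coin) are assumptions chosen for illustration; the grid approximation stands in for exact integration.

```python
def grid_posterior(data, prior, m=2001):
    """Normalised posterior weights for theta on an evenly spaced grid over [0, 1]."""
    thetas = [i / (m - 1) for i in range(m)]
    h = sum(data)
    t = len(data) - h
    unnorm = [prior(th) * th ** h * (1 - th) ** t for th in thetas]   # prior x likelihood
    z = sum(unnorm)
    return thetas, [u / z for u in unnorm]


def posterior_mean(thetas, weights):
    return sum(th * w for th, w in zip(thetas, weights))


def flat_prior(th):
    return 1.0                          # uniform on [0, 1]


def fair_coin_prior(th):
    return th ** 5 * (1 - th) ** 5      # hypothetical prior peaked at 0.5 (unnormalised)


data = [1, 1, 0, 1, 1, 1, 0, 1]         # hypothetical tosses: 6 heads, 2 tails
for name, prior in [("flat", flat_prior), ("fair-coin", fair_coin_prior)]:
    thetas, w = grid_posterior(data, prior)
    print(name, round(posterior_mean(thetas, w), 3))
```

The same eight tosses lead to noticeably different posterior means under the two priors, which is exactly the dependence on the prior that the Frequentist wishes to avoid.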

===Maximum Likelihood Estimator===

One estimator is particularly widely used in Frequentist statistics: the ''maximum likelihood estimator''. Recall that the probability model <math>p(x|\theta)</math> has the intuitive interpretation of assigning probability to X for each fixed value of <math>\theta</math>. In the Bayesian approach this intuition is formalized by treating <math>p(x|\theta)</math> as a conditional probability distribution. In the Frequentist approach, however, we treat <math>p(x|\theta)</math> as a function of <math>\theta</math> for fixed x, and refer to it as the likelihood function. The maximum likelihood estimate is

<center><math>\hat{\theta}_{ML}=\arg\max_{\theta}p(x|\theta)</math></center>

where <math>p(x|\theta)</math> is the likelihood <math>L(\theta; x)</math>. Since the logarithm is monotonic, equivalently

<center><math>\hat{\theta}_{ML}=\arg\max_{\theta}\log p(x|\theta)</math></center>

where <math>\log p(x|\theta)</math> is the log likelihood <math>l(\theta; x)</math>.

Since <math>p(x)</math> in the denominator of Bayes' rule does not depend on <math>\theta</math>, we can treat it as a constant and conclude that:

<center><math>p(\theta|x) \propto p(x|\theta)p(\theta)</math></center>

Symbolically, we can interpret this as follows:

<center><math>\text{posterior} \propto \text{likelihood} \times \text{prior}</math></center>

where we see that in the Bayesian approach the likelihood can be viewed as a data-dependent operator that transforms the prior probability into the posterior probability.

===Connection between Bayesian and Frequentist Statistics===

Suppose in particular that we force the Bayesian to choose a particular value of <math>\theta</math>; that is, to reduce the posterior distribution <math>p(\theta|x)</math> to a point estimate. Various possibilities present themselves; in particular, one could choose the mean of the posterior distribution or perhaps its mode.

(i) The mean of the posterior (its expectation):

<center><math>\hat{\theta}_{Bayes}=\int \theta\, p(\theta|x)\,d\theta</math></center>

is called the ''Bayes estimate''.

OR

(ii) The mode of the posterior:

<center><math>\begin{matrix}
\hat{\theta}_{MAP}&=&\arg\max_{\theta} p(\theta|x) \\
&=&\arg\max_{\theta}p(x|\theta)p(\theta)
\end{matrix}</math></center>

Here MAP stands for ''maximum a posteriori''.

When the prior <math>p(\theta)</math> is taken to be uniform in <math>\theta</math>, the factor <math>p(\theta)</math> is constant and the MAP estimate reduces to the maximum likelihood estimate:

<center><math>\hat{\theta}_{MAP} = \arg\max_{\theta} p(x|\theta) p(\theta) = \arg\max_{\theta} p(x|\theta) = \hat{\theta}_{ML}</math></center>

When the prior is not uniform, the MAP estimate maximizes the product <math>p(x|\theta)p(\theta)</math>. Because the logarithm is a monotonic function, taking logs does not alter the maximizing value, so one has:

<center><math>\hat{\theta}_{MAP}=\arg\max_{\theta} \{ \log p(x|\theta) + \log p(\theta) \}</math></center>

as an alternative expression for the MAP estimate. Here <math>\log p(x|\theta)</math> is the log likelihood and the "penalty" is the additive term <math>\log p(\theta)</math>. Penalized log likelihoods are widely used in Frequentist statistics to improve on maximum likelihood estimates in small-sample settings.
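The sketch below maximizes the plain log likelihood and the penalized log likelihood <math>\log p(x|\theta) + \log p(\theta)</math> for a coin over a fine grid, showing the MAP estimate being pulled toward the region the prior favours. The counts and the prior <math>p(\theta) \propto \theta^5(1-\theta)^5</math> are hypothetical, and a grid search is used only for transparency.

```python
import math


def argmax_on_grid(f, m=9999):
    """Grid point in the open interval (0, 1) that maximises f."""
    grid = [(i + 1) / (m + 1) for i in range(m)]
    return max(grid, key=f)


H, T = 6, 2   # hypothetical counts of heads and tails


def log_lik(th):
    return H * math.log(th) + T * math.log(1 - th)


def log_prior(th):
    # Hypothetical prior p(theta) ∝ theta^5 (1 - theta)^5, favouring a fair coin.
    return 5 * math.log(th) + 5 * math.log(1 - th)


theta_ml = argmax_on_grid(log_lik)                                  # near H / (H + T) = 0.75
theta_map = argmax_on_grid(lambda th: log_lik(th) + log_prior(th))  # log likelihood + penalty
print(theta_ml, theta_map)
```

Replacing <code>log_prior</code> by a constant (a uniform prior) leaves the maximizer unchanged, which is the MAP-to-ML reduction described above.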

====Information for an Event====

Consider a given event E with probability P(E). The smaller the probability of the event, the more information we gain when it occurs. We calculate the information as:

<center><math> \text{Information} = \log \frac{1}{P(E)} = - \log P(E) </math></center>

====Binomial Example====

'''Probability Example:''' <br />

Consider the set of observations <math>x = (x_1, x_2, \ldots, x_n)</math>, which are iid observations of a random variable <math>X</math>. We say that this random variable is parameterized by <math>\theta</math>, such that:

<center><math>P(X|\theta) \equiv P_{\theta}(x)</math></center>

In our example we will use the following model:

<center><math> P(x_i = 1) = \theta </math></center>

<center><math> P(x_i = 0) = 1 - \theta </math></center>

<center><math> P(x_i|\theta) = \theta^{x_i}(1-\theta)^{(1-x_i)}, \quad x_i \in \{0, 1\} </math></center>

Suppose now that we also have some data <math>D</math>: <br />

e.g. <math>D = \left\lbrace 1,1,0,1,0,0,0,1,1,1,1,\cdots,0,1,0 \right\rbrace </math> <br />

We want to use this data to estimate <math>\theta</math>.

We would now like to use the ML technique. To do this we can construct the following graphical model:

[[File:fig1Feb6.png|thumb|right|Fig.XX ]]

We shade the random variables that have already been observed:

[[File:fig2Feb6.png|thumb|right|Fig.XX ]]

Since the observations are iid given <math>\theta</math>, there are no dependencies between them, and so there are no edges from one observation node to another.

[[File:fig3Feb6.png|thumb|right|Fig.XX ]]

How do we find the joint probability distribution of these variables? Since they are independent given <math>\theta</math>, we can simply multiply the marginal probabilities to obtain the joint probability:

<center><math>L(\theta;x) = \prod_{i=1}^n P(x_i|\theta)</math></center>

This is in fact the likelihood that we want to work with. Now let us maximise it, working with its logarithm:

<center><math>\begin{matrix}
l(\theta;x) & = & \log\prod_{i=1}^n P(x_i|\theta) \\
& = & \sum_{i=1}^n \log P(x_i|\theta) \\
& = & \sum_{i=1}^n \log\left(\theta^{x_i}(1-\theta)^{1-x_i}\right) \\
& = & \sum_{i=1}^n x_i\log\theta + \sum_{i=1}^n (1-x_i)\log(1-\theta)
\end{matrix}</math></center>

Take the derivative and set it to zero:

<center><math> \frac{\partial l}{\partial\theta} = \sum_{i=1}^{n}\frac{x_i}{\theta} - \sum_{i=1}^{n}\frac{1-x_i}{1-\theta} = 0 </math></center>

<center><math> \Rightarrow \frac{\sum_{i=1}^{n}x_i}{\theta} = \frac{\sum_{i=1}^{n}(1-x_i)}{1-\theta} </math></center>

<center><math> \frac{H}{\theta} = \frac{T}{1-\theta} </math></center>

where:

* H = number of <math>x_i = 1</math>, e.g. the number of heads,
* T = number of <math>x_i = 0</math>, e.g. the number of tails,
* hence <math>T + H = n</math>.

And now we can solve for <math>\theta</math>:

<center><math>\begin{matrix}
\theta & = & \frac{(1-\theta)H}{T} \\
\theta + \theta\frac{H}{T} & = & \frac{H}{T} \\
\theta\left(\frac{T+H}{T}\right) & = & \frac{H}{T} \\
\theta & = & \frac{H/T}{n/T} = \frac{H}{n}
\end{matrix}</math></center>
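As a quick numerical check of <math>\hat{\theta}_{ML} = H/n</math>, the sketch below compares the closed-form estimate with the best value of the log likelihood over a fine grid. The sample is hypothetical (it is not the data set <math>D</math> above, which is only partly shown).

```python
import math


def bernoulli_mle(data):
    """Closed-form ML estimate: theta_hat = H / n."""
    return sum(data) / len(data)


def log_lik(theta, data):
    """l(theta; x) = H log(theta) + T log(1 - theta)."""
    h = sum(data)
    t = len(data) - h
    return h * math.log(theta) + t * math.log(1 - theta)


data = [0, 1, 1, 0, 1, 1, 0, 1, 1, 0]     # hypothetical sample: H = 6, n = 10
theta_hat = bernoulli_mle(data)
# Sanity check: the best point on a fine grid agrees with the closed-form answer.
grid = [i / 1000 for i in range(1, 1000)]
theta_grid = max(grid, key=lambda th: log_lik(th, data))
print(theta_hat, theta_grid)
```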

====Univariate Normal====

Now let us assume that the observed values come from a normal distribution. <br />

\includegraphics{images/fig4Feb6.eps}

Our new model looks like:

<center><math>P(x_i|\theta) = \frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{1}{2}\left(\frac{x_i-\mu}{\sigma}\right)^{2}} </math></center>

To find the likelihood we once again multiply the independent marginal probabilities to obtain the joint probability, which is the likelihood function:

<center><math> L(\theta;x) = \prod_{i=1}^{n}\frac{1}{\sqrt{2\pi}\sigma}e^{-\frac{1}{2}\left(\frac{x_i-\mu}{\sigma}\right)^{2}}</math></center>

<center><math> \max_{\theta}l(\theta;x) = \max_{\theta}\sum_{i=1}^{n}\left[-\frac{1}{2}\left(\frac{x_i-\mu}{\sigma}\right)^{2}-\log\left(\sqrt{2\pi}\sigma\right)\right] </math></center>

Our parameter <math>\theta</math> is in fact a pair of parameters,

<center><math>\theta = (\mu, \sigma^2)</math></center>

so we set the partial derivative of <math>l</math> with respect to each of them to zero:

<center><math>\frac{\partial l}{\partial \mu} = \sum_{i=1}^{n} \frac{x_i - \mu}{\sigma^2} = 0 \Rightarrow \hat{\mu} = \frac{1}{n}\sum_{i=1}^{n}x_i</math></center>

<center><math>\frac{\partial l}{\partial \sigma^{2}} = \frac{1}{2\sigma^4} \sum_{i=1}^{n}(x_i-\mu)^2 - \frac{n}{2\sigma^2} = 0</math></center>

<center><math> \Rightarrow \hat{\sigma}^2 = \frac{1}{n}\sum_{i=1}^{n}(x_i - \hat{\mu})^2 </math></center>
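The sketch below evaluates the two closed-form ML estimates on a hypothetical sample and checks that nudging either parameter away from its estimate lowers the log likelihood. Note the <math>1/n</math> (not <math>1/(n-1)</math>) factor in the variance estimate, as in the derivation.

```python
import math


def gaussian_mle(xs):
    """ML estimates (mu_hat, sigma2_hat); note the 1/n, not 1/(n-1), in the variance."""
    n = len(xs)
    mu = sum(xs) / n
    sigma2 = sum((x - mu) ** 2 for x in xs) / n
    return mu, sigma2


def log_lik(mu, sigma2, xs):
    """l(mu, sigma^2; x) for iid normal observations."""
    n = len(xs)
    return (-0.5 * sum((x - mu) ** 2 for x in xs) / sigma2
            - 0.5 * n * math.log(2 * math.pi * sigma2))


xs = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]   # hypothetical sample
mu_hat, s2_hat = gaussian_mle(xs)
print(mu_hat, s2_hat)   # 5.0 4.0
```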

====Bayesian====

Now we can take a look at the Bayesian approach to the same problem. Assume <math>\theta</math> is a random variable, and we want to find <math>P(\theta | x)</math>. As in the previous example, <math>\theta</math> consists of the mean and variance of a Gaussian distribution; here we focus on the mean <math>\mu</math> and treat the variance <math>\sigma^2</math> as known.

The graphical model is shown in the figure ''Graphical Model for Mean''.

[[File:fig5Feb6.png|thumb|right|Fig.XX Graphical Model for Mean]]

<center><math> P(\mu | x) = \frac{P(x|\mu)P(\mu)}{P(x)} </math></center>

We begin with the estimation of <math>\mu</math>. If we assume a uniform prior on <math>\mu</math>, the posterior is proportional to the likelihood, so its mode coincides with the ML estimate and we recover the Frequentist result. But if we assume a normal prior on <math>\mu</math>, we get an interesting result.

Assume <math>\mu</math> is normal:

<center><math>\mu \sim N(\mu_{0}, \tau^2)</math></center>

<center><math>P(x, \mu) = P(\mu)\prod_{i=1}^{n}P(x_i|\mu)</math></center>

We want to find <math>P(\mu | x)</math> and take its expectation. The posterior turns out to be normal:

<center><math>P(\mu | x) = \frac{1}{\sqrt{2\pi}\hat{\sigma}}e^{-\frac{1}{2}\left(\frac{\mu-\hat{\mu}}{\hat{\sigma}}\right)^2}</math></center>

where, writing <math>\bar{x} = \frac{1}{n}\sum_{i=1}^{n}x_i</math> for the sample mean,

<center><math>\hat{\mu} = \frac{n/\sigma^{2}}{n/\sigma^2 + 1/\tau^2}\,\bar{x} + \frac{1/\tau^2}{n/\sigma^2 + 1/\tau^2}\,\mu_0</math></center>

is a weighted combination of the sample mean and the mean of the prior. As the number of observations grows, the weight on the sample mean tends to one, so

<center><math>\hat{\mu} \rightarrow \bar{x} \quad \text{as } n \rightarrow \infty</math></center>

Note that <math> P(\mu | x)</math> is a distribution over <math>\mu</math>, not just a single value. Its variance is:

<center><math> \hat{\sigma}^{2} = \left(\frac{n}{\sigma^{2}} + \frac{1}{\tau^{2}}\right)^{-1}</math></center>
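The sketch below evaluates the posterior mean and variance formulas for a small hypothetical data set (known <math>\sigma^2 = 1</math>, prior <math>N(0, 1)</math>) and cross-checks the posterior mean by normalizing likelihood times prior numerically on a grid of <math>\mu</math> values. The grid check is only a sanity test, not part of the method.

```python
import math


def posterior_mu(xs, sigma2, mu0, tau2):
    """Posterior mean and variance of mu for N(mu, sigma2) data (sigma2 known)
    and a N(mu0, tau2) prior on mu."""
    n = len(xs)
    xbar = sum(xs) / n
    precision = n / sigma2 + 1 / tau2
    mean = ((n / sigma2) * xbar + (1 / tau2) * mu0) / precision
    return mean, 1 / precision


xs = [1.0, 2.0, 3.0]                       # hypothetical data, sample mean 2
mean, var = posterior_mu(xs, sigma2=1.0, mu0=0.0, tau2=1.0)
print(mean, var)                           # 1.5 0.25: pulled from 2 toward the prior mean 0

# Numerical check: normalise likelihood x prior on a grid of mu values.
grid = [-5 + 0.001 * i for i in range(10001)]
weights = [math.exp(-0.5 * sum((x - m) ** 2 for x in xs) - 0.5 * m ** 2) for m in grid]
grid_mean = sum(m * w for m, w in zip(grid, weights)) / sum(weights)
print(round(grid_mean, 4))
```

With more data the term <math>n/\sigma^2</math> dominates the precision, so the posterior mean moves toward the sample mean and the posterior variance shrinks.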

====ML Estimate for Completely Observed Graphical Models====

For a given graph G(V, E), each node represents a random variable. We can observe these variables and record data for each one. If, for example, the graph has m nodes, one observation is a vector <math>(x_1, x_2, \ldots, x_m)</math>. We assume that these observation vectors are independent and identically distributed. Note that within a single observation, <math>x_i</math> is not necessarily independent of <math>x_j</math>.

'''Directed Graph Example''' <br />

Consider the directed graph in the figure ''Our Directed Graph''.

[[File:DirGraphObs.png|thumb|right|Fig.XX Our Directed Graph]]

Assume that we have made n observations of each of the random variables in this graph:<br />

{| class="wikitable"
! Observation !! <math>X_1</math> !! <math>X_2</math> !! <math>X_3</math> !! <math>X_4</math>
|-
| 1 || <math>x_{11}</math> || <math>x_{12}</math> || <math>x_{13}</math> || <math>x_{14}</math>
|-
| 2 || <math>x_{21}</math> || <math>x_{22}</math> || <math>x_{23}</math> || <math>x_{24}</math>
|-
| 3 || <math>x_{31}</math> || <math>x_{32}</math> || <math>x_{33}</math> || <math>x_{34}</math>
|-
| colspan="5" | ...
|-
| n || <math>x_{n1}</math> || <math>x_{n2}</math> || <math>x_{n3}</math> || <math>x_{n4}</math>
|}

Armed with this data, we would like to estimate <math>\theta = (\theta_1, \theta_2, \theta_3, \theta_4)</math>, where <math>\theta_j</math> parameterizes the conditional distribution of <math>X_j</math> given its parents.<br />

We know from before that we can write the joint distribution as:

<center><math> P(x|\theta) = P(x_1|\theta_1)P(x_2|x_1,\theta_2)P(x_3|x_1,\theta_3)P(x_4|x_2,x_3,\theta_4) </math></center>

which means that our likelihood function is:

<center><math> L(\theta; x) = \prod_{i=1}^{n}P(x_{i1}|\theta_1)P(x_{i2}|x_{i1}, \theta_2)P(x_{i3}|x_{i1}, \theta_3)P(x_{i4}|x_{i2}, x_{i3}, \theta_4) </math></center>

and our log likelihood is:

<center><math> l(\theta; x) = \sum_{i=1}^{n}\left[\log P(x_{i1}|\theta_1)+\log P(x_{i2}|x_{i1}, \theta_2) + \log P(x_{i3}|x_{i1}, \theta_3) + \log P(x_{i4}|x_{i2}, x_{i3}, \theta_4)\right] </math></center>

To maximise the log likelihood with respect to <math>\theta</math>, we maximise it with respect to each <math>\theta_j</math>. Conveniently, each parameter appears in its own group of terms, so the maximization over each <math>\theta_j</math> can be carried out independently of the others. <br />

For discrete random variables, the ML estimate of each conditional probability is the corresponding relative frequency in the data, obtained from the definition of conditional probability. For example:

<center><math>\begin{matrix}
\hat{P}(x_2=1|x_1=1) & = & \frac{\hat{P}(x_2=1,x_1=1)}{\hat{P}(x_1=1)} \\
& = & \frac{\text{number of times } x_1 \text{ and } x_2 \text{ are both } 1}{\text{number of times } x_1 \text{ is } 1}
\end{matrix}</math></center>

Intuitively, we count the number of observations in which both variables satisfy their conditions and divide by the number of observations in which the conditioning variable <math>x_1</math> satisfies its condition. This gives the proportion of the time that <math>x_2=1</math> among the observations with <math>x_1=1</math>, which is the ML estimate of the corresponding entry of <math>\theta_2</math>. <br />
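The counting rule is easy to implement. The sketch below estimates <math>P(x_2=1|x_1)</math> and <math>P(x_4=1|x_3,x_2)</math> as relative frequencies from a small hypothetical complete data set for the four-node graph; the helper name <code>cond_freq</code> is made up for illustration. The table entries <math>\theta_{4jk}</math> below are <math>P(x_4=0|x_3=j,x_2=k)</math>, that is, one minus the values computed here.

```python
from collections import Counter

# Hypothetical complete data; each row is (x1, x2, x3, x4) with binary values.
data = [
    (1, 1, 0, 1), (1, 0, 1, 1), (0, 0, 0, 0), (1, 1, 1, 1),
    (0, 1, 0, 0), (1, 1, 0, 0), (0, 0, 1, 1), (1, 0, 0, 0),
]


def cond_freq(rows, child, parents):
    """ML estimate of P(x_child = 1 | parents) as a relative frequency.
    `child` and `parents` are 0-based column indices into each row."""
    parent_counts = Counter()
    joint_counts = Counter()
    for r in rows:
        key = tuple(r[i] for i in parents)
        parent_counts[key] += 1
        joint_counts[key] += r[child]           # counts rows with x_child = 1
    return {k: joint_counts[k] / parent_counts[k] for k in parent_counts}


# P(x2 = 1 | x1): x1 is column 0, x2 is column 1.
p_x2_given_x1 = cond_freq(data, child=1, parents=[0])
# P(x4 = 1 | x3, x2): keys are (x3, x2) pairs.
p_x4_given_x3_x2 = cond_freq(data, child=3, parents=[2, 1])
print(p_x2_given_x1)
print(p_x4_given_x3_x2)
```

A parent configuration that never occurs in the data simply has no entry here; with real data one would need to decide how to handle such empty cells.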

We can consider another example. We can try to find:

<center><math> P(x_4|x_3, x_2) </math></center>

{| class="wikitable"
! <math>x_3</math> !! <math>x_2</math> !! <math>P(x_4=0\mid x_3, x_2)</math> !! <math>P(x_4=1\mid x_3, x_2)</math>
|-
| 0 || 0 || <math>\theta_{400}</math> || <math>1 - \theta_{400}</math>
|-
| 0 || 1 || <math>\theta_{401}</math> || <math>1 - \theta_{401}</math>
|-
| 1 || 0 || <math>\theta_{410}</math> || <math>1 - \theta_{410}</math>
|-
| 1 || 1 || <math>\theta_{411}</math> || <math>1 - \theta_{411}</math>
|}

When the conditional distributions come from the exponential family (for instance, modelled as generalized linear models of a node given its parents), there is a general form for the ML equations, but in general they do not have a closed-form solution. To get around this, one can use the Iteratively Reweighted Least Squares (IRLS) method, which is an application of the Newton-Raphson method, to find these parameters.

In the case of an undirected model things get a little more complicated: the <math>\theta_i</math>s do not decouple, so they cannot be estimated separately. One way to address this uses the Kullback-Leibler (KL) divergence, a measure of how different two distributions are.

==EM Algorithm==

Let us once again consider the above example, only this time the data were not collected properly: instead of having complete data for every random variable in every observation, some data points are missing.

{| class="wikitable"
! Observation !! <math>X_1</math> !! <math>X_2</math> !! <math>X_3</math> !! <math>X_4</math>
|-
| 1 || <math>x_{11}</math> || <math>x_{12}</math> || <math>Z_{13}</math> || <math>x_{14}</math>
|-
| 2 || <math>x_{21}</math> || <math>x_{22}</math> || <math>x_{23}</math> || <math>x_{24}</math>
|-
| 3 || <math>Z_{31}</math> || <math>x_{32}</math> || <math>x_{33}</math> || <math>x_{34}</math>
|-
| 4 || <math>Z_{41}</math> || <math>x_{42}</math> || <math>x_{43}</math> || <math>Z_{44}</math>
|-
| colspan="5" | ...
|-
| n || <math>x_{n1}</math> || <math>x_{n2}</math> || <math>x_{n3}</math> || <math>x_{n4}</math>
|}

In the above table the x values represent observed data as before, and the Z values represent missing data (sometimes called latent data). The question is how to calculate the values of the parameters <math>\theta_i</math> if we do not have all the data we need. We can use the Expectation Maximization (EM) algorithm to estimate the parameters of the model even though we do not have a complete data set. <br />

One thing to note is that with missing values the likelihood function can have multiple local maxima, and as a result the EM algorithm does not always reach the global maximum; it may instead converge to one of several local maxima. Multiple runs of the EM algorithm with different starting values may therefore produce different results. <br />

Define the following types of likelihoods:<br />

complete log likelihood = <math> l_c(\theta; x, z) = \log P(x, z|\theta) </math>,<br />

incomplete log likelihood = <math> l(\theta; x) = \log P(x | \theta) </math>.

===Derivation of EM===

We can rewrite the incomplete log likelihood in terms of the complete-data distribution. The equation below is for the discrete case; for the continuous case we replace the summation with an integral.

<center><math> l(\theta; x) = \log P(x | \theta) = \log\sum_z P(x, z|\theta) </math></center>

Since z has not been observed, <math>l_c</math> is a random quantity. We can therefore define the expectation of <math>l_c</math> with respect to some arbitrary density <math>q(z|x)</math>:

<center><math> E_q[l_c(\theta; x, z)] = \sum_z q(z|x)\log P(x, z|\theta) </math></center>

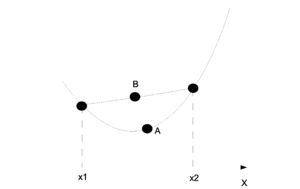

====Jensen's Inequality====

In order to derive the EM algorithm properly, we first need the following result. For any '''convex''' function f and any <math>\alpha \in [0,1]</math>:

<center><math> f(\alpha x_1 + (1-\alpha)x_2) \leq \alpha f(x_1) + (1-\alpha)f(x_2) </math></center>

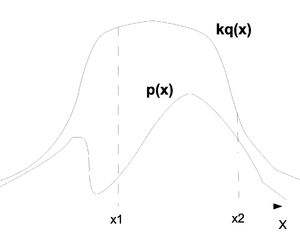

This can be shown intuitively with a graph. In the figure ''Jensen's Inequality'', point A is the value of f at the weighted average <math>\alpha x_1 + (1-\alpha)x_2</math>, and point B is the value on the chord given by the right side of the inequality. For a convex function the chord lies on or above the graph, so A is never larger than B.

[[File:JensenIneq.png|thumb|right|Fig.XX Jensen's Inequality]]

More generally, for weights <math>q(z)\geq 0</math> with <math>\sum_z q(z)=1</math>, a convex f satisfies <math>f\left(\sum_z q(z)u_z\right)\leq\sum_z q(z)f(u_z)</math>. For us it is important that the log function is '''concave''', so the inequality is reversed: <math>\log\left(\sum_z q(z)u_z\right)\geq\sum_z q(z)\log u_z</math>. This form of Jensen's inequality is used in the inequality step of the EM derivation below.

====Derivation====

<center><math>\begin{matrix}
l(\theta; x) & = & \log\sum_z P(x,z|\theta) \\
& = & \log\sum_z q(z|x) \frac{P(x,z|\theta)}{q(z|x)} \\
& \geq & \sum_z q(z|x)\log\frac{P(x,z|\theta)}{q(z|x)} \\
& = & \mathfrak{L}(q;\theta)
\end{matrix}</math></center>

The function <math>\mathfrak{L}(q;\theta)</math> is called the auxiliary function, and it is used in the EM algorithm. The EM algorithm consists of two steps, repeated one after the other, that give increasingly better estimates of <math>q(z|x)</math> and <math>\theta</math>. As the steps are repeated, the likelihood never decreases, and the parameters typically converge to a local maximum of the likelihood function.

'''E-Step'''

<center><math> q^{(t+1)} = \arg\max_{q} \mathfrak{L}(q;\theta^{(t)}) </math></center>

'''M-Step'''

<center><math> \theta^{(t+1)} = \arg\max_{\theta} \mathfrak{L}(q^{(t+1)};\theta) </math></center>

====Notes About M-Step====

<center><math>\begin{matrix}
\mathfrak{L}(q;\theta) & = & \sum_z q(z|x) \log\frac{P(x,z|\theta)}{q(z|x)} \\
& = & \sum_z q(z|x)\log P(x,z|\theta) - \underbrace{\sum_z q(z|x)\log q(z|x)}_{\text{constant with respect to }\theta} \\
& = & E_q[l_c(\theta; x, z)] + \text{constant}
\end{matrix}</math></center>

Since the second term is constant with respect to <math>\theta</math>, in the M-step we only need to maximise the expected complete log likelihood, which is the only term that depends on <math>\theta</math>.

====Notes About E-Step====

In this step we are trying to find an estimate for <math>q(z|x)</math>. To do this we have to maximise <math>\mathfrak{L}(q;\theta^{(t)})</math> with respect to <math>q</math>:

<center><math>
\mathfrak{L}(q;\theta^{(t)}) = \sum_z q(z|x) \log\left(\frac{P(x,z|\theta^{(t)})}{q(z|x)}\right)
</math></center>

It can be shown that the maximising choice is the posterior, <math>q(z|x) = P(z|x,\theta^{(t)})</math>. To check this, replace <math>q(z|x)</math> with <math>P(z|x,\theta^{(t)})</math>:

<center><math>\begin{matrix}

\mathfrak{L}(q;\theta^{(t)}) & = & \sum_z P(z|x,\theta^{(t)}) \log\left(\frac{P(x,z|\theta^{(t)})}{P(z|x,\theta^{(t)})}\right) \\

& = & \sum_z P(z|x,\theta^{(t)}) \log\left(\frac{P(z|x,\theta^{(t)})P(x|\theta^{(t)})}{P(z|x,\theta^{(t)})}\right) \\

& = & \sum_z P(z|x,\theta^{(t)}) \log(P(x|\theta^{(t)})) \\

& = & \log(P(x|\theta^{(t)})) \\

& = & l(\theta^{(t)}; x)

\end{matrix}</math></center>

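Since <math>\mathfrak{L}(q;\theta^{(t)})</math> is a lower bound on <math>l(\theta^{(t)}; x)</math> for every <math>q</math>, and this choice attains the bound, <math>P(z|x,\theta^{(t)})</math> is indeed the maximiser of <math>\mathfrak{L}</math>. The posterior depends on <math>\theta^{(t)}</math>, so it changes from one iteration to the next; however, its form only needs to be derived once, as a function of <math>\theta^{(t)}</math>, and each iteration then plugs in the current estimate before the M-step.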
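This claim can be checked numerically. The sketch below uses a toy model with a hypothetical three-valued latent variable and made-up joint probabilities <math>P(x,z|\theta^{(t)})</math>. It shows that plugging in the posterior makes the bound tight, and that for any other <math>q</math> the gap <math>l - \mathfrak{L}</math> equals the Kullback-Leibler divergence <math>KL(q \,\|\, P(z|x,\theta^{(t)}))</math>, a standard identity that the notes do not state but which explains why the posterior is the maximiser.

```python
import math

# Hypothetical values of P(x, z | theta^(t)) for one observed x and z in {0, 1, 2}.
P_XZ = [0.10, 0.05, 0.15]


def log_likelihood(p_xz):
    """l(theta^(t); x) = log sum_z P(x, z | theta^(t))."""
    return math.log(sum(p_xz))


def lower_bound(q, p_xz):
    """L(q; theta^(t)) = sum_z q(z) log(P(x, z | theta^(t)) / q(z))."""
    return sum(qz * math.log(pz / qz) for qz, pz in zip(q, p_xz) if qz > 0)


def posterior(p_xz):
    """P(z | x, theta^(t)) = P(x, z | theta^(t)) / P(x | theta^(t))."""
    total = sum(p_xz)
    return [p / total for p in p_xz]


def kl(q, p):
    """Kullback-Leibler divergence KL(q || p) for discrete distributions."""
    return sum(qz * math.log(qz / pz) for qz, pz in zip(q, p) if qz > 0)


if __name__ == "__main__":
    post = posterior(P_XZ)
    print(log_likelihood(P_XZ), lower_bound(post, P_XZ))  # equal up to rounding
    q = [0.5, 0.25, 0.25]
    gap = log_likelihood(P_XZ) - lower_bound(q, P_XZ)
    print(gap, kl(q, post))  # the gap equals KL(q || posterior)
```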

From the above results we have an alternative representation of the EM algorithm. We can reduce it to:

'''E-Step''' <br />

Find <math> E[l_c(\theta; x, z)]_{P(z|x, \theta^{(t)})} </math>, whose form only needs to be derived once, as a function of <math>\theta^{(t)}</math>. <br />

'''M-Step''' <br />

Maximise <math> E[l_c(\theta; x, z)]_{P(z|x, \theta^{(t)})} </math> with respect to <math>\theta</math> to obtain <math>\theta^{(t+1)}</math>.

The EM algorithm is probably best understood through examples.

====EM Algorithm Example====

Suppose we have two independent and identically distributed exponential random variables:

<center><math> Y_1, Y_2 \sim P(y|\theta) = \theta e^{-\theta y}, \quad y \geqslant 0 </math></center>

In our case <math>y_1 = 5</math> has been observed but <math>y_2</math> has not. Our task is to find an estimate for <math>\theta</math>. We will first solve the problem without the EM algorithm, since it is simple enough to be solvable without it. Because <math>Y_2</math> is independent of <math>Y_1</math> and unobserved, it integrates out of the likelihood, which therefore only involves <math>y_1</math>:

<center><math>\begin{matrix}

L(\theta; Data) & = & \theta e^{-5\theta} \\

l(\theta; Data) & = & \log(\theta)- 5\theta

\end{matrix}</math></center>

We take the derivative and set it equal to zero:

<center><math>\begin{matrix}

& \frac{dl}{d\theta} & = 0 \\

\Rightarrow & \frac{1}{\theta}-5 & = 0 \\

\Rightarrow & \theta & = 0.2

\end{matrix}</math></center>

Now we can try the same problem with the EM algorithm. If <math>y_2</math> were observed, the complete-data likelihood and log likelihood would be:

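<center><math>\begin{matrix}

L_c(\theta; Data) & = & \theta e^{-5\theta}\theta e^{-y_2\theta} \\

l_c(\theta; Data) & = & 2\log(\theta) - 5\theta - y_2\theta

\end{matrix}</math></center>

'''E-Step'''

Since <math>Y_2</math> is independent of <math>Y_1</math>, <math>E[y_2|y_1,\theta^{(t)}] = 1/\theta^{(t)}</math>, the mean of an exponential distribution with rate <math>\theta^{(t)}</math>. Because <math>l_c</math> is linear in <math>y_2</math>, we simply substitute this expectation for <math>y_2</math>:

<center><math> E[l_c(\theta; Data)]_{P(y_2|y_1, \theta^{(t)})} = 2\log(\theta) - 5\theta - \frac{\theta}{\theta^{(t)}}</math></center>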
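The closed-form expectation can be sanity-checked by simulation. The sketch below draws <math>y_2</math> from an exponential distribution with rate <math>\theta^{(t)}</math> (Python's <code>random.expovariate</code> takes the rate as its argument) and averages the complete log likelihood. The function names and the chosen values <math>\theta = 0.3</math>, <math>\theta^{(t)} = 0.5</math> are arbitrary illustrations.

```python
import math
import random

Y1 = 5.0  # the observed value y_1


def expected_lc_closed_form(theta, theta_t):
    """E[l_c] = 2 log(theta) - 5 theta - theta / theta_t, using E[y_2] = 1/theta_t."""
    return 2 * math.log(theta) - Y1 * theta - theta / theta_t


def expected_lc_monte_carlo(theta, theta_t, n=200_000, seed=0):
    """Average l_c(theta; y_1, y_2) = 2 log(theta) - (y_1 + y_2) theta over sampled y_2."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        y2 = rng.expovariate(theta_t)  # exponential with rate theta_t, mean 1/theta_t
        total += 2 * math.log(theta) - (Y1 + y2) * theta
    return total / n


if __name__ == "__main__":
    theta, theta_t = 0.3, 0.5
    print(expected_lc_closed_form(theta, theta_t))
    print(expected_lc_monte_carlo(theta, theta_t))  # close to the line above
```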

'''M-Step'''

<center><math>\begin{matrix}

& \frac{d}{d\theta}E[l_c] & = 0 \\

\Rightarrow & \frac{2}{\theta}-5 - \frac{1}{\theta^{(t)}} & = 0 \\

\Rightarrow & \theta^{(t+1)} & = \frac{2\theta^{(t)}}{5\theta^{(t)}+1}

\end{matrix}</math></center>

Now we pick an initial value for <math>\theta</math>. Usually we want to pick something reasonable, but in this case it does not matter much, so we pick <math>\theta^{(1)} = 10</math>. We then apply the update repeatedly (each application performs both the E-step and the M-step) until the value converges.

<center><math>\begin{matrix}

\theta^{(1)} & = & 10 \\

\theta^{(2)} & = & 0.3922 \\

\theta^{(3)} & = & 0.2649 \\

\vdots & & \\

\theta^{(k)} & \simeq & 0.2

\end{matrix}</math></center>
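The iteration is easy to reproduce. The following sketch applies the combined E/M update <math>\theta^{(t+1)} = 2\theta^{(t)}/(5\theta^{(t)}+1)</math> starting from <math>\theta^{(1)} = 10</math>; the function names, the tolerance and the iteration cap are arbitrary choices, not part of the original example.

```python
def em_update(theta_t, y1=5.0):
    """One EM iteration for the exponential example: 2 theta_t / (y1 theta_t + 1)."""
    return 2 * theta_t / (y1 * theta_t + 1)


def run_em(theta0=10.0, tol=1e-10, max_iter=1000):
    """Iterate the update from theta0 until successive values differ by < tol."""
    history = [theta0]
    theta = theta0
    for _ in range(max_iter):
        new_theta = em_update(theta)
        history.append(new_theta)
        if abs(new_theta - theta) < tol:
            break
        theta = new_theta
    return history


if __name__ == "__main__":
    history = run_em()
    for t, value in enumerate(history[:4], start=1):
        print(f"theta^({t}) = {value:.4f}")
    print(f"converged to {history[-1]:.6f} after {len(history) - 1} updates")
```

The second and third values are exactly <math>20/51</math> and <math>40/151</math>. Near the fixed point the update has slope <math>2/(5\theta+1)^2 = 0.5</math>, so the error roughly halves at each iteration.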

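As we can see, after a number of steps the value converges to the correct answer of 0.2: setting <math>\theta^{(t+1)} = \theta^{(t)} = \theta</math> in the update gives <math>5\theta + 1 = 2</math>, i.e. <math>\theta = 0.2</math>. In the next section we will discuss a more complex model where it would be difficult to solve the problem without the EM algorithm.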

===Mixture Models===

In this section we consider data in which each observation is drawn from one of several component distributions, with the component itself chosen at random: sometimes a data point is sampled from one distribution and sometimes from another.

====Mixture of Gaussians====

Given <math>P(x|\theta) = \alpha N(x;\mu_1,\sigma_1) + (1-\alpha)N(x;\mu_2,\sigma_2)</math>, we sample the data <math>Data = \{x_1,x_2,\dots,x_n\} </math>, where <math>x_1,x_2,\dots,x_n</math> are iid from <math>P(x|\theta)</math>.<br />

We would like to find:

<center><math>\theta = \{\alpha,\mu_1,\sigma_1,\mu_2,\sigma_2\} </math></center>

We have no missing data here, so we can try to find the parameter estimates directly using maximum likelihood (ML).

<center><math> L(\theta; Data) = \prod_{i=1}^n \left(\alpha N(x_i; \mu_1, \sigma_1) + (1 - \alpha) N(x_i; \mu_2, \sigma_2)\right) </math></center>

We would then take the log to find <math>l(\theta; Data)</math>, take the derivative with respect to each parameter, and set each derivative equal to zero. This is a lot of work: each term of the log likelihood is the log of a sum of two Gaussians, which does not simplify, so the resulting equations for the 5 parameters are coupled and have no closed-form solution. <br />

It is actually easier to apply the EM algorithm. The catch is that EM is designed for problems with missing data, and here we have observed all of our data. The solution is to introduce a latent variable <math>z_i</math> that records which component generated <math>x_i</math>. We are deliberately introducing missing data to make the computation easier.

<center><math> z_i = 1 \text{ with prob. } \alpha </math></center>

<center><math> z_i = 0 \text{ with prob. } (1-\alpha) </math></center>

Now we have a data set that includes our latent variable <math>z_i</math>:

<center><math> Data = \{(x_1,z_1),(x_2,z_2),\dots,(x_n,z_n)\} </math></center>

We can calculate the joint pdf by:

<center><math> P(x_i,z_i|\theta)=P(x_i|z_i,\theta)P(z_i|\theta) </math></center>

Let

<center><math> P(x_i|z_i,\theta)= \begin{cases} \phi_1(x_i)=N(x_i;\mu_1,\sigma_1) & \text{if } z_i = 1 \\ \phi_2(x_i)=N(x_i;\mu_2,\sigma_2) & \text{if } z_i = 0 \end{cases} </math></center>

Now we can write

<center><math> P(x_i|z_i,\theta)=\phi_1(x_i)^{z_i} \phi_2(x_i)^{1-z_i} </math></center>

and

<center><math> P(z_i|\theta)=\alpha^{z_i}(1-\alpha)^{1-z_i} </math></center>

We can write the joint pdf as:

<center><math> P(x_i,z_i|\theta)=\phi_1(x_i)^{z_i}\phi_2(x_i)^{1-z_i}\alpha^{z_i}(1-\alpha)^{1-z_i} </math></center>

From the joint pdf we get the complete-data likelihood:

<center><math> L_c(\theta;D)=\prod_{i=1}^n \phi_1(x_i)^{z_i}\phi_2(x_i)^{1-z_i}\alpha^{z_i}(1-\alpha)^{1-z_i} </math></center>

Taking the log gives the complete log likelihood:

<center><math> l_c(\theta;D)=\sum_{i=1}^n \left[ z_i \log\phi_1(x_i) + (1-z_i)\log\phi_2(x_i) + z_i\log\alpha + (1-z_i)\log(1-\alpha) \right] </math></center>

In the E-step we need to find the expectation of <math>l_c</math> with respect to <math>P(z|x,\theta^{(t)})</math>. Since <math>l_c</math> is linear in each <math>z_i</math>, this amounts to replacing each <math>z_i</math> by <math>E[z_i]</math>:

<center><math> E[l_c(\theta;D)] = \sum_{i=1}^n \left[ E[z_i]\log\phi_1(x_i)+(1-E[z_i])\log\phi_2(x_i)+E[z_i]\log\alpha+(1-E[z_i])\log(1-\alpha) \right] </math></center>

For now we assume that <math>\langle z_i \rangle = E[z_i]</math> is known and denote it by <math>\langle z_i \rangle = w_i</math>.<br />

In the M-step we update the parameters, holding the expectations <math>w_i</math> fixed:

<center><math> \theta^{(t+1)} \leftarrow \arg\max_{\theta} E[l_c(\theta;D)] </math></center>

Taking partial derivatives of the expected complete log likelihood with respect to the parameters and setting them equal to zero, we get the parameter estimates at step <math>t+1</math>:

<center><math>\begin{matrix}

\frac{\partial}{\partial\alpha} = 0 \Rightarrow & \sum_{i=1}^n \left(\frac{w_i}{\alpha}-\frac{1-w_i}{1-\alpha}\right) = 0 & \Rightarrow \alpha=\frac{\sum_{i=1}^n w_i}{n} \\

\frac{\partial}{\partial\mu_1} = 0 \Rightarrow & \sum_{i=1}^n w_i(x_i-\mu_1)=0 & \Rightarrow \mu_1=\frac{\sum_{i=1}^n w_ix_i}{\sum_{i=1}^n w_i} \\

\frac{\partial}{\partial\mu_2}=0 \Rightarrow & \sum_{i=1}^n (1-w_i)(x_i-\mu_2)=0 & \Rightarrow \mu_2=\frac{\sum_{i=1}^n (1-w_i)x_i}{\sum_{i=1}^n (1-w_i)} \\

\frac{\partial}{\partial\sigma_1^2} = 0 \Rightarrow & \sum_{i=1}^n w_i\left(-\frac{1}{2\sigma_1^{2}}+\frac{(x_i-\mu_1)^2}{2\sigma_1^4}\right)=0 & \Rightarrow \sigma_1^2=\frac{\sum_{i=1}^n w_i(x_i-\mu_1)^2}{\sum_{i=1}^n w_i} \\

\frac{\partial}{\partial\sigma_2^2} = 0 \Rightarrow & \sum_{i=1}^n (1-w_i)\left(-\frac{1}{2\sigma_2^{2}}+\frac{(x_i-\mu_2)^2}{2\sigma_2^4}\right)=0 & \Rightarrow \sigma_2^2=\frac{\sum_{i=1}^n (1-w_i)(x_i-\mu_2)^2}{\sum_{i=1}^n (1-w_i)}

\end{matrix}</math></center>
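The M-step updates translate directly into code. In this sketch (the function name <code>m_step</code> is hypothetical) the variance updates give <math>\sigma_1^2</math> and <math>\sigma_2^2</math>, so the square root is taken to report standard deviations, matching the <math>N(x;\mu,\sigma)</math> parametrisation used in these notes. It assumes that both <math>\sum_i w_i</math> and <math>\sum_i (1-w_i)</math> are positive.

```python
import math


def m_step(xs, ws):
    """Weighted ML updates for a two-component 1-D Gaussian mixture.

    xs: observations x_i; ws: expectations w_i = E[z_i] (weight on component 1).
    Returns (alpha, mu1, sigma1, mu2, sigma2) with sigmas as standard deviations.
    """
    n = len(xs)
    n1 = sum(ws)  # sum_i w_i
    n2 = n - n1   # sum_i (1 - w_i)
    alpha = n1 / n
    mu1 = sum(w * x for w, x in zip(ws, xs)) / n1
    mu2 = sum((1 - w) * x for w, x in zip(ws, xs)) / n2
    var1 = sum(w * (x - mu1) ** 2 for w, x in zip(ws, xs)) / n1
    var2 = sum((1 - w) * (x - mu2) ** 2 for w, x in zip(ws, xs)) / n2
    return alpha, mu1, math.sqrt(var1), mu2, math.sqrt(var2)


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
    ws = [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]  # hard assignments, for illustration only
    print(m_step(xs, ws))
```

With hard 0/1 weights, as in the usage example, the updates reduce to the ML mean and standard deviation of each group separately.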

These estimates make sense: they are weighted versions of the usual ML estimates for a single Gaussian, and with all <math>w_i = 1</math> the updates for <math>\mu_1</math> and <math>\sigma_1^2</math> reduce to the ML sample mean and variance. But we are not done yet: we still need to compute <math>\langle z_i \rangle = w_i</math> in the E-step.

<center><math>\begin{matrix}

\langle z_i \rangle & = & E_{z_i|x_i,\theta^{(t)}}(z_i) \\

& = & \sum_{z_i \in \{0,1\}} z_i P(z_i|x_i,\theta^{(t)}) \\

& = & 1\times P(z_i=1|x_i,\theta^{(t)}) + 0\times P(z_i=0|x_i,\theta^{(t)}) \\

& = & P(z_i=1|x_i,\theta^{(t)}) \\

P(z_i=1|x_i,\theta^{(t)}) & = & \frac{P(z_i=1,x_i|\theta^{(t)})}{P(x_i|\theta^{(t)})} \\

& = & \frac {P(z_i=1,x_i|\theta^{(t)})}{P(z_i=1,x_i|\theta^{(t)}) + P(z_i=0,x_i|\theta^{(t)})} \\

& = & \frac{\alpha^{(t)}N(x_i;\mu_1^{(t)},\sigma_1^{(t)}) }{\alpha^{(t)}N(x_i;\mu_1^{(t)},\sigma_1^{(t)}) +(1-\alpha^{(t)})N(x_i;\mu_2^{(t)},\sigma_2^{(t)})}

\end{matrix}</math></center>

Combining these two results gives the E-step update:

<center><math>w_i = E[z_i] =\frac{\alpha^{(t)}N(x_i;\mu_1^{(t)},\sigma_1^{(t)}) }{\alpha^{(t)}N(x_i;\mu_1^{(t)},\sigma_1^{(t)}) +(1-\alpha^{(t)})N(x_i;\mu_2^{(t)},\sigma_2^{(t)})} </math></center>

By alternating this E-step with the M-step updates for the parameters, we can evaluate the parameters at <math>t+2, t+3, \dots</math> until they converge, which gives our estimate for each of the parameters.
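Putting the two steps together gives a complete, if minimal, EM loop for the two-component mixture. This is a sketch under stated assumptions: a fixed number of iterations instead of a convergence test, a hand-picked starting point, synthetic data generated with hypothetical parameters (30% from <math>N(0,1)</math>, 70% from <math>N(5,1)</math>), and no protection against a component collapsing onto a single point. It also records the observed-data log likelihood <math>l(\theta; Data)</math> after every iteration, which EM guarantees will never decrease.

```python
import math
import random


def normal_pdf(x, mu, sigma):
    """Density of N(x; mu, sigma) with sigma a standard deviation."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def e_step(xs, alpha, mu1, s1, mu2, s2):
    """w_i = alpha N(x_i; mu1, s1) / (alpha N(x_i; mu1, s1) + (1 - alpha) N(x_i; mu2, s2))."""
    ws = []
    for x in xs:
        a = alpha * normal_pdf(x, mu1, s1)
        b = (1 - alpha) * normal_pdf(x, mu2, s2)
        ws.append(a / (a + b))
    return ws


def m_step(xs, ws):
    """Weighted ML updates; variances are converted to standard deviations."""
    n, n1 = len(xs), sum(ws)
    n2 = n - n1
    mu1 = sum(w * x for w, x in zip(ws, xs)) / n1
    mu2 = sum((1 - w) * x for w, x in zip(ws, xs)) / n2
    var1 = sum(w * (x - mu1) ** 2 for w, x in zip(ws, xs)) / n1
    var2 = sum((1 - w) * (x - mu2) ** 2 for w, x in zip(ws, xs)) / n2
    return n1 / n, mu1, math.sqrt(var1), mu2, math.sqrt(var2)


def log_likelihood(xs, alpha, mu1, s1, mu2, s2):
    """Observed-data log likelihood l(theta; Data) of the mixture."""
    return sum(
        math.log(alpha * normal_pdf(x, mu1, s1) + (1 - alpha) * normal_pdf(x, mu2, s2))
        for x in xs
    )


def em_gmm(xs, params, n_iter=100):
    """Alternate E- and M-steps; return final params and the log-likelihood trace."""
    trace = [log_likelihood(xs, *params)]
    for _ in range(n_iter):
        ws = e_step(xs, *params)
        params = m_step(xs, ws)
        trace.append(log_likelihood(xs, *params))
    return params, trace


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic data from hypothetical parameters: 300 points from N(0,1), 700 from N(5,1).
    xs = [rng.gauss(0, 1) for _ in range(300)] + [rng.gauss(5, 1) for _ in range(700)]
    params, trace = em_gmm(xs, params=(0.5, -1.0, 1.0, 6.0, 1.0))
    print("alpha, mu1, sigma1, mu2, sigma2 =", [round(p, 3) for p in params])
```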

The mixture model can be summarized as follows:

* In each step, a state is selected according to <math>p(z)</math>.

* Given a state, a data vector is drawn from <math>p(x|z)</math>.

* The state selected at each step is independent of the previous state.

A good illustration of a mixture model is the following example with two coins. Assume that there are two different coins, neither of which is fair, with the probabilities for each coin shown in the table. <br />

{| class="wikitable"
|-
! !! H !! T
|-
| coin1 || 0.3 || 0.7
|-
| coin2 || 0.1 || 0.9
|}

We choose one coin at random and toss it in the air to see the outcome. Then we place the coin back in the pocket with the other one and once again select one coin at random to toss. The resulting sequence of outcomes, such as HHTH...HTTHT, is generated by a mixture model. The probability of heads on each toss depends on which coin was used and on the probability with which we select each coin. For example, if we were to select coin1 most of the time, we would see more heads than if we were to choose coin2 most of the time, since coin1 lands heads with probability 0.3 versus 0.1 for coin2.
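The two-coin process can be simulated directly. The sketch below uses the head probabilities from the table; the probability <code>p_coin1</code> of picking coin1 is a hypothetical parameter, since the notes only say that a coin is chosen at random. Under this model the long-run fraction of heads is <math>p \cdot 0.3 + (1-p) \cdot 0.1</math>, the mixture of the two coins' head probabilities.

```python
import random

# Probability of heads for each coin, taken from the table.
P_HEADS = {"coin1": 0.3, "coin2": 0.1}


def toss_sequence(n, p_coin1=0.5, seed=0):
    """Simulate n tosses: pick a coin (coin1 with prob. p_coin1), toss it, put it back."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n):
        coin = "coin1" if rng.random() < p_coin1 else "coin2"  # hidden choice z
        outcomes.append("H" if rng.random() < P_HEADS[coin] else "T")  # observed outcome
    return "".join(outcomes)


def heads_fraction(seq):
    """Fraction of tosses in the sequence that came up heads."""
    return seq.count("H") / len(seq)


if __name__ == "__main__":
    print(toss_sequence(20))
    for p in (0.9, 0.5, 0.1):
        frac = heads_fraction(toss_sequence(100_000, p_coin1=p))
        print(f"p_coin1={p}: heads fraction {frac:.3f}, expected {p * 0.3 + (1 - p) * 0.1:.3f}")
```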

==Hidden Markov Models==

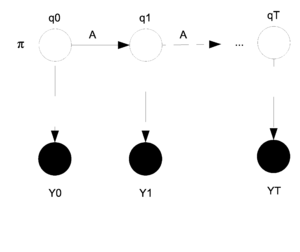

In a Hidden Markov Model (HMM) we have two layers of random variables. The first is called the hidden layer because the random variables in it cannot be observed. The second is the observed, or output, layer. We can sample from the output layer but not from the hidden layer; the only thing we know about the hidden layer is that it affects the output layer. The HMM can be drawn as a graphical model, as shown in the Hidden Markov Model figure.

[[File:HMM.png|thumb|right|Fig.XX Hidden Markov Model]]

In the model, the <math>q_i</math>s form the hidden layer and the <math>y_i</math>s form the output layer. The <math>y_i</math>s are shaded because they have been observed. The parameters that need to be estimated are <math> \theta = (\pi, A, \eta)</math>, where <math>\pi</math> is the distribution of the starting state <math>q_0</math>, so that <math>\pi_i</math> is the probability that <math>q_0</math> is in state <math>i</math>. The matrix <math>A</math> is the transition matrix between the states <math>q_t</math> and <math>q_{t+1}</math>; it gives the probability of changing states as we move from one step to the next. Finally, <math>\eta</math> gives the emission probabilities: the probability that <math>y_i</math> takes the value <math>y^*</math> given that <math>q_i</math> is in state <math>q^*</math>. <br />
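To make the roles of <math>\pi</math>, <math>A</math> and <math>\eta</math> concrete, the sketch below samples a hidden state sequence and an output sequence from an HMM. It assumes discrete states and discrete outputs, with <code>A[i][j]</code> the probability of moving from state i to state j and <code>eta[i][k]</code> the probability of emitting output k in state i; the function name and the numeric values are hypothetical.

```python
import random


def sample_hmm(pi, A, eta, T, seed=0):
    """Sample hidden states q_0..q_{T-1} and outputs y_0..y_{T-1} from an HMM.

    pi[i]     = P(q_0 = i)
    A[i][j]   = P(q_{t+1} = j | q_t = i)
    eta[i][k] = P(y_t = k | q_t = i)
    """
    rng = random.Random(seed)

    def draw(probs):
        # Draw an index according to the given probability vector.
        return rng.choices(range(len(probs)), weights=probs)[0]

    q = draw(pi)
    states, outputs = [q], [draw(eta[q])]
    for _ in range(1, T):
        q = draw(A[q])                # hidden layer: Markov transition
        states.append(q)
        outputs.append(draw(eta[q]))  # output layer: depends only on the current state
    return states, outputs


if __name__ == "__main__":
    pi = [0.6, 0.4]
    A = [[0.7, 0.3], [0.4, 0.6]]
    eta = [[0.9, 0.1], [0.2, 0.8]]
    states, outputs = sample_hmm(pi, A, eta, T=10)
    print("hidden q:", states)
    print("observed y:", outputs)
```

Only <code>outputs</code> would be available in practice; estimating <math>\theta = (\pi, A, \eta)</math> from them is the missing-data problem that EM addresses.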