stat841f11: Difference between revisions

| Line 560: | Line 560: | ||

</pre> | </pre> | ||

Using the ''plotdigits'' function in Matlab, clearly illustrates that the first PC captured the differences between the numbers ''2'' and ''3'' as they are projected onto different regions of the axis for the first PC. Also, the second PC captured the ''tilt'' of the handwritten numbers as numbers tilted to the left or right were projected onto different regions of the axis for the second PC. | Using the ''plotdigits'' function in Matlab, clearly illustrates that the first PC captured the differences between the numbers ''2'' and ''3'' as they are projected onto different regions of the axis for the first PC. Also, the second PC captured the ''tilt'' of the handwritten numbers as numbers tilted to the left or right were projected onto different regions of the axis for the second PC. | ||

==== Example 3 ==== | |||

(Not discussed in class - just a different way to think about it.) In the news recently was a story that captures some of the ideas behind PCA. Over the past two years, Scott Golder and Michael Macy, researchers from Cornell University, collected 509 million Twitter messages from 2.4 million users in 84 different countries. The data they used were words collected at various times of day and they classified the data into two different categories: positive emotion words and negative emotion words. Then, they were able to study this new data to evaluate subject's moods at different times of day, while the subjects were in different parts of the world. Using this data, they found that subjects are generally exhibit positive emotions in the mornings and late evenings, and negative emotions mid-day. So they were able to "project their data onto a smaller dimensional space". Their paper, "Diurnal and Seasonal Mood Vary with Work, Sleep, and Daylength Across Diverse Cultures," is available in the journal Science next Thursday. [13] Granted, this is not a true representation of PCA, but it is interesting that they are able to use these ideas for applications in many disciplines. | |||

=== Observations === | === Observations === | ||

Revision as of 19:56, 30 September 2011

Proposal for Final Project

Editor Sign Up

STAT 441/841 / CM 463/763 - Tuesday, 2011/09/20

Wiki Course Notes

Students will need to contribute to the wiki for 20% of their grade. Access via wikicoursenote.com Go to editor sign-up, and use your UW userid for your account name, and use your UW email.

primary (10%) Post a draft of lecture notes within 48 hours. You will need to do this 1 or 2 times, depending on class size.

secondary (10%) Make improvements to the notes for at least 60% of the lectures. More than half of your contributions should be technical rather than editorial. There will be a spreadsheet where students can indicate what they've done and when. The instructor will conduct random spot checks to ensure that students have contributed what they claim.

Classification (Lecture: Sep. 20, 2011)

Definitions

classification: Predict a discrete random variable [math]\displaystyle{ Y }[/math] (a label) by using another random variable [math]\displaystyle{ X }[/math] (new data point) picked iid from a distribution

[math]\displaystyle{ X_i = (X_{i1}, X_{i2}, ... X_{id}) \in \mathcal{X} \subset \mathbb{R}^d }[/math] ([math]\displaystyle{ d }[/math]-dimensional vector) [math]\displaystyle{ Y_i }[/math] in some finite set [math]\displaystyle{ \mathcal{Y} }[/math]

classification rule:

[math]\displaystyle{ h : \mathcal{X} \rightarrow \mathcal{Y} }[/math]

Take new observation [math]\displaystyle{ X }[/math] and use a classification function [math]\displaystyle{ h(x) }[/math] to generate a label [math]\displaystyle{ Y }[/math]. In other words, If we fit the function [math]\displaystyle{ h(x) }[/math] with a random variable [math]\displaystyle{ X }[/math], it generates the label [math]\displaystyle{ Y }[/math] which is the class to which we predict [math]\displaystyle{ X }[/math] belongs.

Example: Let [math]\displaystyle{ \mathcal{X} }[/math] be a set of 2D images and [math]\displaystyle{ \mathcal{Y} }[/math] be a finite set of people. We want to learn a classification rule [math]\displaystyle{ h:\mathcal{X}\rightarrow\mathcal{Y} }[/math] that with small true error predicts the person who appears in the image.

true error rate for classifier [math]\displaystyle{ h }[/math] is the error with respect to the underlying distribution (that we do not know).

[math]\displaystyle{ L(h) = P(h(X) \neq Y ) }[/math]

empirical error rate (or training error rate) is the amount of error that our classification function [math]\displaystyle{ h(x) }[/math] makes on the training data.

[math]\displaystyle{ \hat{L}_n(h) = (1/n) \sum_{i=1}^{n} \mathbf{I}(h(X_i) \neq Y_i) }[/math]

where [math]\displaystyle{ \mathbf{I}() }[/math] is an indicator function. Indicator function is defined by

[math]\displaystyle{ \mathbf{I}(x) = \begin{cases} 1 & \text{if } x \text{ is true} \\ 0 & \text{if } x \text{ is false} \end{cases} }[/math]

So in this case [math]\displaystyle{ \mathbf{I}(h(X_i)\neq Y_i) = 1 }[/math] when [math]\displaystyle{ h(X_i)\neq Y_i }[/math] i.e. when misclassifications happens.

e.g., 100 new data points with known (true) labels

[math]\displaystyle{ y_1 = h(x_1) }[/math]

...

[math]\displaystyle{ y_{100} = h(x_{100}) }[/math]

To calculate the empirical error we count how many labels our function [math]\displaystyle{ h(x) }[/math] assigned incorrectly and divide by n=100

Bayes Classifier

The principle of Bayes Classifier is to calculate the posterior probability of a given object from its prior probability via Bayes formula, and then place the object in the class with the largest posterior probability<ref> http://www.wikicoursenote.com/wiki/Stat841#Bayes_Classifier </ref>.

First recall Bayes' Rule, in the format [math]\displaystyle{ P(Y|X) = \frac{P(X|Y) P(Y)} {P(X)} }[/math]

P(Y|X) : posterior , probability of [math]\displaystyle{ Y }[/math] given [math]\displaystyle{ X }[/math]

P(X|Y) : likelihood, probability of [math]\displaystyle{ X }[/math] being generated by [math]\displaystyle{ Y }[/math]

P(Y) : prior, probability of [math]\displaystyle{ Y }[/math] being selected

P(X) : marginal, probability of obtaining [math]\displaystyle{ X }[/math]

We will start with the simplest case: [math]\displaystyle{ \mathcal{Y} = \{0,1\} }[/math]

[math]\displaystyle{ r(x) = P(Y=1|X=x) = \frac{P(X=x|Y=1) P(Y=1)} {P(X=x)} = \frac{P(X=x|Y=1) P(Y=1)} {P(X=x|Y=1) P(Y=1) + P(X=x|Y=0) P(Y=0)} }[/math]

Bayes' rule can be approached by computing either:

1) The posterior: [math]\displaystyle{ \ P(Y=1|X=x) }[/math] and [math]\displaystyle{ \ P(Y=0|X=x) }[/math] or

2) The likelihood: [math]\displaystyle{ \ P(X=x|Y=1) }[/math] and [math]\displaystyle{ \ P(X=x|Y=0) }[/math]

The former reflects a Bayesian approach. The Bayesian approach uses previous beliefs and observed data (e.g., the random variable [math]\displaystyle{ \ X }[/math]) to determine the probability distribution of the parameter of interest (e.g., the random variable [math]\displaystyle{ \ Y }[/math]). The probability, according to Bayesians, is a degree of belief in the parameter of interest taking on a particular value (e.g., [math]\displaystyle{ \ Y=1 }[/math]), given a particular observation (e.g., [math]\displaystyle{ \ X=x }[/math]). Historically, the difficulty in this approach lies with determining the posterior distribution, however, more recent methods such as Markov Chain Monte Carlo (MCMC) allow the Bayesian approach to be implemented <ref name="PCAustin">P. C. Austin, C. D. Naylor, and J. V. Tu, "A comparison of a Bayesian vs. a frequentist method for profiling hospital performance," Journal of Evaluation in Clinical Practice, 2001</ref>.

The latter reflects a Frequentist approach. The Frequentist approach assumes that the probability distribution, including the mean, variance, etc., is fixed for the parameter of interest (e.g., the variable [math]\displaystyle{ \ Y }[/math], which is not random). The observed data (e.g., the random variable [math]\displaystyle{ \ X }[/math]) is simply a sampling of a far larger population of possible observations. Thus, a certain repeatability or frequency is expected in the observed data. If it were possible to make an infinite number of observations, then the true probability distribution of the parameter of interest can be found. In general, frequentists use a technique called hypothesis testing to compare a null hypothesis (e.g. an assumption that the mean of the probability distribution is [math]\displaystyle{ \ \mu_0 }[/math]) to an alternative hypothesis (e.g. assuming that the mean of the probability distribution is larger than [math]\displaystyle{ \ \mu_0 }[/math]) <ref name="PCAustin"/>. For more information on hypothesis testing see <ref>R. Levy, "Frequency hypothesis testing, and contingency tables" class notes for LING251, Department of Linguistics, University of California, 2007. Available: http://idiom.ucsd.edu/~rlevy/lign251/fall2007/lecture_8.pdf </ref>.

There was some class discussion on which approach should be used. Both the ease of computation and the validity of both approaches were discussed. A main point that was brought up in class is that Frequentists consider X to be a random variable, but they do not consider Y to be a random variable because it has to take on one of the values from a fixed set (in the above case it would be either 0 or 1 and there is only one correct label for a given value X=x). Thus, from a Frequentist's perspective it does not make sense to talk about the probability of Y. This is actually a grey area and sometimes Bayesians and Frequentists use each others' approaches. So using Bayes' rule doesn't necessarily mean you're a Bayesian. Overall, the question remains unresolved.

The Bayes Classifier uses [math]\displaystyle{ \ P(Y=1|X=x) }[/math]

[math]\displaystyle{ P(Y=1|X=x) = \frac{P(X=x|Y=1) P(Y=1)} {P(X=x|Y=1) P(Y=1) + P(X=x|Y=0) P(Y=0)} }[/math]

P(Y=1) : the prior, based on belief/evidence beforehand

denominator : marginalized by summation

[math]\displaystyle{ h(x) = \begin{cases} 1 \ \ \hat{r}(x) \gt 1/2 \\ 0 \ \ otherwise \end{cases} }[/math]

The set [math]\displaystyle{ \mathcal{D}(h) = \{ x : P(Y=1|X=x) = P(Y=0|X=x)... \} }[/math]

which defines a decision boundary.

Theorem: Bayes rule is optimal. I.e., if h is any other classification rule,

then [math]\displaystyle{ L(h^*) \lt = L(h) }[/math]

(This is to be proved in homework.)

Why then do we need other classfication methods? A: Because X densities are often/typically unknown. I.e., [math]\displaystyle{ f_k(x) }[/math] and/or [math]\displaystyle{ \pi_k }[/math] unknown.

[math]\displaystyle{ P(Y=k|X=x) = \frac{P(X=x|Y=k)P(Y=k)} {P(X=x)} = \frac{f_k(x) \pi_k} {\sum_k f_k(x) \pi_k} }[/math] f_k(x) is referred to as the class conditional distribution (~likelihood).

Therefore, we rely on some data to estimate quantities.

Three Main Approaches

1. Empirical Risk Minimization: Choose a set of classifiers H (e.g., line, neural network) and find [math]\displaystyle{ h^* \in H }[/math] that minimizes (some estimate of) L(h).

2. Regression: Find an estimate ([math]\displaystyle{ \hat{r} }[/math]) of function [math]\displaystyle{ r }[/math] and define [math]\displaystyle{ h(x) = \begin{cases} 1 \ \ \hat{r}(x) \gt 1/2 \\ 0 \ \ otherwise \end{cases} }[/math]

The [math]\displaystyle{ 1/2 }[/math] in the expression above is a threshold set for the regression prediction output.

In general regression refers to finding a continuous, real valued y. The problem here is more difficult, because of the restricted domain (y is a set of discrete label values).

3. Density Estimation: Estimate [math]\displaystyle{ P(X=x|Y=0) }[/math] from [math]\displaystyle{ X_i }[/math]'s for which [math]\displaystyle{ Y_i = 0 }[/math] Estimate [math]\displaystyle{ P(X=x|Y=1) }[/math] from [math]\displaystyle{ X_i }[/math]'s for which [math]\displaystyle{ Y_i = 1 }[/math] and let [math]\displaystyle{ P(Y=?) = (1/n) \sum_{i=1}^{n} Y_i }[/math]

Define [math]\displaystyle{ \hat{r}(x) = \hat{P}(Y=1|X=x) }[/math] and [math]\displaystyle{ h(x) = \begin{cases} 1 \ \ \hat{r}(x) \gt 1/2 \\ 0 \ \ otherwise \end{cases} }[/math]

It is possible that there may not be enough data to estimate from for density estimation. But the main problem lies with high dimensional spaces, as the estimation results may not be good (high error rate) and sometimes even infeasible. The term curse of dimensionality was coined by Bellman <ref>R. E. Bellman, Dynamic Programming. Princeton University Press, 1957</ref> to describe this problem.

As the dimension of the space goes up, the learning requirements go up exponentially.

To Learn more about methods for handling high-dimensional data <ref> https://docs.google.com/viewer?url=http%3A%2F%2Fwww.bios.unc.edu%2F~dzeng%2FBIOS740%2Flecture_notes.pdf</ref>

Multi-Class Classification

Generalize to case Y takes on k>2 values.

Theorem: [math]\displaystyle{ Y \in \mathcal{Y} = {1,2,..., k} }[/math] optimal rule

[math]\displaystyle{ h*(x) = argmax_k P }[/math]

where [math]\displaystyle{ P(Y=k|X=x) = \frac{f_k(x) \pi_k} {\sum_r f_r \pi_r} }[/math]

LDA and QDA

Discriminant function analysis finds features that best allow discrimination between two or more classes. The approach is similar to analysis of Variance (ANOVA) in that discriminant function analysis looks at the mean values to determine if two or more classes are very different and should be separated. Once the discriminant functions (that separate two or more classes) have been determined, new data points can be classified (i.e. placed in one of the classes) based on the discriminant functions <ref> StatSoft, Inc. (2011). Electronic Statistics Textbook. [Online]. Available: http://www.statsoft.com/textbook/discriminant-function-analysis/. </ref>. Linear discriminant analysis (LDA) and Quadratic discriminant analysis (QDA) are methods of discriminant analysis that are best applied to linearly and quadradically separable classes, respectively. Fisher discriminant analysis (FDA) another method of discriminant analysis that is different from linear discriminant analysis, but oftentimes both terms are used interchangeably.

LDA

The simplest method is to use approach 3 (above) and assume a parametric model for densities. Assume class conditional is Gaussian.

[math]\displaystyle{ \mathcal{Y} = \{ 0,1 \} }[/math] assumed (i.e., 2 labels)

[math]\displaystyle{ h(x) = \begin{cases} 1 \ \ P(Y=1|X=x) \gt P(Y=0|X=x) \\ 0 \ \ otherwise \end{cases} }[/math]

[math]\displaystyle{ P(Y=1|X=x) = \frac{f_1(x) \pi_1} {\sum_k f_k \pi_k} \ \ }[/math] (denom = P(x))

1) Assume Gaussian distributions

[math]\displaystyle{ f_k(x) = \frac{1}{(2\pi)^{-d/2} |\Sigma_k|^{-1/2}} exp(-(1/2)(\mathbf{x} - \mathbf{\mu_k}) \Sigma_k^{-1}(\mathbf{x}-\mathbf{\mu_k}) ) }[/math]

must compare [math]\displaystyle{ \frac{f_1(x) \pi_1} {p(x)} }[/math] with [math]\displaystyle{ \frac{f_0(x) \pi_0} {p(x)} }[/math] Note that the p(x) denom can be ignored: [math]\displaystyle{ f_1(x) \pi_1 }[/math] with [math]\displaystyle{ f_0(x) \pi_0 }[/math]

To find the decision boundary, set [math]\displaystyle{ f_1(x) \pi_1 = f_0(x) \pi_0 }[/math]

2) Assume [math]\displaystyle{ \Sigma_1 = \Sigma_0 }[/math], we can use [math]\displaystyle{ \Sigma = \Sigma_0 = \Sigma_1 }[/math].

Cancel [math]\displaystyle{ (2\pi)^{-d/2} |\Sigma_k|^{-1/2} }[/math] from both sides.

Take log of both sides.

Subtract one side from both sides, leaving zero on one side.

[math]\displaystyle{ -(1/2)(\mathbf{x} - \mathbf{\mu_1})^T \Sigma^{-1} (\mathbf{x}-\mathbf{\mu_1}) + log(\pi_1) - [-(1/2)(\mathbf{x} - \mathbf{\mu_0})^T \Sigma^{-1} (\mathbf{x}-\mathbf{\mu_0}) + log(\pi_0)] = 0 }[/math]

[math]\displaystyle{ (1/2)[-\mathbf{x}^T \Sigma^{-1}\mathbf{x} - \mathbf{\mu_1}^T \Sigma^{-1} \mathbf{\mu_1} + 2\mathbf{\mu_1}^T \Sigma^{-1} \mathbf{x}

+ \mathbf{x}^T \Sigma^{-1}\mathbf{x} + \mathbf{\mu_0}^T \Sigma^{-1} \mathbf{\mu_0} - 2\mathbf{\mu_0}^T \Sigma^{-1} \mathbf{x} ]

+ log(\pi_1/\pi_0) = 0 }[/math]

Cancelling out the terms quadratic in [math]\displaystyle{ \mathbf{x} }[/math] and rearranging results in

[math]\displaystyle{ (1/2)[-\mathbf{\mu_1}^T \Sigma^{-1} \mathbf{\mu_1} + \mathbf{\mu_0}^T \Sigma^{-1} \mathbf{\mu_0} + (2\mathbf{\mu_1}^T \Sigma^{-1} - 2\mathbf{\mu_0}^T \Sigma^{-1}) \mathbf{x}] + log(\pi_1/\pi_0) = 0 }[/math]

We can see that the first pair of terms is constant, and the second pair is linear in x.

Therefore, we end up with something of the form

[math]\displaystyle{ ax + b = 0 }[/math].

LDA and QDA Continued (Lecture: Sep. 22, 2011)

If we relax assumption 2 (i.e. [math]\displaystyle{ \Sigma_1 != \Sigma_0 }[/math]) then we get a quadratic equation that can be written as [math]\displaystyle{ {x}^Ta{x}+b{x} + c = 0 }[/math]

Generalizing LDA and QDA

Theorem:

Suppose that [math]\displaystyle{ \,Y \in \{1,\dots,K\} }[/math], if [math]\displaystyle{ \,f_k(x) = Pr(X=x|Y=k) }[/math] is Gaussian, the Bayes Classifier rule is

- [math]\displaystyle{ \,h^*(x) = \arg\max_{k} \delta_k(x) }[/math]

Where

[math]\displaystyle{ \,\delta_k(x) = - \frac{1}{2}log(|\Sigma_k|) - \frac{1}{2}(x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k) + log (\pi_k) }[/math]

When the Gaussian variances are equal [math]\displaystyle{ \Sigma_1 = \Sigma_0 }[/math] (e.g. LDA), then

[math]\displaystyle{ \,\delta_k(x) = x^\top\Sigma^{-1}\mu_k - \frac{1}{2}\mu_k^\top\Sigma^{-1}\mu_k + log (\pi_k) }[/math]

In practice

We estimate the prior to be the chance that a random item from the collection belongs to set k, e.g.

[math]\displaystyle{ \,\hat{\pi_k} = \hat{Pr}(y=k) = \frac{n_k}{n} }[/math]

The mean to be the average item in set k, e.g.

[math]\displaystyle{ \,\hat{\mu_k} = \frac{1}{n_k}\sum_{i:y_i=k}x_i }[/math]

and calculate the covariance of each class e.g.

[math]\displaystyle{ \,\hat{\Sigma_k} = \frac{1}{n_k}\sum_{i:y_i=k}(x_i-\hat{\mu_k})(x_i-\hat{\mu_k})^\top }[/math]

If we wish to use LDA we must calculate a common covariance, so we average all the covariances e.g.

[math]\displaystyle{ \,\Sigma=\frac{\sum_{r=1}^{k}(n_r\Sigma_r)}{n} }[/math]

Where: [math]\displaystyle{ \,n_r }[/math] is the number of data points in class [math]\displaystyle{ \,r }[/math], [math]\displaystyle{ \,\Sigma_r }[/math] is the covariance of class [math]\displaystyle{ \,r }[/math], [math]\displaystyle{ \,n }[/math] is the total number of data points, and [math]\displaystyle{ \,k }[/math] is the number of classes.

Computation

For QDA we need to calculate: [math]\displaystyle{ \,\delta_k(x) = - \frac{1}{2}log(|\Sigma_k|) - \frac{1}{2}(x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k) + log (\pi_k) }[/math]

Lets first consider when [math]\displaystyle{ \, \Sigma_k = I, \forall k }[/math]. This is the case where each distribution is spherical, around the mean point.

Case 1

When [math]\displaystyle{ \, \Sigma_k = I }[/math]

We have:

[math]\displaystyle{ \,\delta_k = - \frac{1}{2}log(|I|) - \frac{1}{2}(x-\mu_k)^\top I(x-\mu_k) + log (\pi_k) }[/math]

but [math]\displaystyle{ \ \log(|I|)=\log(1)=0 }[/math]

and [math]\displaystyle{ \, (x-\mu_k)^\top I(x-\mu_k) = (x-\mu_k)^\top(x-\mu_k) }[/math] is the squared Euclidean distance between two points [math]\displaystyle{ \,x }[/math] and [math]\displaystyle{ \,\mu_k }[/math]

Thus in this condition, a new point can be classified by its distance away from the center of a class, adjusted by some prior.

Further, for two-class problem with equal prior, the discriminating function would be the bisector of the 2-class's means.

Case 2

When [math]\displaystyle{ \, \Sigma_k \neq I }[/math]

Using the Singular Value Decomposition (SVD) of [math]\displaystyle{ \, \Sigma_k }[/math] we get [math]\displaystyle{ \, \Sigma_k = U_kS_kV_k^\top }[/math]

but since [math]\displaystyle{ \, \Sigma }[/math] is symmetric<ref> http://en.wikipedia.org/wiki/Covariance_matrix#Properties </ref> [math]\displaystyle{ \, \Sigma_k = U_kS_kU_k^\top }[/math]

For [math]\displaystyle{ \,\delta_k }[/math], the second term becomes what is also known as the Mahalanobis distance <ref>P. C. Mahalanobis, "On The Generalised Distance in Statistics," Proceedings of the National Institute of Sciences of India, 1936</ref> :

- [math]\displaystyle{ \begin{align} (x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k)&= (x-\mu_k)^\top U_kS_k^{-1}U_k^T(x-\mu_k)\\ & = (U_k^\top x-U_k^\top\mu_k)^\top S_k^{-1}(U_k^\top x-U_k^\top \mu_k)\\ & = (U_k^\top x-U_k^\top\mu_k)^\top S_k^{-\frac{1}{2}}S_k^{-\frac{1}{2}}(U_k^\top x-U_k^\top\mu_k) \\ & = (S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top\mu_k)^\top I(S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top \mu_k) \\ & = (S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top\mu_k)^\top(S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top \mu_k) \\ \end{align} }[/math]

If we think of [math]\displaystyle{ \, S_k^{-\frac{1}{2}}U_k^\top }[/math] as a linear transformation that takes points in class [math]\displaystyle{ \,k }[/math] and distributes them spherically around a point, like in case 1. Thus when we are given a new point, we can apply the modified [math]\displaystyle{ \,\delta_k }[/math] values to calculate [math]\displaystyle{ \ h^*(\,x) }[/math]. After applying the singular value decomposition, [math]\displaystyle{ \,\Sigma_k^{-1} }[/math] is considered to be an identity matrix such that

[math]\displaystyle{ \,\delta_k = - \frac{1}{2}log(|I|) - \frac{1}{2}[(S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top\mu_k)^\top(S_k^{-\frac{1}{2}}U_k^\top x-S_k^{-\frac{1}{2}}U_k^\top \mu_k)] + log (\pi_k) }[/math]

and,

[math]\displaystyle{ \ \log(|I|)=\log(1)=0 }[/math]

For applying the above method with classes that have different covariance matrices (for example the covariance matrices [math]\displaystyle{ \ \Sigma_0 }[/math] and [math]\displaystyle{ \ \Sigma_1 }[/math] for the two class case), each of the covariance matrices has to be decomposed using SVD to find the according transformation. Then, each new data point has to be transformed using each transformation to compare its distance to the mean of each class (for example for the two class case, the new data point would have to be transformed by the class 1 transformation and then compared to [math]\displaystyle{ \ \mu_0 }[/math] and the new data point would also have to be transformed by the class 2 transformation and then compared to [math]\displaystyle{ \ \mu_1 }[/math]).

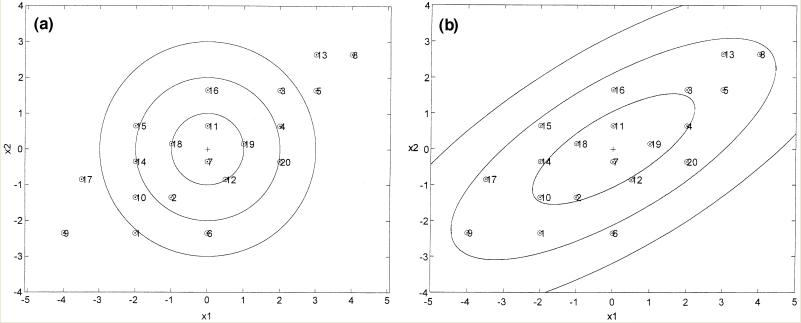

The difference between Case 1 and Case 2 (i.e. the difference between using the Euclidean and Mahalanobis distance) can be seen in the illustration below.

As can be seen from the illustration above, the Mahalanobis distance takes into account the distribution of the data points, whereas the Euclidean distance would treat the data as though it has a spherical distribution. Thus, the Mahalanobis distance applies for the more general classification in Case 2, whereas the Euclidean distance applies to the special case in Case 1 where the data distribution is assumed to be spherical.

Principal Component Analysis (Lecture: Sep. 27, 2011)

Principal Component Analysis (PCA) is a method of dimensionality reduction/feature extraction that transforms the data from a D dimensional space into a new coordinate system of dimension d, where d < D. The goal is to preserve as much of the variance in the original data as possible when switching the coordinate systems.

The new variables that form a new coordinate system are called principal components (PCs). PCs are denoted by [math]\displaystyle{ \ u_1, u_2, ... , u_D }[/math]. Since PCs are orthogonal linear transformations of the original variables there is at most D PCs. Normally, not all of the D PCs are used but rather a subset of d PCs, [math]\displaystyle{ \ u_1, u_2, ... , u_d }[/math], to approximate the space spanned by the original data points [math]\displaystyle{ \ x_1, x_2, ... , x_D }[/math].

Let [math]\displaystyle{ \ PC_j }[/math] be a linear combination of [math]\displaystyle{ \ x_1, x_2, ... , x_D }[/math] defined by the coefficients

[math]\displaystyle{ \ W^{(j)} }[/math] = [math]\displaystyle{ ( {w_1}^{(j)}, {w_2}^{(j)},...,{w_D}^{(j)} )^T }[/math]

Thus, [math]\displaystyle{ u_j = {w_1}^{(j)} x_1 + {w_2}^{(j)} x_2 + ... + {w_D}^{(j)} x_D = W^{(j)^T} X }[/math]

This is a unique configuration since it sets up the PCs in order from maximum to minimum variances. The first PC, [math]\displaystyle{ \ u_1 }[/math] is called first principal component and has the maximum variance, thus it accounts for the most significant variance in the data [math]\displaystyle{ \ x_1, x_2, ... , x_D }[/math]. The second PC, [math]\displaystyle{ \ u_2 }[/math] is called second principal component and has the second highest variance and so on until PC, [math]\displaystyle{ \ u_D }[/math] which has the minimum variance.

To get the first principal component, we would like to use the following equation:

[math]\displaystyle{ \ max (Var(W^T X)) = max (W^T S W) }[/math]

Where [math]\displaystyle{ \ S }[/math] is the covariance matrix. And we solve for [math]\displaystyle{ \ W }[/math].

Note: we require the constraint [math]\displaystyle{ \ W^T W = 1 }[/math] because if there is no constraint on the length of [math]\displaystyle{ \ W }[/math] then there is no upper bound. With the constraint, the direction and not the length that maximizes the variance can be found.

Lagrange Multiplier

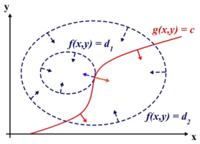

Before we proceed, we should review Lagrange multipliers.

Lagrange multipliers are used to find the maximum or minimum of a function [math]\displaystyle{ \displaystyle f(x,y) }[/math] subject to constraints [math]\displaystyle{ \displaystyle g(x,y)=0 }[/math]

we define a new constant [math]\displaystyle{ \lambda }[/math] called a Lagrange Multiplier and we form the Lagrangian,

[math]\displaystyle{ \displaystyle L(x,y,\lambda) = f(x,y) - \lambda g(x,y) }[/math]

If [math]\displaystyle{ \displaystyle (x^*,y^*) }[/math] is the max of [math]\displaystyle{ \displaystyle f(x,y) }[/math], there exists [math]\displaystyle{ \displaystyle \lambda^* }[/math] such that [math]\displaystyle{ \displaystyle (x^*,y^*,\lambda^*) }[/math] is a stationary point of [math]\displaystyle{ \displaystyle L }[/math] (partial derivatives are 0).

In addition [math]\displaystyle{ \displaystyle (x^*,y^*) }[/math] is a point in which functions [math]\displaystyle{ \displaystyle f }[/math] and [math]\displaystyle{ \displaystyle g }[/math] touch but do not cross. At this point, the tangents of [math]\displaystyle{ \displaystyle f }[/math] and [math]\displaystyle{ \displaystyle g }[/math] are parallel or gradients of [math]\displaystyle{ \displaystyle f }[/math] and [math]\displaystyle{ \displaystyle g }[/math] are parallel, such that:

[math]\displaystyle{ \displaystyle \nabla_{x,y } f = \lambda \nabla_{x,y } g }[/math]

where,

[math]\displaystyle{ \displaystyle \nabla_{x,y} f = (\frac{\partial f}{\partial x},\frac{\partial f}{\partial{y}}) \leftarrow }[/math] the gradient of [math]\displaystyle{ \, f }[/math]

[math]\displaystyle{ \displaystyle \nabla_{x,y} g = (\frac{\partial g}{\partial{x}},\frac{\partial{g}}{\partial{y}}) \leftarrow }[/math] the gradient of [math]\displaystyle{ \, g }[/math]

Example :

Suppose we want to maximize the function [math]\displaystyle{ \displaystyle f(x,y)=x-y }[/math] subject to the constraint [math]\displaystyle{ \displaystyle x^{2}+y^{2}=1 }[/math]. We can apply the Lagrange multiplier method to find the maximum value for the function [math]\displaystyle{ \displaystyle f }[/math]; the Lagrangian is:

[math]\displaystyle{ \displaystyle L(x,y,\lambda) = x-y - \lambda (x^{2}+y^{2}-1) }[/math]

We want the partial derivatives equal to zero:

[math]\displaystyle{ \displaystyle \frac{\partial L}{\partial x}=1+2 \lambda x=0 }[/math]

[math]\displaystyle{ \displaystyle \frac{\partial L}{\partial y}=-1+2\lambda y=0 }[/math]

[math]\displaystyle{ \displaystyle \frac{\partial L}{\partial \lambda}=x^2+y^2-1 }[/math]

Solving the system we obtain two stationary points: [math]\displaystyle{ \displaystyle (\sqrt{2}/2,-\sqrt{2}/2) }[/math] and [math]\displaystyle{ \displaystyle (-\sqrt{2}/2,\sqrt{2}/2) }[/math]. In order to understand which one is the maximum, we just need to substitute it in [math]\displaystyle{ \displaystyle f(x,y) }[/math] and see which one as the biggest value. In this case the maximum is [math]\displaystyle{ \displaystyle (\sqrt{2}/2,-\sqrt{2}/2) }[/math].

Determining W :

Use the Lagrange multiplier conversion to obtain: [math]\displaystyle{ \displaystyle L(W, \lambda) = W^T SW - \lambda (W^T W - 1) }[/math] where [math]\displaystyle{ \displaystyle λ }[/math] is a constant

Take the derivative and set it to zero:

[math]\displaystyle{ \displaystyle{\Delta L \over{\Delta W}} = 0 }[/math]

To obtain:

[math]\displaystyle{ \displaystyle 2SW - 2 \lambda W = 0 }[/math]

Rearrange to obtain:

[math]\displaystyle{ \displaystyle SW = \lambda W }[/math]

where [math]\displaystyle{ \displaystyle W }[/math] is eigenvector of [math]\displaystyle{ \displaystyle S }[/math] and [math]\displaystyle{ \ \lambda }[/math] is the eigenvalue of [math]\displaystyle{ \displaystyle S }[/math] as [math]\displaystyle{ \displaystyle SW= \lambda W }[/math] , and [math]\displaystyle{ \displaystyle W^T W=1 }[/math] , then we can write

[math]\displaystyle{ \displaystyle W^T SW= W^T\lambda W= \lambda W^T W =\lambda }[/math]

Note that the PCs decompose the total variance in the data in the following way

[math]\displaystyle{ \sum_{i=1}^{D} Var(u_i) }[/math]

[math]\displaystyle{ = \sum_{i=1}^{D} (\lambda_i) }[/math]

[math]\displaystyle{ \ = Tr(S) }[/math]

[math]\displaystyle{ = \sum_{i=1}^{D} Var(x_i) }[/math]

Principal Component Analysis (PCA) Continued (Lecture: Sep. 29, 2011)

As can be seen from the above expressions, [math]\displaystyle{ \ Var(u_i) }[/math] is maximized if [math]\displaystyle{ \ \lambda_i }[/math] is the maximum eigenvalue of the sample covariance [math]\displaystyle{ \ S }[/math] and the first principal component (PC) is the corresponding eigenvector. Each successive PC can be generated in the above manner by taking the eigenvectors of [math]\displaystyle{ \ S }[/math] that correspond to the eigenvalues:

[math]\displaystyle{ \ \lambda_1 \geq ... \geq \lambda_D }[/math]

such that

[math]\displaystyle{ \ Var(u_1) \geq ... \geq Var(u_D) }[/math]

Alternative Derivation

Another way of looking at PCA is to consider PCA as a projection from a higher D-dimension space to a lower d-dimensional subspace that minimizes the squared reconstruction error. The squared reconstruction error is the difference between the original data set [math]\displaystyle{ \ X }[/math] and the new data set [math]\displaystyle{ \hat{X} }[/math] obtained by first projecting the original data set into a lower d-dimensional subspace and then projecting it back into the the original higher D-dimension space. Since information is (normally) lost by compressing the the original data into a lower d-dimensional subspace, the new data set will (normally) differ from the original data even though both are part of the higher D-dimension space. The reconstruction error is computed as shown below.

Reconstruction Error

[math]\displaystyle{ \sum_{i=1}^{n} = || x_i - \hat{x}_i ||^2 }[/math]

Minimize Reconstruction Error

Suppose [math]\displaystyle{ \bar{x} = 0 }[/math] where [math]\displaystyle{ \hat{x}_i = x_i - \bar{x} }[/math]

Let [math]\displaystyle{ \ f(y) = U_d y }[/math] where [math]\displaystyle{ \ U_d }[/math] is a D by d matrix with d orthogonal unit vectors as columns.

Fit the model to the data and minimize the reconstruction error:

[math]\displaystyle{ \ min_{U_d, y_i} \sum_{i=1}^n || x_i - U_d y_i ||^2 }[/math]

Differentiate with respect to [math]\displaystyle{ \ y_i }[/math]:

[math]\displaystyle{ \frac{d}{dy_i} = 0 }[/math]

[math]\displaystyle{ \ 2(-U_d)(x_i - U_d y_i) = 0 }[/math]

[math]\displaystyle{ \ x_i = U_d y_i }[/math]

[math]\displaystyle{ \ y_i = U_d^T x_i }[/math]

Find the orthogonal matrix [math]\displaystyle{ \ U_d }[/math]:

[math]\displaystyle{ \ min_{U_d} \sum_{i=1}^n || x_i - U_d U_d^T x_i||^2 }[/math]

Using SVD

A unique solution can be obtained by finding the Singular Value Decomposition (SVD) of [math]\displaystyle{ \ X }[/math]:

[math]\displaystyle{ \ X = U S V^T }[/math]

For each rank d, [math]\displaystyle{ \ U_d }[/math] consists of the first d columns of [math]\displaystyle{ \ U }[/math]. Also, the covariance matrix can be expressed as follows [math]\displaystyle{ \ S = \Sigma_i (x_i - \mu)(x_i - \mu)^T }[/math].

Simply put, by subtracting the mean of each of the data point features and then applying SVD, one can find the principal components:

[math]\displaystyle{ \tilde{X} = X - \mu }[/math]

[math]\displaystyle{ \ \tilde{X} = U S V^T }[/math]

Where [math]\displaystyle{ \ X }[/math] is a d by n matrix of data points and the features of each data point form a column in [math]\displaystyle{ \ X }[/math]. Also, [math]\displaystyle{ \ \mu }[/math] is a d by n matrix of the mean of each of the data points. Note that the arrangement of data points is a convention and indeed in Matlab or conventional statistics, the transpose of the matrices in the above formulae is used.

As the [math]\displaystyle{ \ S }[/math] matrix from the SVD has the eigenvalues arranged from largest to smallest, the corresponding eigenvectors in the [math]\displaystyle{ \ U }[/math] matrix from the SVD will be such that the first column of [math]\displaystyle{ \ U }[/math] is the first principal component and the second column is the second principal component and so on.

Examples

Note that in the Matlab code in the examples below, the mean was not subtracted from the datapoints before performing SVD. This is what was shown in class. However, to properly perform PCA, the mean should be subtracted from the datapoints.

Example 1

Consider a matrix of data points [math]\displaystyle{ \ X }[/math] with the dimensions 560 by 1965. 560 is the number of elements in each column. Each column is a vector representation of a 20x28 grayscale pixel image of a face (see image below) and there is a total of 1965 different images of faces. Each of the images are corrupted by noise, but the noise can be removed using PCA. The corresponding Matlab commands are shown below:

>> % start with a 560 by 1965 matrix X that contains the data points >> load(noisy.mat); >> >> % set the colors to grayscale >> colormap gray >> >> % show image in column 10 by reshaping column 10 into a 20 by 28 matrix >> imagesc(reshape(X(:,10),20,28)') >> >> % perform SVD, if X matrix if full rank, will obtain 560 PCs >> [U S V] = svd(X); >> >> % reconstruct X using only the first ten principal components >> X_hat = U(:, 1:10)*S(1:10, 1:10)*V(:,1:10)'; >> >> % show image in column 10 of X_hat which is now a 560 by 1965 matrix >> imagesc(reshape(X_hat(:,10),20,28)')

The reason why the noise is removed in the reconstructed image is because the noise does not create a major variation in a single direction in the original data. Hence, the first ten PCs are not in the direction of the noise. Thus, reconstructing the image using the first ten PCs, will remove the noise.

Example 2

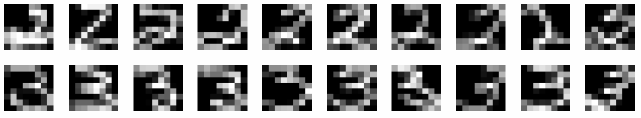

Consider a matrix of data points [math]\displaystyle{ \ X }[/math] with the dimensions 64 by 400. 64 is the number of elements in each column. Each column is a vector representation of a 8x8 grayscale pixel image of either a handwritten number 2 or a handwritten number 3 (see image below) and there are a total of 400 different images, where the first 200 images show a handwritten number 2 and the last 200 images show a handwritten number 3.

The corresponding Matlab commands for performing PCA on the data points are shown below:

>> % start with a 64 by 400 matrix X that contains the data points >> load(2_3.mat); >> >> % set the colors to grayscale >> colormap gray >> >> % show image in column 2 by reshaping column 2 into a 8 by 8 matrix >> imagesc(reshape(X(:,2),8,8)) >> >> % perform SVD, if X matrix if full rank, will obtain 64 PCs >> [U S V] = svd(X); >> >> % project data down onto the first two PCs >> Y = U(:,1:2)'*X; >> >> % show Y as an image (can see the change in the first PC at column 200, >> % when the handwritten number changes from 2 to 3) >> imagesc(Y) >> >> % perform PCA using Matlab build-in function (do not use for assignment) >> % also note that due to the Matlab convention, the transpose of X is used >> [COEFF, Y] = princomp(X'); >> >> % again, use the first two PCs >> Y = Y(:,1:2); >> >> % use plot digits to show the distribution of images on the first two PCs >> images = reshape(X, 8, 8, 400); >> plotdigits(images, Y, .1, 1);

Using the plotdigits function in Matlab, clearly illustrates that the first PC captured the differences between the numbers 2 and 3 as they are projected onto different regions of the axis for the first PC. Also, the second PC captured the tilt of the handwritten numbers as numbers tilted to the left or right were projected onto different regions of the axis for the second PC.

Example 3

(Not discussed in class - just a different way to think about it.) In the news recently was a story that captures some of the ideas behind PCA. Over the past two years, Scott Golder and Michael Macy, researchers from Cornell University, collected 509 million Twitter messages from 2.4 million users in 84 different countries. The data they used were words collected at various times of day and they classified the data into two different categories: positive emotion words and negative emotion words. Then, they were able to study this new data to evaluate subject's moods at different times of day, while the subjects were in different parts of the world. Using this data, they found that subjects are generally exhibit positive emotions in the mornings and late evenings, and negative emotions mid-day. So they were able to "project their data onto a smaller dimensional space". Their paper, "Diurnal and Seasonal Mood Vary with Work, Sleep, and Daylength Across Diverse Cultures," is available in the journal Science next Thursday. [13] Granted, this is not a true representation of PCA, but it is interesting that they are able to use these ideas for applications in many disciplines.

Observations

Some observations about the PCA were brought up in class:

1) PCA assumes that data is on a linear subspace or close to a linear subspace. For non-linear dimensionality reduction, other techniques are used. Amongst the first proposed techniques for non-linear dimensionality reduction are Locally Linear Embedding (LLE) and Isomap. More recent techniques include Maximum Variance Unfolding (MVU) and t-Distributed Stochastic Neighbor Embedding (t-SNE). Kernel PCAs may also be used, but they depend on the type of kernel used and generally do not work well in practice. (Kernels will be covered in more detail later in the course.)

2) Finding the number of PCs to use is not straightforward. It requires knowledge about the instrinsic dimentionality of data. In practice, oftentimes a heuristic approach is adopted by looking at the eigenvalues ordered from largest to smallest. If there is a "dip" in the magnitude of the eigenvalues, the "dip" is used as a cut off point and only the large eigenvalues before the "dip" are used. Otherwise, it is possible to add up the eigenvalues from largest to smallest until a certain percentage value is reached. This percentage value represents the percentage of variance that is preserved when projecting onto the PCs corresponding to the eigenvalues that have been added together to achieve the percentage.

3) It is a good idea to normalize the variance of the data before applying PCA. This will avoid PCA finding PCs in certain directions due to the scaling of the data, rather than the real variance of the data.

4) PCA can be considered as an unsupervised approach, since the main direction of variation is not known beforehand, i.e. it is not completely certain which dimension the first PC will capture. The PCs found may not correspond to the desired labels for the data set. There are, however, alternate methods for performing supervised dimensionality reduction.

Summary

The PCA algorithm can be summarized into the following steps.

Recover basis

Calculate [math]\displaystyle{ \ X X^T = \Sigma_{i=1}^{t} x_i x_{i}^{T} }[/math] and let [math]\displaystyle{ \ U= }[/math] eigenvectors of [math]\displaystyle{ \ X X^T }[/math] corresponding to the top d eigenvalues.

Encode training data

Let [math]\displaystyle{ \ Y = U^T X }[/math] where [math]\displaystyle{ \ Y }[/math] is a d by t matrix of encodings of the original data.

Reconstruct training data

[math]\displaystyle{ \hat{X}= U Y =U U^T X }[/math].

Encode test example

[math]\displaystyle{ \ y = U^T x }[/math] where [math]\displaystyle{ \ y }[/math] is a d-dimensional encoding of [math]\displaystyle{ \ x }[/math].

Reconstruct test example

[math]\displaystyle{ \hat{x} = U y = U U^T x }[/math].

Fisher Discriminant Analysis (FDA)

Fisher's discriminant Analysis (FDA) is a type of supervised dimensionality reduction technique, but it is oftentimes listed as a classification technique. The idea behind FDA is to find the direction that maximizes the separation of classes, i.e. each class is collapsed onto the smallest area possible (in the ideal case this would be a single point) and then the direction that separates the areas for each class as far as possible is found. This is in contrast to PCA which finds the direction that preserves the variability of the data the most.

For FDA consider [math]\displaystyle{ \ x }[/math] with covariance [math]\displaystyle{ \ \Sigma }[/math] and mean [math]\displaystyle{ \ \mu }[/math]. If we add a weighting [math]\displaystyle{ \ w }[/math]:

[math]\displaystyle{ \ x }[/math] will become [math]\displaystyle{ \ w^T x }[/math]

[math]\displaystyle{ \ \Sigma }[/math] will become [math]\displaystyle{ \ w^T \Sigma w }[/math]

[math]\displaystyle{ \ \mu }[/math] will become [math]\displaystyle{ \ w^T \mu }[/math]

The goal is to find [math]\displaystyle{ \ w }[/math] that minimizes the covariance [math]\displaystyle{ \ w^T \Sigma w }[/math].

References

<references />

Jgpitt - 2011/09/21

G5chang - 2011/09/29