stat841f10: Difference between revisions


Revision as of 15:12, 27 September 2010


Classification - 2010.09.21

Classification

Statistical classification, or simply classification, is an area of supervised learning that addresses the problem of how to systematically assign unlabeled (classes unknown) novel data to their labels (classes or groups or types) by using knowledge of their features (characteristics or attributes) that are obtained from observation and/or measurement. A classifier is a specific technique or method for performing classification. To classify new data, a classifier first uses labeled (classes are known) training data to train a model, and then it uses a function known as its classification rule to assign a label to each new data input after feeding the input's known feature values into the model to determine how much the input belongs to each class.

Cleanup note (27 September 2010): classifiers are not always supervised; they can also be unsupervised.

Classification has been an important task for people and society since the beginnings of history. According to one linked source, the earliest application of classification in human society was probably by prehistoric peoples recognizing which wild animals were beneficial to people and which ones were harmful, and the earliest systematic use of classification was by the famous Greek philosopher Aristotle when he, for example, grouped all living things into the two groups of plants and animals. Classification is generally regarded as one of four major areas of statistics, with the other three major areas being regression, clustering, and dimensionality reduction (feature extraction or manifold learning).

In classical statistics, classification techniques were developed to learn useful information from small data sets, where there is usually not enough data. When machine learning was developed after the application of computers to statistics, classification techniques were developed to work with very large data sets, where there is usually too much data. A major challenge facing data mining using machine learning is how to efficiently find useful patterns in very large amounts of data. An interesting quote that describes this problem quite well is the following one made by the retired Yale University Librarian Rutherford D. Rogers.

"We are drowning in information and starving for knowledge."

- Rutherford D. Rogers

In the Information Age, machine learning combined with efficient classification techniques can be very useful for data mining on very large data sets. This is most useful when the structure of the data is not well understood but the data nevertheless exhibit strong statistical regularity. Areas in which machine learning and classification have been successfully used together include search and recommendation (e.g. Google, Amazon), automatic speech recognition and speaker verification, medical diagnosis, analysis of gene expression, and drug discovery.

The formal mathematical definition of classification is as follows:

Definition: Classification is the prediction of a discrete random variable [math]\displaystyle{ \mathcal{Y} }[/math] from another random variable [math]\displaystyle{ \mathcal{X} }[/math], where [math]\displaystyle{ \mathcal{Y} }[/math] represents the label assigned to a new data input and [math]\displaystyle{ \mathcal{X} }[/math] represents the known feature values of the input.

A set of training data used by a classifier to train its model consists of [math]\displaystyle{ \,n }[/math] independent and identically distributed (i.i.d.) ordered pairs [math]\displaystyle{ \,\{(X_{1},Y_{1}), (X_{2},Y_{2}), \dots , (X_{n},Y_{n})\} }[/math], where the feature values of the [math]\displaystyle{ \,i }[/math]-th training input, [math]\displaystyle{ \,X_{i} = (\,X_{i1}, \dots , X_{id}) \in \mathcal{X} \subset \mathbb{R}^{d} }[/math], form a d-dimensional vector and the label of the [math]\displaystyle{ \,i }[/math]-th training input is [math]\displaystyle{ \,Y_{i} \in \mathcal{Y} }[/math], which takes a finite number of values. The classification rule used by a classifier has the form [math]\displaystyle{ \,h: \mathcal{X} \mapsto \mathcal{Y} }[/math]. After the model is trained, each new data input whose feature values are [math]\displaystyle{ \,X \in \mathcal{X} }[/math] is given the label [math]\displaystyle{ \,\hat{Y}=h(X) \in \mathcal{Y} }[/math].

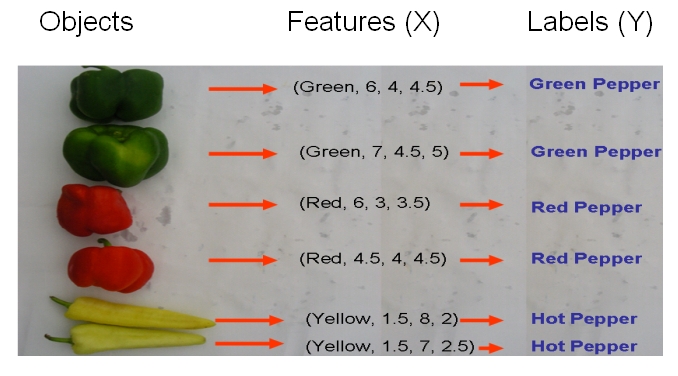

As an example, if we would like to classify some vegetables and fruits, then our training data might look something like the one shown in the following picture from Professor Ali Ghodsi's Fall 2010 STAT 841 slides.

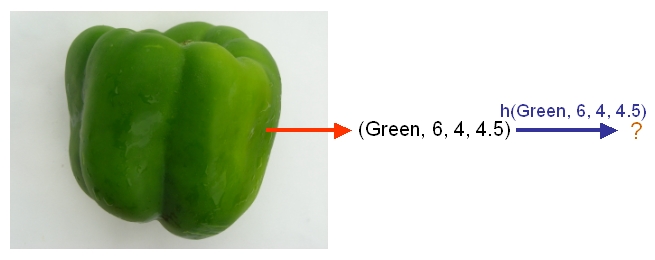

After we have selected a classifier and then built our model using our training data, we could use the classifier's classification rule [math]\displaystyle{ \ h }[/math] to classify any newly-given vegetable or fruit such as the one shown in the following picture from Professor Ali Ghodsi's Fall 2010 STAT 841 slides after first obtaining its feature values.

As another example, suppose we wish to classify newly-given fruits into apples and oranges by considering three features of a fruit that comprise its color, its diameter, and its weight. After selecting a classifier and constructing a model using training data [math]\displaystyle{ \,\{(X_{color, 1}, X_{diameter, 1}, X_{weight, 1}, Y_{1}), \dots , (X_{color, n}, X_{diameter, n}, X_{weight, n}, Y_{n})\} }[/math], we could then use the classifier's classification rule [math]\displaystyle{ \,h }[/math] to assign any newly-given fruit having known feature values [math]\displaystyle{ \,X = (\,X_{color}, X_{diameter} , X_{weight}) \in \mathcal{X} \subset \mathbb{R}^{3} }[/math] the label [math]\displaystyle{ \,\hat{Y}=h(X) \in \mathcal{Y} }[/math], where [math]\displaystyle{ \mathcal{Y}=\{\mathrm{apple}, \mathrm{orange}\} }[/math].

Error rate

The true error rate [math]\displaystyle{ \,L(h) }[/math] of a classifier having classification rule [math]\displaystyle{ \,h }[/math] is defined as the probability that [math]\displaystyle{ \,h }[/math] misclassifies a new data input, i.e., it is defined as [math]\displaystyle{ \,L(h)=P(h(X) \neq Y) }[/math]. Here, [math]\displaystyle{ \,X \in \mathcal{X} }[/math] and [math]\displaystyle{ \,Y \in \mathcal{Y} }[/math] are the known feature values and the true class of that input, respectively.

The empirical error rate (or training error rate) of a classifier having classification rule [math]\displaystyle{ \,h }[/math] is defined as the frequency at which [math]\displaystyle{ \,h }[/math] does not correctly classify the data inputs in the training set, i.e., it is defined as [math]\displaystyle{ \,\hat{L}_{n} = \frac{1}{n} \sum_{i=1}^{n} I(h(X_{i}) \neq Y_{i}) }[/math], where [math]\displaystyle{ \,I }[/math] is an indicator variable and [math]\displaystyle{ \,I = \left\{\begin{matrix} 1 &\text{if } h(X_i) \neq Y_i \\ 0 &\text{if } h(X_i) = Y_i \end{matrix}\right. }[/math]. Here, [math]\displaystyle{ \,X_{i} \in \mathcal{X} }[/math] and [math]\displaystyle{ \,Y_{i} \in \mathcal{Y} }[/math] are the known feature values and the true class of the [math]\displaystyle{ \,ith }[/math] training input, respectively.
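The empirical error rate can be computed directly from its definition. The following is a minimal sketch; the rule `toy_rule`, the diameter threshold of 7.5, and the four training pairs are hypothetical and serve only to illustrate the formula.

```python
def empirical_error_rate(h, training_data):
    """Fraction of training pairs (x_i, y_i) for which h(x_i) != y_i."""
    n = len(training_data)
    if n == 0:
        raise ValueError("the training set must be non-empty")
    # Sum of the indicator I(h(X_i) != Y_i) over the training set, divided by n.
    return sum(1 for x, y in training_data if h(x) != y) / n


# Hypothetical rule: call a fruit an orange when its diameter exceeds 7.5.
def toy_rule(x):
    return "orange" if x[0] > 7.5 else "apple"


# Hypothetical training pairs of the form ((diameter,), label).
toy_data = [
    ((7.0,), "apple"),
    ((8.0,), "orange"),
    ((7.8,), "apple"),   # misclassified by toy_rule
    ((8.4,), "orange"),
]

print(empirical_error_rate(toy_rule, toy_data))  # 0.25
```

Note that this only measures error on the training set; it is an estimate of, not a substitute for, the true error rate [math]\displaystyle{ \,L(h) }[/math].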

Bayes Classifier

After training its model using training data, the Bayes classifier classifies any new data input in two steps. First, it uses the input's known feature values and the Bayes formula to calculate the input's posterior probability of belonging to each class. Then, it uses its classification rule to place the input into its most-probable class, which is the one associated with the input's largest posterior probability.

In mathematical terms, for a new data input having feature values [math]\displaystyle{ \,(X = x)\in \mathcal{X} }[/math], the Bayes classifier labels the input as [math]\displaystyle{ (Y = y) \in \mathcal{Y} }[/math], such that the input's posterior probability [math]\displaystyle{ \,P(Y = y|X = x) }[/math] is maximum over all the members of [math]\displaystyle{ \mathcal{Y} }[/math].

Suppose there are [math]\displaystyle{ \,k }[/math] classes and we are given a new data input having feature values [math]\displaystyle{ \,X=x }[/math]. The following derivation shows how the Bayes classifier finds the input's posterior probability [math]\displaystyle{ \,P(Y = y|X = x) }[/math] of belonging to each class [math]\displaystyle{ (Y = y) \in \mathcal{Y} }[/math].

- [math]\displaystyle{ \begin{align} P(Y=y|X=x) &= \frac{P(X=x|Y=y)P(Y=y)}{P(X=x)} \\ &=\frac{P(X=x|Y=y)P(Y=y)}{\sum_{i \in \mathcal{Y}}P(X=x|Y=i)P(Y=i)} \end{align} }[/math]

Here, [math]\displaystyle{ \,P(Y=y|X=x) }[/math] is known as the posterior probability as mentioned above, [math]\displaystyle{ \,P(Y=y) }[/math] is known as the prior probability, [math]\displaystyle{ \,P(X=x|Y=y) }[/math] is known as the likelihood, and [math]\displaystyle{ \,P(X=x) }[/math] is known as the evidence.
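As an illustration of the derivation above, the posterior for every class can be obtained by normalizing the products of likelihood and prior by the evidence. This is a minimal sketch; the function name `posterior_probabilities` and the three-class numbers are invented for illustration and do not come from the lecture.

```python
def posterior_probabilities(likelihoods, priors):
    """Return P(Y=y | X=x) for every class y via the Bayes formula.

    likelihoods[y] is P(X=x | Y=y) for one fixed input x,
    priors[y] is P(Y=y); both are dicts keyed by class label.
    """
    # Evidence P(X=x): likelihood times prior, summed over all classes.
    evidence = sum(likelihoods[y] * priors[y] for y in priors)
    if evidence <= 0:
        raise ValueError("P(X=x) must be positive for the posterior to exist")
    return {y: likelihoods[y] * priors[y] / evidence for y in priors}


# Hypothetical three-class example (numbers invented for illustration).
priors = {"a": 0.5, "b": 0.3, "c": 0.2}
likelihoods = {"a": 0.1, "b": 0.4, "c": 0.5}

post = posterior_probabilities(likelihoods, priors)
most_probable = max(post, key=post.get)
print(post, most_probable)
```

The posteriors sum to one by construction, and the most probable class here is "b" even though "a" has the largest prior, because the likelihood of the observed input under "b" is much higher.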

In the special case where there are two classes, i.e., [math]\displaystyle{ \, \mathcal{Y}=\{0, 1\} }[/math], the Bayes classifier makes use of the function [math]\displaystyle{ \,r(x)=P\{Y=1|X=x\} }[/math], which is the posterior probability that a new data input having feature values [math]\displaystyle{ \,X=x }[/math] belongs to the class [math]\displaystyle{ \,Y = 1 }[/math]. Following the above derivation for the posterior probabilities of a new data input, the Bayes classifier calculates [math]\displaystyle{ \,r(x) }[/math] as follows:

- [math]\displaystyle{ \begin{align} r(x)&=P(Y=1|X=x) \\ &=\frac{P(X=x|Y=1)P(Y=1)}{P(X=x)}\\ &=\frac{P(X=x|Y=1)P(Y=1)}{P(X=x|Y=1)P(Y=1)+P(X=x|Y=0)P(Y=0)} \end{align} }[/math]

The Bayes classifier's classification rule [math]\displaystyle{ \,h^*: \mathcal{X} \mapsto \mathcal{Y} }[/math], then, is

- [math]\displaystyle{ \, h^*(x)= \left\{\begin{matrix} 1 &\text{if } \hat r(x) > \frac{1}{2} \\ 0 &\text{otherwise} \end{matrix}\right. }[/math].

Here, [math]\displaystyle{ \,x }[/math] denotes the feature values of a new data input and [math]\displaystyle{ \hat r(x) }[/math] is the estimate of [math]\displaystyle{ \,r(x) }[/math] produced by the Bayes classifier's model when [math]\displaystyle{ \,x }[/math] is fed into it. Still in this special case of two classes, the Bayes classifier's decision boundary is defined as the set [math]\displaystyle{ \,D(h)=\{x: P(Y=1|X=x)=P(Y=0|X=x)\} }[/math]. The decision boundary [math]\displaystyle{ \,D(h) }[/math] summarizes the trained model together with the decision function [math]\displaystyle{ \,h }[/math]: the Bayes classifier assigns a new data input the label [math]\displaystyle{ \,Y = 0 }[/math] or [math]\displaystyle{ \,Y = 1 }[/math] depending on which side of the decision boundary the input lies on. From this decision boundary, it is easy to see that, in the case where there are two classes, the Bayes classifier's classification rule can be re-expressed as

- [math]\displaystyle{ \, h^*(x)= \left\{\begin{matrix} 1 &\text{if } P(Y=1|X=x) > P(Y=0|X=x) \\ 0 &\text{otherwise} \end{matrix}\right. }[/math].

Bayes Classification Rule Optimality Theorem

- The Bayes classifier is the optimal classifier in that it attains the least possible probability of misclassifying a new data input, i.e., for any other classifier having classification rule [math]\displaystyle{ \,h }[/math], it is always true that [math]\displaystyle{ \,L(h^*) \le L(h) }[/math]. Here, [math]\displaystyle{ \,L }[/math] represents the true error rate and [math]\displaystyle{ \,h^* }[/math] is the Bayes classifier's classification rule.

Although the Bayes classifier is optimal in the theoretical sense, other classifiers may nevertheless outperform it in practice. The reason is that the components of the Bayes classifier's model, such as the likelihood and prior probabilities, must either be estimated from training data or be guessed with a certain degree of belief. As a result, their estimated values in the trained model may deviate considerably from their true population values, which in turn can cause the estimated posterior probabilities to deviate considerably from their true values. A rather detailed proof of the optimality theorem is available at http://www.ee.columbia.edu/~vittorio/BayesProof.pdf.
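The intuition behind the theorem can be sketched briefly for the two-class case, assuming the true function [math]\displaystyle{ \,r(x) = P(Y=1|X=x) }[/math] is known exactly (this is an informal outline, not the linked proof). For a fixed [math]\displaystyle{ \,x }[/math], the conditional probability of error of any rule [math]\displaystyle{ \,h }[/math] is

- [math]\displaystyle{ \, P(h(X) \neq Y | X=x)= \left\{\begin{matrix} 1 - r(x) &\text{if } h(x)=1 \\ r(x) &\text{if } h(x)=0 \end{matrix}\right. }[/math].

This quantity is smallest when [math]\displaystyle{ \,h(x)=1 }[/math] is chosen exactly when [math]\displaystyle{ \,1-r(x) \lt r(x) }[/math], i.e., when [math]\displaystyle{ \,r(x) \gt \frac{1}{2} }[/math], which is the rule [math]\displaystyle{ \,h^* }[/math] (ties at [math]\displaystyle{ \,r(x)=\frac{1}{2} }[/math] can be broken either way without changing the error). Since [math]\displaystyle{ \,L(h) = E\left[ P(h(X) \neq Y | X) \right] }[/math], a rule that minimizes the conditional error at every [math]\displaystyle{ \,x }[/math] also minimizes [math]\displaystyle{ \,L(h) }[/math].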

Defining the classification rule:

In the special case of two classes, the Bayes classifier can use three main approaches to define its classification rule [math]\displaystyle{ \,h }[/math]:

- 1) Empirical Risk Minimization: Choose a set of classifiers [math]\displaystyle{ \mathcal{H} }[/math] and find [math]\displaystyle{ \,h^*\in \mathcal{H} }[/math] that minimizes some estimate of the true error rate [math]\displaystyle{ \,L(h) }[/math].

- 2) Regression: Find an estimate [math]\displaystyle{ \hat r }[/math] of the function [math]\displaystyle{ r }[/math] and define

- [math]\displaystyle{ \, h(x)= \left\{\begin{matrix} 1 &\text{if } \hat r(x) > \frac{1}{2} \\ 0 &\text{otherwise} \end{matrix}\right. }[/math].

- 3) Density Estimation: Estimate [math]\displaystyle{ \,P(X=x|Y=0) }[/math] from the [math]\displaystyle{ \,X_{i} }[/math]'s for which [math]\displaystyle{ \,Y_{i} = 0 }[/math], estimate [math]\displaystyle{ \,P(X=x|Y=1) }[/math] from the [math]\displaystyle{ \,X_{i} }[/math]'s for which [math]\displaystyle{ \,Y_{i} = 1 }[/math], and estimate [math]\displaystyle{ \,P(Y = 1) }[/math] as [math]\displaystyle{ \,\frac{1}{n} \sum_{i=1}^{n} Y_{i} }[/math]. Then, calculate [math]\displaystyle{ \,\hat r(x) = \hat P(Y=1|X=x) }[/math] and define

- [math]\displaystyle{ \, h(x)= \left\{\begin{matrix} 1 &\text{if } \hat r(x) > \frac{1}{2} \\ 0 &\text{otherwise} \end{matrix}\right. }[/math].

Typically, the Bayes classifier uses approach 3) to define its classification rule. These three approaches can easily be generalized to the case where the number of classes exceeds two.
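Approach 3) can be sketched for discrete feature vectors, where each class-conditional probability is estimated by a relative frequency within that class. This is only one possible density estimator, chosen here for simplicity; the function name `fit_plugin_classifier`, the tie value 0.5 for unseen inputs, and the toy data are assumptions made for illustration.

```python
from collections import Counter


def fit_plugin_classifier(X, Y):
    """Density-estimation approach for binary labels and discrete features.

    X is a list of hashable feature tuples, Y a list of 0/1 labels.
    Returns (r_hat, h): the estimated posterior P(Y=1 | X=x) and the rule.
    """
    n = len(Y)
    prior1 = sum(Y) / n                      # estimate of P(Y=1) = (1/n) * sum of Y_i
    n_class = {0: n - sum(Y), 1: sum(Y)}     # number of training inputs per class
    counts = {0: Counter(), 1: Counter()}    # occurrences of each x within a class
    for x, y in zip(X, Y):
        counts[y][x] += 1

    def likelihood(x, y):
        # Relative frequency of x among the training inputs with label y.
        return counts[y][x] / n_class[y] if n_class[y] else 0.0

    def r_hat(x):
        num = likelihood(x, 1) * prior1
        den = num + likelihood(x, 0) * (1.0 - prior1)
        # Assumption: an x never seen in training carries no evidence either way.
        return num / den if den > 0 else 0.5

    def h(x):
        return 1 if r_hat(x) > 0.5 else 0

    return r_hat, h


# Hypothetical training set with a single binary feature.
X = [(1,), (1,), (1,), (0,), (0,), (1,), (0,), (0,)]
Y = [1, 1, 1, 1, 0, 0, 0, 0]
r_hat, h = fit_plugin_classifier(X, Y)
print(r_hat((1,)), h((1,)), r_hat((0,)), h((0,)))
```

With continuous features the relative frequencies would be replaced by a density estimate, for example a Gaussian fitted to each class.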

Multi-class classification:

Suppose there are [math]\displaystyle{ \,k }[/math] classes, where [math]\displaystyle{ \,k \ge 2 }[/math].

In the above discussion, we introduced the Bayes formula for this general case:

- [math]\displaystyle{ \begin{align} P(Y=y|X=x) &=\frac{P(X=x|Y=y)P(Y=y)}{\sum_{i \in \mathcal{Y}}P(X=x|Y=i)P(Y=i)} \end{align} }[/math]

which can be rewritten as:

- [math]\displaystyle{ \begin{align} P(Y=y|X=x) &=\frac{f_y(x)\pi_y}{\sum_{i \in \mathcal{Y}} f_i(x)\pi_i} \end{align} }[/math]

Here, [math]\displaystyle{ \,f_y(x) = P(X=x|Y=y) }[/math] is known as the likelihood function and [math]\displaystyle{ \,\pi_y = P(Y=y) }[/math] is known as the prior probability.

In the general case where there are at least two classes, the Bayes classifier uses the following theorem to assign any new data input having feature values [math]\displaystyle{ \,x }[/math] into one of the [math]\displaystyle{ \,k }[/math] classes.

Theorem

- Suppose that [math]\displaystyle{ \,Y \in \mathcal{Y} = \{1, \dots, k\} }[/math], where [math]\displaystyle{ \,k \ge 2 }[/math]. Then, the optimal classification rule is [math]\displaystyle{ \,h^*(x) = \arg\max_{i} P(Y=i|X=x) }[/math], where [math]\displaystyle{ \,i \in \{1, \dots, k\} }[/math].
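The theorem can be illustrated with a short sketch. Because the denominator of the Bayes formula is the same for every class, maximizing the posterior is equivalent to maximizing [math]\displaystyle{ \,f_i(x)\pi_i }[/math]. The one-dimensional Gaussian class densities, their means, and the equal priors below are hypothetical choices made only for this example.

```python
import math


def gaussian_pdf(mu, sigma):
    """Return the density function of a one-dimensional N(mu, sigma^2)."""
    def pdf(x):
        z = (x - mu) / sigma
        return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
    return pdf


def bayes_rule(x, densities, priors):
    """Return the class i maximizing f_i(x) * pi_i.

    This equals argmax_i P(Y=i | X=x), since the evidence P(X=x)
    is a common positive factor across classes.
    """
    return max(priors, key=lambda i: densities[i](x) * priors[i])


# Hypothetical setup: three classes with unit-variance Gaussian densities.
densities = {1: gaussian_pdf(0.0, 1.0), 2: gaussian_pdf(3.0, 1.0), 3: gaussian_pdf(6.0, 1.0)}
priors = {1: 1 / 3, 2: 1 / 3, 3: 1 / 3}

labels = [bayes_rule(x, densities, priors) for x in (-1.0, 2.8, 5.0)]
print(labels)  # [1, 2, 3]
```

If several classes tie for the largest value, `max` returns the first one encountered; any tie-breaking choice gives the same error rate.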

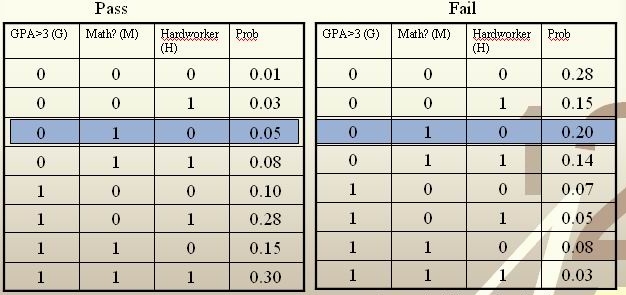

Example: We are going to predict if a particular student will pass STAT 441/841. There are two classes represented by [math]\displaystyle{ \, \mathcal{Y}= \{ 0,1 \} }[/math], where 1 refers to pass and 0 refers to fail. Suppose that the prior probabilities are estimated or guessed to be [math]\displaystyle{ \,\hat P(Y = 1) = \hat P(Y = 0) = 0.5 }[/math]. We have data on past student performances, which we shall use to train the model. For each student, we know the following:

- Whether or not the student’s GPA was greater than 3.0 (G).

- Whether or not the student had a strong math background (M).

- Whether or not the student was a hard worker (H).

- Whether or not the student passed or failed the course.

These known data are summarized in the following tables:

For each student, the feature values are [math]\displaystyle{ \, x = (G, M, H) }[/math] and the class is [math]\displaystyle{ \, y \in \{0, 1\} }[/math].

Suppose there is now a new student having feature values [math]\displaystyle{ \, x = (0, 1, 0) }[/math]. We can then calculate [math]\displaystyle{ \,\hat r(x) }[/math] as follows:

[math]\displaystyle{ \, \hat r(x) = P(Y=1|X =(0,1,0))=\frac{P(X=(0,1,0)|Y=1)P(Y=1)}{P(X=(0,1,0)|Y=0)P(Y=0)+P(X=(0,1,0)|Y=1)P(Y=1)}=\frac{0.025}{0.125}=0.2 < \frac{1}{2}. }[/math]

The Bayes classifier assigns the new student into the class [math]\displaystyle{ \, h^*(x)=0 }[/math], and therefore we predict the new student to fail the course.
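The arithmetic of this example can be checked with a few lines of code. The two likelihood values below are not quoted from the data tables; they are back-solved from the numerator 0.025 and the denominator 0.125 in the calculation above, together with the stated priors of 0.5, so they should be read as an assumption consistent with that calculation.

```python
# Stated priors: P(Y=0) = P(Y=1) = 0.5.
prior = {0: 0.5, 1: 0.5}

# Likelihoods P(X=(0,1,0) | Y=y), back-solved from the worked example:
# 0.05 * 0.5 = 0.025 (numerator) and 0.2 * 0.5 + 0.025 = 0.125 (denominator).
likelihood = {0: 0.2, 1: 0.05}

numerator = likelihood[1] * prior[1]
evidence = likelihood[0] * prior[0] + likelihood[1] * prior[1]
r_hat = numerator / evidence

# Bayes classification rule for two classes: predict 1 only if r_hat > 1/2.
label = 1 if r_hat > 0.5 else 0
print(round(r_hat, 3), label)  # 0.2 0
```

The predicted label 0 corresponds to the student failing the course, in agreement with the conclusion above.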

Bayesian vs. Frequentist

Linear and Quadratic Discriminant Analysis

Linear and Quadratic Discriminant Analysis cont'd - 2010.09.23

In the second lecture, Professor Ali Ghodsi recapitulates that calculating the class posteriors [math]\displaystyle{ \Pr(Y=k|X=x) }[/math] gives optimal classification. He also shows that, if the classes are assumed to share a common covariance matrix [math]\displaystyle{ \Sigma_{k}=\Sigma \ \forall k }[/math], the decision boundary between classes [math]\displaystyle{ k }[/math] and [math]\displaystyle{ l }[/math] is linear (LDA). However, if the classes are not assumed to share the same covariance, the decision boundary is a quadratic function (QDA).

Some MATLAB samples are used to demonstrate LDA and QDA.

LDA x QDA

Linear discriminant analysis[1] is a statistical method used to find the linear combination of features which best separates two or more classes of objects or events. It is widely applied in classifying diseases, positioning, product management, and marketing research.

Quadratic Discriminant Analysis[2], on the other hand, aims to find the quadratic combination of features. It is more general than linear discriminant analysis: unlike LDA, QDA does not assume that the covariance of each of the classes is identical.

Summarizing LDA and QDA

We can summarize what we have learned so far into the following theorem.

Theorem:

Suppose that [math]\displaystyle{ \,Y \in \{1,\dots,k\} }[/math]. If [math]\displaystyle{ \,f_k(x) = \Pr(X=x|Y=k) }[/math] is Gaussian, the Bayes classifier rule is

- [math]\displaystyle{ \,h(x) = \arg\max_{k} \delta_k(x) }[/math]

where

- [math]\displaystyle{ \,\delta_k(x) = - \frac{1}{2}\log(|\Sigma_k|) - \frac{1}{2}(x-\mu_k)^\top\Sigma_k^{-1}(x-\mu_k) + \log (\pi_k) }[/math] (quadratic)

- Note The decision boundary between classes [math]\displaystyle{ k }[/math] and [math]\displaystyle{ l }[/math] is quadratic in [math]\displaystyle{ x }[/math].

If the covariance matrices of the Gaussians are the same, this becomes

- [math]\displaystyle{ \,\delta_k(x) = x^\top\Sigma^{-1}\mu_k - \frac{1}{2}\mu_k^\top\Sigma^{-1}\mu_k + \log (\pi_k) }[/math] (linear)

- Note [math]\displaystyle{ \,\arg\max_{k} \delta_k(x) }[/math] returns the set of k for which [math]\displaystyle{ \,\delta_k(x) }[/math] attains its largest value.
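The two discriminant functions in the theorem can be sketched with NumPy as follows. The lecture's own samples were in MATLAB and are not reproduced here; the function names, the class means, the covariance matrix, and the test points below are hypothetical. Classes are indexed from 0 in the code rather than from 1 as in the text.

```python
import numpy as np


def qda_delta(x, mu, Sigma, pi):
    """Quadratic discriminant: -1/2 log|Sigma_k| - 1/2 (x-mu_k)^T Sigma_k^{-1} (x-mu_k) + log pi_k."""
    diff = x - mu
    _, logdet = np.linalg.slogdet(Sigma)  # log|Sigma| computed stably
    return -0.5 * logdet - 0.5 * diff @ np.linalg.solve(Sigma, diff) + np.log(pi)


def lda_delta(x, mu, Sigma, pi):
    """Linear discriminant: x^T Sigma^{-1} mu_k - 1/2 mu_k^T Sigma^{-1} mu_k + log pi_k."""
    Sinv_mu = np.linalg.solve(Sigma, mu)  # Sigma^{-1} mu_k without forming the inverse
    return x @ Sinv_mu - 0.5 * mu @ Sinv_mu + np.log(pi)


def classify(x, mus, Sigmas, pis, delta):
    """Return the (0-based) index of the class with the largest discriminant value."""
    scores = [delta(x, mu, S, pi) for mu, S, pi in zip(mus, Sigmas, pis)]
    return int(np.argmax(scores))  # on ties, the first maximizer is returned


# Hypothetical two-class problem with a shared covariance matrix.
mus = [np.array([0.0, 0.0]), np.array([2.0, 2.0])]
Sigma = np.array([[1.0, 0.3], [0.3, 1.0]])
Sigmas = [Sigma, Sigma]
pis = [0.5, 0.5]

for x in (np.array([0.2, 0.1]), np.array([1.9, 2.2])):
    print(classify(x, mus, Sigmas, pis, lda_delta), classify(x, mus, Sigmas, pis, qda_delta))
```

When every class shares the same covariance, the quadratic and linear discriminants differ only by a term that does not depend on the class, so both rules select the same label; with class-specific covariances only `qda_delta` applies.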

Reference

The Elements of Statistical Learning: Data Mining, Inference, and Prediction

The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition, February 2009 Trevor Hastie, Robert Tibshirani, Jerome Friedman

http://www-stat.stanford.edu/~tibs/ElemStatLearn/ (3rd Edition is available)