XGBoost: Difference between revisions

| Line 27: | Line 27: | ||

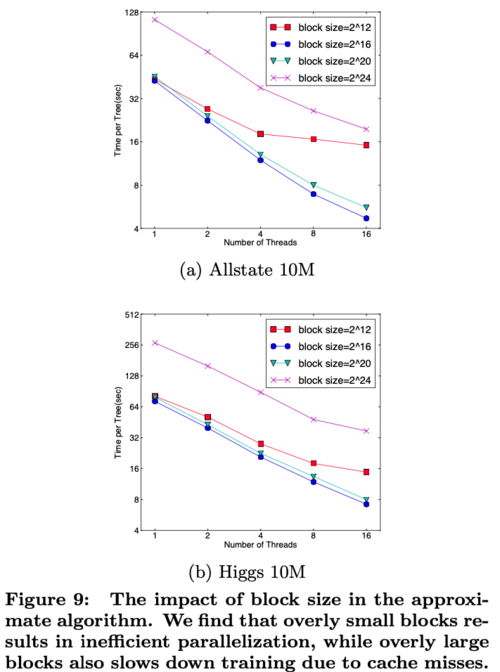

#:* XGBoost chose 2^16 as the block size | #:* XGBoost chose 2^16 as the block size | ||

[[File: blocksize.png|center]] | [[File: blocksize.png|500px|thumb|center]] | ||

=== Blocks for Out-of-core Computation === | === Blocks for Out-of-core Computation === | ||

Revision as of 17:49, 23 November 2021

Presented by

- Chun Waan Loke

- Peter Chong

- Clarice Osmond

- Zhilong Li

Introduction

Tree Boosting In A Nutshell

Split Finding Algorithms

System Design

Column Block for Parallel Learning

Cache-aware Access

The proposed block structure optimizes the computation complexity but requires indirect fetches of gradient statistics by row index. XGBoost optimizes the process by using the following methods.

- For the exact greedy algorithm, XGBoost uses a cache-aware prefetching algorithm

- It stores Gradient and Hessians in the cache to make calculations fast

- It runs twice as fast as the naive method when the dataset is large

- For approximate algorithms, XGBoost chooses a specific block size

- Choosing a small block size results in inefficient parallelization

- Choosing a large block size results in cache misses

- Various choices of block size are compared and the results are shown in Figure 9

- XGBoost chose 2^16 as the block size

Blocks for Out-of-core Computation

When the dataset is too large for the cache and main memory, XGBoost utilizes disk spaces as well. Since reading and writing data to the disks are slow, XGBoost optimizes the process by using the following two methods.

- Block Compression

- Blocks are compressed by columns

- Although decompressing takes time, it is still faster than reading from the disks

- Block Sharding

- If multiple disks are available, data is split into those disks

- When the CPU needs data, all the disks can be read at the same time

End To End Evaluations

Conclusion

References

[1] R. Bekkerman. The present and the future of the kdd cup competition: an outsider’s perspective.

[2] R. Bekkerman, M. Bilenko, and J. Langford. Scaling Up Machine Learning: Parallel and Distributed Approaches. Cambridge University Press, New York, NY, USA, 2011.

[3] J. Bennett and S. Lanning. The netflix prize. In Proceedings of the KDD Cup Workshop 2007, pages 3–6, New York, Aug. 2007.

[4] L. Breiman. Random forests. Maching Learning, 45(1):5–32, Oct. 2001.

[5] C. Burges. From ranknet to lambdarank to lambdamart: An overview. Learning, 11:23–581, 2010.

[6] O. Chapelle and Y. Chang. Yahoo! Learning to Rank Challenge Overview. Journal of Machine Learning Research - W & CP, 14:1–24, 2011.

[7] T. Chen, H. Li, Q. Yang, and Y. Yu. General functional matrix factorization using gradient boosting. In Proceeding of 30th International Conference on Machine Learning (ICML’13), volume 1, pages 436–444, 2013.

[8] T. Chen, S. Singh, B. Taskar, and C. Guestrin. Efficient second-order gradient boosting for conditional random fields. In Proceeding of 18th Artificial Intelligence and Statistics Conference (AISTATS’15), volume 1, 2015.

[9] R.-E. Fan, K.-W. Chang, C.-J. Hsieh, X.-R. Wang, and C.-J. Lin. LIBLINEAR: A library for large linear classification. Journal of Machine Learning Research, 9:1871–1874, 2008.

[10] J. Friedman. Greedy function approximation: a gradient boosting machine. Annals of Statistics, 29(5):1189–1232, 2001.

[11] J. Friedman. Stochastic gradient boosting. Computational Statistics & Data Analysis, 38(4):367–378, 2002.

[12] J. Friedman, T. Hastie, and R. Tibshirani. Additive logistic regression: a statistical view of boosting. Annals of Statistics, 28(2):337–407, 2000.

[13] J. H. Friedman and B. E. Popescu. Importance sampled learning ensembles, 2003.

[14] M. Greenwald and S. Khanna. Space-efficient online computation of quantile summaries. In Proceedings of the 2001 ACM SIGMOD International Conference on Management of Data, pages 58–66, 2001.

[15] X. He, J. Pan, O. Jin, T. Xu, B. Liu, T. Xu, Y. Shi, A. Atallah, R. Herbrich, S. Bowers, and J. Q. n. Candela. Practical lessons from predicting clicks on ads at facebook. In Proceedings of the Eighth International Workshop on Data Mining for Online Advertising, ADKDD’14, 2014.

[16] P. Li. Robust Logitboost and adaptive base class (ABC) Logitboost. In Proceedings of the Twenty-Sixth Conference Annual Conference on Uncertainty in Artificial Intelligence (UAI’10), pages 302–311, 2010.

[17] P. Li, Q. Wu, and C. J. Burges. Mcrank: Learning to rank using multiple classification and gradient boosting. In Advances in Neural Information Processing Systems 20, pages 897–904. 2008.

[18] X. Meng, J. Bradley, B. Yavuz, E. Sparks, S. Venkataraman, D. Liu, J. Freeman, D. Tsai, M. Amde, S. Owen, D. Xin, R. Xin, M. J. Franklin, R. Zadeh, M. Zaharia, and A. Talwalkar. MLlib: Machine learning in apache spark. Journal of Machine Learning Research, 17(34):1–7, 2016.

[19] B. Panda, J. S. Herbach, S. Basu, and R. J. Bayardo. Planet: Massively parallel learning of tree ensembles with mapreduce. Proceeding of VLDB Endowment, 2(2):1426–1437, Aug. 2009.

[20] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830, 2011.

[21] G. Ridgeway. Generalized Boosted Models: A guide to the gbm package.

[22] S. Tyree, K. Weinberger, K. Agrawal, and J. Paykin. Parallel boosted regression trees for web search ranking. In Proceedings of the 20th international conference on World wide web, pages 387–396. ACM, 2011.

[23] J. Ye, J.-H. Chow, J. Chen, and Z. Zheng. Stochastic gradient boosted distributed decision trees. In Proceedings of the 18th ACM Conference on Information and Knowledge Management, CIKM ’09.

[24] Q. Zhang and W. Wang. A fast algorithm for approximate quantiles in high speed data streams. In Proceedings of the 19th International Conference on Scientific and Statistical Database Management, 2007.

[25] T. Zhang and R. Johnson. Learning nonlinear functions using regularized greedy forest. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(5), 2014.