Evaluating Machine Accuracy on ImageNet: Difference between revisions

| Line 12: | Line 12: | ||

== Experiment Setup == | == Experiment Setup == | ||

=== Overview === | |||

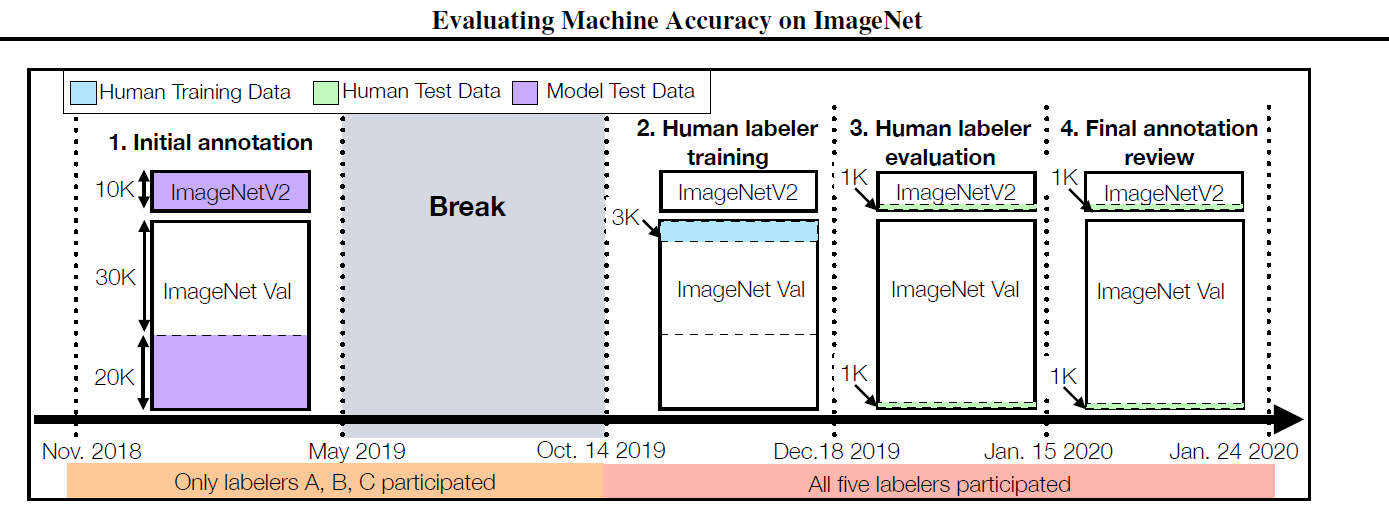

There are four main phases to the experiment, which are (i) initial multilabel annotation, (ii) human labeler training, (iii) human labeler evaluation, and (iv) final annotation overview. The five authors of the paper are the participants in the experiments. | |||

A brief overview of the four phases is as follows: | |||

[[File:Experiment_Set_Up.png | center]] | |||

=== Initial multi-label annotation === | |||

Three labelers A, B, and C provided multi-label annotations for a subset from the ImageNet validation set, and all images from the ImageNetV2 test sets. These experiences give A, B, and C extensive experience with the ImageNet dataset. | |||

=== Human Labeler Training === | |||

Human Labeler Training | |||

=== Human Labeler Evaluation === | |||

Class-balanced random samples are generated from both the ImageNet validation set and ImageNetV2. Five participants labeled these images over 28 days. | |||

=== Final annotation Review === | |||

All labelers reviewed the additional annotations generated in the human labeler evaluation phase. | |||

== Multi-label annotations== | == Multi-label annotations== | ||

Revision as of 01:53, 30 November 2020

Presented by

Siyuan Xia, Jiaxiang Liu, Jiabao Dong, Yipeng Du

Introduction

ImageNet is one of the most influential datasets in machine learning, with images and corresponding labels spanning 1000 classes. This paper explores the causes of the performance differences between human experts and machine learning models, specifically CNNs, on ImageNet.

Firstly, some images may fall into multiple classes. As a result, performance can be underestimated if each image is mapped to strictly one label, which is what the top-1 metric does. The paper therefore considers both top-1 and top-5 metrics; the performances of models, unlike those of human labelers, are linearly correlated in both cases.

Secondly, in contrast to the uniform performance of models across classes, humans tend to perform better on inanimate objects. Human labelers achieve overall accuracies similar to those of the models, which indicates room for improvement on specific classes for machines.

Lastly, the setup of drawing training and test sets from the same distribution may favour models over human labelers. That is, the accuracy of multi-class prediction from models drops when the test set, such as ImageNetV2, is drawn from a different distribution than the training set. This shift in distribution does not, however, cause a problem for human labelers.
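To make the difference between the metrics mentioned above concrete, the following is a minimal sketch in Python. It assumes that a multi-label prediction counts as correct when the model's top-1 class is in the image's set of acceptable labels; the function names, the toy scores, and the label sets are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def top_k_accuracy(scores, labels, k):
    """Fraction of images whose single reference label is among the k highest-scoring classes."""
    top_k = np.argsort(-scores, axis=1)[:, :k]
    return float(np.mean([labels[i] in top_k[i] for i in range(len(labels))]))

def multi_label_accuracy(scores, label_sets):
    """Fraction of images whose top-1 prediction lies in the image's set of acceptable labels.

    Assumption: this is one common reading of multi-label accuracy, used here for illustration.
    """
    preds = np.argmax(scores, axis=1)
    return float(np.mean([int(preds[i]) in label_sets[i] for i in range(len(label_sets))]))

# Hypothetical toy data: 3 images, 6 classes (rows are per-class scores).
scores = np.array([
    [0.10, 0.60, 0.20, 0.05, 0.03, 0.02],
    [0.50, 0.10, 0.30, 0.05, 0.03, 0.02],
    [0.05, 0.05, 0.10, 0.20, 0.50, 0.10],
])
labels = np.array([1, 2, 3])        # one reference label per image
label_sets = [{1}, {0, 2}, {3}]     # all acceptable labels per image

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)   # k=2 stands in for top-5 on this tiny example
multi = multi_label_accuracy(scores, label_sets)
```

In this toy case the second image shows two classes (0 and 2) but carries only label 2 as its reference, so top-1 marks the prediction of class 0 as wrong while the multi-label metric accepts it.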

Experiment Setup

Overview

There are four main phases to the experiment: (i) initial multi-label annotation, (ii) human labeler training, (iii) human labeler evaluation, and (iv) final annotation review. The five authors of the paper are the participants in the experiments.

A brief overview of the four phases is as follows:

Initial multi-label annotation

Three labelers, A, B, and C, provided multi-label annotations for a subset of the ImageNet validation set and for all images from the ImageNetV2 test sets. This work gave A, B, and C extensive experience with the ImageNet dataset.

Human Labeler Training

Human Labeler Training

Human Labeler Evaluation

Class-balanced random samples are generated from both the ImageNet validation set and ImageNetV2. Five participants labeled these images over 28 days.
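Class-balanced sampling can be sketched as follows. This is only an illustration under stated assumptions: the function name `class_balanced_sample`, the `per_class` parameter, and the toy image ids are hypothetical, and the summary above does not state how many images per class were drawn.

```python
import random
from collections import defaultdict

def class_balanced_sample(image_labels, per_class, seed=0):
    """Draw the same number of images from every class.

    image_labels: dict mapping image id -> class label (hypothetical input format).
    per_class: number of images to draw per class (assumed, not from the paper).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for image_id, label in image_labels.items():
        by_class[label].append(image_id)

    sample = []
    for label in sorted(by_class):
        ids = sorted(by_class[label])
        # Guard against classes with fewer images than requested.
        sample.extend(rng.sample(ids, min(per_class, len(ids))))
    return sample

# Toy data: 3 classes with 4 images each.
toy_labels = {f"img_{c}_{i}": c for c in ("cat", "dog", "mug") for i in range(4)}
picked = class_balanced_sample(toy_labels, per_class=2)
picked_counts = {c: sum(toy_labels[p] == c for p in picked) for c in ("cat", "dog", "mug")}
```

Sampling per class rather than uniformly over all images keeps rare and common classes equally represented in the evaluation set.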

Final annotation Review

All labelers reviewed the additional annotations generated in the human labeler evaluation phase.

Multi-label annotations

Human Accuracy Measurement Process

Main Results

Other Observations

Difficult Images

The experiment also shed some light on images that are difficult to label. 10 images were misclassified by all of the human labelers: 1 image of a monkey and 9 of dogs. In addition, 27 images, 19 in object classes and 8 in organism classes, were misclassified by all 72 machine learning models in this experiment. Only 2 images were labeled incorrectly by all human labelers and all models, and both contained dogs. Researchers also noted that the images that are difficult for models are mostly images of objects, whereas those that are difficult for human labelers are exclusively images of animals.

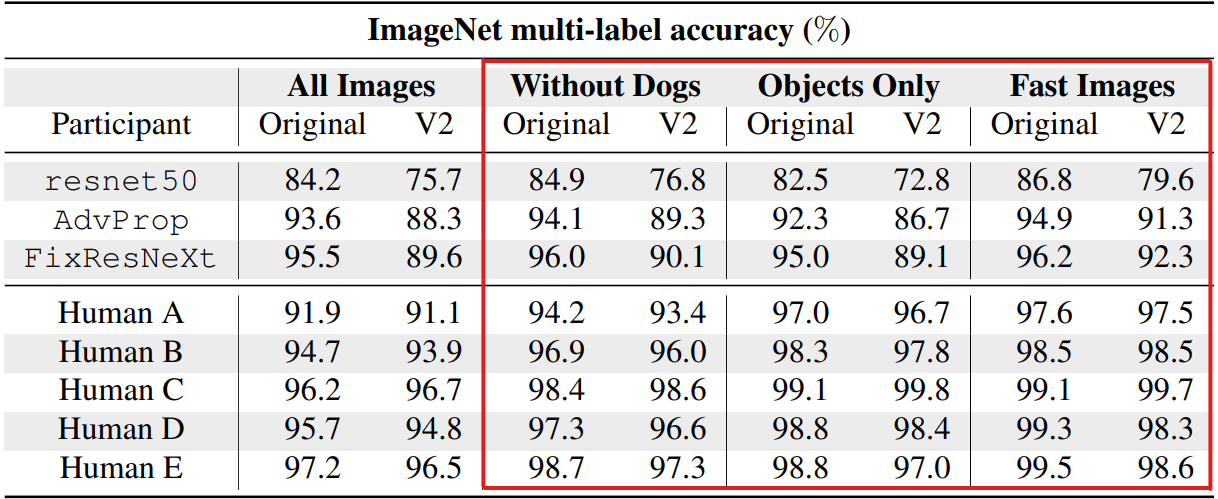

Accuracies without dogs

As previously discussed in the paper, machine learning models tend to outperform human labelers when classifying the 118 dog classes. To better understand the extent to which models outperform human labelers, researchers recomputed the accuracies with all dog classes excluded. For the best model, accuracy increased by 0.6% on the ImageNet images and by 1.1% on the ImageNet V2 images. In comparison, the mean increases in accuracy for human labelers were 1.9% and 1.8% on the ImageNet and ImageNet V2 images respectively. Researchers also conducted a simulation to demonstrate that the increase in human labeling accuracy on non-dog images is significant. The simulation used bootstrapping to estimate the changes in accuracy when only data for the non-dog classes are used, and the simulated increases were smaller than those observed in the experiment.

In conclusion, it's more difficult for human labelers to classify images with dogs than it is for machine learning models.
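The summary above does not describe the bootstrap procedure in detail, so the following is only a sketch of one plausible reading: a percentile bootstrap over images of the accuracy gain obtained by dropping dog images. The function names, the resample count, and the toy data are assumptions; the paper's actual simulation may differ.

```python
import numpy as np

def accuracy_gain_without_dogs(correct, is_dog):
    """Accuracy on non-dog images minus accuracy on all images."""
    correct = np.asarray(correct, dtype=float)
    is_dog = np.asarray(is_dog, dtype=bool)
    return float(correct[~is_dog].mean() - correct.mean())

def bootstrap_gain_interval(correct, is_dog, n_resamples=2000, seed=0):
    """Percentile bootstrap (95%) of the accuracy gain, resampling images with replacement."""
    rng = np.random.default_rng(seed)
    correct = np.asarray(correct, dtype=float)
    is_dog = np.asarray(is_dog, dtype=bool)
    n = len(correct)
    gains = []
    for _ in range(n_resamples):
        idx = rng.integers(0, n, size=n)
        if is_dog[idx].all():
            continue  # skip degenerate resamples that contain no non-dog image
        gains.append(accuracy_gain_without_dogs(correct[idx], is_dog[idx]))
    low, high = np.percentile(gains, [2.5, 97.5])
    return float(low), float(high)

# Hypothetical toy data: 8 non-dog images followed by 4 dog images (1 = labeled correctly).
correct = [1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
is_dog = [False] * 8 + [True] * 4

gain = accuracy_gain_without_dogs(correct, is_dog)
low, high = bootstrap_gain_interval(correct, is_dog)
```

A gain that stays well above what such resampling produces for arbitrary subsets would support the claim that the dog classes specifically, rather than chance, drive the difference.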

Accuracies on objects

Researchers also computed machine and human labelers' accuracies on a subset of the data containing only objects, as opposed to organisms, to better illustrate the differences in performance. This test involved 590 object classes. As shown in the table above, the mean accuracies of human labelers increase by 3.3% and 3.4% on the ImageNet and ImageNet V2 images respectively. In contrast, the accuracy of the best model decreases by 0.5% on both ImageNet and ImageNet V2. This indicates that human labelers are much better at classifying objects than these models are.

Accuracies on fast images

Unlike the CNN models, human labelers spent different amounts of time on different images, spanning from several seconds to 40 minutes. To further analyze the images that take human labelers less time to classify, researchers took a subset of images with median labeling time spent by human labelers of at most 60 seconds. These images were referred to as "fast images". There are 756 and 714 fast images from ImageNet and ImageNet V2 respectively, out of the total 2000 images used for evaluation. Accuracies of models and humans on the fast images increased significantly, especially for humans.

This result suggests that human labelers know when an image is difficult to label and would spend more time on it. It also shows that the models are more likely to correctly label images that human labelers can label relatively quickly.
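The selection of "fast images" described above can be sketched as a filter on the median labeling time per image. The input format (a dict from image id to the list of per-labeler times in seconds) and the names used here are assumptions for illustration only.

```python
from statistics import median

def fast_image_ids(times_by_image, threshold_seconds=60):
    """Return ids of images whose median labeling time is at most the threshold."""
    return sorted(
        image_id
        for image_id, times in times_by_image.items()
        if median(times) <= threshold_seconds
    )

# Hypothetical labeling times in seconds, one entry per labeler.
toy_times = {
    "a": [10, 50, 70],     # median 50   -> fast
    "b": [30, 90, 2400],   # median 90   -> not fast (one labeler took 40 minutes)
    "c": [60, 60],         # median 60   -> fast (threshold is inclusive)
}
fast = fast_image_ids(toy_times)
```

Using the median rather than the mean keeps a single very slow labeler from pushing an otherwise quickly labeled image out of the subset.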

Related Work

Human accuracy on ImageNet

Russakovsky et al. (2015) studied two trained human labelers' accuracies on 1500 and 258 images in the context of the ImageNet challenge. The top-5 accuracy of the labeler who labeled 1500 images was the well-known human baseline on ImageNet.

As introduced before, the researchers went further by using multi-label accuracy, using more labelers, and focusing on robustness to small distribution shifts. Although the researchers had some different findings, some results are consistent with those of Russakovsky et al. (2015). For example, both experiments indicated that it takes human labelers around one minute to label an image. The time distribution also has a long tail, due to the difficult images mentioned before.

Human performance in computer vision broadly

There are many recent studies of humans in the area of computer vision, such as work investigating human robustness to synthetic distribution change (Geirhos et al., 2017) and work studying which characteristics humans use to recognize objects (Geirhos et al., 2018). Other examples include adversarial examples constructed to fool both machines and time-limited humans (Elsayed et al., 2018) and an illustration of the effects of foreground and background objects on human and machine performance (Zhu et al., 2016).

Multi-label annotations

Stock & Cissé (2017) also studied ImageNet's multi-label nature, which aligns with the researchers' study in this paper. According to Stock & Cissé (2017), the top-1 accuracy measure could underestimate multi-label accuracy by up to 13.2%.

ImageNet inconsistencies and label error

Researchers found and recorded some incorrectly labeled images from ImageNet and ImageNet V2 during this study. An earlier study (Van Horn et al., 2015) also showed that at least 4% of the birds in ImageNet are misclassified. That work also noted that the inconsistent taxonomic structure in the bird classes could lead to weak class boundaries. Researchers noted that the majority of the fine-grained organism classes had similar taxonomic issues.

Distribution shift

There has been an increasing number of studies in this area. One focus is distributionally robust optimization (DRO), which finds the model that has the smallest worst-case expected error over a set of probability distributions. Another focus is on finding the model with the lowest error rates on adversarial examples. Work in both areas has been productive, but none has been shown to resolve the drop in accuracies between ImageNet and ImageNet V2.
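The DRO objective described above can be written compactly. The notation here is an assumption introduced for illustration and is not taken from the paper: $f_\theta$ is a model with parameters $\theta$, $\ell$ is a loss function, and $\mathcal{P}$ is the set of probability distributions considered.

$$
\hat{\theta} = \arg\min_{\theta} \; \sup_{Q \in \mathcal{P}} \; \mathbb{E}_{(x, y) \sim Q}\big[\ell(f_\theta(x), y)\big]
$$

The inner supremum picks the distribution in $\mathcal{P}$ on which the model performs worst, and the outer minimization chooses the parameters that make this worst case as small as possible. When $\mathcal{P}$ contains only the training distribution, the objective reduces to ordinary expected-risk minimization.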

Conclusion and Future Work

Conclusion

Researchers noted that in order to achieve truly reliable machine learning, people need a deeper understanding of which input changes models should be robust to. This study has provided valuable insights into the desired robustness properties by comparing model performance to human performance. The results have shown that current performance benchmarks do not address robustness to small and natural distribution shifts, which are easily handled by humans.

Future work

Beyond improving the robustness of models, researchers should consider investigating whether less-trained human labelers can achieve a similar level of robustness to distributional shifts. In addition, researchers can study robustness to temporal changes, which is another form of natural distribution shift (Gu et al., 2019; Shankar et al., 2019).

Critiques

- Table 1 simply shows a difference in ImageNet multi-label accuracy without giving an explicit reason why such a difference is present. Although the paper suggests that the distribution shift caused the difference, it does not consider other factors or explain concretely why the distribution shift was the cause.

- Among its recommendations for future machine evaluations, the paper proposes to "Report performances on dogs, other animals, and inanimate objects separately." Despite its intentions, this recommendation is narrowly specific and needs further generalization to be convincing.

- Regarding the choice of human subjects as labelers, no information is given on how they were chosen, nor is any background information about them provided. As the classification problem involves many classes, some as specific as individual species, a biology student would likely give far more accurate results than a computer science student or a sanitation worker.

- In explaining the importance of multi-label metrics by comparison with the Top-5 metric, the turtle example falls within the overall similarity (simony) classification of the multi-label evaluation metric. As such, if the Top-5 evaluation indicates that any one of the turtle species was selected, the algorithm is considered to have produced a correct prediction, which is the intention. The example therefore does not convey the necessity of changing from the Top-5 metric to the proposed metric.

- Given the paper's definition of multi-label metrics, it is hard to see how expanding the label set differs from the traditional Top-5 metric, or why it is necessary. The definition therefore does not support the claim that the proposed metric is necessary for rigorous accuracy evaluation on ImageNet.

- When discussing the main results, the paper addresses the hypothesis that distribution shift has no effect on human and machine model accuracies. The presentation is poor, with no clear focus on what the authors are trying to convey or on how, in detail, they arrived at their claims.