Speech2Face: Learning the Face Behind a Voice

| Line 53: | Line 53: | ||

[[File:FeatSim.JPG]] | [[File:FeatSim.JPG]] | ||

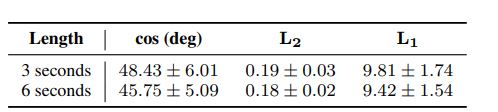

Another examination of the result is the similarity of features predicted by the Speech2Face model. The cosine, L1, and L2 distance between the facial feature vector produced by the model and true facial feature vector from the face decoder were computed, and presented above * * *. A comparison of facial similarity was also done based on the length of audio inputted. From the table, it is evident that the 6 second audio produced a lower cosine, L1, and L2, resulting in a facial feature vector that is closer to the ground truth. | Another examination of the result is the similarity of features predicted by the Speech2Face model. The cosine, L1, and L2 distance between the facial feature vector produced by the model and true facial feature vector from the face decoder were computed, and presented above * * *. A comparison of facial similarity was also done based on the length of audio inputted. From the table, it is evident that the 6 second audio produced a lower cosine, L1, and L2 distance, resulting in a facial feature vector that is closer to the ground truth. | ||

'''S2f -> Face retrieval performance''' | '''S2f -> Face retrieval performance''' | ||

Revision as of 17:46, 28 November 2020

Presented by

Ian Cheung, Russell Parco, Scholar Sun, Jacky Yao, Daniel Zhang

Introduction

This paper presents a deep neural network architecture called Speech2Face. The architecture uses millions of Internet/YouTube videos of people speaking to learn the correlation between a voice and the corresponding face. Having learned these correlations through a self-supervised procedure, the model can produce facial reconstructions that capture physical attributes such as a person's age, gender, or ethnicity. Namely, the model exploits the natural co-occurrence of faces and speech in videos and does not need to model the attributes explicitly. The evaluation numerically quantifies how closely the reconstructions produced by the Speech2Face model resemble the true face images of the respective speakers.

Previous Work

With visual and audio signals being so dominant and accessible in daily life, there has been great interest in how visual and audio perception interact with each other. Arandjelovic and Zisserman [1] leveraged the existing database of mp4 files to learn a generic audio representation for classifying whether a video frame and an audio clip correspond to each other. These learned audio-visual representations have been used in a variety of settings, including cross-modal retrieval, sound source localization, and sound source separation. This work also paved the way for studying the association between faces and voices specifically within computer vision. In particular, relating cross-modal signals extracted from faces and voices has been posed as a binary or multi-task classification problem, with some promising results. Studies have been able to identify the active speakers in a video, to predict lip motion from speech, and even to infer the emotion of a speaker from their voice.

Recently, several methods have been proposed that use audio signals to reconstruct visual information, where the subject being reconstructed is assumed to be known a priori. Notably, Duarte et al. [2] were able to synthesize the exact face image and expression of a speaker from speech using a GAN model. This paper instead aims to recover the dominant, generic facial structure from speech.

Motivation

Often, when people listen to a person speaking without seeing the speaker's face, whether on the phone or on the radio, they build a mental image of what they think that person looks like. There is a strong connection between speech and appearance, which is a direct result of the factors that affect speech, including age, gender, and facial bone structure. In addition, other voice-appearance correlations stem from the way in which people talk: language, accent, speed, pronunciation, etc. These properties of speech are often shared within nationalities and cultures, which can, in turn, translate to common physical features among similar voices. Concretely, from an input audio segment of a person speaking, the method reconstructs an image of the person's face in a canonical form (frontal-facing, neutral expression). The goal was to study to what extent one can infer how someone looks from the way they talk. Rather than predicting a recognizable image of the exact face, the authors were more interested in capturing the dominant facial features.

Model Architecture

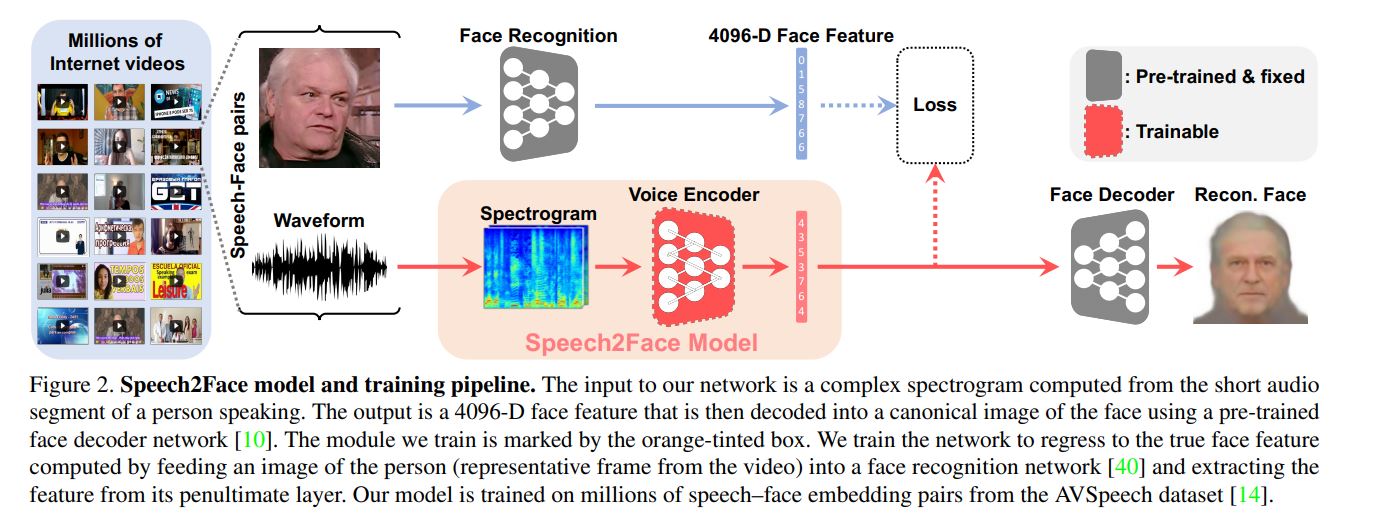

Speech2Face model and training pipeline

The Speech2Face model consists of two parts: a voice encoder, which takes a spectrogram of speech as input and outputs low-dimensional face features, and a face decoder, which takes face features as input and outputs a normalized image of a face (neutral expression, looking forward). The image above gives a visual representation of the pipeline of the entire model, from video input to a recognizable face. The face decoder itself was taken from previous work by Cole et al (cite) and will not be explored in great detail here. In essence, the FaceNet model (cite) is combined with a single multilayer perceptron layer, the result of which is passed through a convolutional neural network to determine the texture of the image and through a multilayer perceptron to determine the landmark locations. The two results are combined to form an image. The decoder was trained with VGG-Face features as input; it was trained separately and remained fixed during voice encoder training.

The variability in facial expressions, head positions, and lighting conditions of the face images creates a challenge for both the design and the training of the Speech2Face model. To avoid this problem, the model is trained to first regress to a low-dimensional intermediate representation of the face. The VGG-Face model, a face recognition model pretrained on a large-scale face database (cite), is used to extract a 4096-D face feature from the penultimate layer of the network.

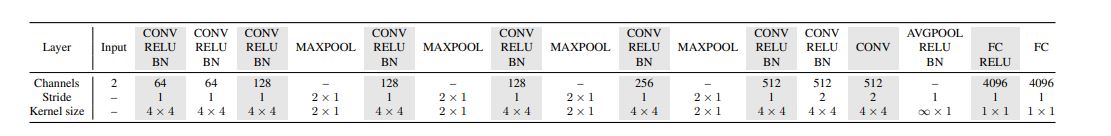

Voice Encoder Architecture

The voice encoder itself is a convolutional neural network that transforms the input spectrogram into pseudo face features. The exact architecture is given above. The model alternates between blocks of convolution, ReLU, and batch normalization layers and max-pooling layers. In each max-pooling layer, pooling is done only along the temporal dimension of the data. This ensures that the frequency information, an important factor in determining vocal characteristics such as tone, is preserved. In the final pooling layer, average pooling is applied along the temporal dimension. This lets the model aggregate information over time and makes it usable for input speech of varying length. Two fully connected layers at the end return a 4096-dimensional facial feature output.
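The effect of pooling only along time can be illustrated with a small sketch. This is not the authors' implementation: the function names `max_pool_time` and `avg_pool_time`, the window width of 2, and the toy spectrograms are all assumptions made for illustration, and the convolution layers are omitted entirely. The point is only that the frequency axis keeps its size while clips of different duration collapse to feature vectors of the same length.

```python
from typing import List

# Toy spectrogram layout (an assumption): x[frequency_bin][time_step]
Spectrogram = List[List[float]]


def max_pool_time(x: Spectrogram, width: int = 2) -> Spectrogram:
    """Max-pool every frequency row along time only; the number of rows is unchanged."""
    pooled = []
    for row in x:
        # Non-overlapping windows; a shorter trailing window is kept as-is.
        pooled.append([max(row[t:t + width]) for t in range(0, len(row), width)])
    return pooled


def avg_pool_time(x: Spectrogram) -> List[float]:
    """Average every frequency row over all time steps: one value per frequency bin."""
    return [sum(row) / len(row) for row in x]


# Two clips with the same 2 frequency bins but different durations.
short_clip = [[1.0, 3.0, 2.0, 4.0],
              [0.0, 1.0, 0.0, 1.0]]
long_clip = [[1.0, 3.0, 2.0, 4.0, 5.0, 1.0],
             [0.0, 1.0, 0.0, 1.0, 2.0, 2.0]]

short_features = avg_pool_time(max_pool_time(short_clip))
long_features = avg_pool_time(max_pool_time(long_clip))

# Both outputs have one entry per frequency bin, regardless of clip length.
print(len(short_features), len(long_features))
```

Because the final average runs over however many time steps remain, the fully connected layers that follow always see an input of fixed size.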

Training

The AVSpeech dataset, a large-scale audio-visual dataset, is used for training. AVSpeech comprises millions of video segments from YouTube featuring over 100,000 different people. The training data is composed of educational videos and is not an accurate representation of the global population, which affects what the model learns. Also note that facial features that are irrelevant to speech, like hair colour, may be predicted by the model. From each video, a 224x224 pixel image of the face was passed through the VGG-Face model to compute a facial feature vector. Combined with a spectrogram of the audio, this yielded a training set of 1.7 million entries and a test set of 0.15 million entries.

The voice encoder is trained in a self-supervised manner. A frame containing the face is extracted from each video and fed to the VGG-Face model to extract [math]\displaystyle{ v_f }[/math], the 4096-dimensional facial feature vector for that single frame. This provides the supervision signal for the voice encoder. The voice encoder's output [math]\displaystyle{ v_s }[/math], also a 4096-dimensional facial feature vector, is trained to predict [math]\displaystyle{ v_f }[/math].

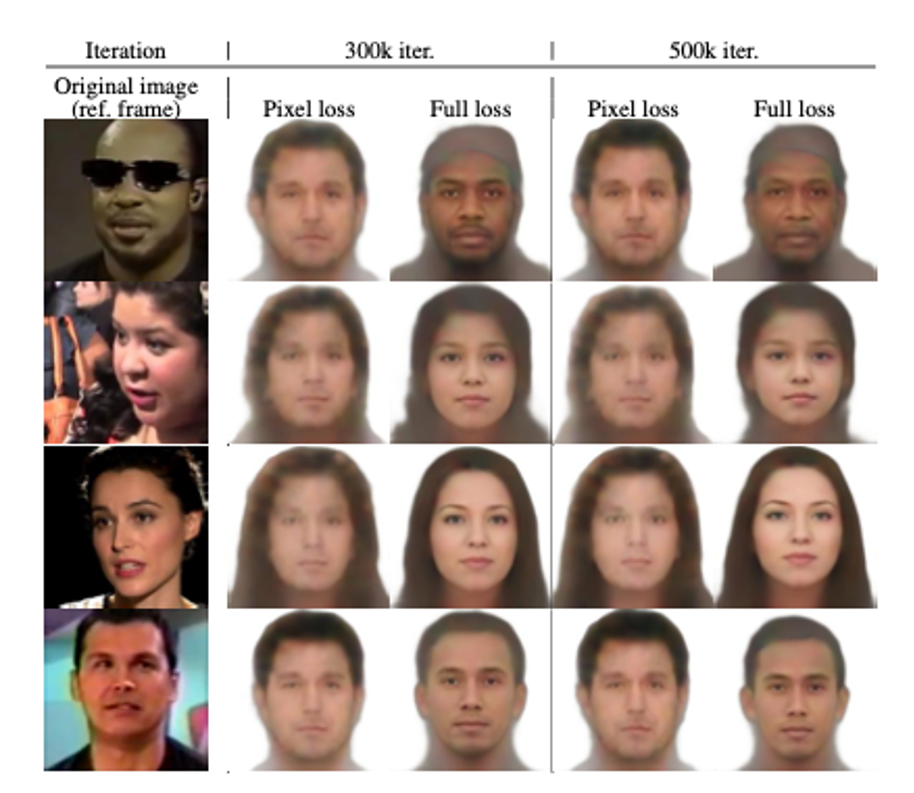

In order to train this model, a proper loss function must be defined. The L1 norm of the difference between [math]\displaystyle{ v_s }[/math] and [math]\displaystyle{ v_f }[/math], given by [math]\displaystyle{ ||v_f - v_s||_1 }[/math], may seem like a suitable loss function, but in practice it leads to unstable results and long training times. The accompanying image compares the facial features predicted when training with [math]\displaystyle{ ||v_f - v_s||_1 }[/math] and with the loss described next. Based on the work of Castrejon et al. (cite), the loss additionally penalizes differences in the activations of the last layer of the VGG-Face network, [math]\displaystyle{ f_{VGG} }[/math], and of the first layer of the face decoder, [math]\displaystyle{ f_{dec} }[/math]. The final loss function is given by:

$$L_{total} = ||f_{dec}(v_f) - f_{dec}(v_s)|| + \lambda_1\left|\left|\frac{v_f}{||v_f||} - \frac{v_s}{||v_s||}\right|\right|^2_2 + \lambda_2 L_{distill}(f_{VGG}(v_f), f_{VGG}(v_s))$$

In addition to the decoder term, this loss penalizes both the normalized Euclidean distance between the two facial feature vectors and the knowledge distillation loss, which is given by:

$$L_{distill}(a,b) = -\sum_i p_{(i)}(a)\log p_{(i)}(b)$$

$$p_{(i)}(a) = \frac{\exp(a_i/T)}{\sum_j \exp(a_j/T)}$$

Knowledge distillation is used as an alternative to cross-entropy. By recommendation of Cole et al (cite), [math]\displaystyle{ T = 2 }[/math] was used to ensure a smooth activation. [math]\displaystyle{ \lambda_1 = 0.025 }[/math] and [math]\displaystyle{ \lambda_2 = 200 }[/math] were chosen so that the magnitudes of the gradients of each term with respect to [math]\displaystyle{ v_s }[/math] are of similar scale at the [math]\displaystyle{ 1000^{th} }[/math] iteration.
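To make the three terms of the loss concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions rather than the authors' code: the helper names (`softmax_t`, `distill_loss`, `normalized_sq_l2`, `total_loss`) are hypothetical, `f_dec` and `f_vgg` are passed in as arbitrary callables standing in for the real network layers, and the first term is computed with an L1 norm because the formula above leaves the norm unspecified.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def softmax_t(a: Vector, T: float = 2.0) -> List[float]:
    """Temperature softmax p_(i)(a); subtracting max(a) only improves numerical stability."""
    m = max(a)
    exps = [math.exp((x - m) / T) for x in a]
    total = sum(exps)
    return [e / total for e in exps]


def distill_loss(a: Vector, b: Vector, T: float = 2.0) -> float:
    """L_distill(a, b) = -sum_i p_(i)(a) * log p_(i)(b)."""
    p_a, p_b = softmax_t(a, T), softmax_t(b, T)
    return -sum(pa * math.log(pb) for pa, pb in zip(p_a, p_b))


def normalized_sq_l2(v_f: Vector, v_s: Vector) -> float:
    """Squared Euclidean distance between the unit-normalized vectors."""
    n_f = math.sqrt(sum(x * x for x in v_f))
    n_s = math.sqrt(sum(x * x for x in v_s))
    return sum((f / n_f - s / n_s) ** 2 for f, s in zip(v_f, v_s))


def total_loss(v_f: Vector, v_s: Vector,
               f_dec: Callable[[Vector], Vector],
               f_vgg: Callable[[Vector], Vector],
               lam1: float = 0.025, lam2: float = 200.0, T: float = 2.0) -> float:
    # ASSUMPTION: L1 norm for the decoder-activation term.
    dec_term = sum(abs(x - y) for x, y in zip(f_dec(v_f), f_dec(v_s)))
    return (dec_term
            + lam1 * normalized_sq_l2(v_f, v_s)
            + lam2 * distill_loss(f_vgg(v_f), f_vgg(v_s), T))


# Toy usage with identity functions standing in for the network layers.
identity = lambda v: list(v)
v_f = [1.0, 2.0, 3.0]
v_s = [1.5, 1.5, 2.5]
print(total_loss(v_f, v_s, identity, identity))
```

Note that the distillation term is a cross-entropy between two softened distributions, so it does not vanish when the two inputs are equal; it reaches its minimum there, which equals the entropy of the softened distribution.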

Results

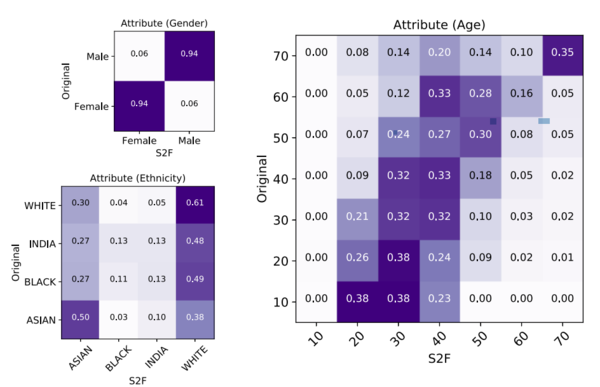

Confusion Matrix and Dataset statistics

To determine the similarity between the generated images and the ground truth, a commercial service known as Face++, which classifies faces by distinct attributes (such as gender, ethnicity, etc.), was used. The image gives confusion matrices for gender, ethnicity, and age. Examining these matrices shows that the Speech2Face model performs very well on gender, misclassifying only 0.12% of the time. Similarly, the model performs fairly well on ethnicity, especially for white or Asian faces. The model performs worse on black and Indian faces, which can be attributed to the heavily unbalanced data: 50% of the data represented a white face, and 80% represented a white or Asian face.

Feature Similarity

Another way to examine the results is through the similarity of the features predicted by the Speech2Face model. The cosine, L1, and L2 distances between the facial feature vector produced by the model and the true facial feature vector were computed and are presented in the table. Facial similarity was also compared across the length of the input audio. From the table, it is evident that the 6 second audio produced lower cosine, L1, and L2 distances, that is, a facial feature vector closer to the ground truth.
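The three distances mentioned above can be sketched as follows. The function names are hypothetical, and the cosine distance is taken here to be one minus the cosine similarity, which is a common convention but an assumption about how the reported numbers were computed.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def l1_distance(u: Vector, v: Vector) -> float:
    """Sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(u, v))


def l2_distance(u: Vector, v: Vector) -> float:
    """Euclidean distance."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_distance(u: Vector, v: Vector) -> float:
    """ASSUMPTION: defined as 1 - cosine similarity, so 0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


# Toy stand-ins for a predicted feature vector v_s and a true feature vector v_f.
v_s = [1.0, 2.0]
v_f = [4.0, 6.0]
print(l1_distance(v_s, v_f), l2_distance(v_s, v_f), cosine_distance(v_s, v_f))
```

For all three measures a smaller value means the predicted features lie closer to the true ones, which is why lower numbers for the 6 second audio indicate a better match.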

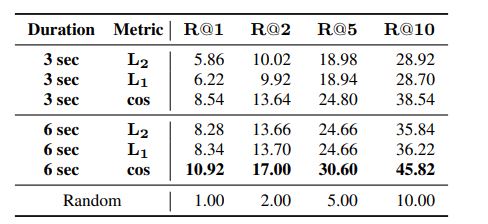

S2f -> Face retrieval performance

The performance of the model was also examined on how well its output could be used to retrieve the original image. The metric used is R@K, also known as retrieval performance by recall at K: the K images closest in distance to the output of the model are found, and the R@K score is the chance that the original image is among those K images. A higher R@K score indicates better performance. From the table, both the 3 second and 6 second audio showed significant improvement over random chance, with the 6 second audio performing slightly better.
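The R@K computation described above can be sketched as below. This is a toy illustration: the function name `recall_at_k`, the choice of Euclidean distance for ranking, and the tiny 2-D "gallery" are assumptions, not details taken from the paper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def l2(u: Vector, v: Vector) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def recall_at_k(queries: List[Vector], gallery: List[Vector],
                true_index: List[int], k: int) -> float:
    """Fraction of queries whose true gallery item is among the k nearest items."""
    hits = 0
    for query, target in zip(queries, true_index):
        # Rank all gallery items by distance to the query (ASSUMPTION: L2 distance).
        ranked = sorted(range(len(gallery)), key=lambda i: l2(query, gallery[i]))
        if target in ranked[:k]:
            hits += 1
    return hits / len(queries)


# Gallery of true face features and predicted features for three speakers.
gallery = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
queries = [[0.1, 0.0], [0.8, 0.9], [4.0, 4.0]]
true_index = [0, 1, 3]

print(recall_at_k(queries, gallery, true_index, k=1))
print(recall_at_k(queries, gallery, true_index, k=2))
```

In this toy case the second query ranks its true item second, so it is missed at K = 1 but found at K = 2. For comparison, ranking a gallery of N items uniformly at random gives an expected R@K of K/N, which is the random-chance baseline.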

Conclusion

The report presented a novel study of face reconstruction from audio recordings of a person speaking. The model was shown to predict plausible face reconstructions with facial features similar to those in real images of the person speaking. The problem was addressed by learning to align the feature space of speech with that of a pretrained face decoder. The model was trained on millions of videos of people speaking from YouTube. It was then evaluated by comparing the reconstructed faces with the true face images of the speakers. The authors believe that facial reconstruction allows a more comprehensive view of voice-face correlation than predicting individual features, which may lead to new research opportunities and applications.

Discussion and Critiques

- There is evidence that the results of the model may be heavily influenced by external factors. The authors' method of sampling random YouTube videos resulted in a sample that is unbalanced in terms of ethnicity: over half of the samples were white. There was also a large bias towards white in the model's predictions of ethnicity. This bias suggests that the model may be overfitting the training data and calls into question how the model would perform when trained and tested on a balanced dataset. The model was also shown to infer different facial features based on language, which raises the question of how heavily the model depends on the spoken language. Testing on a more controlled sample, where all speech recordings are in the same language, may help determine the model's reliance on spoken language. The evaluation of the results is also highly dependent on the Face++ classifiers. Since the authors evaluate their model by running the Face++ classifiers on the original images and on the reconstructions and comparing age, gender, and ethnicity, the measured quality of the model can only be as reliable as the classifier used to evaluate it. Therefore, any limitations of the Face++ classifiers may become limitations of the Speech2Face evaluation.

References

[1] R. Arandjelovic and A. Zisserman. Look, listen and learn. In IEEE International Conference on Computer Vision (ICCV), 2017.

[2] A. Duarte, F. Roldan, M. Tubau, J. Escur, S. Pascual, A. Salvador, E. Mohedano, K. McGuinness, J. Torres, and X. Giro-i-Nieto. Wav2Pix: speech-conditioned face generation using generative adversarial networks. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2019.