CRITICAL ANALYSIS OF SELF-SUPERVISION

Revision as of 20:31, 26 November 2020

Presented by

Maral Rasoolijaberi

Introduction

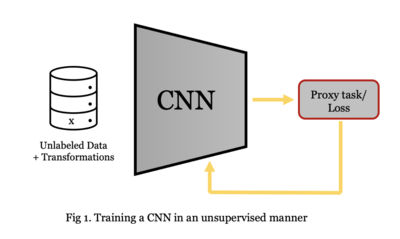

This paper evaluates state-of-the-art unsupervised (self-supervised) methods for learning the weights of convolutional neural networks (CNNs), asking whether current self-supervision techniques can learn deep features from only one image. The main goal of self-supervised learning is to exploit vast amounts of unlabeled data to train CNNs and find a generalized image representation. In self-supervised learning, the data generate their own ground-truth labels through pretext tasks such as rotation estimation. For example, suppose we have a picture of a cat without the label "cat". We rotate the image by 90 degrees clockwise and train the CNN to predict the rotation that was applied.
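The rotation pretext task above can be illustrated with a minimal sketch. It is not the authors' code: the function name `make_rotation_batch` and the toy 4x4 array are assumptions made for illustration, and only rotations by multiples of 90 degrees are considered.

```python
import numpy as np

def make_rotation_batch(image):
    """Return four rotated copies of `image` and their pretext labels.

    Label k means the image was rotated by k * 90 degrees counterclockwise
    (the direction np.rot90 uses), so label 3 corresponds to the
    90-degree clockwise rotation mentioned in the text.
    """
    rotations = [np.rot90(image, k=k, axes=(0, 1)) for k in range(4)]
    labels = np.arange(4)
    return rotations, labels

# A hypothetical 4x4 RGB array stands in for the unlabeled cat photo.
image = np.arange(4 * 4 * 3, dtype=np.float32).reshape(4, 4, 3)
rotations, labels = make_rotation_batch(image)
```

The labels come for free from the transformation itself, which is what makes the task self-supervised: a classifier trained on `(rotations, labels)` never sees a human annotation.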

Previous Work

In recent literature, several papers have addressed unsupervised learning methods and learning from a single sample.

A BiGAN [2], or Bidirectional GAN, is a generative adversarial network plus an encoder. The generator maps latent samples to generated data, and the encoder performs the inverse mapping, from data back to the latent space. After training, the encoder provides a rich image representation. In the RotNet method [3], images are rotated and the CNN learns to predict the rotation that was applied. DeepCluster [4] alternates between clustering the CNN features with k-means and training the network to predict the resulting cluster assignments as pseudo-labels, which yields feature representations that are stable under several image transformations.

Method

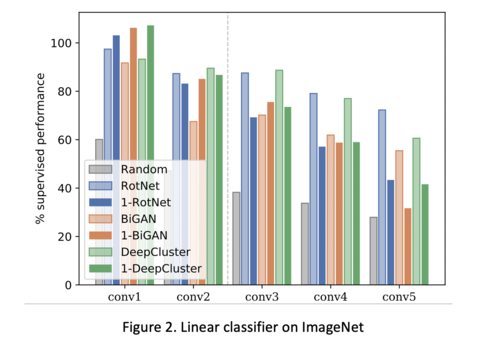

In self-supervised methods, a hypothesis function must be defined without target labels. Let [math]\displaystyle{ x }[/math] be a sample from the unlabeled dataset. The weights of the CNN are learned so as to minimize [math]\displaystyle{ ||h(x)-x|| }[/math], where [math]\displaystyle{ h(x) }[/math] is the hypothesis function defined by the chosen method, e.g. BiGAN, RotNet or DeepCluster. The paper uses AlexNet as the CNN, together with various data augmentation methods including cropping, rotation, scaling, contrast changes, and adding noise. To measure the quality of the features, the authors train a linear classifier on top of each convolutional layer of AlexNet to test whether the features are linearly separable. In general, the main purpose of a CNN is to reach a linearly separable representation of images. They then compare training on a million images from the ImageNet dataset with training on a million augmented images generated from a single image.
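To make the single-image setup concrete, here is a rough sketch of how one source image might be expanded into many training samples. The function `augment`, the crop size, the contrast range and the noise level are all assumed values chosen for illustration, not the paper's settings. Scaling is omitted for brevity, and a random array stands in for the real image.

```python
import numpy as np

def augment(image, rng, crop_size=32):
    """Produce one augmented crop from a single (H, W, C) image in [0, 1]."""
    h, w, _ = image.shape
    # Random crop location.
    top = rng.integers(0, h - crop_size + 1)
    left = rng.integers(0, w - crop_size + 1)
    crop = image[top:top + crop_size, left:left + crop_size]
    # Random rotation by a multiple of 90 degrees (square crop keeps its shape).
    crop = np.rot90(crop, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Random contrast change around the crop mean.
    factor = rng.uniform(0.5, 1.5)
    mean = crop.mean()
    crop = (crop - mean) * factor + mean
    # Additive Gaussian noise.
    crop = crop + rng.normal(0.0, 0.02, size=crop.shape)
    return np.clip(crop, 0.0, 1.0)

rng = np.random.default_rng(0)
source = rng.random((128, 128, 3))  # placeholder for the one real image
dataset = np.stack([augment(source, rng) for _ in range(1000)])
```

Here 1000 samples are drawn to keep the example fast; the experiment described above uses a million augmented images.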

Results

Figure 2 shows how linearly separable the representations at each layer are. As can be seen, training the CNN with self-supervision methods can match the performance of fully supervised learning in the first two convolutional layers. Note that only a single image with massive augmentation is used in this experiment.
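The linear-probe evaluation described in the Method section can be sketched on toy data as follows. Everything here is an assumption for illustration: a fixed random projection with ReLU stands in for a frozen AlexNet layer, two Gaussian blobs stand in for image classes, and a least-squares fit onto one-hot targets replaces the linear classifier the authors actually train.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy two-class inputs standing in for images.
n_per_class, dim = 200, 8
x = np.concatenate([rng.normal(-2.0, 1.0, (n_per_class, dim)),
                    rng.normal(+2.0, 1.0, (n_per_class, dim))])
y = np.concatenate([np.zeros(n_per_class, dtype=int),
                    np.ones(n_per_class, dtype=int)])

# Frozen "layer": fixed random weights plus ReLU, never updated below.
w_frozen = rng.normal(size=(dim, 32))

def frozen_features(inputs):
    return np.maximum(inputs @ w_frozen, 0.0)

def add_bias(features):
    return np.hstack([features, np.ones((len(features), 1))])

# Shuffle, then hold out a quarter of the samples for evaluation.
perm = rng.permutation(len(x))
train, test = perm[:300], perm[300:]

# Linear probe: only these weights are fitted, on top of the frozen features.
targets = np.eye(2)[y[train]]
probe, *_ = np.linalg.lstsq(add_bias(frozen_features(x[train])), targets, rcond=None)

pred = (add_bias(frozen_features(x[test])) @ probe).argmax(axis=1)
accuracy = float((pred == y[test]).mean())
```

Because the feature extractor is frozen, the held-out `accuracy` reflects only how linearly separable its outputs are, which is the quantity compared across layers and training methods.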

Conclusion

This paper showed that, if strong data augmentation is employed, as little as a single image is sufficient for self-supervision techniques to learn the first few layers of popular CNNs. The results suggest that the weights of the first layers of deep networks contain limited information about natural images. Accordingly, for these early layers, current unsupervised learning relies mostly on augmentation and does not yet exploit the capacity of millions of images.

References

[1] Y. Asano, C. Rupprecht, and A. Vedaldi, “A critical analysis of self-supervision, or what we can learn from a single image,” in International Conference on Learning Representations, 2019.

[2] J. Donahue, P. Krähenbühl, and T. Darrell, “Adversarial feature learning,” arXiv preprint arXiv:1605.09782, 2016.

[3] S. Gidaris, P. Singh, and N. Komodakis, “Unsupervised representation learning by predicting image rotations,” arXiv preprint arXiv:1803.07728, 2018.

[4] M. Caron, P. Bojanowski, A. Joulin, and M. Douze, “Deep clustering for unsupervised learning of visual features,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 132–149.