User:Cvmustat

Revision as of 00:36, 14 November 2020

Combine Convolution with Recurrent Networks for Text Classification

Team Members: Bushra Haque, Hayden Jones, Michael Leung, Cristian Mustatea

Date: Week of Nov 23

Introduction

Text classification is the task of assigning predefined categories to natural language texts. It is a fundamental task in Natural Language Processing (NLP), with applications such as sentiment analysis and topic classification. A classic example: given a set of news articles, can we classify the genre or subject of each article? Text classification is useful because text data is a rich source of information, but extracting insights from it directly can be difficult and time consuming, since most text data is unstructured (Grimes, 2008). NLP text classification can help structure and analyze text automatically, quickly, and cost-effectively, making it easier for people to extract important features from text.

In practice, pre-trained word embeddings and deep neural networks are used together for NLP text classification. Word embeddings map the raw text to a latent space in which the semantic relationships between words are preserved, so words with similar meanings have similar representations. These embeddings can then be fed into deep neural networks that learn different features of the text. Convolutional neural networks (CNNs) can be used to determine the semantic composition of the text (its meaning), as they capture both local and position-invariant features. Alternatively, recurrent neural networks (RNNs) can be used to determine the contextual meaning of each word (how the words relate to one another) by treating the text as sequential data and processing it one word at a time. Previous attempts to combine the two networks and incorporate the advantages of both arrange them in a pipeline (streamlining them), which may decrease the performance of each. In addition, most methods that use a bi-directional RNN concatenate the forward and backward hidden states at each time step, which yields a vector that carries no information about the interaction between the forward and backward hidden states. The hidden state in one direction contains only the context from that direction, whereas a word's contextual representation is more accurate when it is gathered from both directions. Failing to consider a word's context in both directions can lose the word's true meaning, especially for polysemous words (words with more than one meaning), which are context sensitive.

Paper Key Contributions

This paper proposes an enhanced method of text classification based on a new way of combining convolutional and recurrent neural networks that adds a neural tensor layer. The proposed method preserves each network's strengths, which are usually lost in previous combination methods. The new architecture, called CRNN, runs a CNN and an RNN in parallel on the same input sentence. The CNN produces a 2D matrix that captures the importance of each word based on local and position-invariant features. The bi-directional RNN produces a matrix containing each word's contextual representation, i.e., the word's meaning in relation to the rest of the sentence. A neural tensor layer is introduced on top of the RNN to fuse the bi-directional contextual information surrounding each word. The architecture combines these two matrix representations to classify the text, and also exposes the importance of each word for the prediction, which helps with interpreting the results.

CRNN Results vs Benchmarks

CRNN Model Architecture

RNN Pipeline:

The goal of the RNN pipeline is to take each word in a text, capture the contextual information surrounding it, and compute the word's contextual representation. This is accomplished with a bi-directional RNN whose forward and backward results are combined by a Neural Tensor Layer (NTL) to obtain the final output. RNNs are well suited to NLP tasks because they process sequential data, such as ordered text, one element at a time.
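The summary does not spell out how the Neural Tensor Layer fuses the two directions. The sketch below is a minimal NumPy illustration under an assumption: it borrows the form of the neural tensor network of Socher et al. (a bilinear tensor term plus a linear term, followed by tanh), which may differ from the paper's exact parameterization. The function name `neural_tensor_layer` and the parameters `T`, `V`, `b` are hypothetical. It also assumes, consistent with the 3m-wide rows used when the pipelines are merged, that each word's final representation concatenates its forward state, its backward state, and the m-dimensional fused vector.

```python
import numpy as np

def neural_tensor_layer(h_fwd, h_bwd, T, V, b):
    """Fuse one word's forward and backward hidden states (hypothetical parameterization).

    h_fwd, h_bwd: (m,) forward / backward recurrent states for the same word
    T: (m, m, m) tensor; slice T[k] is an m x m bilinear form
    V: (m, 2m) weights for the ordinary linear term
    b: (m,) bias
    """
    # Bilinear term: entry k is h_fwd^T T[k] h_bwd, so every output unit
    # multiplies forward and backward features together (an interaction).
    bilinear = np.einsum("i,kij,j->k", h_fwd, T, h_bwd)
    # Linear term on the plain concatenation, as in a standard dense layer.
    linear = V @ np.concatenate([h_fwd, h_bwd])
    return np.tanh(bilinear + linear + b)

rng = np.random.default_rng(0)
m = 4
h_f, h_b = rng.normal(size=m), rng.normal(size=m)
T = rng.normal(scale=0.1, size=(m, m, m))
V = rng.normal(scale=0.1, size=(m, 2 * m))
b = np.zeros(m)

fused = neural_tensor_layer(h_f, h_b, T, V, b)
# Assumed row of H for this word: [forward; backward; fused], width 3m.
row = np.concatenate([h_f, h_b, fused])
print(row.shape)  # (12,)
```

Unlike plain concatenation, the bilinear term makes each fused unit depend on products of forward and backward features, which is the interaction information the introduction says concatenation lacks.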

An RNN is similar to a feed-forward neural network, but it maintains a hidden state that carries information between time steps. At time step [math]\displaystyle{ t }[/math], the recurrent layer takes the current input [math]\displaystyle{ x_t }[/math] and the previous hidden state [math]\displaystyle{ h_{t-1} }[/math] and produces two outputs: [math]\displaystyle{ \hat{y}_{t} }[/math] and [math]\displaystyle{ h_t }[/math]. The new hidden state [math]\displaystyle{ h_t }[/math] is then fed back into the layer to compute [math]\displaystyle{ \hat{y}_{t+1} }[/math] and [math]\displaystyle{ h_{t+1} }[/math].
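To make the recurrence concrete, here is a minimal sketch of a vanilla (Elman-style) RNN step in NumPy. The tanh hidden update, the softmax output, and the names `rnn_step`, `W`, `U`, `V`, `b_h`, `b_y` are illustrative assumptions, not the paper's model, which uses the GRU variant described next.

```python
import numpy as np

def rnn_step(x_t, h_prev, W, U, V, b_h, b_y):
    """One step of a vanilla RNN (illustrative only): returns (y_hat_t, h_t)."""
    h_t = np.tanh(W @ x_t + U @ h_prev + b_h)   # new hidden state
    logits = V @ h_t + b_y
    y_hat_t = np.exp(logits - logits.max())
    y_hat_t /= y_hat_t.sum()                    # softmax output at step t
    return y_hat_t, h_t

rng = np.random.default_rng(0)
d, m, c, n = 3, 5, 2, 4        # input dim, hidden dim, output classes, sequence length
W = rng.normal(scale=0.1, size=(m, d))
U = rng.normal(scale=0.1, size=(m, m))
V = rng.normal(scale=0.1, size=(c, m))
b_h, b_y = np.zeros(m), np.zeros(c)

h = np.zeros(m)                # h_0
xs = rng.normal(size=(n, d))   # stand-in input vectors x_1 .. x_n
outputs = []
for x_t in xs:
    y_hat, h = rnn_step(x_t, h, W, U, V, b_h, b_y)   # h_t is fed back in at step t+1
    outputs.append(y_hat)
```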

The pipeline actually uses a variant of the RNN called the GRU (Gated Recurrent Unit). GRUs mitigate the vanishing gradient problem, which makes it hard for a plain RNN to remember words that appeared early in the sequence. In practice, traditional RNNs retain mostly the most recent words in a sequence, which is a problem because words near the beginning that matter for the classification may be forgotten. A GRU addresses this by controlling the flow of information through the network with update and reset gates.

Let [math]\displaystyle{ h_{t-1} \in \mathbb{R}^m, x_t \in \mathbb{R}^d }[/math] be the inputs, and let [math]\displaystyle{ \mathbf{W}_z, \mathbf{W}_r, \mathbf{W}_h \in \mathbb{R}^{m \times d}, \mathbf{U}_z, \mathbf{U}_r, \mathbf{U}_h \in \mathbb{R}^{m \times m} }[/math] be trainable weight matrices. Then the following equations describe the update and reset gates:

[math]\displaystyle{ \begin{aligned} z_t &= \sigma(\mathbf{W}_z x_t + \mathbf{U}_z h_{t-1}) && \text{(update gate)} \\ r_t &= \sigma(\mathbf{W}_r x_t + \mathbf{U}_r h_{t-1}) && \text{(reset gate)} \\ \tilde{h}_t &= \tanh(\mathbf{W}_h x_t + r_t \circ (\mathbf{U}_h h_{t-1})) && \text{(new memory)} \\ h_t &= (1 - z_t) \circ \tilde{h}_t + z_t \circ h_{t-1} && \text{(hidden state)} \end{aligned} }[/math]

Here [math]\displaystyle{ \sigma }[/math] and [math]\displaystyle{ \tanh }[/math] are applied element-wise, and [math]\displaystyle{ \circ }[/math] denotes the element-wise (Hadamard) product. The equations above do the following:

- [math]\displaystyle{ h_{t-1} }[/math] carries information from the previous iteration and [math]\displaystyle{ x_t }[/math] is the current input

- the update gate [math]\displaystyle{ z_t }[/math] controls how much past information should be forwarded to the next hidden state

- the reset gate [math]\displaystyle{ r_t }[/math] controls how much past information is forgotten or reset

- new memory [math]\displaystyle{ \tilde{h}_t }[/math] contains the relevant past memory as instructed by [math]\displaystyle{ r_t }[/math] and current information from the input [math]\displaystyle{ x_t }[/math]

- then [math]\displaystyle{ z_t }[/math] is used to control what is passed on from [math]\displaystyle{ h_{t-1} }[/math] and [math]\displaystyle{ (1-z_t) }[/math] controls the new memory that is passed on

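Running these equations once from the first word to the last, and once from the last word to the first, yields the forward and backward hidden states [math]\displaystyle{ h_t }[/math] of the bi-directional RNN at every position.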
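The GRU equations and the bi-directional pass can be sketched in NumPy as follows. This is a minimal illustration, assuming zero initial states and, like the equations above, no bias terms; `gru_step`, `init_params`, and `bi_gru` are hypothetical names, and the random initialization is only for demonstration.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, p):
    """One GRU step following the update/reset/new-memory equations (no biases)."""
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev)              # update gate
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev)              # reset gate
    h_tilde = np.tanh(p["W_h"] @ x_t + r_t * (p["U_h"] @ h_prev))  # new memory
    return (1 - z_t) * h_tilde + z_t * h_prev                      # hidden state

def init_params(d, m, rng):
    """Random W_* (m x d) and U_* (m x m) matrices for gates z, r, h."""
    p = {}
    for g in "zrh":
        p["W_" + g] = rng.normal(scale=0.1, size=(m, d))
        p["U_" + g] = rng.normal(scale=0.1, size=(m, m))
    return p

def bi_gru(X, p_fwd, p_bwd):
    """Run one GRU left-to-right and another right-to-left over X of shape (n, d)."""
    n = X.shape[0]
    m = p_fwd["U_z"].shape[0]
    H_f, H_b = np.zeros((n, m)), np.zeros((n, m))
    h = np.zeros(m)
    for t in range(n):                 # forward direction
        h = gru_step(X[t], h, p_fwd)
        H_f[t] = h
    h = np.zeros(m)
    for t in reversed(range(n)):       # backward direction
        h = gru_step(X[t], h, p_bwd)
        H_b[t] = h
    return H_f, H_b

rng = np.random.default_rng(0)
n, d, m = 6, 8, 4
X = rng.normal(size=(n, d))            # stand-in word vectors
p_f, p_b = init_params(d, m, rng), init_params(d, m, rng)
H_f, H_b = bi_gru(X, p_f, p_b)
print(H_f.shape, H_b.shape)  # (6, 4) (6, 4)
```

Because the two directions use separate parameters and separate passes, row t of `H_f` summarizes words 1..t while row t of `H_b` summarizes words t..n.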

CNN Pipeline:

The goal of the CNN pipeline is to learn the relative importance of the words in an input sequence with respect to different aspects. The pipeline can be summarized in the following steps:

- Each word in the sequence is converted into a word vector using the word2vec algorithm; stacking these vectors gives the matrix X.

- The word vectors are then convolved along the temporal dimension with filters of various sizes (i.e., different values of K) and learnable weights, to capture K-gram representations. These K-gram representations are stored in the matrix C.

- The convolution makes these features local and position-invariant. Local means the K words are contiguous. Position-invariant means a K-gram pattern is detected wherever it occurs in the sequence, because the same filter is applied at every position.

- Temporal dimension example: convolve words 1 to K, then words 2 to K+1, and so on.

- Since not all K-gram representations are equally meaningful, there is a learnable matrix W which takes the linear combination of K-gram representations to more heavily weigh the more important K-gram representations for the classification task.

- Each linear combination of the K-gram representations gives the relative word importance based on the aspect that the linear combination encodes.

- The relative word importance for each aspect gives rise to an interpretable attention matrix A, where each element gives the relative importance of a specific word for a specific aspect.
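A minimal NumPy sketch of the CNN pipeline follows. Several details are assumptions not stated in the summary: zero padding so that C has one row per word, a ReLU after each convolution, and a softmax over words (so each column of A sums to 1) to turn the linear combinations C W into relative importances. Random vectors stand in for word2vec embeddings, and the names `kgram_features` and `attention_matrix` are hypothetical.

```python
import numpy as np

def softmax(a, axis):
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def kgram_features(X, filters):
    """Convolve word vectors along the temporal (word) axis.

    X: (n, d) word vectors. filters: list of (K, d, f) weight arrays, one per size K.
    Assumed zero padding keeps one output row per word, so C is (n, total filters).
    """
    n, d = X.shape
    columns = []
    for F in filters:
        K = F.shape[0]
        pad_left = (K - 1) // 2
        Xp = np.vstack([np.zeros((pad_left, d)), X, np.zeros((K - 1 - pad_left, d))])
        # Output row t applies the same filter to the K contiguous padded rows t..t+K-1.
        out = np.stack([np.einsum("kd,kdf->f", Xp[t:t + K], F) for t in range(n)])
        columns.append(np.maximum(out, 0.0))   # ReLU non-linearity (assumption)
    return np.concatenate(columns, axis=1)

def attention_matrix(C, W):
    """Aspect-wise linear combinations of K-gram features, normalized over words: (n, z)."""
    return softmax(C @ W, axis=0)

rng = np.random.default_rng(0)
n, d, f, z = 7, 10, 3, 4                  # words, embedding dim, filters per size, aspects
X = rng.normal(size=(n, d))               # stand-in for word2vec vectors
filters = [rng.normal(scale=0.1, size=(K, d, f)) for K in (2, 3, 4)]
C = kgram_features(X, filters)            # (7, 9)
W = rng.normal(scale=0.1, size=(C.shape[1], z))
A = attention_matrix(C, W)                # (7, 4)
```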

Merging RNN & CNN Pipeline Outputs

The outputs of the RNN and CNN pipelines are merged by simply multiplying their output matrices. With H the n-by-3m matrix produced by the RNN pipeline (one row per word), we compute [math]\displaystyle{ S=A^TH }[/math], which has shape [math]\displaystyle{ z \times 3m }[/math]; each row of S is a linear combination of the hidden states, weighted by one aspect's attention values. Concatenating the rows of S gives a vector in [math]\displaystyle{ \mathbb{R}^{3zm} }[/math], which is passed to a fully connected softmax layer that outputs a vector of class probabilities.
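The merging step is just a matrix product and a reshape. The sketch below uses random stand-ins for A and H and a hypothetical softmax classifier (`W_out`, `b_out`); only the shapes follow the text.

```python
import numpy as np

rng = np.random.default_rng(0)
n, z, m, num_classes = 7, 4, 5, 3

A = rng.random((n, z))
A /= A.sum(axis=0, keepdims=True)     # stand-in attention matrix (n x z)
H = rng.normal(size=(n, 3 * m))       # stand-in RNN-pipeline output (n x 3m)

S = A.T @ H                           # (z x 3m): row j is an A[:, j]-weighted sum of H's rows
s = S.reshape(-1)                     # concatenate the rows -> vector in R^{3zm}

W_out = rng.normal(scale=0.1, size=(num_classes, s.size))   # hypothetical classifier weights
b_out = np.zeros(num_classes)
logits = W_out @ s + b_out
probs = np.exp(logits - logits.max())
probs /= probs.sum()                  # softmax: class probabilities
```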

To train the model, we make the following decisions:

- Use cross-entropy loss as the loss function

- Perform dropout on random columns in matrix C in the CNN pipeline

- Perform L2 regularization on all parameters

- Use stochastic gradient descent with a learning rate of 0.001
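The training choices above can be illustrated on the final softmax layer alone. This is a sketch under stated assumptions: column dropout on C uses inverted dropout with a hypothetical rate of 0.5, the L2 penalty strength `lam` and the target class `y` are hypothetical, and the learning rate of 0.001 comes from the text. In the full model the gradients would also flow back into the CNN and RNN parameters, which this sketch omits; `column_dropout` and `loss_and_grad` are hypothetical names.

```python
import numpy as np

def column_dropout(C, rate, rng):
    """Zero whole columns of C with probability `rate`, rescaling kept columns (inverted dropout)."""
    keep = rng.random(C.shape[1]) >= rate
    return C * keep / (1.0 - rate)

def loss_and_grad(W, b, s, y, lam):
    """Cross-entropy plus L2 penalty for a softmax layer on feature vector s with true class y."""
    logits = W @ s + b
    p = np.exp(logits - logits.max())
    p /= p.sum()
    loss = -np.log(p[y]) + 0.5 * lam * (np.sum(W ** 2) + np.sum(b ** 2))
    delta = p.copy()
    delta[y] -= 1.0                        # d(cross-entropy)/d(logits) for softmax outputs
    grad_W = np.outer(delta, s) + lam * W
    grad_b = delta + lam * b
    return loss, grad_W, grad_b

rng = np.random.default_rng(0)
C = rng.normal(size=(7, 9))
C_train = column_dropout(C, rate=0.5, rng=rng)   # applied during training only

s = rng.normal(size=60)                # flattened S for one example
W = rng.normal(scale=0.1, size=(3, 60))
b = np.zeros(3)
lr, lam, y = 0.001, 1e-4, 2

loss_before, gW, gb = loss_and_grad(W, b, s, y, lam)
W -= lr * gW                           # one stochastic gradient descent step
b -= lr * gb
loss_after, _, _ = loss_and_grad(W, b, s, y, lam)
```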

Interpreting Learned CRNN Weights

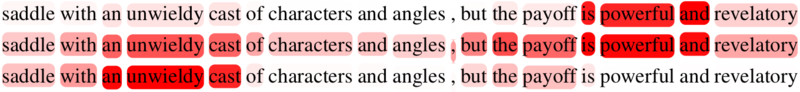

Recall that the attention matrix A stores the relative importance of every word in the input sequence for every aspect. A is therefore an n-by-z matrix, where n is the number of words in the input sequence and z is the number of aspects considered in the classification task.

Furthermore, for a specific aspect (a column of A), words with higher attention values are more important than the other words in the same input sequence. Conversely, for a specific word (a row of A), the aspects with higher attention values are those under which that word is most important.
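Reading A in both directions amounts to ranking along its columns and taking maxima along its rows. The sketch below uses a small hand-made attention matrix for a hypothetical seven-word review with two hypothetical aspects labeled "positive" and "negative"; the values are invented for illustration, not taken from the paper.

```python
import numpy as np

words = ["the", "acting", "was", "superb", "but", "plot", "dull"]   # hypothetical sentence
aspects = ["positive", "negative"]                                   # hypothetical aspect labels

# Hand-made n x z attention matrix (n = 7 words, z = 2 aspects); each column sums to 1.
A = np.array([
    [0.02, 0.03],
    [0.20, 0.05],
    [0.03, 0.02],
    [0.55, 0.04],
    [0.04, 0.16],
    [0.10, 0.15],
    [0.06, 0.55],
])

# Per aspect (column): the two words with the highest attention values.
top_words = {name: [words[i] for i in np.argsort(-A[:, j])[:2]]
             for j, name in enumerate(aspects)}

# Per word (row): the aspect under which the word receives the most attention.
preferred_aspect = {w: aspects[j] for w, j in zip(words, A.argmax(axis=1))}

print(top_words)  # {'positive': ['superb', 'acting'], 'negative': ['dull', 'but']}
```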

For example, the paper samples a sentence from the Movie Reviews dataset and visualizes the transpose of the attention matrix A. Each word corresponds to an element of A, the intensity of red represents the magnitude of its attention value, and each row of the visualization (a copy of the sentence) shows the relative importance of every word for one aspect. In the first row the words are weighted in terms of a positive aspect, in the last row in terms of a negative aspect, and in the middle row in terms of both positive and negative aspects. Notice how the relative importance of the words depends on the aspect.

Conclusion & Summary

References

S. Grimes, “Unstructured data and the 80 percent rule,” Breakthrough Analysis, Aug. 1, 2008. breakthroughanalysis.com/2008/08/01/unstructured-data-and-the-80-percent-rule/.

N. Kalchbrenner, E. Grefenstette, and P. Blunsom, “A convolutional neural network for modelling sentences,” arXiv preprint arXiv:1404.2188, 2014.

S. Lai, L. Xu, K. Liu, and J. Zhao, “Recurrent convolutional neural networks for text classification,” in Proceedings of AAAI, 2015, pp. 2267–2273.