what game are we playing: Difference between revisions


Revision as of 20:52, 13 November 2020

Authors

Yuxin Wang, Evan Peters, Yifan Mou, Sangeeth Kalaichanthiran

Introduction

Quantal response in Normal form games

Learning Extensive form games

The normal form representation for games where players have _many_ choices quickly becomes intractable. For example, consider a chess game: on the first turn, player 1 has 20 possible moves and player 2 then has 20 possible responses. If each player is estimated to have ~30 possible moves on every subsequent turn and a typical game lasts 40 moves per player, the total number of strategies is roughly [math]\displaystyle{ 10^{120} }[/math] per player (this is known as the Shannon number for game-tree complexity of chess), and so the payoff matrix for a typical game of chess must have [math]\displaystyle{ O(10^{240}) }[/math] entries.
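As a rough sanity check on the order of magnitude, the move counts quoted above can be multiplied out directly. The sketch below counts move sequences (paths through the game tree) using only the figures from the paragraph; the variable names are illustrative. It gives roughly [math]\displaystyle{ 10^{118} }[/math], within a couple of orders of magnitude of the Shannon number.

```python
import math

# Move counts taken from the paragraph above (rough estimates, not exact chess facts).
first_turn_moves = 20    # options for each player on the first turn
later_moves = 30         # estimated options per player on every later turn
moves_per_player = 40    # length of a typical game, per player

# log10 of the number of distinct move sequences:
# 20 * 20 for the first turn, then 30 options for each of the remaining
# 39 moves of each player.
log10_paths = (2 * math.log10(first_turn_moves)
               + 2 * (moves_per_player - 1) * math.log10(later_moves))

print(f"about 10^{log10_paths:.0f} move sequences")
```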

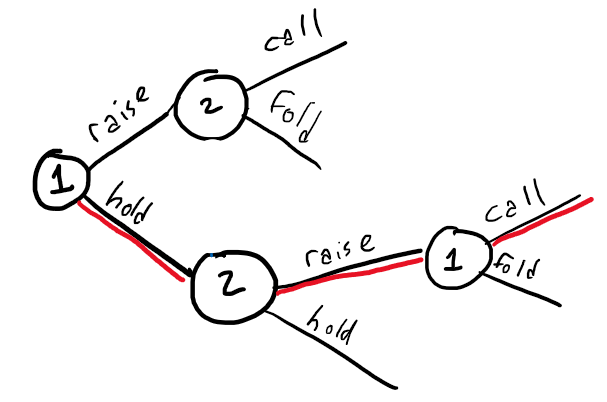

Instead, it is much more useful to represent the game graphically (in "extensive form"). We also need to consider games with incomplete information, in which players do not necessarily have access to the full state of the game. An example is one-card poker: (1) each player draws a single card from a 13-card deck (ignoring suits); (2) player 1 decides whether to raise or hold; (3) player 2 decides whether to call or raise; (4) player 1 must either call or fold if player 2 raised. From this description, player 1 has [math]\displaystyle{ 2^{13} }[/math] possible first-move strategies (one raise/hold choice for each of the 13 cards) and [math]\displaystyle{ 2^{13} }[/math] possible second-move strategies (one call/fold choice for each card, whenever player 1 gets a second move), for a total of [math]\displaystyle{ 2^{26} }[/math] possible strategies. In addition, player 1 never knows what card player 2 holds, and vice versa. So instead of representing the game with a huge payoff matrix, we can represent it as a simple decision tree (for a single drawn card of player 1):

where player 1 is represented by "1", a node that has two branches corresponding to the allowed moves of player 1. However, there must also be a notion of the information available to either player: while this tree might correspond to, say, player 1 holding a "9", it contains no information on what card player 2 is holding. This leads to the definition of an information set: the set of all nodes belonging to a single player for which the other player cannot distinguish which node has been reached. The information set may therefore be treated as a node itself, for which actions stemming from the node must be chosen in ignorance of what the other player did immediately before arriving at the node. In the poker example, the full game tree consists of 13 repetitions of the tree shown above, and an example of an information set for player 1 is the set of all nodes owned by player 2 that immediately follow player 1's decision to hold. In other words, if player 1 holds there are 13 possible nodes describing the responses of player 2 (raise/hold for player 2 having card = ace, 2, ..., king), and all 13 of these nodes are indistinguishable to player 1, and so form an information set for player 1.

Let [math]\displaystyle{ \mathcal{I}_i }[/math] be the set of all information sets for player i, and for each [math]\displaystyle{ t \in \mathcal{I}_i }[/math] let [math]\displaystyle{ \sigma_t }[/math] be the sequence of actions taken by player i to arrive at [math]\displaystyle{ t }[/math] and [math]\displaystyle{ C_t }[/math] be the actions that player i can take from [math]\displaystyle{ t }[/math]. Then the set of all possible sequences that can be taken by player i is given by

$$ S_i = \{\emptyset \} \cup \{ \sigma_t c \mid t\in \mathcal{I}_i, c \in C_t \} $$

So for one-card poker we would have [math]\displaystyle{ S_1 = \{\emptyset, \text{raise}, \text{hold}, \text{hold-call}, \text{hold-fold}\} }[/math]. From the possible sequences follow two important concepts:
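The construction of [math]\displaystyle{ S_i }[/math] can be sketched in a few lines. The dictionary encoding of information sets below (a label mapped to the pair [math]\displaystyle{ (\sigma_t, C_t) }[/math]) and the function name `sequences` are assumptions made for illustration, not notation from the paper.

```python
# Hypothetical encoding of player 1's information sets for a single drawn card:
# each label maps to (sigma_t, C_t), i.e. the player's own actions leading to t
# and the actions available at t.
info_sets_p1 = {
    "first move": ((), ("raise", "hold")),
    "after hold, facing a raise": (("hold",), ("call", "fold")),
}

def sequences(info_sets):
    """Return S_i = {empty} ∪ {sigma_t c : t in I_i, c in C_t} as a list of tuples."""
    seqs = [()]  # the empty sequence is always included
    for sigma_t, actions in info_sets.values():
        for c in actions:
            seqs.append(sigma_t + (c,))  # extend sigma_t by one action c
    return seqs

S1 = sequences(info_sets_p1)
for s in S1:
    print("-".join(s) if s else "(empty)")
```

Each sequence is stored as a tuple of the player's own actions, so the empty tuple plays the role of [math]\displaystyle{ \emptyset }[/math].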

- The EFG payoff matrix [math]\displaystyle{ P }[/math] is of size [math]\displaystyle{ |S_1| \times |S_2| }[/math] (one row per sequence of player 1 and one column per sequence of player 2), is populated with rewards from each leaf of the tree (or "zero" for each [math]\displaystyle{ (s_1, s_2) }[/math] that is an invalid pair), and the expected payoff for realization plans [math]\displaystyle{ (u, v) }[/math] is given by [math]\displaystyle{ u^T P v }[/math]

- A realization plan [math]\displaystyle{ u \in \mathbb{R}^{|S_1|} }[/math] for player 1 ([math]\displaystyle{ v \in \mathbb{R}^{|S_2|} }[/math] for player 2) describes the probabilities with which a player carries out each possible sequence, and each realization plan must be constrained by (i) compatibility of sequences (e.g. "raise" is not compatible with "hold-call") and (ii) information sets available to the player. These constraints are linear: $$ Eu = e \\ Fv = f $$ where [math]\displaystyle{ e = f = (1, 0, \ldots, 0)^T }[/math] and [math]\displaystyle{ E, F }[/math] contain entries in [math]\displaystyle{ \{-1, 0, 1\} }[/math] describing compatibility and information sets.
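To make the linear constraints concrete, here is one way the matrix [math]\displaystyle{ E }[/math] could look for player 1's sequences in the single-card tree, ordered as (empty, raise, hold, hold-call, hold-fold). The row layout and the helper `realization_plan` are assumptions for illustration; the check is simply that a plan built from per-decision probabilities satisfies [math]\displaystyle{ Eu = e }[/math].

```python
import numpy as np

# Sequence order: [empty, raise, hold, hold-call, hold-fold]
E = np.array([
    [ 1, 0,  0, 0, 0],   # the empty sequence is realized with probability 1
    [-1, 1,  1, 0, 0],   # u_raise + u_hold = u_empty
    [ 0, 0, -1, 1, 1],   # u_hold-call + u_hold-fold = u_hold
])
e = np.array([1.0, 0.0, 0.0])

def realization_plan(p_raise, p_call):
    """Build u from per-decision probabilities (hypothetical helper)."""
    p_hold = 1.0 - p_raise
    return np.array([
        1.0,                        # empty sequence
        p_raise,                    # raise
        p_hold,                     # hold
        p_hold * p_call,            # hold, then call
        p_hold * (1.0 - p_call),    # hold, then fold
    ])

u = realization_plan(p_raise=0.3, p_call=0.4)
residual = E @ u - e
print("constraint residual:", residual)
```

Each row after the first says that the probabilities of the sequences leaving an information set sum to the probability of the sequence that reaches it.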

The paper's main contribution is to develop a minmax problem for extensive form games:

$$

\min_u \max_v u^T P v + \sum_{t\in \mathcal{I}_1} \sum_{c \in C_t} u_c \log \frac{u_c}{u_{p_t}} - \sum_{t\in \mathcal{I}_2} \sum_{c \in C_t} v_c \log \frac{v_c}{v_{p_t}}

$$

where [math]\displaystyle{ p_t }[/math] is the action immediately preceding information set [math]\displaystyle{ t }[/math]. Intuitively, each sum resembles a cross entropy over the distribution of probabilities in the realization plan, comparing the probability of proceeding from an information set to the probability of arriving at that information set. Importantly, these entropy terms are strictly convex for player 1 and strictly concave for player 2, so the minmax problem has a unique solution, and the objective function is continuously differentiable, so it can be optimized with derivative-based methods such as Newton's method. As noted in Theorem 1 of [1], the solution to this problem is equivalently a solution for the QRE of the game in reduced normal form.
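The objective can be evaluated directly once each information set is described by the index of its preceding sequence [math]\displaystyle{ p_t }[/math] and the indices of the sequences it leads to. The sketch below uses that encoding, which is an assumption for illustration, along with a placeholder payoff matrix and a hypothetical single information set for player 2. It requires strictly positive realization plans, since the logarithm is undefined at zero.

```python
import numpy as np

def entropy_term(x, info_sets):
    """Sum over information sets t and actions c of x_c * log(x_c / x_{p_t}).

    info_sets is a list of (parent_index, child_indices) pairs indexing into x.
    """
    total = 0.0
    for parent, children in info_sets:
        xc = x[list(children)]
        total += np.sum(xc * np.log(xc / x[parent]))
    return total

def objective(u, v, P, info_sets_1, info_sets_2):
    """u^T P v plus player 1's entropy term minus player 2's entropy term."""
    return u @ P @ v + entropy_term(u, info_sets_1) - entropy_term(v, info_sets_2)

# Player 1: sequences [empty, raise, hold, hold-call, hold-fold]
I1 = [(0, (1, 2)), (2, (3, 4))]
# Player 2 (hypothetical): sequences [empty, a, b] with one information set
I2 = [(0, (1, 2))]

u = np.array([1.0, 0.5, 0.5, 0.25, 0.25])   # uniform choice at every decision
v = np.array([1.0, 0.5, 0.5])
P = np.zeros((5, 3))                         # placeholder payoffs, not from the paper

value = objective(u, v, P, I1, I2)
print("objective value:", value)
```

With uniform choices, each information set contributes minus the probability of reaching it times the log of its number of actions, which is a convenient check on the implementation.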

Having decided on a cost function, the method of Lagrange multipliers may be used to construct the Lagrangian that encodes the known constraints ([math]\displaystyle{ Eu = e \,, Fv = f }[/math], and [math]\displaystyle{ u, v \geq 0 }[/math]), and the Lagrangian can then be optimized using Newton's method. Accounting for the constraints, the Lagrangian becomes

$$

\mathcal{L} = g(u, v) + \sum_i \mu_i(Eu - e)_i + \sum_i \nu_i (Fv - f)_i

$$

where [math]\displaystyle{ g }[/math] is the argument from the minmax statement above and [math]\displaystyle{ u, v \geq 0 }[/math] become KKT conditions. The general update rule for Newton's method may be written in terms of the derivatives of [math]\displaystyle{ \mathcal{L} }[/math] with respect to primal variables [math]\displaystyle{ u, v }[/math] and dual variables [math]\displaystyle{ \mu, \nu }[/math], yielding:

$$ \nabla_{u,v,\mu,\nu}^2 \mathcal{L} \cdot (\Delta u, \Delta v, \Delta \mu, \Delta \nu)^T= - \nabla_{u,v,\mu,\nu} \mathcal{L} $$

where [math]\displaystyle{ \nabla_{u,v,\mu,\nu}^2 \mathcal{L} }[/math] is the Hessian of the Lagrangian and [math]\displaystyle{ \nabla_{u,v,\mu,\nu} \mathcal{L} }[/math] is simply a column vector of the KKT stationarity conditions. Combined with the previous section, this completes the goal of the paper: to construct a differentiable problem for learning normal form and extensive form games.
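The Newton update can be illustrated on the simplest special case, where each player has a single information set. The realization plans then reduce to mixed strategies, [math]\displaystyle{ E }[/math] and [math]\displaystyle{ F }[/math] reduce to rows of ones, and [math]\displaystyle{ g(u, v) = u^T P v + \sum_c u_c \log u_c - \sum_c v_c \log v_c }[/math]. This is a minimal sketch under those assumptions and not the paper's implementation: the function names are illustrative, and positivity of [math]\displaystyle{ u, v }[/math] is kept by a simple backtracking step rather than by explicit multipliers.

```python
import numpy as np

def kkt_residual(P, u, v, mu, nu):
    """Gradient of the Lagrangian w.r.t. (u, v, mu, nu), stacked as one vector."""
    return np.concatenate([
        P @ v + np.log(u) + 1.0 + mu,      # dL/du
        P.T @ u - np.log(v) - 1.0 + nu,    # dL/dv
        [u.sum() - 1.0],                   # dL/dmu (constraint sum(u) = 1)
        [v.sum() - 1.0],                   # dL/dnu (constraint sum(v) = 1)
    ])

def kkt_hessian(P, u, v):
    """Hessian of the Lagrangian w.r.t. (u, v, mu, nu)."""
    n, m = P.shape
    H = np.zeros((n + m + 2, n + m + 2))
    H[:n, :n] = np.diag(1.0 / u)
    H[:n, n:n + m] = P
    H[n:n + m, :n] = P.T
    H[n:n + m, n:n + m] = -np.diag(1.0 / v)
    H[:n, n + m] = H[n + m, :n] = 1.0
    H[n:n + m, n + m + 1] = H[n + m + 1, n:n + m] = 1.0
    return H

def solve_qre(P, tol=1e-10, max_iter=50):
    """Damped Newton iteration on the KKT stationarity conditions."""
    n, m = P.shape
    u, v, mu, nu = np.full(n, 1.0 / n), np.full(m, 1.0 / m), 0.0, 0.0
    for _ in range(max_iter):
        F = kkt_residual(P, u, v, mu, nu)
        if np.linalg.norm(F) < tol:
            break
        step = np.linalg.solve(kkt_hessian(P, u, v), -F)
        du, dv = step[:n], step[n:n + m]
        dmu, dnu = step[n + m], step[n + m + 1]
        alpha = 1.0
        while alpha > 1e-12:
            # Backtrack until u, v stay positive and the residual shrinks.
            u_new, v_new = u + alpha * du, v + alpha * dv
            mu_new, nu_new = mu + alpha * dmu, nu + alpha * dnu
            if u_new.min() > 0 and v_new.min() > 0:
                F_new = kkt_residual(P, u_new, v_new, mu_new, nu_new)
                if np.linalg.norm(F_new) < np.linalg.norm(F):
                    break
            alpha *= 0.5
        u, v, mu, nu = u_new, v_new, mu_new, nu_new
    return u, v, mu, nu

# Example payoff matrix (hypothetical values chosen for illustration).
P_example = np.array([[2.0, -1.0], [-1.0, 1.0]])
u_star, v_star, mu_star, nu_star = solve_qre(P_example)
print("u =", u_star, "v =", v_star)
```

Because the forward solve is just a sequence of linear solves on a differentiable system, the same Hessian can be reused when differentiating the solution with respect to [math]\displaystyle{ P }[/math], which is what makes the problem suitable for learning.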