Memory-Based Parameter Adaptation

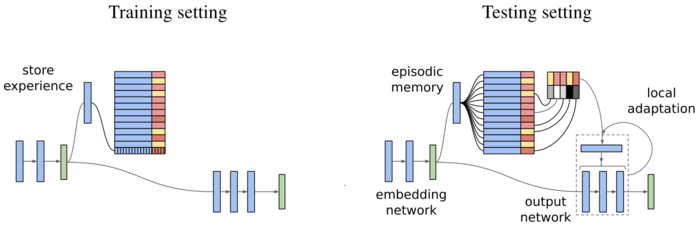

Revision as of 19:09, 9 November 2018

This is a summary of the paper Memory-based Parameter Adaptation by Sprechmann et al. [1].

The paper generalizes several approaches from language modelling that use memory-based adaptation to overcome shortcomings of neural networks, including catastrophic forgetting. Catastrophic forgetting occurs when a neural network performs poorly on old tasks after being trained to perform well on a new task. The paper also presents experimental results in which the model is applied to continual and incremental learning tasks.

Presented by

- J.Walton

- J.Schneider

- Z.Abbas

- A.Na

Introduction

Memory-based parameter adaptation (MbPA) is based on the theory of complementary learning systems, which states that intelligent agents must possess two learning systems: one that allows the gradual acquisition of knowledge and another that allows rapid learning of the specifics of individual experiences [2]. Similarly, MbPA consists of two components: a parametric component and a non-parametric component. The parametric component is a standard neural network, which learns slowly (low learning rates) but generalizes well. The non-parametric component, on the other hand, is a neural network augmented with an episodic memory that stores previous experiences and allows local adaptation of the weights of the parametric component. The parametric and non-parametric components therefore serve different purposes during the training and testing phases.

Model Architecture

Training Phase

The model consists of three components: an embedding network [math]\displaystyle{ f_{\gamma} }[/math], a memory [math]\displaystyle{ M }[/math] and an output network [math]\displaystyle{ g_{\theta} }[/math]. For our purposes, the embedding network and the output network can be thought of as standard feedforward neural networks with parameters (weights) [math]\displaystyle{ \gamma }[/math] and [math]\displaystyle{ \theta }[/math], respectively. The memory [math]\displaystyle{ M }[/math] stores “experiences” as key-value pairs [math]\displaystyle{ \{(h_{i},v_{i})\} }[/math], where the keys [math]\displaystyle{ h_{i} }[/math] are the outputs of the embedding network [math]\displaystyle{ f_{\gamma}(x_{i}) }[/math] and the values [math]\displaystyle{ v_{i} }[/math], in the context of classification, are simply the true class labels [math]\displaystyle{ y_{i} }[/math]. Thus, for a given input [math]\displaystyle{ x_{j} }[/math] with label [math]\displaystyle{ y_{j} }[/math], the stored key and value are

[math]\displaystyle{ f_{\gamma}(x_{j}) \rightarrow h_{j}, }[/math]

[math]\displaystyle{ y_{j} \rightarrow v_{j}. }[/math]

Note that the memory has a fixed size; thus when it is full, the oldest data is discarded first.
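The fixed-size, oldest-first behaviour described above can be sketched as a circular buffer. This is a minimal illustration, assuming NumPy arrays for the keys and integer class labels for the values; the class name `EpisodicMemory` and its methods are hypothetical and are not taken from the paper.

```python
import numpy as np


class EpisodicMemory:
    """Fixed-size key/value store; once full, the oldest entry is overwritten."""

    def __init__(self, capacity, key_dim):
        self.capacity = capacity
        self.keys = np.zeros((capacity, key_dim))    # h_i = f_gamma(x_i)
        self.values = np.zeros(capacity, dtype=int)  # v_i = y_i
        self.next_slot = 0  # index of the next write (the oldest entry once full)
        self.size = 0       # number of valid entries

    def write(self, keys, values):
        """Store a batch of (key, value) pairs, evicting the oldest when full."""
        for h, v in zip(keys, values):
            self.keys[self.next_slot] = h
            self.values[self.next_slot] = v
            self.next_slot = (self.next_slot + 1) % self.capacity
            self.size = min(self.size + 1, self.capacity)

    def contents(self):
        """Return the valid keys and values (in slot order, not insertion order)."""
        return self.keys[:self.size], self.values[:self.size]
```

Writing four pairs into a memory of capacity three, for example, overwrites the first pair with the fourth, so only the three most recent pairs remain.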

During training, the authors randomly sample a set of [math]\displaystyle{ b }[/math] training examples (i.e. a mini-batch of size [math]\displaystyle{ b }[/math]), say [math]\displaystyle{ \{(x_{b},y_{b})\}_{b} }[/math], from the training data and pass them through the embedding network [math]\displaystyle{ f_{\gamma} }[/math], followed by the output network [math]\displaystyle{ g_{\theta} }[/math]. The parameters of the embedding and output networks are updated by maximizing the likelihood of the target values (equivalently, minimizing the negative log-likelihood loss)

[math]\displaystyle{ p(y|x,\gamma,\theta)=g_{\theta}(f_{\gamma}(x)). }[/math]

The last layer of the output network [math]\displaystyle{ g_{\theta} }[/math] is a softmax layer, so the output can be interpreted as a probability distribution over classes. This is standard training by backpropagation with mini-batch gradient descent. Finally, the embedded samples [math]\displaystyle{ \{(f_{\gamma}(x_{b}),y_{b})\}_{b} }[/math] are stored in the memory. No local adaptation takes place during this phase.
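The training phase described above can be sketched end to end on toy data. This is only an illustration under stated assumptions, not the authors' implementation: the embedding network is a single tanh layer, the output network is a single softmax layer, the data are two synthetic Gaussian blobs, and the memory is a `collections.deque` with a maximum length. All names (`train_step`, `nll`, the layer sizes, the learning rate) are hypothetical.

```python
from collections import deque

import numpy as np

rng = np.random.default_rng(0)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(gamma, theta, x):
    h = np.tanh(x @ gamma)   # embedding f_gamma(x)
    p = softmax(h @ theta)   # g_theta(h) = p(y | x, gamma, theta)
    return h, p


def nll(gamma, theta, x, y):
    """Mean negative log-likelihood of the labels y."""
    _, p = forward(gamma, theta, x)
    return -np.log(p[np.arange(len(y)), y]).mean()


def train_step(gamma, theta, x, y, lr=0.1):
    """One mini-batch gradient step; returns the embeddings used as memory keys."""
    b = x.shape[0]
    h, p = forward(gamma, theta, x)
    dlogits = p.copy()
    dlogits[np.arange(b), y] -= 1.0          # gradient of the NLL w.r.t. the logits
    dlogits /= b
    dtheta = h.T @ dlogits
    dh = dlogits @ theta.T
    dgamma = x.T @ (dh * (1.0 - h ** 2))     # backpropagate through tanh
    gamma -= lr * dgamma                     # in-place parameter updates
    theta -= lr * dtheta
    return h


# Toy data (assumption): two well-separated 2-D Gaussian blobs, one per class.
n = 500
x_data = np.vstack([rng.normal(-2.0, 1.0, (n, 2)), rng.normal(2.0, 1.0, (n, 2))])
y_data = np.concatenate([np.zeros(n, dtype=int), np.ones(n, dtype=int)])

gamma = 0.1 * rng.normal(size=(2, 4))   # embedding weights
theta = 0.1 * rng.normal(size=(4, 2))   # output weights
memory = deque(maxlen=1000)             # fixed-size memory, oldest entries dropped first

initial_loss = nll(gamma, theta, x_data, y_data)
for _ in range(300):
    idx = rng.choice(len(x_data), size=32, replace=False)  # random mini-batch
    h = train_step(gamma, theta, x_data[idx], y_data[idx])
    memory.extend(zip(h, y_data[idx]))  # store (key, value) pairs; no local adaptation
final_loss = nll(gamma, theta, x_data, y_data)
```

Note that the memory is only written to here: it plays no role in the gradient computation, which matches the statement that no local adaptation takes place during training.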

Testing Phase

Examples

Continual Learning

Incremental Learning

Conclusion

The MbPA model can overcome several shortcomings of neural networks through its non-parametric, episodic memory. Many other works, in classification and language modelling among other areas, have successfully used variants of this architecture, in which a traditional neural network is augmented with a memory. Likewise, the incremental and continual learning experiments presented in the paper use a memory architecture similar to the Differentiable Neural Dictionary (DND) used in Neural Episodic Control (NEC) [6], though the gradients from the memory in the MbPA model are not used during training. In conclusion, MbPA presents a natural way to improve the performance of standard deep networks.

References

- [1] Pablo Sprechmann, Siddhant Jayakumar, Jack Rae, Alexander Pritzel, Adria Badia, Benigno Uria, Oriol Vinyals, Demis Hassabis, Razvan Pascanu, and Charles Blundell. Memory-based parameter adaptation. ICLR, 2018.

- [2] Dhushan Kumaran, Demis Hassabis, and James McClelland. What learning systems do intelligent agents need? Trends in Cognitive Sciences, 2016.

- [3] Ian Goodfellow, David Warde-Farley, Mehdi Mirza, Aaron Courville, and Yoshua Bengio. Maxout networks. arXiv preprint, 2013.

- [4] Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, and Michael Bernstein. Imagenet large scale visual recognition challenge. International Journal of Computer Vision, 2015.

- [5] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. IEEE conference on computer vision and pattern recognition, 2016.

- [6] Alexander Pritzel, Benigno Uria, Sriram Srinivasan, Adria Puigdomenech, Oriol Vinyals, Demis Hassabis, Daan Wierstra, and Charles Blundell. Neural episodic control. ICML, 2017.