Generating Image Descriptions

Revision as of 23:44, 26 March 2018

This Page is Under Construction.

Introduction and Motivation

People often say that a picture is worth a thousand words, but with currently available tools, NLP and image recognition algorithms cannot extract nearly that much meaningful text from an image. By contrast, a human glancing at an image can easily identify numerous details and relationships within a complex visual scene. Despite many advances in image classification, object detection, and natural language processing, this task has proven challenging for existing visual recognition models. Most comparable models focus on labeling objects [CITATIONS] or on generating image descriptions from limited vocabularies and fixed sentence templates [CITATIONS]. The authors of this paper believed that the assumptions of these models were too restrictive, preventing them from generating the kind of rich descriptions a human can produce.

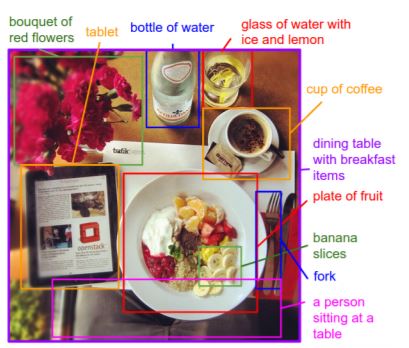

In this paper, the restrictions of hard-coded templates and fixed sentence structures are removed. Free of these assumptions, Karpathy and Li create a model that generates richer descriptions drawn from a wider vocabulary. Because directly relevant training data for this task is scarce, the authors leveraged the large quantity of images with detailed captions available online. Although these images contain no explicit region labels or relationships between regions, individual captions can be treated as weak labels in which contiguous segments of words correspond to some particular, but unknown, location in the image. By inferring these alignments between caption fragments and image regions, the authors train a generative model that produces detailed descriptions, as seen in Figure 1.

In particular, this paper introduces a neural network that infers the latent alignment between segments of sentences and the image regions they describe. This is achieved by embedding image regions and words in a common multimodal space and optimizing a structured objective that assigns higher scores to matching image-sentence pairs than to mismatched ones. The approach is validated on image-sentence retrieval tasks.

Furthermore, a multimodal Recurrent Neural Network (MRNN) takes an input image, or an image region, and generates a text description of it. Experiments show that the generated descriptions outperform rigid, retrieval-based baselines.

Relevant Concepts

RNN (Recurrent Neural Network): The defining feature of a recurrent neural network is that at each step, the result of the previous step is fed back as an additional input. Along with the original input, a recurrent neural network therefore has two sources of input at each step.

As a simple example, suppose you cook apple pie, burger, and chicken in that order. If it is sunny, the next day you cook the same dish; otherwise, you cook the next dish in the sequence. This behaves like a simple recurrent neural network: the dish you cooked today is also an input to the next day's decision, alongside the weather.
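The cooking example can be written as a tiny recurrent loop. This is a minimal sketch for illustration only; the function name `plan_meals` is hypothetical. The "state" carried from day to day is yesterday's dish, and the "input" each day is the weather.

```python
from typing import List

# The three dishes, cooked in this fixed cyclic order.
DISHES = ["apple pie", "burger", "chicken"]


def plan_meals(first_dish: str, weather: List[str]) -> List[str]:
    """Return the dish cooked on each day.

    Sunny: repeat yesterday's dish. Any other weather: move to the next dish.
    """
    state = DISHES.index(first_dish)  # yesterday's decision, carried forward
    meals = [first_dish]
    for today in weather:
        if today != "sunny":
            state = (state + 1) % len(DISHES)  # advance, wrapping around
        meals.append(DISHES[state])
    return meals


print(plan_meals("apple pie", ["sunny", "rainy", "rainy", "sunny", "rainy"]))
# → ['apple pie', 'apple pie', 'burger', 'chicken', 'chicken', 'apple pie']
```

The same weather ("rainy") produces different dishes on different days, because each decision also depends on the previous one.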

Mathematically speaking, the decision a recurrent network reaches at time step t-1 affects the decision it reaches one step later, at time step t.
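A common way to write this dependence is h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), where h_t is the hidden state at step t. The sketch below implements that recurrence in plain Python; the tanh activation and the names `rnn_step` and `rnn_forward` are assumptions chosen for illustration, not taken from the paper.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x_t: Vector, h_prev: Vector, w_xh: Matrix, w_hh: Matrix, b_h: Vector) -> Vector:
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    from_input = matvec(w_xh, x_t)
    from_past = matvec(w_hh, h_prev)  # the previous step's state feeds back in here
    return [math.tanh(a + b + c) for a, b, c in zip(from_input, from_past, b_h)]


def rnn_forward(xs: List[Vector], h0: Vector, w_xh: Matrix, w_hh: Matrix, b_h: Vector) -> List[Vector]:
    """Run the recurrence over a whole sequence and return every hidden state."""
    states = []
    h = h0
    for x_t in xs:
        h = rnn_step(x_t, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# A 1-unit network: the second input is zero, yet h_2 is non-zero because it inherits h_1.
hs = rnn_forward([[1.0], [0.0]], [0.0], w_xh=[[1.0]], w_hh=[[0.5]], b_h=[0.0])
print(hs)
```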

BRNN (Bidirectional Recurrent Neural Network): A regular recurrent neural network can only incorporate past information into its current state; information from later in the sequence cannot be reached from the current state. A BRNN removes this restriction by running two recurrent passes over the sequence, one forward and one backward, so the representation at each position has two sources of input from opposite directions.
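A minimal scalar sketch of a bidirectional pass, for illustration only. The structure (ReLU activations, summing the forward and backward states before a final layer) loosely follows the sentence encoder described in reference 1, but scalar weights and the name `brnn` are simplifications assumed here.

```python
from typing import List


def relu(z: float) -> float:
    return max(0.0, z)


def brnn(xs: List[float], w_f: float, w_b: float, w_d: float,
         b_f: float = 0.0, b_b: float = 0.0, b_d: float = 0.0) -> List[float]:
    """Scalar bidirectional RNN.

    Forward pass:  hf[t] = relu(x[t] + w_f * hf[t-1] + b_f)  (sees x[0..t])
    Backward pass: hb[t] = relu(x[t] + w_b * hb[t+1] + b_b)  (sees x[t..end])
    Output:        s[t]  = relu(w_d * (hf[t] + hb[t]) + b_d)
    """
    n = len(xs)
    hf = [0.0] * n
    hb = [0.0] * n
    prev = 0.0
    for t in range(n):  # left to right
        prev = relu(xs[t] + w_f * prev + b_f)
        hf[t] = prev
    nxt = 0.0
    for t in reversed(range(n)):  # right to left
        nxt = relu(xs[t] + w_b * nxt + b_b)
        hb[t] = nxt
    return [relu(w_d * (fwd + bwd) + b_d) for fwd, bwd in zip(hf, hb)]


print(brnn([1.0, 0.0, 0.0], w_f=1.0, w_b=1.0, w_d=1.0))  # → [2.0, 1.0, 1.0]
```

A signal at the start of the sequence reaches later positions through the forward pass; a signal at the end reaches earlier positions through the backward pass, which a one-directional RNN could not do.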

Additive interaction:

Literature Review

Before analyzing Karpathy and Li's visual recognition model in more depth, we first discuss the shortcomings of similar models.

The Model: Alignment

Overview

An image usually contains many things that a description could mention. One of the first problems to address is therefore how to align the visual data with the language data: sentences often refer to a particular location in the image, and the algorithm must identify which location each phrase refers to. The following section assumes an input dataset of images and sentence descriptions and describes how the alignment model matches the two.

There are two main parts to this task:

i) A neural network that maps sentences and images into a "common embedding"

ii) An objective which learns this "common embedding" so that semantically similar concepts will occupy similar regions within the space
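One way to make (i) and (ii) concrete, modeled on the objective described in reference 1: once regions and words live in the same space, score an image-sentence pair by letting each word vector pick its best-matching region vector (by dot product) and summing over words; then train with a max-margin ranking loss so that each true pair outscores mismatched pairs by a margin. The function names are hypothetical and the vectors are toy values, not learned embeddings.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def pair_score(regions: List[Vector], words: List[Vector]) -> float:
    """Image-sentence score: each word takes its best-matching region, then sum."""
    return sum(max(dot(v, s) for v in regions) for s in words)


def ranking_loss(images: List[List[Vector]], sentences: List[List[Vector]],
                 margin: float = 1.0) -> float:
    """Max-margin ranking loss; images[k] and sentences[k] form a true pair."""
    n = len(images)
    score = [[pair_score(images[k], sentences[l]) for l in range(n)] for k in range(n)]
    loss = 0.0
    for k in range(n):
        for l in range(n):
            if l == k:
                continue
            # For image k, the wrong sentence l should score at least `margin` lower ...
            loss += max(0.0, score[k][l] - score[k][k] + margin)
            # ... and for sentence k, the wrong image l should score at least `margin` lower.
            loss += max(0.0, score[l][k] - score[k][k] + margin)
    return loss


images = [[[1.0, 0.0]], [[0.0, 1.0]]]  # one region vector per image
print(ranking_loss(images, [[[1.0, 0.0]], [[0.0, 1.0]]]))  # well aligned: 0.0
print(ranking_loss(images, [[[0.0, 1.0]], [[1.0, 0.0]]]))  # swapped: 8.0
```

Minimizing this loss pushes matching regions and words toward each other in the shared space, which is what "semantically similar concepts occupy similar regions" means operationally.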

Part I: Representing Images and Sentences

Images

Given an image, a Region Convolutional Neural Network (RCNN; Girshick et al.) is used to detect objects that appear in the image. From all the detected objects, the top 19 detections are selected. These 19 regions, together with the full image, are the regions to be labeled by the sentences; each one is passed through a pre-trained CNN and projected into a common h-dimensional embedding space.
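A minimal sketch of the region-selection step and the projection into the shared space. The detector and the CNN are out of scope here, so detections are assumed to arrive as (box, score) pairs and CNN features as plain lists; `select_regions` and `project` are hypothetical names. In reference 1, the CNN features are 4096-dimensional and a learned matrix W_m maps them into the h-dimensional embedding.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def select_regions(detections: List[Tuple[Box, float]], width: int, height: int,
                   k: int = 19) -> List[Box]:
    """Keep the k highest-scoring detector boxes and prepend the full image."""
    full_image: Box = (0, 0, width, height)
    best = sorted(detections, key=lambda d: d[1], reverse=True)[:k]
    return [full_image] + [box for box, _score in best]


def project(cnn_features: List[float], w_m: List[List[float]], b_m: List[float]) -> List[float]:
    """Map a CNN feature vector into the h-dimensional embedding: v = W_m f + b_m."""
    return [sum(w * f for w, f in zip(row, cnn_features)) + b for row, b in zip(w_m, b_m)]


# 25 fake detections with increasing confidence; 19 survive, plus the full image.
dets = [((i, i, i + 10, i + 10), i / 25) for i in range(25)]
print(len(select_regions(dets, 640, 480)))  # → 20
```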

Sentences

The sentences must be embedded into the same h-dimensional space as the image regions, so that words and regions can be compared directly.
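For reference, a summary of the sentence encoder described in reference 1: a BRNN that maps word t of a sentence to an h-dimensional vector s_t in the shared space. Here \mathbb{I}_t is a one-hot indicator vector for word t, W_w is a word-embedding matrix, f is the ReLU activation, and the superscripts f and b denote the forward and backward passes. This is a paraphrase; consult the paper for exact dimensions and initialization details.

```latex
\begin{aligned}
x_t   &= W_w \mathbb{I}_t \\
e_t   &= f(W_e x_t + b_e) \\
h^f_t &= f(e_t + W_f h^f_{t-1} + b_f) \\
h^b_t &= f(e_t + W_b h^b_{t+1} + b_b) \\
s_t   &= f\big(W_d (h^f_t + h^b_t) + b_d\big)
\end{aligned}
```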

Part II: Alignment Objective

Experimental Results

Conclusions

Throughout the paper, the authors introduce the following concepts:

1. A model that generates natural language descriptions of image regions from weak labels.

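Q: What labels, exactly?

A: A dataset of images paired with sentence descriptions. The sentences are weak labels because they do not state which image region each phrase refers to.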

2. A model with few hard-coded assumptions, which ranks image-sentence pairs through a common, multimodal embedding.

The underlying steps are as follows:

Align visual and language data:

---Detect objects in the image using an RCNN built on a pre-trained CNN.

---Embed the sentences describing the image into the same h-dimensional embedding space, using a BRNN.

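---Multimodal RNN (MRNN) for generating descriptions:

Main challenge: descriptions vary in length, so the output size is not fixed. Solution: an RNN generates the sentence one word at a time, predicting each next word from the current word and its hidden state, until it produces an end-of-sentence token.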
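The variable-length problem can be illustrated with a greedy decoding loop: the model repeatedly predicts the next word from its hidden state and the word it just produced, feeds that word back in, and stops when it emits a special END token (reference 1 uses START and END tokens for this purpose). In this sketch, `toy_step` is a hypothetical stand-in for a trained MRNN, and greedy argmax is one simple decoding choice among several.

```python
from typing import Any, Callable, Dict, List, Tuple

# A step function maps (hidden state, current word) to (new state, next-word probabilities).
StepFn = Callable[[Any, str], Tuple[Any, Dict[str, float]]]


def generate(step: StepFn, h0: Any, start: str = "START", end: str = "END",
             max_len: int = 20) -> List[str]:
    """Greedy decoding: feed back the most probable word until END or max_len."""
    words: List[str] = []
    h, word = h0, start
    for _ in range(max_len):
        h, probs = step(h, word)
        word = max(probs, key=probs.get)  # most probable next word
        if word == end:
            break
        words.append(word)
    return words


# Hypothetical toy "model": a fixed next-word table instead of learned weights.
TOY_NEXT = {"START": "a", "a": "dog", "dog": "runs", "runs": "END"}


def toy_step(h: int, word: str) -> Tuple[int, Dict[str, float]]:
    probs = {"END": 0.1}
    probs[TOY_NEXT[word]] = 0.9
    return h + 1, probs


print(generate(toy_step, 0))  # → ['a', 'dog', 'runs']
```

The output length is decided by the model at run time (when END wins), not fixed in advance; `max_len` is only a safety cap.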

Experiments:

---Image-sentence alignment ranking: ranked best among the compared methods, with a median rank within the top 5.

---Full-frame evaluation (whole images): slightly outperforms many existing models.

---Region evaluation: although still significantly below human performance, the model surpasses most existing models.
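Median rank, mentioned in the ranking results above, summarizes where the correct match lands when candidates are sorted by score (1 is best; lower is better). A minimal sketch, assuming a square similarity matrix in which each query's single correct candidate sits on the diagonal; real benchmarks often pair each image with several reference sentences, so this is a simplification. Ties are resolved in favour of the correct candidate, which is also an assumption. `median_rank` is a hypothetical name.

```python
import statistics
from typing import List


def median_rank(scores: List[List[float]]) -> float:
    """scores[q][c]: similarity of query q to candidate c; candidate q is the correct one."""
    ranks = []
    for q, row in enumerate(scores):
        correct = row[q]
        # Rank = 1 + number of candidates scoring strictly higher than the correct one.
        ranks.append(1 + sum(1 for s in row if s > correct))
    return statistics.median(ranks)


print(median_rank([[0.9, 0.1, 0.2],
                   [0.5, 0.4, 0.3],
                   [0.1, 0.2, 0.3]]))  # ranks are [1, 2, 1] → 1
```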

Steps to take afterwards

Limited input format: the model takes a single input array of pixels, so images must have a fixed resolution. This is a significant issue, since real-world images vary widely in size and resolution.

The RNN receives the image information only through additive bias interactions, which are known to be less expressive than more complicated multiplicative interactions. That being said, the interaction the model uses is case sensitive, which is hard to achieve in practice.
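To make "additive bias interaction" concrete: in reference 1, image information enters the generating RNN only as a bias vector added to the hidden-state pre-activation at the first time step. The scalar sketch below follows that pattern; ReLU, scalar weights, and the name `mrnn_hidden_states` are simplifications assumed for illustration.

```python
from typing import List


def relu(z: float) -> float:
    return max(0.0, z)


def mrnn_hidden_states(xs: List[float], b_v: float, w_hx: float, w_hh: float,
                       b_h: float = 0.0) -> List[float]:
    """Scalar RNN that sees the image only as an additive bias at t = 1.

    h_t = relu(w_hx * x_t + w_hh * h_{t-1} + b_h + [t == 1] * b_v)
    """
    h = 0.0
    states = []
    for t, x_t in enumerate(xs, start=1):
        image_term = b_v if t == 1 else 0.0  # the image is added once, at the first step
        h = relu(w_hx * x_t + w_hh * h + b_h + image_term)
        states.append(h)
    return states


# Without recurrence (w_hh = 0), two different images change only the first state.
print(mrnn_hidden_states([1.0, 1.0, 1.0], b_v=0.5, w_hx=1.0, w_hh=0.0))  # → [1.5, 1.0, 1.0]
print(mrnn_hidden_states([1.0, 1.0, 1.0], b_v=2.0, w_hx=1.0, w_hh=0.0))  # → [3.0, 1.0, 1.0]
```

Later steps see the image only indirectly, through the recurrent term w_hh * h_{t-1}, which is one way to see why this form of conditioning is considered limited.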

This approach splits the task into two parts handled by separate models: one that aligns sentences with image regions, and one that generates descriptions. Is it possible to skip this division and solve the whole task with a single model trained end-to-end?

References

1. Karpathy, A., & Li, F. (2015, April 14). Deep Visual-Semantic Alignments for Generating Image Descriptions. Retrieved March 19, 2018, from https://arxiv.org/pdf/1412.2306v2.pdf

2. A Beginner’s Guide to Recurrent Networks and LSTMs. (n.d.). Retrieved from https://deeplearning4j.org/lstm.html#recurrent

3. Recurrent neural network. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Recurrent_neural_network

4. Bidirectional recurrent neural networks. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Bidirectional_recurrent_neural_networks

5. Embedding. (n.d.). Retrieved from https://en.wikipedia.org/wiki/Embedding