Do Deep Neural Networks Suffer from Crowding?

==Eccentricity-dependent Model== | ==Eccentricity-dependent Model== | ||

In order to take care of the scale invariance in the input image, the eccentricity dependent DNN is utilized. The main intuition behind this architecture is that as we increase eccentricity, the receptive fields also increase and hence the model will become invariant to changing input scales. In this model the input image is cropped into varying scales(11 crops increasing by a factor of ........... which are then resized to 60x60 pixels) and then fed to the network. The model computes an invariant representation of the input by sampling the inverted pyramid at a discrete set of scales with the same number of filters at each scale. Since the same number of filters are used for each scale, the smaller crops will be sampled at a high resolution while the larger crops will be sampled with a low resolution. These scales are fed into the network as an input channel to the convolutional layers and share the weights across scale and space. | |||

[[File:EDM.png|2000x450px|center]] | [[File:EDM.png|2000x450px|center]] | ||

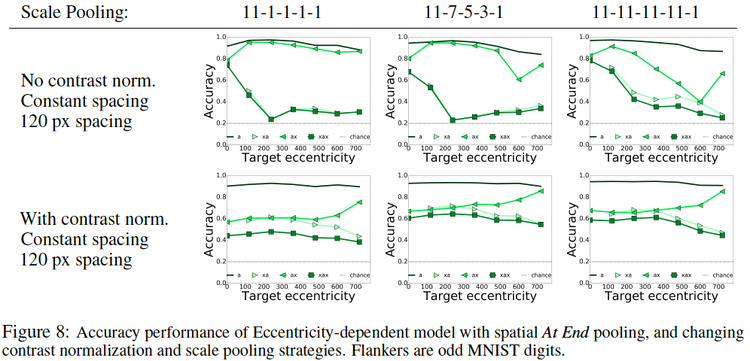

The architecture of this model is the same as the previous DCNN model with the only change being the extra filters added for each of the scales. The authors perform spatial pooling, the aforementioned ''At end pooling'' is used here, and scale pooling which helps in reducing number of scales by taking the maximum value of corresponding locations in the feature maps across multiple scales. It has three configurations: (1) at the beginning, in which all the different scales are pooled together after the first layer, 11-1-1-1-1 (2) progressively, 11-7-5-3-1 and (3) at the end, 11-11-11-11-1, in which all 11 scales are pooled together at the last layer. | |||

===Contrast Normalization=== | ===Contrast Normalization=== | ||

| Line 34: | Line 34: | ||

=Experiments and its Set-Up = | =Experiments and its Set-Up = | ||

Targets are the set of objects to be recognized and flankers act as clutter with respect to these target objects. The target objects are the even MNIST numbers having translational variance (shifted at different locations of the image along the horizontal axis). Examples of the target and flanker configurations is shown below: | |||

[[File:eximages.png|800px|center]] | [[File:eximages.png|800px|center]] | ||

| Line 40: | Line 40: | ||

==DNNs trained with Target and Flankers== | ==DNNs trained with Target and Flankers== | ||

This is a constant spacing training setup where identical flankers are placed at a distance of 120 pixels either side of the target(xax) with the | This is a constant spacing training setup where identical flankers are placed at a distance of 120 pixels either side of the target(xax) with the target having translational variance. THe tests are evaluated on (i) DCNN with at the end pooling, and (ii) eccentricity-dependent model with 11-11-11-11-1 scale pooling, at the end spatial pooling and contrast normalization. The test data has different flanker configurations as described above. | ||

[[File:result1.png|x450px|center]] | [[File:result1.png|x450px|center]] | ||

| Line 50: | Line 50: | ||

==DNNs trained with Images with the Target in Isolation== | ==DNNs trained with Images with the Target in Isolation== | ||

Here the target objects are in isolation and with translational variance while the test-set is the same set of flanker configurations as used before. | |||

[[File:result2.png|750x400px|center]] | [[File:result2.png|750x400px|center]] | ||

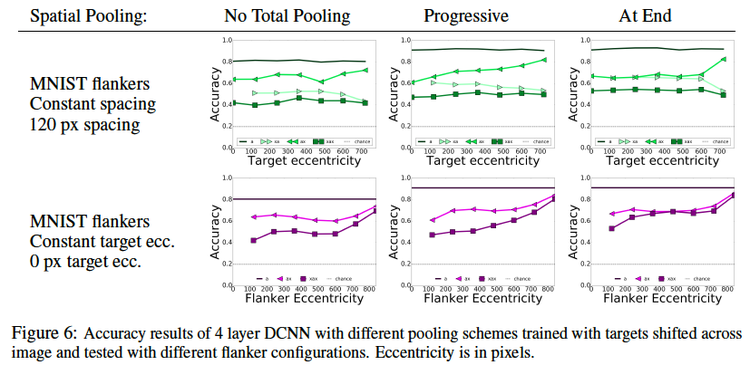

===DCNN Observations=== | |||

- The recognition gets worse with the increase in the number of flankers. | |||

- Convolutional networks are capable of being invariant to translations. | |||

- In the constant target eccentricity setup, where target is fixed at the center of the image with varying target-flanker spacing, we observe that as the distance between target and flankers increase, recognition gets better. | |||

- Spatial pooling helps in learning invariance. | |||

-Flankers similar to the target object helps in recognition since they dont activate the convolutional filter more. | |||

- notMNIST data affects leads to more crowding since they have many more edges and white image pixels which activate the convolutional layers more. | |||

===Eccentric Model=== | ===Eccentric Model=== | ||

[[File:result3.png|750x400px|center]] | [[File:result3.png|750x400px|center]] | ||

| Line 64: | Line 71: | ||

*'''Effect of pooling''': adding pooling leads to better recognition accuracy of the models. Yet, in the eccentricity model, pooling across the scales too early in the hierarchy leads to lower accuracy. | *'''Effect of pooling''': adding pooling leads to better recognition accuracy of the models. Yet, in the eccentricity model, pooling across the scales too early in the hierarchy leads to lower accuracy. | ||

=Critique= | |||

This paper just tries to check the impact of flankers on targets as to how crowding can affect recognition but it does not propose anything novel in terms of architecture to take care of such a type of crowding. The eccentricity based model does well only when the target is placed at the center of the image but maybe windowing over the frames instead of taking crops starting from the middle might help. | |||

=References= | |||

1) Volokitin A, Roig G, Poggio T:"Do Deep Neural Networks Suffer from Crowding?" Conference on Neural Information Processing Systems (NIPS). 2017 | |||

2) Francis X. Chen, Gemma Roig, Leyla Isik, Xavier Boix and Tomaso Poggio: "Eccentricity Dependent Deep Neural Networks for Modeling Human Vision" Journal of Vision. 17. 808. 10.1167/17.10.808. | |||

Revision as of 01:53, 21 March 2018

Introduction

Since the rise of deep networks, a tremendous amount of research and effort has gone into making machines capable of recognizing objects the way humans do. Humans can recognize objects in a way that is invariant to scale, translation, and clutter. Crowding is a visual effect suffered by humans in which an object that can be recognized in isolation can no longer be recognized when other objects, called flankers, are placed close to it; it is a very common real-life experience. This paper studies the impact of crowding on Deep Neural Networks (DNNs) by adding clutter to the images and then analyzing which models and settings suffer less from such effects.

The paper investigates crowding in two types of DNNs: traditional deep convolutional neural networks (DCNNs) and a multi-scale eccentricity-dependent model. The latter is an extension of DCNNs inspired by the retina: the receptive field size of its convolutional filters grows with the distance from the center of the image, called the eccentricity, as explained below. The authors focus on the dependence of crowding on factors such as flanker configuration, target-flanker similarity, target eccentricity, and, in particular, premature pooling.

Models

Deep Convolutional Neural Networks

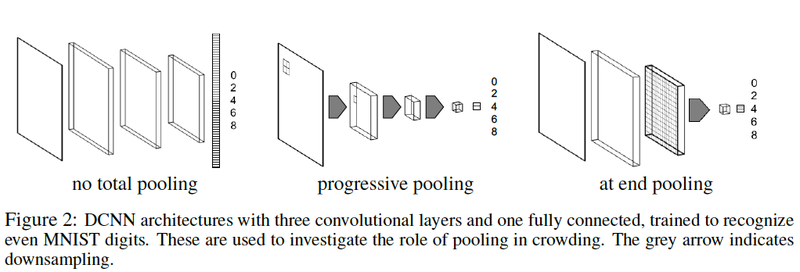

The DCNN is a basic architecture with 3 convolutional layers, spatial 3x3 max-pooling with varying strides, and a fully connected layer for classification, as shown in the figure above.

The network is fed with images resized to 60x60, with minibatches of 128 images, 32 feature channels for all convolutional layers, and convolutional filters of size 5x5 and stride 1.

As highlighted earlier, the effect of pooling is a main consideration, so three different pooling configurations are investigated:

1. No total pooling: feature map sizes decrease only due to boundary effects, as the 3x3 max pooling has stride 1. The square feature map sizes (input, then after each pooling layer) are 60-54-48-42.

2. Progressive pooling: 3x3 pooling with a stride of 2 halves the square size of the feature maps, until the final layer pools over whatever remains, discarding all spatial information before the fully connected layer (60-27-11-1).

3. At end pooling: same as no total pooling, but before the fully connected layer, max-pool over the entire feature map (60-54-48-1).
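The feature map sizes quoted above can be checked with the usual output-size formula for unpadded ("valid") convolution and pooling, out = floor((in - k) / s) + 1. This sketch assumes no padding, 5x5 convolutions with stride 1, and 3x3 max pooling with the stride of each configuration; the final "pool over everything" step is modeled as a global max pool to size 1. The function names are hypothetical.

```python
def out_size(n: int, kernel: int, stride: int) -> int:
    """Output size of an unpadded ('valid') convolution or pooling window."""
    return (n - kernel) // stride + 1


def feature_map_sizes(input_size: int = 60, pool_strides=(1, 1, 1), global_pool_last: bool = False):
    """Square feature map size after each conv(5x5, stride 1) + max-pool(3x3) layer.

    pool_strides: stride of the 3x3 max pooling in each of the three layers.
    global_pool_last: if True, the last layer pools over the whole map (size 1).
    """
    sizes = [input_size]
    n = input_size
    for i, stride in enumerate(pool_strides):
        n = out_size(n, kernel=5, stride=1)          # 5x5 convolution, stride 1
        if global_pool_last and i == len(pool_strides) - 1:
            n = 1                                     # max-pool over the entire feature map
        else:
            n = out_size(n, kernel=3, stride=stride)  # 3x3 max pooling
        sizes.append(n)
    return sizes


print(feature_map_sizes(pool_strides=(1, 1, 1)))                         # [60, 54, 48, 42]
print(feature_map_sizes(pool_strides=(2, 2, 2), global_pool_last=True))  # [60, 27, 11, 1]
print(feature_map_sizes(pool_strides=(1, 1, 1), global_pool_last=True))  # [60, 54, 48, 1]
```

Under these assumptions all three size sequences match the ones listed for the configurations.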

What is the problem with CNNs?

CNNs fall short in explaining human perceptual invariance. First, CNNs typically take input at a single uniform resolution, whereas biological measurements suggest that resolution is not uniform across the human visual field but decays with eccentricity, i.e. the distance from the center of focus. Even more importantly, CNNs rely on data augmentation to achieve transformation invariance, which requires a lot of additional training data and processing.

Eccentricity-dependent Model

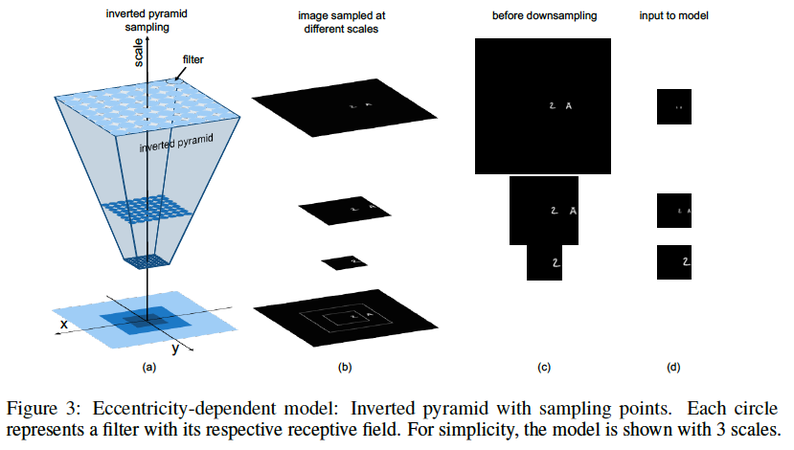

To handle scale variation in the input image, the eccentricity-dependent DNN is used. The main intuition behind this architecture is that receptive fields grow with eccentricity, so the model becomes invariant to changes in input scale. In this model, the input image is cropped at varying scales (11 crops increasing by a factor of ..........., which are then resized to 60x60 pixels) and then fed to the network. The model computes an invariant representation of the input by sampling the inverted pyramid at a discrete set of scales, with the same number of filters at each scale. Because every crop is resized to 60x60 and processed by the same number of filters, the smaller crops are sampled at high resolution while the larger crops are sampled at low resolution. The scales are fed into the network as input channels to the convolutional layers, which share weights across scale and space.
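A minimal NumPy sketch of the cropping step, assuming centered square crops whose side grows geometrically and nearest-neighbour resizing to 60x60. The growth factor is not given above, so it is a parameter here; `scale_factor=2 ** 0.5`, `smallest_crop=60`, and the function names are hypothetical placeholders, not the authors' values.

```python
import numpy as np


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Resize a 2-D array to size x size by nearest-neighbour sampling."""
    h, w = img.shape
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return img[np.ix_(rows, cols)]


def eccentricity_crops(image: np.ndarray, n_scales: int = 11, smallest_crop: int = 60,
                       scale_factor: float = 2 ** 0.5, out_size: int = 60) -> np.ndarray:
    """Stack of centered crops of growing size, each resized to out_size x out_size.

    Returns shape (n_scales, out_size, out_size); scale 0 is the smallest
    (highest-resolution) crop around the image center.
    """
    h, w = image.shape
    cy, cx = h // 2, w // 2
    crops = []
    for i in range(n_scales):
        side = int(round(smallest_crop * scale_factor ** i))
        side = min(side, h, w)                 # hypothetical choice: clamp to the image size
        top, left = cy - side // 2, cx - side // 2
        crop = image[top:top + side, left:left + side]
        crops.append(resize_nearest(crop, out_size))
    return np.stack(crops)
```

For example, `eccentricity_crops(np.random.rand(1024, 1024))` returns an array of shape `(11, 60, 60)` whose first slice is the untouched central 60x60 patch, while later slices cover more of the image at coarser resolution.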

The architecture of this model is the same as the DCNN above, except that each layer now processes every scale, with the filters shared across scales. The authors use two kinds of pooling: spatial pooling, for which the at end pooling described above is used, and scale pooling, which reduces the number of scales by taking the maximum value at corresponding locations of the feature maps across multiple scales. Scale pooling has three configurations: (1) at the beginning, 11-1-1-1-1, in which all scales are pooled together after the first layer; (2) progressively, 11-7-5-3-1; and (3) at the end, 11-11-11-11-1, in which all 11 scales are pooled together at the last layer.
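Scale pooling can be sketched as an element-wise maximum over neighbouring scales. How the authors group scales to go from 11 to 7 to 5 and so on is not stated here, so this sketch assumes a sliding window over adjacent scales with stride 1 and window size n_in - n_out + 1. `scale_pool` and `SCHEDULES` are hypothetical names, and the convolutions between pooling steps are omitted.

```python
import numpy as np


def scale_pool(features: np.ndarray, n_out: int) -> np.ndarray:
    """Max-pool a (scales, channels, height, width) array down to n_out scales.

    Assumption: output scale i is the element-wise maximum over input scales
    i .. i + window - 1, with window = n_in - n_out + 1 (stride 1). With
    n_out == 1 this is the maximum over all scales.
    """
    n_in = features.shape[0]
    window = n_in - n_out + 1
    return np.stack([features[i:i + window].max(axis=0) for i in range(n_out)])


# Number of scales at each stage, as listed for the three configurations.
SCHEDULES = {
    "beginning": [11, 1, 1, 1, 1],
    "progressive": [11, 7, 5, 3, 1],
    "end": [11, 11, 11, 11, 1],
}

rng = np.random.default_rng(0)
x = rng.random((11, 32, 8, 8))        # toy features: 11 scales, 32 channels, 8x8 maps
for name, schedule in SCHEDULES.items():
    h = x
    for n_out in schedule[1:]:
        h = scale_pool(h, n_out)      # a real layer would also apply convolutions here
    print(name, h.shape)              # every configuration ends with a single scale
```

With the convolutions left out, all three schedules collapse to the same global maximum. The configurations differ in the real model only because the layers in between see either many scales or already-merged ones.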

Contrast Normalization

Since the input image is represented at multiple scales, the intensities are normalized so that the sum of the pixel intensities in each scale lies in the same range [0,1]; each scale is then divided by a factor proportional to its crop area.
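A literal NumPy reading of this normalization, offered as a sketch: each scale is first divided by its total intensity so that it sums to 1, then divided by a factor proportional to its original crop area. The crop sides, the proportionality constant `k`, and the function name are assumptions for illustration; the authors' exact constants are not given here.

```python
import numpy as np


def contrast_normalize(crops: np.ndarray, crop_sides, k: float = 1.0, eps: float = 1e-8) -> np.ndarray:
    """Normalize a (scales, H, W) stack of resized crops.

    1. Divide each scale by its pixel sum, so every scale sums to 1.
    2. Divide each scale by k * (original crop area), with area assumed to be side ** 2.
    eps guards against division by zero for an all-black scale.
    """
    crops = crops.astype(float)
    sums = crops.reshape(len(crops), -1).sum(axis=1)
    normalized = crops / (sums[:, None, None] + eps)
    areas = np.asarray(crop_sides, dtype=float) ** 2
    return normalized / (k * areas[:, None, None])
```

After this step, the intensities of scale i sum to 1 / (k * side_i ** 2), so the total contribution of a scale shrinks as its crop area grows.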

Experiments and Set-Up

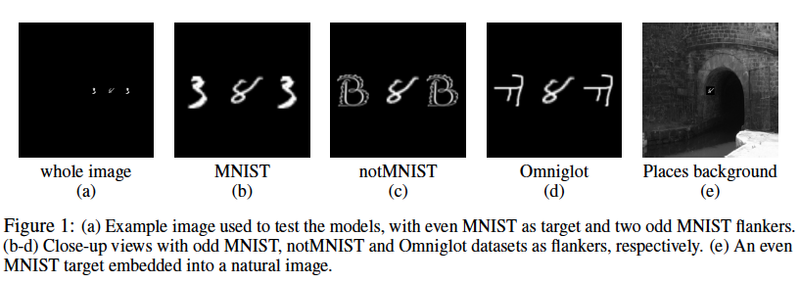

Targets are the objects to be recognized, and flankers act as clutter with respect to these targets. The targets are even MNIST digits with translational variance (shifted to different locations along the horizontal axis of the image). Examples of the target and flanker configurations are shown in the figure above.

The target and the flanker are referred to as a and x, respectively, and four configurations are used:

1. No flankers, only the target object (a in the plots).

2. One central flanker, closer to the center of the image than the target (xa).

3. One peripheral flanker, closer to the boundary of the image than the target (ax).

4. Two flankers spaced equally around the target, both the same object (xax).
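The four configurations can be illustrated with a small NumPy function that pastes a target patch (a) and flanker patches (x) onto a blank canvas along the horizontal axis. The canvas size, patch size, and the name `make_stimulus` are hypothetical; `target_x` is the target's horizontal offset from the canvas center and `spacing` is the center-to-center target-flanker distance.

```python
import numpy as np


def make_stimulus(target: np.ndarray, flanker: np.ndarray, config: str,
                  target_x: int, spacing: int, canvas_h: int = 60, canvas_w: int = 300) -> np.ndarray:
    """Paste target (a) and flankers (x) on a blank canvas, vertically centered.

    config is one of "a", "xa", "ax", "xax". Offsets are horizontal distances of
    patch centers from the canvas center. "Central" means toward the canvas
    center from the target and "peripheral" means away from it; a target at
    offset 0 treats the right side as peripheral.
    """
    canvas = np.zeros((canvas_h, canvas_w), dtype=float)
    outward = 1 if target_x >= 0 else -1
    directions = {"a": [], "xa": [-outward], "ax": [outward], "xax": [-1, 1]}[config]

    def paste(patch: np.ndarray, x_offset: int) -> None:
        ph, pw = patch.shape
        top = (canvas_h - ph) // 2
        left = canvas_w // 2 + x_offset - pw // 2
        if top < 0 or left < 0 or left + pw > canvas_w:
            raise ValueError("patch does not fit on the canvas")
        region = canvas[top:top + ph, left:left + pw]   # a view into canvas
        np.maximum(region, patch, out=region)           # overlaps keep the brighter pixel

    paste(target, target_x)
    for direction in directions:
        paste(flanker, target_x + direction * spacing)
    return canvas
```

A training image for the constant-spacing set-up would then be something like `make_stimulus(t, f, "xax", target_x=..., spacing=120, canvas_w=...)`, with a canvas wide enough to hold both flankers.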

DNNs trained with Target and Flankers

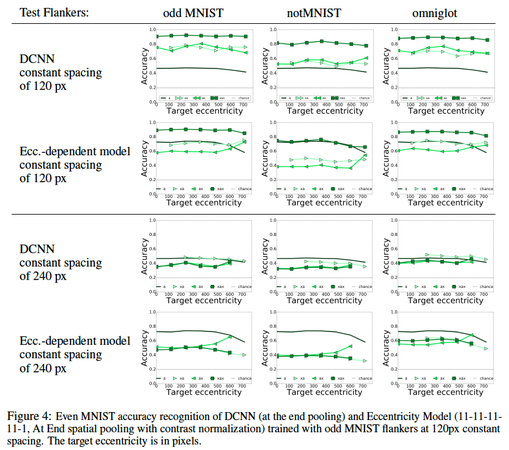

This is a constant-spacing training set-up: identical flankers are placed 120 pixels on either side of the target (xax), and the target has translational variance. Two models are tested: (i) the DCNN with at end pooling, and (ii) the eccentricity-dependent model with 11-11-11-11-1 scale pooling, at end spatial pooling, and contrast normalization. The test data contains the different flanker configurations described above.

Observations

- With the same flanker configuration as in training, the models recognize objects in clutter better than isolated objects, at all image locations.

- If the target-flanker spacing is changed, the models perform worse.

- The eccentricity model is much better than the DCNN at recognizing objects in isolation, because the multi-scale crops divide the image into discrete regions, letting the model learn from image parts as well as the whole image.

- Only the eccentricity-dependent model is robust to flanker configurations not included in training, and only when the target is centered.

DNNs trained with Images with the Target in Isolation

Here the models are trained on target objects in isolation, with translational variance, while the test set contains the same flanker configurations as before.

DCNN Observations

- Recognition gets worse as the number of flankers increases.

- Convolutional networks are capable of being invariant to translations.

- In the constant target eccentricity set-up, where the target is fixed at the center of the image and the target-flanker spacing varies, recognition improves as the distance between target and flankers increases.

- Spatial pooling helps in learning invariance.

- Flankers similar to the target object help recognition, since they do not activate the convolutional filters more.

- notMNIST flankers lead to more crowding, since they have many more edges and white image pixels, which activate the convolutional layers more.

Eccentric Model

Conclusions

One might expect that simply training a network on data similar to the test data would achieve good results in general, but that is not the case: training the models with flankers did not give ideal results for recognizing the target objects.

- Flanker configuration: When models are trained with images of objects in isolation, adding flankers harms recognition. Adding two flankers is the same as or worse than adding just one, and the smaller the spacing between flanker and target, the more crowding occurs. This is because the pooling operation merges nearby responses, such as those of the target and flankers if they are close.

- Similarity between target and flanker: Flankers more similar to targets cause more crowding, because of the selectivity property of the learned DNN filters.

- Dependence on target location and contrast normalization: In DCNNs and eccentricity-dependent models with contrast normalization, recognition accuracy is the same across all eccentricities. In eccentricity-dependent networks without contrast normalization, recognition does not decrease despite the presence of clutter when the target is at the center of the image.

- Effect of pooling: adding pooling leads to better recognition accuracy of the models. Yet, in the eccentricity model, pooling across the scales too early in the hierarchy leads to lower accuracy.
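The pooling explanation in the flanker-configuration conclusion can be illustrated with a toy one-dimensional example. This illustrates the mechanism only and is not an experiment from the paper; the response values, positions, and window size are made up. A filter response map with a weaker target peak and a stronger flanker peak is max-pooled with non-overlapping windows of size 5: when both peaks fall in the same window, the target's response disappears from the pooled output.

```python
import numpy as np


def max_pool_1d(x: np.ndarray, window: int) -> np.ndarray:
    """Non-overlapping 1-D max pooling (length must be divisible by window)."""
    return x.reshape(-1, window).max(axis=1)


def responses(target_pos: int, flanker_pos: int, length: int = 20) -> np.ndarray:
    """Toy filter responses: 1.0 at the target, 2.0 at a stronger flanker."""
    r = np.zeros(length)
    r[target_pos] = 1.0
    r[flanker_pos] = 2.0
    return r


near = max_pool_1d(responses(target_pos=10, flanker_pos=12), window=5)
far = max_pool_1d(responses(target_pos=10, flanker_pos=17), window=5)
print(near)   # [0. 0. 2. 0.] -> target response merged into the flanker's
print(far)    # [0. 0. 1. 2.] -> target response still present
```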

Critique

This paper only examines how flankers affect the recognition of targets, i.e. how crowding can affect recognition; it does not propose a novel architecture to counter this type of crowding. The eccentricity-based model does well only when the target is placed at the center of the image; sliding a window over the image, instead of taking crops centered on the middle, might help.

References

1) Volokitin A, Roig G, Poggio T. "Do Deep Neural Networks Suffer from Crowding?" Conference on Neural Information Processing Systems (NIPS), 2017.

2) Chen FX, Roig G, Isik L, Boix X, Poggio T. "Eccentricity Dependent Deep Neural Networks for Modeling Human Vision." Journal of Vision, 17(10):808. doi:10.1167/17.10.808.