the Manifold Tangent Classifier: Difference between revisions

(Created page with "== Introduction == The goal in many machine learning problems is to extract information from data with minimal prior knowledge<ref name = "main"> Rifai, S., Dauphin, Y. N., Vinc...") |

|||

| Line 220: | Line 220: | ||

* It would be interesting to investigate applying the method of tangent extraction to other unsupervised methods, and then create a classifier based on these tangents in the same way that it is done in this paper. Further work could be done to apply this approach to clustering algorithms, kernel PCA, E-M, etc. This is more of a suggestion than a concrete idea, however. | * It would be interesting to investigate applying the method of tangent extraction to other unsupervised methods, and then create a classifier based on these tangents in the same way that it is done in this paper. Further work could be done to apply this approach to clustering algorithms, kernel PCA, E-M, etc. This is more of a suggestion than a concrete idea, however. | ||

* It is not exactly clear to me how a <math>h</math> could ever define a true diffeomorphism, since <math>h: \mathbb{R}^{d} \mapsto \mathbb{R}^{d_h}</math>, where <math>d \ne d_h</math>, in general. Normally, if <math>d > d_h</math>, we would not expect such a map <math>h</math> to possibly be injective, since the cardinality of the domain is higher than that of the codomain. However, they may be able to "manufacture" the injectivity of <math>h</math> using the fact that <math>\mathcal{D}</math> is a discrete set of points. I'm not sure that this approach defines a continuous manifold, but I'm also not sure if that really matters in this case. | |||

== Bibliography == | == Bibliography == | ||

<references /> | <references /> | ||

Revision as of 21:43, 18 November 2015

Introduction

The goal in many machine learning problems is to extract information from data with minimal prior knowledge<ref name = "main"> Rifai, S., Dauphin, Y. N., Vincent, P., Bengio, Y., & Muller, X. (2011). The manifold tangent classifier. In Advances in Neural Information Processing Systems (pp. 2294-2302). </ref> These algorithms are designed to work on numerous problems which they may not be specifically tailored towards, thus domain-specific knowledge is generally not incorporated into the models. However, some generic "prior" hypotheses are considered to aid in the general task of learning, and three very common ones are presented below:

- The semi-supervised learning hypothesis: This states that knowledge of the input distribution [math]\displaystyle{ p\left(x\right) }[/math] can aid in learning the output distribution [math]\displaystyle{ p\left(y|x\right) }[/math] .<ref>Lasserre, J., Bishop, C. M., & Minka, T. P. (2006, June). Principled hybrids of generative and discriminative models. In Computer Vision and Pattern Recognition, 2006 IEEE Computer Society Conference on (Vol. 1, pp. 87-94). IEEE.</ref> This hypothesis lends credence to not only the theory of strict semi-supervised learning, but also unsupervised pretraining as a method of feature extraction.<ref> Hinton, G. E., Osindero, S., & Teh, Y. W. (2006). A fast learning algorithm for deep belief nets. Neural computation, 18(7), 1527-1554.</ref><ref>Erhan, D., Bengio, Y., Courville, A., Manzagol, P. A., Vincent, P., & Bengio, S. (2010). Why does unsupervised pre-training help deep learning?. The Journal of Machine Learning Research, 11, 625-660.</ref>

- The unsupervised manifold hypothesis: This states that real-world data presented in high-dimensional spaces is likely to concentrate around a low-dimensional sub-manifold.<ref>Cayton, L. (2005). Algorithms for manifold learning. Univ. of California at San Diego Tech. Rep, 1-17.</ref>

- The manifold hypothesis for classification: This states that points of different classes are likely to concentrate along different sub-manifolds, separated by low-density regions of the input space.<ref name = "main"></ref>

The recently-proposed Contractive Auto-Encoder (CAE) algorithm has shown success in the task of unsupervised feature extraction,<ref name = "CAE">Rifai, S., Vincent, P., Muller, X., Glorot, X., & Bengio, Y. (2011). Contractive auto-encoders: Explicit invariance during feature extraction. In Proceedings of the 28th International Conference on Machine Learning (ICML-11) (pp. 833-840).</ref> with its successful application in pre-training of Deep Neural Networks (DNN) an illustration of the merits of adopting Hypothesis 1. CAE also yields a mostly contractive mapping that is locally only sensitive to a few input directions, which implies that it models a lower-dimensional manifold (exploiting Hypothesis 2) since the directions of sensitivity are in the tangent space of the manifold.

This paper furthers the previous work by using the information about the tangent spaces by considering Hypothesis 3: it extracts basis vectors for the local tangent space around each training point from the parameters of the CAE. Then, older supervised classification algorithms that exploit tangent directions as domain-specific prior knowledge can be used on the tangent spaces generated by CAE for fine-tuning the overall classification network. This approach seamlessly integrates all three of the above hypotheses and produces record-breaking results (for 2011) on image classification.

Contractive Auto-Encoders (CAE) and Tangent Classification

The problem is to find a non-linear feature extractor for a dataset [math]\displaystyle{ \mathcal{D} = \{x_1, \ldots, x_n\} }[/math], where [math]\displaystyle{ x_i \in \mathbb{R}^d }[/math] are i.i.d. samples from an unknown distribution [math]\displaystyle{ p\left(x\right) }[/math].

Traditional Auto-Encoders

A traditional auto-encoder learns an encoder function [math]\displaystyle{ h: \mathbb{R}^d \rightarrow \mathbb{R}^{d_h} }[/math] along with a decoder function [math]\displaystyle{ g: \mathbb{R}^{d_h} \rightarrow \mathbb{R} }[/math], represented as [math]\displaystyle{ r = g\left(h\left(x\right)\right) }[/math]. [math]\displaystyle{ h\, }[/math] maps input [math]\displaystyle{ x\, }[/math] to the hidden input space, and [math]\displaystyle{ g\, }[/math] reconstructs [math]\displaystyle{ x\, }[/math]. When [math]\displaystyle{ L\left(x,g\left(h\left(x\right)\right)\right) }[/math] denotes the average reconstruction error, the objective function being optimized to learn the parameters [math]\displaystyle{ \theta\, }[/math] of the encoder/decoder is as follows:

- [math]\displaystyle{ \mathcal{J}_{AE}\left(\theta\right) = \sum_{x\in\mathcal{D}}L\left(x,g\left(h\left(x\right)\right)\right) }[/math]

The form of the encoder is [math]\displaystyle{ h\left(x\right) = s\left(Wx + b_h\right) }[/math], where [math]\displaystyle{ s\left(z\right) = \frac{1}{1 + e^{-z}} }[/math] is the element-wise logistic sigmoid. [math]\displaystyle{ W \in \mathbb{R}^{d_h \times d} }[/math] and [math]\displaystyle{ b_h \in \mathbb{R}^{d_h} }[/math] are the parameters (weight matrix and bias vector, respectively). The form of the decoder is [math]\displaystyle{ r = g\left(h\left(x\right)\right) = s_2\left(W^Th\left(x\right)+b_r\right) }[/math], where [math]\displaystyle{ \,s_2 = s }[/math] or the identity. The weight matrix [math]\displaystyle{ W^T\, }[/math] is shared with the encoder, with the only new parameter being the bias vector [math]\displaystyle{ b_r \in \mathbb{R}^d }[/math].

The loss function can either be the squared error [math]\displaystyle{ L\left(x,r\right) = \|x - r\|^2 }[/math] or the Bernoulli cross-entropy, given by:

- [math]\displaystyle{ L\left(x, r\right) = -\sum_{i=1}^d \left[x_i \mbox{log}\left(r_i\right) + \left(1 - x_i\right)\mbox{log}\left(1 - r_i\right)\right] }[/math]

First- and Higher-Order Contractive Auto-Encoders

Additional Penalty on Jacobian

The Contractive Auto-Encoder (CAE), proposed by Rifai et al.<ref name = "CAE"></ref>, encourages robustness of [math]\displaystyle{ h\left(x\right) }[/math] to small variations in [math]\displaystyle{ x }[/math] by penalizing the Frobenius norm of the encoder's Jacobian [math]\displaystyle{ J\left(x\right) = \frac{\partial h}{\partial x}\left(x\right) }[/math]. The new objective function to be minimized is:

- [math]\displaystyle{ \mathcal{J}_{CAE}\left(\theta\right) = \sum_{x\in\mathcal{D}}L\left(x,g\left(h\left(x\right)\right)\right) + \lambda\|J\left(x\right)\|_F^2 }[/math]

where [math]\displaystyle{ \lambda }[/math] is a non-negative regularization parameter. We can compute the [math]\displaystyle{ j^{th} }[/math] row of the Jacobian of the sigmoidal encoder quite easily using the [math]\displaystyle{ j^{th} }[/math] row of [math]\displaystyle{ W }[/math]:

- [math]\displaystyle{ J\left(x\right)_j = \frac{\partial h_j\left(x\right)}{\partial x} = h_j\left(x\right)\left(1 - h_j\left(x\right)\right)W_j }[/math]

Additional Penalty on Hessian

It is also possible to penalize higher-order derivatives by approximating the Hessian (explicit computation of the Hessian is costly). It is sufficient to penalize the difference between [math]\displaystyle{ J\left(x\right) }[/math] and [math]\displaystyle{ J\left(x + \varepsilon\right) }[/math] where [math]\displaystyle{ \,\varepsilon }[/math] is small, as this represents the rate of change of the Jacobian. This yields the "CAE+H" variant, with objective function as follows:

- [math]\displaystyle{ \mathcal{J}_{CAE+H}\left(\theta\right) = \mathcal{J}_{CAE}\left(\theta\right) + \gamma\sum_{x \in \mathcal{D}}\mathbb{E}_{\varepsilon\sim\mathcal{N}\left(0,\sigma^2I\right)} \left[\|J\left(x\right) - J\left(x + \varepsilon\right)\|^2\right] }[/math]

The expectation above, in practice, is taken over stochastic samples of the noise variable [math]\displaystyle{ \varepsilon\, }[/math] at each stochastic gradient descent step. [math]\displaystyle{ \gamma\, }[/math] is another regularization parameter. This formulation will be the one used within this paper.

Characterizing the Tangent Bundle Captured by a CAE

Although the regularization term encourages insensitivity of [math]\displaystyle{ h(x) }[/math] in all input space directions, the pressure to form an accurate reconstruction counters this somewhat, and the result is that [math]\displaystyle{ h(x) }[/math] is only sensitive to a few input directions necessary to distinguish close-by training points.<ref name = "CAE"></ref> Geometrically, the interpretation is that these directions span the local tangent space of the underlying manifold the characterizes the input data.

Geometric Terms

- Tangent Bundle: The tangent bundle of a smooth manifold is the manifold along with the set of tangent planes taken at all points in it.

- Chart: A local Euclidean coordinate system equipped to a tangent plane. Each tangent plane has its own chart.

- Atlas: A collection of local charts.

Conditions for Feature Mapping to Define an Atlas on a Manifold

To obtain a proper atlas of charts, [math]\displaystyle{ h }[/math] must be a local diffeomorphism (locally smooth and invertible). Since the sigmoidal mapping is smooth, [math]\displaystyle{ \,h }[/math] is guaranteed to be smooth. To determine injectivity of [math]\displaystyle{ h\, }[/math], consider the following, [math]\displaystyle{ \forall x_i, x_j \in \mathcal{D} }[/math]:

- [math]\displaystyle{ \begin{align} h(x_i) = h(x_j) &\Leftrightarrow s\left(Wx_i + b_h\right) = s\left(Wx_j + b_h\right) \\ & \Leftrightarrow Wx_i + b_h = Wx_j + b_h \mbox{, since } s \mbox{ is invertible} \\ & \Leftrightarrow W\Delta_{ij} = 0 \mbox{, where } \Delta_{ij} = x_i - x_j \end{align} }[/math]

Thus, as long as [math]\displaystyle{ W\, }[/math] forms a basis spanned by its rows [math]\displaystyle{ W_k\, }[/math] such that [math]\displaystyle{ \forall i,j \,\,\exists \alpha \in \mathbb{R}^{d_h} | \Delta_{ij} = \sum_{k=1}^{d_h}\alpha_k W_k }[/math], then the injectivity of [math]\displaystyle{ h\left(x\right) }[/math] will be preserved (as this would imply [math]\displaystyle{ \Delta_{ij} = 0\, }[/math] above). Furthermore, if we limit the domain of [math]\displaystyle{ \,h }[/math] to [math]\displaystyle{ h\left(\mathcal{D}\right) \subset \left(0,1\right)^{d_h} }[/math], containing only the values obtainable by [math]\displaystyle{ h\, }[/math] applied to the training set [math]\displaystyle{ \mathcal{D} }[/math], then [math]\displaystyle{ \,h }[/math] is surjective by definition. Therefore, [math]\displaystyle{ \,h }[/math] will be bijective between [math]\displaystyle{ h\, }[/math] and [math]\displaystyle{ h\left(\mathcal{D}\right) }[/math], meaning that [math]\displaystyle{ h\, }[/math] will be a local diffeomorphism around each point in the training set.

Generating an Atlas from a Learned Feature Mapping

We now need to determine how to generate local charts around each [math]\displaystyle{ x \in \mathcal{D} }[/math]. Since [math]\displaystyle{ h }[/math] must be sensitive to changes between [math]\displaystyle{ x_i }[/math] and one of its neighbours [math]\displaystyle{ x_j }[/math], but insensitive to other changes, we expect this to be encoded in the spectrum of the Jacobian [math]\displaystyle{ J\left(x\right) = \frac{\partial h}{\partial x}\left(x\right) }[/math]. Thus, we define a local chart around [math]\displaystyle{ x }[/math] using the singular value decomposition of [math]\displaystyle{ \,J^T(x) = U(x)S(x)V^T(x) }[/math]. The tangent plane [math]\displaystyle{ \mathcal{H}_x }[/math] at [math]\displaystyle{ \,x }[/math] is then given by the span of the set of principal singular vectors [math]\displaystyle{ \mathcal{B}_x }[/math], as long as the associated singular value is above a given small [math]\displaystyle{ \varepsilon\, }[/math]:

- [math]\displaystyle{ \mathcal{B}_x = \{U_{:,k}(x) | S_{k,k}(x) \gt \varepsilon\} \mbox{ and } \mathcal{H}_x = \{x + v | v \in \mbox{span}\left(\mathcal{B}_x\right)\} }[/math]

where [math]\displaystyle{ U_{:,k}(x)\, }[/math] is the [math]\displaystyle{ k^{th} }[/math] column of [math]\displaystyle{ U\left(x\right) }[/math].

Then, we can define an atlas [math]\displaystyle{ \mathcal{A} }[/math] captured by [math]\displaystyle{ h\, }[/math], based on the local linear approximation around each example:

- [math]\displaystyle{ \mathcal{A} = \{\left(\mathcal{M}_x, \phi_x\right) | x\in\mathcal{D}, \phi_x\left(\tilde{x}\right) = \mathcal{B}_x\left(x - \tilde{x}\right)\} }[/math]

Exploiting Learned Directions for Classification

We would like to use the local charts defined above as additional information for the task of classification. In doing so, we will adopt the manifold hypothesis for classification.

CAE-Based Tangent Distance

We start by defining the tangent distance between two points as the difference between their two respective hyperplanes [math]\displaystyle{ \mathcal{H}_x, \mathcal{H}_y }[/math] defined above, where distance is defined as:

- [math]\displaystyle{ d\left(\mathcal{H}_x,\mathcal{H}_y\right) = \mbox{inf}\{\|z - w\|^2\,\, | \left(z,w\right) \in \mathcal{H}_x \times \mathcal{H}_y\} }[/math]

Finding this distance is a convex problem which is solvable by solving a system of linear equations.<ref>Simard, P., LeCun, Y., & Denker, J. S. (1993). Efficient pattern recognition using a new transformation distance. In Advances in neural information processing systems (pp. 50-58).</ref> Minimizing the distance in this way allows [math]\displaystyle{ x, y \in \mathcal{D} }[/math] to move along their associated tangent spaces, and have the distance evaluated where [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math] are closest. A nearest-neighbour classifier could then be used based on this distance.

CAE-Based Tangent Propagation

Nearest-neighbour techniques work in theory, but are often impractical for large-scale datasets. Classifying test points in this way grows linearly with the number of training points. Neural networks, however, can quickly classify test points once they are trained. We would like the output [math]\displaystyle{ o }[/math] of the classifier to be insensitive to variations in the directions of the local chart around [math]\displaystyle{ x }[/math]. To this end, we add the following penalty to the objective function of the (supervised) network:

- [math]\displaystyle{ \Omega\left(x\right) = \sum_{u \in \mathcal{B}_x} \left|\left| \frac{\partial o}{\partial x}\left(x\right) u \right|\right|^2 }[/math]

The Manifold Tangent Classifier (MTC)

Finally, we are able to put all of the results together into a full algorithm for training a network. The steps follow below:

- Train (unsupervised) a stack of [math]\displaystyle{ K\, }[/math] CAE+H layers as in section 2.2.2. Each layer is trained on the representation learned by the previous layer.

- For each [math]\displaystyle{ x_i \in \mathcal{D} }[/math], compute the Jacobian of the last layer representation [math]\displaystyle{ J^{(K)}(x_i) = \frac{\partial h^{(K)}}{\partial x}\left(x_i\right) }[/math] and its SVD. Note that [math]\displaystyle{ J^{(K)}\, }[/math] is the product of the Jacobians of each encoder. Store the leading [math]\displaystyle{ d_M\, }[/math] singular vectors in [math]\displaystyle{ \mathcal{B}_{x_i} }[/math].

- After the [math]\displaystyle{ K\, }[/math] CAE+H layers, add a sigmoidal output layer with a node for each class. Train the entire network for supervised classification, adding in the propagation penalty in 2.4.2. Note that for each [math]\displaystyle{ x_i, \mathcal{B}_{x_i} }[/math] contains the set of tangent vectors to use.

Results

Datasets Considered

The MTC was tested on the following datasets:

- MNIST: Set of 28 by 28 images of handwritten digits, and the goal is to predict the digit contained in the image.

- Reuters Corpus Volume I: Contains 800,000 real-world news stories. Used the 2000 most frequent words calculated on the whole dataset to create a bag-of-words representation.

- CIFAR-10: Dataset of 70,000 32 by 32 RGB real-world images.

- Forest Cover Type: Large-scale database of cartographic variables for prediction of forest cover types.

Method

To investigate the improvements made by CAE-learned tangents, the following method is employed: Optimal hyper-parameters (e.g. [math]\displaystyle{ \gamma, \lambda\,, }[/math] etc.) were selected by cross-validation on a disjoint validation set disjoint from the training set. The quality of the features extracted by the CAE is evaluated by initializing a standard multi-layer perceptron network with the same parameters as the trained CAE and fine-tuning it by backpropagation on the supervised task.

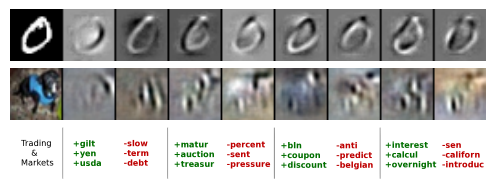

Visualization of Learned Tangents

Figure 1 visualizes the tangents learned by CAE. The example is on the left, and 8 tangents are shown to the right. On the MNIST dataset, the tangents are small geometric transformations. For CIFAR-10, the tangents appear to be parts of the image. For Reuters, the tangents correspond to addition/removal of similar words, with the positive terms in green and the negative terms in red. We see that the tangents do not seem to change the class of the example (e.g. the tangents of the above "0" in MNIST all resemble zeroes).

MTC in Semi-Supervised Setting

The MTC method was evaluated on the MNIST dataset in a semi-supervised setting: the unsupervised feature extractor is trained on the full training set, and the supervised classifier is only trained on a restricted label set. The results with a single layer perceptron initialized with CAE+H pretraining (abbreviated CAE), and the same classifier with tangent propagation added (i.e. MTC) are in table 1. The performance is compared to other methods the do not consider the semi-supervised learning hypothesis (Support Vector Machines (SVM), Neural Networks (NN), Convolutional Neural Networks (CNN)), and those methods perform poorly against MTC, especially when labeled data is low.

| # Labeled | NN | SVM | CNN | CAE | MTC |

| 100 | 25.81 | 23.44 | 22.98 | 13.47 | 12.03 |

| 600 | 11.44 | 8.85 | 7.68 | 6.3 | 5.13 |

| 1000 | 10.7 | 7.77 | 6.45 | 4.77 | 3.64 |

| 3000 | 6.04 | 4.21 | 3.35 | 3.22 | 2.57 |

MTC in Full Classification Problems

We consider using MTC to classify using the full MNIST dataset (i.e. the fully supervised problem), and compare with other methods. The CAE used for tangent discovery is a two-layer deep network with 2000 units per-layer pretrained with the CAE+H objective. The MTC uses the same stack of CAEs trained with tangent propagation, using [math]\displaystyle{ d_M = 15\, }[/math] tangents. The MTC produces state-of-the-art results, achieving a 0.81% error on the test set (as opposed to the previous state-of-the-art result of 0.95% error, achieved by Deep Boltzmann Machines). Table 2 summarizes this result. Note that MTC also beats out CNN, which utilizes prior knowledge about vision using convolutions and pooling.

| K-NN | NN | SVM | CAE | DBM | CNN | MTC |

| 3.09% | 1.60% | 1.40% | 1.04% | 0.95% | 0.95% | 0.81% |

A 4-layer MTC was trained on the Forest CoverType dataset. The MTC produces the best performance on this classification task, beating out the previous best method which used a mixture of non-linear SVMs (denoted as distributed SVM).

| SVM | Distributed SVM | MTC |

| 4.11% | 3.46% | 3.13% |

Conclusion

This paper unifies three common generic prior hypotheses in a unified manner. It uses a semi-supervised manifold approach to examine local charts around training points in the data, and then uses the tangents generated by these local charts to compare different classes. The tangents that are generated seem to be a meaningful decompositions of the training examples. When combining the tangents with the classifier, state-of-the-art results are obtained on classification problems in a variety of domains.

Discussion

- I thought about how it could be possible to use an element-wise rectified linear unit [math]\displaystyle{ R\left(x\right) = \mbox{max}\left(0,x\right) }[/math] in place of the sigmoidal function for encoding, as this type of functional form has seen success in other deep learning methods. However, I believe that this type of functional form would preclude [math]\displaystyle{ h }[/math] from being diffeomorphic, as the [math]\displaystyle{ x }[/math]-values that are negative could not possibly be reconstructed. Thus, the sigmoidal form should likely be retained, although it would be interesting to see how other invertible non-linearities would perform (e.g. hyperbolic tangent).

- It would be interesting to investigate applying the method of tangent extraction to other unsupervised methods, and then create a classifier based on these tangents in the same way that it is done in this paper. Further work could be done to apply this approach to clustering algorithms, kernel PCA, E-M, etc. This is more of a suggestion than a concrete idea, however.

- It is not exactly clear to me how a [math]\displaystyle{ h }[/math] could ever define a true diffeomorphism, since [math]\displaystyle{ h: \mathbb{R}^{d} \mapsto \mathbb{R}^{d_h} }[/math], where [math]\displaystyle{ d \ne d_h }[/math], in general. Normally, if [math]\displaystyle{ d \gt d_h }[/math], we would not expect such a map [math]\displaystyle{ h }[/math] to possibly be injective, since the cardinality of the domain is higher than that of the codomain. However, they may be able to "manufacture" the injectivity of [math]\displaystyle{ h }[/math] using the fact that [math]\displaystyle{ \mathcal{D} }[/math] is a discrete set of points. I'm not sure that this approach defines a continuous manifold, but I'm also not sure if that really matters in this case.

Bibliography

<references />