goingDeeperWithConvolutions

Revision as of 17:23, 20 October 2015

Introduction

In the last three years, advances in deep learning, and in convolutional networks in particular ([http://white.stanford.edu/teach/index.php/An_Introduction_to_Convolutional_Neural_Networks an introduction to CNNs]), have dramatically improved the quality of image recognition. Error rates in the ILSVRC competition ([http://image-net.org/challenges/LSVRC/ LSVRC]) have dropped significantly year after year. This paper<ref name=gl> Szegedy, Christian, et al. [http://arxiv.org/pdf/1409.4842.pdf "Going deeper with convolutions."] arXiv preprint arXiv:1409.4842 (2014). </ref> proposes a new deep convolutional neural network architecture codenamed Inception. Using the Inception module and a carefully crafted design, the researchers built a 22-layer deep network called GoogLeNet, which uses 12× fewer parameters while being significantly more accurate than the winner of ILSVRC 2012.<ref name=im> Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. [http://www.cs.toronto.edu/~fritz/absps/imagenet.pdf "Imagenet classification with deep convolutional neural networks."] Advances in Neural Information Processing Systems. 2012. </ref>

Related work

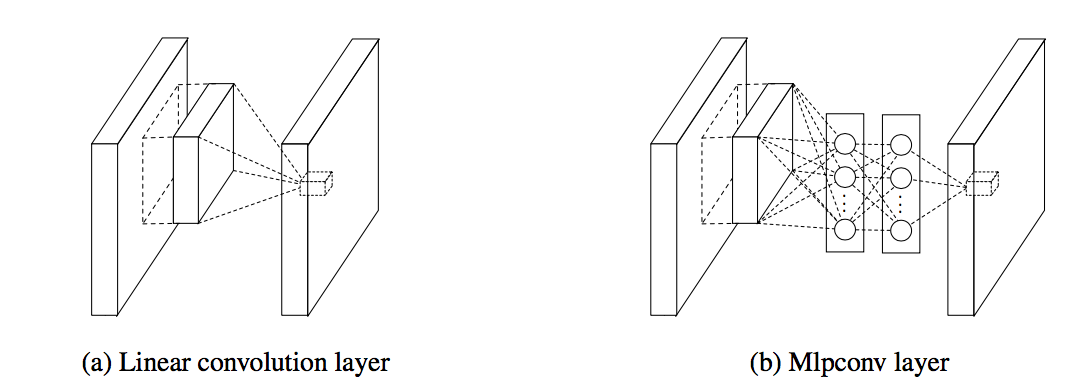

In 2013, Lin et al.<ref name=nin> Min Lin, Qiang Chen and Shuicheng Yan. Network in Network </ref> pointed out that the convolution filter in a CNN is a generalized linear model (GLM) of the underlying data patch, and that a GLM provides only a low level of abstraction. They suggested replacing the GLM with a "micro network" structure, which is a general nonlinear function approximator.
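One way to picture such a micro network is as a small multilayer perceptron applied independently at every spatial position. After the first patch-wise convolution, each of its layers then amounts to a 1 × 1 convolution followed by a nonlinearity. The sketch below is plain Python and illustrative only: the function names, the choice of ReLU, and the toy weights are assumptions made for this example, not code from Lin et al.

```python
def relu(vector):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in vector]


def conv1x1(feature_map, weights, bias):
    """Apply a 1x1 convolution.

    feature_map: H x W x C_in nested lists.
    weights:     C_out x C_in nested lists.
    bias:        list of C_out floats.
    Each pixel's channel vector is mapped by the same linear layer.
    """
    out = []
    for row in feature_map:
        out_row = []
        for pixel in row:
            out_row.append([
                sum(w * x for w, x in zip(w_row, pixel)) + b
                for w_row, b in zip(weights, bias)
            ])
        out.append(out_row)
    return out


def micro_network(feature_map, layers):
    """Stack of (weights, bias) 1x1 layers, with ReLU after each layer."""
    for weights, bias in layers:
        linear = conv1x1(feature_map, weights, bias)
        feature_map = [[relu(pixel) for pixel in row] for row in linear]
    return feature_map


# Toy input: 1 x 2 spatial grid with 2 channels (hypothetical values).
fmap = [[[1.0, 2.0], [0.0, -1.0]]]
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [0.0, 1.0]], [0.0, 0.0, 0.0]),  # 2 -> 3 channels
    ([[1.0, 1.0, 1.0]], [0.0]),                                # 3 -> 1 channel
]
out = micro_network(fmap, layers)
```

Because the same weights are reused at every pixel, the micro network adds nonlinearity across channels without enlarging the receptive field.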

In the same paper<ref name=nin></ref>, Lin et al. also proposed a new output layer, global average pooling, to improve performance. Traditionally, the feature maps of the last convolutional layer are vectorized and fed into fully connected layers followed by a softmax logistic regression layer.<ref name=im></ref>
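To make the contrast concrete, the sketch below assumes that the new output layer is the global average pooling of Network in Network, where each final feature map is averaged to a single value that feeds the softmax directly. It compares this with the parameter count of a traditional flatten-plus-fully-connected head. The layer sizes (6 × 6 × 256 maps, 4096 hidden units, 1000 classes) and function names are hypothetical and chosen only for illustration.

```python
def fc_head_params(h, w, c, n_hidden, n_classes):
    """Weights and biases of: flatten(h*w*c) -> n_hidden -> n_classes."""
    flat = h * w * c
    return flat * n_hidden + n_hidden + n_hidden * n_classes + n_classes


def global_average_pool(feature_maps):
    """Average each H x W map to one number; the layer has no parameters.

    feature_maps: list of C maps, each an H x W nested list.
    """
    return [
        sum(sum(row) for row in fmap) / (len(fmap) * len(fmap[0]))
        for fmap in feature_maps
    ]


# Hypothetical sizes for the traditional head.
traditional_params = fc_head_params(6, 6, 256, 4096, 1000)

# Toy example: two 2 x 2 feature maps, one per class.
maps = [
    [[1.0, 3.0], [5.0, 7.0]],
    [[0.0, 0.0], [2.0, 2.0]],
]
pooled = global_average_pool(maps)
print(traditional_params, pooled)
```

Under these assumed sizes the fully connected head carries tens of millions of parameters, while the pooled output needs none of its own.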

Architectural details

In "Going deeper with convolutions", Szegedy et al. argue that convolutional filters of different sizes can cover different clusters of information. For computational convenience, they chose 1 × 1, 3 × 3 and 5 × 5 filters. Additionally, since pooling operations have been essential to the success of other state-of-the-art convolutional networks, they also added a pooling layer to the module. Together these make up the naive Inception module.
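The shape bookkeeping of the naive module can be sketched as follows. This assumes, as in the paper, that the branches run in parallel on the same input with stride 1 and padding that preserves the spatial size, and that their outputs are concatenated along the channel axis. The function names and the filter counts in the example are hypothetical.

```python
def naive_inception_shape(h, w, c_in, n1x1, n3x3, n5x5):
    """Output shape (h, w, channels) of a naive Inception module.

    Every branch keeps the h x w spatial size. The pooling branch has no
    filters, so it passes all c_in input channels through unchanged.
    """
    c_out = n1x1 + n3x3 + n5x5 + c_in
    return (h, w, c_out)


def naive_inception_weights(c_in, n1x1, n3x3, n5x5):
    """Number of convolution weights (biases ignored) in the three conv branches."""
    return c_in * (1 * 1 * n1x1 + 3 * 3 * n3x3 + 5 * 5 * n5x5)


# Hypothetical configuration: 28 x 28 input with 192 channels.
shape = naive_inception_shape(28, 28, 192, n1x1=64, n3x3=128, n5x5=32)
weights = naive_inception_weights(192, n1x1=64, n3x3=128, n5x5=32)
print(shape, weights)
```

Because the pooling branch always contributes all of its input channels, the channel count can only grow from one stacked module to the next, which is the cost problem the dimension reduction addresses.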

Inspired by "Network in Network", Szegedy et al. use a 1 × 1 convolutional layer as the "micro network" suggested by Lin et al. The 1 × 1 convolutions also serve as dimension reduction modules that remove computational bottlenecks: in practice, before the expensive 3 × 3 and 5 × 5 convolutions, 1 × 1 convolutions are used to reduce the number of input feature maps.
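A rough count of multiply-accumulate operations shows why the reduction helps. The sketch below compares a 5 × 5 convolution applied directly to the input with the same convolution preceded by a 1 × 1 reduction. The sizes (28 × 28 input, 192 input channels, reduction to 16 channels, 32 output filters) and the helper name `conv_macs` are assumptions chosen for illustration, and biases and padding effects are ignored.

```python
def conv_macs(h, w, k, c_in, c_out):
    """Multiply-accumulates for a k x k convolution producing an h x w x c_out output."""
    return h * w * k * k * c_in * c_out


# Hypothetical sizes.
h = w = 28
c_in = 192
n5x5 = 32
reduce_to = 16

# 5x5 convolution applied directly to all 192 input channels.
direct = conv_macs(h, w, 5, c_in, n5x5)

# 1x1 reduction to 16 channels, then the 5x5 convolution on the reduced maps.
reduced = conv_macs(h, w, 1, c_in, reduce_to) + conv_macs(h, w, 5, reduce_to, n5x5)

print(direct, reduced, round(direct / reduced, 2))
```

With these numbers the reduced path needs roughly a tenth of the operations of the direct one, at the price of one extra cheap layer.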

References

<references />