deflation Methods for Sparse PCA: Difference between revisions

m (Conversion script moved page Deflation Methods for Sparse PCA to deflation Methods for Sparse PCA: Converting page titles to lowercase) |

|||

| (24 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

==Introduction== | ==Introduction== | ||

[http://en.wikipedia.org/wiki/Principle_components_analysis Principal component analysis (PCA)] is a popular change of variables technique used in [http://en.wikipedia.org/wiki/Dimension_reduction dimension reduction] and visualization. The goal of PCA is to extract several principal components, linear combinations of input variables that together best account for the [http://en.wikipedia.org/wiki/Variance variance] in a data set. Often, PCA is formulated as an [http://en.wikipedia.org/wiki/Eigendecomposition_of_a_matrix eigenvalue decomposition] problem: each eigenvector of the sample covariance matrix of a data set corresponds to the loadings or coefficients of a principal component. A common approach to solving this partial eigenvalue decomposition is to iteratively alternate between two sub-problems: rank-one variance maximization (which is done by finding the leading eigenvector of the covariance matrix) and [http://www.miislita.com/information-retrieval-tutorial/matrix-tutorial-3-eigenvalues-eigenvectors.html#deflation-method matrix deflation] (which is done by modifying the covariance matrix to eliminate the influence of that eigenvector). | [http://en.wikipedia.org/wiki/Principle_components_analysis Principal component analysis (PCA)] is a popular change of variables technique used in [http://en.wikipedia.org/wiki/Dimension_reduction dimension reduction] and visualization. The goal of PCA is to extract several principal components, [http://en.wikipedia.org/wiki/Linear_combination linear combinations] of input variables that together best account for the [http://en.wikipedia.org/wiki/Variance variance] in a data set. Often, PCA is formulated as an [http://en.wikipedia.org/wiki/Eigendecomposition_of_a_matrix eigenvalue decomposition] problem: each eigenvector of the sample [http://en.wikipedia.org/wiki/Covariance_matrix covariance matrix] of a data set corresponds to the loadings or coefficients of a principal component. A common approach to solving this partial eigenvalue decomposition is to iteratively alternate between two sub-problems: rank-one variance maximization (which is done by finding the leading eigenvector of the covariance matrix) and [http://www.miislita.com/information-retrieval-tutorial/matrix-tutorial-3-eigenvalues-eigenvectors.html#deflation-method matrix deflation] (which is done by modifying the covariance matrix to eliminate the influence of that eigenvector). | ||

A primary drawback of PCA is its lack of [http://en.wikipedia.org/wiki/Sparsity sparsity]. Each principal component is a linear combination of all variables; the loadings (weights) are all typically non-zero. Sparsity is desirable as it often leads to more interpretable results, reduced computation time, and improved generalization. One approach to avoid this drawback of PCA is the formulation of sparse PCA (SPCA), a link to the 2004 paper of which by Iain M. Johnstone ''et al.''<ref name="R1">Iain M. Johnstone and Arthur Y. Lu. Sparse Principal Components Analysis. Technical report, | A primary drawback of PCA is its lack of [http://en.wikipedia.org/wiki/Sparsity sparsity]. Each principal component is a linear combination of all variables; the loadings (weights) are all typically non-zero. Sparsity is desirable as it often leads to more interpretable results, reduced computation time, and improved generalization. One approach to avoid this drawback of PCA is the formulation of sparse PCA (SPCA), a link to the 2004 paper of which by Iain M. Johnstone ''et al.''<ref name="R1">Iain M. Johnstone and Arthur Y. Lu. "Sparse Principal Components Analysis". Technical report, | ||

Stanford University, Dept. of Statistics, 2004.</ref> is available [http://www-stat.stanford.edu/~imj/WEBLIST/AsYetUnpub/sparse.pdf here]. SPCA makes the PCA process sparse by searching for ''pseudo-eigenvectors'' and their corresponding sparse (few such values are non-zero) loadings that explain the maximal amount of variance in the data. | Stanford University, Dept. of Statistics, 2004.</ref> is available [http://www-stat.stanford.edu/~imj/WEBLIST/AsYetUnpub/sparse.pdf here]. SPCA makes the PCA process sparse by searching for ''pseudo-eigenvectors'' and their corresponding sparse (few such values are non-zero) loadings that explain the maximal amount of variance in the data. | ||

| Line 14: | Line 14: | ||

=== Hotelling's deflation and PCA=== | === Hotelling's deflation and PCA=== | ||

In the PCA setting, the goal is to extract the <math>r</math> leading eigenvectors of the sample covariance matrix, <math>A_0 \in\mathbb{S}^{p}_{+}</math>, as its eigenvectors are equivalent to the loadings of the first <math>\,r</math> principal components. Hotelling’s deflation method is a simple and popular technique for sequentially extracting these eigenvectors. On the <math>\,t</math>th iteration of the deflation method, we first extract the leading eigenvector of <math>\,A_{t -1}</math>, | In the PCA setting, the goal is to extract the <math>\,r</math> leading eigenvectors of the sample covariance matrix, <math>A_0 \in\mathbb{S}^{p}_{+}</math>, as its eigenvectors are equivalent to the loadings of the first <math>\,r</math> principal components. Hotelling’s deflation method is a simple and popular technique for sequentially extracting these eigenvectors. On the <math>\,t</math>-th iteration of the deflation method, we first extract the leading eigenvector of <math>\,A_{t -1}</math>, | ||

<br /><br /> | <br /><br /> | ||

<center><math>x_t = {\arg\max}_{x:x^Tx=1}x^TA_{t-1}x</math></center> | <center><math>x_t = {\arg\max}_{x:x^Tx=1}x^TA_{t-1}x</math></center> | ||

and we then use Hotelling's deflation to annihilate <math>x_t</math>: | and we then use Hotelling's deflation to annihilate <math>\,x_t</math>: | ||

<br/><br/> | <br/><br/> | ||

<center><math>A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center> | <center><math>A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center> | ||

The deflation step ensures that the <math>\,t+1 | The deflation step ensures that the <math>\,t+1</math>-st leading eigenvector of <math>\,A_0</math> is the leading eigenvector of | ||

<math>\,A_{t}</math>. The following proposition explains why. | <math>\,A_{t}</math>. The following proposition explains why. | ||

====Proposition==== | ====Proposition==== | ||

If <math>\lambda _{1}\ge ...\ge \lambda _{p}</math> are the eigenvalues of <math>A\in\mathbb{S}_{+}^{p},x_{1},\ldots,x_{p}</math>are the corresponding eigenvectors, and <math>\hat{A}=A-x_{j}x_{j}^{T}Ax_{j}x_{j}^{T}</math>for some <math>j\in 1, | If <math>\lambda _{1}\ge ...\ge \lambda _{p}</math> are the eigenvalues of <math>A\in\mathbb{S}_{+}^{p},x_{1},\ldots,x_{p}</math> are the corresponding eigenvectors, and <math>\hat{A}=A-x_{j}x_{j}^{T}Ax_{j}x_{j}^{T}</math> for some <math>j\in 1,\ldots,p,</math> then <math>\hat{A}</math> has eigenvectors <math>\,x_{1},...,x_{p}</math> with corresponding eigenvalues <math>\,\lambda _{1},\ldots,\lambda _{j-1},0,\lambda _{j+1},\lambda _{p}.</math> | ||

The proof of this proposition is simple and is given as follows: | The proof of this proposition is simple and is given as follows: | ||

<math>\, \hat{A} x_j = A x_j − x_j x_j^T A x_j x_j^T x_j = A x_j − x_j x_j^T A x_j = \lambda_j x_j − \lambda_j x_j = 0 x_j</math>.<br> | <math>\, \hat{A} x_j = A x_j − x_j x_j^T A x_j x_j^T x_j = A x_j − x_j x_j^T A x_j = \lambda_j x_j − \lambda_j x_j = 0 x_j</math>.<br> | ||

<math>\, \hat{A} x_i = A x_i − x_j x_j^T A x_j x_j^T x_i = A x_i − 0 = \lambda_i x_i</math>, <math>\,\forall i \neq j</math>. QED | <math>\, \hat{A} x_i = A x_i − x_j x_j^T A x_j x_j^T x_i = A x_i − 0 = \lambda_i x_i</math>, <math>\,\forall i \neq j </math>. QED | ||

Thus, Hotelling’s deflation preserves all eigenvectors of <math>\,A</math> whilst removing a selected eigenvalue of <math>\,A</math>. This implies that Hotelling's deflation preserves the positive semi-definiteness of <math>\,A</math>, i.e. the matrix resulting from deflating <math>\,A</math> | Thus, Hotelling’s deflation preserves all eigenvectors of <math>\,A</math> whilst removing a selected eigenvalue of <math>\,A</math>. This implies that Hotelling's deflation preserves the positive semi-definiteness of <math>\,A</math>, i.e. the matrix resulting from deflating <math>\,A</math> is positive semi-definite if and only if <math>\,A</math> is positive semi-definite. | ||

For the interested reader, a paper by Wang ''et al.'' titled ''Sparse PCA by Iterative Elimination Algorithm'' | For the interested reader, a paper by Wang ''et al.'' titled ''Sparse PCA by Iterative Elimination Algorithm'' provides the details regarding this algorithm and is available [http://www.math.msu.edu/~ywang/Preprints/spca1002-WangWu.pdf here]. | ||

===Hotelling's deflation and sparse PCA=== | ===Hotelling's deflation and sparse PCA=== | ||

In the [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] setting, we seek <math>\,r</math> sparse loadings which together capture the maximum amount of variance in the data. To find the first such "pseudo-eigenvector" equation, we can consider cardinality-constrained version of | In the [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] setting, we seek <math>\,r</math> sparse loadings which together capture the maximum amount of variance in the data. To find the first such "pseudo-eigenvector" equation, we can consider a cardinality-constrained version of the problem: | ||

<br/><br/> | <br/><br/> | ||

<center><math>x_1= | <center><math>x_1={\arg\max}_{x:x^Tx=1,\textbf{Card}(x)\leq k_1}x^TA_0x.</math></center> | ||

Typically, Hotelling’s deflation is utilized by substituting an extracted pseudo-eigenvector for a true eigenvector in the deflation step of <math>A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math>. This substitution, however, is seldom justified, for the properties of Hotelling’s deflation depend crucially on the use of a true eigenvector. | Typically, Hotelling’s deflation is utilized by substituting an extracted pseudo-eigenvector for a true eigenvector in the deflation step of <math>A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math>. This substitution, however, is seldom justified, for the properties of Hotelling’s deflation depend crucially on the use of a true eigenvector. If pseudo-eigenvectors are used in place of true eigenvectors, the [http://en.wikipedia.org/wiki/Cauchy%E2%80%93Schwarz_inequality Cauchy-Schwarz inequality] is violated and the pseudo-eigenvectors may not be orthogonal to the deflated matrix. As well, the deflated matrix may not be positive semidefinite under pseudo-eigenvector Hotelling's deflation. This shortcoming can be a serious problem since most iterative sparse PCA methods assume a positive semidefinite matrix at each step. | ||

===Alternative deflation techniques=== | ===Alternative deflation techniques=== | ||

To rectify the failings of pseudo-eigenvector Hotelling's deflation the author considers several alternative deflation techniques better suited to the [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] setting. It should be noted that any deflation-based sparse PCA method can utilize any of the deflation techniques discussed below. | |||

====Projection deflation==== | ====Projection deflation==== | ||

Given a data matrix <math>Y \in \mathbb{R}^{n\times p}</math> and an arbitrary unit vector <math>x\in \mathbb{R}</math>, an intuitive way to remove the contribution of <math>\,x</math> from <math>\,Y</math> is to project <math>\,Y</math> onto the orthocomplement of the space spanned by <math>x:\hat{Y} = Y(I-xx^T) </math>. If <math>\,A</math> is the sample covariance matrix of <math>\,Y</math> , then the sample covariance of <math>\hat{Y}</math> is given by<math>\hat{A}=(I-xx^T)A(I-xx^T)</math>, which leads to our formulation for projection deflation: | Given a data matrix <math>Y \in \mathbb{R}^{n\times p}</math> and an arbitrary unit vector <math>x\in \mathbb{R}</math>, an intuitive way to remove the contribution of <math>\,x</math> from <math>\,Y</math> is to project <math>\,Y</math> onto the orthocomplement of the space spanned by <math>x:\hat{Y} = Y(I-xx^T) </math>. If <math>\,A</math> is the sample covariance matrix of <math>\,Y</math> , then the sample covariance of <math>\hat{Y}</math> is given by<math>\hat{A}=(I-xx^T)A(I-xx^T)</math>, which leads to our formulation for projection deflation: | ||

<center><math>A_t=A_{t-1}-x_tx_t^TA_{t-1}-A_{t-1}x_tx_t^T+x_tx_t^TA_{t-1}x_tx_t^T=(I-x_tx_t^T)A_{t-1}(I-x_tx_t^T)</math></center> | <center><math>A_t=A_{t-1}-x_tx_t^TA_{t-1}-A_{t-1}x_tx_t^T+x_tx_t^TA_{t-1}x_tx_t^T=(I-x_tx_t^T)A_{t-1}(I-x_tx_t^T)</math></center> | ||

*When <math>\,x_t</math> is a true eigenvector of <math>\,A_{t-1}</math> with eigenvalue <math>\,\lambda_t</math>, projection deflation reduces to Hotelling's deflation. The derivation of this property is shown below. | |||

:<center><math>A_t=A_{t-1}-x_tx_t^TA_{t-1}-A_{t-1}x_tx_t^T+x_tx_t^TA_{t-1}x_tx_t^T</math></center> | :<center><math>A_t=A_{t-1}-x_tx_t^TA_{t-1}-A_{t-1}x_tx_t^T+x_tx_t^TA_{t-1}x_tx_t^T</math></center> | ||

:<center><math>=A_{t-1}-\lambda_tx_tx_t^T-\lambda_tx_tx_t^T+\lambda_tx_tx_t^T</math></center> | :<center><math>=A_{t-1}-\lambda_tx_tx_t^T-\lambda_tx_tx_t^T+\lambda_tx_tx_t^T</math></center> | ||

:<center><math>=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center> | :<center><math>=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center> | ||

*In the general case in which <math>\,x_t</math> is not a true eigenvector, projection deflation maintains the desirable properties that were lost to Hotelling's deflation. Unlike Hotelling's deflation, projection deflation preserves positive semi-definiteness of a matrix. This property is easy to show, and its derivation is given as follows: <math>\, \forall y,\; y^T A_t y = y^T (I - x_t x_t^T) A_{t-1} (I - x_t x_t^T) y = z^T A_{t-1} z</math>, where <math>\, z = (I - x_t x_t^T) y. </math> Also, unlike Hotelling's deflation, projection deflation renders <math>\,A_t</math> both left and right orthogonal to <math>\,x_t</math>. This is because <math>\,(I - x_t x_t^T) x_t = x_t - x_t = 0</math> and <math>\,A_t</math> is symmetric. This property also implies another important property of projection deflation: projection deflation annihilates all covariances with <math>\,x_t</math>. | |||

<math>\, \forall y, y^T A_t y = y^T (I - x_t x_t^T) A_{t-1} (I - x_t x_t^T) y = z^T A_{t-1} z</math>, where <math>\, z = (I - x_t x_t^T) y. </math> | |||

orthogonal to <math>\,x_t</math>. This is because <math>\,(I - x_t x_t^T) x_t = x_t - x_t = 0</math> and <math>\,A_t</math> is symmetric. | |||

This property also implies another important property of projection deflation: projection deflation annihilates all covariances with <math>\,x_t</math>. | |||

====Schur complement deflation==== | ====Schur complement deflation==== | ||

Let <math>x\in\mathbb{R}^p</math>be a unit vector and <math>W\in\mathbb{R}^p</math> be a Gaussian random vector, representing the joint distribution of the data variables. If <math>\,W</math> has covariance matrix <math>\,\ | Let <math>x\in\mathbb{R}^p</math> be a unit vector and <math>W\in\mathbb{R}^p</math> be a Gaussian random vector, representing the joint distribution of the data variables. If <math>\,W</math> has covariance matrix <math>\,\Sigma</math>, then (<math>\,W,Wx</math>) has covariance matrix <math>\,V=\left( \begin{matrix} \Sigma & \Sigma x_{{}} \\ x^{T}\Sigma & x^{T}\Sigma x \\\end{matrix} \right)</math>, and <math>Var(W|Wx)=\Sigma -\frac{\Sigma xx^{T}\Sigma }{x^{T}\Sigma x}</math> whenever <math>x^{T}\Sigma x\ne 0</math>. That is, the conditional variance is the [http://en.wikipedia.org/wiki/Schur_complement Schur complement] of the vector variance <math>\,x^T \Sigma x</math> in the full covariance matrix <math>\,V</math>. Substituting sample covariance matrices for their population counterparts, Schur complement deflation is: | ||

<center><math>A_{t}=A_{t-1}-\frac{A_{t-1}x_{t}x_{t}^{T}A_{t-1}}{x_{t}^{T}A_{t-1}x_{t}}</math></center> | <center><math>A_{t}=A_{t-1}-\frac{A_{t-1}x_{t}x_{t}^{T}A_{t-1}}{x_{t}^{T}A_{t-1}x_{t}}.</math></center> | ||

Like projection deflation, Schur complement deflation preserves positive-semi-definiteness and also renders <math>\,x_t</math> both left and right orthogonal to <math>\,A_t</math>. These two properties of Schur complement deflation are easy to show, and their derivations can be found in Mackey' | Like projection deflation, Schur complement deflation preserves positive-semi-definiteness and also renders <math>\,x_t</math> both left and right orthogonal to <math>\,A_t</math>. These two properties of Schur complement deflation are easy to show, and their derivations can be found in the Reference section of <ref name="R2">Lester Mackey. "Deflation Methods for Sparse PCA." ''In Advances in Neural Information Processing Systems 21'', pages 1017–1024, 2009.</ref> | ||

: | :*When <math>\,x_t</math> is an eigenvector of <math>\,A_{t-1}</math> with eigenvalue <math>\,\lambda_t \ne 0</math>, it reduces to Hotelling's deflation. | ||

:<center><math>A_{t}=A_{t-1}-\frac{A_{t-1}x_{t}x_{t}^{T}A_{t-1}}{x_{t}^{T}A_{t-1}x_{t}}</math></center> | :<center><math>A_{t}=A_{t-1}-\frac{A_{t-1}x_{t}x_{t}^{T}A_{t-1}}{x_{t}^{T}A_{t-1}x_{t}}</math></center> | ||

:<center><math>=A_{t-1}-\frac{\ | :<center><math>=A_{t-1}-\frac{\lambda_t x_tx_t^T\lambda_t}{\lambda_t}</math></center> | ||

:<center><math>=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center> | :<center><math>=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t.</math></center> | ||

It should be noted that, even though we motivated Schur complement deflation with a Gaussianity assumption, in general, this technique has a more general interpretation as a column projection of a data matrix. | It should be noted that, even though we motivated Schur complement deflation with a Gaussianity assumption, in general, this technique has a more general interpretation as a column projection of a data matrix. | ||

====Orthogonalized deflation==== | ====Orthogonalized deflation==== | ||

Whenever we deal with a sequence of non-orthogonal vectors, we must take care to distinguish between the variance explained by a vector and the additional variance explained, given all previous vectors.The | Whenever we deal with a sequence of non-orthogonal vectors, we must take care to distinguish between the variance explained by a vector and the additional variance explained, given all previous vectors. The | ||

additional variance explained by the <math>\,t</math>-th pseudo-eigenvector, <math>\,x_t</math>, is equivalent to the variance explained by the component of <math>\,x_t</math> orthogonal to the space spanned by all previous pseudo-eigenvectors, <math>\,q_t=x_t-P_{t-1}x_t</math> is the orthogonal projection onto the space spanned by <math>\,x_1,...,x_{t-1}</math>. On each deflation step, therefore, we only want to eliminate the variance associated with <math>\,q_t</math>. This is because annihilating | additional variance explained by the <math>\,t</math>-th pseudo-eigenvector, <math>\,x_t</math>, is equivalent to the variance explained by the component of <math>\,x_t</math> orthogonal to the space spanned by all previous pseudo-eigenvectors, <math>\,q_t=x_t-P_{t-1}x_t</math> where <math>\,P_t</math> is the orthogonal projection onto the space spanned by <math>\,x_1,...,x_{t-1}</math>. On each deflation step, therefore, we only want to eliminate the variance associated with <math>\,q_t</math>. This is because annihilating | ||

the full vector <math>\,x_t</math> would often lead to double counting by re-introducing components that are parallel to previously annihilated vectors. We shall now look at how this issue can be resolved. First, we can consider the issue of non-orthogonality in the context of Hotelling’s deflation. A modified version of Hotelling's deflation as described in [12] of the paper in the Reference section, known as orthogonalized Hotelling’s deflation (OHD), has been introduced. For this deflation technique, the following hold true: | the full vector <math>\,x_t</math> would often lead to double counting by re-introducing components that are parallel to previously annihilated vectors. We shall now look at how this issue can be resolved. First, we can consider the issue of non-orthogonality in the context of Hotelling’s deflation. A modified version of Hotelling's deflation as described in [12] of the paper in the Reference section, known as orthogonalized Hotelling’s deflation (OHD), has been introduced. For this deflation technique, the following hold true: | ||

: | :*OHD is equivalent to Hotelling's deflation for <math>\,t = 1</math>: | ||

:<center><math>A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center> | :<center><math>A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t</math></center><br /> | ||

: | :*When <math>\,t > 1</math>, OHD can be expressed in terms of running [http://en.wikipedia.org/wiki/Gram%E2%80%93Schmidt_process Gram-Schmidt] decomposition: | ||

:<center><math>q_{t}=\frac{(I-Q_{t-1}Q_{t-1}^{T})x_{t}}{\left\| (I-Q_{t-1}Q_{t-1}^{T})x{}_{t} \right\|}</math></center> | :<center><math>q_{t}=\frac{(I-Q_{t-1}Q_{t-1}^{T})x_{t}}{\left\| (I-Q_{t-1}Q_{t-1}^{T})x{}_{t} \right\|}</math></center> | ||

:<center><math>A_{t}=A_{t-1}-q_{t}q_{t}^{T}A_{t-1}q_{t}q_{t}^{T}</math></center> | :<center><math>A_{t}=A_{t-1}-q_{t}q_{t}^{T}A_{t-1}q_{t}q_{t}^{T},</math></center> where <math>\,q_t = x_1</math>, and <math>\,q_1,...,q_{t-1}</math> form the columns of <math>\,Q_{t-1}</math>. Since <math>\,q_1,...,q_{t-1}</math> form an [http://en.wikipedia.org/wiki/Orthonormal_basis orthonormal basis] for the space spanned by <math>\,x_1,...,x_{t-1}</math>, we have <math>\,Q_{t-1}Q_{t-1}^T=P_{t-1}</math>, which is the aforementioned orthogonal projection. | ||

Because the first round of OHD is equivalent to an application of Hotelling’s deflation, OHD inherits all of the weaknesses associated with Hotelling's deflation. Fortunately, the same principles behind OHD that preserve orthogonality may also be applied to projection deflation to create an orthogonalized variant of projection deflation that inherits all of the desirable properties of projection deflation that Hotelling's deflation lacks. Furthermore, it should be noted that the approach described above is unnecessary for Schur complement deflation. This is because Schur complement deflation is unique in that it preserves orthogonality in all subsequent rounds of its application.<br /><br /> | |||

'''Proposition:''' Orthogonalized Schur complement deflation is equivalent to Schur complement deflation.<br /><br /> | |||

'''Proof:''' Consider the <math>\,t</math>-th round of Schur complement deflation. We may write <math>\,x_t=o_t+p_t</math>, where <math>\,p_t</math> is in the subspace spanned by all previously extracted pseudo-eigenvectors and <math>\,o_t</math> is orthogonal to this subspace. Then we know that <math>\,A_{t-1}p_t=0</math>, as <math>\,p_t</math> is a linear combination of <math>x_1, \ldots , x_{t-1}</math>, and <math>\,A_{t-1}x_i = 0.</math> Thus, <math>x^T_tA_tx_t = p^T_t A_tp_t + o^T_t A_tp_t + p^T_t A_to_t + o^T_tA_to_t = o^T_t A_to_t</math>. Further, <math> A_{t-1}x_tx^T_t A_{t-1} = A_{t-1}p_tp^T_t A_{t-1}+A_{t-1}p_to^T_t A_{t-1}+A_{t-1}o_tp^T_t A_{t-1}+A_{t-1}o_to^T_t A_{t-1} =A_{t-1}o_to^T_t A_{t-1}</math>. Hence <math>A_t = A_{t-1} -\frac{A_{t-1}o_t o^T_t A_{t-1}}{o^T_t A_{t-1}o_t}=A_{t-1}-\frac{A_{t-1}q_tq^T_t A_{t-1}}{q^T_t A_{t-1}q_t}</math> as <math>q_t=\frac{o_t}{\|o_t\|}.</math> | |||

====Summary of sparse PCA deflation method properties==== | ====Summary of sparse PCA deflation method properties==== | ||

=====Proposition===== | =====Proposition===== | ||

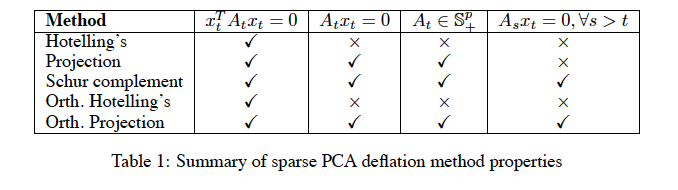

The following table compares properties of the various deflation techniques studied above. | The following table compares properties of the various deflation techniques studied above. | ||

<center>[[File:Table.png]]</center> | <center>[[File:Table.png]]</center> | ||

==Reformulating sparse PCA== | ==Reformulating sparse PCA== | ||

Recall that the goal of [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] is to find <math>\,r</math> cardinality-constrained pseudo-eigenvectors which together explain the most variance in the data. If we additionally constrain the sparse loadings to be generated sequentially, as in the PCA setting and the previous section, then a greedy approach of maximizing the additional variance of each new vector naturally suggests itself. On round <math>\,t</math>, the additional variance of a vector <math>\,x</math> is given by <math>\,\frac{ | Recall that the goal of [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] is to find <math>\,r</math> cardinality-constrained pseudo-eigenvectors which together explain the most variance in the data. If we additionally constrain the sparse loadings to be generated sequentially, as in the PCA setting and the previous section, then a greedy approach of maximizing the additional variance of each new vector naturally suggests itself. On round <math>\,t</math>, the additional variance of a vector <math>\,x</math> is given by <math>\,\frac{qA_{0}q}{q^{T}q}</math>, where <math>\,A_{0}</math> is the data covariance matrix, <math>\,q=(I-P_{t-1})x</math>, and <math>\,P_{t-1}</math> is the projection onto the space spanned by the previous pseudo-eigenvectors <math>\,x_{1},...,x_{t-1}</math>. As <math>\,q^{T}q=x^{T}(I-P_{t-1})(I-P_{t-1})x=x^{T}(I-P_{t-1})x</math>, maximizing the additional variance is equivalent to solving a cardinality-constrained maximum generalized eigenvalue problem, | ||

:<center><math> \underset{x}{\mathop{\max }}\,x^{T}(I-P_{t-1})A_{0}(I-P_{t-1})x</math></center> | :<center><math> \underset{x}{\mathop{\max }}\,x^{T}(I-P_{t-1})A_{0}(I-P_{t-1})x</math></center> | ||

:<center>subject to <math>\,x^{T}(I-P_{t-1})x=1</math></center> | :<center>subject to <math>\,x^{T}(I-P_{t-1})x=\textbf{1},</math></center><br /> | ||

:<center><math>\textbf{Card}(x)\leq k_t</math></center> | :<center><math>\textbf{Card}(x)\leq k_t</math></center> | ||

If we let <math>q_{s}=(I-P_{t-1})x_{s},\forall s\le t-1</math>,then<math>q_1,...,q_{t-1}</math> | If we let <math>q_{s}=(I-P_{t-1})x_{s},\forall s\le t-1</math>, then <math>\,q_1,...,q_{t-1}</math> form an orthonormal basis for the space spanned by <math>\,x_1,...,x_{t-1}</math>. Writing <math>I-P_{t-1}=I-\sum\limits_{s=1}^{t-1}{q_{s}q_{s}^{T}=\prod\limits_{s=1}^{t-1}{(I-q_{s}q_{s}^{T})}}</math> suggests a generalized deflation techniques that leads to the solution on each round. | ||

===Algorithm 1=== | ===Algorithm 1=== | ||

Generalized deflation method for [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA]: | Generalized deflation method for [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA]: | ||

Given | Given <math>A_{0}\in S_{+}^{p},r\in N,\{k_{1},...,k_{r}\}\subset N</math>, | ||

:Execute: | :Execute: | ||

:1.<math>B_{0}\leftarrow I</math> | :1.<math>B_{0}\leftarrow I</math> | ||

:2.For <math>\,t:=1,...r</math> | :2.For <math>\,t:=1,...,r</math> | ||

: <math>\bullet x_{t}\leftarrow \underset{x:x^{T}B_{t-1}x=1,\text{Card}(x)\le k_{t}}{\mathop{\arg \max }}\,x^{T}A_{t-1}x</math> | : <math>\bullet x_{t}\leftarrow \underset{x:x^{T}B_{t-1}x=1,\text{Card}(x)\le k_{t}}{\mathop{\arg \max }}\,x^{T}A_{t-1}x</math> | ||

: <math>\bullet q_{t}\leftarrow B_{t-1}x_{t}</math> | : <math>\bullet q_{t}\leftarrow B_{t-1}x_{t}</math> | ||

| Line 107: | Line 103: | ||

: <math>\bullet B_{t}\leftarrow B_{t-1}(I-q_{t}q_{t}^{T})</math> | : <math>\bullet B_{t}\leftarrow B_{t-1}(I-q_{t}q_{t}^{T})</math> | ||

: <math>\bullet x_{t}\leftarrow x_{t}/\left\| x_{t} \right\|</math> | : <math>\bullet x_{t}\leftarrow x_{t}/\left\| x_{t} \right\|</math> | ||

:Return: <math>\,\{x_{1},...x_{r}\}</math> | :Return: <math>\,\{x_{1},...,x_{r}\}</math> | ||

==Experiment== | ==Experiment== | ||

===Gene expression data=== | ===Gene expression data=== | ||

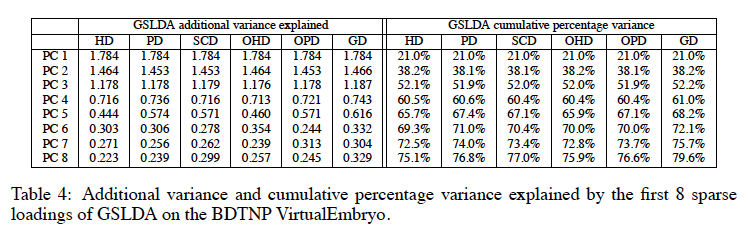

The Berkeley Drosophila Transcription Network Project (BDTNP) 3D gene expression data contains gene expression levels measured in each nucleus of developing Drosophila embryos and averaged across many embryos and developmental stages. An aggregate VirtualEmbryo contains 21 genes and 5759 example nuclei. | The Berkeley Drosophila Transcription Network Project (BDTNP) 3D gene expression data contains gene expression levels measured in each nucleus of developing Drosophila embryos and averaged across many embryos and developmental stages. An aggregate VirtualEmbryo contains 21 genes and 5759 example nuclei. With this data we can test the various deflation techniques. Using the GSLDA algorithm <ref name="R3">B.Moghaddam, Y. Weiss, and S. Avidan. "Generalized spectral bounds for sparse LDA." ''In Proc. ICML'', 2006.</ref> to solve the cardinality-constrained rank-one variance maximization and the following deflation techniques: Hotelling's (HD), projection )PD), Schur complement (SCD), orthogonalized Hotelling's (OHD), orthogonalized projection (OPD) and generalized(GD) we get the following results. | ||

<center>[[File:Picture 2.png]]</center> | <center>[[File:Picture 2.png]]</center> | ||

It shows a clear hierarchy among the deflation | It shows a clear hierarchy among the deflation methods and the generalized deflation method performs best. | ||

==Conclusion== | ==Conclusion== | ||

The utility of above Hotelling's deflation procedures is not limited to the [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] setting. Indeed, the methods presented can be applied to any of a number of constrained eigendecomposition-based problems, including [http://en.wikipedia.org/wiki/Canonical_correlation_analysis sparse canonical correlation analysis] and [http://en.wikipedia.org/wiki/Linear_discriminant_analysis linear discriminant analysis]. | The utility of above Hotelling's deflation procedures is not limited to the [http://en.wikipedia.org/wiki/Sparse_PCA sparse PCA] setting. Indeed, the methods presented can be applied to any of a number of constrained eigendecomposition-based problems, including [http://en.wikipedia.org/wiki/Canonical_correlation_analysis sparse canonical correlation analysis] and [http://en.wikipedia.org/wiki/Linear_discriminant_analysis linear discriminant analysis]. | ||

==Relationship to Other Sparse PCA Methods== | |||

[[Deflation Method for Penalized Matrix Decomposition Sparse PCA]] | |||

==Reference== | ==Reference== | ||

<references /> | |||

Latest revision as of 08:45, 30 August 2017

Introduction

Principal component analysis (PCA) is a popular change of variables technique used in dimension reduction and visualization. The goal of PCA is to extract several principal components, linear combinations of input variables that together best account for the variance in a data set. Often, PCA is formulated as an eigenvalue decomposition problem: each eigenvector of the sample covariance matrix of a data set corresponds to the loadings or coefficients of a principal component. A common approach to solving this partial eigenvalue decomposition is to iteratively alternate between two sub-problems: rank-one variance maximization (which is done by finding the leading eigenvector of the covariance matrix) and matrix deflation (which is done by modifying the covariance matrix to eliminate the influence of that eigenvector).

A primary drawback of PCA is its lack of sparsity. Each principal component is a linear combination of all variables; the loadings (weights) are all typically non-zero. Sparsity is desirable as it often leads to more interpretable results, reduced computation time, and improved generalization. One approach to avoid this drawback of PCA is the formulation of sparse PCA (SPCA), a link to the 2004 paper of which by Iain M. Johnstone et al.<ref name="R1">Iain M. Johnstone and Arthur Y. Lu. "Sparse Principal Components Analysis". Technical report, Stanford University, Dept. of Statistics, 2004.</ref> is available here. SPCA makes the PCA process sparse by searching for pseudo-eigenvectors and their corresponding sparse (few such values are non-zero) loadings that explain the maximal amount of variance in the data.

In analogy to the PCA setting, many authors attempt to solve the sparse PCA problem by iteratively alternating between two sub-tasks: cardinality-constrained rank-one variance maximization and matrix deflation. The first sub-task is an NP-hard problem, and a variety of relaxations and approximate solutions have been developed in the literature. The latter sub-task has received relatively little attention and is typically borrowed without justification from the PCA context. In this paper, the author demonstrated that the standard PCA deflation procedure is seldom appropriate for the sparse PCA setting. To rectify the situation, the author first develops several heuristic deflation alternatives with more desirable properties, then reformulates the sparse PCA optimization problem to explicitly reflect the maximum additional variance objective on each round. The result is a generalized deflation procedure that typically outperforms more standard techniques on real-world data-sets.

Notation

[math]\displaystyle{ \,I }[/math] is the identity matrix. [math]\displaystyle{ \mathbb{S}^{p}_{+} }[/math] is the set of all symmetric, positive semidefinite matrices in [math]\displaystyle{ \mathbb{R}^{p\times p} }[/math]. [math]\displaystyle{ \textbf{Card}(x) }[/math] represents the cardinality of or the number of non-zero entries in the vector [math]\displaystyle{ \,x }[/math].

Deflation methods

A matrix deflation modifies a matrix to eliminate the influence of a given eigenvector, typically by setting the associated eigenvalue to zero.

Hotelling's deflation and PCA

In the PCA setting, the goal is to extract the [math]\displaystyle{ \,r }[/math] leading eigenvectors of the sample covariance matrix, [math]\displaystyle{ A_0 \in\mathbb{S}^{p}_{+} }[/math], as its eigenvectors are equivalent to the loadings of the first [math]\displaystyle{ \,r }[/math] principal components. Hotelling’s deflation method is a simple and popular technique for sequentially extracting these eigenvectors. On the [math]\displaystyle{ \,t }[/math]-th iteration of the deflation method, we first extract the leading eigenvector of [math]\displaystyle{ \,A_{t -1} }[/math],

and we then use Hotelling's deflation to annihilate [math]\displaystyle{ \,x_t }[/math]:

The deflation step ensures that the [math]\displaystyle{ \,t+1 }[/math]-st leading eigenvector of [math]\displaystyle{ \,A_0 }[/math] is the leading eigenvector of [math]\displaystyle{ \,A_{t} }[/math]. The following proposition explains why.

Proposition

If [math]\displaystyle{ \lambda _{1}\ge ...\ge \lambda _{p} }[/math] are the eigenvalues of [math]\displaystyle{ A\in\mathbb{S}_{+}^{p},x_{1},\ldots,x_{p} }[/math] are the corresponding eigenvectors, and [math]\displaystyle{ \hat{A}=A-x_{j}x_{j}^{T}Ax_{j}x_{j}^{T} }[/math] for some [math]\displaystyle{ j\in 1,\ldots,p, }[/math] then [math]\displaystyle{ \hat{A} }[/math] has eigenvectors [math]\displaystyle{ \,x_{1},...,x_{p} }[/math] with corresponding eigenvalues [math]\displaystyle{ \,\lambda _{1},\ldots,\lambda _{j-1},0,\lambda _{j+1},\lambda _{p}. }[/math]

The proof of this proposition is simple and is given as follows:

[math]\displaystyle{ \, \hat{A} x_j = A x_j − x_j x_j^T A x_j x_j^T x_j = A x_j − x_j x_j^T A x_j = \lambda_j x_j − \lambda_j x_j = 0 x_j }[/math].

[math]\displaystyle{ \, \hat{A} x_i = A x_i − x_j x_j^T A x_j x_j^T x_i = A x_i − 0 = \lambda_i x_i }[/math], [math]\displaystyle{ \,\forall i \neq j }[/math]. QED

Thus, Hotelling’s deflation preserves all eigenvectors of [math]\displaystyle{ \,A }[/math] whilst removing a selected eigenvalue of [math]\displaystyle{ \,A }[/math]. This implies that Hotelling's deflation preserves the positive semi-definiteness of [math]\displaystyle{ \,A }[/math], i.e. the matrix resulting from deflating [math]\displaystyle{ \,A }[/math] is positive semi-definite if and only if [math]\displaystyle{ \,A }[/math] is positive semi-definite.

For the interested reader, a paper by Wang et al. titled Sparse PCA by Iterative Elimination Algorithm provides the details regarding this algorithm and is available here.

Hotelling's deflation and sparse PCA

In the sparse PCA setting, we seek [math]\displaystyle{ \,r }[/math] sparse loadings which together capture the maximum amount of variance in the data. To find the first such "pseudo-eigenvector" equation, we can consider a cardinality-constrained version of the problem:

Typically, Hotelling’s deflation is utilized by substituting an extracted pseudo-eigenvector for a true eigenvector in the deflation step of [math]\displaystyle{ A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t }[/math]. This substitution, however, is seldom justified, for the properties of Hotelling’s deflation depend crucially on the use of a true eigenvector. If pseudo-eigenvectors are used in place of true eigenvectors, the Cauchy-Schwarz inequality is violated and the pseudo-eigenvectors may not be orthogonal to the deflated matrix. As well, the deflated matrix may not be positive semidefinite under pseudo-eigenvector Hotelling's deflation. This shortcoming can be a serious problem since most iterative sparse PCA methods assume a positive semidefinite matrix at each step.

Alternative deflation techniques

To rectify the failings of pseudo-eigenvector Hotelling's deflation the author considers several alternative deflation techniques better suited to the sparse PCA setting. It should be noted that any deflation-based sparse PCA method can utilize any of the deflation techniques discussed below.

Projection deflation

Given a data matrix [math]\displaystyle{ Y \in \mathbb{R}^{n\times p} }[/math] and an arbitrary unit vector [math]\displaystyle{ x\in \mathbb{R} }[/math], an intuitive way to remove the contribution of [math]\displaystyle{ \,x }[/math] from [math]\displaystyle{ \,Y }[/math] is to project [math]\displaystyle{ \,Y }[/math] onto the orthocomplement of the space spanned by [math]\displaystyle{ x:\hat{Y} = Y(I-xx^T) }[/math]. If [math]\displaystyle{ \,A }[/math] is the sample covariance matrix of [math]\displaystyle{ \,Y }[/math] , then the sample covariance of [math]\displaystyle{ \hat{Y} }[/math] is given by[math]\displaystyle{ \hat{A}=(I-xx^T)A(I-xx^T) }[/math], which leads to our formulation for projection deflation:

- When [math]\displaystyle{ \,x_t }[/math] is a true eigenvector of [math]\displaystyle{ \,A_{t-1} }[/math] with eigenvalue [math]\displaystyle{ \,\lambda_t }[/math], projection deflation reduces to Hotelling's deflation. The derivation of this property is shown below.

[math]\displaystyle{ A_t=A_{t-1}-x_tx_t^TA_{t-1}-A_{t-1}x_tx_t^T+x_tx_t^TA_{t-1}x_tx_t^T }[/math] [math]\displaystyle{ =A_{t-1}-\lambda_tx_tx_t^T-\lambda_tx_tx_t^T+\lambda_tx_tx_t^T }[/math] [math]\displaystyle{ =A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t }[/math]

- In the general case in which [math]\displaystyle{ \,x_t }[/math] is not a true eigenvector, projection deflation maintains the desirable properties that were lost to Hotelling's deflation. Unlike Hotelling's deflation, projection deflation preserves positive semi-definiteness of a matrix. This property is easy to show, and its derivation is given as follows: [math]\displaystyle{ \, \forall y,\; y^T A_t y = y^T (I - x_t x_t^T) A_{t-1} (I - x_t x_t^T) y = z^T A_{t-1} z }[/math], where [math]\displaystyle{ \, z = (I - x_t x_t^T) y. }[/math] Also, unlike Hotelling's deflation, projection deflation renders [math]\displaystyle{ \,A_t }[/math] both left and right orthogonal to [math]\displaystyle{ \,x_t }[/math]. This is because [math]\displaystyle{ \,(I - x_t x_t^T) x_t = x_t - x_t = 0 }[/math] and [math]\displaystyle{ \,A_t }[/math] is symmetric. This property also implies another important property of projection deflation: projection deflation annihilates all covariances with [math]\displaystyle{ \,x_t }[/math].

Schur complement deflation

Let [math]\displaystyle{ x\in\mathbb{R}^p }[/math] be a unit vector and [math]\displaystyle{ W\in\mathbb{R}^p }[/math] be a Gaussian random vector, representing the joint distribution of the data variables. If [math]\displaystyle{ \,W }[/math] has covariance matrix [math]\displaystyle{ \,\Sigma }[/math], then ([math]\displaystyle{ \,W,Wx }[/math]) has covariance matrix [math]\displaystyle{ \,V=\left( \begin{matrix} \Sigma & \Sigma x_{{}} \\ x^{T}\Sigma & x^{T}\Sigma x \\\end{matrix} \right) }[/math], and [math]\displaystyle{ Var(W|Wx)=\Sigma -\frac{\Sigma xx^{T}\Sigma }{x^{T}\Sigma x} }[/math] whenever [math]\displaystyle{ x^{T}\Sigma x\ne 0 }[/math]. That is, the conditional variance is the Schur complement of the vector variance [math]\displaystyle{ \,x^T \Sigma x }[/math] in the full covariance matrix [math]\displaystyle{ \,V }[/math]. Substituting sample covariance matrices for their population counterparts, Schur complement deflation is:

Like projection deflation, Schur complement deflation preserves positive-semi-definiteness and also renders [math]\displaystyle{ \,x_t }[/math] both left and right orthogonal to [math]\displaystyle{ \,A_t }[/math]. These two properties of Schur complement deflation are easy to show, and their derivations can be found in the Reference section of <ref name="R2">Lester Mackey. "Deflation Methods for Sparse PCA." In Advances in Neural Information Processing Systems 21, pages 1017–1024, 2009.</ref>

- When [math]\displaystyle{ \,x_t }[/math] is an eigenvector of [math]\displaystyle{ \,A_{t-1} }[/math] with eigenvalue [math]\displaystyle{ \,\lambda_t \ne 0 }[/math], it reduces to Hotelling's deflation.

[math]\displaystyle{ A_{t}=A_{t-1}-\frac{A_{t-1}x_{t}x_{t}^{T}A_{t-1}}{x_{t}^{T}A_{t-1}x_{t}} }[/math] [math]\displaystyle{ =A_{t-1}-\frac{\lambda_t x_tx_t^T\lambda_t}{\lambda_t} }[/math] [math]\displaystyle{ =A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t. }[/math]

It should be noted that, even though we motivated Schur complement deflation with a Gaussianity assumption, in general, this technique has a more general interpretation as a column projection of a data matrix.

Orthogonalized deflation

Whenever we deal with a sequence of non-orthogonal vectors, we must take care to distinguish between the variance explained by a vector and the additional variance explained, given all previous vectors. The additional variance explained by the [math]\displaystyle{ \,t }[/math]-th pseudo-eigenvector, [math]\displaystyle{ \,x_t }[/math], is equivalent to the variance explained by the component of [math]\displaystyle{ \,x_t }[/math] orthogonal to the space spanned by all previous pseudo-eigenvectors, [math]\displaystyle{ \,q_t=x_t-P_{t-1}x_t }[/math] where [math]\displaystyle{ \,P_t }[/math] is the orthogonal projection onto the space spanned by [math]\displaystyle{ \,x_1,...,x_{t-1} }[/math]. On each deflation step, therefore, we only want to eliminate the variance associated with [math]\displaystyle{ \,q_t }[/math]. This is because annihilating the full vector [math]\displaystyle{ \,x_t }[/math] would often lead to double counting by re-introducing components that are parallel to previously annihilated vectors. We shall now look at how this issue can be resolved. First, we can consider the issue of non-orthogonality in the context of Hotelling’s deflation. A modified version of Hotelling's deflation as described in [12] of the paper in the Reference section, known as orthogonalized Hotelling’s deflation (OHD), has been introduced. For this deflation technique, the following hold true:

- OHD is equivalent to Hotelling's deflation for [math]\displaystyle{ \,t = 1 }[/math]:

[math]\displaystyle{ A_{t}=A_{t-1}-x_tx^T_tA_{t-1}x_tx^T_t }[/math]

- When [math]\displaystyle{ \,t \gt 1 }[/math], OHD can be expressed in terms of running Gram-Schmidt decomposition:

[math]\displaystyle{ q_{t}=\frac{(I-Q_{t-1}Q_{t-1}^{T})x_{t}}{\left\| (I-Q_{t-1}Q_{t-1}^{T})x{}_{t} \right\|} }[/math] [math]\displaystyle{ A_{t}=A_{t-1}-q_{t}q_{t}^{T}A_{t-1}q_{t}q_{t}^{T}, }[/math] where [math]\displaystyle{ \,q_t = x_1 }[/math], and [math]\displaystyle{ \,q_1,...,q_{t-1} }[/math] form the columns of [math]\displaystyle{ \,Q_{t-1} }[/math]. Since [math]\displaystyle{ \,q_1,...,q_{t-1} }[/math] form an orthonormal basis for the space spanned by [math]\displaystyle{ \,x_1,...,x_{t-1} }[/math], we have [math]\displaystyle{ \,Q_{t-1}Q_{t-1}^T=P_{t-1} }[/math], which is the aforementioned orthogonal projection.

Because the first round of OHD is equivalent to an application of Hotelling’s deflation, OHD inherits all of the weaknesses associated with Hotelling's deflation. Fortunately, the same principles behind OHD that preserve orthogonality may also be applied to projection deflation to create an orthogonalized variant of projection deflation that inherits all of the desirable properties of projection deflation that Hotelling's deflation lacks. Furthermore, it should be noted that the approach described above is unnecessary for Schur complement deflation. This is because Schur complement deflation is unique in that it preserves orthogonality in all subsequent rounds of its application.

Proposition: Orthogonalized Schur complement deflation is equivalent to Schur complement deflation.

Proof: Consider the [math]\displaystyle{ \,t }[/math]-th round of Schur complement deflation. We may write [math]\displaystyle{ \,x_t=o_t+p_t }[/math], where [math]\displaystyle{ \,p_t }[/math] is in the subspace spanned by all previously extracted pseudo-eigenvectors and [math]\displaystyle{ \,o_t }[/math] is orthogonal to this subspace. Then we know that [math]\displaystyle{ \,A_{t-1}p_t=0 }[/math], as [math]\displaystyle{ \,p_t }[/math] is a linear combination of [math]\displaystyle{ x_1, \ldots , x_{t-1} }[/math], and [math]\displaystyle{ \,A_{t-1}x_i = 0. }[/math] Thus, [math]\displaystyle{ x^T_tA_tx_t = p^T_t A_tp_t + o^T_t A_tp_t + p^T_t A_to_t + o^T_tA_to_t = o^T_t A_to_t }[/math]. Further, [math]\displaystyle{ A_{t-1}x_tx^T_t A_{t-1} = A_{t-1}p_tp^T_t A_{t-1}+A_{t-1}p_to^T_t A_{t-1}+A_{t-1}o_tp^T_t A_{t-1}+A_{t-1}o_to^T_t A_{t-1} =A_{t-1}o_to^T_t A_{t-1} }[/math]. Hence [math]\displaystyle{ A_t = A_{t-1} -\frac{A_{t-1}o_t o^T_t A_{t-1}}{o^T_t A_{t-1}o_t}=A_{t-1}-\frac{A_{t-1}q_tq^T_t A_{t-1}}{q^T_t A_{t-1}q_t} }[/math] as [math]\displaystyle{ q_t=\frac{o_t}{\|o_t\|}. }[/math]

Summary of sparse PCA deflation method properties

Proposition

The following table compares properties of the various deflation techniques studied above.

Reformulating sparse PCA

Recall that the goal of sparse PCA is to find [math]\displaystyle{ \,r }[/math] cardinality-constrained pseudo-eigenvectors which together explain the most variance in the data. If we additionally constrain the sparse loadings to be generated sequentially, as in the PCA setting and the previous section, then a greedy approach of maximizing the additional variance of each new vector naturally suggests itself. On round [math]\displaystyle{ \,t }[/math], the additional variance of a vector [math]\displaystyle{ \,x }[/math] is given by [math]\displaystyle{ \,\frac{qA_{0}q}{q^{T}q} }[/math], where [math]\displaystyle{ \,A_{0} }[/math] is the data covariance matrix, [math]\displaystyle{ \,q=(I-P_{t-1})x }[/math], and [math]\displaystyle{ \,P_{t-1} }[/math] is the projection onto the space spanned by the previous pseudo-eigenvectors [math]\displaystyle{ \,x_{1},...,x_{t-1} }[/math]. As [math]\displaystyle{ \,q^{T}q=x^{T}(I-P_{t-1})(I-P_{t-1})x=x^{T}(I-P_{t-1})x }[/math], maximizing the additional variance is equivalent to solving a cardinality-constrained maximum generalized eigenvalue problem,

[math]\displaystyle{ \underset{x}{\mathop{\max }}\,x^{T}(I-P_{t-1})A_{0}(I-P_{t-1})x }[/math] subject to [math]\displaystyle{ \,x^{T}(I-P_{t-1})x=\textbf{1}, }[/math] [math]\displaystyle{ \textbf{Card}(x)\leq k_t }[/math]

If we let [math]\displaystyle{ q_{s}=(I-P_{t-1})x_{s},\forall s\le t-1 }[/math], then [math]\displaystyle{ \,q_1,...,q_{t-1} }[/math] form an orthonormal basis for the space spanned by [math]\displaystyle{ \,x_1,...,x_{t-1} }[/math]. Writing [math]\displaystyle{ I-P_{t-1}=I-\sum\limits_{s=1}^{t-1}{q_{s}q_{s}^{T}=\prod\limits_{s=1}^{t-1}{(I-q_{s}q_{s}^{T})}} }[/math] suggests a generalized deflation techniques that leads to the solution on each round.

Algorithm 1

Generalized deflation method for sparse PCA: Given [math]\displaystyle{ A_{0}\in S_{+}^{p},r\in N,\{k_{1},...,k_{r}\}\subset N }[/math],

- Execute:

- 1.[math]\displaystyle{ B_{0}\leftarrow I }[/math]

- 2.For [math]\displaystyle{ \,t:=1,...,r }[/math]

- [math]\displaystyle{ \bullet x_{t}\leftarrow \underset{x:x^{T}B_{t-1}x=1,\text{Card}(x)\le k_{t}}{\mathop{\arg \max }}\,x^{T}A_{t-1}x }[/math]

- [math]\displaystyle{ \bullet q_{t}\leftarrow B_{t-1}x_{t} }[/math]

- [math]\displaystyle{ \bullet A_{t}\leftarrow (I-q_{t}q_{t}^{T})A_{t-1}(I-q_{t}q_{t}^{T}) }[/math]

- [math]\displaystyle{ \bullet B_{t}\leftarrow B_{t-1}(I-q_{t}q_{t}^{T}) }[/math]

- [math]\displaystyle{ \bullet x_{t}\leftarrow x_{t}/\left\| x_{t} \right\| }[/math]

- Return: [math]\displaystyle{ \,\{x_{1},...,x_{r}\} }[/math]

Experiment

Gene expression data

The Berkeley Drosophila Transcription Network Project (BDTNP) 3D gene expression data contains gene expression levels measured in each nucleus of developing Drosophila embryos and averaged across many embryos and developmental stages. An aggregate VirtualEmbryo contains 21 genes and 5759 example nuclei. With this data we can test the various deflation techniques. Using the GSLDA algorithm <ref name="R3">B.Moghaddam, Y. Weiss, and S. Avidan. "Generalized spectral bounds for sparse LDA." In Proc. ICML, 2006.</ref> to solve the cardinality-constrained rank-one variance maximization and the following deflation techniques: Hotelling's (HD), projection )PD), Schur complement (SCD), orthogonalized Hotelling's (OHD), orthogonalized projection (OPD) and generalized(GD) we get the following results.

It shows a clear hierarchy among the deflation methods and the generalized deflation method performs best.

Conclusion

The utility of above Hotelling's deflation procedures is not limited to the sparse PCA setting. Indeed, the methods presented can be applied to any of a number of constrained eigendecomposition-based problems, including sparse canonical correlation analysis and linear discriminant analysis.

Relationship to Other Sparse PCA Methods

Deflation Method for Penalized Matrix Decomposition Sparse PCA

Reference

<references />