stat946W25


= Topic 12: State Space Models =

== Introduction ==

State Space Models (SSMs) are powerful alternatives to traditional sequence modeling approaches. They perform well across a variety of modalities, including time series analysis, audio generation, and image processing, and they can capture long-range dependencies more efficiently than attention-based models.

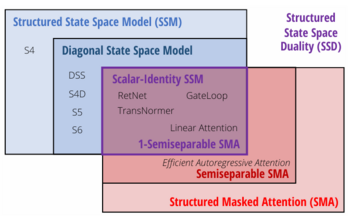

SSMs initially struggled to match the performance of Transformers on language modeling tasks. To close this gap, architectural advances such as the Structured State Space Model (S4) were introduced; S4 succeeded on long-range reasoning tasks and allowed for more efficient computation while preserving the theoretical strengths of SSMs. However, its implementation remains complex and computationally demanding, so further research led to simplified variants such as the Diagonal State Space Model (DSS), which achieves comparable performance with a more straightforward formulation. In parallel, hybrid approaches such as the H3 model try to bridge the remaining gap by integrating SSMs with attention mechanisms.

To make "hybrid" concrete: in H3, the authors replace almost all of the attention layers in a Transformer with SSMs. More recently, models like Mamba have pushed the boundaries of SSMs further by parameterizing the state matrices selectively, as functions of the input, which allows more flexible and adaptive information propagation.

Research on SSMs continues to resolve the remaining challenges, and the case for substituting attention-based architectures with SSMs grows stronger. SSMs will likely play a crucial role in the next generation of sequence modeling frameworks.

=== Advantages of SSMs ===

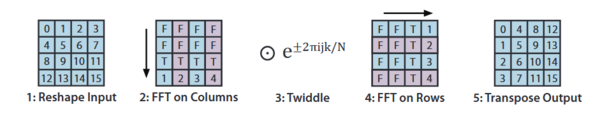

* Efficient Long-Range Dependency Handling: Unlike Transformers, whose self-attention costs <math>O(n^2)</math> in the sequence length <math>n</math>, SSMs can process sequences in <math>O(n \log n)</math> time using efficient matrix-vector multiplications and the Fast Fourier Transform (FFT), or in <math>O(n)</math> time in the case of Mamba architectures.

* Effective Long-Range Dependency Handling: SSMs use a state update mechanism (parameterized by λ) that preserves and accumulates signals over very long time spans, so they can retain information from distant points in a sequence and model long-range dependencies robustly.


* Interpretability and Diagnostic Insight: State Space Models offer a distinct interpretability advantage because they represent system behavior explicitly through their state-transition dynamics. Unlike black-box models, the learned parameters of an SSM (matrices <math>\mathbf{A}</math>, <math>\mathbf{B}</math>, <math>\mathbf{C}</math>, and <math>\mathbf{D}</math>) can be analyzed to infer how inputs influence future states and outputs over time. This interpretability is especially valuable in fields where understanding model behavior is critical, such as financial risk modeling or biological systems analysis.
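The <math>O(n \log n)</math> figure in the first bullet rests on the fact that a time-invariant SSM can be evaluated as a causal convolution of the input with a kernel as long as the sequence, and the FFT computes such a convolution in <math>O(n \log n)</math> rather than <math>O(n^2)</math>. The sketch below illustrates only this FFT step, assuming the kernel is already known (the toy kernel is hypothetical): it zero-pads both signals, multiplies their transforms, and checks the result against the direct sum. It is a pure-Python teaching example, not any library's implementation.

```python
import cmath

def fft(a, invert=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two.
    With invert=True it computes the unscaled inverse transform."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1.0 if invert else -1.0
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def causal_conv_fft(u, kernel):
    """y[t] = sum_{j<=t} kernel[j] * u[t-j] for t < len(u), in O(n log n)."""
    n = len(u)
    size = 1
    while size < 2 * n:  # zero-pad so circular convolution equals linear convolution
        size *= 2
    kern = list(kernel[:n])
    U = fft(list(u) + [0.0] * (size - n))
    K = fft(kern + [0.0] * (size - len(kern)))
    y = fft([a * b for a, b in zip(U, K)], invert=True)
    return [v.real / size for v in y[:n]]  # dividing by size completes the inverse FFT

def causal_conv_direct(u, kernel):
    """Reference O(n^2) computation of the same causal convolution."""
    return [sum(kernel[j] * u[t - j] for j in range(min(t + 1, len(kernel))))
            for t in range(len(u))]

# Toy input and a decaying kernel standing in for an SSM kernel.
u = [1, 2, 3, 4]
kernel = [1.0, 0.5, 0.25, 0.125]
y_fft = causal_conv_fft(u, kernel)
y_direct = causal_conv_direct(u, kernel)
```
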

=== Core concepts ===

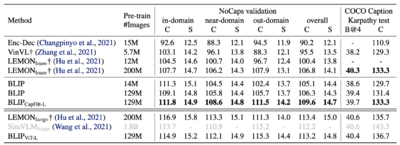

To understand State Space Models better, let's first look at the limitations of Transformers and Recurrent Neural Networks (RNNs), and at how both relate to SSMs.

During training, a Transformer builds a matrix that compares each token with every token that came before it; the weight in each cell indicates how similar the two tokens are. Computing these weights requires no sequential operation: the relation between every pair of tokens can be computed in parallel.
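A minimal sketch of this matrix, assuming the usual scaled dot-product similarity and hypothetical toy query/key vectors. The rows are computed in a loop here for readability, but each row depends only on the inputs and not on any other row, which is what makes the parallel computation possible.

```python
import math

def causal_attention_weights(q, k):
    """Causal scaled dot-product attention weights as a T x T list of lists.
    Row i is a softmax over keys j <= i; entries with j > i are 0.
    No row depends on another row, so all rows can be computed in parallel."""
    T, d = len(q), len(q[0])
    weights = []
    for i in range(T):
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights.append([e / z for e in exps] + [0.0] * (T - i - 1))
    return weights

# Hypothetical 2-dimensional query/key vectors for three tokens.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = causal_attention_weights(q, k)
```
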


* In SSMs, the second (output) equation contains the term <math>\mathbf{D}u(t)</math>, which is commonly left out in control problems.
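For reference, the continuous-time SSM is usually written in the following standard form, with input <math>u(t)</math>, hidden state <math>x(t)</math>, output <math>y(t)</math>, and the matrices <math>\mathbf{A}</math>, <math>\mathbf{B}</math>, <math>\mathbf{C}</math>, <math>\mathbf{D}</math> listed earlier. The second line is the output equation; its <math>\mathbf{D}u(t)</math> term passes the input straight to the output.

<math>
\begin{align}
x'(t) &= \mathbf{A}x(t) + \mathbf{B}u(t) \\
y(t) &= \mathbf{C}x(t) + \mathbf{D}u(t)
\end{align}
</math>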

===HiPPO Matrix===

The High-order Polynomial Projection Operator (HiPPO) matrices are a class of specially designed state transition matrices '''A''' that enable a state space model to capture long-term dependencies in a continuous-time setting. The most important matrix in this class is defined as:


This matrix is designed to compress the input history into a state that contains enough information to approximately reconstruct that history. In other words, it produces a hidden state that remembers its past.
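The defining formula is not reproduced in this excerpt. Assuming the HiPPO-LegS matrix as it is written in the S4 paper (including its negative sign convention), the sketch below constructs it. Note that it is lower-triangular with diagonal entries <math>-(n+1)</math>, so its eigenvalues are all negative and the continuous-time dynamics are stable.

```python
import math

def hippo_legs(N):
    """HiPPO-LegS state matrix (N x N) in the sign convention used by S4:
    A[n][k] = -sqrt(2n+1) * sqrt(2k+1) if n > k,  -(n+1) if n == k,  0 if n < k."""
    A = [[0.0] * N for _ in range(N)]
    for n in range(N):
        for k in range(n + 1):  # entries above the diagonal stay 0
            if n > k:
                A[n][k] = -math.sqrt(2 * n + 1) * math.sqrt(2 * k + 1)
            else:
                A[n][k] = -(n + 1.0)
    return A

A = hippo_legs(4)
```
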

===Recurrent Representation of SSM===

The input is a discrete sequence <math>(u_0,u_1,\ldots)</math> rather than a continuous function. To discretize the continuous-time SSM, we follow prior work and use the bilinear method [https://arxiv.org/pdf/2406.02923], which converts the state matrix <math>A</math> into an approximation <math>\overline{A}</math>. The discrete SSM is:

These methods ensure that state-space models remain numerically robust and efficient when handling long sequences.
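The bilinear update is elided in this excerpt. A common statement of the bilinear (Tustin) rule is <math>\overline{A} = (I - \tfrac{\Delta}{2}A)^{-1}(I + \tfrac{\Delta}{2}A)</math>, <math>\overline{B} = (I - \tfrac{\Delta}{2}A)^{-1}\Delta B</math>, <math>\overline{C} = C</math>. The sketch below assumes a diagonal <math>A</math> (an assumption that keeps the inverse elementwise, not a requirement of the method) and then runs the resulting recurrence <math>x_k = \overline{A}x_{k-1} + \overline{B}u_k</math>, <math>y_k = \overline{C}x_k</math>. The parameter values are hypothetical.

```python
def bilinear_diag(a, b, delta):
    """Bilinear (Tustin) discretization for a diagonal state matrix A = diag(a):
    A_bar = (I - delta/2 A)^-1 (I + delta/2 A),  B_bar = (I - delta/2 A)^-1 delta B.
    With A diagonal, the matrix inverse reduces to an elementwise division."""
    a_bar = [(1 + delta * ai / 2) / (1 - delta * ai / 2) for ai in a]
    b_bar = [delta * bi / (1 - delta * ai / 2) for ai, bi in zip(a, b)]
    return a_bar, b_bar

def ssm_recurrence(a_bar, b_bar, c, u, d=0.0):
    """x_k = A_bar x_{k-1} + B_bar u_k,  y_k = C x_k + D u_k,  starting from x = 0.
    Because A_bar is diagonal, each step costs O(N) for state size N."""
    x = [0.0] * len(a_bar)
    ys = []
    for uk in u:
        x = [ak * xi + bk * uk for ak, xi, bk in zip(a_bar, x, b_bar)]
        ys.append(sum(ci * xi for ci, xi in zip(c, x)) + d * uk)
    return ys

# Hypothetical 2-state system with stable (negative) continuous-time poles.
a_bar, b_bar = bilinear_diag(a=[-1.0, -0.5], b=[1.0, 1.0], delta=0.1)
ys = ssm_recurrence(a_bar, b_bar, c=[1.0, -1.0], u=[1.0, 0.0, 0.0, 0.0])
```

With negative continuous-time poles, the bilinear map always yields <math>|\overline{A}_{ii}| < 1</math>, so the discrete recurrence stays stable.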

==Structured State Space (S4)==

====Objective====

S4 has been particularly successful in domains with continuous, structured data, such as time-series processing. However, its reliance on structured state matrices makes it less adaptable to unstructured data like natural language, where attention-based models still hold an advantage.

Theorem 1:

All HiPPO matrices in the HiPPO paper [https://arxiv.org/abs/2008.07669] have a Normal Plus Low-Rank (NPLR) representation:


The core idea of this form is to write <math>A</math> as a normal matrix, which can be diagonalized by a unitary transformation <math>V</math>, plus a low-rank correction. This structure is what makes efficient computation and storage possible.
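The explicit representation is elided in this excerpt. As a concrete, checkable instance, the S4 paper's construction for HiPPO-LegS adds the rank-1 term <math>PP^\top</math> with <math>P_n = (n + 1/2)^{1/2}</math>: the sum <math>A + PP^\top</math> equals <math>-\tfrac{1}{2}I</math> plus a skew-symmetric matrix, and any such matrix is normal, hence unitarily diagonalizable. The sketch below checks this structure numerically for a small <math>N</math>; it does not compute <math>V</math> or <math>\Lambda</math>, and it restates the HiPPO-LegS formula (in S4's sign convention) so that it runs on its own.

```python
import math

def hippo_legs(N):
    """HiPPO-LegS matrix in the S4 sign convention (lower-triangular, -(n+1) on the diagonal)."""
    return [[-math.sqrt((2 * n + 1) * (2 * k + 1)) if n > k else (-(n + 1.0) if n == k else 0.0)
             for k in range(N)] for n in range(N)]

def nplr_residual(N):
    """Return S = A + P P^T + I/2 with the rank-1 vector P[n] = sqrt(n + 1/2).
    If S is skew-symmetric, then A + P P^T = S - I/2 is a normal matrix, so
    A = (normal matrix) - P P^T: a normal-plus-low-rank (NPLR) decomposition."""
    A = hippo_legs(N)
    P = [math.sqrt(n + 0.5) for n in range(N)]
    return [[A[n][k] + P[n] * P[k] + (0.5 if n == k else 0.0) for k in range(N)]
            for n in range(N)]

S = nplr_residual(6)
max_asym = max(abs(S[n][k] + S[k][n]) for n in range(6) for k in range(6))
print(f"max |S + S^T| = {max_asym:.2e}")  # zero up to rounding: S is skew-symmetric
```
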

Theorem 2:

Given any step size <math>∆</math>, computing one step of the [[#Recurrent Representation of SSM|recurrence]] can be done in <math>O(N)</math> operations, where <math>N</math> is the state size.

Theorem 3:

Given any step size <math>∆</math>, computing the SSM convolution filter <math>\overline{\boldsymbol{K}}</math> can be reduced to 4 Cauchy multiplies, requiring only <math>\widetilde{O}(N+L)</math> operations and <math>O(N + L)</math> space, where <math>L</math> is the sequence length.
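To make <math>\overline{\boldsymbol{K}}</math> concrete: unrolling the recurrence from a zero initial state gives <math>y_k = \sum_{j=0}^{k} \overline{C}\,\overline{A}^j \overline{B}\, u_{k-j}</math>, so the output is a causal convolution with <math>\overline{\boldsymbol{K}} = (\overline{C}\overline{B}, \overline{C}\overline{A}\overline{B}, \ldots, \overline{C}\overline{A}^{L-1}\overline{B})</math>. The sketch below computes this kernel naively for a hypothetical, already-discretized 2-state system and checks that convolving with it reproduces the recurrence. It does not implement S4's Cauchy-kernel algorithm; it only shows the object that Theorem 3 computes efficiently.

```python
def matvec(M, v):
    """Dense matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def ssm_kernel(A_bar, B_bar, C, L):
    """K_bar = (C B_bar, C A_bar B_bar, ..., C A_bar^(L-1) B_bar), computed naively.
    This reference costs O(L N^2); Theorem 3 reaches the same kernel much faster."""
    K, v = [], list(B_bar)
    for _ in range(L):
        K.append(sum(c * x for c, x in zip(C, v)))
        v = matvec(A_bar, v)  # advance from A^j B to A^(j+1) B
    return K

def run_recurrence(A_bar, B_bar, C, u):
    """x_k = A_bar x_{k-1} + B_bar u_k, y_k = C x_k, with x starting at 0."""
    x, ys = [0.0] * len(B_bar), []
    for uk in u:
        x = [ax + b * uk for ax, b in zip(matvec(A_bar, x), B_bar)]
        ys.append(sum(c * xi for c, xi in zip(C, x)))
    return ys

def run_convolution(K, u):
    """y_k = sum_{j<=k} K[j] u[k-j]."""
    return [sum(K[j] * u[k - j] for j in range(k + 1)) for k in range(len(u))]

# Hypothetical, already-discretized 2-state system.
A_bar = [[0.5, 0.1], [0.0, 0.8]]
B_bar = [1.0, 0.5]
C = [1.0, -1.0]
u = [1.0, 2.0, -1.0, 0.5]
K = ssm_kernel(A_bar, B_bar, C, len(u))
y_rec = run_recurrence(A_bar, B_bar, C, u)
y_conv = run_convolution(K, u)
```
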

Some of the applications of S4 are language and audio processing (text generation, speech recognition, music and voice synthesis), forecasting (predicting stock prices in financial markets, modelling climate information), and scientific computing (simulations in physics, chemistry, astronomy).

==Diagonal State Space Model (DSS)==

As mentioned above, S4 relies on the Diagonal Plus Low-Rank (DPLR) structure of the state matrix <math>A</math>, which improves efficiency but introduces significant complexity in both understanding and implementation. To simplify S4 while maintaining its performance, the Diagonal State Space model (DSS) was proposed. It replaces the state matrix with a purely diagonal one, which reduces computational overhead and improves interpretability while retaining the ability to capture long-range dependencies.
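A minimal sketch of why a diagonal state matrix is convenient: each state evolves independently, so one recurrence step costs <math>O(N)</math>, and the convolution kernel becomes a sum of geometric sequences (a Vandermonde product). It assumes complex eigenvalues discretized as <math>\overline{\lambda} = e^{\lambda\Delta}</math> and folds <math>\overline{B}</math> and <math>C</math> into a single complex weight per state; this is an illustrative simplification, not the exact DSS parameterization, and all parameter values are hypothetical. Taking the real part keeps the output real.

```python
import cmath

def dss_kernel(lam, w, delta, L):
    """Kernel of a diagonal SSM with complex continuous-time eigenvalues lam.
    Assumes lam_bar = exp(lam * delta) and folds B_bar and C into one complex
    weight w[i] per state. K[k] = Re(sum_i w[i] * lam_bar[i]**k): a Vandermonde
    product costing O(N L)."""
    lam_bar = [cmath.exp(l * delta) for l in lam]
    return [sum(wi * lb ** k for wi, lb in zip(w, lam_bar)).real for k in range(L)]

def dss_recurrence(lam, w, delta, u):
    """The same model as a recurrence: every state evolves independently, O(N) per step."""
    lam_bar = [cmath.exp(l * delta) for l in lam]
    x, ys = [0j] * len(lam), []
    for uk in u:
        x = [lb * xi + uk for lb, xi in zip(lam_bar, x)]
        ys.append(sum(wi * xi for wi, xi in zip(w, x)).real)
    return ys

# Hypothetical parameters: two states with decaying, oscillating modes.
lam = [complex(-0.5, 1.0), complex(-0.1, 3.0)]
w = [complex(0.3, -0.2), complex(1.0, 0.5)]
u = [1.0, -0.5, 2.0, 0.0, 1.0]
K = dss_kernel(lam, w, 0.1, len(u))
ys = dss_recurrence(lam, w, 0.1, u)
```
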

The initialization of parameters can significantly influence the performance of DSS, as improper initialization may lead to slower convergence or suboptimal solutions. Additionally, DSS tends to be less effective at modeling information-dense data, such as text, where complex patterns and intricate relationships between words or phrases are crucial. In these cases, more sophisticated models may be helpful. However, DSS still provides a viable alternative in scenarios where simplicity and efficiency are prioritized over capturing deep contextual relationships.

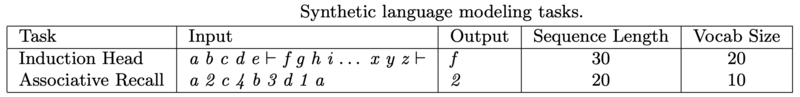

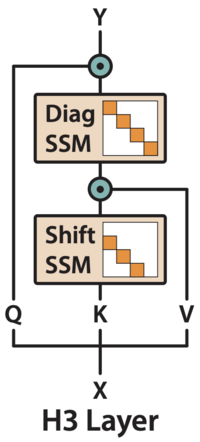

==Hungry Hungry Hippos (H3)==

====SSMs vs Attention====

First, we project the input sequence into query, key, and value matrices, as in regular attention. We then apply two SSM transformations to these matrices to generate the final output.

==== Shift SSM ====

The first SSM is the shift SSM, which you can think of as performing a local lookup across the sequence. In a sliding-window fashion, the shift SSM shifts the state vector by one position at each time step to store the most recent inputs. Its purpose is to detect specific events and remember the surrounding tokens. As an analogy, consider how we remember the most recent words we have read: our brain holds on to the last few words (tokens, for the model), and we associate specific words or events in a book with the context around them.


If you draw it out, it is a square matrix with 1s directly below the main diagonal and 0s everywhere else. Multiplying the hidden state <math>x_i</math> by this matrix shifts each coordinate down by one, thereby creating a “memory” of the previous states. If <math>\mathbf{B} = \textit{e}_1</math>, the first basis vector, then <math>x_i = [u_i, u_{i-1}, \ldots, u_{i-m+1}]</math> contains the inputs from the previous <math>m</math> time steps. Both <math>\mathbf{B}</math> and <math>\mathbf{C}</math> are learnable matrices, but <math>\mathbf{B}</math> is usually fixed to <math>\textit{e}_1</math> for simplicity, in which case the output is a 1D convolution with kernel size <math>m</math>.
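A minimal sketch of the shift SSM with <math>\mathbf{B} = \textit{e}_1</math>. Instead of materializing the shift matrix, the update writes the new input into the first slot and moves every other entry down one position, which is exactly what multiplying by the shift matrix and adding <math>\mathbf{B}u_i</math> does. The vector <code>c</code> stands in for a learned <math>\mathbf{C}</math> and is hypothetical.

```python
def shift_ssm(u, c):
    """Shift SSM with A = shift-down matrix, B = e_1, and state size m = len(c).
    Returns the state after every step and the outputs y_i = C x_i."""
    x = [0.0] * len(c)
    states, ys = [], []
    for ui in u:
        # A x moves every entry down one slot (dropping the oldest); B u_i writes u_i into slot 0.
        x = [ui] + x[:-1]
        states.append(list(x))
        ys.append(sum(cj * xj for cj, xj in zip(c, x)))
    return states, ys

# Hypothetical kernel C of size m = 3.
states, ys = shift_ssm([1, 2, 3, 4], c=[1.0, 10.0, 100.0])
print(states[-1])  # [4, 3, 2]
print(ys)          # [1.0, 12.0, 123.0, 234.0]
```

Each output is <math>y_i = c_0 u_i + c_1 u_{i-1} + c_2 u_{i-2}</math>, i.e., a causal 1D convolution with kernel size <math>m = 3</math>.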

==== Diag SSM ====

The diagonal SSM serves as a kind of global memory that keeps track of important information over the entire sequence, using a diagonal matrix to summarize what has been seen. As an analogy, the diagonal SSM acts like the notes we take in class: it is very difficult to recall every detail a professor states during a lecture, so we write down the important concepts and refer back to these notes when we review. In the same way, the input sequence can refer to the diagonal SSM for a summary of past information.

The diagonal SSM constrains A to be diagonal and initializes it from the diagonal version of HiPPO. This parameterization allows the model to remember state over the entire sequence. The shift SSM can detect when a particular event occurs, and the diagonal SSM can remember a token afterwards for the rest of the sequence.

==== Multiplicative Interaction ====

Multiplicative interactions, represented by the teal elementwise-multiplication symbol, give H3 the ability to compare tokens across the sequence. Specifically, they let the model combine and compare information from the shift SSM and the diagonal SSM, much like the similarity score (dot product) in vanilla attention.


Holistically, we can view the outputs of the shift SSM as local, short-term information and the outputs of the diagonal SSM as long-term information. The multiplicative interaction lets the model use both types of information together. This is critical for tasks like associative recall: if the model needs to recall the value associated with a key, it can compare the current key with all previously seen keys through these interactions.
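The pieces above can be combined into a single-channel sketch of the H3 layer, <math>Q \odot \mathrm{SSM}_{\text{diag}}(\mathrm{SSM}_{\text{shift}}(K) \odot V)</math>, which is how the H3 paper composes them. It is simplified to one scalar channel with hand-set, hypothetical parameters (no learned projections, multiple heads, or output projection). The toy input illustrates associative recall: the shift SSM fires one step after the key, the product with <math>V</math> stores the value that follows, the diagonal SSM (with decay 1) carries it forward, and the query reads it out.

```python
def shift_ssm(u, c):
    """Shift SSM with B = e_1: y_i = sum_j c[j] * u[i - j], a short causal convolution."""
    return [sum(c[j] * u[i - j] for j in range(len(c)) if i - j >= 0) for i in range(len(u))]

def diag_ssm(u, a, b, w):
    """Diagonal SSM with already-discretized decays a (|a| <= 1; a = 1 never forgets)."""
    x, ys = [0.0] * len(a), []
    for ui in u:
        x = [ai * xi + bi * ui for ai, xi, bi in zip(a, x, b)]
        ys.append(sum(wi * xi for wi, xi in zip(w, x)))
    return ys

def h3_single_channel(q, k, v, c, a, b, w):
    """Q * SSM_diag(SSM_shift(K) * V), with all products elementwise over time."""
    kv = [ks * vi for ks, vi in zip(shift_ssm(k, c), v)]
    return [qi * si for qi, si in zip(q, diag_ssm(kv, a, b, w))]

# Toy associative recall: a key marker at t=1, its value (7) at t=2, a query at t=4.
y = h3_single_channel(q=[0, 0, 0, 0, 1], k=[0, 1, 0, 0, 0], v=[0, 0, 7, 0, 0],
                      c=[0.0, 1.0], a=[1.0], b=[1.0], w=[1.0])
print(y)  # [0.0, 0.0, 0.0, 0.0, 7.0]
```
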

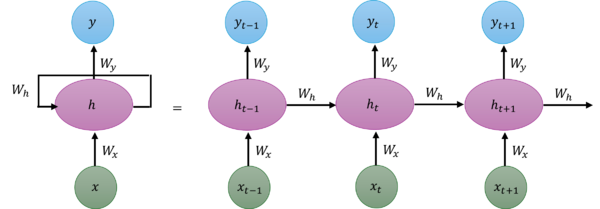

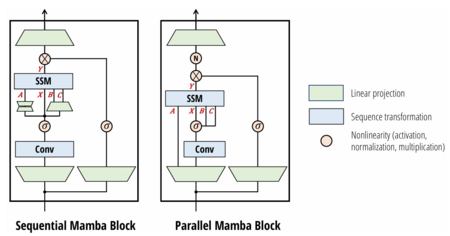

==Mamba==

Mamba builds on S4 models. It was introduced to improve efficiency on long sequences through a selection mechanism that makes the SSM parameters depend on the input, which saves memory and computation by letting the model ignore irrelevant information. Architecturally, it combines the H3 block traditionally used in SSM models with a gated multilayer perceptron (MLP) into a single block, as shown in the figure below.


In summary, Mamba's selective state space models marry the efficiency of classical SSMs with the flexibility of input-dependent gating. This combination allows the architecture to handle extremely long sequences (up to millions of tokens) while still capturing the nuanced patterns essential for tasks ranging from language modeling to audio generation.
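A minimal sketch of the selective scan for one channel, assuming the discretization commonly described for Mamba: zero-order hold for <math>A</math> (<math>\overline{A}_t = e^{\Delta_t A}</math>) and the simplification <math>\overline{B}_t \approx \Delta_t B_t</math>. The scalar projections <code>w_delta</code>, <code>b_delta</code>, <code>W_B</code>, and <code>W_C</code> are hypothetical stand-ins for Mamba's learned linear layers, and the real implementation uses a hardware-aware parallel scan rather than this Python loop.

```python
import math

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return math.log1p(math.exp(-abs(z))) + max(z, 0.0)

def selective_scan(x, A, w_delta, b_delta, W_B, W_C):
    """Sequential selective SSM scan for a single input channel.
    x: input scalars; A: N negative reals (diagonal of the state matrix).
    Delta_t, B_t, and C_t are computed from x_t, so the model can decide per token
    how much to write into, keep in, and read from its state."""
    h, ys = [0.0] * len(A), []
    for xt in x:
        delta = softplus(w_delta * xt + b_delta)  # input-dependent step size
        B_t = [wb * xt for wb in W_B]             # input-dependent input matrix
        C_t = [wc * xt for wc in W_C]             # input-dependent output matrix
        # Zero-order hold for A; B_bar is approximated by delta * B_t.
        h = [math.exp(delta * a) * hi + delta * b * xt for a, hi, b in zip(A, h, B_t)]
        ys.append(sum(c * hi for c, hi in zip(C_t, h)))
    return ys

# Hypothetical parameters for a 2-state channel.
params = dict(A=[-1.0, -2.0], w_delta=1.0, b_delta=0.0, W_B=[1.0, 0.5], W_C=[0.3, 0.2])
ys = selective_scan([1.0, 0.5, -1.0], **params)
```

The selectivity shows up through <math>\Delta_t</math>: a large step makes <math>e^{\Delta_t A}</math> close to 0, so the state is overwritten by the current token, while a step close to 0 leaves the state almost unchanged and effectively ignores the token.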

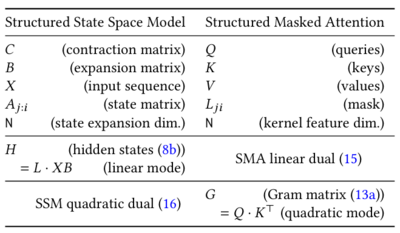

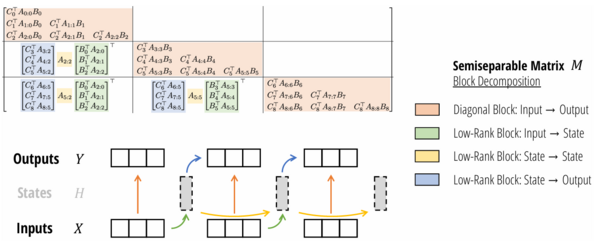

==Mamba-2==

====Overview====

===Sparse Sinkhorn Attention===

==== Evaluation Metrics for Sparse Attention ====

To comprehensively evaluate the performance of sparse attention mechanisms, several key metrics are considered:

* '''Time Complexity'''
** The number of operations required during inference.
** Vanilla attention has quadratic complexity <math>O(n^2)</math>, while sparse variants aim for linear or sub-quadratic complexity, such as <math>O(n \cdot N_k)</math> where <math>N_k \ll n</math>.
* '''Memory Complexity'''
** Represents the total memory consumption needed for model parameters and intermediate activations.
** Sparse attention reduces this by avoiding full pairwise attention computations.
* '''Perplexity'''
** A standard measure to evaluate the predictive performance of language models.
** Defined as:

<math>
\text{Perplexity}(P) = \exp\left(- \frac{1}{N} \sum_{i=1}^N \log P(w_i \mid w_1, \dots, w_{i-1}) \right)
</math>

** Lower perplexity indicates better prediction capability.

These metrics help compare the efficiency and effectiveness of sparse attention methods to the original dense attention in practical applications.
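The perplexity formula can be computed directly from the probability the model assigns to each observed token. The sketch below assumes those probabilities are already available (the inputs are hypothetical); as a sanity check, a model that is always uniformly unsure among 4 tokens has perplexity 4.

```python
import math

def perplexity(token_probs):
    """exp(-(1/N) * sum_i log P(w_i | w_1..w_{i-1})), given each observed token's probability."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Hypothetical probabilities a model assigned to the tokens that actually occurred.
uniform_ppl = perplexity([0.25] * 8)   # always 1-in-4 uncertain
mixed_ppl = perplexity([0.5, 0.125])   # geometric mean of 1/p is also 4
```
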

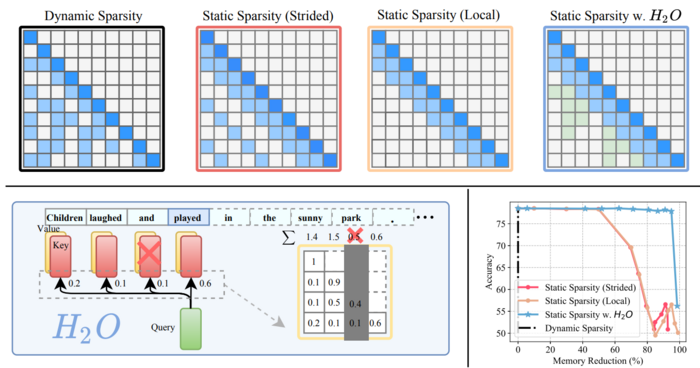

==== Simplified Intuition ====

Sparse attention does not calculate the relationship between every pair of tokens; the hope is that this allows us to scale to longer sequences while preserving most of the model's performance.
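As a concrete picture of what "not calculating every pair" can look like, the sketch below builds a boolean attention mask that mixes the three patterns BigBird combines: a sliding window, a few global tokens, and random links. The function and parameter names are hypothetical. With 16 tokens, a window of 1, and one global token, only 74 of the 256 query/key pairs are kept.

```python
import random

def bigbird_style_mask(n, window, num_global, num_random, seed=0):
    """Boolean n x n mask (True = compute this query/key score) combining:
    - a sliding window: query i sees keys j with |i - j| <= window;
    - global tokens: the first num_global tokens see, and are seen by, every token;
    - random links: each query sees num_random extra keys chosen at random.
    The parameter names are illustrative, not taken from the BigBird code."""
    rng = random.Random(seed)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(max(0, i - window), min(n, i + window + 1)):
            mask[i][j] = True
        for j in rng.sample(range(n), min(num_random, n)):
            mask[i][j] = True
    for g in range(min(num_global, n)):
        for j in range(n):
            mask[g][j] = True
            mask[j][g] = True
    return mask

mask = bigbird_style_mask(16, window=1, num_global=1, num_random=0)
kept = sum(map(sum, mask))
print(kept, "of", 16 * 16)  # 74 of 256
```
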

==== Theoretical Guarantee ====

The BigBird paper proves that sparse attention mechanisms, such as the one used in BigBird, are universal approximators of sequence-to-sequence functions, just like dense-attention Transformers.

* '''Universal Approximation Theorem:'''
** Given a class of functions <math>\mathcal{F}_{n,p}</math> and any <math>\epsilon > 0</math>, every function <math>f \in \mathcal{F}_{n,p}</math> can be approximated to within <math>\epsilon</math> by a sparse-attention Transformer <math>g \in \mathcal{T}_{n,p,r}</math> (i.e., <math>d_{\mathcal{F}}(f, g) \leq \epsilon</math>).
** This result holds as long as the underlying attention graph contains a star graph.
* '''Supporting Lemmas:'''
** Lemma 1: Scalar quantization of inputs using discrete maps.
** Lemma 2: Contextual mapping via learned sparse attention layers.
** Lemma 3 & 4: Construction of approximators with feedforward and attention layers using the sparse mechanism.

These results justify that BigBird retains the expressive power of standard Transformers while being more scalable.

==== Intuition & Main Idea ====

[[File:BigBird_Results.png|800px]]

QA tasks test the model's ability to handle long sequences and to extract useful context.

HotpotQA: given a question and a set of documents, the model is asked to generate the correct answer and identify the supporting facts.
* Ans (Answer): checks whether the answer matches the ground truth.
* Sup (Supporting Facts): checks whether the model identifies the sentences (evidence) that support the answer.
* Joint: a joint evaluation that is considered correct if and only if both Ans and Sup are correct.

NaturalQ (Natural Questions): given a question and documents, the model extracts a short answer or a long answer.
* LA (Long Answer): evaluates the model's ability to extract a longer (paragraph-level) answer from the passage.
* SA (Short Answer): evaluates the model's ability to extract a concise (short-phrase) answer from the passage.

TriviaQA: given a question and documents, the model generates an answer.
* Full: uses the complete set of questions with automatically paired evidence.
* Verified: uses the subset whose evidence was manually verified to ensure correctness.

WikiHop: given a question and supporting documents, the model is asked to choose the correct answer from a set of candidate answers.
* MCQ (Multiple Choice Question): evaluates the model's accuracy on multiple-choice questions.

The BigBird model outperforms RoBERTa and Longformer on these benchmarks. BigBird was also applied to genomics, where deep learning was seeing a burst of use at the time and most approaches consume DNA sequence fragments as inputs; on these tasks BigBird achieved a 99.9 F1 score and 1.12 BPC (bits per character).

====Limitations====

===Attention with Linear Biases (ALiBi)===

==== ALiBi Mechanism ====

Currently, models struggle to extrapolate. Extrapolation is the ability to handle sequences at inference time that are longer than the sequences the model was trained on. For example, a model trained on a dataset whose longest sequence has 1024 tokens will perform poorly when asked to generate a sequence of 2048 tokens. If this problem can be solved, training can in theory become much more efficient, because we can train on shorter sequences without sacrificing performance.


The authors hypothesized that this could replace the positional encoding in Transformers. For architectures with multiple attention heads, the weighting of these biases varies per head; by default the per-head slopes form a geometric sequence, so a model with 8 heads uses <math>\frac{1}{2^1}, \frac{1}{2^2}, \ldots, \frac{1}{2^8}</math>.

So even though ALiBi is considered a sparse attention method, it does not specify sparsity explicitly. As tokens get farther from one another, the negative bias grows in magnitude and their attention weights become small, so sparsity emerges implicitly.
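A minimal sketch of the per-head slopes and the resulting bias matrix, assuming the default geometric slopes from the ALiBi paper (for 8 heads: 1/2, 1/4, ..., 1/256) and a causal, decoder-only setting. The bias is added to the query/key score <math>q_i \cdot k_j</math> before the softmax; the function names are hypothetical, and only power-of-two head counts are handled.

```python
def alibi_slopes(num_heads):
    """Per-head slopes 2^(-8h/num_heads), h = 1..num_heads: a geometric sequence
    (1/2, 1/4, ..., 1/256 for 8 heads). Only power-of-two head counts are handled here."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch assumes a power-of-two number of heads")
    return [2 ** (-8 * h / num_heads) for h in range(1, num_heads + 1)]

def alibi_bias(seq_len, slope):
    """Bias added to the query-i / key-j attention score: -slope * (i - j) for j <= i.
    Keys in the future (j > i) are masked with -inf, as in causal language modeling."""
    return [[-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
            for i in range(seq_len)]

slopes = alibi_slopes(8)
bias = alibi_bias(4, slopes[0])  # head 1, slope 1/2
```

Because the penalty grows linearly with distance, distant keys receive exponentially smaller softmax weights, which is the implicit sparsity described above.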

==== Experimental Results ====

Experiments with a 1.3 billion parameter model on the [[WikiText-103]] dataset showed ALiBi, trained on 1024-token sequences, matched the perplexity of sinusoidal models trained on 2048-token sequences when tested on 2048 tokens. ALiBi was 11% faster and used 11% less memory.<ref name="Press2021" /> Results are summarized below:

{| class="wikitable"
|-
! Method !! Training Length (tokens) !! Test Length (tokens) !! Perplexity !! Training Time (relative) !! Memory Use (relative)
|-
| Sinusoidal || 2048 || 2048 || 20.5 || 100% || 100%
|-
| ALiBi || 1024 || 2048 || 20.6 || 89% || 89%
|-
| Rotary || 1024 || 2048 || 22.1 || 95% || 92%
|}

==== Implications ====

ALiBi's efficiency and extrapolation ability suggest it could reduce training costs and improve scalability in transformer models. Its recency bias aligns with linguistic patterns, making it a promising advancement.

===SpAtten===

Similarly, SpAtten employs a specially designed top-k engine to make real-time decisions about pruning tokens and attention heads. This engine ranks token and head importance scores in linear time, avoiding the cost of traditional sorting. Instead of sorting the entire array, the top-k engine uses a quick-select module to find the k<sup>th</sup> largest element and then filters the array against this threshold. The engine is highly parallelized, with multiple comparators working simultaneously to achieve linear-time ranking. This streamlined process enables fast and accurate pruning decisions, contributing to SpAtten's overall efficiency.
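The threshold-then-filter idea can be sketched in software as follows. SpAtten implements it as a parallel hardware engine, so this sequential Python version only illustrates the logic: quick-select finds the k<sup>th</sup> largest importance score in expected linear time, and a single comparison pass then keeps every score at or above that threshold. The function names and scores are hypothetical.

```python
import random

def quickselect_kth_largest(values, k, seed=0):
    """Return the k-th largest value (k = 1 is the maximum) in expected O(n) time,
    by repeatedly partitioning around a random pivot instead of sorting."""
    if not 1 <= k <= len(values):
        raise ValueError("k must be between 1 and len(values)")
    rng = random.Random(seed)
    items = list(values)
    while True:
        pivot = items[rng.randrange(len(items))]
        greater = [v for v in items if v > pivot]
        n_equal = sum(1 for v in items if v == pivot)
        if k <= len(greater):
            items = greater                  # the answer lies among the larger values
        elif k <= len(greater) + n_equal:
            return pivot                     # the pivot itself is the k-th largest
        else:
            k -= len(greater) + n_equal      # skip the larger and equal values
            items = [v for v in items if v < pivot]

def topk_keep_mask(scores, k):
    """Keep every entry whose score reaches the k-th largest score (ties can keep extras)."""
    threshold = quickselect_kth_largest(scores, k)
    return [s >= threshold for s in scores]

# Hypothetical token-importance scores; keep the top 2 tokens.
scores = [0.1, 0.9, 0.4, 0.7, 0.2]
keep = topk_keep_mask(scores, k=2)
```
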

=== Adaptively Sparse Attention ===

==== Motivation ====

Although Sparse Attention methods like BigBird and Sparse Sinkhorn Attention successfully reduce computational complexity from quadratic to linear or near-linear, they often use predefined patterns (e.g., sliding windows, global tokens, random attention). These predefined patterns may not always reflect the optimal relationships within the sequence for every context. Adaptively Sparse Attention addresses this limitation by dynamically determining which tokens should attend to each other based on their semantic or contextual relationships.

==== Core Idea ====

Adaptively Sparse Attention dynamically creates sparse attention patterns by identifying the most significant attention connections for each query token based on current input features. Instead of attending to a fixed set of neighbors, each token selectively attends only to tokens with high relevance scores.

====Formulation====

Given the queries <math>Q \in \mathbb{R}^{T \times d}</math>, keys <math>K \in \mathbb{R}^{T \times d}</math>, and values <math>V \in \mathbb{R}^{T \times d_v}</math>, the standard attention mechanism computes:

<math>

\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d}}\right)V

</math>

In Adaptively Sparse Attention, we introduce an adaptive binary mask <math>M \in \{0, 1\}^{T \times T}</math> that selects which key tokens each query should attend to, effectively pruning unnecessary computations. Specifically, the formulation becomes:

Step 1. Compute standard attention scores:

<math>

S = \frac{QK^T}{\sqrt{d}}

</math>

Step 2. For each query token <math>i</math>, select a subset of keys by applying a top-<math>k</math> operator or adaptive thresholding:

<math>

M_{ij} = \begin{cases}

1, & \text{if } S_{ij} \text{ is among the top-} k \text{ scores for row } i\\[6pt]

0, & \text{otherwise}

\end{cases}

</math>

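Step 3. Compute the sparse attention, masking out the unselected keys before the softmax:

<math>

\text{Attention}(Q,K,V) = \text{softmax}(\tilde{S})\, V, \qquad \tilde{S}_{ij} = \begin{cases} S_{ij}, & M_{ij} = 1\\ -\infty, & M_{ij} = 0 \end{cases}

</math>

The softmax in each row is therefore normalized over the selected entries only, and unselected keys receive exactly zero weight. Writing the masked scores as <math>S \odot M</math> (where <math>\odot</math> denotes element-wise multiplication) is a convenient shorthand, but the softmax must still skip the zeroed entries, since a score of 0 would otherwise contribute <math>e^0 = 1</math> to the denominator.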
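The three steps above can be sketched in plain Python as follows. This is an illustrative implementation under stated assumptions (nested lists instead of tensors, and a full sort per row for readability rather than a linear-time selection); <code>topk_masked_softmax</code> and <code>topk_sparse_attention</code> are hypothetical names. The last lines apply the row-wise masked softmax to the 4 × 4 score matrix from the Example below.

```python
import math


def topk_masked_softmax(S, k):
    """Row-wise softmax over only the k largest scores of each row; other entries get 0."""
    weights = []
    for row in S:
        keep = sorted(range(len(row)), key=lambda j: row[j], reverse=True)[:k]  # mask M for this row
        m = max(row[j] for j in keep)  # subtract the max for numerical stability
        exps = {j: math.exp(row[j] - m) for j in keep}
        z = sum(exps.values())
        # Unselected keys behave like a -inf score: their weight is exactly 0.
        weights.append([exps.get(j, 0.0) / z for j in range(len(row))])
    return weights


def topk_sparse_attention(Q, K, V, k):
    """Q, K: T x d and V: T x d_v as nested lists; returns the T x d_v output."""
    d = len(Q[0])
    S = [[sum(a * b for a, b in zip(q, key)) / math.sqrt(d) for key in K] for q in Q]
    W = topk_masked_softmax(S, k)
    return [[sum(w * v[c] for w, v in zip(w_row, V)) for c in range(len(V[0]))] for w_row in W]


S = [[0.1, 2.0, 0.5, 0.2],
     [1.5, 0.3, 0.8, 0.4],
     [0.2, 0.1, 3.0, 0.5],
     [0.7, 0.6, 0.4, 0.2]]
print([round(w, 2) for w in topk_masked_softmax(S, 2)[0]])  # [0.0, 0.82, 0.18, 0.0]
```

With <math>k = T</math> the function reduces to ordinary dense softmax attention, which is a convenient sanity check.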

====Advantages====

* Reduced computational complexity: By restricting the softmax and the weighted sum of values to the top-<math>k</math> keys per query, that part of the cost drops from <math>O(T^2)</math> to approximately <math>O(Tk)</math>, which is near-linear for <math>k \ll T</math>. Because Step 1 as written still computes every entry of <math>QK^T</math>, realizing the full savings also requires a cheaper way to find the candidate keys.

* Context-aware sparsity: The adaptive selection lets the model focus on relevant tokens based on the input context, preserving performance while substantially improving efficiency.

* Improved scalability: Suitable for very long sequences, Adaptively Sparse Attention provides the computational efficiency needed for large-scale applications (e.g., long-document understanding, genomic sequences).

====Example====

Suppose we have an attention score matrix <math>S \in \mathbb{R}^{4 \times 4}</math> (4 tokens for simplicity):

<math>

S = \begin{bmatrix}

0.1 & 2.0 & 0.5 & 0.2 \\

1.5 & 0.3 & 0.8 & 0.4 \\

0.2 & 0.1 & 3.0 & 0.5 \\

0.7 & 0.6 & 0.4 & 0.2

\end{bmatrix}

</math>

We set top-2 sparsity per row:

Step 1. Select the top-2 scores per row:

* Row 1: scores 2.0 and 0.5 (columns 2 and 3)

* Row 2: scores 1.5 and 0.8 (columns 1 and 3)

* Row 3: scores 3.0 and 0.5 (columns 3 and 4)

* Row 4: scores 0.7 and 0.6 (columns 1 and 2)

Step 2. Form the adaptive mask <math>M</math>:

<math>

M = \begin{bmatrix}

0 & 1 & 1 & 0 \\

1 & 0 & 1 & 0 \\

0 & 0 & 1 & 1 \\

1 & 1 & 0 & 0

\end{bmatrix}

</math>

Step 3. Element-wise multiply to get the sparse scores:

<math>

S' = S \odot M = \begin{bmatrix}

0 & 2.0 & 0.5 & 0 \\

1.5 & 0 & 0.8 & 0 \\

0 & 0 & 3.0 & 0.5 \\

0.7 & 0.6 & 0 & 0

\end{bmatrix}

</math>

Step 4. Apply a row-wise softmax over the selected entries only. For example, the selected entries of row 1 are (2.0, 0.5):

<math>

\text{softmax}(2.0, 0.5) \approx [0.82, 0.18]

</math>

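Step 5. The final sparse attention output uses these row-wise weights, with zero weight on every unselected key:

<math>

\text{Attention}(Q,K,V) = \text{softmax}(\tilde{S})\,V, \qquad \tilde{S}_{ij} = \begin{cases} S'_{ij}, & M_{ij} = 1\\ -\infty, & M_{ij} = 0 \end{cases}

</math>

Only the selected entries are used, so each query combines <math>k = 2</math> value vectors instead of all 4; for long sequences with <math>k \ll T</math> this greatly reduces the computation.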

===Comparison===

{| class="wikitable"

|+ Intuitive Comparison of Sparse Attention Methods

|-

! Method !! Key Focus !! How It Works !! Primary Benefit !! Primary Trade-off

|-

| '''Sparse Sinkhorn''' || Global interactions via learned sorting || Rearranges tokens to place important ones together in local blocks for efficient attention || Retains global attention while reducing compute || Sorting overhead adds computation; complex implementation

|-

| '''BigBird''' || Local context with selective global/random links || Uses a fixed sparse pattern (local window + some global/random tokens) to approximate full attention || Scales to long sequences without needing per-sequence tuning || Fixed sparsity means some interactions might be missed

|-

| '''ALiBi''' || Recency bias via linear attention penalty, enables extrapolation to longer sequence lengths || Applies a penalty to distant tokens in attention scores, encouraging focus on closer tokens || Extrapolates well to longer sequences without increasing training costs || Does not reduce compute/memory at inference; only helps training

|-

| '''SpAtten''' || Dynamic token and head pruning for efficiency || Dynamically removes unimportant tokens and attention heads at inference for efficiency || Maximizes efficiency while preserving accuracy || Requires custom logic/hardware for full benefits

|}

{| class="wikitable"

|+ Performance Comparison of Sparse Attention Methods

|-

! Method !! Memory Efficiency !! Computational Speed !! Accuracy vs Full Attention !! Real-World Adoption

|-

| '''Sparse Sinkhorn''' || High (learned local attention reduces need for full attention) || Moderate (sorting overhead but reduced attention computation) || Near-parity (sometimes better, as learned sparsity is adaptive) || Limited (mainly research, complex to implement)

|-

| '''BigBird''' || Very High (reduces O(n²) to O(n) via sparse pattern) || Fast (sparse pattern significantly reduces compute) || Near-parity (captures long-range dependencies well) || High (used in NLP for long-text tasks, available in HuggingFace)

|-

| '''ALiBi''' || Same as full attention (no sparsity, just biasing) but can use smaller context length in training || Same as full attention (no change in complexity) but can use smaller context length in training || Same (performs as well as full attention with added extrapolation) || Very High (adopted in major LLMs like BLOOM, MPT)

|-

| '''SpAtten''' || Extremely High (prunes tokens/heads dynamically) || Extremely Fast (up to 162× faster on specialized hardware) || Same (no accuracy loss, just efficiency gain) || Limited (research and specialized hardware, not common in open-source models)

|}

===Future of Sparse Attention===

Sparse attention mechanisms have emerged as a promising approach to improving the efficiency of transformer-based models by reducing computational and memory complexity. However, despite these advantages, they introduce trade-offs that warrant further research. Ongoing and future work in this area aims to enhance their expressivity, efficiency, and adaptability across diverse applications.

1. Enhancing Long-Range Dependency Modeling

Current sparse attention approaches struggle to fully capture distant contextual dependencies, limiting their effectiveness in tasks requiring extended sequence understanding.

* While models like '''ALiBi''' demonstrate strong extrapolation, performance degrades beyond twice the training sequence length, indicating room for improvement.

* Future work should focus on developing more robust mechanisms for retaining long-range information without sacrificing efficiency.

2. Reducing Computational Overhead

Despite their efficiency gains, some sparse attention methods introduce additional computational challenges:

* '''Sparse Sinkhorn Attention''' requires iterative normalization (Sinkhorn balancing), increasing computational cost.

* Pruning-based methods (e.g., '''SpAtten''') introduce runtime overhead due to dynamic token and head selection.

* Some sparse attention models rely on specialized hardware acceleration (e.g., top-k engines), limiting their accessibility in general-purpose computing environments.

Addressing these issues will be crucial for making sparse attention models more widely applicable.

3. Optimizing Sparse Attention Architectures

A key challenge is designing architectures that achieve high performance while maintaining efficiency. Future research should explore:

* Balancing efficiency and expressivity by reducing the number of layers needed to match the performance of full attention models.

* Hybrid approaches that integrate multiple sparse attention mechanisms to leverage their respective strengths.

By addressing these challenges, future iterations of sparse attention models can push the boundaries of efficiency while preserving the rich contextual modeling capabilities of transformers.

= Topic 10: Linear Attention =

== Introduction ==

Linear attention tries to address the efficiency limitations of traditional softmax attention. Standard attention (also called vanilla attention) is calculated as:

<math>\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V</math>

The computational complexity of this method is <math>O(n^2d)</math> for sequence length <math>n</math> and representation dimension <math>d</math>, mainly because multiplying <math>Q</math> by <math>K^T</math> produces a large <math>n \times n</math> attention matrix. If we remove the softmax function, the matrix products become associative and can be reordered as <math>Q(K^T V)</math>. Computing <math>K^T V</math> first yields only a <math>d \times d</math> matrix, so the cost drops to <math>O(nd^2)</math>, which is linear in <math>n</math>. However, this purely linear mapping is insufficient to capture complex relationships in the data. To restore expressiveness, we can replace the softmax with a kernel that approximates it. Recall that a positive semi-definite kernel can be written as <math>\kappa(x, y) = \Phi(x)^T \Phi(y)</math> for some feature map <math>\Phi</math>. Applying <math>\Phi</math> to <math>Q</math> and <math>K</math> lets us approximate vanilla attention with a much more efficient mechanism.
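A small pure-Python sketch helps check the reordering argument: with a kernel feature map, computing <math>(\Phi(Q)\Phi(K)^T)V</math> and <math>\Phi(Q)(\Phi(K)^T V)</math> gives the same result, but only the first builds the <math>n \times n</math> matrix. The feature map elu(x) + 1 and the row-wise normalization by <math>\Phi(q)^T \sum_j \Phi(k_j)</math> are assumptions borrowed from common linear-attention practice; the function names are hypothetical, and the sketch is non-causal.

```python
import math


def phi(x):
    """Assumed feature map elu(x) + 1; it keeps every feature strictly positive."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def kernel_attention_quadratic(Q, K, V):
    """(phi(Q) phi(K)^T) V, row-normalized: materializes an n x n matrix, O(n^2 d)."""
    FQ, FK = [phi(q) for q in Q], [phi(k) for k in K]
    A = matmul(FQ, transpose(FK))  # n x n similarity matrix
    out = matmul(A, V)
    return [[x / sum(a_row) for x in o_row] for o_row, a_row in zip(out, A)]


def kernel_attention_linear(Q, K, V):
    """phi(Q) (phi(K)^T V), row-normalized: only a d x d_v summary, O(n d d_v)."""
    FQ, FK = [phi(q) for q in Q], [phi(k) for k in K]
    KV = matmul(transpose(FK), V)  # d x d_v, its size does not depend on n
    k_sum = [sum(col) for col in zip(*FK)]  # phi(K)^T 1
    num = matmul(FQ, KV)
    den = [sum(a * b for a, b in zip(fq, k_sum)) for fq in FQ]
    return [[x / z for x in row] for row, z in zip(num, den)]


Q = [[0.5, -1.0], [0.2, 0.3], [-0.4, 0.1]]
K = [[1.0, 0.0], [0.3, -0.2], [0.0, 0.7]]
V = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
print(kernel_attention_quadratic(Q, K, V))
print(kernel_attention_linear(Q, K, V))  # same values up to floating-point rounding
```

The positive feature map keeps every denominator positive, which is one reason such maps are preferred over arbitrary projections.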

Recent research has explored more sophisticated approaches to restore the modelling power of linear attention while preserving efficiency. For instance, Retentive Networks (RetNet) introduce a learnable decay-based recurrence that enables <math>O(1)</math> per-token inference with strong performance on language tasks. Gated Linear Attention (GLA) incorporates a data-dependent gating mechanism to better capture context, and BASED proposes a hybrid strategy combining linear attention with sliding-window attention to balance throughput and recall. TransNormerLLM refines positional embeddings and normalization while accelerating linear attention with hardware-friendly techniques.


Linear attention is particularly useful for large-scale models and scenarios where memory and computing are constrained. Unlike traditional Transformers, which rely heavily on key-value caching and suffer latency bottlenecks during inference, linear attention variants can support faster decoding with lower memory usage. These properties make them attractive for applications such as real-time processing, edge deployment, and next-generation large language models.

== Key Approaches to Linear Attention ==

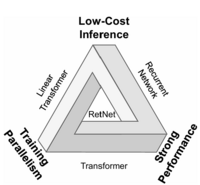

===Retentive Network (RetNet): A Successor to Transformer for Large Language Models=== | |||

[[File:retnet_impossible_triangle.png|thumb|200px|Figure 1: Impossible Triangle]] | |||

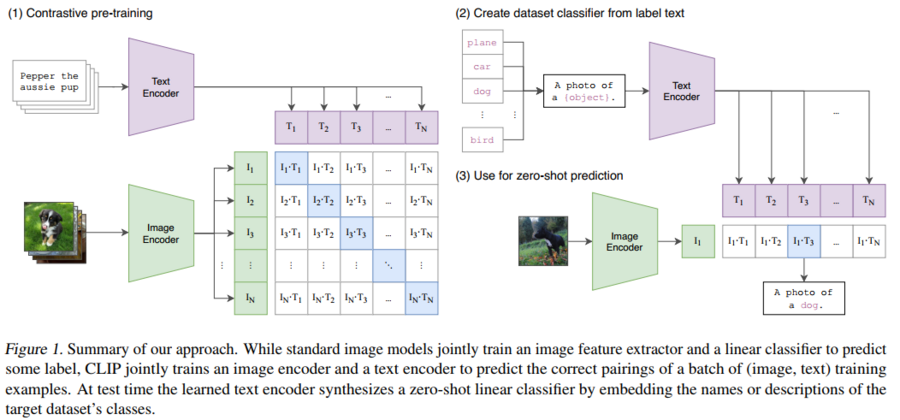

The authors of this paper introduce a new architecture that can achieve parallel training, fast inference, without compromising performance solving the inherent complexity in vanilla attention mechanism. They claim that the new architecture makes the impossible triangle, Figure 1, possible. RetNet combines the advantages of transformer performance and ability for parallel training with the lower-cost inference of RNNs by introducing a multi-scale retention mechanism to replace multi-head attention that incorporates three main representations: | |||

# Parallel: allows GPU utilization during training. | |||

# Recurrent: enables low-cost inference with <math>O(1)</math> complexity in terms of computation and memory. | |||

# Chunkwise recurrent: models long-sequences efficiently. | |||

Essentially, RetNet replaces the <math>softmax</math> by adopting a linear representation of the attention mechanism in the form of retention. | |||

====Recurrent Representation==== | |||

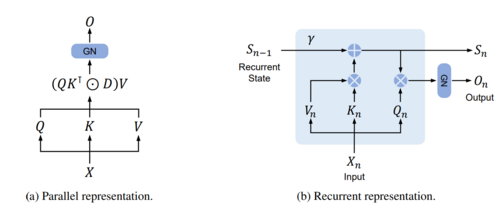

We can show that the retention mechanism has a dual form of recurrence and parallelism (Figure2). First let's show RetNet in its recurrent form: | |||

[[File:RetNet_dual.png|thumb|500px|Figure 2: Dual form of RetNet]] | |||

<math>S_n = \gamma S_{n-1} + K_n^T V_n</math> | |||

<math> | <math>\text{Retention}(X_n) = Q_n S_n</math> | ||

In this formula, <math>\gamma</math> is a decay factor, ensuring that more recent tokens are weighted more heavily, simulating recency bias without storing all past activations. <math>Q</math>, <math>K</math>, <math>V</math> are learned projections of the input. During training, this can be computed in parallel form. | |||

====Parallel Representation====

Now consider the parallel representation of retention:

<math>\text{Retention}(X) = (QK^T \odot D)V</math>

where <math>D</math> is a matrix that combines causal masking with exponential decay along relative distance. Its entries are:

<math display="block">

D_{nm} =

\begin{cases}

\gamma^{n-m}, & n \geq m \\

0, & n < m

\end{cases}

</math>

This formulation ensures that tokens can attend only to previous tokens (causal masking) and that the attention strength decays exponentially with distance, at a rate controlled by <math>\gamma</math>. In other words, earlier tokens receive progressively less weight.
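The recurrent and parallel forms should produce identical outputs. The sketch below checks this numerically for a single head, assuming plain real-valued projections; it omits RetNet's multi-scale heads, rotations, and normalization, and the function names are hypothetical.

```python
def retention_recurrent(Q, K, V, gamma):
    """S_n = gamma * S_{n-1} + K_n^T V_n, then Retention(X_n) = Q_n S_n."""
    d, dv = len(K[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]  # d x d_v state, constant size per step
    out = []
    for q, k, v in zip(Q, K, V):
        S = [[gamma * S[a][c] + k[a] * v[c] for c in range(dv)] for a in range(d)]
        out.append([sum(q[a] * S[a][c] for a in range(d)) for c in range(dv)])
    return out


def retention_parallel(Q, K, V, gamma):
    """(Q K^T ⊙ D) V with D[n][m] = gamma**(n - m) if n >= m, else 0."""
    T, dv = len(Q), len(V[0])
    out = []
    for n in range(T):
        # Only m <= n survive the causal part of D; the other entries contribute 0.
        w = [sum(a * b for a, b in zip(Q[n], K[m])) * gamma ** (n - m) for m in range(n + 1)]
        out.append([sum(w[m] * V[m][c] for m in range(n + 1)) for c in range(dv)])
    return out


Q = [[0.5, -1.0], [0.2, 0.3], [-0.4, 0.1], [0.9, 0.0]]
K = [[1.0, 0.0], [0.3, -0.2], [0.0, 0.7], [-0.5, 0.4]]
V = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0], [0.2, 0.2]]
print(retention_recurrent(Q, K, V, 0.9))
print(retention_parallel(Q, K, V, 0.9))  # matches the recurrent output up to rounding
```

Unrolling the recurrence gives <math>S_n = \sum_{m \le n} \gamma^{n-m} K_m^T V_m</math>, which is exactly the row <math>n</math> of <math>(QK^T \odot D)V</math> after multiplying by <math>Q_n</math>.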

====Chunkwise Recurrent Representation====

The third form, the chunkwise recurrent representation, is a hybrid of the recurrent and parallel representations aimed at accelerating training on long sequences. The input sequence is divided into chunks, and within each chunk the parallel representation processes all tokens simultaneously. To pass information across chunks, the recurrent representation summarizes each chunk into a state that is passed to the next one. The sum of the inner-chunk (parallel) and cross-chunk (recurrent) terms gives the retention of the chunk, capturing both the details within the chunk and the context from previous chunks. To compute the retention of the <math>i</math>-th chunk, let <math>B</math> be the chunk length; then:

<math>Q_{[i]} = Q_{Bi:B(i+1)}, K_{[i]} = K_{Bi:B(i+1)}, V_{[i]} = V_{Bi:B(i+1)}</math>

<math>R_i = K_{[i]}^T (V_{[i]} \odot \zeta) + \gamma^B R_{i-1}, \quad \zeta_{ij} = \gamma^{B-i-1}</math>

<math>

\text{Retention}(X_{[i]}) =

\underbrace{(Q_{[i]} K_{[i]}^T \odot D) V_{[i]}}_{\text{Inner-Chunk}} +

\underbrace{(Q_{[i]} R_{i-1}) \odot \xi}_{\text{Cross-Chunk}}, \quad \xi_{ij} = \gamma^{i+1}

</math>

where <math>R_i</math> is the state of the current chunk to be passed to the next. Inside <math>\zeta</math> and <math>\xi</math>, the subscript <math>i</math> indexes the row, i.e., the token position within the chunk counted from 0, not the chunk number.
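The following sketch implements the chunkwise equations for a single head and compares them with the parallel form <math>(QK^T \odot D)V</math>. It assumes 0-based positions inside each chunk, a sequence length that is a multiple of <math>B</math>, and no normalization; the names are hypothetical.

```python
def retention_parallel(Q, K, V, gamma):
    """Reference: (Q K^T ⊙ D) V with D[n][m] = gamma**(n - m) for n >= m, else 0."""
    T, dv = len(Q), len(V[0])
    out = []
    for n in range(T):
        w = [sum(a * b for a, b in zip(Q[n], K[m])) * gamma ** (n - m) for m in range(n + 1)]
        out.append([sum(w[m] * V[m][c] for m in range(n + 1)) for c in range(dv)])
    return out


def retention_chunkwise(Q, K, V, gamma, B):
    """Chunkwise recurrent retention; assumes len(Q) is a multiple of the chunk size B."""
    T, d, dv = len(Q), len(K[0]), len(V[0])
    assert T % B == 0
    R = [[0.0] * dv for _ in range(d)]  # R_{i-1}: summary of all earlier chunks
    out = []
    for start in range(0, T, B):
        q, k, v = Q[start:start + B], K[start:start + B], V[start:start + B]
        for r in range(B):  # r: position inside the chunk, counted from 0
            # Inner-chunk term: (Q K^T ⊙ D) V restricted to this chunk.
            inner = [sum(sum(x * y for x, y in zip(q[r], k[j])) * gamma ** (r - j) * v[j][c]
                         for j in range(r + 1)) for c in range(dv)]
            # Cross-chunk term: (Q R_{i-1}) ⊙ xi with xi = gamma^(r + 1).
            cross = [gamma ** (r + 1) * sum(q[r][a] * R[a][c] for a in range(d)) for c in range(dv)]
            out.append([x + y for x, y in zip(inner, cross)])
        # R_i = K_[i]^T (V_[i] ⊙ zeta) + gamma^B R_{i-1}, with zeta_j = gamma^(B - j - 1).
        R = [[sum(k[j][a] * v[j][c] * gamma ** (B - j - 1) for j in range(B)) + gamma ** B * R[a][c]
              for c in range(dv)] for a in range(d)]
    return out


Q = [[0.5, -1.0], [0.2, 0.3], [-0.4, 0.1], [0.9, 0.0], [0.1, 0.1], [-0.3, 0.6]]
K = [[1.0, 0.0], [0.3, -0.2], [0.0, 0.7], [-0.5, 0.4], [0.2, 0.2], [0.6, -0.1]]
V = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0], [0.2, 0.2], [-1.0, 0.5], [0.0, 1.5]]
diff = max(abs(a - b) for ra, rb in zip(retention_chunkwise(Q, K, V, 0.9, 3),
                                        retention_parallel(Q, K, V, 0.9)) for a, b in zip(ra, rb))
print(diff)  # floating-point noise only
```

The chunk size trades the quadratic cost of the inner-chunk term against the number of sequential state updates.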

In summary, the overall RetNet architecture consists of <math>L</math> identical stacked blocks, similar to a Transformer. Each block contains two modules: a multi-scale retention (MSR) module and a feed-forward network (FFN) module. Given the embeddings of the input sequence <math>X^0= [x_1, \dots, x_{|x|}] \in \mathbb R^{|x| \times d_{model}}</math>, the model computes the output <math>X^L</math> as:

<math>Y^l = MSR(LN(X^l)) + X^l</math>

<math>X^{l+1} = FFN(LN(Y^l)) + Y^l</math>

where <math>LN(\cdot)</math> is LayerNorm.

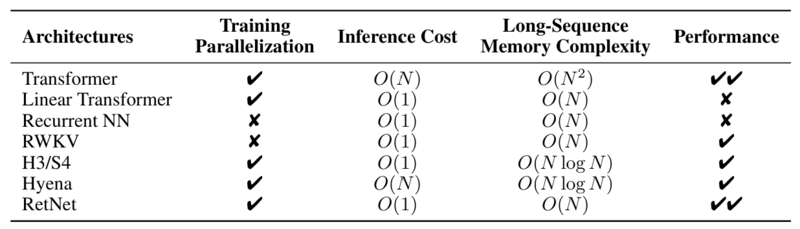

[[File:retnet_comparison.png|thumb|800px|Table 1: Model Comparison]]

====Comparison, Limitations & Future Work====

Finally, comparing RetNet with other solutions (Table 1), we can see that it provides a solid alternative to the Transformer, achieving parallel training, fast inference, linear long-sequence memory complexity, and good performance.

The main limitation of this architecture is:

* Limited cross-modal exploration: the method is primarily validated on language modeling tasks. Its applicability to other modalities (e.g., vision, audio) remains unexplored, and further research is required for broader adoption.

On the positive side, RetNet achieves better GPU utilization than standard Transformers during training thanks to its parallel retention mechanism. In practice, it allows training with significantly fewer memory bottlenecks, which makes it well suited to scaling to longer sequences or deeper models.

=== Memory-Recall Tradeoff ===

A fundamental tradeoff exists between the size of a model's recurrent state and its ability to recall past tokens accurately. Some architectures, like BASED, combine linear attention with a sliding window of exact softmax attention to navigate this tradeoff. By adjusting hyperparameters such as the window size and feature dimension, these models can traverse the Pareto frontier, achieving high recall with a small memory footprint while still benefiting from high throughput.

The BASED paper focuses on the task of associative recall and on the memory-recall tradeoff: higher recall requires higher memory consumption, as shown in Figure 3. This tradeoff manifests differently across model architectures:

* Vanilla attention models: achieve excellent recall, but at the cost of quadratic computational complexity.

* Linear attention models: offer better efficiency but often struggle with accurate information retrieval.

* State-space models like Mamba: increasing the recurrent state size generally improves recall accuracy but introduces computational overhead.

[[File:memory-recall-tradeoff.png|thumb|300px|Figure 3: Empirical performance showing the memory-recall tradeoff]]

=== Implementations ===

The authors introduced the Based architecture to improve this tradeoff by stacking linear attention blocks with sliding window attention blocks. Recall that one limitation of linear attention is its relatively weak performance compared to vanilla attention. With this design, the authors accept a compromise: linear attention models the global dependencies, while sliding window attention preserves high accuracy on local dependencies within the window. As seen in Figure 4, they achieve this goal.

==== Chunking Strategy ====

Chunking splits the input sequence <math>X</math> into chunks of length <math>B</math>. For each chunk <math>i</math>, let

<math>Q_{[i]} = Q_{B i : B(i+1)}, \quad K_{[i]} = K_{B i : B(i+1)}, \quad V_{[i]} = V_{B i : B(i+1)}</math>

be the query, key, and value matrices (respectively) for that chunk. We maintain a recurrent “global” state <math>R_i</math> that summarizes information from all previous chunks and combine it with a “local” sliding-window attention over the current chunk.

==== Global Linear Attention (Recurrent “State”) ====

We use a feature map <math>\Phi(\cdot)</math> (e.g., a kernel or ELU-based mapping) to make attention linear in the sequence length. We update a global state <math>R_i</math> for each chunk:

<math>R_i = R_{i-1} + \Phi\bigl(K_{[i]}\bigr)^\top V_{[i]},</math>

and compute the global attention contribution for the <math>i</math>-th chunk:

<math>\mathrm{GlobalAttn}(X_{[i]}) = \Phi\bigl(Q_{[i]}\bigr)\, R_{i-1}.</math>

Intuitively, <math>R_{i-1}</math> aggregates (in linear time) all key-value information from past chunks.

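==== Taylor Linear Attention ====

The Based architecture specifically employs a second-order Taylor series expansion of the exponential as the feature map for linear attention. This approximation offers a good balance between computational efficiency and performance:

<math>\Phi(x) = \begin{bmatrix} 1 \\ x \\ \frac{x \otimes x}{\sqrt{2}} \end{bmatrix}, \qquad \Phi(q)^\top \Phi(k) = 1 + q^\top k + \frac{(q^\top k)^2}{2} \approx \exp(q^\top k)</math>

Here <math>x \otimes x</math> is the flattened outer product, so the feature dimension grows from <math>d</math> to <math>1 + d + d^2</math>. This feature map provides a reasonable approximation of the softmax kernel while maintaining the linear complexity benefits.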
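A quick numerical check of the second-order Taylor map, assuming its vector form <math>\Phi(x) = [1, x, \mathrm{vec}(x \otimes x)/\sqrt{2}]</math>: the dot product of two feature vectors equals <math>1 + q^\top k + (q^\top k)^2/2</math>, which is close to <math>\exp(q^\top k)</math> when <math>q^\top k</math> is small. The helper names are hypothetical.

```python
import math


def taylor_feature_map(x):
    """phi(x) = [1, x, flatten(x ⊗ x) / sqrt(2)], of length 1 + d + d*d."""
    second_order = [a * b / math.sqrt(2.0) for a in x for b in x]
    return [1.0] + list(x) + second_order


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q, k = [0.1, -0.2, 0.3], [0.05, 0.4, -0.1]
s = dot(q, k)  # about -0.105
print(dot(taylor_feature_map(q), taylor_feature_map(k)))  # equals 1 + s + s**2 / 2
print(math.exp(s))  # the softmax kernel value it approximates
```

Since <math>1 + s + s^2/2</math> has no real roots, the approximated kernel is always positive, so the resulting attention weights never change sign.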

==== Local Sliding-Window Attention (Exact) ====

Within the current chunk, we also apply standard (exact) attention to a small window of recent tokens. Denote the set of token indices in this window by <math>\mathcal{W}_i</math>. Then:

<math>\mathrm{LocalAttn}(X_{[i]}) = \sum_{j \in \mathcal{W}_i} \mathrm{softmax}_j\Bigl(\frac{Q_{[i]} K_j^\top}{\sqrt{d}}\Bigr) V_j</math>

This term captures fine-grained, short-range dependencies at full (exact) attention fidelity, but only over the small neighborhood <math>\mathcal{W}_i</math>, over which the softmax is normalized.

==== Final Output per Chunk ====

The total representation for chunk <math>i</math> is a sum of global (linear) and local (windowed) attention:

<math>h_{[i]} = \mathrm{GlobalAttn}(X_{[i]}) + \mathrm{LocalAttn}(X_{[i]})</math>

By combining a linear-time global update (<math>R_i</math>) with high-resolution local attention, the model balances throughput (via the efficient global linear component) and recall (via exact local attention). This addresses the memory-recall tradeoff: large contexts are captured without quadratically scaling memory usage, while local windows preserve high accuracy on short-range dependencies.
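The per-chunk equations can be combined into a small single-head sketch. It follows the formulas in this section literally (Taylor feature map, no normalization of the global term, causal window clipped to the current chunk) and is not the BASED reference implementation; <code>based_style_layer</code> and its <code>chunk</code>/<code>window</code> parameters are hypothetical. In this simplified form, tokens of the current chunk that fall outside the window are seen by neither term.

```python
import math


def taylor_phi(x):
    """Second-order Taylor feature map [1, x, flatten(x ⊗ x) / sqrt(2)]."""
    return [1.0] + list(x) + [a * b / math.sqrt(2.0) for a in x for b in x]


def based_style_layer(Q, K, V, chunk, window):
    """h = GlobalAttn (linear, earlier chunks) + LocalAttn (exact softmax, sliding window)."""
    T, dv = len(Q), len(V[0])
    n_feat = len(taylor_phi(K[0]))
    R = [[0.0] * dv for _ in range(n_feat)]  # R_{i-1}: sum of Phi(k)^T v over earlier chunks
    out = []
    for start in range(0, T, chunk):
        end = min(start + chunk, T)
        for t in range(start, end):
            fq = taylor_phi(Q[t])
            glob = [sum(fq[a] * R[a][c] for a in range(n_feat)) for c in range(dv)]
            lo = max(start, t - window + 1)  # causal window, kept inside the current chunk
            s = [sum(x * y for x, y in zip(Q[t], K[j])) / math.sqrt(len(Q[t]))
                 for j in range(lo, t + 1)]
            m = max(s)
            w = [math.exp(x - m) for x in s]
            z = sum(w)
            loc = [sum(w[i] * V[lo + i][c] for i in range(len(w))) / z for c in range(dv)]
            out.append([g + lc for g, lc in zip(glob, loc)])
        for j in range(start, end):  # R_i = R_{i-1} + Phi(K_[i])^T V_[i]
            fk = taylor_phi(K[j])
            for a in range(n_feat):
                for c in range(dv):
                    R[a][c] += fk[a] * V[j][c]
    return out


Z = [[0.0, 0.0]] * 4  # all-zero queries/keys: Phi(q)^T Phi(k) = 1 for every pair
V = [[1.0], [2.0], [3.0], [4.0]]
print(based_style_layer(Z, Z, V, 2, 1))  # [[1.0], [2.0], [6.0], [7.0]]
```

In the toy run, the first chunk sees no global context, while each token of the second chunk adds the summed values of the first chunk (1 + 2 = 3) to its own value from the window.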

=== Hardware Optimizations ===

Recent work has shown that linear attention can be made even more practical when its implementation is tailored to the underlying hardware. For example, methods like '''FLASHLINEARATTENTION''' integrate I/O-aware techniques to minimize data movement between high-bandwidth memory and faster on-chip memories, resulting in real-world speedups that can even outperform optimized softmax attention implementations on moderate sequence lengths.

The Based Architecture includes several hardware-aware optimizations:

# Memory-efficient linear attention: The implementation fuses the feature map and causal dot product computation in fast memory, reducing high-latency memory operations.

# Optimized sliding window: The window size is carefully selected to align with hardware constraints (typically 64×64 tiles), balancing computation and memory bandwidth.

# Register-level computation: Critical calculations are performed in registers whenever possible, minimizing data movement between different memory hierarchies.

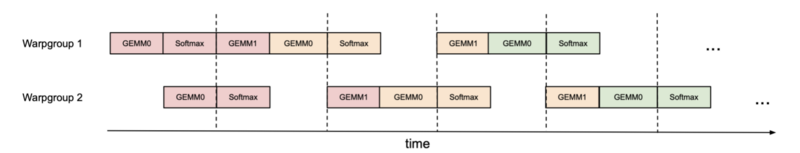

==== FLASHLINEARATTENTION: Hardware-Efficient Linear Attention for Fast Training and Inference ====

FLASHLINEARATTENTION is an I/O-aware, hardware-efficient implementation of linear attention that carefully manages data movement between on-chip shared memory (SRAM) and high-bandwidth memory (HBM). Its goals are to alleviate memory bottlenecks, maximize GPU parallelism, and accelerate training and inference. The method significantly outperforms even FLASHATTENTION-2 at moderate sequence lengths (~1K tokens). Standard softmax self-attention is quadratic in both computation and memory, which makes it inefficient for long sequences and limits scalability. Linear attention reduces this complexity, but most existing implementations are not optimized for modern GPUs and do not deliver real-world speed improvements. FLASHLINEARATTENTION addresses this by splitting the input sequence into more manageable chunks whose internal computation can be performed independently before the global state is updated. This avoids redundant memory access, reduces the latency of GPU operations, and keeps the tensor cores in effective use.

Mathematically, FLASHLINEARATTENTION builds upon linear attention, which starts from standard softmax attention:

<math>\text{Attention}(Q, K, V) = \text{softmax} \left(\frac{QK^T}{\sqrt{d}} \right) V</math>

Instead of explicitly computing the full <math>n \times n</math> attention matrix, linear attention approximates it using a kernel feature map <math>\phi(x)</math> such that:

<math>\text{Attention}(Q, K, V) \approx \frac{\phi(Q)\,\bigl(\phi(K)^T V\bigr)}{\phi(Q)\,\bigl(\phi(K)^T \mathbf{1}\bigr)}</math>

where the division is applied row-wise, <math>\mathbf{1}</math> is the all-ones vector, and <math>\phi(x)</math> is chosen so that the inner product <math>\phi(q)^T \phi(k)</math> approximates the softmax kernel. In the standard parallel form of causal linear attention (with <math>\phi</math> absorbed into <math>Q</math> and <math>K</math> and the normalizer dropped), the output is computed as:

<math>O = (Q K^T \odot M) V</math>

with <math>M</math> the causal mask. This form still has quadratic complexity <math>O(n^2 d)</math>. FLASHLINEARATTENTION instead splits the input sequence into chunks of size <math>C</math> and processes them while maintaining a hidden state <math>S</math>, where <math>S[i]</math> summarizes the first <math>i</math> chunks. The hidden state follows a recurrent update:

<math>S[i+1] = S[i] + \sum_{j=iC+1}^{(i+1)C} K_j^T V_j = S[i] + K[i+1]^T V[i+1]</math>

<math>O[i+1] = Q[i+1] S[i] + (Q[i+1] K[i+1]^T \odot M) V[i+1]</math>

Here, <math>M</math> is a causal mask ensuring that each token attends only to itself and earlier tokens. Chunkwise computation, which limits memory overhead by processing the sequence in smaller chunks, is the most crucial optimization in FLASHLINEARATTENTION: the intra-chunk terms of different chunks are independent and can be computed in parallel. Minimizing HBM I/O is another key optimization, avoiding unnecessary data transfers between HBM and SRAM by reusing tensors already loaded on chip: once <math>Q[n]</math> is in SRAM, both <math>Q[n]S</math> and <math>(Q[n]K[n]^T \odot M)V[n]</math> are computed without reloading <math>Q[n]</math>. FLASHLINEARATTENTION has two implementations. In the non-materialization version, the hidden states <math>S[i]</math> are kept in SRAM for memory-efficient computation. In the materialization version, all <math>S[i]</math> are stored in HBM to enable full sequence-level parallelism; this version is described as slightly slower but boosts training throughput by 10-20%. In both versions, the state recurrence is sequential across chunks while the work inside each chunk is parallel, which keeps memory usage manageable and makes the algorithm suitable for long sequences.

The FLASHLINEARATTENTION forward pass algorithm goes as follows. For input matrices <math>Q</math>, <math>K</math>, and <math>V</math> of size <math>L \times d</math> and chunk size <math>C</math>, the sequence is divided into <math>N = L / C</math> blocks:

<math>Q = \{ Q[1], Q[2], ..., Q[N] \}, \quad K = \{ K[1], K[2], ..., K[N] \}, \quad V = \{ V[1], V[2], ..., V[N] \}</math>

The hidden state is initialized as <math>S = 0</math> in SRAM. For each chunk, the current <math>S</math> (the summary of all earlier chunks) is stored in HBM if materialization is enabled, and the corresponding <math>K[n], V[n]</math> are loaded into SRAM. The hidden state is then updated as:

<math>S = S + K[n]^T V[n]</math>

and the output for each chunk is computed in parallel as:

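<math>O[n] = Q[n]\, S[n-1] + (Q[n] K[n]^T \odot M)\, V[n]</math>

where <math>S[n-1]</math> is the value of <math>S</math> before chunk <math>n</math> is added (with <math>S[0] = 0</math>), so the first term covers all earlier chunks and the masked second term covers the current chunk.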
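The chunkwise forward pass can be sketched in plain Python and checked against the quadratic causal form <math>O = (QK^T \odot M)V</math>, where <math>M</math> is the causal mask. This is only a numerical illustration of the recurrence (no SRAM/HBM management, no tensor cores, no feature map or normalizer); the function names are hypothetical.

```python
def causal_linear_attention_parallel(Q, K, V):
    """Reference O = (Q K^T ⊙ M) V with a causal mask M: O(L^2 d) work."""
    return [[sum(sum(a * b for a, b in zip(Q[i], K[j])) * V[j][c] for j in range(i + 1))
             for c in range(len(V[0]))] for i in range(len(Q))]


def causal_linear_attention_chunked(Q, K, V, C):
    """Chunkwise form: O[n] = Q[n] S[n-1] + (Q[n] K[n]^T ⊙ M) V[n], then S += K[n]^T V[n]."""
    d, dv = len(Q[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]  # S[n-1]: summary of all earlier chunks, S[0] = 0
    O = []
    for start in range(0, len(Q), C):
        q, k, v = Q[start:start + C], K[start:start + C], V[start:start + C]
        for r in range(len(q)):
            inter = [sum(q[r][a] * S[a][c] for a in range(d)) for c in range(dv)]
            intra = [sum(sum(x * y for x, y in zip(q[r], k[j])) * v[j][c] for j in range(r + 1))
                     for c in range(dv)]  # causal mask inside the chunk: j <= r
            O.append([x + y for x, y in zip(inter, intra)])
        # Update the state only after this chunk's outputs are computed.
        for j in range(len(k)):
            for a in range(d):
                for c in range(dv):
                    S[a][c] += k[j][a] * v[j][c]
    return O


Q = [[0.5, -1.0], [0.2, 0.3], [-0.4, 0.1], [0.9, 0.0], [0.1, 0.1]]
K = [[1.0, 0.0], [0.3, -0.2], [0.0, 0.7], [-0.5, 0.4], [0.2, 0.2]]
V = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0], [0.2, 0.2], [-1.0, 0.5]]
diff = max(abs(a - b) for ra, rb in zip(causal_linear_attention_chunked(Q, K, V, 2),
                                        causal_linear_attention_parallel(Q, K, V)) for a, b in zip(ra, rb))
print(diff)  # floating-point noise only
```

Updating <math>S</math> after the chunk's outputs are formed is what keeps the inter-chunk term strictly causal; updating first would double-count the current chunk and leak future tokens within it.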

The outputs are then written back to HBM. The algorithm balances memory usage against parallelization so that training is faster than with previous linear attention implementations. FLASHLINEARATTENTION offers significant performance gains over several other attention implementations: it is reported to be faster than FLASHATTENTION-2 on sequences shorter than 4K tokens and to double training speed compared with standard linear attention. Memory efficiency improves through reduced HBM I/O and the removal of redundant data movement, with 4x less memory usage than baseline softmax attention. It also scales to sequences longer than 20K tokens without quadratic memory growth. These improvements make FLASHLINEARATTENTION a solid tool for large-scale transformers: integrating it into traditional transformer designs can significantly accelerate large language models and make large-scale deployment more viable. Future work can explore deeper kernel optimizations, CUDA-specific workloads, and hardware-specific transformations to further enhance efficiency. In short, by optimizing memory access, chunking input sequences, and parallelizing intra-chunk computation, FLASHLINEARATTENTION achieves significant speedups in the efficient processing of long sequences.

===Empirical Results=== | |||

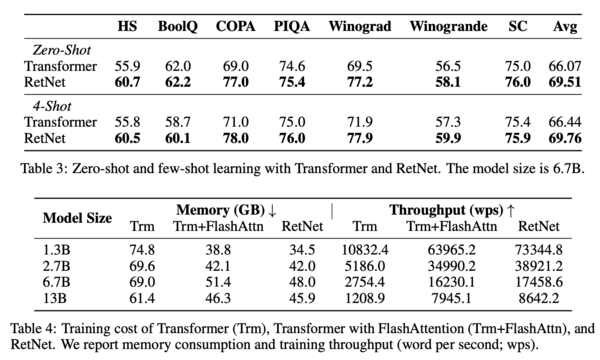

[[File:RetNet_Results.png|600px]] | |||

In Table 3, comparison was made between Transformer and RetNet on a variety of downstream tasks (i.e., HS, BoolQ, etc). In both zero-shot and 4-shot learning, RetNet achieved a higher accuracy on all tasks listed. In Table 4, the authors compared the training speed and memory usage of Transformer, Transformer with FlashAttention and RetNet, the training sequence length is fixed at 8192 tokens. Results show that RetNet consumes less memory while achieving a higher throughput than both Transformer and Transformer with FlashAttention. Recall that in FlashAttention the technique of kernel fusion was applied while here RetNet was implemented naively. Therefore, there's potential for improvements upon the current results, which already exceeds the other two models. | |||

=== Limitations ===

While the Based Architecture presents a promising direction, it still faces some challenges:

# Implementation complexity: The dual-attention mechanism and hardware optimizations add complexity to the implementation.

# Hyperparameter sensitivity: The optimal balance between global and local attention components may vary across different tasks and datasets.

# Performance gap: Despite improvements, there remains a gap between Based models and state-of-the-art vanilla attention models on some specific tasks.

== | == Gated Linear Attention (GLA) == | ||

RetNet shows that we can achieve parallel training, efficient inference, and strong performance. Despite these innovations, however, it still has significant limitations. The main problem is how RetNet weighs past tokens: it uses a constant decay factor (<math>\gamma</math>) that does not adapt to the content being processed. Imagine listening to a conversation in which every word fades from memory at the same fixed rate, no matter how significant it is. That is what RetNet's constant exponential decay does: it treats all sequential dependencies the same way, regardless of whether the current content calls for more emphasis on recent tokens or on tokens much farther back. This works, but not necessarily optimally.

Furthermore, while RetNet is efficient in theory, it is less effective in practice on real hardware. Its algorithms are not I/O-aware, so they do not account for how data moves between the levels of memory in modern GPUs, which leads to suboptimal performance. Gated Linear Attention (GLA) advances linear attention by addressing these key limitations.

The key new idea in GLA is the use of data-dependent gates. Unlike RetNet, which uses a fixed decay factor, these gates adjust dynamically to the content being processed, which makes the model more expressive. A gate acts like an intelligent filter that decides, at each position in a sequence, how much information should pass through depending on its relevance. For example, when processing the phrase "Prime Minister" in "The Prime Minister of Canada," the model might focus more on "Canada" than on "The". RetNet, with its fixed decay, cannot make this distinction. GLA introduces a gating mechanism in which the output is given by:

<math>\text{Output} = \text{LinearAttention} \odot \text{ContentGate}</math>

The ContentGate is computed directly from the input itself, and this modification enhances the model's ability to capture complex dependencies in text.
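For contrast with a fixed decay, the sketch below uses a data-dependent gate instead. It is a simplified single-head illustration under our own assumptions: the per-dimension forget gate <code>alpha_t = sigmoid(x_t W_alpha)</code> and the output content gate <code>sigmoid(x_t W_g)</code> use hypothetical single projections <code>W_alpha</code> and <code>W_g</code>, and the exact parameterization in the GLA paper differs in its details. Because <code>alpha_t</code> is computed from <code>x_t</code>, each token decides how much of the accumulated state to keep.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gated_linear_attention(x, W_q, W_k, W_v, W_alpha, W_g):
    """Recurrent gated linear attention (simplified, single head).

    x: (T, d_model); W_q, W_k, W_alpha: (d_model, d_k); W_v, W_g: (d_model, d_v).
    W_alpha and W_g are hypothetical gate projections for illustration.
    """
    q, k, v = x @ W_q, x @ W_k, x @ W_v
    alpha = sigmoid(x @ W_alpha)   # (T, d_k): forget gate in (0, 1), depends on x_t
    gate = sigmoid(x @ W_g)        # (T, d_v): output content gate
    S = np.zeros((W_k.shape[1], W_v.shape[1]))
    out = np.zeros((x.shape[0], W_v.shape[1]))
    for t in range(x.shape[0]):
        # Each key dimension of the state decays by a token-specific amount,
        # instead of a single constant gamma.
        S = alpha[t][:, None] * S + np.outer(k[t], v[t])
        out[t] = q[t] @ S
    return out * gate              # LinearAttention ⊙ ContentGate


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((6, 5))
    W_q, W_k, W_alpha = (rng.standard_normal((5, 3)) for _ in range(3))
    W_v, W_g = (rng.standard_normal((5, 4)) for _ in range(2))
    print(gated_linear_attention(x, W_q, W_k, W_v, W_alpha, W_g).shape)
```

If <code>W_alpha</code> is all zeros, every gate value is 0.5 and the layer reduces to a fixed decay of 0.5, which shows that the fixed-decay scheme is a special case of the gated one.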

The other important feature of GLA is its '''FLASHLINEARATTENTION''' algorithm, which is designed specifically for modern GPU architectures. Unlike purely theoretical improvements, this optimization delivers real-world speedups, outperforming even highly optimized methods such as '''FLASHATTENTION-2'''. What sets '''FLASHLINEARATTENTION''' apart is that it is I/O-aware, meaning it efficiently manages data movement between:

* High-bandwidth memory (HBM): Large but slower GPU memory.
* Shared memory (SRAM): Smaller but significantly faster memory.

By minimizing unnecessary data transfers and using specialized compute units (such as tensor cores), the method achieves high speed and efficiency. In benchmarks, it is faster than FLASHATTENTION-2 even on relatively short sequences of just 1,000 tokens.

FLASHLINEARATTENTION improves efficiency through chunkwise processing. Instead of processing the entire sequence at once, it divides the sequence into smaller chunks. Within each chunk, computations run in parallel to maximize GPU utilization; information is then passed between chunks recurrently to maintain dependencies. This balances speed and memory efficiency, making it faster than standard softmax attention and even optimized methods like FLASHATTENTION-2.
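The chunkwise idea can be checked numerically. The NumPy sketch below covers plain (ungated) causal linear attention only, assuming no feature map or normalization; it illustrates the chunk-level arithmetic, not the I/O-aware GPU kernel of FLASHLINEARATTENTION, which additionally handles tiling between HBM and SRAM and, for GLA, the gates. The function names are illustrative. Within a chunk the work is a small masked matrix product; across chunks a <code>(d_k, d_v)</code> state carries the history, and the result matches the full quadratic form.

```python
import numpy as np


def linear_attention_quadratic(q, k, v):
    """Reference: causal linear attention in parallel (quadratic) form."""
    T = q.shape[0]
    mask = np.tril(np.ones((T, T)))
    return ((q @ k.T) * mask) @ v


def linear_attention_chunkwise(q, k, v, chunk_size):
    """Chunkwise form: parallel inside each chunk, recurrent across chunks."""
    T, d_k = q.shape
    d_v = v.shape[1]
    S = np.zeros((d_k, d_v))  # running sum of k_j^T v_j over all earlier chunks
    out = np.zeros((T, d_v))
    for start in range(0, T, chunk_size):
        end = min(start + chunk_size, T)
        qc, kc, vc = q[start:end], k[start:end], v[start:end]
        n = end - start
        intra = ((qc @ kc.T) * np.tril(np.ones((n, n)))) @ vc  # within-chunk, parallel
        inter = qc @ S                                         # contribution of past chunks
        out[start:end] = intra + inter
        S = S + kc.T @ vc                                      # pass the state to the next chunk
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k, v = (rng.standard_normal((10, 4)) for _ in range(3))
    print(np.allclose(linear_attention_chunkwise(q, k, v, 4),
                      linear_attention_quadratic(q, k, v)))
```

A chunk size of 1 recovers the purely recurrent form and a chunk size of <code>T</code> recovers the quadratic form; intermediate sizes trade parallelism inside chunks against the number of sequential state updates.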

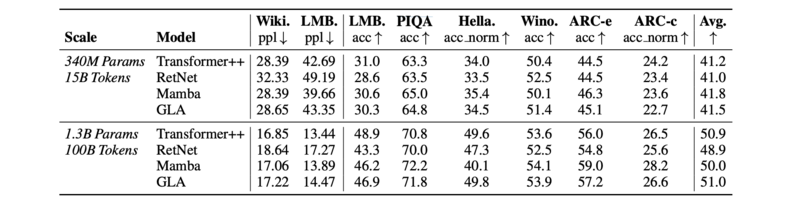

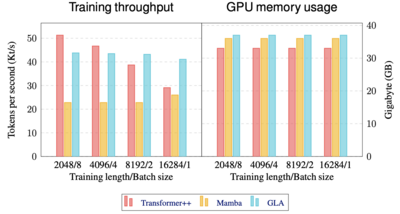

===Empirical Results===

[[File:GLA_Results.png|800px]]

The table above compares the GLA Transformer against Transformer++, RetNet, and Mamba at two model scales, on the same set of language tasks. Individual task performance is measured zero-shot. GLA outperforms subquadratic models such as RetNet on all tasks and achieves performance comparable to quadratic models such as Transformer++.

[[File:GLA_Results2.png|400px]]

In addition to its performance on various language tasks, GLA also achieves higher throughput and lower memory consumption, especially on long input sequences.

===Limitations & Future Work===

* Lack of Large-Scale Validation
** Although the authors anticipate that GLA's training efficiency will become even more favorable relative to models like Mamba at larger scales, it is unclear how GLA would scale to even larger models and datasets.
** The experiments were conducted on moderate-scale language models (up to 1.3B parameters). The performance and efficiency of GLA at larger scales (e.g., >7B parameters) remain uncertain, particularly regarding tensor parallelism and memory constraints.
* Performance Gap in Recall-Intensive Tasks
** While GLA outperforms other subquadratic models on recall-intensive tasks, it still lags behind standard quadratic-attention Transformers, indicating room for improvement in tasks requiring precise memory retrieval.

==TransNormerLLM: A Faster and Better Large Language Model with Improved TransNormer==

The objective of the paper is to address the quadratic time complexity and scalability limitations of conventional Transformer-based LLMs by introducing a linear attention-based architecture with improved efficiency and performance. Modern linear attention models like '''TransNormerLLM''' push the envelope by integrating several modifications, including improved positional encodings (LRPE with exponential decay), gating mechanisms, and tensor normalization, to not only match but even outperform conventional Transformer architectures in both accuracy and efficiency. These innovations address the limitations of earlier linear attention approaches and demonstrate the potential of linear methods in large-scale language modeling.

=== What is TransNormer? ===

'''TransNormer''' is a linear Transformer model introduced in 2022 at the Conference on Empirical Methods in Natural Language Processing (EMNLP). It tackles two primary challenges: unbounded gradients in attention computation, which can destabilize training, and attention dilution, where attention over long sequences spreads out and loses focus on local token interactions. To mitigate these, TransNormer replaces the traditional scaling operation with normalization to control gradients, and employs diagonal attention in early layers to prioritize neighboring tokens. These innovations improve performance on tasks such as text classification, language modeling, and the Long-Range Arena benchmark, while achieving significant space-time efficiency gains over softmax attention-based Transformers.

The original TransNormer model faced several limitations that restricted its versatility:

* '''Scalability Constraints''': TransNormer was primarily tested on small-scale tasks like text classification and the Long-Range Arena benchmark, with limited evidence of its performance on large-scale language modeling. This restricted its applicability to modern large language models, which require extensive training data and many parameters.
* '''Complexity in Optimization''': Despite stabilizing gradients, the model's normalization techniques increased computational overhead in certain configurations, complicating optimization in resource-constrained environments.
* '''Attention Dilution Trade-offs''': While diagonal attention improved the focus on local context, it potentially limited the model's ability to capture long-range dependencies in complex sequences, affecting performance on tasks requiring global context.
* '''Benchmark Scope''': The evaluation was confined to specific datasets and lacked comprehensive testing on diverse, real-world applications, which raised questions about its generalizability.

The authors address all of these limitations in TransNormerLLM.

===Key Contributions===

<ul>

<li>Architectural Improvements</li>

<ol>

<li>Positional Encoding: <br>

Combines '''LRPE (Linearized Relative Positional Encoding)''' with exponential decay to balance global interactions and avoid attention dilution. The resulting attention score is:<br>

<math>a_{st}=\mathbf{q}_s^\top\mathbf{k}_t\lambda^{s-t}\exp(i\theta(s-t)),</math><br>

where <math>a_{st}</math> is the attention score between tokens <math>s</math> and <math>t</math>, <math>\lambda^{s-t}</math> is the exponential decay factor, and <math>\exp(i\theta(s-t))</math> encodes their relative position.</li>

<li>Gating Mechanisms:<br>

Uses '''Gated Linear Attention (GLA)''' and '''Simple Gated Linear Units (SGLU)''' to stabilize training and enhance performance.<br>

Gates can enhance model performance and smooth the training process. The structure of Gated Linear Attention (GLA) is:<br>

<math>O=\mathrm{Norm}(QK^{\top}V)\odot U,</math><br>

where: <math>Q=\phi(XW_q),\quad K=\phi(XW_k),\quad V=XW_v,\quad U=XW_u,</math> and <math>\phi</math> is the kernel (feature) function.<br>

To further accelerate the model, the authors propose Simple GLU (SGLU), which removes the activation function from the original GLU structure, since the gate itself already introduces non-linearity. The channel mixing therefore becomes:<br>

<math>O=[V\odot U]W_o,</math><br>

where: <math>V=XW_v,\quad U=XW_u.</math><br>

</li>

<li>Tensor Normalization: <br>

Replaces '''RMSNorm''' with '''SRMSNorm''', a simpler normalization method that accelerates training without performance loss. The new normalization function, SimpleRMSNorm (SRMSNorm), is defined as:<br>

<math>\mathrm{SRMSNorm}(x)=\frac{x}{\|x\|_2/\sqrt{d}}</math><br>

where <math>d</math> is the dimension of <math>x</math>.

</li>

</ol>

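<li>Training Optimization</li>

Model Parallelism: <br>

Adapts Megatron-LM model parallelism to SGLU and GLA, enabling efficient scaling from 7B to 175B parameters. As in Megatron-LM, the input projections (<math>W_v</math>, <math>W_u</math>) are split by columns and the output projection <math>W_o</math> by rows, so each device computes its partial output independently and the partial results are then summed.<br>

'''Model Parallelism on SGLU:'''<br>

<math>O=\left((XW_v)\odot(XW_u)\right)W_o</math><br>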

<math>\begin{bmatrix} O_1^{\prime} & O_2^{\prime} \end{bmatrix} = X\begin{bmatrix} W_v^1 & W_v^2 \end{bmatrix} \odot X\begin{bmatrix} W_u^1 & W_u^2 \end{bmatrix} = \begin{bmatrix} XW_v^1 & XW_v^2 \end{bmatrix} \odot \begin{bmatrix} XW_u^1 & XW_u^2 \end{bmatrix}</math><br>

<math>O = \begin{bmatrix} O_1^{\prime} & O_2^{\prime} \end{bmatrix} \begin{bmatrix} W_o^1 \\ W_o^2 \end{bmatrix} = O_1^{\prime}W_o^1 + O_2^{\prime}W_o^2</math><br>

'''Model Parallelism on GLA:'''<br>

<math>[O_1,O_2]=\mathrm{SRMSNorm}(QK^\top V)\odot U,</math><br>

where: <math>Q = [\phi(X W_q^1), \phi(X W_q^2)],\ K = [\phi(X W_k^1), \phi(X W_k^2)],\ V = X[W_v^1, W_v^2],\ U = X[W_u^1, W_u^2].</math><br>

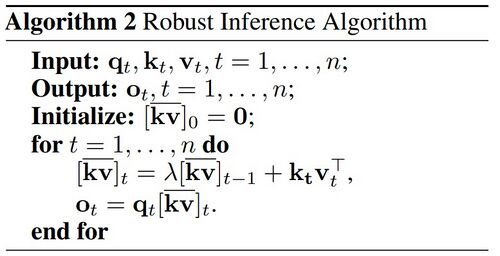

<li>Robust Inference</li>

A robust inference algorithm proposed in the paper ensures numerical stability and constant inference speed regardless of sequence length via a recurrent formulation with decay factors.<br>

[[File:RobustAlgorithm.jpg|500px|Robust Inference Algorithm]]

</ul>
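The architectural formulas above can be sketched in NumPy. This is a non-causal, single-head illustration written directly from the equations in this section, with several assumptions of our own: <math>\phi</math> is taken to be SiLU, a small <code>eps</code> is added to SRMSNorm for numerical safety, the function names are illustrative, and the two-way split stands in for Megatron-LM's multi-device execution (the final sum plays the role of the all-reduce). Computing <code>Q @ (K.T @ V)</code> instead of <code>(Q @ K.T) @ V</code> is what keeps the token mixer linear in sequence length.

```python
import numpy as np


def srms_norm(x, eps=1e-6):
    """SRMSNorm(x) = x / (||x||_2 / sqrt(d)), row-wise; eps is an added assumption."""
    d = x.shape[-1]
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) / np.sqrt(d) + eps)


def silu(z):
    return z / (1.0 + np.exp(-z))


def gla_token_mixer(X, W_q, W_k, W_v, W_u, phi=silu):
    """O = SRMSNorm(Q K^T V) ⊙ U, evaluated as Q (K^T V) so the cost is linear in T."""
    Q, K = phi(X @ W_q), phi(X @ W_k)
    V, U = X @ W_v, X @ W_u
    return srms_norm(Q @ (K.T @ V)) * U


def sglu(X, W_v, W_u, W_o):
    """Simple GLU channel mixer: O = [(X W_v) ⊙ (X W_u)] W_o, with no activation."""
    return ((X @ W_v) * (X @ W_u)) @ W_o


def sglu_two_way_parallel(X, W_v, W_u, W_o):
    """Megatron-style split: W_v, W_u by columns, W_o by rows (two simulated devices)."""
    h = W_v.shape[1] // 2
    partials = []
    for cols in (slice(0, h), slice(h, None)):
        O_prime = (X @ W_v[:, cols]) * (X @ W_u[:, cols])  # O'_i, computed on device i
        partials.append(O_prime @ W_o[cols, :])           # O'_i W_o^i
    return partials[0] + partials[1]                       # stands in for the all-reduce


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.standard_normal((7, 6))
    W_v, W_u, W_o = rng.standard_normal((6, 8)), rng.standard_normal((6, 8)), rng.standard_normal((8, 6))
    print(np.allclose(sglu(X, W_v, W_u, W_o), sglu_two_way_parallel(X, W_v, W_u, W_o)))
```

Because the element-wise product in SGLU acts column by column, splitting <math>W_v</math> and <math>W_u</math> by columns needs no communication until the final sum over the row blocks of <math>W_o</math>.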
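The Robust Inference item above can be motivated with a small numerical experiment. Taking <math>\theta = 0</math> in the LRPE formula leaves the decay <math>\lambda^{s-t}</math>. A tempting way to compute it is to factor <math>\lambda^{s-t} = \lambda^{s}\lambda^{-t}</math> into the queries and keys, but <math>\lambda^{-t}</math> overflows on long sequences, whereas a recurrent state update only ever multiplies by <math>\lambda \le 1</math>. This is a sketch under our own assumptions (single head, no normalization, float64, illustrative function names); it shows the kind of instability a recurrent formulation avoids and is not the paper's algorithm verbatim.

```python
import numpy as np


def decayed_attention_parallel(q, k, v, lam):
    """o_s = sum_{t<=s} lam**(s-t) (q_s . k_t) v_t, i.e. LRPE with theta = 0."""
    T = q.shape[0]
    s, t = np.arange(T)[:, None], np.arange(T)[None, :]
    decay = np.where(s >= t, lam ** np.maximum(s - t, 0), 0.0)
    return ((q @ k.T) * decay) @ v


def decayed_attention_naive_factorized(q, k, v, lam):
    """Splits lam**(s-t) into lam**s * lam**(-t); lam**(-t) can overflow."""
    T = q.shape[0]
    idx = np.arange(T, dtype=np.float64)
    q_scaled = q * (lam ** idx)[:, None]
    k_scaled = k * (lam ** -idx)[:, None]
    mask = np.tril(np.ones((T, T)))
    return ((q_scaled @ k_scaled.T) * mask) @ v


def decayed_attention_recurrent(q, k, v, lam):
    """kv_s = lam * kv_{s-1} + k_s^T v_s, o_s = q_s kv_s; only powers <= 1 appear."""
    kv = np.zeros((q.shape[1], v.shape[1]))
    out = np.zeros((q.shape[0], v.shape[1]))
    for s in range(q.shape[0]):
        kv = lam * kv + np.outer(k[s], v[s])
        out[s] = q[s] @ kv
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q, k, v = (rng.standard_normal((1200, 4)) for _ in range(3))
    with np.errstate(over="ignore", invalid="ignore", under="ignore"):
        naive = decayed_attention_naive_factorized(q, k, v, 0.5)
    print("naive finite:", np.all(np.isfinite(naive)))
    print("recurrent finite:", np.all(np.isfinite(decayed_attention_recurrent(q, k, v, 0.5))))
```

On short sequences all three functions agree; the difference only appears once <math>\lambda^{-t}</math> exceeds the floating-point range, which is why a per-token recurrent update also keeps the inference cost per step constant.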

=== Training ===

TransNormerLLM has been trained at sizes ranging from 385 million to 7 billion parameters, with a 15-billion-parameter version under development. The training corpus includes 1.0 trillion tokens for the 385M model, 1.2 trillion for the 1B model, and 1.4 trillion for the 7B model, ensuring broad data exposure for diverse language tasks. Training runs on large-scale clusters, enabling efficient scaling across multiple devices.

=== Results ===

TransNormerLLM has demonstrated competitive performance across various benchmarks, particularly in commonsense reasoning and aggregated tasks, matching or approaching state-of-the-art models like LLaMA and OPT. The 7B parameter model is the most extensively evaluated, with results reported as follows:

* '''Commonsense Reasoning (Zero-Shot)''':
** BoolQ: 75.87 – Evaluates yes/no question answering based on passages.
** PIQA: 80.09 – Tests understanding of physical world interactions.
** HellaSwag: 75.21 – Assesses commonsense inference from multiple choices.
* '''Aggregated Benchmarks (Five-Shot)''':
** MMLU: 43.10 – Measures multitask language understanding in English.
** CMMLU: 47.99 – Chinese version of MMLU.
** C-Eval: 43.18 – Comprehensive Chinese evaluation across 52 disciplines.

These results highlight the model's versatility in handling both English and Chinese tasks, with efficiency gains attributed to its linear attention mechanisms, achieving over 20% acceleration compared to traditional models.

==Simple linear attention language models balance the recall-throughput tradeoff==

Large language models (LLMs) built on Transformers are very good at "recalling" details from their input: think of it as their ability to dig up a specific fact buried in a long conversation. However, attention-based language models suffer from high memory consumption during inference because the key-value (KV) cache grows linearly with sequence length. This reduces throughput and limits efficiency on long sequences, despite their strong recall ability.
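The memory argument is easy to quantify. The helper functions below are a back-of-the-envelope sketch: the configuration (32 layers, 32 heads, head dimension 128, 16-bit values) is a hypothetical example rather than any specific model, the function names are illustrative, and the linear-attention state is assumed to be one <math>d_{head} \times d_{head}</math> matrix per head per layer. The KV cache grows with sequence length, while the recurrent state does not.

```python
def kv_cache_bytes(n_layers, n_heads, head_dim, seq_len, batch=1, bytes_per_elem=2):
    """Keys + values cached by standard attention: grows linearly with seq_len."""
    return 2 * n_layers * n_heads * head_dim * seq_len * batch * bytes_per_elem


def linear_attention_state_bytes(n_layers, n_heads, head_dim, batch=1, bytes_per_elem=2):
    """One (head_dim x head_dim) recurrent state per head and layer: independent of seq_len."""
    return n_layers * n_heads * head_dim * head_dim * batch * bytes_per_elem


if __name__ == "__main__":
    GiB, MiB = 2 ** 30, 2 ** 20
    cfg = dict(n_layers=32, n_heads=32, head_dim=128)  # hypothetical configuration
    for seq_len in (4096, 32768):
        print(seq_len, "tokens:", kv_cache_bytes(seq_len=seq_len, **cfg) / GiB, "GiB KV cache")
    print("linear-attention state:", linear_attention_state_bytes(**cfg) / MiB, "MiB")
```

With this hypothetical configuration the KV cache is 2 GiB at 4,096 tokens and 16 GiB at 32,768 tokens, while the fixed-size state stays at 32 MiB per sequence; the price of that fixed size is that the state cannot store every past token exactly, which is the recall side of the tradeoff.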