stat940F24: Difference between revisions



* <math>y</math> is the original label,

* <math>k</math> is the number of classes.

The reason for using label smoothing: | |||

* '''Prevents overfitting''': By preventing the model from becoming too confident in its predictions, label smoothing reduces the likelihood of overfitting. | |||

* '''Improves generalization''': Label smoothing can help the model generalize better to unseen data, as it discourages overconfidence in training. | |||

== Bagging/Ensemble ==

Bagging (short for bootstrap aggregating) is a machine learning ensemble technique for reducing generalization error by combining several models (Breiman, 1994).

Explanation: Aggregating means combining the predictions of the models, often by voting (for classification) or averaging (for regression).

It works by training several different models separately, each on a bootstrap sample of the training data, and then having all of the models vote on the output for test examples. For example, random forest is a popular bagging algorithm that builds multiple decision trees on different bootstrap samples of the data and aggregates their predictions.

The reason why bagging works: | |||

* '''Variance reduction''': By training multiple models on different data subsets, bagging reduces the variance of the predictions. This means that it helps models generalize better to new, unseen data. | |||

* '''Handling overfitting''': Bagging is particularly useful for high-variance models (e.g., decision trees) as it helps reduce overfitting. | |||

While bagging is highly useful for traditional machine learning models, it is less commonly used in deep learning because modern neural networks are already highly expressive. Instead, other ensemble methods, such as model ensembling or dropout, are used. | |||

Code Sample:

<pre>
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Load a dataset (Iris dataset for example)
data = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.3, random_state=42
)

# Train a random forest classifier (bagging technique)
rf_model = RandomForestClassifier(n_estimators=100, random_state=42)
rf_model.fit(X_train, y_train)

# Make predictions
y_pred = rf_model.predict(X_test)

# Evaluate the model (mean accuracy on the held-out test set)
accuracy = rf_model.score(X_test, y_test)
print(f"Test accuracy: {accuracy:.3f}")
</pre>

== Dropout ==


The gradients for each parameter are averaged over the training cases in each mini-batch.

=== Test Time ===

Use a single neural net without dropout.

If a unit is retained with probability <math>p</math> during training, the outgoing weights of that unit are multiplied by <math>p</math> at test time: <math>p \cdot w</math>.
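The test-time rule above can be checked numerically. The following is a minimal NumPy sketch, not taken from the lecture: the function names `dropout_train` and `dropout_test`, the layer sizes, and the value of `p` are illustrative assumptions. It averages many stochastic training-time passes and compares the result with a single pass that uses weights scaled by <math>p</math>.

```python
import numpy as np

def dropout_train(h, p, rng):
    """Training: keep each unit with probability p (a new mask on every call)."""
    mask = rng.random(h.shape) < p
    return h * mask

def dropout_test(h, w, p):
    """Test: no units are dropped; outgoing weights are scaled by p instead."""
    return h @ (p * w)

rng = np.random.default_rng(0)
p = 0.8                        # retention probability (illustrative value)
h = rng.normal(size=(1, 50))   # activations of one hidden layer
w = rng.normal(size=(50, 3))   # outgoing weights of that layer

# The average of many dropped-out passes approaches the scaled test-time pass.
train_mean = np.mean([dropout_train(h, p, rng) @ w for _ in range(20000)], axis=0)
test_out = dropout_test(h, w, p)
```

For a linear output the two agree in expectation; with nonlinear layers in between, the weight scaling is only an approximation to averaging over all thinned networks.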

== Additional Regularization == | |||

=== L1 norm === | |||

L1 norm regularization, also known as Lasso, is a technique that adds a penalty proportional to the sum of the absolute values of the weights to the loss function. This encourages sparsity in the learned weights: it drives some of the weights to exactly zero, effectively selecting important features and reducing model complexity.

=== Mixup === | |||

Mixup is a data augmentation technique that creates new training examples by taking convex combinations of pairs of input data and their labels. By blending images and labels together, the model learns smoother decision boundaries and becomes more robust to adversarial examples and noise. | |||
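As a rough illustration of the convex combination, here is a small NumPy sketch. The helper name `mixup`, the toy 4×4 "images", and the choice of drawing the mixing weight from a Beta(0.2, 0.2) distribution are assumptions made for this example and are not specified in the notes.

```python
import numpy as np

def mixup(x1, y1, x2, y2, lam):
    """Convex combination of two inputs and of their one-hot labels."""
    x = lam * x1 + (1.0 - lam) * x2
    y = lam * y1 + (1.0 - lam) * y2
    return x, y

rng = np.random.default_rng(0)
x1, x2 = rng.random((4, 4)), rng.random((4, 4))        # two toy "images"
y1, y2 = np.array([1.0, 0.0]), np.array([0.0, 1.0])    # one-hot labels
lam = rng.beta(0.2, 0.2)   # mixing weight in [0, 1]; the Beta parameters are assumed
x_mix, y_mix = mixup(x1, y1, x2, y2, lam)
```

Because the same weight is applied to inputs and labels, the mixed label remains a valid probability vector.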

=== Cutout === | |||

Cutout is a form of data augmentation where random square regions are masked out (set to zero) in input images during training. This forces the model to focus on a broader range of features across the image rather than relying on any single part, leading to better generalization and robustness. | |||
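A minimal sketch of the masking step is given below, using NumPy. The function name `cutout` and the 8×8 toy image are hypothetical, and for simplicity the square is always placed fully inside the image, which is an assumption of this sketch rather than a requirement of the method.

```python
import numpy as np

def cutout(image, size, rng):
    """Zero out one randomly placed size x size square; returns a copy."""
    h, w = image.shape[:2]
    top = rng.integers(0, h - size + 1)    # upper bound is exclusive
    left = rng.integers(0, w - size + 1)
    out = image.copy()
    out[top:top + size, left:left + size] = 0
    return out

rng = np.random.default_rng(0)
image = np.ones((8, 8))
masked = cutout(image, size=3, rng=rng)
```

The original image is left untouched so that a fresh mask can be drawn each time the example is used in training.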

=== Gradient Clipping === | |||

Gradient clipping is a technique used to prevent the gradients from becoming too large during training, which can cause the model to diverge. This is done by capping the gradients at a predefined threshold, ensuring that updates remain stable, especially in models like recurrent neural networks (RNNs) where exploding gradients are a common issue. | |||
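One common variant rescales the whole gradient vector when its norm exceeds the threshold. The sketch below assumes this norm-based variant (clipping each component separately is another option); the name `clip_by_norm` is illustrative.

```python
import numpy as np

def clip_by_norm(grad, threshold):
    """Rescale grad so that its L2 norm is at most threshold."""
    norm = np.linalg.norm(grad)
    if norm > threshold:
        return grad * (threshold / norm)
    return grad

g = np.array([3.0, 4.0])                 # norm 5
clipped = clip_by_norm(g, threshold=1.0)  # same direction, norm 1
```

Rescaling by the norm keeps the direction of the update and only shortens the step.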

=== DropConnect === | |||

DropConnect is a variation of Dropout, but instead of dropping neurons, it randomly drops connections (weights) between neurons during training. This prevents the co-adaptation of neurons while allowing individual neurons to contribute to learning. | |||

=== Data Augmentation (beyond Mixup and Cutout) === | |||

* '''Random Flips and Rotations''': Randomly flipping (either vertical or horizontal) or rotating images during training to make the model invariant to certain transformations. | |||

* '''Color Jittering''': Modifying the brightness, contrast, saturation, and hue of images to make the model more robust to variations in color. | |||

* '''Random Cropping and Scaling''': Randomly cropping and scaling images to force the model to learn from different perspectives and contexts within the data. | |||

For data augmentation in PyTorch, see https://pytorch.org/vision/stable/transforms.html

== Generalization Paradox == | |||

* Models with many parameters tend to overfit.

* However, deep neural networks, despite having many parameters, work well on unseen data. The reason is not fully understood.

This phenomenon is illustrated by the '''Double Descent Curve''', where after reaching a peak in test error (due to overfitting), the error decreases again with further model complexity. The precise reasons remain uncertain, but hypotheses include implicit regularization from optimization methods like SGD, hierarchical feature learning, and the redundancy offered by overparameterization. | |||

== Batch Normalization == | |||

=== Overview === | |||

Batch normalization is a technique used to improve the training process of deep neural networks by normalizing the inputs of each layer. Despite the initial intuition for the method being somewhat incorrect, it has proven to be highly effective in practice. Batch normalization speeds up convergence, allows for larger learning rates, and makes the model less sensitive to initialization, resulting in more stable and efficient training. | |||

Batch normalization was motivated by internal covariate shift (Ioffe & Szegedy, 2015).

=== Internal Covariate Shift ===

Batch normalization was originally proposed as a solution to the internal covariate shift problem, where the distribution of inputs to each layer changes during training. This shift complicates training because the model must constantly adapt to new input distributions.

The transformation of layers can be described as: | |||

<math> l = F_2(F_1(u, \theta_1), \theta_2) </math> | |||

For a mini-batch of activations <math>X = \{x_1, x_2, \dots, x_m\}</math> from a specific layer, batch normalization proceeds as follows: | |||

1. '''Compute the mean''': <math> \mu_B = \frac{1}{m} \sum_{i=1}^{m} x_i </math> | |||

2. '''Compute the variance''': <math> \sigma_B^2 = \frac{1}{m} \sum_{i=1}^{m} (x_i - \mu_B)^2 </math> | |||

3. '''Normalize the activations''': <math> \hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} </math> | |||

4. '''Scale and shift''' the normalized activations using learned parameters <math>\gamma</math> (scale) and <math>\beta</math> (shift): <math> y_i = \gamma \hat{x}_i + \beta </math> | |||

where: | |||

* <math>x_i</math> represents the activations in the mini-batch, | |||

* <math>\mu_B</math> is the mean of the mini-batch, | |||

* <math>\sigma_B^2</math> is the variance of the mini-batch, | |||

* <math>\epsilon</math> is a small constant added for numerical stability, | |||

* <math>\hat{x}_i</math> is the normalized activation, | |||

* <math>\gamma</math> and <math>\beta</math> are learned parameters for scaling and shifting. | |||
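The four steps above can be sketched in NumPy as follows. This covers only the training-time forward pass on one mini-batch; the function name `batch_norm`, the batch shape, and the default `eps` are assumptions of this sketch, and the running statistics used at inference time are omitted.

```python
import numpy as np

def batch_norm(x, gamma, beta, eps=1e-5):
    """Batch-normalize a mini-batch x of shape (m, features)."""
    mu = x.mean(axis=0)                      # step 1: per-feature mean
    var = x.var(axis=0)                      # step 2: variance (divides by m)
    x_hat = (x - mu) / np.sqrt(var + eps)    # step 3: normalize
    return gamma * x_hat + beta              # step 4: scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))
y = batch_norm(x, gamma=np.ones(4), beta=np.zeros(4))
```

With <math>\gamma = 1</math> and <math>\beta = 0</math>, each output feature has approximately zero mean and unit variance over the mini-batch.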

With batch normalization, a higher learning rate can be used and dropout can often be removed, while validation accuracy improves.

=== Batch Normalization === | |||

As mentioned earlier, the original intuition behind batch normalization was found to be incorrect after further research. A paper by Santurkar, S., Tsipras, D., Ilyas, A., & Madry, A. (NeurIPS 2018) contradicted the original 2015 paper on BatchNorm by highlighting the following points:

* Batch normalization does not fix internal covariate shift (ICS).

* If we fix ICS, it doesn't help.

* If we intentionally increase ICS, it doesn't harm.

* Batch Norm is not the only possible normalization. There are alternatives.

Instead, they argue that Batch normalization works better due to other factors, particularly related to its effect on the optimization process: | |||

1. '''Reparameterization of the loss function:''' | |||

* Improved Lipschitzness: Batch normalization improves the Lipschitz continuity of the loss function, meaning that the loss changes at a smaller rate and the magnitudes of the gradients are smaller.

* Better β-smoothness: The gradients of the loss themselves become more Lipschitz, so the loss exhibits significantly better smoothness, which aids optimization by preventing large, erratic changes in the gradient.

2. '''Variation of the loss function''': BatchNorm reduces the variability of the value of the loss. Consider the variation of the loss function: | |||

<math> \mathcal{L}(x + \eta \nabla \mathcal{L}(x)) </math> where <math> \eta \in [0.05, 0.4] </math>. | |||

A smaller variability of the loss indicates that the steps taken during training are less likely to cause the loss to increase uncontrollably. | |||

3. '''Gradient predictiveness''': BatchNorm enhances the predictiveness of the gradients, meaning the changes in the loss gradient are more stable and predictable. This can be expressed as: | |||

<math> || \nabla \mathcal{L}(x) - \nabla \mathcal{L}(x + \eta \nabla \mathcal{L}(x)) || </math>, where <math> \eta \in [0.05, 0.4] </math>. | |||

A good gradient predictiveness implies that the gradient evaluated at a given point remains relevant over longer distances, which allows for larger step sizes during training. | |||

=== Alternatives to Batch Norm === | |||

'''Weight Normalization''': Weight normalization is a technique where the weights, instead of the activations, are normalized. This method reparameterizes the weight vectors to accelerate the training of deep neural networks. | |||

Tim Salimans and Diederik P. Kingma, "Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks," 2016. | |||

'''ELU (Exponential Linear Unit) and SELU (Scaled Exponential Linear Unit)''': ELU and SELU are two proposed activation functions that have a decaying slope instead of a sharp saturation. They can be used as alternatives to BatchNorm by providing smoother, non-linear activation without requiring explicit normalization. | |||

Djork-Arné Clevert, Thomas Unterthiner, and Sepp Hochreiter, "Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs)," In International Conference on Learning Representations (ICLR), 2016. | |||

Günter Klambauer, Thomas Unterthiner, Andreas Mayr, and Sepp Hochreiter, "Self-Normalizing Neural Networks," In Advances in Neural Information Processing Systems (NIPS), 2017.

== Convolutional Neural Network (CNN) == | |||

=== Introduction === | |||

Convolutional networks are simply neural networks that use convolution instead of general matrix multiplication in at least one of their layers. CNN is mainly used for image processing. | |||

=== Convolution === | |||

In ML, convolution is computed as a dot product:

<math> h = \sigma(\langle x, w\rangle+b)</math>

* Same x, different w -- multi-layer perceptron (MLP)

* Different x, same w -- CNN (weight sharing)

From class, the following operation is called convolution:

<math> s(t) = \int x(a)w(t-a)\,da </math>

The convolution operation is typically denoted with an asterisk: | |||

<math> s(t) = (x \ast w)(t) </math> | |||

=== Discrete Convolution === | |||

If we now assume that x and w are defined only on integer t, we can define the discrete convolution: | |||

<math> | |||

s[t] = (x \ast w)(t) = \sum_{a=-\infty}^{\infty} x[a] \, w[t-a] | |||

</math> | |||

<math>w[t-a]</math> represents the sequence <math>w[t]</math> shifted by <math>a</math> units. | |||

=== In practice === | |||

We often use convolutions over more than one axis at a time. | |||

<math> | |||

s[i,j] = (I * K)[i,j] = \sum_m \sum_n I[m,n] K[i-m, j-n] | |||

</math> | |||

* '''Input''': usually a multidimensional array of data. | |||

* '''Kernel''': usually a multidimensional array of parameters that should be learned. | |||

We assume that these functions are zero everywhere but the finite set of points for which we store the values. | |||

We can implement the infinite summation as a summation over a finite number of array elements. | |||

=== Convolution and Cross-Correlation === | |||

Convolution is commutative: | |||

<math> | |||

s[i,j] = (I * K)[i,j] = \sum_m \sum_n I[i-m, j-n] K[m, n] | |||

</math> | |||

Cross-correlation: | |||

<math> | |||

s[i,j] = (I * K)[i,j] = \sum_m \sum_n I[i+m, j+n] K[m, n] | |||

</math> | |||

Many machine learning libraries implement cross-correlation but call it convolution. In the context of backpropagation in neural networks, cross-correlation simplifies the computation of gradients with respect to the input and kernel. | |||
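The relationship between the two operations can be checked in one dimension with NumPy's `np.convolve` and `np.correlate`. The arrays below are arbitrary illustrative values: convolution flips the kernel before sliding it, so it matches cross-correlation with the reversed kernel.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])   # input signal
w = np.array([1.0, 0.0, -1.0])            # kernel (not symmetric)

conv = np.convolve(x, w, mode="valid")                 # true convolution
corr = np.correlate(x, w, mode="valid")                # cross-correlation
corr_flipped = np.correlate(x, w[::-1], mode="valid")  # cross-correlation with flipped kernel
```

Since the kernel is learned, a network trained with cross-correlation simply learns the flipped version of the kernel that convolution would have learned.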

Visualization of Cross-Correlation and Convolution with Matlab (https://www.youtube.com/v/Ma0YONjMZLI) | |||

=== Image to Convolved Feature === | |||

* '''Kernel/filter size''': width <math>\times</math> height, e.g. 3 <math>\times</math> 3 in the example below

* '''Stride''': how many pixels to move the filter each time | |||

* '''Padding''': add zeros (or any other value) around the boundary of the input | |||

=== Example === | |||

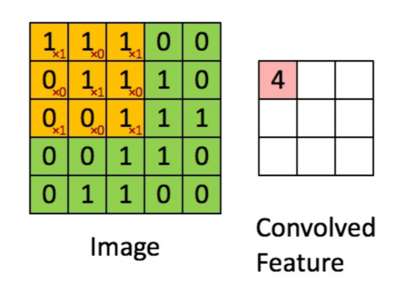

The following image illustrates a 2D convolution operation between an input image and a filter to produce a convolved feature map. | |||

'''Image (Input)''': | |||

The grid on the left represents a 5<math>\times</math>5 matrix of pixel values. The orange-highlighted 3<math>\times</math>3 region is part of the image currently being convolved with the filter. | |||

'''Convolution Operation''': | |||

The filter values are applied to the selected region in an element-wise multiplication followed by a summation. The operation is as follows: | |||

<math> (1 \times 1) + (1 \times 0) + (1 \times 1) + (0 \times 0) + (1 \times 1) + (1 \times 0) + (0 \times 1) + (0 \times 0) + (1 \times 1) = 4 </math> | |||

'''Convolved Feature Map''': | |||

The resulting value, <math>4</math>, is placed in the corresponding position (top-left) of the convolved feature map on the right.

[[Image:conv_example.png|thumb|400px|center|One Convolution Example]] | |||

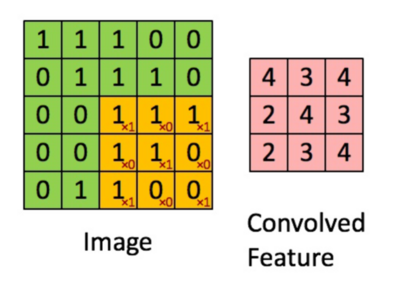

This process is repeated as the filter slides across the entire image. The final feature map is shown below. | |||

[[Image:conv_example_final.png|thumb|400px|center|Final Feature Map]] | |||
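The sliding-window computation can be reproduced with a short NumPy sketch. The filter and the top-left 3×3 region match the worked sum above; the remaining entries of the 5×5 image are assumed for illustration, since they are only visible in the figure. The function name `cross_correlate2d` is hypothetical, and because this particular filter is unchanged by a 180° flip, cross-correlation and convolution give the same result here.

```python
import numpy as np

def cross_correlate2d(image, kernel, stride=1):
    """Slide the kernel over the image without padding and sum the products."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            patch = image[r:r + kh, c:c + kw]
            out[i, j] = np.sum(patch * kernel)   # element-wise product, then sum
    return out

# Assumed 5x5 image; only its top-left 3x3 block is confirmed by the text.
image = np.array([[1, 1, 1, 0, 0],
                  [0, 1, 1, 1, 0],
                  [0, 0, 1, 1, 1],
                  [0, 0, 1, 1, 0],
                  [0, 1, 1, 0, 0]])
kernel = np.array([[1, 0, 1],
                   [0, 1, 0],
                   [1, 0, 1]])
feature_map = cross_correlate2d(image, kernel)   # 3x3 feature map
```

With a 5×5 input, a 3×3 filter, stride 1, and no padding, the feature map is 3×3, and its top-left entry is the value 4 computed above.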

=== Sparse Interactions=== | |||

In a feedforward neural network, '''every''' output unit interacts with every input unit.

* With <math>m</math> inputs and <math>n</math> outputs, matrix multiplication requires <math> m \times n </math> parameters, and the algorithms used in practice have <math> O(m \times n) </math> runtime (per example).

Convolutional networks typically have sparse connectivity (sparse weights). This is accomplished by making the kernel smaller than the input.

* If we limit the number of connections each output may have to <math>k</math>, the layer requires only <math> k \times n </math> parameters and <math> O(k \times n) </math> runtime.

=== Parameter Sharing === | |||

* In a traditional neural net, each element of the weight matrix is multiplied by one element of the input, i.e. it is used exactly once when computing the output of a layer.

* In CNNs, each member of the kernel is used at every position of the input | |||

* Instead of learning a separate set of parameters for every location, we learn only one set | |||

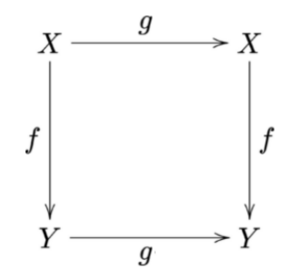

=== Equivariance === | |||

A function <math>f(x)</math> is '''equivariant''' to a function <math>g</math> if the following holds:

<math>f(g(x)) = g(f(x))</math>

'''In simple terms''': Applying the function <math>f</math> after <math>g</math> is equivalent to applying <math>g</math> after <math>f</math>.

[[Image:equivariance.png|thumb|300px|center|Equivariance]] | |||

==== Equivariance in CNNs ==== | |||

* CNNs are naturally equivariant to translation (convolution commutes with shifts)

* If an input image is shifted, the output feature map shifts correspondingly, preserving spatial structure | |||

* Importance: This property ensures that CNNs can detect features like edges or corners, no matter where they appear in the image | |||

A convolutional layer has equivariance to translation. For example, | |||

<math>g(x)[i] = x[i-1]</math> | |||

If we apply this transformation to x, then apply convolution, the result will be the same as if we applied convolution to x, then applied the transformation to the output. | |||

For images, convolution creates a 2-D map of where certain features appear in the input. Note that convolution is not equivariant to some other transformations, such as changes in the scale (rescaling) or rotation of an image. | |||
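Translation equivariance can be verified on a small one-dimensional example. In this NumPy sketch the signal and kernel values are arbitrary, and `shift` implements <math>g(x)[i] = x[i-1]</math> by prepending a zero, which is an assumption about how the boundary is handled.

```python
import numpy as np

def shift(x):
    """g(x)[i] = x[i-1]: delay by one step, padding the front with a zero."""
    return np.concatenate(([0.0], x))

x = np.array([1.0, 2.0, 0.0, -1.0, 3.0])
w = np.array([0.5, 1.0, -0.5])

shift_then_conv = np.convolve(shift(x), w)   # f(g(x))
conv_then_shift = shift(np.convolve(x, w))   # g(f(x))
```

Both orders give the same array: shifting the input by one position shifts the feature map by one position.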

==== Importance of Data Augmentation ==== | |||

Data augmentation is commonly used to make CNNs robust against variations in scale, rotation, or other transformations. This involves artificially modifying the training data by applying transformations like flipping, scaling, and rotating to expose the network to different variations of the same object. | |||

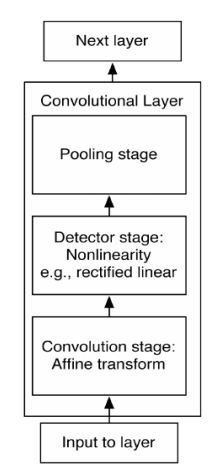

=== Convolutional Networks === | |||

'''The first stage (Convolution):''' The layer performs several convolutions in parallel to produce a set of preactivations. The convolution stage is designed to detect local features in the input image, such as edges and patterns. It does this by applying filters/kernels across the input image. | |||

'''The second stage (Detector): ''' Each preactivation is run through a nonlinear activation function (e.g. rectified linear). This stage introduces nonlinearity into the model, enabling it to learn complex patterns. Without this nonlinearity, the model would be limited to learning only linear relationships. | |||

'''The third stage (Pooling):''' Pooling reduces the spatial dimensions of the feature maps (height and width), helping to make the model more invariant to small translations and distortions in the input image. It also reduces computational load and helps prevent overfitting.

[[Image:cnn.png|thumb|400px|center|CNN Structure]] | |||

=== Pooling=== | |||

Down-sample input size to reduce computation and memory | |||

==== Popular Pooling functions ==== | |||

* The maximum of a rectangular neighborhood (Max pooling operation) | |||

* The average of a rectangular neighborhood | |||

* The L2 norm of a rectangular neighborhood | |||

* A weighted average based on the distance from the central pixel | |||

==== Pooling with Downsampling ==== | |||

For example, consider max-pooling with a pool width of 3 and a stride between pools of 2. This reduces the representation size by a factor of 2, which reduces the computational and statistical burden on the next layer.
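A one-dimensional sketch of max-pooling with width 3 and stride 2 is shown here. The function name `max_pool1d` and the input values are illustrative assumptions, and no padding is applied at the boundary.

```python
import numpy as np

def max_pool1d(x, width=3, stride=2):
    """Maximum over each window of the given width, moving by stride."""
    starts = range(0, len(x) - width + 1, stride)
    return np.array([x[s:s + width].max() for s in starts])

x = np.array([1, 3, 2, 5, 4, 6, 0, 2, 1])   # 9 inputs
pooled = max_pool1d(x)                      # 4 outputs
```

Nine inputs are summarized by four outputs, so the next layer processes roughly half as many values.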

==== Pooling and Translations ==== | |||

Pooling helps to make the representation become invariant to small translations of the input. Invariance to local translation can be a very useful property if we care more about whether some feature is present than exactly where it is. For example: In a face, we need not know the exact location of the eyes. | |||

==== Input of Varying Size ==== | |||

Example: we want to classify images of variable size. | |||

The input to the classification layer must have a fixed size. The final pooling layer can, for example, output four sets of summary statistics, one for each quadrant of the image, regardless of the image size. The feature after pooling is then <math>2 \times 2</math>.
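The quadrant idea can be sketched as follows, assuming max is used as the summary statistic and that the feature map is at least 2×2. The function name `quadrant_max_pool` and the two input sizes are hypothetical.

```python
import numpy as np

def quadrant_max_pool(feature_map):
    """Return a 2x2 summary: the maximum of each quadrant of the input."""
    h, w = feature_map.shape
    rows = [slice(0, h // 2), slice(h // 2, h)]
    cols = [slice(0, w // 2), slice(w // 2, w)]
    return np.array([[feature_map[r, c].max() for c in cols] for r in rows])

rng = np.random.default_rng(0)
small = quadrant_max_pool(rng.random((6, 8)))     # 6x8 input
large = quadrant_max_pool(rng.random((31, 17)))   # 31x17 input
```

Both inputs produce a 2×2 output, so the classification layer always sees the same number of features.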

It is also possible to dynamically pool features together, for example by running a clustering algorithm on the locations of interesting features (Boureau et al., 2011), i.e. to use a different set of pooling regions for each image.

Another approach is to learn a single pooling structure that is then applied to all images (Jia et al., 2012).

=== Convolution and Pooling as an Infinitely Strong Prior === | |||

* '''Weak Prior''': a prior distribution that has high entropy, which means there is a high level of uncertainty or spread in the distribution. An example of this would be a Gaussian distribution with high variance | |||

* '''Strong Prior''': has very low entropy, which implies a high level of certainty or concentration in the distribution. An example of this would be a Gaussian distribution with low variance | |||

* '''Infinitely Strong Prior''': places zero probability on some parameter values, so those values are forbidden regardless of how much support the data gives them. A convolutional net can be viewed as a fully connected net with an infinitely strong prior over its weights.

This prior requires that the weights for one hidden unit be identical to the weights of its neighbor, but shifted in space. It also requires that the weights be zero, except in the small, spatially contiguous receptive field assigned to that hidden unit.

Using convolution amounts to placing an infinitely strong prior probability distribution over the parameters of a layer. This prior says that the function the layer should learn contains only local interactions and is equivariant to translation.

Likewise, the use of pooling is an infinitely strong prior that each unit should be invariant to small translations. If these assumptions do not hold for the task, convolution and pooling can cause underfitting.

=== Practical Issues === | |||

The input is usually not just a grid of real values. It is a grid of vector-valued observations. For example, a color image has a red, green, and blue intensity at each pixel. | |||

When working with images, we usually think of the input and output of the convolution as 3-D tensors. One index into the different channels and two indices into the coordinates of each channel. | |||

Software implementations usually work in batch mode, so they will actually use 4-D tensors, with the fourth axis indexing different examples in the batch. | |||

=== Training === | |||

Suppose we want to train a convolutional network that incorporates convolution of a kernel stack <math>K</math> applied to a multi-channel image <math>V</math> with stride <math>s</math>: <math>c(K, V, s)</math>.

Suppose we want to minimize some loss function <math>J(V, K)</math>. During forward propagation, we use <math>c</math> itself to output <math>Z</math>.

<math>Z</math> is propagated through the rest of the network and used to compute <math>J</math>. | |||

* During backpropagation, we receive a tensor <math>\mathbf{G}</math> such that: | |||

<math> G_{i,j,k} = \frac{\partial}{\partial Z_{i,j,k}} J(V, K) </math> | |||

*To train the network, we compute the derivatives with respect to the weights in the kernel: | |||

<math> g(\mathbf{G}, \mathbf{V}, s)_{i,j,k,l} = \frac{\partial}{\partial K_{i,j,k,l}} J(V, K) = \sum_{m,n} G_{i,m,n} V_{j,ms+k,ns+l} </math>

*If this layer is not the bottom layer of the network, we compute the gradient with respect to <math>\mathbf{V}</math> to backpropagate the error further: | |||

<math> h(\mathbf{K}, \mathbf{G}, s)_{i,j,k} = \frac{\partial}{\partial V_{i,j,k}} J(V, K) = \sum_{l,m \mid s l + m = j} \sum_{n,p \mid s n + p = k} \sum_{q} K_{q,i,m,p} G_{q,l,n} </math>

=== Random or Unsupervised Features === | |||

The most computationally expensive part of training a convolutional network is learning the features. | |||

* Supervised training with gradient descent requires full forward and backward propagation through the entire network for every gradient update. | |||

* One approach to reduce this cost is to use features that are not learned in a supervised manner, such as random or unsupervised features. | |||

==== Random Initializations ====

Simply initialize the convolution kernels randomly. In high-dimensional space, random vectors are almost orthogonal to each other (nearly uncorrelated), so the features captured by different kernels are approximately independent.
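The near-orthogonality claim is easy to check empirically. The sketch below draws two Gaussian random vectors (the dimension 10,000 and the seed are arbitrary choices for illustration) and computes their cosine similarity.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 10_000                 # dimension of the space (illustrative)
u = rng.normal(size=dim)
v = rng.normal(size=dim)

# Cosine of the angle between the two random vectors.
cosine = u @ v / (np.linalg.norm(u) * np.linalg.norm(v))
```

The cosine similarity concentrates around zero as the dimension grows, with typical magnitude on the order of <math>1/\sqrt{d}</math>.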

==== Unsupervised Learning ==== | |||

Learn the convolution kernels using an unsupervised criterion. | |||

==== Key insight ==== | |||

Random filters can perform surprisingly well in convolutional networks. | |||

* Layers composed of convolution followed by pooling naturally become frequency-selective and translation-invariant, even with random weights. | |||

Inexpensive architecture selection | |||

* Evaluate multiple convolutional architectures by training only the last layer. | |||

* Choose the best-performing architecture and then fully train it using a more intensive method. | |||

== Residual Networks (ResNet) == | |||

=== Overview === | |||

Deeper models are harder to train due to vanishing/exploding gradients and can be worse than shallower networks if not properly trained. There are advanced networks to deal with the degradation problem. | |||

ResNet, short for Residual Networks, was introduced by Kaiming He et al. from Microsoft Research in 2015. It brought a significant breakthrough in deep learning by enabling the training of much deeper networks, addressing the vanishing gradient problem. | |||

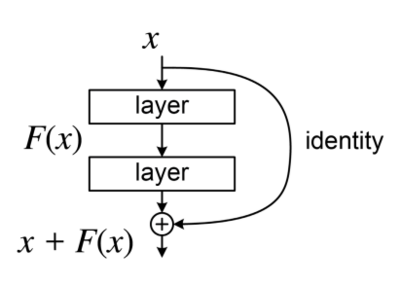

[[Image:resnet.png|thumb|400px|center|ResNet Structure]] | |||

* ResNet introduces the concept of skip connections (or residual connections) that allow the gradient to be directly backpropagated to earlier layers | |||

* Skip connections help in overcoming the degradation problem, where the accuracy saturates and then degrades rapidly as the network depth increases | |||
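A skip connection can be sketched as adding the input back to the output of a small transformation. The code below is a deliberately simplified fully connected version in NumPy: the name `residual_block`, the two weight matrices, and the sizes are assumptions, and the convolutions and normalization used in actual ResNet blocks are omitted.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, w1, w2):
    """y = x + F(x), where F is a small two-layer transformation."""
    return x + w2 @ relu(w1 @ x)

rng = np.random.default_rng(0)
x = rng.normal(size=4)
w1 = rng.normal(size=(4, 4)) * 0.1
w2 = rng.normal(size=(4, 4)) * 0.1

y = residual_block(x, w1, w2)
# With zero weights the block reduces to the identity mapping.
identity = residual_block(x, np.zeros((4, 4)), np.zeros((4, 4)))
```

Because the identity path is always present, an extra block can at worst learn to do nothing, and the gradient reaches earlier layers through the addition without being multiplied by the block's weights.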

=== Variants === | |||

Several variants of ResNet have been developed, including ResNet-50, ResNet-101, and ResNet-152, differing in the number of layers. | |||

=== Application === | |||

ResNet has been widely adopted for various computer vision tasks, including image classification, object detection, and facial recognition. | |||

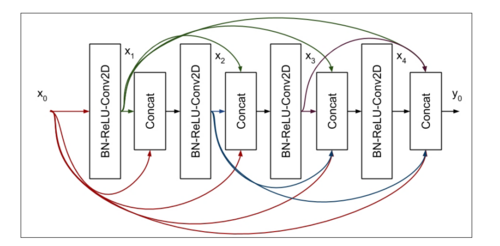

== DenseNet == | |||

=== Overview === | |||

* DenseNet, short for Densely Connected Networks, was introduced by Gao Huang et al. in 2017. | |||

* It is known for its efficient connectivity between layers, which enhances feature propagation and reduces the number of parameters | |||

[[Image:densenet.png|thumb|500px|center|DenseNet Structure]] | |||

=== Key Feature === | |||

The key feature is '''Dense Connectivity'''. | |||

* In DenseNet, each layer receives feature maps from all preceding layers and passes its own feature maps to all subsequent layers | |||

* This dense connectivity improves the flow of information and gradients throughout the network, mitigating the vanishing gradient problem | |||
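Dense connectivity can be sketched with feature vectors in place of feature maps. In this simplified NumPy example each layer receives the concatenation of the input and all earlier layer outputs; the name `dense_block`, the fully connected layers, and the sizes `in_dim`, `growth`, and `n_layers` are illustrative assumptions.

```python
import numpy as np

def dense_block(x, weights):
    """Each layer takes the concatenation of all preceding features as input."""
    features = [x]
    for w in weights:
        inp = np.concatenate(features)             # everything produced so far
        features.append(np.maximum(w @ inp, 0.0))  # new features from this layer
    return np.concatenate(features)

rng = np.random.default_rng(0)
in_dim, growth, n_layers = 8, 4, 3   # each layer adds `growth` new features
weights = [rng.normal(size=(growth, in_dim + i * growth)) for i in range(n_layers)]
out = dense_block(rng.normal(size=in_dim), weights)
```

Each layer only has to produce a small number of new features, since it can reuse everything computed before it.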

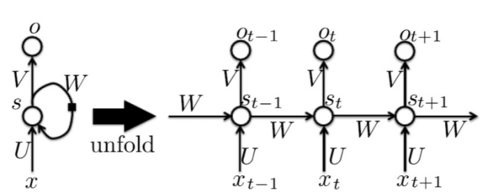

== Echo State Network == | |||

In RNNs, the ability to capture long-term dependencies is crucial. An echo state network sets the recurrent and input weights such that the recurrent hidden units do a good job of capturing the history of past inputs, and learns only the output weights. The goal is to access the information from the past implicitly.

The hidden state at time <math>t</math> can be expressed as: | |||

<math>s_t = \sigma(W s_{t-1} + U x_t)</math> | |||

where: | |||

* <math>W</math> represents the recurrent weight matrix, which connects previous hidden states to the current state, | |||

* <math>U</math> is the input weight matrix, responsible for incorporating the current input <math>x_t</math>, | |||

* <math>\sigma</math> is a non-linear activation function, such as a sigmoid or tanh.

It is important to control how small changes in the hidden state propagate through time to ensure the network does not become unstable. | |||

If a change <math>\Delta s</math> in the state at time <math>t</math> is aligned with an eigenvector <math>v</math> of the Jacobian <math>J</math> with eigenvalue <math>\lambda > 1</math>, then the small change <math>\Delta s</math> becomes <math>\lambda \Delta s</math> after one time step, and <math>\lambda^t \Delta s</math> after <math>t</math> time steps.

If the largest eigenvalue magnitude satisfies <math>|\lambda| < 1</math>, the map from <math>t</math> to <math>t+1</math> is contractive, and the network forgets information about the long-term past.

Set the weights to make the Jacobians slightly contractive. This allows the network to gradually forget irrelevant information while still remembering key long-term dependencies. | |||
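A common heuristic for this is to rescale a random recurrent matrix so that its spectral radius sits slightly below 1. The sketch below assumes that heuristic; the function name `scale_to_spectral_radius`, the matrix size, and the target 0.95 are illustrative. With a tanh activation the Jacobian is close to <math>W</math> only near the origin, so this controls the Jacobian approximately rather than exactly.

```python
import numpy as np

def scale_to_spectral_radius(w, target):
    """Rescale w so that its largest eigenvalue magnitude equals target."""
    radius = np.max(np.abs(np.linalg.eigvals(w)))
    return w * (target / radius)

rng = np.random.default_rng(0)
W = scale_to_spectral_radius(rng.normal(size=(20, 20)), target=0.95)
spectral_radius = np.max(np.abs(np.linalg.eigvals(W)))
```

Only the output weights are then trained, while `W` and the input weights stay fixed.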

[[Image:echo_state_net.png|thumb|500px|center|Echo State Network]] | |||

== Long Delays == | |||

RNNs often fail to capture long-term dependencies due to the vanishing gradient problem. One remedy is to add recurrent connections with long delays, which link the state at time <math>t</math> directly to a state several steps earlier. This helps with vanishing gradients: even if the gradient vanishes along the step-by-step path, the network still has direct access to information from the past.

[[Image:long delay.png|thumb|500px|center|Long Delays]] | |||

== Leaky Units == | |||

In some cases we need to forget the past, since we do not want to remember redundant information, while in other cases we need to retain it.

Recall that: | |||

<math>s_t = \sigma(W s_{t-1} + U x_t)</math> | |||

Then consider the following refined form of the equation (a convex combination of the previous state and the ordinary update, controlled by a new parameter <math>\tau_i</math>):

<math>s_{t,i} = \left(1 - \frac{1}{\tau_i}\right) s_{t-1,i} + \frac{1}{\tau_i} \sigma(W s_{t-1} + U x_t)_i</math>

where

* <math>1 \leq \tau_i < \infty</math>

* <math>\tau_i = 1</math> corresponds to an ordinary RNN | |||

* <math>\tau_i > 1</math> allows gradients to propagate more easily | |||

* <math>\tau_i \gg 1</math> means the state changes very slowly, integrating past values associated with the input sequence over a long duration | |||

As <math>\tau_i \to \infty</math>, the current state simply copies the previous state, while <math>\tau_i = 1</math> removes the direct carry-over of the previous state and recovers the ordinary RNN update.
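The leaky update can be sketched in NumPy as below, assuming tanh as the activation and a single shared time constant `tau` for all units (the equation allows one <math>\tau_i</math> per unit). The function name `leaky_step` and the sizes are hypothetical.

```python
import numpy as np

def leaky_step(s_prev, x_t, W, U, tau):
    """One leaky-unit update: blend the old state with the ordinary RNN update."""
    candidate = np.tanh(W @ s_prev + U @ x_t)   # ordinary RNN update
    alpha = 1.0 / tau
    return (1.0 - alpha) * s_prev + alpha * candidate

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 3)) * 0.5
U = rng.normal(size=(3, 2)) * 0.5
s_prev = rng.normal(size=3)
x_t = rng.normal(size=2)

s_fast = leaky_step(s_prev, x_t, W, U, tau=1.0)    # ordinary RNN step
s_slow = leaky_step(s_prev, x_t, W, U, tau=100.0)  # state changes slowly
```

With `tau=1.0` the step equals the ordinary RNN update, and with a large `tau` the new state stays close to the previous one.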

== Gated RNNs == | |||

=== Definition ===

It might be useful for the neural network to forget the old state in some cases, for example if we only care about whether the current letter is a or b.

Example: <math>a\ a\ b\ b\ b\ b\ a\ a\ a\ a\ b\ a\ b</math> | |||

It might be useful to keep the memory of the past. | |||

Example: | |||

Instead of manually deciding when to clear the state, we want the neural network to learn to decide when to do it. | |||

=== Long-Short-Term-Memory (LSTM) === | |||

The Long-Short-Term-Memory (LSTM) algorithm was proposed in 1997 (Hochreiter and Schmidhuber, 1997). It is a type of recurrent neural network designed to address the vanishing gradient problem.

Several variants of the LSTM are found in the literature: | |||

*Hochreiter and Schmidhuber, 1997 | |||

*Graves, 2012 | |||

*Graves et al., 2013 | |||

*Sutskever et al., 2014 | |||

The principle is always to have a linear self-loop through which gradients can flow for a long duration. | |||

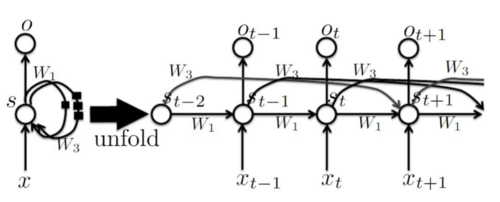

== Gated Recurrent Units (GRU) == | |||

Gated Recurrent Units (GRU) are a more recent type of gated RNN, proposed in 2014. Related work includes:

* Cho et al., 2014 | |||

* Chung et al., 2014, 2015 | |||

* Jozefowicz et al., 2015 | |||

* Chrupala et al., 2015 | |||

Standard RNN computes the hidden layer at the next time step directly: | |||

<math>s_t = \sigma(W s_{t-1} + U x_t)</math> | |||

There are two gates: the update gate and the reset gate. The update gate handles the case where we want to keep information around, while the reset gate handles the case where we want to forget. A temporary (candidate) state is computed first and then combined with the previous state to form the current state.

GRU first computes an update gate (another layer) based on the current input vector and hidden state: | |||

<math>z_t = \sigma(U^{(z)} x_t + W^{(z)} s_{t-1})</math> | |||

It also computes the reset gate similarly but with different weights: | |||

<math>r_t = \sigma(U^{(r)} x_t + W^{(r)} s_{t-1})</math> | |||

New memory content is calculated as: | |||

<math>\tilde{s_t} = \tanh(U x_t + r_t \odot W s_{t-1})</math> | |||

This combines the current observation with the part of the past state that the reset gate lets through.

If the reset gate is close to 0, this causes the network to ignore the previous hidden state, effectively allowing the model to drop irrelevant information. | |||

The final memory at time step <math>t</math> is a combination of the current and previous time steps: | |||

<math>s_t = z_t \odot s_{t-1} + (1 - z_t) \odot \tilde{s_t}</math> | |||

The update gate <math>z_t</math> controls how much of the past state should matter in the current time step. If <math>z_t</math> is close to 1, then we can effectively copy information from the past state across multiple time steps. | |||

Units that need to capture short-term dependencies often have highly active reset gates. | |||
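The four GRU equations above can be put together in a few lines. This is a minimal NumPy sketch under the assumption that <math>\sigma</math> is the logistic sigmoid and that there are no bias terms (as in the equations); the dictionary keys, dimensions, and random weights are illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(s_prev, x_t, p):
    z = sigmoid(p["Uz"] @ x_t + p["Wz"] @ s_prev)            # update gate z_t
    r = sigmoid(p["Ur"] @ x_t + p["Wr"] @ s_prev)            # reset gate r_t
    s_tilde = np.tanh(p["U"] @ x_t + r * (p["W"] @ s_prev))  # new memory content
    return z * s_prev + (1.0 - z) * s_tilde                  # final memory s_t

# Illustrative sizes and random weights (assumptions, not from the notes).
rng = np.random.default_rng(0)
d_in, d_state = 2, 3
params = {
    name: rng.normal(size=(d_state, d_in if name.startswith("U") else d_state))
    for name in ["Uz", "Wz", "Ur", "Wr", "U", "W"]
}

s = np.zeros(d_state)
for x_t in rng.normal(size=(5, d_in)):   # run over a sequence of 5 inputs
    s = gru_step(s, x_t, params)
```

Because the final state is a convex combination of the previous state and a `tanh` output, every component of `s` stays within [-1, 1] when the initial state does.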

== Clipping Gradients ==

A simple solution to exploding gradients is to clip the gradient (Mikolov, 2012; Pascanu et al., 2013):

* Clip the parameter gradient from a mini-batch element-wise (Mikolov, 2012) just before the parameter update.

* Clip the norm <math>\|g\|</math> of the gradient <math>g</math> (Pascanu et al., 2013a) just before the parameter update.

The formula for clipping the gradient norm is:

<math>g' = \min\left(1, \frac{c}{\|g\|}\right) g</math>

where <math>c</math> is a constant threshold: the gradient is left unchanged when <math>\|g\| \leq c</math> and rescaled to norm <math>c</math> otherwise.
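Both clipping variants are short to write down. The sketch below uses NumPy on a single gradient vector; the function names are illustrative, and the guard for a zero gradient is an added assumption to avoid division by zero.

```python
import numpy as np

def clip_by_norm(g, c):
    """Return g' = min(1, c / ||g||) * g."""
    norm = np.linalg.norm(g)
    if norm == 0.0:          # assumption: leave a zero gradient untouched
        return g
    return min(1.0, c / norm) * g

def clip_elementwise(g, c):
    """Clip every component of g to the interval [-c, c]."""
    return np.clip(g, -c, c)

g = np.array([3.0, 4.0])              # ||g|| = 5
clipped = clip_by_norm(g, c=1.0)      # rescaled to norm 1, direction preserved
```

Norm clipping keeps the direction of the gradient, whereas element-wise clipping can change it.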

== Attention == | |||

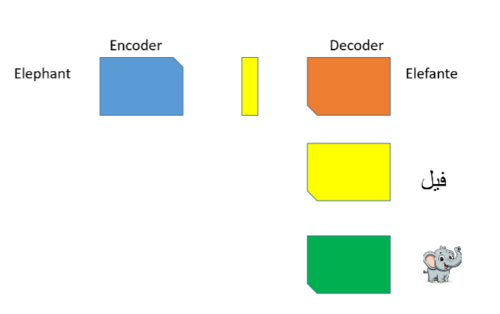

The attention mechanism was introduced to improve the performance of the encoder-decoder model for machine translation. | |||

=== Common Representation === | |||

A single 'concept' is universally represented, transcending specific languages or forms. | |||

* '''Encoder''': Processes the word 'elephant' from its original source. | |||

* '''Output''': A universal representation vector (the abstract 'concept' of an elephant). | |||

* '''Decoders''': Translate this concept into various domains or applications. | |||

The 'concept' is an abstract entity that exists independently of any particular language or representation. | |||

For example, if we want to translate from English to Spanish:

* '''Encoder (English Input)''': The system processes the word "elephant." | |||

* '''Output (Universal Representation)''': The system generates an abstract concept or vector representing an "elephant," independent of any specific language. | |||

* '''Decoder (Spanish Output)''': The system decodes this concept and outputs the equivalent Spanish word: "elefante." | |||

[[Image:attn_exmaple.png|thumb|500px|center|Common Representation]] | |||

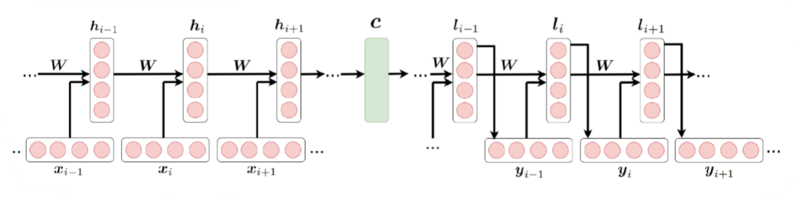

=== Sequence-to-Sequence Model === | |||

In the sequence-to-sequence model, every word <math>x_i</math> produces a hidden vector <math>h_i</math> in the encoder. Each hidden vector <math>h_i</math> is fed into the computation of the next hidden vector, <math>h_{i+1}</math>, through a projection matrix <math>W</math>.

In this model, for the whole sequence, there is only one context vector <math>c</math>, which is equal to the last hidden vector of the encoder, i.e., <math>c = h_n</math>. | |||

[[Image:sqe2seq.png|thumb|800px|center|Sequence-to-Sequence Model]] | |||

Challenges: | |||

1. '''Long-range dependencies''': As the model processes long sequences, it can struggle to remember and utilize information from earlier steps, especially in cases where long-term context is crucial. | |||

2. '''Sequential processing''': Since these models process data step by step in sequence, they can't take full advantage of parallel processing, which limits the speed and efficiency of training. | |||

These are the core challenges that newer architectures, such as transformers, aim to address. | |||

=== Attention Definition === | |||

The basic idea behind the attention mechanism is directing the focus on important factors when processing data. Attention is a fancy name for '''weighted average'''. | |||

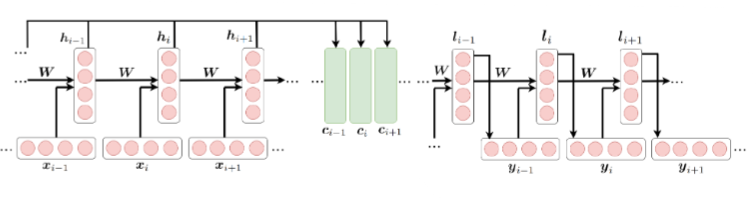

=== Sequence-to-Sequence Model with Attention === | |||

* Sequence-to-sequence models: | |||

Multiple RNN units serve as the encoder and encode the input into a single context vector <math>c</math>. Multiple RNN units then serve as the decoder, which decodes the concept in the context vector into the target domain. Here <math>l_i</math> denotes the decoder hidden state at step <math>i</math>. The limitations of this approach are its difficulty with long-range dependencies and its lack of parallelization.

<math>p(y_i | y_1, \ldots, y_{i-1}) = g(y_{i-1}, l_i, c)</math> | |||

* Sequence-to-sequence with attention: | |||

Pass a separate context vector <math>c_i</math> to the decoder for each output position <math>i</math>.

<math>p(y_i | y_1, \ldots, y_{i-1}) = g(y_{i-1}, l_i, c_i)</math> | |||

[[Image:sqe2seq attn.png|thumb|800px|center|Sequence-to-Sequence Model with Attention]] | |||

The context vectors are computed in three steps:

1. Similarity score: | |||

<math>s_{ij} = similarity(l_{i-1}, h_j)</math> | |||

2. Attention weight: | |||

<math>a_{ij} = \frac{e^{s_{ij}}}{\sum_{k=1}^{T} e^{s_{ik}}}</math> | |||

The attention weight <math>a_{ij}</math> is obtained by applying a softmax function to the similarity scores. This normalizes the scores across all encoder hidden states, turning them into a probability distribution. | |||

3. Context vector: | |||

<math>c_i = \sum_{j=1}^{T} a_{ij} h_j</math> | |||

The score <math>s_{ij}</math> measures how well the inputs around position <math>j</math> match the output at position <math>i</math>.

This score is determined based on:

* The RNN hidden state <math>l_{i-1}</math> just before emitting <math>y_i</math>. | |||

* The <math>j^{th}</math> hidden state <math>h_j</math> of the input sentence. | |||
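The three steps (similarity score, attention weight, context vector) can be sketched as follows. The notes leave the similarity function unspecified, so a dot product is assumed here purely for illustration; the function name, shapes, and random data are also assumptions.

```python
import numpy as np

def attention_context(l_prev, H):
    """H has shape (T, d): one encoder hidden state h_j per row."""
    scores = H @ l_prev                        # s_ij, assuming a dot-product similarity
    weights = np.exp(scores - scores.max())    # subtract the max for numerical stability
    weights /= weights.sum()                   # a_ij: softmax over encoder positions j
    context = weights @ H                      # c_i = sum_j a_ij * h_j
    return weights, context

rng = np.random.default_rng(0)
H = rng.normal(size=(4, 3))      # T = 4 encoder states of dimension 3
l_prev = rng.normal(size=3)      # decoder state l_{i-1}
a, c = attention_context(l_prev, H)
```

The weights `a` sum to one, so the context vector `c` is a weighted average of the encoder hidden states.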

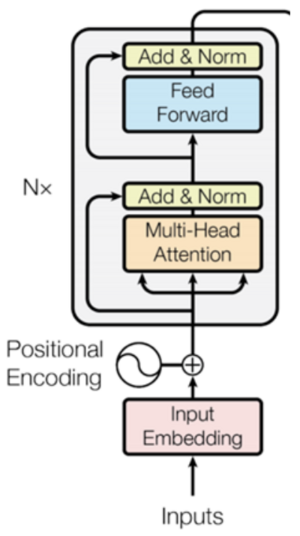

== Transformer Architecture == | |||

The basic concept behind transformers is attention, as introduced in the paper by Vaswani et al., [https://arxiv.org/abs/1706.03762 'Attention is all you need']. The paper claims that attention alone, arranged in a suitable structure, is enough to handle sequential data. The Transformer is the basis of GPT and many other models used in LLMs and image processing. It is an example of an encoder-decoder architecture. Unlike RNNs, Transformers can process sequences in parallel, making them faster to train on large datasets. Transformers have applications beyond NLP, such as Vision Transformers (ViT) in computer vision and protein structure prediction in biology (AlphaFold). Transformers' ability to capture long-range dependencies efficiently has made them the standard for many modern AI models.

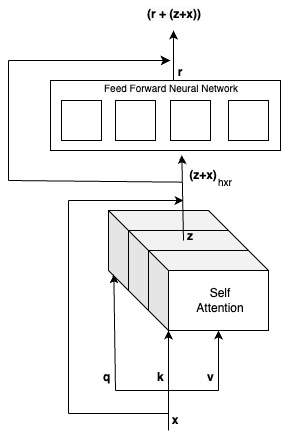

=== Encoder === | |||

The encoder consists of two main components: Self Attention and Feed Forward Neural Network. The architecture of the Encoder is given below: | |||

[[File:Encoder.png|center|thumb|Encoder architecture of the Transformer]] | |||

==== Self Attention ==== | |||

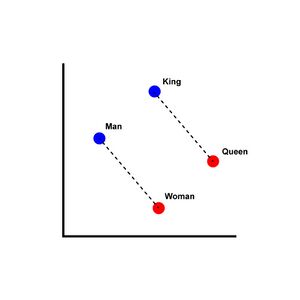

Understanding the individual words in a sentence is not enough to understand the whole sentence; one also needs to understand how the words relate to each other. The attention mechanism forms composite representations. We aim to have embeddings of words and embeddings of compositions at the same time, at different levels. Unlike word2vec, introduced in 2013 by Mikolov et al., which captures vector representations of words in an embedding space such that similar words are represented closer to each other, self-attention aims to capture the similarity between words based on context and in relation to each other. Multiple layers help form complex concept representations. For example, the context of the word "bank" differs depending on whether the surrounding words involve "money" or "river".

[[File:word2vec.jpg|center|thumb|Illustration of word2vec where "King" - "Man" + "Woman" produces a vector close to the word "Queen"]] | |||

Self-attention is analogous to the fundamental retrieval strategy in databases where given a query, and key-value pairs, we use the query to identify the key and retrieve the corresponding value. The generalized definition for calculating the attention of a target word with respect to the input word: use the '''Query''' of the target and the '''Key''' of the input and then calculate a matching score. These matching scores act as the weights of the '''Value''' vectors. | |||

Next we look at the definition in matrix form. Given an input vector <math>\mathbf{x} \in \mathbb{R}^d</math>, the weights for the query, key, and value transformations are defined as:

* <b>Query weight matrix</b>: <math>\mathbf{W}_q \in \mathbb{R}^{d \times p}</math> | |||

* <b>Key weight matrix</b>: <math>\mathbf{W}_k \in \mathbb{R}^{d \times p}</math> | |||

* <b>Value weight matrix</b>: <math>\mathbf{W}_v \in \mathbb{R}^{d \times r}</math> | |||

The transformations for the query (<math>\mathbf{q}</math>), key (<math>\mathbf{k}</math>), and value (<math>\mathbf{v}</math>) vectors are given by: | |||

* <b>Query vector</b>: <math>\mathbf{q} = \mathbf{W}_q^T \mathbf{x}</math> where <math>\mathbf{q} \in \mathbb{R}^{p \times 1}</math>, since <math>\mathbf{W}_q^T \in \mathbb{R}^{p \times d}</math>, and <math>\mathbf{x} \in \mathbb{R}^{d \times 1}</math> | |||

* <b>Key vector</b>: <math>\mathbf{k} = \mathbf{W}_k^T \mathbf{x}</math> where <math>\mathbf{k} \in \mathbb{R}^{p \times 1}</math>, since <math>\mathbf{W}_k^T \in \mathbb{R}^{p \times d}</math>, and <math>\mathbf{x} \in \mathbb{R}^{d \times 1}</math> | |||

* <b>Value vector</b>: <math>\mathbf{v} = \mathbf{W}_v^T \mathbf{x}</math> where <math>\mathbf{v} \in \mathbb{R}^{r \times 1}</math>, since <math>\mathbf{W}_v^T \in \mathbb{R}^{r \times d}</math>, and <math>\mathbf{x} \in \mathbb{R}^{d \times 1}</math> | |||

The transformations allow the attention mechanism to compute similarity scores between the query and key vectors and to use the value vectors to produce the final weighted output as <math>\mathbf{z_1} = \alpha_1 \mathbf{v}_1 + \alpha_2 \mathbf{v}_2 + \ldots + \alpha_n \mathbf{v}_n</math>, where <math>\mathbf{v}_i</math> are similar to the input in CNNs, and <math>\alpha_i</math> are similar to the kernels in CNNs. | |||

However, unlike the kernels in CNNs, the <math>\alpha_i</math>'s are data-dependent and given by: | |||

<math>\alpha_i = \text{softmax}\left(\frac{\mathbf{q}^T \mathbf{k}_i}{\sqrt{p}}\right)</math>. | |||

Extending this to the entire dataset, the equations are: | |||

<math> | |||

\mathbf{X} = [\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n] \in \mathbb{R}^{d \times n} | |||

</math> | |||

<math> | |||

\mathbf{Q} = [\mathbf{q}_1, \mathbf{q}_2, \ldots, \mathbf{q}_n] \in \mathbb{R}^{p \times n} | |||

</math> | |||

<math> | |||

\mathbf{K} = [\mathbf{k}_1, \mathbf{k}_2, \ldots, \mathbf{k}_n] \in \mathbb{R}^{p \times n} | |||

</math> | |||

<math> | |||

\mathbf{V} = [\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_n] \in \mathbb{R}^{r \times n} | |||

</math> | |||

The transformations are defined as: | |||

<math> | |||

\mathbf{Q}_{p \times n} = \left(\mathbf{W}_{q}^{T}\right)_{p \times d} \mathbf{X}_{d \times n}

</math> | |||

<math> | |||

\mathbf{K}_{p \times n} = \left(\mathbf{W}_{k}^{T}\right)_{p \times d} \mathbf{X}_{d \times n}

</math> | |||

<math> | |||

\mathbf{V}_{r \times n} = \left(\mathbf{W}_{v}^{T}\right)_{r \times d} \mathbf{X}_{d \times n}

</math> | |||

Therefore the output, | |||

<math> | |||

\mathbf{Z}_{r \times n} = \mathbf{V}_{r \times n} \cdot \text{softmax}\left(\frac{\mathbf{Q}^{T} \mathbf{K}}{\sqrt{p}}\right)_{n \times n} | |||

</math> | |||

Additionally, we have: | |||

<math> | |||

\mathbf{Q}^{T} \mathbf{K} = \mathbf{X}^{T} \mathbf{W}_{q} \mathbf{W}_{k}^{T} \mathbf{X} \quad \text{(an asymmetric kernel extracting the global similarity between words)} | |||

</math> | |||
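The matrix form can be sketched in NumPy with one token per column of <math>\mathbf{X}</math>. The sketch assumes that the softmax normalizes over the keys for each query, as in the per-word formula for <math>\alpha_i</math>; with tokens stored as columns this means the row-normalized weight matrix is transposed before multiplying by <math>\mathbf{V}</math>. The function names, sizes, and random weights are illustrative.

```python
import numpy as np

def softmax_rows(S):
    S = S - S.max(axis=1, keepdims=True)     # stabilize before exponentiating
    E = np.exp(S)
    return E / E.sum(axis=1, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """X is d x n (one token per column); returns Z of shape r x n."""
    Q, K, V = Wq.T @ X, Wk.T @ X, Wv.T @ X
    p = Q.shape[0]
    A = softmax_rows(Q.T @ K / np.sqrt(p))   # A[i, j]: weight of key j for query i
    return V @ A.T                           # column i of Z is sum_j A[i, j] * v_j

# Illustrative sizes and random weights (assumptions, not from the notes).
rng = np.random.default_rng(0)
d, n, p, r = 5, 4, 3, 2
X = rng.normal(size=(d, n))
Wq = rng.normal(size=(d, p))
Wk = rng.normal(size=(d, p))
Wv = rng.normal(size=(d, r))
Z = self_attention(X, Wq, Wk, Wv)
```

Each column of `Z` is a weighted average of the value vectors, with data-dependent weights that sum to one.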

Let us take a closer look at how one word is processed in the encoder. | |||

[[File:Encoder-Deeper-View.jpg|center|thumb|Deeper view of the Encoder]] | |||

The input vector <math>\mathbf{x}</math> is passed through a linear transformation layer which transforms the input into the query <math>\mathbf{q}</math>, key <math>\mathbf{k}</math>, and value <math>\mathbf{v}</math> vectors, given by the equations above. These are passed through a stacked multi-head self-attention layer, which extracts global information across pairs of words in the sequence to produce the output <math>\mathbf{Z}</math>, also given by the equation above. The equation for <math>\mathbf{Z}</math> can be compared to a database lookup, where a query is matched against keys to retrieve the corresponding values. This output is added to the residual input <math>\mathbf{x}</math> to preserve the meaning of individual words in addition to the pairwise representations. The sum is normalized to produce the output <math>\mathbf{(Z+x)} \in \mathbb{R}^{h \times r}</math>, which serves as the input to the feed forward neural network.

==== Feed Forward Neural Network ==== | |||

The feed forward network (FFN) consists of a first linear layer, followed by a ReLU activation and then a second linear layer. While the attention mechanism captures global information between words and hence aggregates across columns, the feed forward neural network aggregates across rows and takes a broader look at each word independently. Despite the individual processing, all positions share the same set of weights and biases in the FFN. In a classroom environment, the attention mechanism is similar to the teacher observing the interactions among students in a group, whereas the feed forward neural network resembles the teacher evaluating each student independently for their understanding of the assignment. The output <math>\mathbf{r}</math> of the feed forward layer also has a residual connection from the previous layer; the sum is normalized and passed as the input of the decoder as <math>\mathbf{(r+(z+x))}</math>. The encoder also has a positional encoding component which captures information about the position of words in a sequence.

While the above figure zooms in on how a word vector <math>\mathbf{x}</math> is processed by the encoder, the major advantage of the transformer architecture is its ability to handle multiple words in a sequence in parallel. In practice, the zoomed-in version of the encoder above is therefore replicated to handle a group of words in parallel.
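The position-wise nature of the FFN can be illustrated with a short NumPy sketch: the same two linear layers are applied to every column, so processing the whole sequence at once gives the same result as processing one position on its own. The function name, hidden size, and random weights are illustrative assumptions.

```python
import numpy as np

def feed_forward(H, W1, b1, W2, b2):
    """H is r x n (one position per column); the same weights act on every column."""
    hidden = np.maximum(0.0, W1 @ H + b1[:, None])   # linear layer 1 followed by ReLU
    return W2 @ hidden + b2[:, None]                 # linear layer 2

# Illustrative sizes and random weights (assumptions, not from the notes).
rng = np.random.default_rng(0)
r, n, m = 2, 4, 6
H = rng.normal(size=(r, n))
W1, b1 = rng.normal(size=(m, r)), rng.normal(size=m)
W2, b2 = rng.normal(size=(r, m)), rng.normal(size=r)

out_all = feed_forward(H, W1, b1, W2, b2)            # all positions in parallel
out_one = feed_forward(H[:, [2]], W1, b1, W2, b2)    # position 2 on its own
```

Here `out_all[:, [2]]` and `out_one` agree, which shows that the FFN mixes no information between positions.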

==== Global vs. Local Information ====

For Attention Mechanism: | |||

* '''Global Understanding''': Captures relationships among different positions in the sequence. | |||

* '''Context Aggregation''': Spreads relevant information across the sequence, enabling each position to see a broader context. | |||

For Feed-Forward Networks (FFN): | |||

* '''Local Processing''': While attention looks across the entire sequence, FFN zooms back in to process each position independently. | |||

* '''Individual Refinement''': Enhances the representation of each position based on its own value, refining the local information gathered so far. | |||

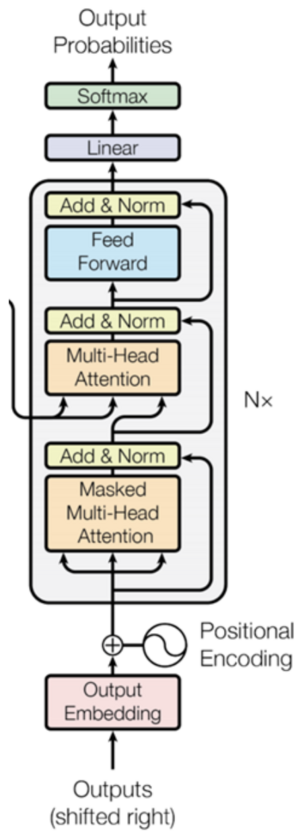

=== Decoder === | |||

The decoder consists of three main components: Masked Self Attention, Cross Attention and Linear Layer. The architecture of the Decoder is given below:

[[File:Transformer-Decoder.png|center|thumb|Decoder architecture of the Transformer]] | |||

==== Masked Self Attention ==== | |||

In masked self attention, we add a mask matrix <math>\mathbf{M} \in \mathbb{R}^{n \times n}</math> to the normalized argument within the softmax of <math>\mathbf{Z}</math> given by | |||

<math> | |||

\mathbf{Z}_{r \times n} = \mathbf{V}_{r \times n} \cdot \text{softmax}\left(\frac{\mathbf{Q}^{T} \mathbf{K} + \mathbf{M}}{\sqrt{p}}\right)_{n \times n} | |||

</math> | |||

The reason for adding a mask matrix M is to ensure that the output is informed only by the past words and not by the words further along in the sequence. The mask matrix therefore is given by: | |||

<math>M(i,j) = \begin{cases} | |||

0 & \text{if } j \leq i \\ | |||

-\infty & \text{if } j > i | |||

\end{cases}</math> | |||

This works because <math>softmax(x + 0)</math> is the same as <math>softmax(x)</math>, while an entry with <math>-\infty</math> added receives weight <math>e^{-\infty} = 0</math> after the softmax. The resulting matrix of attention weights is therefore triangular, with nonzero weights only on the current and previous words.
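The effect of the mask can be seen on a small example. In this NumPy sketch the row index <math>i</math> is taken to be the current position and the softmax is normalized over <math>j</math> within each row, which is an assumption about the convention; the score matrix is random and only stands in for <math>\mathbf{Q}^T \mathbf{K} / \sqrt{p}</math>.

```python
import numpy as np

n = 4
idx = np.arange(n)
# M[i, j] = 0 if j <= i, and -inf if j > i
M = np.where(idx[None, :] <= idx[:, None], 0.0, -np.inf)

rng = np.random.default_rng(0)
S = rng.normal(size=(n, n))                  # stand-in for Q^T K / sqrt(p)
masked = S + M
E = np.exp(masked - masked.max(axis=1, keepdims=True))  # exp(-inf) evaluates to 0
A = E / E.sum(axis=1, keepdims=True)         # row-wise softmax of the masked scores
```

Every entry of `A` above the diagonal is exactly zero, and the first row puts all of its weight on the first word.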

==== Cross Attention ==== | |||

The intuition behind cross attention is similar to sequence-to-sequence models, where the context vector is passed from the encoder to the decoder, capturing relevant information from the input sequence. The residual connection plus the output of the masked self attention is passed as the query to the cross attention block, whereas the keys and values are computed from the output of the encoder. In this way, the relationship and relevance between words in different sentences are captured.

==== Linear Layer ==== | |||

The linear layer is applied to the output of the feed forward neural network of the decoder, and its primary role is to adjust the dimensionality of the network to match the size of the vocabulary. It involves learning a set of parameters, a matrix of dimension <math> p \times len(vocab)</math>. Applying it to the <math> p \times 1</math> decoder output results in a <math> len(vocab) \times 1</math> vector, which is then passed through a softmax layer to predict the next word in the sequence.

==== Softmax Activation ==== | |||

The function transforms the linear layer's output into probabilities as mentioned above. The output represents the likelihood of a respective word being the next word in the sequence. | |||
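The last two steps of the decoder can be sketched as a projection to one score per vocabulary word followed by a softmax. The toy vocabulary, the dimension, and the random weights below are hypothetical and only serve to make the shapes concrete.

```python
import numpy as np

vocab = ["the", "cat", "sat", "mat"]          # hypothetical toy vocabulary
p = 3
rng = np.random.default_rng(0)
W_out = rng.normal(size=(p, len(vocab)))      # learned p x len(vocab) matrix
h = rng.normal(size=p)                        # decoder output for the current position

logits = W_out.T @ h                          # one score per vocabulary word
probs = np.exp(logits - logits.max())         # softmax, stabilized by subtracting the max
probs /= probs.sum()
next_word = vocab[int(np.argmax(probs))]      # most probable next word
```

Taking the most probable word is only one possible decoding rule; sampling from `probs` is another.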

=== Positional Encoding === | |||

So far, the encoder and decoder have no sense of the order of the words in the sequence. The sentences "I am a teacher", "Teacher I am", and "Am I a teacher?" are processed the same way, even though their meanings may differ. To circumvent this issue, which arises from the lack of convolution or recurrence operations, a positional encoding scheme is embedded within the encoder and decoder. In this encoding, the position of each word in a sequence is encoded by a vector. The even dimensions of this vector are given by sine waves and the odd dimensions by cosine waves of different frequencies, with values ranging from -1 at the troughs to 1 at the peaks. Thus, each position is encoded by a unique vector, and each such vector represents a specific position.
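A minimal NumPy sketch of the sinusoidal scheme from the original Transformer paper is given here, where dimension <math>2i</math> uses <math>\sin(pos / 10000^{2i/d})</math> and dimension <math>2i+1</math> uses the matching cosine. The function name and the `base` parameter are illustrative, and an even encoding dimension is assumed.

```python
import numpy as np

def positional_encoding(n_positions, d_model, base=10000.0):
    """Return an (n_positions, d_model) array; d_model is assumed to be even."""
    pos = np.arange(n_positions)[:, None]        # positions 0, 1, ..., n-1
    i = np.arange(0, d_model, 2)[None, :]        # even dimension indices 0, 2, 4, ...
    angles = pos / base ** (i / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)                 # even dimensions: sine
    pe[:, 1::2] = np.cos(angles)                 # odd dimensions: cosine
    return pe

pe = positional_encoding(50, 16)
```

Each row of `pe` is the encoding of one position and is added to the corresponding word embedding.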

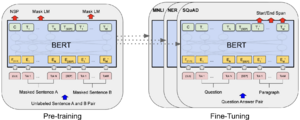

== Bidirectional Encoder Representations from Transformers (BERT) == | |||

[https://arxiv.org/abs/1810.04805 BERT], introduced by Google, is built by repeating the encoder of the transformer multiple times. The "Bidirectional" in BERT refers to its ability to attend to both future and past words in the sequence, unlike the Transformer decoder, which only looks at the past to make predictions about the future. The fundamental principles behind BERT are Masked Language Modeling and Next Sentence Prediction. The architecture of BERT is given below.

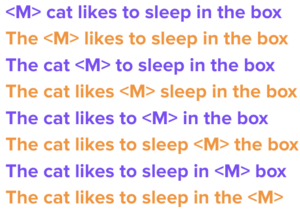

[[File:BERT.png|center|thumb|Masked Language Modeling]] | |||

=== Masked Language Modeling === | |||

BERT masks words in a corpus as shown in the figure below and makes the model learn to predict these words. It thereby pays attention to the words both before and after the masked word and fills in the blank given the entire context. The architecture is the same as a Transformer encoder, with 12 encoders (in contrast to the 6 in the original Transformer paper) stacked on top of each other. The output of the encoder is passed to a linear dense layer and a softmax to predict the most probable word from the vocabulary of the corpus.

[[File:MLM.png|center|thumb|Masked Language Modeling]] | |||

=== Next Sentence Prediction === | |||

BERT is also trained to identify whether, given two sentences A and B, B logically follows A. The weights of the pretrained BERT model can then be fine-tuned for downstream prediction tasks. The pre-trained model can also be used to provide a better representation of the input to a neural network for a task such as sentiment analysis. The [CLS] token is prepended to the input sequence and is used to capture the context of the whole sentence, so that during fine-tuning the prediction may be conditioned on the representation of the [CLS] token.

There are various flavors of BERT based on slight modifications to the original architecture and training process. An advantage of pretrained models is the opportunity to fine-tune them on a domain-specific corpus, leading to additional variants of BERT such as BioBERT, retrained on a biomedical corpus, and BERTweet, trained on around 850 million tweets.

== Transformers and GPT Models == | |||

=== Overview of Transformers === | |||

* Transformers consist of two main components: | |||

** Encoder | |||

** Decoder | |||

* BERT utilizes a stack of encoders, while GPT uses a stack of decoders. | |||

* GPT models are neural network-based language prediction models built on Transformer architecture. | |||

=== Decoder Structure === | |||

* The decoder has three parts: | |||

*# Masked Multi-Head Attention: Attends only to the left. | |||

*# Cross Attention: Attends to encoded inputs (removed in GPT). | |||

*# Feed Forward Neural Network. | |||

=== Differences Between BERT and GPT === | |||

* Both BERT and GPT rely on masking, but unlike BERT, which masks a word in the middle of the sentence and tries to predict it, GPT masks all the future words and tries to predict them.

* Training methods differ: | |||

** BERT masks tokens in sentences. | |||

** GPT predicts the next token in a sequence. | |||

=== Training Process === | |||

* In GPT, the model predicts the next token based on the previous tokens, treating the input as a sequence. | |||

* The first GPT model (2018) had 117 million parameters and was trained on 7,000 books. | |||

* It was seen that larger models tend to generalize better and have better "emergent abilities", although the reason is still unclear. | |||

=== Evolution of GPT Models === | |||

* GPT-2 (2019) had 1.5 billion parameters. | |||

* GPT-3 (2020) had 175 billion parameters. | |||

* Larger models tend to generalize better. This trend suggests that increasing model size allows for deeper contextual understanding. | |||

=== Chain of Thought Reasoning === | |||

* Chain-of-thought reasoning, used with models such as GPT-4, lets the model generate intermediate steps in reasoning tasks. It involves multi-step thinking: the model generates a series of logical steps to arrive at the final answer, rather than answering in a single sentence.

* Example: Problem: What is the result of 5 + 5 + 5? It is clear to see the answer is 15. With the chain of thought reasoning - Step 1: The first 5 plus the second 5 gives 10 (i.e. <math>5+5=10</math>). Step 2: add the last 5 to the result from Step 1 (i.e. <math>10+5=15</math>). | |||

=== Alignment and Instruction Following === | |||

* ChatGPT is designed to follow instructions and align with user preferences, addressing issues like harmful or politically incorrect outputs. | |||

* InstructGPT is a variant of GPT that focuses on following instructions using human feedback via Reinforcement Learning from Human Feedback (RLHF). | |||

=== Text-to-Text Transfer Transformer (T5) ===

* T5 combines encoder and decoder structures and treats various NLP tasks as text-to-text problems, allowing for diverse applications like translation and summarization. | |||

* NLP tasks such as sentiment analysis which were primarily treated as classification problems are cast as text-to-text problems. | |||

* Next span prediction is used where a set of sequential tokens are removed and replaced with sentinel tokens. | |||

* T5 architecture operates on an encoder-decoder model and encoder input padding can be performed on both left and right. | |||

=== Training Techniques in T5 === | |||

* T5 masks spans of tokens rather than individual tokens, focusing on global context rather than local dependencies. 15% of tokens are randomly removed and replaced with sentinel tokens. | |||

=== Benchmarking and Performance === | |||

* Models are often evaluated against benchmarks like GLUE and SuperGLUE, which consist of various NLP tasks. | |||

=== Training and Fine-Tuning LLMs === | |||

* LLMs are trained on massive datasets of unlabeled text using unsupervised learning. | |||

* The training process involves predicting the next token in a sequence. | |||

* Fine-tuning is done on labeled data to align the model with specific tasks or instructions. This process is called Supervised Fine-Tuning (SFT). | |||

=== Aligning LLMs with Human Feedback === | |||

* Reinforcement Learning from Human Feedback (RLHF) is used to ensure LLMs produce safe and ethical outputs.

* RLHF involves generating multiple responses for a given prompt and having humans rank them. | |||

* A reward model is trained on this ranked data to predict the quality of a response. | |||

* The original LLM is then fine-tuned using this reward model. | |||

* RLHF was introduced in 2017 and has been widely used in LLMs like ChatGPT. | |||

=== Direct Preference Optimization (DPO) === | |||

* DPO is a newer approach that aims to replace RLHF. | |||

* It optimizes the model directly on human preferences between different responses, eliminating the need for a separate reward model.

* DPO is easier to implement and more stable than RLHF. | |||

=== Project Ideas for Deep Learning === | |||

# Enhancing Speculative Decoding for Faster Large Language Modeling | |||

#* This project aims to improve the speed of LLMs by using techniques like rejection sampling and importance sampling. | |||

#* The goal is to find alternative sampling methods to rejection sampling, which is computationally expensive. | |||

# Reducing the Computational Complexity of Transformers | |||

#* Transformers are computationally expensive, especially for long sequences. | |||

#* This project explores methods like Orchid, which uses data-dependent convolution to reduce complexity.

#* The goal is to develop more efficient transformers that can handle longer sequences. | |||

# Diffusion Decoding for Peptide De Novo Sequencing | |||

#* Peptide sequencing is a crucial problem in bioinformatics, involving determining the amino acid sequence of a peptide. | |||

#* This project proposes using diffusion models for peptide sequencing, replacing the traditional GPT-based approach. | |||

#* Diffusion models can potentially improve accuracy by predicting all tokens simultaneously, rather than sequentially. | |||

# Using Orchid for DNA Analysis

#* This project explores using Orchid, a model that can handle longer sequences, for DNA analysis.

#* The goal is to leverage Orchid’s ability to handle long sequences to improve DNA analysis tasks.

# Symbolic Regression with Diffusion Models | |||

#* Symbolic regression involves finding a mathematical formula that best fits a given dataset. | |||

#* This project proposes using diffusion models for symbolic regression, potentially improving the accuracy and efficiency of the process. | |||

== Transformers and Variational Autoencoders == | |||

=== Large Language Models (LLMs) and Transformers === | |||

==== Key Components of Transformers ==== | |||

* Attention Mechanism Formula: The core of the attention mechanism is computed using the following formula: | |||

<center><math display=block>V \, \text{softmax} \left( \frac{Q^T K}{\sqrt{p}} \right)_{n \times n}</math></center>

* Dimensions: | |||

** <math>X</math> is a <math display=inline>d \times n</math> matrix, where <math>n</math> is the sequence length and <math>d</math> is the data dimensionality.

** <math>Q</math>, <math>K</math> and <math>V</math> are the query, key, and value matrices, respectively, and they are derived from <math>W_Q</math>, <math>W_K</math> and <math>W_V</math>. | |||

** Given a sequence <math>X = \begin{bmatrix} x_1 & \cdots & x_n \end{bmatrix}_{d \times n}</math> we define: | |||

*** <math>Q = W_Q^T X \quad \text{(p x n)}, \quad W_Q^T \in \mathbb{R}^{p \times d}, \quad X \in \mathbb{R}^{d \times n}</math> | |||

*** <math>K = W_K^T X \quad \text{(p x n)}, \quad W_K^T \in \mathbb{R}^{p \times d}</math> | |||

*** <math>V = W_V^T X \quad \text{(m x n)}, \quad W_V^T \in \mathbb{R}^{m \times d}</math> | |||

==== Recap of Transformers ==== | |||

* Building Blocks of LLMs: Transformers. | |||

* Computational Complexity: | |||

** Issue: Transformers have quadratic complexity with respect to sequence length.

** Reason: Complexity arises due to calculations in the attention mechanism. | |||

==== Approaches to Reduce Complexity ==== | |||

* <b>Issue</b>: Long sequences result in an <math>n \times n</math> matrix, making computation demanding. | |||

* <b>Solution</b>: Techniques like the Performer model approximate attention, reducing complexity to linear time. | |||

=== Performer Model === | |||

==== Overview ==== | |||

* <b>Objective</b>: Address quadratic complexity in transformers by approximating attention using kernel methods. | |||

* <b>Kernel Approximation</b>: Utilizes random features to approximate kernels, e.g., Gaussian kernels. The kernel function <math>K(x, y)</math> is defined as: | |||

<center><math display=block>K_{\text{Gauss}}(x, y) = \varphi(x)^T \varphi(y)</math></center> | |||

* For the Gaussian kernel approximation, the transformation <math>\varphi_{\text{Gauss}}(x)</math> is given by: | |||

<center><math display=block>\varphi_{\text{Gauss}}(x) = \frac{1}{\sqrt{r}}\begin{pmatrix} \sin(\omega_1^T x), & \sin(\omega_2^T x), & \dots, & \sin(\omega_r^T x), \cos(\omega_1^T x), & \cos(\omega_2^T x), & \dots, & \cos(\omega_r^T x)\end{pmatrix}</math></center> | |||

* In general for kernel <math>K</math>: | |||

<center><math display=block>\phi(x) = \frac{h(x)}{\sqrt{ r }}(f_{1}(w_{1}^Tx) \dots f_{1}(w_{r}^Tx) \dots f_{l}(w_{1}^Tx) \dots f_{l}(w_{r}^Tx)) \quad \text{(l x r)}</math></center> | |||
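The quality of the Gaussian random-feature approximation can be checked numerically. The sketch below assumes the frequencies <math>\omega_i</math> are drawn from a standard normal distribution, which is the usual choice for the kernel <math>e^{-\frac{1}{2}\lVert x - y \rVert^2}</math>; the function name, the number of features, and the test vectors are illustrative.

```python
import numpy as np

def phi_gauss(x, omega):
    """Random features (sin and cos); omega has shape (r, d) with rows from N(0, I)."""
    proj = omega @ x
    return np.concatenate([np.sin(proj), np.cos(proj)]) / np.sqrt(omega.shape[0])

rng = np.random.default_rng(0)
d, r = 4, 20000
omega = rng.normal(size=(r, d))                  # assumed sampling distribution
x = np.array([0.3, -0.2, 0.1, 0.4])
y = np.array([0.1, 0.5, -0.3, 0.2])

approx = phi_gauss(x, omega) @ phi_gauss(y, omega)   # inner product of feature maps
exact = np.exp(-0.5 * np.sum((x - y) ** 2))          # Gaussian kernel value
```

The approximation error shrinks roughly like <math>1/\sqrt{r}</math> as more random features are used.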

==== Advantages ====

* '''Scalability''': Performers are highly scalable for very long sequences.

* '''Memory Efficiency''': Performers drastically reduce memory usage by linearizing attention.

* '''Robust Performance''': Despite the approximation, Performers retain high accuracy and performance, comparable to standard transformers.

==== Application ==== | |||

Performers are beneficial in applications requiring efficient processing of long sequences, such as natural language processing, DNA sequence analysis, and other domains where traditional transformers are computationally prohibitive. | |||

=== Softmax and Attention Mechanism in Transformers === | |||

==== Softmax Formula ==== | |||

* In the context of transformers, the softmax function is used to normalize the attention scores: | |||

<center><math display=block>\text{SM}[s]_i = \frac{e^{s_i}}{\sum_j e^{s_j}}</math></center> | |||

* To calculate the softmax numerically, we can consider: | |||

<center> | |||

<math>e^{Q^T K} = A</math> | |||

</center> | |||

<center> | |||

<math>d = A_{n \times n} \begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix}_{n \times 1}</math>

</center> | |||

<center> | |||

<math>D = \operatorname{diag}(d) = \begin{bmatrix} d_1 & 0 & \cdots & 0 \\ 0 & d_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & d_n \end{bmatrix}_{n \times n}</math> | |||

</center> | |||

<center> | |||

<math>\operatorname{SM}[s]_i = \frac{e^{s_i}}{\sum_j e^{s_j}} = A D^{-1}</math> | |||

</center> | |||
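As a small numerical illustration of the construction above, the sketch below builds <math>A</math>, <math>d</math>, and <math>D</math> explicitly and compares the result with a row-wise softmax. It assumes that the columns of <math>Q</math> and <math>K</math> are the queries and keys, so that row <math>i</math> of <math>A</math> holds the scores of query <math>i</math> and the normalization is <math>D^{-1}A</math>; the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n = 3, 5                            # illustrative sizes (assumption)
Q = rng.standard_normal((d_model, n))        # columns are queries (assumed layout)
K = rng.standard_normal((d_model, n))        # columns are keys (assumed layout)

A = np.exp(Q.T @ K)                          # A[i, j] = exp(q_i^T k_j)
d = A @ np.ones(n)                           # d = A 1: row sums of A
D_inv = np.diag(1.0 / d)                     # inverse of D = diag(d)
attn = D_inv @ A                             # row-normalized attention weights

# Reference: numerically stable row-wise softmax of the score matrix
S = Q.T @ K
ref = np.exp(S - S.max(axis=1, keepdims=True))
ref /= ref.sum(axis=1, keepdims=True)
print(np.allclose(attn, ref))
```

Forming <math>A</math> explicitly costs <math>O(n^2)</math> time and memory, which is the cost the Performer aims to avoid.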

* We want to be able to do the matrix multiplication in the following way, which has linear time complexity for long sequences in transformers: | |||

<center><math>\underbrace{V}_{m \times n} \; \underbrace{\Phi^T}_{n \times r'} \; \underbrace{\Phi}_{r' \times n} \quad \text{with result of dimension} \quad m \times n</math></center>
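The benefit of this factorization comes from the order of multiplication. The sketch below uses a random matrix as a stand-in for <math>\Phi</math> (an assumption made only to show shapes and cost) and checks that <math>(V \Phi^T)\Phi</math> gives the same result as <math>V(\Phi^T \Phi)</math> without ever forming an <math>n \times n</math> matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, r = 8, 500, 16                         # illustrative sizes, with r << n
V = rng.standard_normal((m, n))
Phi = rng.standard_normal((r, n))            # stand-in for the feature matrix (assumption)

# Quadratic route: Phi^T Phi is n x n, so cost and memory grow as n^2
quadratic = V @ (Phi.T @ Phi)

# Linear route: V Phi^T is only m x r, so cost grows as n * r
small = V @ Phi.T
linear = small @ Phi

print(small.shape, np.allclose(quadratic, linear))
```

Both routes return the same <math>m \times n</math> matrix by associativity; only the intermediate sizes differ.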

==== Steps in Kernel Approximation ==== | |||

* <b>Kernel Formulation</b>: Approximate <math>\phi(x)^T \phi(y)</math> as a function of random vectors. | |||

* <b>Random Feature Transformation</b>: Performers use sinusoidal functions (sine and cosine) as random features for approximating similarity between sequences. | |||

* The softmax can also be approximated using random features, with a specific <math>\varphi(x)</math> tailored for softmax. | |||

<center><math>e^{-\frac{1}{2}\lVert x - y \rVert^2} = e^{-\frac{(x - y)^T (x - y)}{2}} = e^{-\frac{x^T x + y^T y - 2 x^T y}{2}} = e^{-\frac{x^T x}{2}} \cdot e^{-\frac{y^T y}{2}} \cdot e^{x^T y}</math></center> | |||

<center><math>e^{x^T y} = e^{-\frac{1}{2}\lVert x - y \rVert^2} \cdot e^{\frac{x^T x}{2}} \cdot e^{\frac{y^T y}{2}} \approx \varphi_{\text{Gauss}}(x)^T \varphi_{\text{Gauss}}(y) \cdot e^{\frac{x^T x}{2}} \cdot e^{\frac{y^T y}{2}} = \left( e^{\frac{x^T x}{2}} \varphi_{\text{Gauss}}(x) \right)^T \left( e^{\frac{y^T y}{2}} \varphi_{\text{Gauss}}(y) \right)</math></center>

* So, the kernel approximation for the softmax is: | |||

<center><math>\varphi_{\text{SM}}(x) = \frac{e^{\frac{x^T x}{2}}}{\sqrt{r}} \begin{pmatrix} \sin(\omega_1^T x), & \sin(\omega_2^T x), & \dots, & \sin(\omega_r^T x), \cos(\omega_1^T x), & \cos(\omega_2^T x), & \dots, & \cos(\omega_r^T x)\end{pmatrix}</math></center> | |||
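The softmax feature map can be checked in the same way as the Gaussian one. This is a minimal sketch, assuming <math>\omega_i \sim N(0, I)</math>; the function name <code>softmax_random_features</code> and the input scale are illustrative.

```python
import numpy as np

def softmax_random_features(x, omega):
    """phi_SM(x): Gaussian random features rescaled by exp(x^T x / 2)."""
    r = omega.shape[0]
    proj = omega @ x                         # (r,): entries omega_i^T x
    scale = np.exp(0.5 * (x @ x)) / np.sqrt(r)
    return scale * np.concatenate([np.sin(proj), np.cos(proj)])

rng = np.random.default_rng(1)
d, r = 4, 50000                              # illustrative sizes (assumption)
omega = rng.standard_normal((r, d))          # assumed: omega_i ~ N(0, I)
x = 0.3 * rng.standard_normal(d)
y = 0.3 * rng.standard_normal(d)

approx = float(softmax_random_features(x, omega) @ softmax_random_features(y, omega))
exact = float(np.exp(x @ y))                 # unnormalized softmax kernel e^{x^T y}
print(approx, exact)
```

Because the estimate is multiplied by <math>e^{\frac{x^T x}{2}} e^{\frac{y^T y}{2}}</math>, its error grows with the norms of <math>x</math> and <math>y</math>, which is why small inputs are used here.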

=== Variational Auto-encoders (VAEs) === | |||

* <b>Overview</b>: VAEs are a type of generative model that extends traditional auto-encoders by introducing stochastic elements. While traditional auto-encoders are deterministic and focus on reconstructing inputs, VAEs learn a distribution over the latent space. This probabilistic framework enables them to capture complex data distributions and to generate new samples.

==== Background: Auto-encoders ==== | |||

* <b>Basic Structure</b>: Consists of an encoder, a bottleneck layer, and a decoder. | |||

* <b>Objective</b>: The encoder compresses the input <math>x</math> into a lower-dimensional representation (e.g., <math>z = u^Tx</math> for a linear encoder), and the decoder reconstructs the input from it (<math>\hat{x} = uz</math>). Training minimizes the difference between <math>x</math> and its reconstruction: <math>\min \lVert x - \hat{x} \rVert</math>

* <b>PCA Connection</b>: A simple, linear auto-encoder resembles PCA in dimensionality reduction. | |||

* <b>Limitation</b>: While VAEs are powerful, they may struggle to capture highly complex data distributions compared to other generative models such as GANs.
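The PCA connection mentioned above can be illustrated with a linear auto-encoder whose weights are set to the top principal directions rather than learned by gradient descent. This is a sketch under that assumption; the data and the sizes <code>n</code>, <code>d</code>, <code>k</code> are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k = 200, 5, 2                          # samples, input dim, bottleneck dim
X = rng.standard_normal((n, d)) @ rng.standard_normal((d, d))
X = X - X.mean(axis=0)                       # center the data, as PCA assumes

# Rows of Vt are the principal directions of the centered data
_, s, Vt = np.linalg.svd(X, full_matrices=False)
U = Vt[:k].T                                 # (d, k) with orthonormal columns

Z = X @ U                                    # encoder: z = U^T x for each row
X_hat = Z @ U.T                              # decoder: x_hat = U z
recon_error = float(np.sum((X - X_hat) ** 2))

# For the PCA solution the error equals the energy in the discarded directions
discarded = float(np.sum(s[k:] ** 2))
print(recon_error, discarded)
```

Any other <math>k</math>-dimensional linear encoder and decoder pair would give a reconstruction error at least this large, which is the sense in which a linear auto-encoder resembles PCA.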

==== Moving to Variational Auto-encoders ==== | |||

* <b>Generative Model Aspect</b>: Variational Auto-encoders enforce a specific distribution on <math>z</math>, allowing them to generate new samples. | |||

* <b>Gaussian Distribution Constraint</b>: By enforcing a Gaussian distribution on <math>z</math>, the model can generate new samples from the learned data distribution. A Variational Auto-encoder therefore introduces a prior distribution (typically Gaussian) on <math>z</math> and maximizes the Evidence Lower Bound (ELBO) rather than the reconstruction quality alone.

==== Approximation of the Posterior Distribution ==== | |||

<center><math>p(z|x) = \frac{p(x|z) \, p(z)}{p(x)}</math></center>

<center><math>p(x) = \int_z p(x|z) \, p(z) \, dz</math></center>

* <math>\mathbf{q_{\theta}(z)}</math> is the approximate posterior distribution of the latent variable <math>z</math>, parameterized by <math>\theta</math>. | |||

* <b>Purpose of</b> <math>\mathbf{q_{\theta}(z)}</math>: In a VAE, we aim to learn a latent variable <math>z</math> that represents the underlying structure of the data <math>x</math>. Ideally, we want the true posterior <math>p(z|x)</math>, which is often intractable to compute directly. To address this, we approximate <math>p(z|x)</math> with a simpler distribution <math>q_{\theta}(z|x)</math>, where <math>\theta</math> represents the parameters (typically learned through a neural network). | |||

* <b>Key Points about</b> <math>\mathbf{q_{\theta}(z)}</math>: | |||

** <b>Approximate Posterior</b>: <math>q_{\theta}(z|x)</math> is an approximation of the true posterior <math>p(z|x)</math>. | |||

** <b>Parameterized by</b> <math>\mathbf{\theta}</math>: The parameters <math>\theta</math> define the structure of this distribution, often through a neural network in a VAE. | |||

** <b>Optimization</b>: During training, we optimize <math>\theta</math> to make <math>q_{\theta}(z|x)</math> as close as possible to <math>p(z|x)</math> by minimizing the KL divergence between <math>q_{\theta}(z|x)</math> and <math>p(z|x)</math>: <math>\min_{\theta} \text{KL} \left( q_{\theta}(z|x) \| p(z|x) \right)</math>

==== Information Theory ==== | |||

In VAEs, the model learns a latent space where each data point is mapped not to a single point, but to a probability distribution. This design choice allows the VAE to:

* Capture the information content of the input data in a compressed, structured form.
* Represent the uncertainty in the data by encoding it as a distribution rather than as a single deterministic vector.

The VAE learns this by balancing reconstruction accuracy with the information-theoretic regularization of the latent space, which enforces a smooth and organized representation.

* <b>Information content</b>: | |||

** <math>I</math>, represents the information content or self-information of an event with probability <math>p(x)</math>. It quantifies how much “surprise” or “information” is associated with a specific outcome <math>x</math> occurring. | |||

** The formula for information content <math>I</math> of an event <math>x</math> is: <math>I = -\log p(x)</math> | |||

** <math>I</math> is higher for less probable events (low <math>p(x)</math>), indicating more “surprise” or “information” content when that event occurs. | |||

** In information theory, this concept helps quantify the amount of information gained from observing an event, with rare events providing more information than common ones. | |||

** For example, the sentence "Tomorrow, it either rains, or not." is always true, so <math>p(x) = 1</math> and <math>I = -\log(1) = 0</math>: the sentence carries no information.

* <b>Entropy</b>: | |||

** <math>H</math> represents entropy. Entropy measures the average amount of information (or “uncertainty”) contained in a random variable. It is often used to quantify the uncertainty or unpredictability of a probability distribution. | |||

** The formula for entropy <math>H</math> of a discrete random variable <math>x</math> with a probability distribution <math>p(x)</math> is: <math>H = -\sum p(x) \log p(x)</math> | |||

** <math>H</math> is maximized when the distribution is most uncertain (e.g., in a uniform distribution). | |||

** In the context of VAEs, entropy is used to understand the distribution of latent variables and how much “information” or “uncertainty” they contain. | |||

* <b>KL divergence</b>: | |||

** KL divergence stands for the Kullback-Leibler divergence between two probability distributions <math>q</math> and <math>p</math>. It measures how much a probability distribution <math>q</math> diverges from a second, reference probability distribution <math>p</math>.

** The KL divergence from <math>q(x)</math> to <math>p(x)</math> is defined as: <math>\text{KL}(q || p) = \sum q(x) \log q(x) - \sum q(x) \log p(x) = \sum_x q(x) \log \frac{q(x)}{p(x)}</math> | |||

** KL divergence is not symmetric; <math>\text{KL}(q \| p) \neq \text{KL}(p \| q)</math>. This means that it matters which distribution we consider as the reference. | |||

** KL divergence is always non-negative, <math>\text{KL}(q \| p) \geq 0</math>, and equals zero if and only if <math>q = p</math> (almost everywhere). This is known as Gibbs’ inequality. | |||

** In variational inference and variational auto-encoders (VAEs), KL divergence is used to measure the difference between the approximate posterior <math>q_{\theta}(z|x)</math> and the true posterior <math>p(z|x)</math>. Minimizing <math>\text{KL}(q_{\theta}(z|x) \| p(z|x))</math> helps make the approximation <math>q_{\theta}(z|x)</math> closer to the true posterior.

** <math>\text{KL}(q \| p)</math> quantifies the “information loss” incurred when <math>p(x)</math> is used to approximate <math>q(x)</math>. In essence, it tells us how much extra information (or “surprise”) is needed, on average, to describe samples from <math>q</math> using a code optimized for <math>p</math>.

** For the continuous space: | |||

<center><math>\text{KL}(q(z) \| p(z|x)) = -\int q(z) \log \frac{p(z|x)}{q(z)} \, dz</math></center> | |||

<center><math>p(z|x) = \frac{p(x|z) \, p(z)}{p(x)}</math></center> | |||

<center><math>p(x|z) \, p(z) = p(x,z)</math></center> | |||

<center><math>\text{KL}(q(z) \| p(z|x))= -\int q(z) \log \frac{p(x, z)}{q(z) \cdot p(x)} \, dz</math></center> | |||

<center><math>= -\int q(z) \left[ \log \frac{p(x, z)}{q(z)} + \log \frac{1}{p(x)} \right] dz</math></center> | |||

<center><math>= -\int q(z) \log \frac{p(x, z)}{q(z)} \, dz + \int q(z) \log p(x) \, dz = -\int q(z) \log \frac{p(x, z)}{q(z)} \, dz + \log p(x) \int q(z) \, dz</math></center> | |||

<center><math>\int q(z) \, dz = 1</math></center> | |||

<center><math>\log p(x) = \text{KL}(q(z) \| p(z|x)) + \int q(z) \log \frac{p(x, z)}{q(z)} \, dz = \text{KL}(q(z) \| p(z|x)) + \text{ELBO}</math></center> | |||

<center><math>\text{ELBO} = \int q(z) \log \frac{p(x, z)}{q(z)} \, dz</math></center> | |||

<center><math>= \int q(z) \log \frac{p(x|z) \, p(z)}{q(z)} \, dz</math></center> | |||

<center><math>= \int q(z) \left[ \log p(x|z) + \log \frac{p(z)}{q(z)} \right] dz</math></center> | |||

<center><math>= \int q(z) \log p(x|z) \, dz + \int q(z) \log \frac{p(z)}{q(z)} \, dz</math></center> | |||

<center><math>= \mathbb{E}_{q(z)}[\log p(x|z)] - \text{KL}(q(z) \| p(z))</math></center> | |||

<center><math>\text{ELBO} = \mathbb{E}_{q_{\theta}(z|x)}[\log p(x|z)] - \text{KL}(q_{\theta}(z|x) \| p(z))</math></center>
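The identities derived above can be verified on a small discrete example, where the integrals become sums. The sketch below uses a made-up three-state latent variable and a single fixed observation <math>x</math>; all probability values are illustrative assumptions.

```python
import numpy as np

def entropy(p):
    """H = -sum p log p for a discrete distribution with no zero entries."""
    return float(-np.sum(p * np.log(p)))

def kl(q, p):
    """KL(q || p) = sum q log(q / p) for discrete distributions."""
    return float(np.sum(q * np.log(q / p)))

# Toy model: z takes three values, x is one fixed observation (made-up numbers)
p_z = np.array([0.5, 0.3, 0.2])              # prior p(z)
p_x_given_z = np.array([0.9, 0.2, 0.1])      # likelihood p(x|z) at the observed x
p_xz = p_x_given_z * p_z                     # joint p(x, z)
p_x = float(p_xz.sum())                      # evidence p(x)
posterior = p_xz / p_x                       # true posterior p(z|x)

q = np.array([0.6, 0.3, 0.1])                # an arbitrary approximate posterior
elbo = float(np.sum(q * np.log(p_xz / q)))
elbo_decomposed = float(np.sum(q * np.log(p_x_given_z))) - kl(q, p_z)
gap = kl(q, posterior)                       # KL(q(z) || p(z|x))

print(np.log(p_x), gap + elbo)               # log p(x) = KL + ELBO
print(elbo, elbo_decomposed)                 # ELBO = E_q[log p(x|z)] - KL(q || p(z))
print(entropy(p_z), entropy(np.ones(3) / 3)) # the uniform distribution has maximal entropy
```

Since the KL term is non-negative, the ELBO never exceeds <math>\log p(x)</math>, and the two are equal exactly when <math>q</math> is the true posterior.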

==== KL Divergence and Evidence Lower Bound (ELBO) ==== | |||

* <b>KL Divergence</b>: Measures the dissimilarity between two distributions; it is minimized to bring <math>q</math> close to <math>p</math>.

* <b>Evidence Lower Bound (ELBO)</b>: | |||

** Variational auto-encoders maximize the ELBO. Because <math>\log p(x) = \text{KL}(q(z) \| p(z|x)) + \text{ELBO}</math> and <math>\log p(x)</math> does not depend on <math>q</math>, maximizing the ELBO is equivalent to minimizing the KL divergence.

** Maximizing the ELBO therefore makes <math>q</math> a close approximation of the true posterior, while its regularization term keeps the learned latent distribution close to the prior. This is what allows VAEs to generate new samples by sampling from the latent space.

==== ELBO Decomposition ==== | |||

* <b>ELBO Equation</b>: <math>\text{ELBO} = \mathbb{E}_{q(z)}[\log p(x|z)] - \text{KL}(q(z) \| p(z))</math>

* First Term: Reconstruction likelihood (maximize this). | |||

* Second Term: Ensures latent variable <math>z</math> approximates the desired distribution. | |||

==== Practical Implementation: Reconstruction Loss and Gaussian Constraint ==== | |||

* <b>Objective in VAE Training</b>: | |||

** Minimize reconstruction loss (similar to traditional auto-encoders). | |||

** Ensure <math>z</math> follows a Gaussian distribution. | |||

* <b>Regularization Term in ELBO</b>: In VAEs, the Evidence Lower Bound (ELBO) includes a KL divergence term to ensure that the learned latent distribution remains close to a prior distribution (e.g., a Gaussian <math>p(z) = N(0, I)</math>). | |||
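When <math>q(z)</math> is a Gaussian with diagonal covariance and the prior is <math>N(0, I)</math>, the KL term has the closed form <math>\tfrac{1}{2} \sum_i \left( \sigma_i^2 + \mu_i^2 - 1 - \log \sigma_i^2 \right)</math>. The sketch below assumes that parameterization (a mean <code>mu</code> and a log-variance <code>log_var</code>, both hypothetical names) and compares the closed form against a Monte Carlo estimate.

```python
import numpy as np

def kl_diag_gaussian_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * float(np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var))

mu = np.array([0.5, -1.0])                   # illustrative encoder outputs
log_var = np.array([0.0, -0.5])
closed_form = kl_diag_gaussian_to_standard_normal(mu, log_var)

# Monte Carlo check: average of log q(z) - log p(z) over samples z ~ q
rng = np.random.default_rng(0)
z = mu + np.exp(0.5 * log_var) * rng.standard_normal((200_000, 2))
log_q = -0.5 * np.sum(np.log(2 * np.pi) + log_var + (z - mu) ** 2 / np.exp(log_var), axis=1)
log_p = -0.5 * np.sum(np.log(2 * np.pi) + z ** 2, axis=1)
monte_carlo = float(np.mean(log_q - log_p))

print(closed_form, monte_carlo)
```

The closed form is zero when <code>mu</code> is zero and <code>log_var</code> is zero, that is, when <math>q</math> already equals the prior.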

==== Re-parameterization Trick ==== | |||

* <b>Challenge</b>: The stochastic nature of <math>z</math> complicates backpropagation. | |||

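* <b>Solution</b>: To make the stochastic layer differentiable, VAEs use the re-parameterization trick, where <math>z</math> is expressed as a deterministic function of the encoder outputs plus a noise component: <math>z = \mu + \sigma \odot \epsilon</math> with <math>\epsilon \sim N(0, I)</math>. The randomness is then isolated in <math>\epsilon</math>, so gradients can flow through <math>\mu</math> and <math>\sigma</math>. With this re-parameterization we can use gradient-based optimization to minimize:

<center><math>\min \left( \lVert x - \hat{x} \rVert^2 + \text{KL}(q(z) \| N(0, I)) \right)</math></center>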
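The effect of re-parameterization on gradients can be seen in a one-dimensional example. The sketch below assumes <math>z = \mu + \sigma \epsilon</math> with <math>\epsilon \sim N(0, 1)</math> and uses the test function <math>f(z) = z^2</math>, chosen only because its expected value <math>\mu^2 + \sigma^2</math> has known gradients <math>2\mu</math> and <math>2\sigma</math>.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, sigma = 1.5, 0.7                         # illustrative parameter values
eps = rng.standard_normal(200_000)           # noise, independent of mu and sigma
z = mu + sigma * eps                         # re-parameterized sample, z ~ N(mu, sigma^2)

# f(z) = z^2, so df/dz = 2z; the chain rule goes through z = mu + sigma * eps
grad_mu = float(np.mean(2 * z * 1.0))        # dz/dmu = 1
grad_sigma = float(np.mean(2 * z * eps))     # dz/dsigma = eps

print(grad_mu, 2 * mu)                       # estimate vs exact gradient w.r.t. mu
print(grad_sigma, 2 * sigma)                 # estimate vs exact gradient w.r.t. sigma
```

Sampling <math>z</math> directly from <math>N(\mu, \sigma^2)</math> would give the same samples but no path from <math>z</math> back to <math>\mu</math> and <math>\sigma</math>, which is why the noise is drawn separately.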

==== Reverse Processes ==== | |||