Robust Imitation Learning from Noisy Demonstrations

| (15 intermediate revisions by the same user not shown) | |||

| Line 30: | Line 30: | ||

=== Imitation Learning via Risk Optimization === | === Imitation Learning via Risk Optimization === | ||

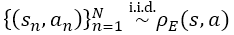

Under the assumption of the Mixture state-action density, | Under the assumption of the Mixture state-action density, | ||

[[File:eqn3_KL.PNG| | [[File:eqn3_KL.PNG|350px|center]] | ||

where <math>\rho_{\pi}(x)</math>, <math>\rho_{E}(x)</math>, and <math>\rho_{N}(x)</math> are the state-action densities of the learning, expert and non-expert policy, respectively. | where <math>\rho_{\pi}(x)</math>, <math>\rho_{E}(x)</math>, and <math>\rho_{N}(x)</math> are the state-action densities of the learning, expert and non-expert policy, respectively. | ||

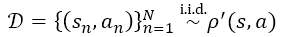

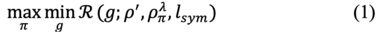

The paper proposes to perform IL by solving the risk optimization problem, | The paper proposes to perform IL by solving the risk optimization problem, | ||

[[File:eqn4_IL.PNG|center]] | [[File:eqn4_IL.PNG|380px|center]] | ||

where <math>\mathcal{R}</math> is the balanced risk; <math>\rho_{\pi}^{\lambda}</math> is a mixture density; <math>\lambda</math> is a hyper-parameter; <math>\pi</math> is a policy to be learned by maximizing the risk; <math>g</math> is a classifier to be learned by minimizing the risk and <math>l_{sym}</math> is a symmetric loss. | where <math>\mathcal{R}</math> is the balanced risk; <math>\rho_{\pi}^{\lambda}</math> is a mixture density; <math>\lambda</math> is a hyper-parameter; <math>\pi</math> is a policy to be learned by maximizing the risk; <math>g</math> is a classifier to be learned by minimizing the risk and <math>l_{sym}</math> is a symmetric loss. | ||

| Line 51: | Line 51: | ||

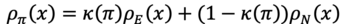

The algorithm of RIC-Co is described with the steps in Figure 2. | The algorithm of RIC-Co is described with the steps in Figure 2. | ||

[[File:fig2_KL.png| | [[File:fig2_KL.png|880px|center]] | ||

<div style="text-align:center;">Figure 2: The General Algorithm of RIL-Co</div> | <div style="text-align:center;">Figure 2: The General Algorithm of RIL-Co</div> | ||

== Methodology and Results == | == Methodology and Results == | ||

[[File:fig3_KL.PNG|center]] | |||

[[File:fig3_KL.PNG|1000px|center]] | |||

<div style="text-align:center;">Figure 3: Final performance in continuous-control benchmarks with different noise rates.</div> | |||

The RIL-Co model with Average-Precision (AP) loss is benchmarked against other established models: Behavioural Cloning (BC), Forward Inverse Reinforcement Learning (FAIRL), Variational Imitation Learning with Diverse-quality Demonstration (VILD), and three variations of Generative adversarial imitation learning (GAIL) with logistic, unhinged and AP loss functions. All models have the same structure of 2 hidden-layers, with 64 hyperbolic tangent nodes. The policy networks use trust region policy gradient (instead of stochastic gradient like we have seen in our courses), and the classifiers are trained by Adam, with a gradient penalty regularization penalty of 10. | The RIL-Co model with Average-Precision (AP) loss is benchmarked against other established models: Behavioural Cloning (BC), Forward Inverse Reinforcement Learning (FAIRL), Variational Imitation Learning with Diverse-quality Demonstration (VILD), and three variations of Generative adversarial imitation learning (GAIL) with logistic, unhinged and AP loss functions. All models have the same structure of 2 hidden-layers, with 64 hyperbolic tangent nodes. The policy networks use trust region policy gradient (instead of stochastic gradient like we have seen in our courses), and the classifiers are trained by Adam, with a gradient penalty regularization penalty of 10. | ||

| Line 67: | Line 70: | ||

The authors also observe that RIL-Co uses fewer transition samples, and thus learns quicker, than other methods in this test. Thus RIL-Co is more data efficient, which is a useful property for a model to have. | The authors also observe that RIL-Co uses fewer transition samples, and thus learns quicker, than other methods in this test. Thus RIL-Co is more data efficient, which is a useful property for a model to have. | ||

[[File:fig4_KL.PNG|center]] | |||

[[File:fig4_KL.PNG|1000px|center]] | |||

<div style="text-align:center;">Figure 4: Final performance of variants of RIL-Co in the ablation study.</div> | |||

An ablation study was conducted, where parts of the model is changed out (such as the loss function) to observe how the model behaves under this change. The loss function was swapped out for a logistic loss to get a better picture of how important a symmetric loss function is to the model. The results indicate that the original AP loss function outperformed the logistic loss, which indicates that using the symmetric loss is important for the model’s ability to be robust. | An ablation study was conducted, where parts of the model is changed out (such as the loss function) to observe how the model behaves under this change. The loss function was swapped out for a logistic loss to get a better picture of how important a symmetric loss function is to the model. The results indicate that the original AP loss function outperformed the logistic loss, which indicates that using the symmetric loss is important for the model’s ability to be robust. | ||

Another aspect that was tested was the type of noise presented to the model. The RIL-Co model with AP loss was presented with a noisy dataset generated with Gaussian noise. As expected VILD performed much better, since this fits in with the strict Gaussian noise assumption made in the model. RIL-Co achieved performance comparable to VILD given enough transitions, despite no assumption being made in the formulation of the model. This shows promise that RIL-Co performs well under different noise distributions. | [[File:fig5_KL.PNG|500px|center]] | ||

<div style="text-align:center;">Figure 5: Performance with a Gaussian noise dataset.</div> | |||

Another aspect that was tested was the type of noise presented to the model. The RIL-Co model with AP loss was presented with a noisy dataset generated with Gaussian noise. As expected VILD performed much better, since this fits in with the strict Gaussian noise assumption made in the model. RIL-Co achieved performance comparable to VILD given enough transitions, despite no assumption being made in the formulation of the model. This shows promise that RIL-Co performs well under different noise distributions. | |||

== Conclusion == | == Conclusion == | ||

| Line 79: | Line 88: | ||

== References == | == References == | ||

[1] Angluin, D. and Laird, P. (1988). Learning from noisy examples. Machine Learning. | |||

[2] Blum, A. and Mitchell, T. (1998). Combining labeled and unlabeled data with co-training. In International Conference on Computational Learning Theory. | |||

[3] Brodersen, K. H., Ong, C. S., Stephan, K. E., and Buhmann, J. M. (2010). The balanced accuracy and its posterior distribution. In International Conference on Pattern Recognition. | |||

[4] Brown, D., Coleman, R., Srinivasan, R., and Niekum, S. (2020). Safe imitation learning via fast bayesian reward inference from preferences. In International Conference on Machine Learning. | |||

[5] Brown, D. S., Goo, W., Nagarajan, P., and Niekum, S. (2019). Extrapolating beyond suboptimal demonstrations via inverse reinforcement learning from observations. In International Conference on Machine Learning. | |||

[6] Chapelle, O., Schlkopf, B., and Zien, A. (2010). SemiSupervised Learning. The MIT Press, 1st edition. | |||

[7] Charoenphakdee, N., Lee, J., and Sugiyama, M. (2019). On symmetric losses for learning from corrupted labels. In International Conference on Machine Learning. | |||

[8] Charoenphakdee, N., Sugiyama, M. and Tangkaratt, V. (2020). Robust Imitation Learning from Noisy Demonstrations. | |||

[9] Coumans, E. and Bai, Y. (2016–2019). Pybullet, a python module for physics simulation for games, robotics and machine learning. http://pybullet. org. | |||

[10] Ghasemipour, S. K. S., Zemel, R., and Gu, S. (2020). A divergence minimization perspective on imitation learning methods. In Proceedings of the Conference on Robot Learning. | |||

[11] Lu, N., Niu, G., Menon, A. K., and Sugiyama, M. (2019). On the minimal supervision for training any binary classifier from only unlabeled data. In International Conference on Learning Representations. | |||

[12] Sun, W., Vemula, A., Boots, B., and Bagnell, D. (2019). Provably efficient imitation learning from observation alone. In International Conference on Machine Learning. | |||

[13] Wu, Y., Charoenphakdee, N., Bao, H., Tangkaratt, V., and Sugiyama, M. (2019). Imitation learning from imperfect demonstration. In International Conference on Machine Learning. | |||

[14] Ziebart, B. D., Bagnell, J. A., and Dey, A. K. (2010). Modeling interaction via the principle of maximum causal entropy. In International Conference on Machine Learning. | |||

Latest revision as of 18:23, 27 November 2021

Presented by

Kar Lok Ng, Muhan (Iris) Li

Introduction

In Imitation Learning (IL), an agent (such as a neural network) learns a policy from demonstrations of desired behaviour, so that it can make the desired decisions when presented with new situations. Unlike traditional Reinforcement Learning (RL), IL does not require a reward function. However, IL methods assume that the demonstrations fed to the algorithm are optimal (or near-optimal), which makes them very susceptible to poor data, i.e., not robust. Intuitively, an agent cannot learn the optimal policy effectively when it is given low-quality demonstrations. A robust IL method is therefore desired, one that still makes good decisions when presented with noisy data.

Established methods for handling noisy data in IL have limitations. One approach requires the noisy demonstrations to be ranked according to their relative performance. A similar approach requires each demonstration to be labelled with a score giving the probability that it comes from an expert (a “good” demonstration). Both require extra data preprocessing that may not be feasible. A third method does not require these labels, but instead assumes that noisy demonstrations are generated with Gaussian noise, a strict assumption that limits its usability. The paper therefore proposes a new method for IL from noisy demonstrations, called Robust IL with Co-pseudo-labelling (RIL-Co), which requires neither additional labels nor assumptions about the noise distribution.

Model Architecture

Previous Work

Reinforcement Learning (RL): The goal of RL is to learn an optimal policy for a Markov decision process (MDP). In a discrete-time MDP, the agent repeatedly observes a state, chooses an action according to its policy, transitions to the next state and receives an immediate reward. Although RL is well founded in theory, in practice it is limited by the need for a reward function, which is often unavailable.

Imitation Learning (IL): IL is used to learn an expert policy when RL is not applicable, specifically when the reward function is unavailable. The process of IL is similar to RL, except that IL methods learn a policy from a dataset of state-action samples that contain information about an optimal or near-optimal policy. In particular, density-matching methods, which learn a policy whose state-action density matches that of the demonstrations, perform well on high-dimensional problems when combined with deep neural networks. However, this approach is not robust against noisy demonstrations.

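Building on IL, the paper considers a scenario in which the given demonstrations are a mixture of expert and non-expert demonstrations. In standard IL, the learner is given state-action samples drawn from [math]\displaystyle{ \rho_E }[/math], the state-action density of the expert policy [math]\displaystyle{ \pi_E }[/math], and the goal is to learn [math]\displaystyle{ \pi_E }[/math]. States [math]\displaystyle{ s \in \mathcal{S} }[/math] and actions [math]\displaystyle{ a \in \mathcal{A} }[/math] belong to the discrete-time MDP [math]\displaystyle{ \mathcal{M} = (\mathcal{S}, \mathcal{A}, \rho_T(s'|s,a), \rho_1(s_1), r(s,a), \gamma) }[/math], where [math]\displaystyle{ \rho_T }[/math] is the transition density, [math]\displaystyle{ \rho_1 }[/math] the initial state density, [math]\displaystyle{ r }[/math] the reward function and [math]\displaystyle{ \gamma }[/math] the discount factor.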

In the noisy setting, the learner is instead given a dataset of state-action samples drawn from a noisy state-action density [math]\displaystyle{ \rho' }[/math], which is a mixture of the expert and non-expert state-action densities: [math]\displaystyle{ \rho'(s,a) = \alpha \rho_E(s,a) + (1-\alpha)\rho_N(s,a) }[/math].

Here [math]\displaystyle{ \rho_N }[/math] is the state-action density of a non-expert policy [math]\displaystyle{ \pi_N }[/math]. Following the typical assumption in learning from noisy data, the mixing coefficient [math]\displaystyle{ \alpha }[/math] is assumed to lie between 0.5 and 1, so that expert samples make up the majority of the data.

Imitation Learning via Risk Optimization

The approach builds on the mixture assumption above. Throughout, [math]\displaystyle{ x = (s,a) }[/math] denotes a state-action pair, and [math]\displaystyle{ \rho_{\pi}(x) }[/math], [math]\displaystyle{ \rho_{E}(x) }[/math], and [math]\displaystyle{ \rho_{N}(x) }[/math] are the state-action densities of the learning, expert and non-expert policies, respectively.

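The paper proposes to perform IL by solving the risk optimization problem

[math]\displaystyle{ \max_{\pi} \min_{g} \mathcal{R}(g;\rho',\rho_{\pi}^{\lambda},l_{sym}) \quad (1) }[/math]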

where [math]\displaystyle{ \mathcal{R} }[/math] is the balanced risk; [math]\displaystyle{ \rho_{\pi}^{\lambda} }[/math] is a mixture density that depends on the learning policy [math]\displaystyle{ \pi }[/math] and on the hyper-parameter [math]\displaystyle{ \lambda }[/math]; the policy [math]\displaystyle{ \pi }[/math] is learned by maximizing the risk; the classifier [math]\displaystyle{ g }[/math] is learned by minimizing the risk; and [math]\displaystyle{ l_{sym} }[/math] is a symmetric loss.
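For reference, the quantities named above can be written out explicitly. These definitions are a reconstruction rather than a copy of the paper's equations: the balanced-risk form follows the symmetric-loss literature of Charoenphakdee et al. (2019), and the form of [math]\displaystyle{ \rho_{\pi}^{\lambda} }[/math] is inferred from two remarks in this summary, namely that the method must estimate an expectation over [math]\displaystyle{ \rho_N }[/math] and that [math]\displaystyle{ \lambda = 0 }[/math] is equivalent to GAIL.

[math]\displaystyle{ \mathcal{R}(g;\rho_1,\rho_2,l) = \frac{1}{2}\mathbb{E}_{x \sim \rho_1}\left[l(g(x))\right] + \frac{1}{2}\mathbb{E}_{x \sim \rho_2}\left[l(-g(x))\right] }[/math]

[math]\displaystyle{ \rho_{\pi}^{\lambda}(x) = \lambda \rho_N(x) + (1-\lambda)\rho_{\pi}(x) }[/math]

[math]\displaystyle{ l_{sym}(z) + l_{sym}(-z) = K \quad \text{for all } z \in \mathbb{R} \text{ and some constant } K }[/math]

Under this reading, setting [math]\displaystyle{ \lambda = 0 }[/math] gives [math]\displaystyle{ \rho_{\pi}^{0} = \rho_{\pi} }[/math], so the classifier simply separates the noisy demonstrations from the learner's own samples, as in GAIL.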

Building on Charoenphakdee et al. (2019), the paper also proves a lemma stating that a minimizer [math]\displaystyle{ g^* }[/math] of [math]\displaystyle{ \mathcal{R}(g;\rho',\rho_{\pi}^{\lambda},l_{sym}) }[/math] is identical to a minimizer of [math]\displaystyle{ \mathcal{R}(g;\rho_E,\rho_N,l_{sym}) }[/math]. Using this lemma, the authors prove that the maximizer of the risk optimization in equation (1) is the expert policy.
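Why the loss must be symmetric can be checked numerically. A known property from Charoenphakdee et al. (2019) is that if [math]\displaystyle{ l(z) + l(-z) = K }[/math] and both arguments of the balanced risk are mixtures of [math]\displaystyle{ \rho_E }[/math] and [math]\displaystyle{ \rho_N }[/math] with expert weights [math]\displaystyle{ \kappa_1 > \kappa_2 }[/math], then [math]\displaystyle{ \mathcal{R}(g;\kappa_1\rho_E+(1-\kappa_1)\rho_N,\kappa_2\rho_E+(1-\kappa_2)\rho_N,l) = (\kappa_1-\kappa_2)\mathcal{R}(g;\rho_E,\rho_N,l) + K(1-\kappa_1+\kappa_2)/2 }[/math], so both risks have the same minimizers. The sketch below checks this on a hypothetical six-point state-action space, using the sigmoid loss (symmetric, with K = 1) and the logistic loss (not symmetric) for contrast. It illustrates the principle behind the lemma; it is not the paper's proof, and the densities and weights are made up.

```python
import numpy as np

def sigmoid_loss(z):
    # Symmetric loss: sigmoid_loss(z) + sigmoid_loss(-z) == 1 for every z, so K = 1.
    return 1.0 / (1.0 + np.exp(z))

def logistic_loss(z):
    # Non-symmetric loss: logistic_loss(z) + logistic_loss(-z) grows with |z|.
    return np.log1p(np.exp(-z))

def balanced_risk(g, rho_pos, rho_neg, loss):
    # Discrete balanced risk: 1/2 E_pos[loss(g(x))] + 1/2 E_neg[loss(-g(x))].
    return 0.5 * rho_pos @ loss(g) + 0.5 * rho_neg @ loss(-g)

rng = np.random.default_rng(0)
n = 6                                  # hypothetical state-action space with 6 points
rho_E = rng.dirichlet(np.ones(n))      # made-up expert density
rho_N = rng.dirichlet(np.ones(n))      # made-up non-expert density
kappa1, kappa2 = 0.7, 0.5              # illustrative mixing weights, kappa1 > kappa2
rho_a = kappa1 * rho_E + (1 - kappa1) * rho_N
rho_b = kappa2 * rho_E + (1 - kappa2) * rho_N

def residual(loss, g):
    # Corrupted risk minus (kappa1 - kappa2) times the clean expert-vs-non-expert risk.
    corrupted = balanced_risk(g, rho_a, rho_b, loss)
    clean = balanced_risk(g, rho_E, rho_N, loss)
    return corrupted - (kappa1 - kappa2) * clean

g_random, g_zero = rng.normal(size=n), np.zeros(n)   # scores of two arbitrary classifiers
for name, loss in [("sigmoid", sigmoid_loss), ("logistic", logistic_loss)]:
    print(name, residual(loss, g_random), residual(loss, g_zero))
```

For the sigmoid loss both residuals equal K(1 − κ1 + κ2)/2 = 0.4 whatever the classifier, so the corrupted risk is a positive multiple of the clean risk plus a constant. For the logistic loss the residual changes with g, so minimizing the corrupted risk need not recover the clean minimizer.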

The paper thus shows that robust IL can be achieved by optimizing the risk in equation (1). Importantly, this requires neither knowledge of the mixing coefficient α nor an estimate of it.

Co-pseudo-labeling for Risk Optimization

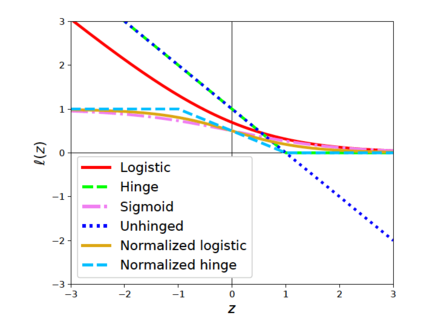

Equation (1) cannot be optimized directly, because the risk involves an expectation over [math]\displaystyle{ \rho_N }[/math] and no samples from [math]\displaystyle{ \rho_N(x) }[/math] are available. The paper therefore draws approximate samples from [math]\displaystyle{ \rho_N(x) }[/math] using co-pseudo-labeling. The authors first use pseudo-labeling to form an empirical risk for equation (1) in their setting. However, the classifier can become over-confident in its own incorrect label predictions during training. The authors therefore propose co-pseudo-labeling, which combines the ideas of pseudo-labeling and co-training [2]; the resulting method is called Robust IL with Co-pseudo-labeling (RIL-Co).

Having fixed the overall framework, the authors also discuss the choice of the hyper-parameter [math]\displaystyle{ \lambda }[/math], and show that an appropriate value satisfies [math]\displaystyle{ 0.5 \le \lambda \lt 1 }[/math]. Since larger values of [math]\displaystyle{ \lambda }[/math] increase the impact of the pseudo-labels on the risk, they use [math]\displaystyle{ \lambda=0.5 }[/math]. Regarding the choice of symmetric loss, the authors emphasize that any symmetric loss can be used to learn the expert policy with RIL-Co. As shown in Figure 1, a loss can become symmetric after normalization.
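The core of co-pseudo-labeling can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's algorithm: the noisy dataset is split into two halves, each half is pseudo-labelled by the classifier associated with the other half (a negative score is read as "non-expert"), and the pseudo-labelled samples stand in for [math]\displaystyle{ \rho_N }[/math] in an empirical risk with [math]\displaystyle{ \lambda = 0.5 }[/math] and the mixture form of [math]\displaystyle{ \rho_{\pi}^{\lambda} }[/math] assumed earlier. The data, the fixed linear classifiers `g_1` and `g_2`, and all helper names are hypothetical; the sigmoid loss stands in for the AP loss, and the classifier and policy updates are omitted.

```python
import numpy as np

def sigmoid_loss(z):
    # Symmetric loss (sigmoid_loss(z) + sigmoid_loss(-z) == 1), a stand-in for the AP loss.
    return 1.0 / (1.0 + np.exp(z))

def co_pseudo_label(split_1, split_2, g_1, g_2):
    # Assumed rule: each split is pseudo-labelled by the classifier of the OTHER split;
    # a negative score means "non-expert".
    pseudo_1 = split_1[g_2(split_1) < 0]   # non-expert pseudo-samples used to train g_1
    pseudo_2 = split_2[g_1(split_2) < 0]   # non-expert pseudo-samples used to train g_2
    return pseudo_1, pseudo_2

def empirical_risk(g, noisy, pseudo_n, policy, lam=0.5, loss=sigmoid_loss):
    # Empirical balanced risk with rho_pi^lambda = lam * rho_N + (1 - lam) * rho_pi,
    # where the expectation over rho_N uses the pseudo-labelled samples.
    if len(pseudo_n) == 0:
        raise ValueError("no pseudo-labelled non-expert samples")
    pos = loss(g(noisy)).mean()
    neg = lam * loss(-g(pseudo_n)).mean() + (1 - lam) * loss(-g(policy)).mean()
    return 0.5 * pos + 0.5 * neg

def g_1(x):
    # Hypothetical fixed linear classifier for the first split.
    return x @ np.array([1.0, 0.5])

def g_2(x):
    # Hypothetical fixed linear classifier for the second split.
    return x @ np.array([0.5, 1.0])

# Toy 2-D "state-action" features; all data here is made up.
rng = np.random.default_rng(1)
expert = rng.normal(loc=1.0, size=(60, 2))
non_expert = rng.normal(loc=-1.0, size=(40, 2))
noisy = rng.permutation(np.vstack([expert, non_expert]))   # shuffle rows
split_1, split_2 = noisy[:50], noisy[50:]
policy = rng.normal(loc=0.0, size=(50, 2))                 # samples from the current policy

pseudo_1, pseudo_2 = co_pseudo_label(split_1, split_2, g_1, g_2)
risk = empirical_risk(g_1, split_1, pseudo_1, policy)
print(len(pseudo_1), len(pseudo_2), risk)
```

The point of the cross-labelling is that neither classifier grades samples it is trained on, which is the co-training idea used to curb the over-confidence described above.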

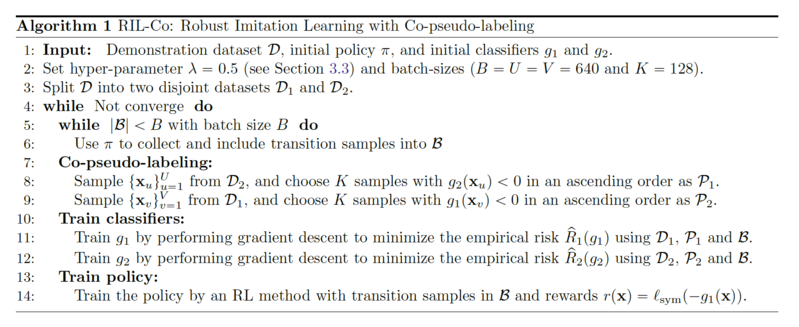

The steps of the RIL-Co algorithm are summarized in Figure 2 (The General Algorithm of RIL-Co).

Methodology and Results

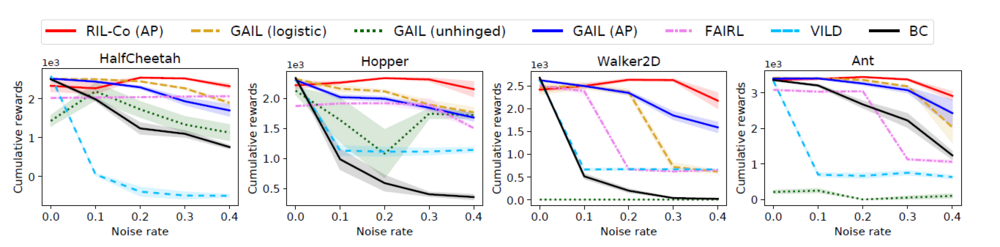

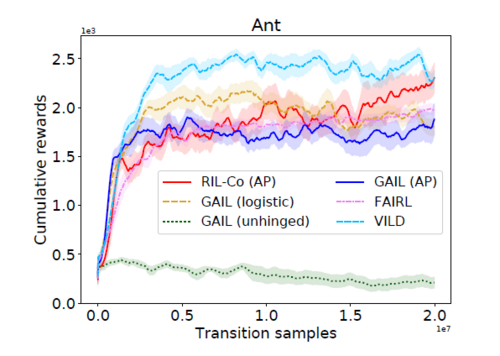

The RIL-Co model with Average-Precision (AP) loss is benchmarked against established methods: Behavioural Cloning (BC), Forward Adversarial Inverse Reinforcement Learning (FAIRL), Variational Imitation Learning with Diverse-quality Demonstrations (VILD), and three variants of Generative Adversarial Imitation Learning (GAIL) with logistic, unhinged and AP losses. All models use networks with two hidden layers of 64 hyperbolic tangent (tanh) units. The policy networks are trained with a trust-region policy gradient method (rather than the plain stochastic gradient descent seen in our courses), and the classifiers are trained with Adam, using a gradient penalty regularizer with coefficient 10.
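The shared network shape and the classifier's gradient penalty can be sketched in NumPy. This is a hypothetical illustration: the input dimension, the initialisation and the exact penalty form (here the WGAN-GP style `coef * mean((||grad_x g(x)|| - 1)^2)` with `coef = 10`) are assumptions, and the Adam and trust-region policy updates are not shown. The input gradient is written out analytically so the penalty can be evaluated without an autodiff library.

```python
import numpy as np

HIDDEN = 64  # two hidden layers of 64 tanh units, as described above

def init_mlp(in_dim, out_dim, rng):
    # Hypothetical initialisation: scaled Gaussian weights, zero biases.
    sizes = [in_dim, HIDDEN, HIDDEN, out_dim]
    return [(rng.normal(scale=1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    # x: (batch, in_dim) -> (batch, out_dim); tanh on the two hidden layers only.
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = np.tanh(x @ w1 + b1)
    h2 = np.tanh(h1 @ w2 + b2)
    return h2 @ w3 + b3

def input_gradient(params, x):
    # Analytic d g(x) / d x for a scalar-output classifier g, one row per sample.
    (w1, b1), (w2, b2), (w3, _) = params
    h1 = np.tanh(x @ w1 + b1)
    h2 = np.tanh(h1 @ w2 + b2)
    d2 = (1 - h2 ** 2) * w3[:, 0]        # gradient w.r.t. second pre-activation
    d1 = (1 - h1 ** 2) * (d2 @ w2.T)     # gradient w.r.t. first pre-activation
    return d1 @ w1.T                     # gradient w.r.t. the input

def gradient_penalty(params, x, coef=10.0):
    # Assumed penalty form: coef * mean((||grad_x g(x)|| - 1)^2).
    norms = np.linalg.norm(input_gradient(params, x), axis=1)
    return coef * np.mean((norms - 1.0) ** 2)

rng = np.random.default_rng(0)
params = init_mlp(in_dim=8, out_dim=1, rng=rng)   # hypothetical state-action dimension
batch = rng.normal(size=(4, 8))
print(forward(params, batch).shape, gradient_penalty(params, batch))
```

The same `init_mlp` shape would serve for a policy network by changing `out_dim` to the action dimension; only the classifier needs the penalty.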

The models are trained on four simulated locomotion tasks: HalfCheetah, Hopper, Walker2d and Ant. To generate the demonstrations, a standard RL agent is trained using the true, known reward function. The best-performing policy snapshot is then used to collect 10,000 “expert” state-action samples, and 5 other policy snapshots are used to collect 10,000 “non-expert” state-action samples. The two sets of samples are then mixed at noise rates of 0, 0.1, 0.2, 0.3 and 0.4 (e.g., the dataset consisting of 10,000 expert samples and 7,500 non-expert samples corresponds to a noise rate of 0.4).
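The arithmetic behind the noise rates can be made explicit. The summary does not define the noise rate, so the sketch assumes it is the fraction of non-expert samples in the mixed dataset. Under that reading, the example pair of 10,000 expert and 7,500 non-expert samples gives 7,500/17,500 ≈ 0.43, while a rate of exactly 0.4 would need about 6,667 non-expert samples; the paper may use a different convention. Also under this reading α = 1 − noise rate, so every tested setting keeps α above 0.5, in line with the mixture assumption.

```python
def noise_rate(n_expert, n_non_expert):
    # Assumed definition: fraction of non-expert samples in the mixed dataset.
    return n_non_expert / (n_expert + n_non_expert)

def non_expert_count(n_expert, rate):
    # Non-expert samples needed for a target rate under the same assumed definition.
    return round(n_expert * rate / (1.0 - rate))

for rate in (0.1, 0.2, 0.3, 0.4):
    n_non = non_expert_count(10_000, rate)
    alpha = 1 - noise_rate(10_000, n_non)   # implied mixing coefficient
    print(rate, n_non, round(alpha, 3))

print(round(noise_rate(10_000, 7_500), 3))  # the example pair from the text
```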

Performance is measured by cumulative reward; Figure 3 shows the final performance in the continuous-control benchmarks with different noise rates. RIL-Co outperforms the other methods in high-noise scenarios (noise rates of 0.2, 0.3 and 0.4), and performs comparably to the best alternative in low-noise scenarios, where GAIL with AP loss performs better than RIL-Co. The authors conjecture that this is because co-pseudo-labelling introduces additional bias. As a fix, they propose increasing the hyper-parameter λ from 0, which is equivalent to performing GAIL, to 0.5 as learning progresses.
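The proposed fix of increasing λ from 0 to 0.5 during learning could be implemented with a simple schedule. The linear shape and the `total_steps` horizon below are assumptions made for illustration; the summary does not say how λ should be increased.

```python
def lambda_schedule(step, total_steps, lam_final=0.5):
    # Hypothetical linear ramp: 0 (GAIL-like) at the start of training,
    # lam_final (RIL-Co's default of 0.5) once step >= total_steps.
    if total_steps <= 0:
        return lam_final
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lam_final * progress

for step in (0, 250, 500, 1000, 2000):
    print(step, lambda_schedule(step, total_steps=1000))
```

A ramp like this keeps the pseudo-labels out of the risk early on, when the classifiers are least reliable, and reaches the paper's choice of λ = 0.5 later in training.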

VILD performs poorly even with small amounts of noisy data (noise rate 0.1). The authors attribute this to VILD's strict Gaussian noise assumption: because the non-expert data was not generated under any noise assumption, VILD cannot accurately estimate the noise distribution. BC also performs poorly, as expected, since BC assumes that all demonstrations come from an expert. The authors also observe that in this test RIL-Co needs fewer transition samples than the other methods, i.e., it learns faster. RIL-Co is therefore more data efficient, which is a useful property for a model to have.

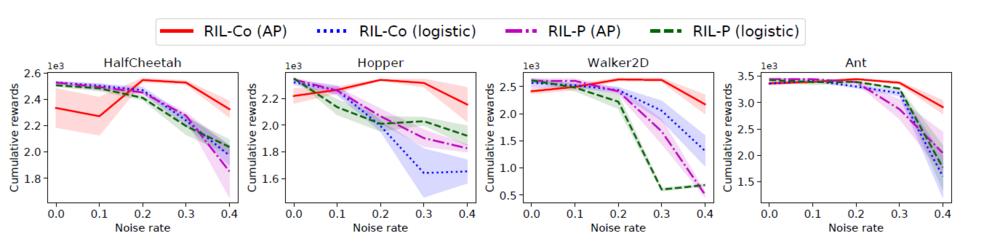

An ablation study was also conducted, in which components of the model (such as the loss function) are replaced to observe how the model behaves; Figure 4 shows the final performance of the RIL-Co variants. To gauge how important a symmetric loss is, the AP loss was swapped for the logistic loss. The original AP loss outperformed the logistic loss, indicating that using a symmetric loss is important for the model’s robustness.

Another aspect that was tested is the type of noise. RIL-Co with AP loss was given a noisy dataset generated with Gaussian noise; Figure 5 shows the performance on this Gaussian noise dataset. As expected, VILD performed much better here, since this data fits its strict Gaussian noise assumption. Given enough transition samples, RIL-Co achieved performance comparable to VILD, despite making no assumption about the noise distribution. This suggests that RIL-Co can perform well under different noise distributions.

Conclusion

The paper presents RIL-Co, a new method for IL from noisy demonstrations that, in the reported experiments, is more robust than established methods, particularly at high noise rates. Further investigation could examine how well the method works on non-simulated data.

References

[1] Angluin, D. and Laird, P. (1988). Learning from noisy examples. Machine Learning.

[2] Blum, A. and Mitchell, T. (1998). Combining labeled and unlabeled data with co-training. In International Conference on Computational Learning Theory.

[3] Brodersen, K. H., Ong, C. S., Stephan, K. E., and Buhmann, J. M. (2010). The balanced accuracy and its posterior distribution. In International Conference on Pattern Recognition.

[4] Brown, D., Coleman, R., Srinivasan, R., and Niekum, S. (2020). Safe imitation learning via fast Bayesian reward inference from preferences. In International Conference on Machine Learning.

[5] Brown, D. S., Goo, W., Nagarajan, P., and Niekum, S. (2019). Extrapolating beyond suboptimal demonstrations via inverse reinforcement learning from observations. In International Conference on Machine Learning.

[6] Chapelle, O., Schölkopf, B., and Zien, A. (2010). Semi-Supervised Learning. The MIT Press, 1st edition.

[7] Charoenphakdee, N., Lee, J., and Sugiyama, M. (2019). On symmetric losses for learning from corrupted labels. In International Conference on Machine Learning.

[8] Tangkaratt, V., Charoenphakdee, N., and Sugiyama, M. (2020). Robust Imitation Learning from Noisy Demonstrations.

[9] Coumans, E. and Bai, Y. (2016–2019). PyBullet, a Python module for physics simulation for games, robotics and machine learning. http://pybullet.org.

[10] Ghasemipour, S. K. S., Zemel, R., and Gu, S. (2020). A divergence minimization perspective on imitation learning methods. In Proceedings of the Conference on Robot Learning.

[11] Lu, N., Niu, G., Menon, A. K., and Sugiyama, M. (2019). On the minimal supervision for training any binary classifier from only unlabeled data. In International Conference on Learning Representations.

[12] Sun, W., Vemula, A., Boots, B., and Bagnell, D. (2019). Provably efficient imitation learning from observation alone. In International Conference on Machine Learning.

[13] Wu, Y., Charoenphakdee, N., Bao, H., Tangkaratt, V., and Sugiyama, M. (2019). Imitation learning from imperfect demonstration. In International Conference on Machine Learning.

[14] Ziebart, B. D., Bagnell, J. A., and Dey, A. K. (2010). Modeling interaction via the principle of maximum causal entropy. In International Conference on Machine Learning.