Mask RCNN

| (3 intermediate revisions by 3 users not shown) | |||

| Line 3: | Line 3: | ||

== Introduction == | == Introduction == | ||

Mask RCNN (Region Based Convolutional Neural Networks)[1] is a deep neural network architecture that aims to solve instance segmentation problems in computer vision which is important when attempting to identify different objects within the same image.It combines elements from classical computer vision of object detection and semantic segmentation. RCNN base architectures first extract a regional proposal (a region of the image where the object of interest is proposed to lie) and then attempts to classify the object within it. | Mask RCNN (Region Based Convolutional Neural Networks)[1] is a deep neural network architecture that aims to solve instance segmentation problems in computer vision which is important when attempting to identify different objects within the same image by identifying object's bounding box and classes.It combines elements from classical computer vision of object detection and semantic segmentation. RCNN base architectures first extract a regional proposal (a region of the image where the object of interest is proposed to lie) and then attempts to classify the object within it. | ||

Mask R-CNN extends Faster R-CNN [2] by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition. This is done by using a Fully Convolutional Network as each mask branch in a pixel-by-pixel way. Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at 5 fps. Moreover, Mask R-CNN is easy to generalize to other tasks, e.g., allowing us to estimate human poses in the same framework. Mask R-CNN achieved top results in all three tracks of the COCO suite of challenges [3], including instance segmentation, bounding-box object detection, and person keypoint detection. | Mask R-CNN extends Faster R-CNN [2] by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition. This is done by using a Fully Convolutional Network as each mask branch in a pixel-by-pixel way. Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at 5 fps. Moreover, Mask R-CNN is easy to generalize to other tasks, e.g., allowing us to estimate human poses in the same framework. Mask R-CNN achieved top results in all three tracks of the COCO suite of challenges [3], including instance segmentation, bounding-box object detection, and person keypoint detection. | ||

| Line 16: | Line 16: | ||

- Semantic Segmentation: Associate every pixel in an input image with a class label. The common baseline system for semantic segmentation is FCN (Fully Convolutional Network). | - Semantic Segmentation: Associate every pixel in an input image with a class label. The common baseline system for semantic segmentation is FCN (Fully Convolutional Network). | ||

- Instance Segmentation: Associate every pixel in an input image to a specific object. Instance segmentation combines | - Instance Segmentation: Associate every pixel in an input image to a specific object. Instance segmentation combines object detection and semantic segmentation as a complex task, to obtain both correct detection and precise segmentation together. | ||

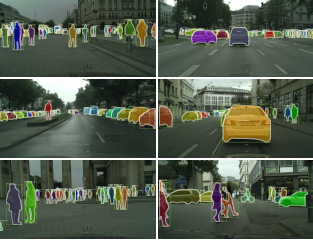

[[File:instance segmentation.png | center]] | [[File:instance segmentation.png | center]] | ||

| Line 105: | Line 105: | ||

Mask RCNN can be extended to human pose estimation. | Mask RCNN can be extended to human pose estimation. | ||

The simple approach the paper presents is to model a keypoint’s location as a one-hot mask, and adopt Mask R-CNN to predict K masks, one for each of K keypoint types such as left shoulder, right elbow. The model has minimal knowledge of human pose and this example illustrates the generality of the model. | The simple approach the paper presents is to model a keypoint’s location as a one-hot mask, and adopt Mask R-CNN to predict K masks, one for each of K keypoint types such as left shoulder, right elbow. The model has minimal knowledge of human pose and this example illustrates the generality of the model. Mask R-CNN does this by viewing each keypoint as a one-hot binary mask and applying minimal modification to detect instance-specific poses. | ||

[[File:HumanPose.png | center]] | [[File:HumanPose.png | center]] | ||

| Line 168: | Line 168: | ||

It would be more helpful if metrics of measuring classification errors are introduced briefly. Otherwise, it can be confusing. | It would be more helpful if metrics of measuring classification errors are introduced briefly. Otherwise, it can be confusing. | ||

For the Mask R-CNN model can be used for Human Pose Estimation, can it be further trained to adapt the technology to dangerous workplace training simulations? For example, if a window cleaning worker in training, the model can be used to estimate its posture with its respective data sent to another pipeline for estimating danger levels or correctness of a worker's posture. Secondly, since it can estimate a human's posture, can it be used to estimate on-site approaching live traffic to aid autonomous driving? Also, even though we are seeing it built from ResNet variants and backbones, why not provide an actual visualized comparison between the original ResNet and Mask RCNN to show improvements (tables were given, but what about a graph to show the training process accuracy and loss)? | |||

== Interesting Directions == | == Interesting Directions == | ||

Latest revision as of 22:37, 7 December 2020

Presented by

Qing Guo, Xueguang Ma, James Ni, Yuanxin Wang

Introduction

Mask RCNN (Region Based Convolutional Neural Networks)[1] is a deep neural network architecture that aims to solve instance segmentation problems in computer vision. Instance segmentation is important when attempting to identify different objects within the same image, because each object's bounding box and class must be found, along with the pixels it covers. Mask RCNN combines elements from two classical computer vision tasks: object detection and semantic segmentation. R-CNN-based architectures first extract a region proposal (a region of the image where an object of interest is proposed to lie) and then attempt to classify the object within it.

Mask R-CNN extends Faster R-CNN [2] by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition. The mask branch is a small Fully Convolutional Network (FCN) applied to each region of interest, predicting a segmentation mask in a pixel-to-pixel manner. Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at about 5 fps. Moreover, Mask R-CNN is easy to generalize to other tasks; for example, it can estimate human poses within the same framework. Mask R-CNN achieved top results in all three tracks of the COCO suite of challenges [3]: instance segmentation, bounding-box object detection, and person keypoint detection.

Visual Perception Tasks

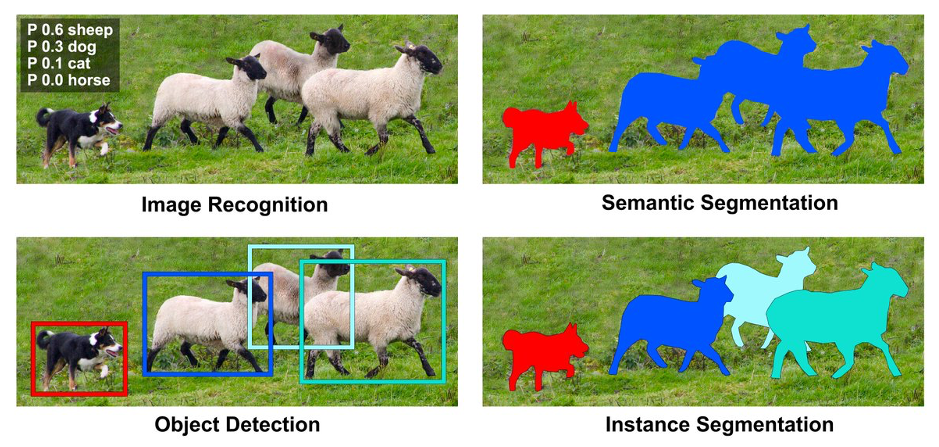

Figure 1 shows a visual representation of different types of visual perception tasks:

- Image Classification: Predict a set of labels that characterize the contents of an input image.

- Object Detection: Localize objects in an image by predicting a bounding box around each one, then classify each object according to a set of classes. The current baseline system for object detection is Fast/Faster R-CNN.

- Semantic Segmentation: Associate every pixel in an input image with a class label. The common baseline system for semantic segmentation is FCN (Fully Convolutional Network).

- Instance Segmentation: Associate every pixel in an input image with a specific object instance. Instance segmentation combines object detection and semantic segmentation into one harder task, which requires both correct detection and precise segmentation.

Mask RCNN is a deep neural network architecture that combines multiple state-of-the-art techniques for the task of instance segmentation.
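To make the difference between semantic and instance segmentation concrete, here is a small illustrative sketch in plain Python. It takes per-instance binary masks (the kind of output an instance-segmentation model produces) and derives both a semantic label map and an instance-ID map. The helper names `instances_to_semantic` and `instance_id_map` are hypothetical and are not part of Mask R-CNN.

```python
def instances_to_semantic(instance_masks, class_ids, height, width, background=0):
    """Collapse per-instance binary masks into a single semantic label map.

    instance_masks: list of height x width nested lists of 0/1 values.
    class_ids: class label of each instance, in the same order.
    Where masks overlap, later instances overwrite earlier ones.
    """
    semantic = [[background] * width for _ in range(height)]
    for mask, cls in zip(instance_masks, class_ids):
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    semantic[y][x] = cls
    return semantic


def instance_id_map(instance_masks, height, width):
    """Label each pixel with the 1-based index of the instance covering it (0 = none)."""
    ids = [[0] * width for _ in range(height)]
    for idx, mask in enumerate(instance_masks, start=1):
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    ids[y][x] = idx
    return ids


# Two neighbouring objects of the same class (class 1, e.g. "person").
left = [[1, 1, 0, 0], [1, 1, 0, 0]]
right = [[0, 0, 1, 1], [0, 0, 1, 1]]
print(instances_to_semantic([left, right], [1, 1], 2, 4))  # [[1, 1, 1, 1], [1, 1, 1, 1]]
print(instance_id_map([left, right], 2, 4))                # [[1, 1, 2, 2], [1, 1, 2, 2]]
```

In the semantic map the two same-class objects become indistinguishable, while the instance-ID map keeps them apart. Recovering that separation is what instance segmentation adds.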

Related Architecture to Mask RCNN

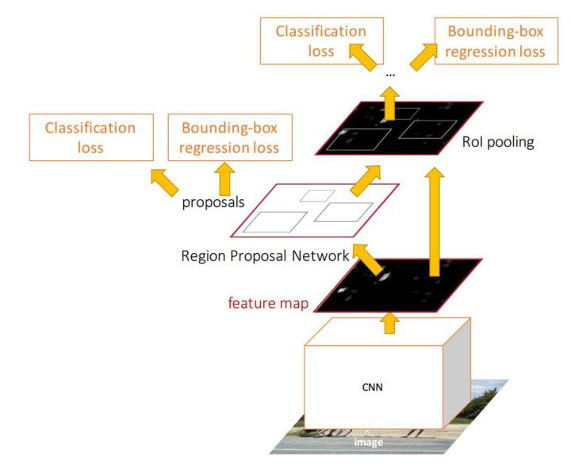

Region Proposal Network: A Region Proposal Network (RPN) proposes candidate object bounding boxes, which is the first step for effective object detection. It takes an image (of any size) as input and outputs a set of rectangular object boxes, each with an objectness score.
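The description above leaves out how candidate boxes are enumerated. In the Faster R-CNN paper [2], the RPN scores a fixed set of reference boxes ("anchors") at every feature-map position, by default 3 scales × 3 aspect ratios. The sketch below only generates those anchors for a single position. The scale and ratio values are the Faster R-CNN defaults, assumed here for illustration, and `make_anchors` is a hypothetical helper. The objectness classifier and box regressor that the RPN applies to each anchor are omitted. With an FPN backbone, each pyramid level instead uses a single anchor scale.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (x1, y1, x2, y2) anchors centred at image location (cx, cy).

    Every anchor of scale s has area s * s; `ratio` is its height / width.
    Defaults follow the 3 scales x 3 ratios setting of the Faster R-CNN paper.
    """
    anchors = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)  # width shrinks as the box gets taller
            h = s * math.sqrt(r)
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = make_anchors(100, 100)
print(len(anchors))  # 9 anchors per feature-map position
print(anchors[1])    # (36.0, 36.0, 164.0, 164.0): the 128 x 128 square anchor
```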

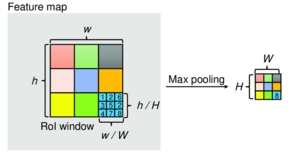

RoI Pooling: The main purpose of RoI (Region of Interest) Pooling is to convert each proposal, whatever its size, into a fixed-size feature map that the subsequent layers can process. It maps the proposal onto the corresponding position of the feature map, divides the mapped area into sections of equal size, and performs max pooling or average pooling on each section. This removes the fixed input-size requirement of the detection network: the entire image is passed through the CNN once, and features for each RoI are pooled from the resulting feature maps.
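A minimal single-channel sketch of RoI max pooling follows. It assumes the feature map is stored as nested lists and that the backbone stride is given in image pixels per feature cell. Both are assumptions for illustration, and `roi_max_pool` is a hypothetical name. The two rounding steps are marked in the comments, because they are what RoIAlign later removes.

```python
import math


def roi_max_pool(feature, roi, out_size, stride):
    """RoIPool-style max pooling of one RoI on a single-channel feature map.

    feature: H x W nested list; roi: (x1, y1, x2, y2) in image pixels;
    stride: image pixels per feature-map cell. Assumes the RoI lies inside the image.
    """
    H, W = len(feature), len(feature[0])
    # Quantization 1: snap the continuous RoI to integer feature cells.
    x1, y1, x2, y2 = (round(c / stride) for c in roi)
    x1, y1, x2, y2 = max(x1, 0), max(y1, 0), min(x2, W - 1), min(y2, H - 1)
    roi_w, roi_h = max(x2 - x1 + 1, 1), max(y2 - y1 + 1, 1)
    out = []
    for i in range(out_size):
        # Quantization 2: each bin boundary is rounded to whole cells.
        ys = y1 + math.floor(i * roi_h / out_size)
        ye = y1 + math.ceil((i + 1) * roi_h / out_size)
        row = []
        for j in range(out_size):
            xs = x1 + math.floor(j * roi_w / out_size)
            xe = x1 + math.ceil((j + 1) * roi_w / out_size)
            row.append(max(feature[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        out.append(row)
    return out


feature = [[4 * y + x for x in range(4)] for y in range(4)]  # values 0..15
print(roi_max_pool(feature, (0, 0, 50, 50), 2, 16))  # [[5, 7], [13, 15]]
print(roi_max_pool(feature, (0, 0, 48, 48), 2, 16))  # same result: 50/16 = 3.125 was rounded to 3
```

With `stride=16`, an RoI ending at image coordinate 50 maps to feature coordinate 3.125, which is rounded to 3. The pooled output is therefore identical to that of an RoI ending at 48, and the fractional offset is silently lost.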

Faster R-CNN: Faster R-CNN consists of two stages: a Region Proposal Network and a Fast R-CNN detector that uses RoI Pooling. The Region Proposal Network proposes candidate object bounding boxes. The second stage, which is in essence Fast R-CNN, extracts features from each candidate box using RoIPool and performs classification and bounding-box regression. The features used by both stages can be shared for faster inference.
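The bounding-box regression in the second stage predicts offsets relative to each proposal rather than absolute coordinates. The sketch below applies deltas in the (dx, dy, dw, dh) parameterization used by the R-CNN family: center shifts are scaled by the box size, and width and height are rescaled in log space. The function name `decode_box` is hypothetical. Refinements that practical implementations add, such as delta normalization and clipping boxes to the image, are left out.

```python
import math


def decode_box(box, deltas):
    """Apply (dx, dy, dw, dh) regression deltas to an (x1, y1, x2, y2) box.

    dx, dy shift the centre by a fraction of the box width/height;
    dw, dh rescale width/height by exp(dw), exp(dh).
    """
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    cx, cy = x1 + 0.5 * w, y1 + 0.5 * h
    dx, dy, dw, dh = deltas
    new_cx, new_cy = cx + dx * w, cy + dy * h
    new_w, new_h = w * math.exp(dw), h * math.exp(dh)
    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)


proposal = (0.0, 0.0, 10.0, 10.0)
print(decode_box(proposal, (0.0, 0.0, 0.0, 0.0)))  # (0.0, 0.0, 10.0, 10.0): zero deltas keep the box
print(decode_box(proposal, (0.1, 0.0, 0.0, 0.0)))  # (1.0, 0.0, 11.0, 10.0): shifted right by 0.1 * width
```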

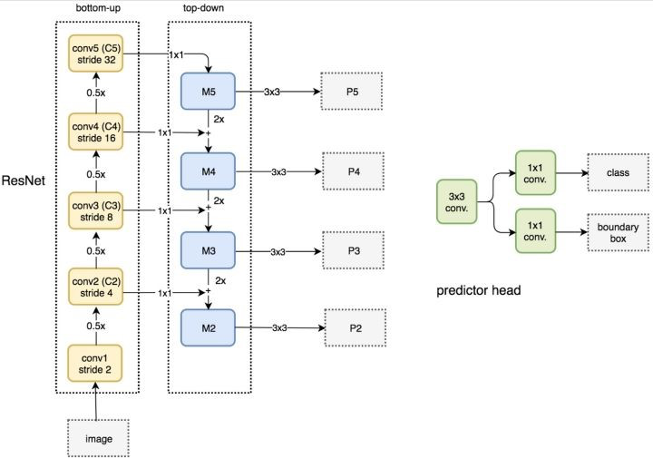

ResNet-FPN: FPN uses a top-down architecture with lateral connections to build an in-network feature pyramid from a single-scale input. FPN is a general architecture that can be used in conjunction with various networks, such as VGG, ResNet, etc. Faster R-CNN with an FPN backbone extracts RoI features from different levels of the feature pyramid according to their scale. Other than FPN, the rest of the approach is similar to vanilla ResNet. Using a ResNet-FPN backbone for feature extraction with Mask RCNN gives excellent gains in both accuracy and speed.
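The FPN paper assigns an RoI of width w and height h to pyramid level [math]\displaystyle{ k=\lfloor k_0+\log_2(\sqrt{wh}/224)\rfloor }[/math] with [math]\displaystyle{ k_0=4 }[/math], so larger RoIs read features from coarser levels. The sketch below implements that rule. Clamping the result to levels P2 to P5 is an implementation choice assumed here, and `fpn_level` is a hypothetical name.

```python
import math


def fpn_level(box, k0=4, canonical=224, k_min=2, k_max=5):
    """Pick the pyramid level P_k from which to pool an (x1, y1, x2, y2) RoI.

    Implements k = floor(k0 + log2(sqrt(w * h) / canonical)); clamping to
    [k_min, k_max] (P2..P5 here) is an assumed implementation detail.
    """
    x1, y1, x2, y2 = box
    size = math.sqrt(max(x2 - x1, 0) * max(y2 - y1, 0))
    # The tiny epsilon keeps log2 defined for degenerate zero-area boxes.
    k = math.floor(k0 + math.log2(size / canonical + 1e-8))
    return min(max(k, k_min), k_max)


for side in (32, 112, 224, 448, 1000):
    print(side, fpn_level((0, 0, side, side)))
# 32 -> 2 (clamped), 112 -> 3, 224 -> 4, 448 -> 5, 1000 -> 5 (clamped)
```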

Model Architecture

The structure of Mask R-CNN is quite similar to that of Faster R-CNN. Faster R-CNN has two stages: the RPN (Region Proposal Network) first proposes candidate object bounding boxes, then RoIPool extracts features from these boxes, and those features are used for classification and bounding-box regression. Mask R-CNN shares the identical first stage. In the second stage, in parallel with predicting the class and box offset, it also outputs a binary mask for each RoI. During training, the loss on each sampled RoI is the multi-task loss [math]\displaystyle{ L=L_{cls}+L_{box}+L_{mask} }[/math], where [math]\displaystyle{ L_{cls} }[/math], [math]\displaystyle{ L_{box} }[/math], and [math]\displaystyle{ L_{mask} }[/math] represent the classification loss, the bounding-box loss, and the average binary cross-entropy mask loss, respectively.
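For reference, the mask term can be written out following the Mask R-CNN paper's description. The mask branch outputs K binary masks of resolution m × m per RoI and applies a per-pixel sigmoid σ, and only the mask of the RoI's ground-truth class k contributes to the loss. Using notation introduced here, let [math]\displaystyle{ y_{ij} }[/math] be the ground-truth binary mask and [math]\displaystyle{ z^{k}_{ij} }[/math] the k-th predicted logit map:

[math]\displaystyle{ L_{mask} = -\frac{1}{m^2} \sum_{1 \le i,j \le m} \Big[ y_{ij} \log \sigma\big(z^{k}_{ij}\big) + \big(1 - y_{ij}\big) \log\Big(1 - \sigma\big(z^{k}_{ij}\big)\Big) \Big] }[/math]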

The important point here is that in most recent systems at the time, classification depends on mask predictions, so the steps have to be performed in a fixed order. Mask R-CNN instead predicts masks in parallel with box classification and regression. This follows the spirit of Fast R-CNN, which applies bounding-box classification and regression in parallel and thereby greatly simplifies the multi-stage pipeline of the original R-CNN. For comparison, the original R-CNN pipeline has four stages: 1. make region proposals; 2. extract features from the region proposals; 3. classify objects with an SVM; 4. perform bounding-box regression. In short, stages 3 and 4 are performed in parallel by a single network, which simplifies the procedure.

The multi-task loss is therefore the sum of the classification loss, the bounding-box loss, and the average binary cross-entropy mask loss. One thing worth noticing is that in the common practice of applying FCNs to semantic segmentation, which uses a per-pixel softmax and a multinomial cross-entropy loss, the masks of different classes compete with each other. In Mask R-CNN, with a per-pixel sigmoid and a binary loss, masks across classes no longer compete, and the authors show experimentally that this formulation is key to good instance segmentation results.
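The competition between classes can be checked numerically. The illustrative sketch below compares a per-class sigmoid mask loss with a per-pixel softmax alternative. Logits are stored as a list of K nested-list maps, the function names are hypothetical, and the softmax version assumes, as a simplification, that channel 0 plays the role of background.

```python
import math


def sigmoid_mask_loss(logits, gt_mask, k):
    """Average per-pixel binary cross-entropy on the k-th class mask only.

    logits: list of K masks, each an m x m nested list of raw scores.
    gt_mask: m x m nested list of 0/1 targets; k: ground-truth class index.
    """
    total, count = 0.0, 0
    for z_row, y_row in zip(logits[k], gt_mask):
        for z, y in zip(z_row, y_row):
            p = 1.0 / (1.0 + math.exp(-z))
            p = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0)
            total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
            count += 1
    return total / count


def softmax_mask_loss(logits, gt_mask, k):
    """Per-pixel softmax cross-entropy across all K class masks.

    Simplifying assumption: channel 0 is background, so a pixel's target is
    class k where gt_mask is 1 and class 0 elsewhere.
    """
    m = len(gt_mask)
    total = 0.0
    for i in range(m):
        for j in range(m):
            scores = [mask[i][j] for mask in logits]
            log_norm = math.log(sum(math.exp(s) for s in scores))
            target = k if gt_mask[i][j] else 0
            total -= scores[target] - log_norm
    return total / (m * m)


gt = [[1, 0], [0, 1]]
base = [[[0.0, 0.0], [0.0, 0.0]] for _ in range(3)]  # K = 3 classes, 2 x 2 masks
changed = base[:2] + [[[5.0, 5.0], [5.0, 5.0]]]       # raise class 2's logits only
print(sigmoid_mask_loss(base, gt, 1) == sigmoid_mask_loss(changed, gt, 1))  # True
print(softmax_mask_loss(changed, gt, 1) > softmax_mask_loss(base, gt, 1))   # True
```

Raising the logits of an unrelated class changes the softmax loss but leaves the sigmoid loss untouched. This is what "masks across classes do not compete" means in practice.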

RoIAlign

RoIAlign is useful in the second stage, where features are extracted from each candidate box. For each input RoI, a small feature map is extracted (originally with RoIPool), and the mask branch, an FCN (Fully Convolutional Network), predicts a mask from it. Because a mask encodes the spatial layout of the object, it depends on pixel-to-pixel correspondence between the RoI features and the image.

Two properties are desired along the way: pixel-to-pixel correspondence, and no quantization of any coordinates involved in the RoI, its bins, or the sampling points. Pixel-to-pixel correspondence means that the extracted features stay aligned with the region of the input image they describe. If they are misaligned, spatial information is lost and the predicted mask no longer lines up with the object's pixels.

RoIPool is the standard operation for extracting a small feature map (e.g., 7×7) from each RoI. However, it first quantizes the floating-point RoI to the discrete granularity of the feature map, and then subdivides it into spatial bins whose boundaries are quantized again. These quantizations introduce misalignments between the RoI and the extracted features, which hurts the prediction of pixel-accurate masks. RoIAlign therefore removes all quantization: it uses bilinear interpolation to compute the exact values of the input features at four regularly sampled locations in each RoI bin, and aggregates the result (using max or average). The results are not sensitive to the exact sampling locations or to how many points are sampled, as long as no quantization is performed.
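A minimal sketch of RoIAlign on a single-channel feature map follows. It assumes feature values sit at integer coordinates and that the backbone stride is given in pixels; conventions vary between implementations, for example in how half-pixel offsets are handled. The RoI and its bins keep fractional coordinates, each bin is sampled at a 2 × 2 grid of regularly spaced points by bilinear interpolation, and the samples are averaged (max would also fit the description). `bilinear` and `roi_align` are hypothetical names.

```python
def bilinear(feature, y, x):
    """Bilinearly interpolate a single-channel H x W feature map at real (y, x)."""
    H, W = len(feature), len(feature[0])
    y = min(max(y, 0.0), H - 1.0)
    x = min(max(x, 0.0), W - 1.0)
    y0, x0 = int(y), int(x)  # floor, since y and x are non-negative here
    y1, x1 = min(y0 + 1, H - 1), min(x0 + 1, W - 1)
    wy, wx = y - y0, x - x0
    top = feature[y0][x0] * (1 - wx) + feature[y0][x1] * wx
    bottom = feature[y1][x0] * (1 - wx) + feature[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


def roi_align(feature, roi, out_size, stride, samples=2):
    """RoIAlign-style pooling of one RoI with no rounding anywhere.

    roi: (x1, y1, x2, y2) in image pixels; stride: image pixels per feature cell.
    Each output bin averages samples x samples bilinear samples (2 x 2 = 4 points).
    """
    x1, y1, x2, y2 = (c / stride for c in roi)  # fractional feature coordinates
    bin_w, bin_h = (x2 - x1) / out_size, (y2 - y1) / out_size
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            values = []
            for sy in range(samples):
                for sx in range(samples):
                    # Regularly spaced sample points inside bin (i, j).
                    y = y1 + (i + (sy + 0.5) / samples) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples) * bin_w
                    values.append(bilinear(feature, y, x))
            row.append(sum(values) / len(values))
        out.append(row)
    return out


feature = [[float(x) for x in range(8)] for _ in range(8)]  # value equals the x coordinate
print(roi_align(feature, (0, 0, 50, 50), 1, 16))  # [[1.5625]]: centre of the 0..3.125 extent
```

Because nothing is rounded, an RoI ending at image coordinate 50 with stride 16 (feature coordinate 3.125) keeps its fractional extent: on a feature map whose value equals the x coordinate, a 1 × 1 output returns 1.5625, the exact horizontal center of the RoI.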

The backbone networks used are ResNet and ResNeXt, with a depth of either 50 or 101 layers. ResNet-FPN (Feature Pyramid Network) is used for feature extraction.

Some implementation details should be mentioned. First, an RoI is considered positive if its IoU with a ground-truth box is at least 0.5, and negative otherwise. This matters because the mask loss [math]\displaystyle{ L_{mask} }[/math] is defined only on positive RoIs. Second, training is image-centric: images are resized so that their shorter edge is 800 pixels. At test time, the proposal number is 1000 for FPN, and the box prediction branch is run on these proposals. The mask branch is then applied to the 100 highest-scoring detection boxes. The mask branch predicts K masks per RoI, but only the k-th mask is used, where k is the class predicted by the classification branch. The m × m floating-point mask output is then resized to the RoI size and binarized at a threshold of 0.5.
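The positive/negative rule and the inference-time mask post-processing described above can be sketched in plain Python. The helper names are hypothetical, boxes are (x1, y1, x2, y2) tuples, and the nearest-neighbour resize in `finalize_mask` is an assumption made to keep the sketch short.

```python
def box_iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_positive_roi(roi, gt_boxes, threshold=0.5):
    """An RoI is positive if it overlaps some ground-truth box with IoU >= 0.5."""
    return any(box_iou(roi, gt) >= threshold for gt in gt_boxes)


def finalize_mask(mask_probs, k, out_h, out_w, threshold=0.5):
    """Keep only the predicted class's m x m mask, resize it to the RoI, binarize.

    mask_probs: K nested lists of per-pixel probabilities (one per class).
    Nearest-neighbour resizing is a simplifying assumption of this sketch.
    """
    probs = mask_probs[k]
    m = len(probs)
    result = []
    for y in range(out_h):
        src_y = min(y * m // out_h, m - 1)
        result.append([1 if probs[src_y][min(x * m // out_w, m - 1)] >= threshold else 0
                       for x in range(out_w)])
    return result


print(is_positive_roi((0, 0, 10, 10), [(0, 0, 10, 5)]))  # True: IoU = 0.5
print(is_positive_roi((0, 0, 10, 10), [(0, 0, 10, 4)]))  # False: IoU = 0.4
probs = [[[0.1, 0.9], [0.2, 0.8]],   # class 0
         [[0.7, 0.3], [0.6, 0.4]]]   # class 1 (the predicted class)
print(finalize_mask(probs, 1, 2, 4))  # [[1, 1, 0, 0], [1, 1, 0, 0]]
```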

Results

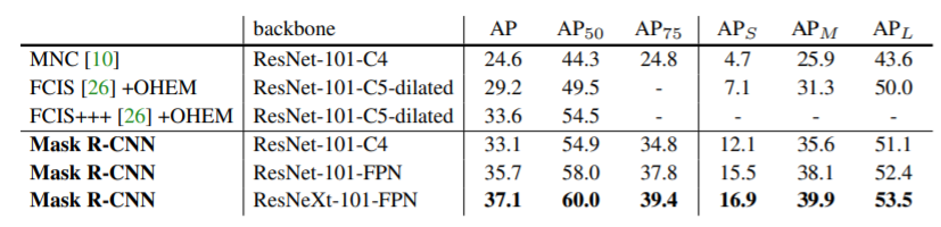

Instance Segmentation: On the COCO dataset, Mask R-CNN outperforms MNC and FCIS, the previous state-of-the-art models, on every metric in the comparison.
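The AP numbers in these tables come from matching predictions to ground truth using an IoU threshold. For instance segmentation, the overlap is measured between masks rather than boxes. A minimal sketch of mask IoU follows, using hypothetical helpers and binary masks stored as nested lists. COCO AP additionally averages precision over IoU thresholds from 0.5 to 0.95 in steps of 0.05, while AP50 and AP75 use a single threshold. The class matching and one-to-one assignment bookkeeping are omitted here.

```python
def mask_iou(pred, gt):
    """IoU between two equal-sized binary masks given as nested lists of 0/1."""
    inter = union = 0
    for p_row, g_row in zip(pred, gt):
        for p, g in zip(p_row, g_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    return inter / union if union else 0.0


def is_match(pred, gt, threshold):
    """Whether a prediction clears one IoU threshold (e.g. 0.75 for AP75)."""
    return mask_iou(pred, gt) >= threshold


pred = [[1, 1, 0], [1, 1, 0]]
gt = [[1, 1, 1], [1, 1, 0]]
print(mask_iou(pred, gt))        # 0.8
print(is_match(pred, gt, 0.75))  # True
print(is_match(pred, gt, 0.85))  # False
```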

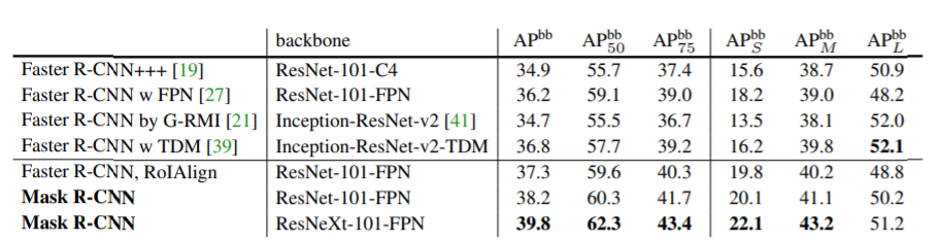

Bounding Box Detection: Mask R-CNN outperforms the base variants of all previous state-of-the-art models, including the winner of the COCO 2016 Detection Challenge.

Ablation Experiments

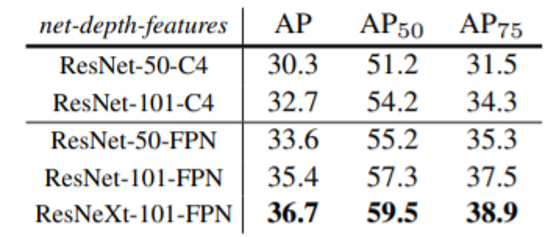

(a) Backbone Architecture: Better backbones bring expected gains: deeper networks do better, FPN outperforms C4 features, and ResNeXt improves on ResNet.

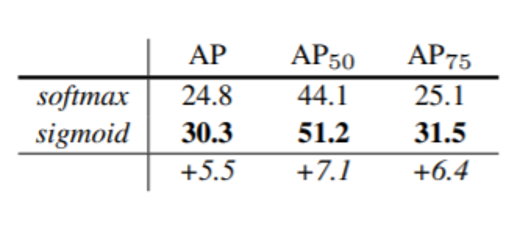

(b) Multinomial vs. Independent Masks (ResNet-50-C4): Decoupling via per-class binary masks (sigmoid) gives large gains over multinomial masks (softmax).

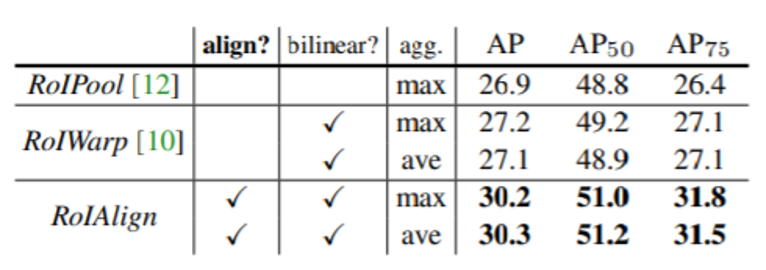

(c) RoIAlign (ResNet-50-C4): Mask results with various RoI layers. The RoIAlign layer improves AP by ∼3 points and AP75 by ∼5 points. Using proper alignment is the only factor that contributes to the large gap between RoI layers.

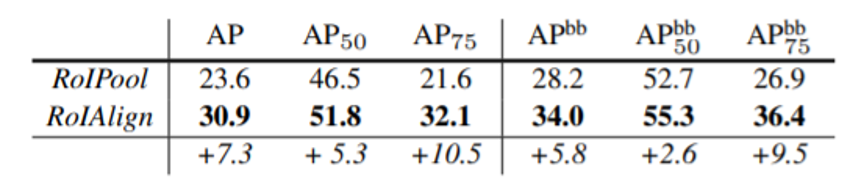

(d) RoIAlign (ResNet-50-C5, stride 32): Mask-level and box-level AP using large-stride features. Misalignments are more severe than with stride-16 features, resulting in big accuracy gaps.

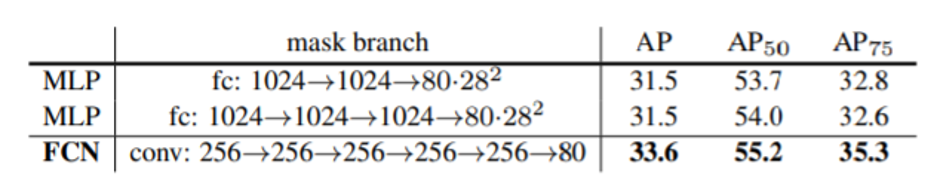

(e) Mask Branch (ResNet-50-FPN): Fully convolutional networks (FCN) vs. multi-layer perceptrons (MLP, fully-connected) for mask prediction. FCNs improve results as they take advantage of explicitly encoding spatial layout.

Human Pose Estimation

Mask RCNN can be extended to human pose estimation.

The simple approach the paper presents is to model a keypoint's location as a one-hot mask and adapt Mask R-CNN to predict K masks, one for each of K keypoint types (e.g., left shoulder, right elbow). With only minimal modifications, the model detects instance-specific poses while having minimal knowledge of human pose, which illustrates the generality of the framework.
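The one-hot keypoint formulation can be sketched as follows. Following the paper's description, each keypoint's training target is a single foreground pixel in an m × m grid, and the head is trained with a softmax over the m² positions. The resolution m = 56 and the helper names `keypoint_target` and `decode_keypoint` are assumptions for illustration. Encoding the target as a flat index is equivalent to the one-hot mask, since cross-entropy over m² classes only needs the index of the foreground pixel.

```python
def keypoint_target(kx, ky, roi, m=56):
    """Flat index of the single foreground pixel in an m x m one-hot keypoint mask.

    kx, ky: keypoint in image coordinates; roi: (x1, y1, x2, y2).
    Returns None when the keypoint falls outside the RoI (no target).
    """
    x1, y1, x2, y2 = roi
    if not (x1 <= kx < x2 and y1 <= ky < y2):
        return None
    col = min(int((kx - x1) / (x2 - x1) * m), m - 1)
    row = min(int((ky - y1) / (y2 - y1) * m), m - 1)
    return row * m + col  # the class label for an m*m-way softmax


def decode_keypoint(scores, roi, m=56):
    """Map the arg-max of m*m flat scores back to an image-space (x, y)."""
    best = max(range(m * m), key=lambda idx: scores[idx])
    row, col = divmod(best, m)
    x1, y1, x2, y2 = roi
    # Return the centre of the winning cell.
    return (x1 + (col + 0.5) * (x2 - x1) / m, y1 + (row + 0.5) * (y2 - y1) / m)


roi = (0.0, 0.0, 56.0, 56.0)
target = keypoint_target(10.3, 20.7, roi)
print(target)  # 1130, i.e. row 20, column 10
scores = [0.0] * (56 * 56)
scores[target] = 1.0
print(decode_keypoint(scores, roi))  # (10.5, 20.5)
```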

Experiments on Cityscapes

The model was also tested on the Cityscapes dataset. The authors used 2975 annotated images for training, 500 for validation, and 1525 for testing. The instance segmentation task involves eight categories: person, rider, car, truck, bus, train, motorcycle, and bicycle. Mask R-CNN achieved 26.2 AP on the test set, an over 30% relative improvement on the previous best entry.

Figure 12. Cityscapes Results

Conclusion

Mask RCNN is a deep neural network aimed at solving instance segmentation problems in computer vision. It is a conceptually simple, flexible, and general framework for object instance segmentation: it efficiently detects objects in an image while simultaneously generating a high-quality segmentation mask for each instance, and it can also be extended to human pose estimation. It extends Faster R-CNN by adding a branch for predicting an object mask in parallel with the existing branch for bounding-box recognition. Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at about 5 fps.

Critiques

In Faster R-CNN, the RoI boundary is quantized. Mask R-CNN instead avoids quantization and uses bilinear interpolation to compute exact feature values. Because the misalignment caused by quantization is removed, the number and location of sampling points have little impact on the result.

It may be better to compare the proposed model with other NN models or even non-NN methods like spectral clustering. Also, the applications can be further discussed like geometric mesh processing and motion analysis.

The paper lacks comparisons between Mask R-CNN and other methods on unlabeled data; it only briefly mentions that the authors found Mask R-CNN can benefit from extra data, even if the data is unlabeled.

The Mask RCNN has many practical applications as well. A particular example, where Mask RCNNs are applied would be in autonomous vehicles. Namely, it would be able to help with isolating pedestrians, other vehicles, lights, etc.

The Mask RCNN could be a candidate model to do short-term predictions on the physical behaviors of a person, which could be very useful at crime scenes.

For the most part, instance segmentation is now quite achievable, and it’s time to start thinking about innovative ways of using this idea of doing computer vision algorithms at a pixel by pixel level such as the DensePose algorithm.

An interesting application of Mask RCNN would be face recognition from CCTV footage. CCTV often captures blurry pictures of crowds, and Mask RCNN could be applied to distinguish each person.

The main problem for two-stage architectures like Mask RCNN is running time. Because of this, single-shot detectors are preferred for applications such as video or live-stream detection, where a faster running time means a better response to changes between frames. It would be beneficial to have a graphical comparison of Mask RCNN running times against single-shot detectors such as YOLOv3.

It would be interesting to investigate combining instance segmentation with semantic segmentation to improve time performance, because in many situations knowing the exact boundary of each object is not necessary.

It would be better to have more comparisons with other models. It would also be nice to have more detail about why Mask RCNN performs better and how efficient it is.

The authors mentioned that Mask R-CNN is a deep neural network architecture for Instance Segmentation. It's better to include more background information about this task. For example, challenges of this task (e.g. the model will need to take into account the overlapping of objects) and limitations of existing methods.

It would be interesting to see whether a post-processing step with conditional random fields (CRF) improves segmentation. It would also have been interesting to see the method's performance with lighter backbones, since the backbones used have a very large inference time, which makes them unsuitable for many applications.

An extension of the application of Mask RCNN in medical AI is to highlight areas of an MRI scan that correlate to certain behavioral/psychological patterns.

The use of these in medical imaging systems seems rather useful, but it can also be extended to more general CCTV camera systems which can also detect physiological patterns.

In the Human Pose Estimation section, Mask RCNN is assumed to have no knowledge of human poses, and all predictions are based on keypoints on the human body, such as the left shoulder and right elbow. Better performance may be achievable, because this approach depends strongly on correctly localizing each body part: if the model misplaces the left shoulder, the pose estimate will be poor. It is important to remove this dependency on preceding predictions, so that fair performance can still be expected even when earlier steps fail.

It will be interesting to see if applying dropout can boost this Mask RCNN architecture's performance.

It would be interesting to apply Mask RCNN to human faces and see how it distinguishes each individual. It would also be nice to see how the technical calculations, such as classification and prediction, are carried out.

It would be interesting to know how Mask RCNN performs on unbalanced data and how its performance compares with other models in these circumstances.

The authors omitted the details of training and the computational cost of training the model. Since Mask RCNN combines stages 3 and 4 (SVM classification and bounding-box regression), how does this affect its computational cost? Similar architectures have long training times, so it would be useful to know this model's runtime compared with other models.

It's amazing what these researchers were able to achieve with adding minimal overhead, and how well it generalizes using two completely different datasets. For the future work it would be nice to see if the model is able to also predict the distance between the objects that overlap in an image, without adding any further significant overhead.

Additionally, it would be nice to see how well the model can predict collisions between objects, given that it currently runs at 5 frames per second (which is still impressive; it would just be interesting to see how much more is possible).

It would be more helpful if metrics of measuring classification errors are introduced briefly. Otherwise, it can be confusing.

Since the Mask R-CNN model can be used for human pose estimation, could it be further trained for dangerous-workplace training simulations? For example, for a window-cleaning worker in training, the model could estimate the worker's posture and send the data to another pipeline that estimates the danger level or correctness of the posture. Secondly, since it can estimate human posture, could it be used to estimate approaching live traffic on site to aid autonomous driving? Also, even though the model is built on ResNet variants and backbones, why not provide a visual comparison between the original ResNet and Mask RCNN to show the improvements? Tables were given, but a graph of training accuracy and loss would help.

Interesting Directions

There is recent work on ResNeSt: Split-Attention Networks (https://arxiv.org/abs/2004.08955), which uses an explicit soft attention mechanism over channels within a ResNeXt style architecture which shows improvements to classification. It would be interesting to use this backbone with Mask R-CNN and see if the attention helps capture longer range dependencies and thus produce better segmentations.

References

[1] Kaiming He, Georgia Gkioxari, Piotr Dollár, Ross Girshick. Mask R-CNN. arXiv:1703.06870, 2017.

[2] Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv:1506.01497, 2015.

[3] Tsung-Yi Lin, Michael Maire, Serge Belongie, Lubomir Bourdev, Ross Girshick, James Hays, Pietro Perona, Deva Ramanan, C. Lawrence Zitnick, Piotr Dollár. Microsoft COCO: Common Objects in Context. arXiv:1405.0312, 2015.