stat841: Difference between revisions

| Line 27: | Line 27: | ||

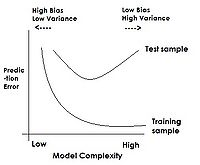

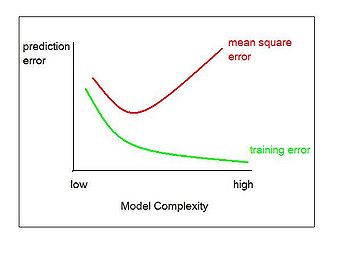

=== Error rate === | === Error rate === | ||

:''' | : The '''true error rate''' <math>\,L(h)</math> of a classifier having classification rule <math>\,h</math> is defined as the probability that <math>\,h</math> misclassifies a new data input, i.e., it is defined as <math>\,L(h)=P(h(X) \neq Y)</math>. Here, <math>\,X \in \mathcal{X}</math> and <math>\,Y \in \mathcal{Y}</math> are the known feature values and the true class of that input, respectively. | ||

:''' | : The '''empirical error rate''' (or '''training error rate''') of a classifier having classification rule <math>\,h</math> is defined as the frequency at which <math>\,h</math> does not correctly classify the data inputs in the training set, i.e., it is defined as | ||

<math>\,\hat{L}_{n} = \frac{1}{n} \sum_{i=1}^{n} I(h(X_{i}) \neq Y_{i})</math>, where <math>\,I</math> is an indicator variable and <math>\,I = \left\{\begin{matrix} 1 &\text{if } h(X_i) \neq Y_i \\ 0 &\text{if } h(X_i) = Y_i \end{matrix}\right.</math>. Here, | |||

1 & h(X_i) \neq Y_i \\ | <math>\,X_{i} \in \mathcal{X}</math> and <math>\,Y_{i} \in \mathcal{Y}</math> are the known feature values and the true class of the <math>\,i^{th}</math> training input, respectively. | ||

0 & h(X_i)=Y_i \end{matrix}\right.</math>. | |||
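As a minimal sketch of the empirical error rate defined above, the following Python snippet counts the training inputs that a rule misclassifies and divides by <math>\,n</math>. The function names and the toy threshold rule are assumptions made for illustration only; they do not come from the course notes.

```python
def empirical_error_rate(h, xs, ys):
    """Fraction of training pairs (x_i, y_i) for which h(x_i) != y_i."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("xs and ys must be non-empty and of equal length")
    # The indicator I(h(X_i) != Y_i) is 1 for a mistake and 0 otherwise.
    mistakes = sum(1 for x, y in zip(xs, ys) if h(x) != y)
    return mistakes / len(xs)


def threshold_rule(x):
    """Hypothetical one-dimensional classification rule (illustration only)."""
    return 1 if x > 0.5 else 0


xs = [0.1, 0.4, 0.6, 0.9, 0.7]
ys = [0, 1, 1, 1, 0]
error = empirical_error_rate(threshold_rule, xs, ys)
print(error)
```

Here the rule misclassifies the second and fifth inputs, so two of the five indicators equal 1.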

=== Bayes Classifier === | === Bayes Classifier === | ||

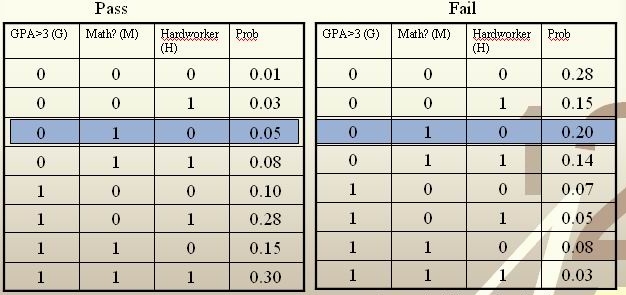

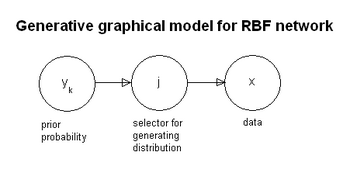

The principle of Bayes Classifier is to calculate the posterior probability of a given object from its prior probability via Bayes formula, and then place the object in the class with the largest posterior probability. Intuitively speaking, to classify <math>\,x\in \mathcal{X}</math> we find <math>y \in \mathcal{Y}</math> such that <math>\,P(Y=y|X=x)</math> is maximum over all the members of <math>\mathcal{Y}</math>. | The principle of Bayes Classifier is to calculate the posterior probability of a given object from its prior probability via Bayes formula, and then place the object in the class with the largest posterior probability<ref> http://www.wikicoursenote.com/wiki/Stat841f11#Bayes_Classifier </ref> | ||

Intuitively speaking, to classify <math>\,x\in \mathcal{X}</math> we find <math>y \in \mathcal{Y}</math> such that <math>\,P(Y=y|X=x)</math> is maximum over all the members of <math>\mathcal{Y}</math>. | |||

Mathematically, for <math>\,k</math> classes and given object <math>\,X=x</math>, we find <math>\,y\in \mathcal{Y}</math> which | Mathematically, for <math>\,k</math> classes and given object <math>\,X=x</math>, we find <math>\,y\in \mathcal{Y}</math> which | ||

| Line 74: | Line 73: | ||

1) Empirical Risk Minimization: Choose a set of classifiers <math>\mathcal{H}</math> and find <math>\,h^*\in \mathcal{H}</math> that minimizes some estimate of <math>\,L(h)</math> | 1) Empirical Risk Minimization: Choose a set of classifiers <math>\mathcal{H}</math> and find <math>\,h^*\in \mathcal{H}</math> that minimizes some estimate of <math>\,L(h)</math> | ||

2) Regression: Find an estimate <math> (\hat r) </math> of the function <math> r </math> and | 2) Regression: Find an estimate <math> (\hat r) </math> of the function <math> r </math> and define | ||

:<math>\, h(X)= \left\{\begin{matrix} | :<math>\, h(X)= \left\{\begin{matrix} | ||

1 & \hat r(x)>\frac{1}{2} \\ | 1 & \hat r(x)>\frac{1}{2} \\ | ||

| Line 180: | Line 179: | ||

* <math>\,\pi_k</math> is called the [http://en.wikipedia.org/wiki/Prior_probability '''prior probability''']. This is a probability distribution that represents what we know (or believe we know) about a population. | * <math>\,\pi_k</math> is called the [http://en.wikipedia.org/wiki/Prior_probability '''prior probability''']. This is a probability distribution that represents what we know (or believe we know) about a population. | ||

* <math>\,\Sigma_k</math> is the sum with respect to all <math>\,k</math> classes. | * <math>\,\Sigma_k</math> is the sum with respect to all <math>\,k</math> classes. | ||

====Approaches==== | ====Approaches==== | ||

Representing the | Representing the optimal method, the Bayes classifier nevertheless cannot be used in most practical situations, since the prior probability is usually unknown. Fortunately, other methods of classification have been developed. These methods fall into three general categories. | ||

1 Empirical Risk Minimization: Choose a set of classifiers <math>\mathcal{H}</math> and find <math>\,h^* \in \mathcal{H}</math> that minimizes some estimate of <math>\,L(h)</math>. | 1 [http://en.wikipedia.org/wiki/Supervised_learning Empirical Risk Minimization]: Choose a set of classifiers <math>\mathcal{H}</math> and find <math>\,h^* \in \mathcal{H}</math> that minimizes some estimate of <math>\,L(h)</math>. | ||

2 Regression: Find an estimate <math> (\hat r) </math> of the function <math> r </math> and define | 2 Regression: Find an estimate <math> (\hat r) </math> of the function <math>\ r </math> and define | ||

:<math>\, h(X)= \left\{\begin{matrix} | :<math>\, h(X)= \left\{\begin{matrix} | ||

1 & \hat r(x)>\frac{1}{2} \\ | 1 & \hat r(x)>\frac{1}{2} \\ | ||

0 & \mathrm{otherwise} \end{matrix}\right.</math> | 0 & \mathrm{otherwise} \end{matrix}\right.</math> | ||

3 Density estimation, estimate <math>P(X = x|Y = 0)</math> and <math>P(X = x|Y = 1)</math> | 3 [http://en.wikipedia.org/wiki/Density_estimation Density estimation], estimate <math>\ P(X = x|Y = 0)</math> and <math>\ P(X = x|Y = 1)</math> | ||

The third approach, in this form, is not popular because density estimation doesn't work very well with dimension greater than 2. | Note:<br /> | ||

The third approach, in this form, is not popular because density estimation doesn't work very well with dimension greater than 2. However this approach is the simplest and we can assume a parametric model for the densities. | |||

Linear Discriminant Analysis and Quadratic Discriminant Analysis are examples of the third approach, density estimation. | Linear Discriminant Analysis and Quadratic Discriminant Analysis are examples of the third approach, density estimation. | ||

| Line 208: | Line 206: | ||

====History==== | ====History==== | ||

The name Linear Discriminant Analysis comes from the fact that these simplifications produce a linear model, which is used to discriminate between classes. In many cases, this simple model is sufficient to provide a near optimal classification - for example, the Z-Score credit risk model, designed by Edward Altman in 1968, which is essentially a weighted LDA, [http://pages.stern.nyu.edu/~ealtman/Zscores.pdf revisited in 2000], has shown an 85-90% success rate predicting bankruptcy, and is still in use today. | The name Linear Discriminant Analysis comes from the fact that these simplifications produce a linear model, which is used to discriminate between classes. In many cases, this simple model is sufficient to provide a near optimal classification - for example, the Z-Score credit risk model, designed by Edward Altman in 1968, which is essentially a weighted LDA, [http://pages.stern.nyu.edu/~ealtman/Zscores.pdf revisited in 2000], has shown an 85-90% success rate predicting bankruptcy, and is still in use today. | ||

'''Purpose''' | |||

1 feature selection | |||

2 determining which classification rule best separates the classes | |||

====Definition==== | ====Definition==== | ||

To perform LDA we make two assumptions. | To perform [http://en.wikipedia.org/wiki/Linear_discriminant_analysis LDA] we make two assumptions. | ||

* The clusters belonging to all classes each follow a multivariate normal distribution. <br /><math>x \in \mathbb{R}^d</math> <math>f_k(x)=\frac{1}{ (2\pi)^{d/2}|\Sigma_k|^{1/2} }\exp\left( -\frac{1}{2} [x - \mu_k]^\top \Sigma_k^{-1} [x - \mu_k] \right)</math> | |||

where <math>\ f_k(x)</math> is a class conditional density | |||

* Simplification Assumption: Each cluster has the same covariance matrix <math>\,\Sigma</math>, i.e. <math>\Sigma_k = \Sigma \ \forall k</math>. | |||

We wish to solve for the boundary where the error rates for classifying a point are equal, where one side of the boundary gives a lower error rate for one class and the other side gives a lower error rate for the other class. | We wish to solve for the [http://en.wikipedia.org/wiki/Decision_boundary decision boundary] where the error rates for classifying a point are equal, where one side of the boundary gives a lower error rate for one class and the other side gives a lower error rate for the other class. | ||

So we solve <math>\,r_k(x)=r_l(x)</math> for all the pairwise combinations of classes. | So we solve <math>\,r_k(x)=r_l(x)</math> for all the pairwise combinations of classes. | ||

| Line 247: | Line 254: | ||

<math>\,\Rightarrow \log(\frac{\pi_k}{\pi_l})-\frac{1}{2}\left( \mu_k^\top\Sigma^{-1}\mu_k-\mu_l^\top\Sigma^{-1}\mu_l - 2x^\top\Sigma^{-1}(\mu_k-\mu_l) \right)=0</math> after canceling out like terms and factoring. | <math>\,\Rightarrow \log(\frac{\pi_k}{\pi_l})-\frac{1}{2}\left( \mu_k^\top\Sigma^{-1}\mu_k-\mu_l^\top\Sigma^{-1}\mu_l - 2x^\top\Sigma^{-1}(\mu_k-\mu_l) \right)=0</math> after canceling out like terms and factoring. | ||

We can see that this is a linear function in x with general form <math>\,ax+b=0</math>. | We can see that this is a linear function in <math>\ x </math> with general form <math>\,ax+b=0</math>. | ||

Actually, this linear log function shows that the decision boundary between class <math>k</math> and class <math>l</math>, i.e. <math> | Actually, this linear log function shows that the decision boundary between class <math>\ k </math> and class <math>\ l </math>, i.e. <math>\ P(G=k|X=x)=P(G=l|X=x) </math>, is linear in <math>\ x</math>. Given any pair of classes, decision boundaries are always linear. In <math>\ d</math> dimensions, we separate regions by hyperplanes. | ||

In the special case where the number of samples from each class is equal (<math>\,\pi_k=\pi_l</math>), the boundary surface or line lies halfway between <math>\,\mu_l</math> and <math>\,\mu_k</math> | In the special case where the number of samples from each class is equal (<math>\,\pi_k=\pi_l</math>), the boundary surface or line lies halfway between <math>\,\mu_l</math> and <math>\,\mu_k</math> | ||
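The linear boundary can be checked numerically. The sketch below reads the coefficients off the equation above as <math>\,a=\Sigma^{-1}(\mu_k-\mu_l)</math> and <math>\,b=\log(\pi_k/\pi_l)-\frac{1}{2}(\mu_k^\top\Sigma^{-1}\mu_k-\mu_l^\top\Sigma^{-1}\mu_l)</math>, so that the boundary is <math>\,a^\top x+b=0</math>. The helper names, the restriction to two dimensions, and the numbers are assumptions chosen for illustration.

```python
import math


def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def lda_boundary(mu_k, mu_l, sigma, pi_k, pi_l):
    """Return (a, b) such that the LDA boundary is a.x + b = 0."""
    sigma_inv = inv2(sigma)
    diff = [p - q for p, q in zip(mu_k, mu_l)]
    a = matvec(sigma_inv, diff)
    b = math.log(pi_k / pi_l) - 0.5 * (
        dot(mu_k, matvec(sigma_inv, mu_k)) - dot(mu_l, matvec(sigma_inv, mu_l))
    )
    return a, b


# Illustrative values (not taken from the notes).
mu_k, mu_l = [2.0, 0.0], [0.0, 2.0]
sigma = [[2.0, 0.5], [0.5, 1.0]]
a, b = lda_boundary(mu_k, mu_l, sigma, 0.5, 0.5)

# With equal priors the midpoint of the two means lies on the boundary.
midpoint = [(p + q) / 2 for p, q in zip(mu_k, mu_l)]
residual = dot(a, midpoint) + b
print(a, b, residual)
```

With unequal priors the term <math>\,\log(\pi_k/\pi_l)</math> is non-zero and shifts the boundary away from the midpoint, towards the mean of the less probable class.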

====Limitation==== | |||

* LDA implicitly assumes Gaussian distribution of data. | |||

* LDA implicitly assumes that the mean is the discriminating factor, not variance. | |||

* LDA may overfit the data. | |||

===QDA=== | ===QDA=== | ||

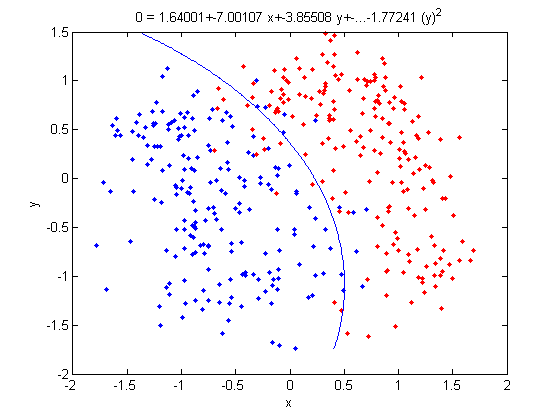

The concept | The concept uses the same idea as LDA of finding a boundary where the error rates for classification between classes are equal, except that the assumption that each cluster has the same covariance <math>\,\Sigma</math>, equal to the mean covariance of <math>\Sigma_k \forall k</math>, is removed. We can test this assumption with a hypothesis test of <math>\ H_0: \Sigma_k = \Sigma \ \forall k</math>. The best method is the likelihood ratio test. | ||

| Line 281: | Line 294: | ||

== '''Linear and Quadratic Discriminant Analysis cont'd - October 5, 2009''' == | == '''Linear and Quadratic Discriminant Analysis cont'd - October 5, 2009''' == | ||

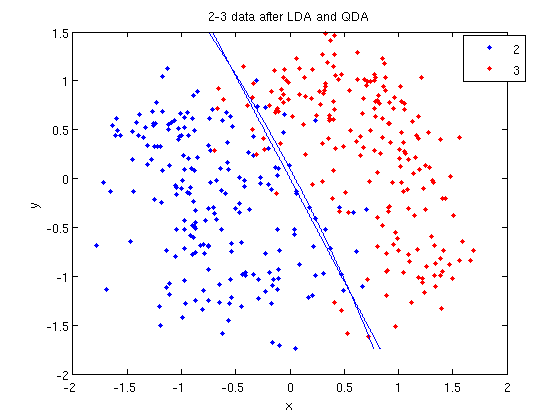

Linear discriminant analysis[http://en.wikipedia.org/wiki/Linear_discriminant_analysis] is a statistical method used to find the ''linear combination'' of features which best separate two or more classes of objects or events. It is widely applied in classifying diseases, positioning, product management, and marketing research. | |||

Quadratic Discriminant Analysis[http://en.wikipedia.org/wiki/Quadratic_classifier], on the other hand, aims to find the ''quadratic combination'' of features. It is more general than Linear discriminant analysis. Unlike LDA however, in QDA there is no assumption that the covariance of each of the classes is identical. When the normality assumption is true, the best possible test for the hypothesis that a given measurement is from a given class is the [http://en.wikipedia.org/wiki/Likelihood-ratio_test likelihood ratio test]. Suppose the means of each class are known to be <math> \mu_{y=0},\mu_{y=1} </math> and the covariances <math> \Sigma_{y=0}, \Sigma_{y=1} </math>. Then the likelihood ratio will be given by | |||

:Likelihood ratio = <math> \frac{ \left( (2\pi)^{d/2} |\Sigma_{y=1}|^{1/2} \right)^{-1} \exp \left( -\frac{1}{2}(x-\mu_{y=1})^T \Sigma_{y=1}^{-1} (x-\mu_{y=1}) \right) }{ \left( (2\pi)^{d/2} |\Sigma_{y=0}|^{1/2} \right)^{-1} \exp \left( -\frac{1}{2}(x-\mu_{y=0})^T \Sigma_{y=0}^{-1} (x-\mu_{y=0}) \right)} < t </math> | |||

for some threshold t. After some rearrangement, it can be shown that the resulting separating surface between the classes is a quadratic. | |||

===Summarizing LDA and QDA=== | ===Summarizing LDA and QDA=== | ||

We can summarize what we have learned on LDA and QDA so far into the following theorem. | We can summarize what we have learned on [http://academicearth.org/lectures/advice-for-applying-machine-learning LDA and QDA] so far into the following theorem. | ||

'''Theorem''': | '''Theorem''': | ||

| Line 315: | Line 335: | ||

<math>\,\Sigma=\frac{\sum_{r=1}^{k}(n_r\Sigma_r)}{\sum_{l=1}^{k}(n_l)} </math> | <math>\,\Sigma=\frac{\sum_{r=1}^{k}(n_r\Sigma_r)}{\sum_{l=1}^{k}(n_l)} </math> | ||

This is a Maximum Likelihood estimate. | |||
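As a small sketch of the pooled estimate above, the following Python function forms the weighted average of per-class covariance matrices, with each class weighted by its sample count <math>\,n_r</math>. The function name and the example matrices are assumptions for illustration.

```python
def pooled_covariance(class_covs, class_sizes):
    """Weighted average of per-class covariances: sum(n_r * Sigma_r) / sum(n_l)."""
    if len(class_covs) != len(class_sizes) or not class_covs:
        raise ValueError("need one sample count per covariance matrix")
    total = sum(class_sizes)
    d = len(class_covs[0])
    return [
        [
            sum(n * cov[i][j] for cov, n in zip(class_covs, class_sizes)) / total
            for j in range(d)
        ]
        for i in range(d)
    ]


# Illustrative per-class estimates (not taken from the notes).
sigma_1 = [[1.0, 0.0], [0.0, 1.0]]   # estimated from 30 points
sigma_2 = [[3.0, 1.0], [1.0, 2.0]]   # estimated from 10 points
pooled = pooled_covariance([sigma_1, sigma_2], [30, 10])
print(pooled)
```

Because the first class contributes three times as many points, the pooled matrix sits closer to <math>\,\Sigma_1</math> than to <math>\,\Sigma_2</math>.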

===Computation=== | ===Computation=== | ||

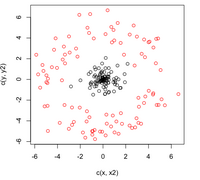

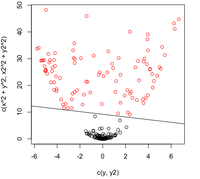

'''Case 1: (Example) <math>\, \Sigma_k = I </math>''' | '''Case 1: (Example) <math>\, \Sigma_k = I </math>''' | ||

[[File:case1.jpg|300px|thumb|right]] | |||

This means that the data is distributed symmetrically around the center <math>\mu</math>, i.e. the isocontours are all circles. | This means that the data is distributed symmetrically around the center <math>\mu</math>, i.e. the isocontours are all circles. | ||

| Line 327: | Line 350: | ||

<math> \,\delta_k = - \frac{1}{2}log(|I|) - \frac{1}{2}(x-\mu_k)^\top I(x-\mu_k) + log (\pi_k) </math> | <math> \,\delta_k = - \frac{1}{2}log(|I|) - \frac{1}{2}(x-\mu_k)^\top I(x-\mu_k) + log (\pi_k) </math> | ||

We see that the first term in the above equation, <math>\,\frac{1}{2}log(|I|)</math>, is zero. The second term contains <math>\, (x-\mu_k)^\top I(x-\mu_k) = (x-\mu_k)^\top(x-\mu_k) </math>, which is the squared Euclidean distance between <math>\,x</math> and <math>\,\mu_k</math>. Therefore we can find the distance between a point and each center and adjust it with the log of the prior, <math>\,log(\pi_k)</math>. The class that has the minimum distance will maximise <math>\,\delta_k</math>. According to the theorem, we can then classify the point to a specific class <math>\,k</math>. In addition, <math>\, \Sigma_k = I </math> implies that our data is spherical. | We see that the first term in the above equation, <math>\,\frac{1}{2}log(|I|)</math>, is zero since <math>\ |I| </math> is the determinant of <math>\ I </math> and <math>\ |I|=1 </math>. The second term contains <math>\, (x-\mu_k)^\top I(x-\mu_k) = (x-\mu_k)^\top(x-\mu_k) </math>, which is the [http://www.improvedoutcomes.com/docs/WebSiteDocs/Clustering/Clustering_Parameters/Euclidean_and_Euclidean_Squared_Distance_Metrics.htm squared Euclidean distance] between <math>\,x</math> and <math>\,\mu_k</math>. Therefore we can find the distance between a point and each center and adjust it with the log of the prior, <math>\,log(\pi_k)</math>. The class that has the minimum distance will maximise <math>\,\delta_k</math>. According to the theorem, we can then classify the point to a specific class <math>\,k</math>. In addition, <math>\, \Sigma_k = I </math> implies that our data is spherical. | ||
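A minimal sketch of this special case: with <math>\, \Sigma_k = I </math> the discriminant reduces to <math>\,\delta_k = -\frac{1}{2}(x-\mu_k)^\top(x-\mu_k) + \log(\pi_k)</math>, so classification only needs squared Euclidean distances and the log priors. The function names and the numbers below are assumptions for illustration.

```python
import math


def delta_identity(x, mu, prior):
    """Discriminant for Sigma_k = I: -0.5 * ||x - mu||^2 + log(prior)."""
    sq_dist = sum((xi - mi) ** 2 for xi, mi in zip(x, mu))
    return -0.5 * sq_dist + math.log(prior)


def classify(x, means, priors):
    """Index of the class whose discriminant delta_k is largest."""
    scores = [delta_identity(x, mu, p) for mu, p in zip(means, priors)]
    return max(range(len(scores)), key=scores.__getitem__)


# Illustrative class centers (not taken from the notes).
means = [[0.0, 0.0], [2.0, 0.0]]
x = [0.9, 0.0]

label_equal = classify(x, means, [0.5, 0.5])    # nearest center wins
label_skewed = classify(x, means, [0.1, 0.9])   # the prior shifts the decision
print(label_equal, label_skewed)
```

The point is slightly closer to the first center, so equal priors assign it to class 0. A strong prior for the second class outweighs the small difference in distance and assigns it to class 1.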

| Line 363: | Line 386: | ||

The answer is NO. Consider that you have two classes with different shapes, then consider transforming them to the same shape. Given a data point, justify which class this point belongs to. The question is, which transformation can you use? For example, if you use the transformation of class A, then you have assumed that this data point belongs to class A. | The answer is NO. Consider that you have two classes with different shapes, then consider transforming them to the same shape. Given a data point, justify which class this point belongs to. The question is, which transformation can you use? For example, if you use the transformation of class A, then you have assumed that this data point belongs to class A. | ||

[http://portal.acm.org/citation.cfm?id=1340851 Kernel QDA] | |||

In practice, QDA often fits the data better than LDA because it does not make LDA's assumption that the covariance matrix is identical for every class. However, QDA still assumes that the class-conditional distributions are Gaussian, which may not hold in practice. Another method, kernel QDA, does not make the Gaussian assumption and can work better in such cases. | |||

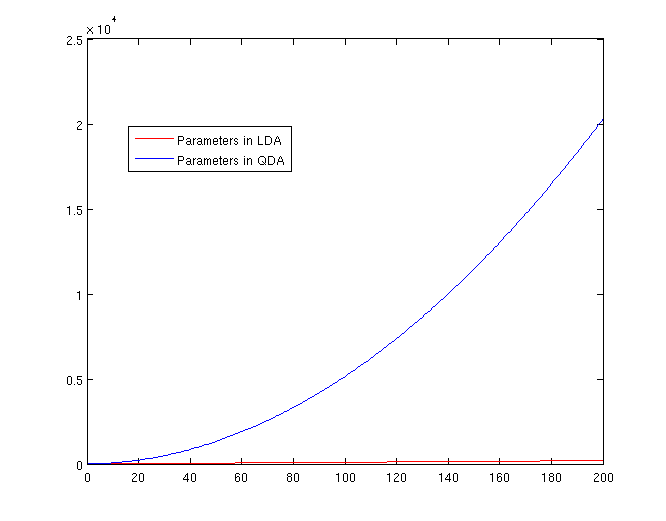

===The Number of Parameters in LDA and QDA=== | ===The Number of Parameters in LDA and QDA=== | ||

| Line 373: | Line 399: | ||

[[File:Lda-qda-parameters.png|frame|center|A plot of the number of parameters that must be estimated, in terms of (K-1). The x-axis represents the number of dimensions in the data. As is easy to see, QDA is far less robust than LDA for high-dimensional data sets.]] | [[File:Lda-qda-parameters.png|frame|center|A plot of the number of parameters that must be estimated, in terms of (K-1). The x-axis represents the number of dimensions in the data. As is easy to see, QDA is far less robust than LDA for high-dimensional data sets.]] | ||

Related links: | |||

LDA:[http://www.stat.psu.edu/~jiali/course/stat597e/notes2/lda.pdf] | |||

[http://www.dtreg.com/lda.htm] | |||

[http://biostatistics.oxfordjournals.org/cgi/reprint/kxj035v1.pdf Regularized linear discriminant analysis and its application in microarrays] | |||

[http://www.isip.piconepress.com/publications/reports/isip_internal/1998/linear_discrim_analysis/lda_theory.pdf MATHEMATICAL OPERATIONS OF LDA] | |||

[http://psychology.wikia.com/wiki/Linear_discriminant_analysis Application in face recognition and in market] | |||

QDA:[http://portal.acm.org/citation.cfm?id=1314542] | |||

[http://jmlr.csail.mit.edu/papers/volume8/srivastava07a/srivastava07a.pdf Bayes QDA] | |||

[http://www.uni-leipzig.de/~strimmer/lab/courses/ss06/seminar/slides/daniela-2x4.pdf LDA & QDA] | |||

== LDA and QDA in Matlab - October 7, 2009 == | == LDA and QDA in Matlab - October 7, 2009 == | ||

| Line 498: | Line 542: | ||

Then we can see that y=score, v=U. | Then we can see that y=score, v=U. | ||

'''Useful resources:''' | |||

LDA and QDA in Matlab[http://www.mathworks.com/products/statistics/demos.html?file=/products/demos/shipping/stats/classdemo.html],[http://www.mathworks.com/matlabcentral/fileexchange/189],[http://seed.ucsd.edu/~cse190/media07/MatlabClassificationDemo.pdf] | |||

== Trick: Using LDA to do QDA - October 7, 2009 == | == Trick: Using LDA to do QDA - October 7, 2009 == | ||

| Line 517: | Line 564: | ||

<math>y = \underline{w}^Tx</math> | <math>y = \underline{w}^Tx</math> | ||

where <math>\underline{w}</math> is a d-dimensional column vector, and <math style="vertical-align:0%;">x\ \epsilon\ \ | where <math>\underline{w}</math> is a d-dimensional column vector, and <math style="vertical-align:0%;">x\ \epsilon\ \mathbb{R}^d</math> (vector in d dimensions). | ||

We also have a non-linear function <math>g(x) = y = x^Tvx + \underline{w}^Tx</math> that we cannot estimate. | We also have a non-linear function <math>g(x) = y = x^Tvx + \underline{w}^Tx</math> that we cannot estimate. | ||

| Line 623: | Line 670: | ||

: Labeling the lines directly on the graph makes it easier to interpret. | : Labeling the lines directly on the graph makes it easier to interpret. | ||

== | |||

=== Distance Metric Learning VS FDA === | |||

In many fundamental machine learning problems, the Euclidean distances between data points do not represent the desired topology that we are trying to capture. Kernel methods address this problem by mapping the points into new spaces where Euclidean distances may be more useful. An alternative approach is to construct a Mahalanobis distance (quadratic Gaussian metric) over the input space and use it in place of Euclidean distances. This | |||

approach can be equivalently interpreted as a linear transformation of the original inputs, followed by Euclidean distance in the projected space. This approach has attracted a lot of recent interest. | |||

Some of the proposed algorithms are iterative and computationally expensive. The paper "[http://www.aaai.org/Papers/AAAI/2008/AAAI08-095.pdf Distance Metric Learning VS FDA]", written by our instructor, proposes a closed-form solution to one algorithm that previously required expensive semidefinite optimization. It provides a new problem setup in which the algorithm performs better than or as well as some standard methods, but without the computational complexity. Furthermore, it shows a strong relationship between these methods and the Fisher Discriminant Analysis (FDA). It also extends the approach by kernelizing it, allowing for non-linear transformations of the metric. | |||

== Fisher's Discriminant Analysis (FDA) - October 9, 2009 == | |||

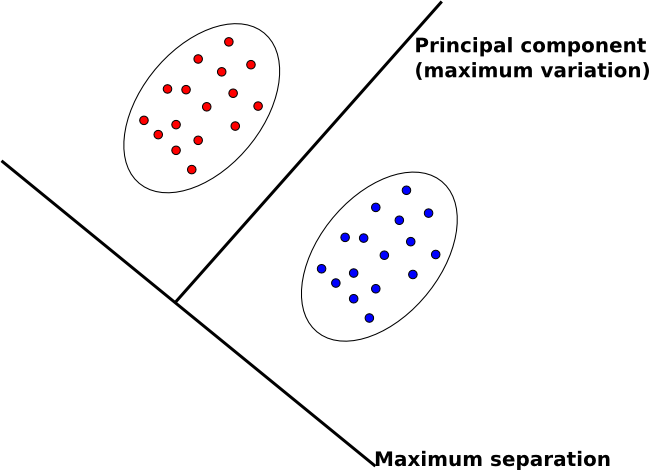

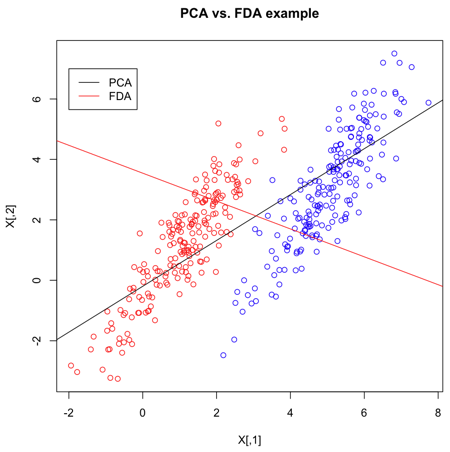

The goal of FDA is to reduce the dimensionality of data in order to have separable data points in a new space. | The goal of FDA is to reduce the dimensionality of data in order to have separable data points in a new space. | ||

| Line 630: | Line 684: | ||

* multi-class problem | * multi-class problem | ||

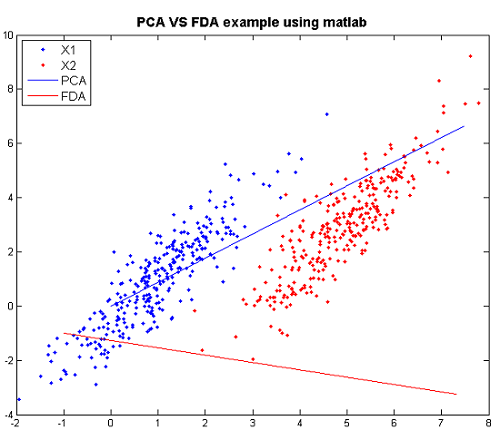

=== Two-class problem | [[File:graph.jpg|500px|thumb|right| PCA vs FDA]] | ||

=== Two-class problem === | |||

In the two-class problem, we have the pre-knowledge that data points belong to two classes. Intuitively speaking points of each class form a cloud around the mean of the class, with each class having possibly different size. To be able to separate the two classes we must determine the class whose mean is closest to a given point while also accounting for the different size of each class, which is represented by the covariance of each class. | In the two-class problem, we have the pre-knowledge that data points belong to two classes. Intuitively speaking points of each class form a cloud around the mean of the class, with each class having possibly different size. To be able to separate the two classes we must determine the class whose mean is closest to a given point while also accounting for the different size of each class, which is represented by the covariance of each class. | ||

| Line 646: | Line 702: | ||

<br/> | <br/> | ||

<br/> | <br/> | ||

Original points are <math>\underline{x_{i}} \in \mathbb{R}^{d}</math><br /> | Original points are <math>\underline{x_{i}} \in \mathbb{R}^{d}</math><br /> <math>\ \{ \underline x_1, \underline x_2, \cdots, \underline x_n \} </math> | ||

Projected points are <math>\underline{z_{i}} \in \mathbb{R}^{1}</math> with <math>\underline{z_{i}} = \underline{w}^T \cdot\underline{x_{i}}</math> | |||

Projected points are <math>\underline{z_{i}} \in \mathbb{R}^{1}</math> with <math>\underline{z_{i}} = \underline{w}^T \cdot\underline{x_{i}}</math>, where <math>\ z_i </math> is a scalar | |||

==== Between class covariance ==== | ==== Between class covariance ==== | ||

| Line 660: | Line 718: | ||

&= \underline{w}^T(\underline{\mu_{1}}-\underline{\mu_{2}})(\underline{\mu_{1}}-\underline{\mu_{2}})^T\underline{w} | &= \underline{w}^T(\underline{\mu_{1}}-\underline{\mu_{2}})(\underline{\mu_{1}}-\underline{\mu_{2}})^T\underline{w} | ||

\end{align} | \end{align} | ||

</math> | </math> which is a scalar | ||

| Line 690: | Line 748: | ||

:<math>\underset{\underline{w}}{max}\ \frac{\underline{w}^T S_{B} \underline{w}}{\underline{w}^T S_{W} \underline{w}}</math> | :<math>\underset{\underline{w}}{max}\ \frac{\underline{w}^T S_{B} \underline{w}}{\underline{w}^T S_{W} \underline{w}}</math> | ||

This maximization problem is equivalent to <math>\underset{\underline{w}}{max}\ \underline{w}^T S_{B} \underline{w}</math> subject to constraint <math>\underline{w}^T S_{W} \underline{w} = 1</math>. We can use the Lagrange multiplier method to solve it: | This maximization problem is equivalent to <math>\underset{\underline{w}}{max}\ \underline{w}^T S_{B} \underline{w} \equiv \max(\underline w^T S_B \underline w)</math> subject to constraint <math>\underline{w}^T S_{W} \underline{w} = 1</math>, | ||

since, without a constraint on the scale of <math>\ \underline w</math>, <math>\ \underline w^T S_B \underline w</math> has no upper bound and <math>\ \underline w^T S_W \underline w</math> can be made arbitrarily small. | |||

We can use the Lagrange multiplier method to solve it: | |||

:<math>L(\underline{w},\lambda) = \underline{w}^T S_{B} \underline{w} - \lambda(\underline{w}^T S_{W} \underline{w} - 1)</math> | :<math>L(\underline{w},\lambda) = \underline{w}^T S_{B} \underline{w} - \lambda(\underline{w}^T S_{W} \underline{w} - 1)</math> where <math>\ \lambda </math> is the Lagrange multiplier | ||

< | |||

With <math>\frac{\part L}{\part \underline{w}} = 0</math> we get: | |||

With <math>\frac{\part L}{\part \underline{w}} = 0</math> we get: | |||

:<math> | :<math> | ||

\begin{align} | \begin{align} | ||

| Line 704: | Line 766: | ||

Note that <math>\, S_{W}=\Sigma_1+\Sigma_2</math> is the sum of two positive definite matrices and so it has an inverse. | Note that <math>\, S_{W}=\Sigma_1+\Sigma_2</math> is the sum of two positive definite matrices and so it has an inverse. | ||

Here <math>\underline{w}</math> is the eigenvector of <math>S_{w}^{-1}\ S_{B}</math> corresponding to the largest eigenvalue. | Here <math>\underline{w}</math> is the eigenvector of <math>S_{w}^{-1}\ S_{B}</math> corresponding to the largest eigenvalue <math>\ \lambda </math>. | ||

In fact, this expression can be simplified even more.<br> | In fact, this expression can be simplified even more.<br> | ||
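One way to see the simplification numerically, as a sketch: in the two-class case <math>S_{B} = (\underline{\mu_{1}}-\underline{\mu_{2}})(\underline{\mu_{1}}-\underline{\mu_{2}})^T</math> has rank one, so <math>\underline{w} = S_{W}^{-1}(\underline{\mu_{1}}-\underline{\mu_{2}})</math> is an eigenvector of <math>S_{W}^{-1} S_{B}</math> with eigenvalue <math>(\underline{\mu_{1}}-\underline{\mu_{2}})^T S_{W}^{-1} (\underline{\mu_{1}}-\underline{\mu_{2}})</math>. The Python check below uses two-dimensional data; the helper names and the numbers are assumptions for illustration.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def fisher_direction(mu1, mu2, s_w):
    """Two-class FDA direction w = S_W^{-1} (mu1 - mu2), up to scale."""
    diff = [p - q for p, q in zip(mu1, mu2)]
    return matvec(inv2(s_w), diff)


# Illustrative class means and within-class scatter (not from the notes).
mu1, mu2 = [1.0, 2.0], [3.0, 1.0]
s_w = [[2.0, 1.0], [1.0, 3.0]]

w = fisher_direction(mu1, mu2, s_w)
diff = [p - q for p, q in zip(mu1, mu2)]
s_b = [[di * dj for dj in diff] for di in diff]   # rank-one between-class matrix

# Check the eigenvector relation S_W^{-1} S_B w = lambda * w.
lhs = matvec(inv2(s_w), matvec(s_b, w))
lam = dot(diff, w)
print(w, lam, lhs)
```

Since only the direction of <math>\underline{w}</math> matters for the projection, any non-zero multiple of this vector gives the same separation.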

| Line 743: | Line 805: | ||

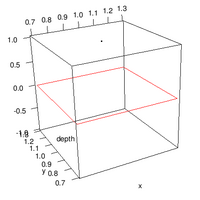

[[File:PCA-VS-FDA.png|frame|center|PCA and FDA primary dimension for normal multivariate data, using matlab]] | [[File:PCA-VS-FDA.png|frame|center|PCA and FDA primary dimension for normal multivariate data, using matlab]] | ||

From the graph, we see that with PCA the two classes overlap heavily, so PCA does not separate them well. With FDA, however, there is no overlap and the two classes are well separated. Thus, FDA is better than PCA here. | |||

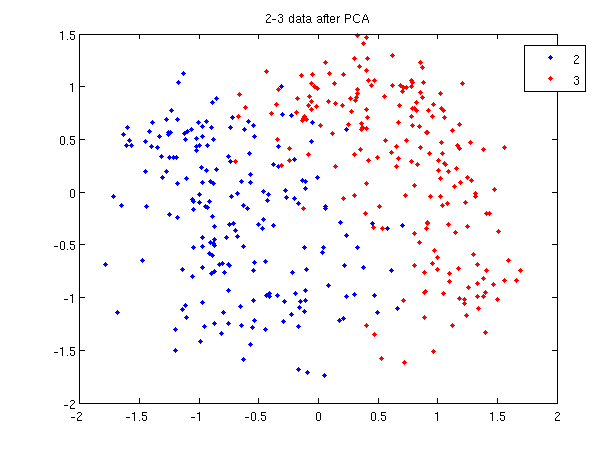

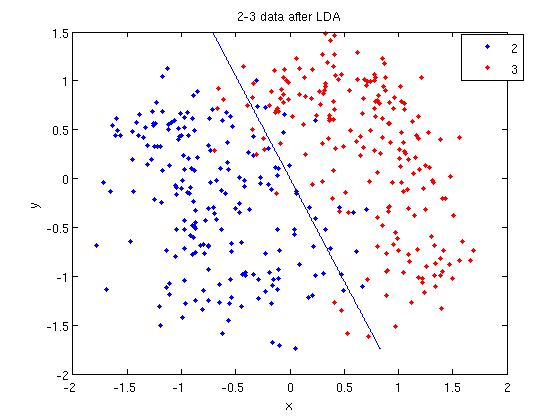

==== Practical example of 2_3 ==== | ==== Practical example of 2_3 ==== | ||

| Line 774: | Line 838: | ||

[[File:fda2-3.jpg|frame|center|FDA projection of data 2_3, using [http://www.mathwork.com Matlab].]] | [[File:fda2-3.jpg|frame|center|FDA projection of data 2_3, using [http://www.mathwork.com Matlab].]] | ||

The data are mapped onto a line, and the two classes are separated perfectly here. | |||

==== An extension of Fisher's discriminant analysis for stochastic processes ==== | |||

A general notion of Fisher's linear discriminant analysis can extend the classical multivariate concept to situations that allow for function-valued random elements. The development uses a bijective mapping that connects a second order process to the reproducing kernel Hilbert space generated by its within class covariance kernel. This approach provides a seamless transition between Fisher's original development and infinite dimensional settings that lends itself well to computation via smoothing and regularization. | |||

Link for Algorithm introduction:[[http://statgen.ncsu.edu/icsa2007/talks/HyejinShin.pdf]] | |||

== FDA for Multi-class Problems - October 14, 2009 == | == FDA for Multi-class Problems - October 14, 2009 == | ||

===FDA method for Multi-class Problems === | |||

For the <math>k</math>-class problem, we need to find a projection from | For the <math>k</math>-class problem, we need to find a projection from | ||

| Line 1,039: | Line 1,115: | ||

\end{align} | \end{align} | ||

</math> | </math> | ||

===Generalization of Fisher's Linear Discriminant === | |||

Fisher's linear discriminant (Fisher, 1936) is very popular among users of discriminant analysis. Some of the reasons for this are its simplicity | |||

and the fact that it does not require strict assumptions. However, it has optimality properties only if the underlying distributions of the groups are multivariate normal. It is also easy to verify that the resulting discriminant rule can be seriously harmed by only a small number of outlying observations. Outliers are very hard to detect in multivariate data sets, and even when they are detected, simply discarding them is not the most efficient way of handling the situation. Hence the need for robust procedures that can accommodate outliers and are not strongly affected by them. A generalization of Fisher's linear discriminant algorithm [[http://www.math.ist.utl.pt/~apires/PDFs/APJB_RP96.pdf]] has been developed that leads easily to a very robust procedure. | |||

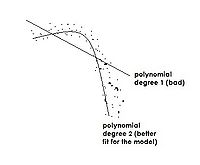

== Linear Regression Models - October 14, 2009 == | == Linear Regression Models - October 14, 2009 == | ||

Regression analysis is a general statistical technique for modelling and analyzing how a dependent variable changes according to changes in independent variables. In classification, we are interested in how a label, <math>\,y</math>, changes according to changes in <math>\,X</math>. | [http://en.wikipedia.org/wiki/Regression_analysis Regression analysis] is a general statistical technique for modelling and analyzing how a dependent variable changes according to changes in independent variables. In classification, we are interested in how a label, <math>\,y</math>, changes according to changes in <math>\,X</math>. | ||

We will start by considering a very simple regression model, the linear regression model. | We will start by considering a very simple regression model, the linear regression model. | ||

General information on linear regression can be found at the [http://numericalmethods.eng.usf.edu/topics/linear_regression.html University of South Florida] and [http://academicearth.org/lectures/applications-to-linear-estimation-least-squares this MIT lecture]. | General information on [http://en.wikipedia.org/wiki/Linear_regression linear regression] can be found at the [http://numericalmethods.eng.usf.edu/topics/linear_regression.html University of South Florida] and [http://academicearth.org/lectures/applications-to-linear-estimation-least-squares this MIT lecture]. | ||

For the purpose of classification, the linear regression model assumes | For the purpose of classification, the linear regression model assumes | ||

| Line 1,052: | Line 1,133: | ||

<math>\,\mathbf{x}_{1}, ..., \mathbf{x}_{p}</math>. | <math>\,\mathbf{x}_{1}, ..., \mathbf{x}_{p}</math>. | ||

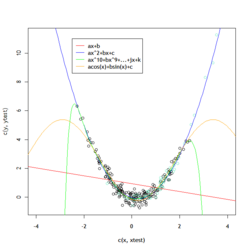

The linear regression model has the general form: | The simple linear regression model has the general form: | ||

:<math> | :<math> | ||

| Line 1,059: | Line 1,140: | ||

\end{align} | \end{align} | ||

</math> | </math> | ||

where <math>\,\beta</math> is a <math>1 \times d</math> vector. | where <math>\,\beta</math> is a <math>1 \times d</math> vector and <math>\ x_i </math> is a <math>d \times 1</math> vector. | ||

Given input data <math>\,\mathbf{x}_{1}, ..., \mathbf{x}_{p}</math> and <math>\,y_{1}, ..., y_{p}</math> our goal is to find <math>\,\beta</math> and <math>\,\beta_0</math> such that the linear model fits the data while minimizing the sum of squared errors using the Least Squares method. | Given input data <math>\,\mathbf{x}_{1}, ..., \mathbf{x}_{p}</math> and <math>\,y_{1}, ..., y_{p}</math> our goal is to find <math>\,\beta</math> and <math>\,\beta_0</math> such that the linear model fits the data while minimizing the sum of squared errors using the [http://en.wikipedia.org/wiki/Least_squares Least Squares method]. | ||

Note that vectors <math>\mathbf{x}_{i}</math> could be numerical inputs, | Note that vectors <math>\mathbf{x}_{i}</math> could be numerical inputs, | ||

| Line 1,119: | Line 1,200: | ||

where <math>\mathbf{H} = \mathbf{X} | where <math>\mathbf{H} = \mathbf{X} | ||

(\mathbf{X}^{T}\mathbf{X})^{-1}\mathbf{X}^{T}</math> is called the hat matrix. | (\mathbf{X}^{T}\mathbf{X})^{-1}\mathbf{X}^{T}</math> is called the [http://en.wikipedia.org/wiki/Hat_matrix hat matrix]. | ||

<br/> | <br/> | ||

| Line 1,125: | Line 1,206: | ||

<math>r(x)= P( Y=k | X=x )= \frac{f_{k}(x)\pi_{k}}{\Sigma_{k}f_{k}(x)\pi_{k}}</math><br/> | <math>r(x)= P( Y=k | X=x )= \frac{f_{k}(x)\pi_{k}}{\Sigma_{k}f_{k}(x)\pi_{k}}</math><br/> | ||

It is clear that to make sense mathematically, <math>\displaystyle r(x)</math> must be a value between 0 and 1. If this is estimated with the regression function <math>\displaystyle r(x)=E(Y|X=x)</math> and <math>\mathbf{\hat\beta} </math> is learned as above, then there is nothing that would restrict <math>\displaystyle r(x)</math> to taking values between 0 and 1. Regression is nevertheless a more direct approach to classification, since it does not need to estimate <math>\ f_k(x) </math> and <math>\ \pi_k </math>.

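Because <math>\ Y </math> takes only the values 0 and 1, the regression function equals the posterior probability of class 1:

<math>\ E(Y|X=x)=1 \times P(Y=1|X=x)+0 \times P(Y=0|X=x)=P(Y=1|X=x) </math>

The fitted values of this model are not restricted to lie between 0 and 1, so it is not a good estimate of the posterior probability, although it can sometimes still lead to a decent classifier. The labels of the two classes can also be coded as <math>\ y_i=\frac{1}{n_1} </math> and <math>\ y_i=\frac{-1}{n_2} </math>.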
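The point about unrestricted fitted values can be seen in a small numerical sketch. The data and the helper name `fit_line` are made up for illustration and are not part of the course material: ordinary least squares is fitted to 0/1 labels on a single feature, and the fitted values leave the interval [0,1] even though thresholding them at 0.5 still classifies these points correctly.

```python
# Least squares on 0/1 labels: fitted values are not confined to [0, 1].
# Toy data, chosen only for illustration.

def fit_line(xs, ys):
    """Return (b0, b1) minimizing sum((y - b0 - b1 * x) ** 2)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1

xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

b0, b1 = fit_line(xs, ys)
fitted = [b0 + b1 * x for x in xs]               # estimates of E(Y | X = x)
labels = [1 if r >= 0.5 else 0 for r in fitted]  # threshold the estimate at 0.5

print(min(fitted), max(fitted))  # smallest value is below 0, largest is above 1
print(labels == ys)
```

So the regression output cannot be read as a probability here, even though the induced classifier is reasonable.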

====A linear regression example in Matlab==== | ====A linear regression example in Matlab==== | ||

| Line 1,160: | Line 1,243: | ||

[[File: linearregression.png|center|frame| the figure shows that the classification of the data points in 2_3.m by the linear regression model]] | [[File: linearregression.png|center|frame| the figure shows that the classification of the data points in 2_3.m by the linear regression model]] | ||

== | ====Comments about Linear regression model==== | ||

The linear regression model is one of the simplest and most popular ways to analyze the relationship between variables. However, it has disadvantages as well as advantages, and we should be clear about both before we apply the model.

''Advantages'': Linear least squares regression has earned its place as the primary tool for process modeling because of its effectiveness and completeness. Though there are types of data that are better described by functions that are nonlinear in the parameters, many processes in science and engineering are well-described by linear models. This is because either the processes are inherently linear or because, over short ranges, any process can be well-approximated by a linear model. The estimates of the unknown parameters obtained from linear least squares regression are the optimal estimates from a broad class of possible parameter estimates under the usual assumptions used for process modeling. Practically speaking, linear least squares regression makes very efficient use of the data. Good results can be obtained with relatively small data sets. Finally, the theory associated with linear regression is well-understood and allows for construction of different types of easily-interpretable statistical intervals for predictions, calibrations, and optimizations. These statistical intervals can then be used to give clear answers to scientific and engineering questions. | |||

''Disadvantages'': The main disadvantages of linear least squares are limitations in the shapes that linear models can assume over long ranges, possibly poor extrapolation properties, and sensitivity to outliers. Linear models with nonlinear terms in the predictor variables curve relatively slowly, so for inherently nonlinear processes it becomes increasingly difficult to find a linear model that fits the data well as the range of the data increases. As the explanatory variables become extreme, the output of the linear model will also always be more extreme. This means that linear models may not be effective for extrapolating the results of a process for which data cannot be collected in the region of interest. Of course, extrapolation is potentially dangerous regardless of the model type. Finally, while the method of least squares often gives optimal estimates of the unknown parameters, it is very sensitive to the presence of unusual data points in the data used to fit a model. One or two outliers can sometimes seriously skew the results of a least squares analysis. This makes model validation, especially with respect to outliers, critical to obtaining sound answers to the questions motivating the construction of the model.

''' | '''useful link''':[http://www.uco.es/dptos/prod-animal/p-animales/cerdo-iberico/Bibliografia/p253.pdf] | ||

[http://www.cs.au.dk/~cstorm/courses/ML/slides/linear-regression-and-classification.pdf] | |||

: | |||

==Logistic Regression- October 16, 2009== | |||

The [http://en.wikipedia.org/wiki/Logistic_regression logistic regression] model arises from the desire to model the posterior probabilities of the <math>\displaystyle K</math> classes via linear functions in <math>\displaystyle x</math>, while at the same time ensuring that they sum to one and remain in [0,1]. Logistic regression models are usually fit by maximum likelihood, using the conditional likelihood <math>\displaystyle Pr(Y|X)</math>. Since <math>\displaystyle Pr(Y|X)</math> completely specifies the conditional distribution, the multinomial distribution is appropriate. This model is widely used in biostatistical applications with two classes, for instance: people survive or die, have a disease or not, have a risk factor or not.


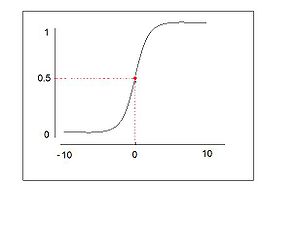

=== | === logistic function === | ||

: | A [http://en.wikipedia.org/wiki/Logistic_function logistic function] or logistic curve is the most common sigmoid curve. | ||

:<math>y = \frac{1}{1+e^{-x}}</math> | |||

1. <math>\frac{dy}{dx} = y(1-y)=\frac{e^{x}}{(1+e^{x})^{2}}</math>

2. <math>y(0) = \frac{1}{2}</math>

3. <math> \int y \, dx = \ln(1 + e^{x}) + C</math>

4. <math> y(x) = \frac{1}{2} + \frac{1}{4}x - \frac{1}{48}x^{3} + \frac{1}{480}x^{5} - \cdots </math>

5. The logistic curve shows early exponential growth for negative x, which slows to linear growth of slope 1/4 near x = 0, then approaches y = 1 with an exponentially decaying gap.
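The listed properties can be checked numerically. The sketch below is only an illustration under simple assumptions: the derivatives are approximated by central differences, and the helper names `logistic`, `softplus` and `central_diff` are chosen here and do not come from the lecture.

```python
import math

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def softplus(x):
    # ln(1 + e^x), an antiderivative of the logistic function
    return math.log(1.0 + math.exp(x))

def central_diff(f, x, h=1e-5):
    # numerical approximation of f'(x)
    return (f(x + h) - f(x - h)) / (2.0 * h)

x = 0.7
y = logistic(x)

deriv_gap = abs(central_diff(logistic, x) - y * (1.0 - y))  # dy/dx = y(1 - y)
value_at_zero = logistic(0.0)                               # y(0) = 1/2
integral_gap = abs(central_diff(softplus, x) - y)           # d/dx ln(1 + e^x) = y
slope_at_zero = central_diff(logistic, 0.0)                 # slope 1/4 near x = 0

# Series truncated after the cubic term, evaluated close to 0
x_small = 0.1
cubic = 0.5 + x_small / 4.0 - x_small ** 3 / 48.0
series_gap = abs(logistic(x_small) - cubic)

print(deriv_gap, value_at_zero, integral_gap, slope_at_zero, series_gap)
```

All the gaps printed are tiny, which is consistent with the closed-form properties.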

===Logistic Regression | ===Intuition behind Logistic Regression=== | ||

Recall that, for classification purposes, the linear regression model presented in the above section is not correct because it does not force <math>\,r(x)</math> to be between 0 and 1 and sum to 1. Consider the following [http://en.wikipedia.org/wiki/Logit log odds] model (for two classes): | |||

:<math>\log\left(\frac{P(Y=1|X=x)}{P(Y=0|X=x)}\right)=\beta^Tx</math> | |||

Calculating <math>\,P(Y=1|X=x)</math> leads us to the logistic regression model, which as opposed to the linear regression model, allows the modelling of the posterior probabilities of the classes through linear methods and at the same time ensures that they sum to one and are between 0 and 1. It is a type of [http://en.wikipedia.org/wiki/Generalized_linear_model Generalized Linear Model (GLM)]. | |||

=== | ===The Logistic Regression Model=== | ||

The logistic regression model for the two class case is defined as | |||

''' | '''Class 1''' | ||

[[File:Picture1.png|150px|thumb|right|<math>P(Y=1 | X=x)</math>]] | |||

:<math>P(Y=1 | X=x) =\frac{\exp(\underline{\beta}^T \underline{x})}{1+\exp(\underline{\beta}^T \underline{x})}=P(x;\underline{\beta})</math> | |||

Then we have that | |||

'''Class 0''' | |||

[[File:Picture2.png |150px|thumb|right|<math>P(Y=0 | X=x)</math>]] | |||

:<math>P(Y=0 | X=x) = 1-P(Y=1 | X=x)=1-\frac{\exp(\underline{\beta}^T \underline{x})}{1+\exp(\underline{\beta}^T \underline{x})}=\frac{1}{1+\exp(\underline{\beta}^T \underline{x})}</math> | |||
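As a quick check of the two formulas, the following sketch evaluates both posteriors for one input and confirms that they sum to one and that their log odds recover the linear term. The coefficient vector and input are hypothetical values picked for the example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def posterior_class1(beta, x):
    t = dot(beta, x)
    return math.exp(t) / (1.0 + math.exp(t))

def posterior_class0(beta, x):
    return 1.0 / (1.0 + math.exp(dot(beta, x)))

beta = [0.5, -1.2]  # hypothetical coefficients
x = [1.0, 2.0]      # hypothetical input

p1 = posterior_class1(beta, x)
p0 = posterior_class0(beta, x)
log_odds = math.log(p1 / p0)

print(p1, p0, p1 + p0)         # two probabilities that add up to 1
print(log_odds, dot(beta, x))  # the log odds equal beta^T x
```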

<math> | ===Fitting a Logistic Regression=== | ||

Logistic regression tries to fit a distribution. The fitting of logistic regression models is usually accomplished by [http://en.wikipedia.org/wiki/Maximum_likelihood maximum likelihood], using <math>\displaystyle Pr(Y|X)</math>. The maximum likelihood estimate of <math>\underline\beta</math> is the value that maximizes the probability of obtaining the data <math>\displaystyle{x_{1},...,x_{n}}</math> from the assumed distribution. Combining <math>\displaystyle P(Y=1 | X=x)</math> and <math>\displaystyle P(Y=0 | X=x)</math> as follows, we can consider the two classes at the same time:

:<math>p(\underline{x_{i}};\underline{\beta}) = \left(\frac{\exp(\underline{\beta}^T \underline{x_i})}{1+\exp(\underline{\beta}^T \underline{x_i})}\right)^{y_i} \left(\frac{1}{1+\exp(\underline{\beta}^T \underline{x_i})}\right)^{1-y_i}</math> | |||

Assuming the data <math>\displaystyle {x_{1},...,x_{n}}</math> is drawn independently, the likelihood function is | |||

:<math> | |||

\begin{align} | |||

\mathcal{L}(\theta)&=p({x_{1},...,x_{n}};\theta)\\ | |||

&=\displaystyle p(x_{1};\theta) p(x_{2};\theta)... p(x_{n};\theta) \quad \mbox{(by independence)}\\ | |||

&= \prod_{i=1}^n p(x_{i};\theta) | |||

\end{align} | |||

</math> | |||

Since it is more convenient to work with the log-likelihood function, we take the log of both sides and get

:<math>\displaystyle l(\theta)=\displaystyle \sum_{i=1}^n \log p(x_{i};\theta)</math> | |||

So, | |||

:<math> | |||

\begin{align} | |||

l(\underline\beta)&=\displaystyle\sum_{i=1}^n y_{i}\log\left(\frac{\exp(\underline{\beta}^T \underline{x_i})}{1+\exp(\underline{\beta}^T \underline{x_i})}\right)+(1-y_{i})\log\left(\frac{1}{1+\exp(\underline{\beta}^T\underline{x_i})}\right)\\ | |||

&= \displaystyle\sum_{i=1}^n y_{i}\left(\underline{\beta}^T\underline{x_i}-\log(1+\exp(\underline{\beta}^T\underline{x_i}))\right)+(1-y_{i})\left(-\log(1+\exp(\underline{\beta}^T\underline{x_i}))\right)\\

&= \displaystyle\sum_{i=1}^n y_{i}\underline{\beta}^T\underline{x_i}-y_{i} \log(1+\exp(\underline{\beta}^T\underline{x_i}))- \log(1+\exp(\underline{\beta}^T\underline{x_i}))+y_{i} \log(1+\exp(\underline{\beta}^T\underline{x_i}))\\ | |||

&=\displaystyle\sum_{i=1}^n y_{i}\underline{\beta}^T\underline{x_i}- \log(1+\exp(\underline{\beta}^T\underline{x_i}))\\ | |||

\end{align} | |||

</math> | |||
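The simplification above can be sanity-checked numerically. This sketch evaluates the first and the last line of the derivation on a small made-up data set; the function names `loglik_long` and `loglik_short` and all numbers are illustrative assumptions.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def loglik_long(beta, xs, ys):
    # first line: sum of y*log(p) + (1 - y)*log(1 - p)
    total = 0.0
    for x, y in zip(xs, ys):
        t = dot(beta, x)
        p = math.exp(t) / (1.0 + math.exp(t))
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total

def loglik_short(beta, xs, ys):
    # last line: sum of y*beta^T x - log(1 + exp(beta^T x))
    return sum(y * dot(beta, x) - math.log(1.0 + math.exp(dot(beta, x)))
               for x, y in zip(xs, ys))

xs = [[1.0, 0.5], [1.0, -1.0], [1.0, 2.0], [1.0, 0.0]]  # toy inputs
ys = [1, 0, 1, 0]                                        # toy labels
beta = [0.3, -0.7]                                       # arbitrary coefficients

long_value = loglik_long(beta, xs, ys)
short_value = loglik_short(beta, xs, ys)
print(long_value, short_value)  # the two forms agree up to rounding
```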

To maximize the log-likelihood, set its derivative to 0. | |||

:<math> | |||

\begin{align} | |||

\frac{\partial l}{\partial \underline{\beta}} &= \sum_{i=1}^n \left[{y_i} \underline{x}_i- \frac{\exp(\underline{\beta}^T \underline{x_i})}{1+\exp(\underline{\beta}^T \underline{x}_i)}\underline{x}_i\right]\\ | |||

&=\sum_{i=1}^n \left[{y_i} \underline{x}_i - p(\underline{x}_i;\underline{\beta})\underline{x}_i\right] | |||

\end{align} | |||

</math> | |||

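Setting this derivative to zero gives <math>\ d+1 </math> equations that are nonlinear in <math>\underline{\beta}</math>, one for each component of <math>\underline{x}_i</math> when it includes a leading 1 for the intercept. Because that first component is 1 for every <math>\ i </math>, the first equation reads <math>\ \sum_{i=1}^n {y_i} =\sum_{i=1}^n p(\underline{x}_i;\underline{\beta}) </math>, i.e. the expected number of class ones matches the observed number.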
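One way to gain confidence in the derivative is to compare it with finite differences of the log-likelihood. The sketch below does this on made-up data; the function names and the step size `h` are assumptions of the example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def prob(x, beta):
    t = dot(beta, x)
    return math.exp(t) / (1.0 + math.exp(t))

def log_likelihood(beta, xs, ys):
    return sum(y * dot(beta, x) - math.log(1.0 + math.exp(dot(beta, x)))
               for x, y in zip(xs, ys))

def gradient(beta, xs, ys):
    # analytic form: sum over i of (y_i - p(x_i; beta)) * x_i
    grad = [0.0] * len(beta)
    for x, y in zip(xs, ys):
        residual = y - prob(x, beta)
        for j, xj in enumerate(x):
            grad[j] += residual * xj
    return grad

def numeric_gradient(beta, xs, ys, h=1e-6):
    # central differences of the log-likelihood, one coordinate at a time
    out = []
    for j in range(len(beta)):
        up = list(beta)
        down = list(beta)
        up[j] += h
        down[j] -= h
        out.append((log_likelihood(up, xs, ys) - log_likelihood(down, xs, ys)) / (2.0 * h))
    return out

xs = [[1.0, 0.5], [1.0, -1.0], [1.0, 2.0], [1.0, 0.0]]  # first component is the constant 1
ys = [1, 0, 1, 0]
beta = [0.3, -0.7]

analytic = gradient(beta, xs, ys)
numeric = numeric_gradient(beta, xs, ys)
max_gap = max(abs(a - b) for a, b in zip(analytic, numeric))
print(analytic, numeric, max_gap)
```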

To solve this equation, the [http://numericalmethods.eng.usf.edu/topics/newton_raphson.html Newton-Raphson algorithm] is used which requires the second derivative in addition to the first derivative. This is demonstrated in the next section. | |||

The | ====Advantages and Disadvantages==== | ||

Logistic regression has several advantages over discriminant analysis: | |||

* It is more robust: the independent variables don't have to be normally distributed, or have equal variance in each group.

* It does not assume a linear relationship between the independent variables (IV) and the dependent variable (DV).

* It may handle nonlinear effects | |||

* You can add explicit interaction and power terms | |||

* The DV need not be normally distributed. | |||

* There is no homogeneity of variance assumption. | |||

* Normally distributed error terms are not assumed. | |||

* It does not require that the independents be interval. | |||

* It does not require that the independents be unbounded. | |||

With all this flexibility, you might wonder why anyone would ever use discriminant analysis or any other method of analysis. Unfortunately, the advantages of logistic regression come at a cost: it requires much more data to achieve stable, meaningful results. With standard regression, and DA, typically 20 data points per predictor is considered the lower bound. For logistic regression, at least 50 data points per predictor is necessary to achieve stable results. | |||

some resources: [http://www.statgun.com/tutorials/logistic-regression.html], [http://etd.library.pitt.edu/ETD/available/etd-04122006-102254/unrestricted/realfinalplus_ETD2006.pdf] | |||

====Extension==== | |||

* When we are dealing with a problem with more than two classes, we need to generalize our logistic regression to a [http://en.wikipedia.org/wiki/Multinomial_logit Multinomial Logit model]. | |||

* Limitations of Logistic Regression: | |||

:1. No assumptions are made about the distributions of the features of the data (i.e. the explanatory variables). However, the features should not be highly correlated with one another because this could cause problems with estimation.

:2. A large number of data points (i.e. a large sample size) is required for logistic regression to provide sufficient numbers in both classes. The more features/dimensions the data has, the larger the sample size required.

== Logistic Regression(2) - October 19, 2009 == | |||

===Logistic Regression Model=== | |||

Recall that in the last lecture, we learned the logistic regression model. | |||

* <math>P(Y=1 | X=x)=P(\underline{x};\underline{\beta})=\frac{\exp(\underline{\beta}^T \underline{x})}{1+\exp(\underline{\beta}^T \underline{x})}</math>

* <math>P(Y=0 | X=x)=1-P(\underline{x};\underline{\beta})=\frac{1}{1+\exp(\underline{\beta}^T \underline{x})}</math>

===Find <math>\underline{\beta}</math> === | |||

<math>\underline{\beta} | '''Criteria''': find a <math>\underline{\beta}</math> that maximizes the conditional likelihood of Y given X using the training data. | ||

From above, we have the first derivative of the log-likelihood: | |||

<math> | <math>\frac{\partial l}{\partial \underline{\beta}} = \sum_{i=1}^n \left[{y_i} \underline{x}_i- \frac{exp(\underline{\beta}^T \underline{x_i})}{1+exp(\underline{\beta}^T \underline{x}_i)}\underline{x}_i\right] </math> | ||

\ | <math>=\sum_{i=1}^n \left[{y_i} \underline{x}_i - P(\underline{x}_i;\underline{\beta})\underline{x}_i\right]</math> | ||


Newton-Raphson algorithm:<br />

If we want to find <math>\ x^* </math> such that <math>\ f(x^*)=0</math>, we iterate

<math>\ x^{new} \leftarrow x^{old}-\frac {f(x^{old})}{f'(x^{old})} </math>

until <math>\ x^{new} \rightarrow x^* </math>.

If we want to maximize or minimize <math>\ f(x) </math>, we solve <math>\ f'(x)=0 </math> by applying the same iteration to <math>\ f' </math>:

<math>\ x^{new} \leftarrow x^{old}-\frac {f'(x^{old})}{f''(x^{old})} </math>
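A minimal one-dimensional sketch of both uses of the iteration, root finding and optimization. The test functions, the starting points and the stopping tolerance are choices made for this example only.

```python
import math

def newton(g, dg, x0, tol=1e-12, max_iter=50):
    """Find x with g(x) = 0 by iterating x <- x - g(x) / dg(x)."""
    x = x0
    for _ in range(max_iter):
        x_new = x - g(x) / dg(x)
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    return x

# Root finding: f(x) = x^2 - 2 has the positive root sqrt(2).
root = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)

# Optimization: f(x) = x - exp(x) is maximized where f'(x) = 1 - exp(x) = 0,
# so the same routine is applied to f' with derivative f''(x) = -exp(x).
maximizer = newton(lambda x: 1.0 - math.exp(x), lambda x: -math.exp(x), x0=1.0)

print(root, maximizer)  # close to sqrt(2) and to 0
```

The logistic regression update used later is the multivariate version of the second call, with the gradient in place of the first derivative and the Hessian in place of the second.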

The [http://en.wikipedia.org/wiki/Newton%27s_method Newton-Raphson algorithm] requires the second-derivative or [http://en.wikipedia.org/wiki/Hessian_matrix Hessian matrix]. | |||

<math>\frac{\partial^{2} l}{\partial \underline{\beta} \partial \underline{\beta}^T }=\sum_{i=1}^n - \underline{x}_i \frac{(\exp(\underline{\beta}^T\underline{x}_i) \underline{x}_i^T)(1+\exp(\underline{\beta}^T \underline{x}_i))-\exp(\underline{\beta}^T\underline{x}_i)\exp(\underline{\beta}^T\underline{x}_i)\underline{x}_i^T}{(1+\exp(\underline{\beta}^T \underline{x}_i))^2}</math>

('''note''': <math>\frac{\partial\underline{\beta}^T\underline{x}_i}{\partial \underline{\beta}^T}=\underline{x}_i^T</math> you can check it [http://www.ee.ic.ac.uk/hp/staff/dmb/matrix/intro.html here], it's a very useful website including a Matrix Reference Manual that you can find information about linear algebra and the properties of real and complex matrices.) | |||

::<math>=\sum_{i=1}^n - \underline{x}_i \frac{\exp(\underline{\beta}^T\underline{x}_i) \underline{x}_i^T}{(1+\exp(\underline{\beta}^T \underline{x}_i))(1+\exp(\underline{\beta}^T \underline{x}_i))}</math> (by cancellation)

::<math>=\sum_{i=1}^n - \underline{x}_i \underline{x}_i^T P(\underline{x}_i;\underline{\beta})[1-P(\underline{x}_i;\underline{\beta})]</math> (since <math>P(\underline{x}_i;\underline{\beta})=\frac{\exp(\underline{\beta}^T \underline{x}_i)}{1+\exp(\underline{\beta}^T \underline{x}_i)}</math> and <math>1-P(\underline{x}_i;\underline{\beta})=\frac{1}{1+\exp(\underline{\beta}^T \underline{x}_i)}</math>)

The same second derivative can be obtained by first reducing the number of occurrences of <math>\underline{\beta}</math> to one with the identity <math>\frac{a}{1+a}=1-\frac{1}{1+a}</math>

and then differentiating <math>\frac{\partial}{\partial \underline{\beta}^T}\sum_{i=1}^n \left[{y_i} \underline{x}_i-\left[1-\frac{1}{1+exp(\underline{\beta}^T \underline{x}_i)}\right]\underline{x}_i\right] </math>
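The closed form of the Hessian, minus the sum of x_i x_i^T P(x_i;β)[1-P(x_i;β)], can be compared with finite differences of the gradient. This is a rough check on made-up data; the function names and the step size are assumptions of the sketch.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def prob(x, beta):
    return 1.0 / (1.0 + math.exp(-dot(beta, x)))  # same as exp(t) / (1 + exp(t))

def gradient(beta, xs, ys):
    d = len(beta)
    return [sum((y - prob(x, beta)) * x[j] for x, y in zip(xs, ys)) for j in range(d)]

def hessian(beta, xs):
    # closed form: -sum_i x_i x_i^T p_i (1 - p_i)
    d = len(beta)
    H = [[0.0] * d for _ in range(d)]
    for x in xs:
        p = prob(x, beta)
        for j in range(d):
            for k in range(d):
                H[j][k] -= x[j] * x[k] * p * (1.0 - p)
    return H

def numeric_hessian(beta, xs, ys, h=1e-6):
    # column k holds the central difference of the gradient along coordinate k
    d = len(beta)
    H = [[0.0] * d for _ in range(d)]
    for k in range(d):
        up = list(beta)
        down = list(beta)
        up[k] += h
        down[k] -= h
        g_up = gradient(up, xs, ys)
        g_down = gradient(down, xs, ys)
        for j in range(d):
            H[j][k] = (g_up[j] - g_down[j]) / (2.0 * h)
    return H

xs = [[1.0, 0.5], [1.0, -1.0], [1.0, 2.0], [1.0, 0.0]]
ys = [1, 0, 1, 0]
beta = [0.3, -0.7]

H_closed = hessian(beta, xs)
H_numeric = numeric_hessian(beta, xs, ys)
max_gap = max(abs(H_closed[j][k] - H_numeric[j][k]) for j in range(2) for k in range(2))
print(H_closed, max_gap)
```

The diagonal entries come out negative, as expected for a concave log-likelihood.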

Starting with <math>\,\underline{\beta}^{old}</math>, the Newton-Raphson update is | |||

<math>\,\underline{\beta}^{new}\leftarrow \,\underline{\beta}^{old}- (\frac{\partial ^2 l}{\partial \underline{\beta}\partial \underline{\beta}^T})^{-1}(\frac{\partial l}{\partial \underline{\beta}})</math> where the derivatives are evaluated at <math>\,\underline{\beta}^{old}</math> | |||

The iteration will terminate when <math>\underline{\beta}^{new}</math> is very close to <math>\underline{\beta}^{old}</math>. | |||

The iteration can be described in matrix form. | |||

* Let <math>\,\underline{Y}</math> be the column vector of <math>\,y_i</math>. (<math>n\times1</math>) | |||

* Let <math>\,X</math> be the <math>{d}\times{n}</math> input matrix. | |||

* Let <math>\,\underline{P}</math> be the <math>{n}\times{1}</math> vector with <math>i</math>th element <math>P(\underline{x}_i;\underline{\beta}^{old})</math>. | |||

* Let <math>\,W</math> be an <math>{n}\times{n}</math> diagonal matrix with <math>i</math>th element <math>P(\underline{x}_i;\underline{\beta}^{old})[1-P(\underline{x}_i;\underline{\beta}^{old})]</math> | |||

then | |||

<math>\frac{\partial l}{\partial \underline{\beta}} = X(\underline{Y}-\underline{P})</math> | |||

<math>\frac{\partial ^2 l}{\partial \underline{\beta}\partial \underline{\beta}^T} = -XWX^T</math> | |||

The Newton-Raphson step is | |||

<math>\underline{\beta}^{new} \leftarrow \underline{\beta}^{old}+(XWX^T)^{-1}X(\underline{Y}-\underline{P})</math> | |||

This equation is sufficient for computation of the logistic regression model. However, we can simplify further to uncover an interesting feature of this equation. | |||

=== | <math> | ||

\begin{align} | |||

\underline{\beta}^{new} &= (XWX^T)^{-1}(XWX^T)\underline{\beta}^{old}+(XWX^T)^{-1}XWW^{-1}(\underline{Y}-\underline{P})\\ | |||

&=(XWX^T)^{-1}XW[X^T\underline{\beta}^{old}+W^{-1}(\underline{Y}-\underline{P})]\\ | |||

&=(XWX^T)^{-1}XWZ | |||

\end{align}</math> | |||

where <math>Z=X^T\underline{\beta}^{old}+W^{-1}(\underline{Y}-\underline{P})</math>

<math>\ Z </math> is called the adjusted response. Since <math>\ P </math>, <math>\ W </math>, and <math>\ Z </math> all depend on the current <math>\underline{\beta}</math>, they are recomputed at every iteration. This algorithm is called [http://en.wikipedia.org/wiki/Iteratively_reweighted_least_squares iteratively reweighted least squares] because it solves a weighted least squares problem repeatedly.

Recall that linear regression by least squares finds the following minimum: <math>\min_{\underline{\beta}}(\underline{y}-X^T\underline{\beta})^T(\underline{y}-X^T\underline{\beta})</math>

for which we have <math>\underline{\hat{\beta}}=(XX^T)^{-1}X\underline{y}</math>

Similarly, we can say that <math>\underline{\beta}^{new}</math> is the solution of a weighted least squares problem:

<math>\underline{\beta}^{new} \leftarrow \arg \min_{\underline{\beta}}(Z-X^T\underline{\beta})^TW(Z-X^T\underline{\beta})</math>

<math> | ====WLS==== | ||

Actually, the weighted least squares estimator minimizes the weighted sum of squared errors | |||

<math> | |||

S(\beta) = \sum_{i=1}^{n}w_{i}[y_{i}-\mathbf{x}_{i}^{T}\beta]^{2} | |||

</math> | |||

where <math>\displaystyle w_{i}>0</math>. | |||

Hence the WLS estimator is given by | |||

<math> | |||

\hat\beta^{WLS}=\left[\sum_{i=1}^{n}w_{i}\mathbf{x}_{i}\mathbf{x}_{i}^{T} \right]^{-1}\left[ \sum_{i=1}^{n}w_{i}\mathbf{x}_{i}y_{i}\right] | |||

</math> | |||

In logistic regression, each Newton-Raphson step is a weighted linear regression of the iteratively computed response

<math> | |||

\mathbf{z}=\mathbf{X}^{T}\beta^{old}+\mathbf{W}^{-1}(\mathbf{y}-\mathbf{p}) | |||

</math> | |||

Therefore, we obtain | |||

:<math> | |||

\begin{align} | |||

& \hat\beta^{WLS}=\left[\sum_{i=1}^{n}w_{i}\mathbf{x}_{i}\mathbf{x}_{i}^{T} \right]^{-1}\left[ \sum_{i=1}^{n}w_{i}\mathbf{x}_{i}z_{i}\right] | |||

\\& | |||

= \left[ \mathbf{XWX}^{T}\right]^{-1}\left[ \mathbf{XWz}\right] | |||

\\& | |||

= \left[ \mathbf{XWX}^{T}\right]^{-1}\mathbf{XW}(\mathbf{X}^{T}\beta^{old}+\mathbf{W}^{-1}(\mathbf{y}-\mathbf{p})) \\& | |||

= \beta^{old}+ \left[ \mathbf{XWX}^{T}\right]^{-1}\mathbf{X}(\mathbf{y}-\mathbf{p}) | |||

\end{align} | |||

</math> | |||
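The equality between the weighted least squares solution on the adjusted response and the Newton-Raphson step can be verified numerically. The sketch assumes two parameters (an intercept and one feature) so that the linear system can be solved by a hand-written 2x2 routine; `solve2`, the data and `beta_old` are illustrative choices.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def solve2(A, b):
    """Solve the 2x2 system A v = b by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - A[1][0] * b[0]) / det]

xs = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]  # the columns x_i of X
ys = [0, 1, 0, 1]
beta_old = [0.2, -0.1]

p = [1.0 / (1.0 + math.exp(-dot(beta_old, x))) for x in xs]
w = [pi * (1.0 - pi) for pi in p]                       # diagonal of W
z = [dot(beta_old, x) + (y - pi) / wi                   # adjusted response
     for x, y, pi, wi in zip(xs, ys, p, w)]

# X W X^T = sum_i w_i x_i x_i^T
A = [[sum(wi * x[j] * x[k] for wi, x in zip(w, xs)) for k in range(2)]
     for j in range(2)]

# Weighted least squares on z: (X W X^T)^{-1} X W z
xwz = [sum(wi * x[j] * zi for wi, x, zi in zip(w, xs, z)) for j in range(2)]
beta_wls = solve2(A, xwz)

# Newton-Raphson step: beta_old + (X W X^T)^{-1} X (y - p)
score = [sum(x[j] * (y - pi) for x, y, pi in zip(xs, ys, p)) for j in range(2)]
step = solve2(A, score)
beta_newton = [b + s for b, s in zip(beta_old, step)]

max_gap = max(abs(a - b) for a, b in zip(beta_wls, beta_newton))
print(beta_wls, beta_newton, max_gap)
```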

'''note:'''Here we obtain <math>\underline{\beta}</math>, which is a <math>d\times{1}</math> vector, because we construct the model like <math>\underline{\beta}^T\underline{x}</math>. If we construct the model like <math>\underline{\beta}_0+ \underline{\beta}^T\underline{x}</math>, then similar to linear regression, <math>\underline{\beta}</math> will be a <math>(d+1)\times{1}</math> vector. | |||

<br/> | |||

:Choosing <math>\displaystyle\beta=0</math> seems to be a suitable starting value for the Newton-Raphson iteration procedure in this case. However, this does not guarantee convergence. The procedure will usually converge since the log-likelihood function is concave, but overshooting can occur. In the rare cases where the log-likelihood decreases, halving the step size restores convergence. Without such a safeguard, only local convergence of the method can be proved, which means the iteration converges only if the initial point is close enough to the exact solution. In practice, however, choosing an appropriate initial value is rarely a problem, since it is uncommon for the initial point to be so far from the exact solution that the iteration fails. <ref>C. T. Kelley, Iterative Methods for Linear and Nonlinear Equations, chapter 5 </ref> Besides, step-size halving will solve this problem. <ref>T. Hastie, R. Tibshirani, J. Friedman, The Elements of Statistical Learning (Springer 2009),121.</ref>

For multiclass cases, the Newton algorithm can also be expressed as an iteratively reweighted least squares algorithm, but with a vector of <math>\ k-1 </math> responses and a nondiagonal weight matrix per observation. A coordinate-descent method can also be used to maximize the log-likelihood efficiently.

====Pseudo Code==== | |||

#<math>\underline{\beta} \leftarrow 0</math> | |||

#Set <math>\,\underline{Y}</math>, the label associated with each observation <math>\,i=1...n</math>. | |||

#Compute <math>\,\underline{P}</math> according to the equation <math>P(\underline{x}_i;\underline{\beta})=\frac{\exp(\underline{\beta}^T \underline{x}_i)}{1+\exp(\underline{\beta}^T \underline{x}_i)}</math> for all <math>\,i=1...n</math>.

#Compute the diagonal matrix <math>\,W</math> by setting <math>\,w_{i,i}</math> to <math>P(\underline{x}_i;\underline{\beta})[1-P(\underline{x}_i;\underline{\beta})]</math> for all <math>\,i=1...n</math>.

#<math>Z \leftarrow X^T\underline{\beta}+W^{-1}(\underline{Y}-\underline{P})</math>. | |||

#<math>\underline{\beta} \leftarrow (XWX^T)^{-1}XWZ</math>. | |||

#If the new <math>\underline{\beta}</math> value is sufficiently close to the old value, stop; otherwise go back to step 3. | |||
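The steps above can be sketched in plain Python. This is an illustrative version under simplifying assumptions, not the course's implementation: it handles only two parameters (an intercept plus one feature) so that the weighted least squares system can be solved with a small 2x2 routine, it uses made-up data that is not linearly separable, and the names `irls` and `solve2` are chosen here.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def solve2(A, b):
    """Solve the 2x2 system A v = b by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [(b[0] * A[1][1] - A[0][1] * b[1]) / det,
            (A[0][0] * b[1] - A[1][0] * b[0]) / det]

def irls(xs, ys, tol=1e-10, max_iter=25):
    beta = [0.0, 0.0]                                               # step 1
    for _ in range(max_iter):
        p = [1.0 / (1.0 + math.exp(-dot(beta, x))) for x in xs]     # step 3
        w = [pi * (1.0 - pi) for pi in p]                           # step 4
        z = [dot(beta, x) + (y - pi) / wi                           # step 5
             for x, y, pi, wi in zip(xs, ys, p, w)]
        A = [[sum(wi * x[j] * x[k] for wi, x in zip(w, xs)) for k in range(2)]
             for j in range(2)]                                     # X W X^T
        b = [sum(wi * x[j] * zi for wi, x, zi in zip(w, xs, z))
             for j in range(2)]                                     # X W Z
        new_beta = solve2(A, b)                                     # step 6
        if max(abs(n - o) for n, o in zip(new_beta, beta)) < tol:   # step 7
            return new_beta
        beta = new_beta
    return beta

# Step 2: the labels, with inputs x_i = (1, feature) so beta[0] is the intercept.
xs = [[1.0, float(v)] for v in range(8)]
ys = [0, 0, 1, 0, 1, 0, 1, 1]

beta_hat = irls(xs, ys)
p_hat = [1.0 / (1.0 + math.exp(-dot(beta_hat, x))) for x in xs]
print(beta_hat)
print(sum(p_hat), sum(ys))  # expected and observed number of class ones
```

At convergence the fitted probabilities sum to the number of observed ones, which is the first score equation. For separable data the weights would tend to zero and this sketch would need a safeguard such as step-size halving.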

===Comparison with Linear Regression=== | |||

*'''Similarities''' | |||

#They both attempt to estimate <math>\,P(Y=k|X=x)</math> (for logistic regression, we have so far only considered the cases <math>\,k=0</math> and <math>\,k=1</math>).

#They both have linear boundaries.

:'''note:'''For linear regression, we assume the model is linear. The boundary is <math>P(Y=k|X=x)=\underline{\beta}^T\underline{x}_i+\underline{\beta}_0=0.5</math> (linear) | |||

::For logistic regression, the boundary is <math>P(Y=k|X=x)=\frac{exp(\underline{\beta}^T \underline{x})}{1+exp(\underline{\beta}^T \underline{x})}=0.5 \Rightarrow exp(\underline{\beta}^T \underline{x})=1\Rightarrow \underline{\beta}^T \underline{x}=0</math> (linear) | |||

*'''Differences''' | |||

#Linear regression: <math>\,P(Y=k|X=x)</math> is a linear function of <math>\,x</math>, and it is not guaranteed to fall between 0 and 1 or to sum up to 1.

#Logistic regression: <math>\,P(Y=k|X=x)</math> is a nonlinear function of <math>\,x</math>, and it is guaranteed to range from 0 to 1 and to sum up to 1. | |||

===Comparison with LDA=== | |||

#The linear logistic model only considers the conditional distribution <math>\,P(Y=k|X=x)</math>. No assumption is made about <math>\,P(X=x)</math>.

#The LDA model specifies the joint distribution of <math>\,X</math> and <math>\,Y</math>. | |||

#Logistic regression maximizes the conditional likelihood of <math>\,Y</math> given <math>\,X</math>: <math>\,P(Y=k|X=x)</math> | |||

#LDA maximizes the joint likelihood of <math>\,Y</math> and <math>\,X</math>: <math>\,P(Y=k,X=x)</math>. | |||

#If <math>\,\underline{x}</math> is d-dimensional, the number of adjustable parameters in logistic regression is <math>\,d</math>. The number of parameters grows linearly w.r.t. the dimension.

#If <math>\,\underline{x}</math> is d-dimensional, the number of adjustable parameters in LDA is <math>\,(2d)+d(d+1)/2+2=(d^2+5d+4)/2</math>. The number of parameters grows quadratically w.r.t. the dimension.

#LDA estimates its parameters more efficiently by using more information about the data, and samples without class labels can also be used in LDA.

#As logistic regression relies on fewer assumptions, it seems to be more robust.

#In practice, logistic regression and LDA often give similar results.
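The two parameter counts in the list can be tabulated for a few dimensions. In this sketch the three terms of the LDA count are read as two class means, one shared covariance matrix and two priors; that reading is an assumption made for the example, and the function names are chosen here.

```python
def logistic_param_count(d):
    # one coefficient per input dimension (no separate intercept counted)
    return d

def lda_param_count(d):
    means = 2 * d                    # assumed: one mean vector per class
    covariance = d * (d + 1) // 2    # assumed: one shared symmetric covariance matrix
    priors = 2                       # assumed: one prior per class
    return means + covariance + priors

for d in (1, 2, 10, 100):
    print(d, logistic_param_count(d), lda_param_count(d))
```

For d = 100 the logistic model has 100 adjustable parameters against 5252 for LDA, which illustrates the linear versus quadratic growth.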

====By example==== | |||

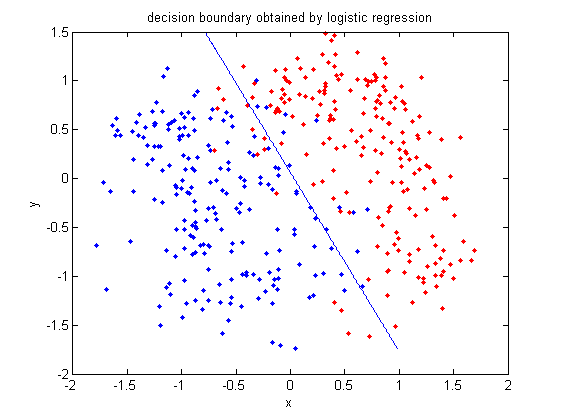

Now we compare LDA and Logistic regression by an example. Again, we use them on the 2_3 data. | |||

>>load 2_3; | |||

>>[U, sample] = princomp(X'); | |||

>>sample = sample(:,1:2); | |||

>>plot (sample(1:200,1), sample(1:200,2), '.'); | |||

>>hold on; | |||

>>plot (sample(201:400,1), sample(201:400,2), 'r.'); | |||

:First, we do PCA on the data and plot the data points that represent 2 or 3 in different colors. See the previous example for more details. | |||

>>group = ones(400,1); | |||

>>group(201:400) = 2; | |||

:Group the data points. | |||

>>[B,dev,stats] = mnrfit(sample,group); | |||

>>x=[ones(1,400); sample']; | |||

:Now we use [http://www.mathworks.com/access/helpdesk/help/toolbox/stats/index.html?/access/helpdesk/help/toolbox/stats/mnrfit.html&http://www.google.cn/search?hl=zh-CN&q=mnrfit+matlab&btnG=Google+%E6%90%9C%E7%B4%A2&aq=f&oq= mnrfit] to classify the data with logistic regression. This function returns B, a <math>(d+1)\times{(k-1)}</math> matrix of estimates, where each column corresponds to the estimated intercept term and predictor coefficients. In this case, B is a <math>3\times{1}</math> matrix.

>> B | |||

B =0.1861 | |||

-5.5917 | |||

-3.0547 | |||

==== | :This is our <math>\underline{\beta}</math>. So the posterior probabilities are: | ||

:<math>P(Y=1 | X=x)=\frac{exp(0.1861-5.5917X_1-3.0547X_2)}{1+exp(0.1861-5.5917X_1-3.0547X_2)}</math>. | |||

:<math>P(Y=2 | X=x)=\frac{1}{1+exp(0.1861-5.5917X_1-3.0547X_2)}</math> | |||

The | :The classification rule is: | ||

:<math>\hat Y = 1</math>, if <math>\,0.1861-5.5917X_1-3.0547X_2 \geq 0</math>

:<math>\hat Y = 2</math>, if <math>\,0.1861-5.5917X_1-3.0547X_2<0</math> | |||
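:To make the rule concrete, the following Python sketch applies the fitted coefficients to two points. The coefficients are the values of B printed above; the two points are hypothetical coordinates in the PCA plane, not taken from the 2_3 data.

```python
import math

B = [0.1861, -5.5917, -3.0547]  # intercept and coefficients from the mnrfit output above

def linear_term(x1, x2):
    return B[0] + B[1] * x1 + B[2] * x2

def posterior_class1(x1, x2):
    t = linear_term(x1, x2)
    return math.exp(t) / (1.0 + math.exp(t))

def classify(x1, x2):
    # class 1 when the linear term is non-negative, i.e. P(Y=1 | X=x) >= 0.5
    return 1 if linear_term(x1, x2) >= 0 else 2

points = [(0.0, 0.0), (1.0, 1.0)]  # hypothetical points, for illustration only
for x1, x2 in points:
    print((x1, x2), posterior_class1(x1, x2), classify(x1, x2))
```

The first point has a posterior for class 1 slightly above one half and is assigned to class 1; the second has a posterior close to zero and is assigned to class 2.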

>>f = sprintf('0 = %g+%g*x+%g*y', B(1), B(2), B(3)); | |||

>>ezplot(f,[min(sample(:,1)), max(sample(:,1)), min(sample(:,2)), max(sample(:,2))]) | |||

:Plot the decision boundary by logistic regression. | |||

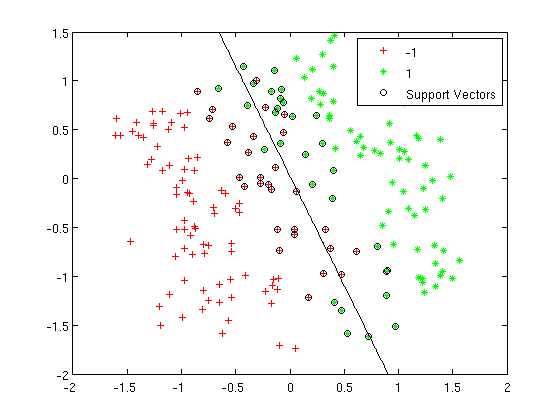

[[File:Boundary-lr.png|frame|center|This is a decision boundary by logistic regression.The line shows how the two classes split.]] | |||

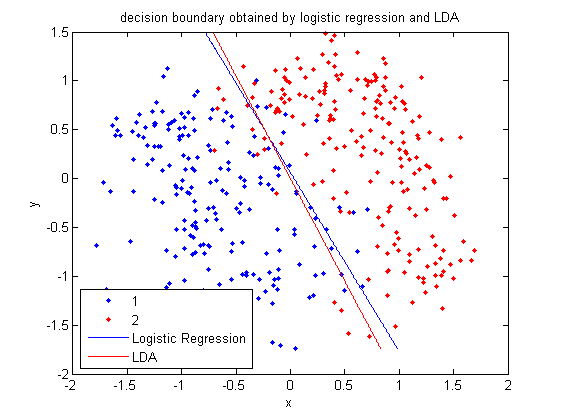

>>[class, error, POSTERIOR, logp, coeff] = classify(sample, sample, group, 'linear'); | |||

>>k = coeff(1,2).const; | |||

>>l = coeff(1,2).linear; | |||

>>f = sprintf('0 = %g+%g*x+%g*y', k, l(1), l(2)); | |||

>>h=ezplot(f, [min(sample(:,1)), max(sample(:,1)), min(sample(:,2)), max(sample(:,2))]); | |||

:Plot the decision boundary by LDA. See the previous example for more information about LDA in matlab. | |||

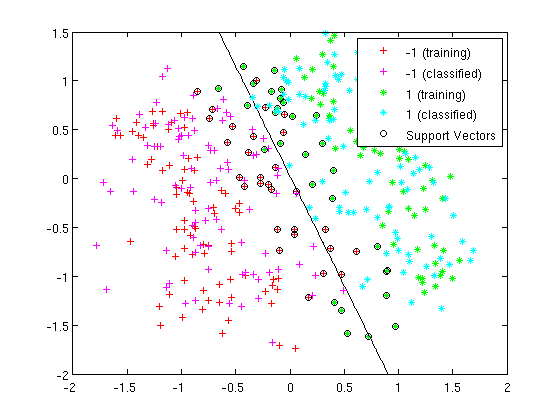

[[File:Boundary-lda.png|frame|center| From this figure, we can see that the results of Logistic Regression and LDA are very similar.]] | |||

== | == ''' 2009.10.21''' == | ||

=== Multi-Class Logistic Regression === | |||

Our earlier goal with logistic regression was to model the posteriors for a 2 class classification problem with a linear function bounded by the interval [0,1]. In that case our model was,<br /><br /> | |||


<math>\log\left(\frac{P(Y=1|X=x)}{P(Y=0|X=x)}\right)= \log\left(\frac{\frac{\exp(\beta^T x)}{1+\exp(\beta^T x)}}{\frac{1}{1+\exp(\beta^T x)}}\right) =\beta^Tx</math><br /><br /> | |||

We can extend this idea to the more general case with K-classes. This model is specified with K - 1 terms where the Kth class in the denominator can be chosen arbitrarily.<br /><br /> | |||


<math>\ | <math>\log\left(\frac{P(Y=i|X=x)}{P(Y=K|X=x)}\right)=\beta_i^Tx,\quad i \in \{1,\dots,K-1\} </math><br /><br /> | ||

The posteriors for each class are given by,<br /><br /> | |||

<br/> | |||

<br/> | |||

<math>P(Y=i|X=x) = \frac{\exp(\beta_i^T x)}{1+\sum_{k=1}^{K-1}\exp(\beta_k^T x)}, \quad i \in \{1,\dots,K-1\}</math><br /><br /> | |||


<math>P(Y=K|X=x) = \frac{1}{1+\sum_{k=1}^{K-1}\exp(\beta_k^T x)}</math><br /><br /> | |||

Seeing these equations as a weighted least squares problem makes them easier to derive.

Note that we still retain the property that the sum of the posteriors is 1. In general the posteriors are no longer complements of each other, as is true in the 2 class problem where we could express <math>\displaystyle P(Y=1|X=x)=1-P(Y=0|X=x)</math>. Fitting a logistic model for the K>2 class problem isn't as 'nice' as in the 2 class problem since we don't have the same simplification.
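A short sketch of the K-class posteriors, with class K as the reference class in the denominator. The coefficient vectors and the input are hypothetical numbers, and the function name `multiclass_posteriors` is chosen for this example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def multiclass_posteriors(betas, x):
    """betas holds beta_1, ..., beta_{K-1}; returns [P(Y=1|x), ..., P(Y=K|x)]."""
    scores = [math.exp(dot(b, x)) for b in betas]
    denom = 1.0 + sum(scores)
    return [s / denom for s in scores] + [1.0 / denom]

betas = [[0.4, -1.0], [-0.3, 0.8]]  # hypothetical coefficients, K = 3
x = [1.0, 0.5]                      # hypothetical input

post = multiclass_posteriors(betas, x)
log_ratio = math.log(post[0] / post[2])  # log odds of class 1 against class K

# With a single coefficient vector (K = 2) the binary model is recovered.
binary = multiclass_posteriors([betas[0]], x)
t = dot(betas[0], x)

print(post, sum(post))
print(log_ratio, t)
print(binary[0], math.exp(t) / (1.0 + math.exp(t)))
```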

===Multi-class kernel logistic regression=== | |||

Logistic regression (LR) and kernel logistic regression (KLR) have already proven their value in the statistical and machine learning community. As opposed to an empirical risk minimization approach such as that employed by Support Vector Machines (SVMs), LR and KLR yield probabilistic outcomes based on a maximum likelihood argument. It seems that this framework provides a natural extension to multiclass classification tasks, which must be contrasted with the commonly used coding approach.

A paper uses the LS-SVM framework to solve the KLR problem. In that paper, the authors show that the minimization of the negative penalized log likelihood criterion is equivalent to solving, in each iteration, a weighted version of least squares support vector machines (wLS-SVMs). In the derivation it turns out that the global regularization term is reflected as usual in each step. A similar iterative weighting of wLS-SVMs, with different weighting factors, is reported to converge to an SVM solution.

Unlike SVMs, KLR by its nature is not sparse and needs all training samples in its final model. Different adaptations of the original algorithm were proposed to obtain sparseness. One of them uses a sequential minimal optimization (SMO) approach, and in another the binary KLR problem is reformulated into a geometric programming system which can be efficiently solved by an interior-point algorithm. In the LS-SVM framework, fixed-size LS-SVM has shown its value on large data sets. It approximates the feature map using a spectral decomposition, which leads to a sparse representation of the model when estimating in the primal space. The authors use this technique as a practical implementation of KLR with estimation in the primal space. To reduce the size of the Hessian, an alternating descent version of Newton's method is used, which has the extra advantage that it can be easily used in a distributed computing environment. The proposed algorithm is compared to existing algorithms using small to large scale benchmark data sets.

Paper's Link: [[ftp://ftp.esat.kuleuven.ac.be/pub/SISTA/karsmakers/20070424IJCNN_pk.pdf]] | |||

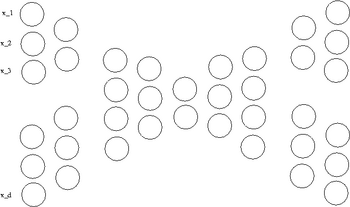

=== Perceptron (Foundation of Neural Network) === | |||

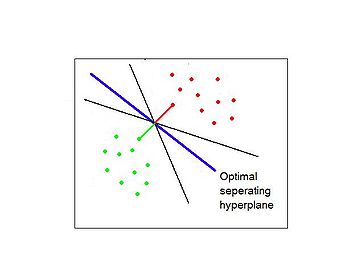

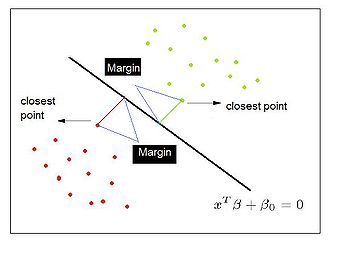

==== Separating Hyperplane Classifiers ==== | |||

A separating hyperplane classifier tries to separate the data using linear decision boundaries. When the classes overlap, it can be generalized to the support vector machine, which constructs nonlinear boundaries by constructing a linear boundary in an enlarged and transformed feature space.


==== Perceptron ==== | |||

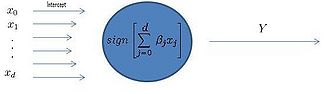

[[Image:Simpleperceptron.jpg|thumb|right|325px|Figure 1: Diagram of a linear perceptron.]] | |||

Recall the use of Least Squares regression as a classifier, shown to be identical to LDA. To classify points with least squares we take the sign of a linear combination of data points and assign a label equivalent to +1 or -1. | |||

Least Squares returns the sign of a linear combination of the features of a data point as the class label:

<math>sign(\underline{\beta}^T \underline{x} + {\beta}_0) = sign(\beta_{0}+\beta_{1}x_{1}+\beta_{2}x_{2})</math> | |||

In the 1950s, while at Cornell University, [http://en.wikipedia.org/wiki/Frank_Rosenblatt Frank Rosenblatt] developed an iterative linear classifier known as the Perceptron. The concept of a perceptron was fundamental to the later development of the [http://en.wikipedia.org/wiki/Artificial_neural_network Artificial Neural Network] models. The perceptron is a simple type of neural network which models the electrical signals of [http://en.wikipedia.org/wiki/Biological_neural_network biological neurons]. In fact, it was the first neural network to be algorithmically described. <ref>Simon S. Haykin, Neural Networks and Learning Machines, (Prentice Hall 2008). </ref>

As in other linear classification methods like Least Squares, Rosenblatt's classifier determines a hyperplane for the decision boundary. Linear methods all determine slightly different decision boundaries; Rosenblatt's algorithm seeks to minimize the distance between the decision boundary and the misclassified points <ref>T. Hastie, R. Tibshirani, J. Friedman, The Elements of Statistical Learning (Springer 2009), 156.</ref>.

Because the solution is found iteratively, the problem has no single global minimum (it is not convex). The algorithm does not converge to a unique hyperplane, and the solutions depend on the size of the gap between classes. If the classes are separable then the algorithm is shown to converge to a local minimum. The proof of this convergence is known as the ''perceptron convergence theorem''. However, for overlapping classes convergence to a local minimum cannot be guaranteed.

If the separating hyperplane between two classes is not unique, there are infinitely many solutions that the perceptron algorithm can return.

As seen in Figure 1, after training, the perceptron determines the label of the data by computing the sign of a linear combination of components. | |||

====A Perceptron Example==== | |||

The perceptron network can figure out the decision boundary line even if we don't know how to draw the line. We just have to give it some examples first. For example:

{| class="wikitable"
|-
! Features: x1, x2, x3
! Answer
|-
| 1,0,0
| +1
|-
| 1,0,1
| +1
|-
| 1,1,0
| +1
|-
| 0,0,1
| -1
|-
| 0,1,1
| -1
|-
| 1,1,1
| -1
|}

At first the perceptron does not know how to separate the answers, so it guesses. For example, we input 1,0,0 and it guesses -1, but the right answer is +1, so the perceptron adjusts its line and we try the next example. Because these examples are linearly separable, the perceptron will eventually get all the answers right.

 % Training data: labels y (6x1) and features x (3x6, one column per example).
 y=[1;1;1;-1;-1;-1];
 x=[1,0,0;1,0,1;1,1,0;0,0,1;0,1,1;1,1,1]';
 b_0=0;          % initial intercept
 b=[1;1;1];      % initial weights
 rho=.5;         % learning rate
 for j=1:100     % at most 100 passes over the data
     changed=0;
     for i=1:6
         d=(b'*x(:,i)+b_0)*y(i);   % positive only if example i is classified correctly
         if d<=0                   % misclassified or on the boundary: update
             b=b+rho*x(:,i)*y(i);
             b_0=b_0+rho*y(i);
             changed=1;
         end
     end
     if changed==0   % a full pass without updates: stop
         break;
     end
 end

==The Perceptron (Lecture October 23, 2009)== | |||

[[File:misclass.png|300px|thumb|right|Figure 2: This figure shows a misclassified point and the movement of the decision boundary.]] | |||

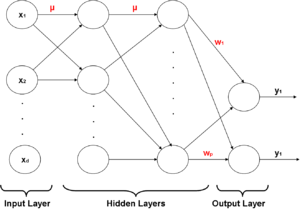

A Perceptron can be modeled as shown in Figure 1 of the previous lecture, where <math>\,x_0</math> is the model intercept and <math>x_{1},\ldots,x_{d}</math> represent the feature data, <math>\sum_{j=0}^d \beta_{j}x_{j}</math> is a linear combination of these inputs with some weights, and <math>I(\sum_{j=0}^d \beta_{j}x_{j})</math>, where <math>\,I</math> indicates the sign of the expression, returns the label of the data point.

The Perceptron algorithm seeks a linear boundary between two classes. A linear decision boundary can be represented by <math>\underline{\beta}^T\underline{x}+\beta_{0}=0.</math> The algorithm begins with an arbitrary hyperplane <math>\underline{\beta}^T\underline{x}+\beta_{0}=0</math> (initial guess). Its goal is to minimize the distance between the decision boundary and the misclassified data points. This is illustrated in Figure 2. It attempts to find the optimal <math>\underline\beta</math> by iteratively adjusting the decision boundary until all points are on the correct side of the boundary. It terminates when there are no misclassified points.

<br/> | |||

<br/> | |||

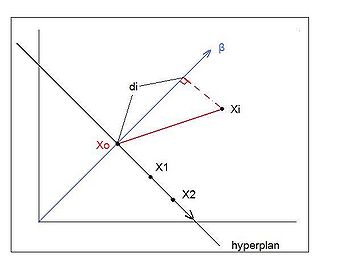

[[File:distance2.jpg|300px|thumb|right|Figure 3: This figure illustrates the derivation of the distance between the decision boundary and misclassified points]] | |||

'''Derivation''':'' The distance between the decision boundary and misclassified points''. <br /><br /> | |||

If <math>\underline{x_{1}}</math> and <math>\underline{x_{2}}</math> both lie on the decision boundary then,<br /><br />

:<math>
\begin{align}
\underline{\beta}^T\underline{x_{1}}+\beta_{0} &= \underline{\beta}^T\underline{x_{2}}+\beta_{0} \\
\underline{\beta}^T (\underline{x_{1}}-\underline{x_{2}})&=0
\end{align}
</math>

<math>\underline{\beta}^T (\underline{x_{1}}-\underline{x_{2}})</math> denotes an inner product. Since the inner product is 0 and <math>(\underline{x_{1}}-\underline{x_{2}})</math> is a vector lying on the decision boundary, <math>\underline{\beta}</math> is orthogonal to the decision boundary. <br /><br />

Let <math>\underline{x_{i}}</math> be a misclassified point. <br /><br /> | |||

Then the projection of the vector <math> \underline{x_{i}}</math> on the direction that is orthogonal to the decision boundary is <math>\underline{\beta}^T\underline{x_{i}}</math> (taking <math>\underline{\beta}</math> to have unit length).

Now, if <math>\underline{x_{0}}</math> is also on the decision boundary, then <math>\underline{\beta}^T\underline{x_{0}}+\beta_{0}=0</math> and so <math>\underline{\beta}^T\underline{x_{0}}= -\beta_{0}</math>. Looking at Figure 3, it can be seen that the distance between <math>\underline{x_{i}}</math> and the decision boundary is the absolute value of <math>\underline{\beta}^T\underline{x_{i}}+\beta_{0}</math>; if <math>\underline{\beta}</math> is not of unit length, this quantity is divided by <math>\|\underline{\beta}\|</math>.
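As a worked step (a sketch that makes the normalization by <math>\|\underline{\beta}\|</math> explicit, which the figure does not state), the distance can be obtained by projecting <math>\underline{x_{i}}-\underline{x_{0}}</math> onto the unit normal <math>\underline{\beta}/\|\underline{\beta}\|</math> and substituting <math>\underline{\beta}^T\underline{x_{0}}=-\beta_{0}</math>:

:<math>
\begin{align}
d(\underline{x_{i}}) &= \frac{\left|\underline{\beta}^T(\underline{x_{i}}-\underline{x_{0}})\right|}{\|\underline{\beta}\|} \\
&= \frac{\left|\underline{\beta}^T\underline{x_{i}}-\underline{\beta}^T\underline{x_{0}}\right|}{\|\underline{\beta}\|} \\
&= \frac{\left|\underline{\beta}^T\underline{x_{i}}+\beta_{0}\right|}{\|\underline{\beta}\|}
\end{align}
</math>

For example, with the hypothetical values <math>\underline{\beta}=(3,4)^T</math>, <math>\beta_{0}=-5</math> and <math>\underline{x_{i}}=(3,4)^T</math>, the distance is <math>|9+16-5|/5=4</math>.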

<br/> | |||

<br/> | |||

Consider <math>y_{i}(\underline{\beta}^T\underline{x_{i}}+\beta_{0}).</math> | |||

:Notice that if <math>\underline{x_{i}}</math> is classified ''correctly'' then this product is positive. This is because if it is classified correctly, then either both <math>(\underline{\beta}^T\underline{x_{i}}+\beta_{0})</math> and <math>\displaystyle y_{i}</math> are positive or they are both negative. However, if <math>\underline{x_{i}}</math> is classified ''incorrectly'' then one of <math>(\underline{\beta}^T\underline{x_{i}}+\beta_{0})</math> and <math>\displaystyle y_{i}</math> is positive and the other is negative. The result is that the above product is negative for a point that is misclassified.

<br/> | |||

For the algorithm, we need only consider the distance between the misclassified points and the decision boundary. | |||

:Consider <math>\phi(\underline{\beta},\beta_{0})= -\displaystyle\sum_{i\in M} y_{i}(\underline{\beta}^T\underline{x_{i}}+\beta_{0}) </math>

which is a summation of positive numbers (each product is negative for a misclassified point, and the leading minus sign makes it positive) and where <math>\displaystyle M</math> is the set of all misclassified points.

<br/> | |||

The goal now becomes to <math>\min_{\underline{\beta},\beta_{0}} \phi(\underline{\beta},\beta_{0}). </math> | |||

This can be done using a [http://en.wikipedia.org/wiki/Gradient_descent gradient descent approach], which is a numerical method that repeatedly takes a step of predetermined size in the direction of the negative gradient, getting closer to a minimum at each step, until the gradient is zero. A problem with this algorithm is the possibility of getting stuck in a local minimum. To continue, the following derivatives are needed:

:<math>\frac{\partial \phi}{\partial \underline{\beta}}= -\displaystyle\sum_{i \in M}y_{i}\underline{x_{i}} | |||

\ \ \ \ \ \ \ \ \ \ \ \frac{\partial \phi}{\partial \beta_{0}}= -\displaystyle\sum_{i \in M}y_{i}</math> | |||
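The criterion and its derivatives can be evaluated directly for a given boundary. The following Python sketch is an illustration only: the function name <code>perceptron_criterion</code> is hypothetical, and the data and starting weights are borrowed from the perceptron example in the previous lecture.

```python
def perceptron_criterion(beta, beta_0, xs, ys):
    """Return (phi, dphi/dbeta, dphi/dbeta_0), summing over misclassified points only."""
    phi = 0.0
    grad_beta = [0.0] * len(beta)
    grad_beta_0 = 0.0
    for x, y in zip(xs, ys):
        # y_i (beta^T x_i + beta_0): negative exactly when x_i is misclassified
        margin = y * (sum(b * v for b, v in zip(beta, x)) + beta_0)
        if margin < 0:
            phi -= margin                                          # phi = -sum of margins over M
            grad_beta = [g - y * v for g, v in zip(grad_beta, x)]  # -sum y_i x_i
            grad_beta_0 -= y                                       # -sum y_i
    return phi, grad_beta, grad_beta_0

# The six training examples from the earlier table
xs = [(1, 0, 0), (1, 0, 1), (1, 1, 0), (0, 0, 1), (0, 1, 1), (1, 1, 1)]
ys = [1, 1, 1, -1, -1, -1]

# Initial guess beta = (1, 1, 1), beta_0 = 0 misclassifies the last three points
phi, g_beta, g_beta_0 = perceptron_criterion([1.0, 1.0, 1.0], 0.0, xs, ys)
```

With this starting boundary the three points labelled -1 are misclassified, so the criterion is the sum of their (negated) products, and moving against the gradient reduces each weight.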

<br/> | |||

Then the gradient descent type algorithm (Perceptron Algorithm) is | |||

:<math>
\begin{pmatrix}
\underline{\beta}^{\mathrm{new}}\\
\beta_0^{\mathrm{new}}
\end{pmatrix}
=
\begin{pmatrix}
\underline{\beta}^{\mathrm{old}}\\
\beta_0^{\mathrm{old}}
\end{pmatrix}
+\rho
\begin{pmatrix}
y_i \underline{x_i}\\
y_i
\end{pmatrix}
</math>

where <math>\displaystyle\rho</math> is the magnitude of each step, called the "learning rate" or the "convergence rate". The algorithm continues until <math>\begin{pmatrix} \underline{\beta}^{\mathrm{new}}\\ \beta_0^{\mathrm{new}} \end{pmatrix} = \begin{pmatrix} \underline{\beta}^{\mathrm{old}}\\ \beta_0^{\mathrm{old}} \end{pmatrix}</math> or until it has iterated a specified number of times. If the algorithm converges, it has found a linear classifier, i.e., there are no misclassified points.

<br/> | |||

<br/> | |||

====Problems with the [http://www.cs.cmu.edu/~avrim/ML09/lect0126.pdf Algorithm] and Issues Affecting Convergence==== | |||

#The output of a perceptron can take on only one of two values (+1 or -1); that is, it can only be used for two-class classification.

#If the data is not separable, then the Perceptron algorithm will not converge since it cannot find a linear classifier that classifies all of the points correctly. | |||

#Convergence rates depend on the size of the gap between classes. If the gap is large, then the algorithm converges quickly. However, if the gap is small, the algorithm converges slowly. This problem can be mitigated by using a basis expansion technique. To be specific, we try to find a hyperplane not in the original space, but in the enlarged space obtained by using some basis functions.

#If the classes are separable, there exist infinitely many solutions to the Perceptron, all of which are hyperplanes.

#The speed of convergence of the algorithm is also dependent on the value of <math>\displaystyle\rho</math>, the learning rate. A larger value of <math>\displaystyle\rho</math> could yield quicker convergence, but if this value is too large, it may also result in "skipping over" the minimum that the algorithm is trying to find and possibly oscillating forever between the last two points, before and after the minimum.

#A perfect separation is not always available, or even desirable. If observations from different classes share the same input, a classification model that separates them perfectly is likely overfitting and will generally have poor predictive performance.

#The [http://annet.eeng.nuim.ie/intro/course/chpt2/convergence.shtml perceptron convergence theorem] states that if there exists an exact solution (in other words, if the training data set is linearly separable), then the perceptron learning algorithm is guaranteed to find an exact solution in a finite number of steps. Proofs of this theorem can be found for example in Rosenblatt (1962), Block (1962), Nilsson (1965), Minsky and Papert (1969), Hertz et al. (1991), and Bishop (1995a). Note, however, that the number of steps required to achieve convergence could still be substantial, and in practice, until convergence is achieved we will not be able to distinguish between a nonseparable problem and one that is simply slow to converge<ref>Christopher M. Bishop, Pattern Recognition and Machine Learning, 194.</ref>.
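The separability issues listed above can be illustrated with a small Python sketch. It is not taken from the lecture: the function name <code>run_perceptron</code>, the cap of 1000 passes, the zero starting weights and the XOR-style data set are all assumptions made for the illustration. It applies the update rule from this lecture and reports whether a full pass without any update was reached.

```python
def run_perceptron(xs, ys, rho=0.5, max_epochs=1000):
    """Return (beta, beta_0, converged) after at most max_epochs passes over the data."""
    beta = [0.0] * len(xs[0])
    beta_0 = 0.0
    for _ in range(max_epochs):
        changed = False
        for x, y in zip(xs, ys):
            # Points on the boundary are treated as misclassified
            if y * (sum(b * v for b, v in zip(beta, x)) + beta_0) <= 0:
                beta = [b + rho * y * v for b, v in zip(beta, x)]
                beta_0 += rho * y
                changed = True
        if not changed:
            return beta, beta_0, True   # a full pass with no updates
    return beta, beta_0, False          # gave up: nonseparable or just slow

# Separable case: the six examples from the earlier table
sep_xs = [(1, 0, 0), (1, 0, 1), (1, 1, 0), (0, 0, 1), (0, 1, 1), (1, 1, 1)]
sep_ys = [1, 1, 1, -1, -1, -1]
sep_beta, sep_beta_0, sep_converged = run_perceptron(sep_xs, sep_ys)

# Nonseparable case: XOR-style labels cannot be split by any hyperplane
xor_xs = [(0, 0), (0, 1), (1, 0), (1, 1)]
xor_ys = [-1, 1, 1, -1]
_, _, xor_converged = run_perceptron(xor_xs, xor_ys)
```

On the separable data the loop stops with every point strictly on its correct side, while on the XOR-style data it runs until the cap is hit. From the returned flag alone, a capped run on slow-converging separable data would look the same as the nonseparable case, which is the practical difficulty noted in the last item.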