Music Recommender System Based using CRNN: Difference between revisions

| (65 intermediate revisions by 39 users not shown) | |||

| Line 1: | Line 1: | ||

==Introduction and Objective:== | ==Introduction and Objective:== | ||

In the digital era of music streaming, companies, such as Spotify and Pandora, are faced with the following challenge: can they provide users with relevant and personalized music recommendations amidst the ever-growing abundance of music and user data | In the digital era of music streaming, companies, such as Spotify and Pandora, are faced with the following challenge: can they provide users with relevant and personalized music recommendations amidst the ever-growing abundance of music and user data? | ||

The objective of this paper is to implement a personalized music recommender system that takes user listening history as input and continually finds new music that captures individual user preferences. | The objective of this paper is to implement a personalized music recommender system that takes user listening history as input and continually finds new music that captures individual user preferences. | ||

| Line 9: | Line 9: | ||

The authors of this paper took a content-based music approach to build the recommendation system - specifically, comparing the similarity of features based on the audio signal. | The authors of this paper took a content-based music approach to build the recommendation system - specifically, comparing the similarity of features based on the audio signal. | ||

The following two-method approach | The following two-method approach for building the recommendation system was followed: | ||

#Make recommendations including genre information extracted from classification algorithms. | #Make recommendations including genre information extracted from classification algorithms. | ||

#Make recommendations without genre information. | #Make recommendations without genre information. | ||

The authors used convolutional recurrent neural networks (CRNN), which is a combination of convolutional neural networks (CNN) and recurrent neural network(RNN), as their main classification model. | The authors used convolutional recurrent neural networks (CRNN), which is a combination of 2D convolutional neural networks (CNN) and recurrent neural network(RNN), as their main classification model. | ||

==Methods and Techniques:== | ==Methods and Techniques:== | ||

Generally, a music recommender can be divided into three main parts: ( | Generally, a music recommender can be divided into three main parts: (i) users, (ii) items, and (iii) user-item matching algorithms. Firstly, a model for a user's music taste is generated based on their profiles. Secondly, item profiling based on editorial, cultural, and acoustic metadata is exploited to increase listener satisfaction. Thirdly, a matching algorithm is employed to recommend personalized music to the listener. Two main approaches are currently available; | ||

To classify music, the original music’s audio signal is converted into a spectrogram image. Using the image and the Short Time Fourier Transform (STFT), we convert the data into the Mel scale which is used in the CNN and CRNN models. | 1. Collaborative filtering | ||

It is based on users' historical listening data and depends on user ratings. Nearest neighbour is the standard method used for collaborative filtering and can be broken into two classes of methods: (i) user-based neighbourhood methods and (ii) item-based neighbourhood methods. | |||

User-based neighbourhood methods calculate the similarity between the target user and other users, and selects the k most similar. A weighted average of the most similar users' song ratings is then computed to predict how the target user would rate those songs. Songs that have a high predicted rating are then recommended to the user. In contrast, methods that use item-based neighbourhoods calculate similarities between songs that the target user has rated well and songs they have not listened to in order to recommend songs. | |||

That being said, collaborative filtering faces many challenges. For example, given that each user sees only a small portion of all music libraries, sparsity and scalability become an issue. However, this can be dealt with using matrix factorization. A more difficult challenge to overcome is the fact that users often don't rate songs when they are listening to music. | |||

2. Content-based filtering | |||

Content based recommendation systems base their recommendations on the similarity of an items features and features that the user has enjoyed. It has two-steps; (i) Extract audio content features and (ii) predict user preferences. | |||

However content-based filtering has to overcome the challenge of only being able to predict based on users' existing interests. The model is unable to effectively scale to a user's ever changing music taste. | |||

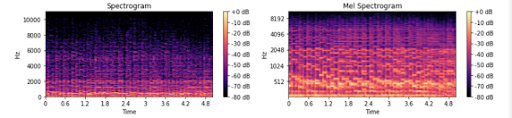

In this work, the authors take a content-based approach, as they compare the similarity of audio signal features to make recommendations. To classify music, the original music’s audio signal is converted into a spectrogram image. Using the image and the Short Time Fourier Transform (STFT), we convert the data into the Mel scale which is used in the CNN and CRNN models. | |||

=== Mel Scale: === | === Mel Scale: === | ||

The scale of pitches that are heard by listeners, which translates to equal pitch increments. | The scale of pitches that are heard by listeners, which translates to equal pitch increments. | ||

| Line 27: | Line 41: | ||

The transformation that determines the sinusoidal frequency of the audio, with a Hanning smoothing function. In the continuous case this is written as: <math>\mathbf{STFT}\{x(t)\}(\tau,\omega) \equiv X(\tau, \omega) = \int_{-\infty}^{\infty} x(t) w(t-\tau) e^{-i \omega t} \, d t </math> | The transformation that determines the sinusoidal frequency of the audio, with a Hanning smoothing function. In the continuous case this is written as: <math>\mathbf{STFT}\{x(t)\}(\tau,\omega) \equiv X(\tau, \omega) = \int_{-\infty}^{\infty} x(t) w(t-\tau) e^{-i \omega t} \, d t </math> | ||

where: <math>w(\tau)</math> is the Hanning smoothing function | where: <math>w(\tau)</math> is the Hanning smoothing function. The STFT is applied over a specified window length at a certain time allowing the frequency to represented for that given window rather than the entire signal as a typical Fourier Transform would. | ||

=== Convolutional Neural Network (CNN): === | === Convolutional Neural Network (CNN): === | ||

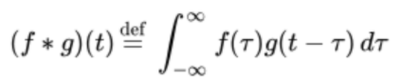

Neural Network that uses convolution in place of matrix multiplication for some layer calculations. By training the data, weights for inputs are updated to find the most significant data relevant to classification. These convolutional layers gather small groups of data | A Convolutional Neural Network is a Neural Network that uses convolution in place of matrix multiplication for some layer calculations. By training the data, weights for inputs are updated to find the most significant data relevant to classification. These convolutional layers gather small groups of data with kernels and try to find patterns that can help find features in the overall data. The features are then used for classification. Padding is another technique used to extend the pixels on the edge of the original image to allow the kernel to more accurately capture the borderline pixels. Padding is also used if one wishes the convolved output image to have a certain size. The image on the left represents the mathematical expression of a convolution operation, while the right image demonstrates an application of a kernel on the data. | ||

[[File:Convolution.png|thumb|400px|left|Convolution Operation]] | [[File:Convolution.png|thumb|400px|left|Convolution Operation]] | ||

| Line 36: | Line 50: | ||

=== Convolutional Recurrent Neural Network (CRNN): === | === Convolutional Recurrent Neural Network (CRNN): === | ||

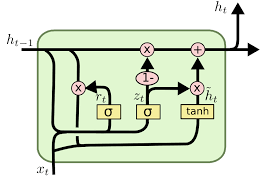

The CRNN is similar to the architecture of a CNN, but with the addition of a Gated Recurrent Unit (GRU), which is a Recurrent Neural Network (RNN). An RNN is used to treat sequential data, by reusing the activation function of previous nodes to update the output. The GRU is used to store more long-term memory and will help train the early hidden layers. GRUs can be thought of as LSTMs but with a forget gate, and has fewer parameters than an LSTM. These gates are used to determine how much information from the past should be passed along onto the future. They are originally aimed to prevent the vanishing gradient problem, since deeper networks will result in smaller and smaller gradients at each layer. The GRU can choose to copy over all the information in the past, thus eliminating the risk of vanishing gradients. | |||

[[File:GRU441.png|thumb|400px|left|Gated Recurrent Unit (GRU)]] | [[File:GRU441.png|thumb|400px|left|Gated Recurrent Unit (GRU)]] | ||

| Line 43: | Line 57: | ||

==Data Screening:== | ==Data Screening:== | ||

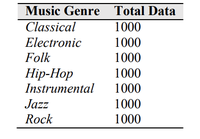

The authors of this paper used a publicly available music dataset made up of 25,000 30-second songs from the Free Music Archives. To ensure a balanced dataset, only 1000 songs each from the genres of classical, electronic, folk, hip-hop, instrumental, jazz and rock were used in the final model. | The authors of this paper used a publicly available music dataset made up of 25,000 30-second songs from the Free Music Archives which contains 16 different genres. The data is cleaned up by removing low audio quality songs, wrongly labelled genres and those that have multiple genres. To ensure a balanced dataset, only 1000 songs each from the genres of classical, electronic, folk, hip-hop, instrumental, jazz and rock were used in the final model. | ||

[[File:Data441.png|thumb|200px|none|Data sorted by music genre]] | [[File:Data441.png|thumb|200px|none|Data sorted by music genre]] | ||

| Line 53: | Line 67: | ||

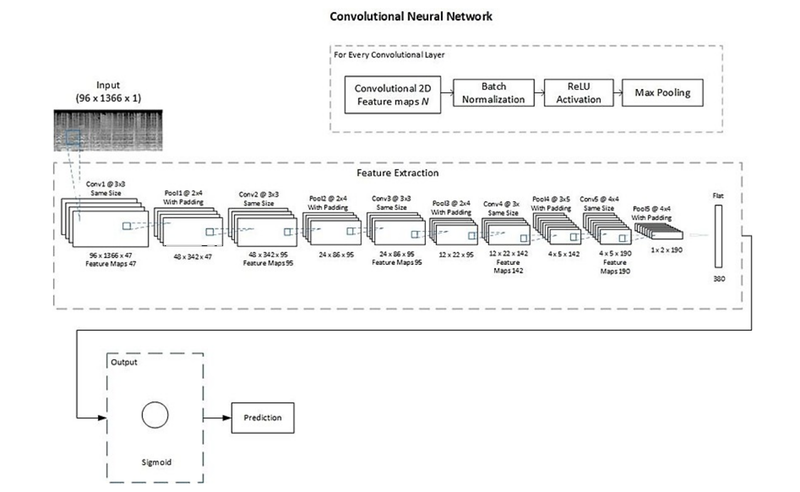

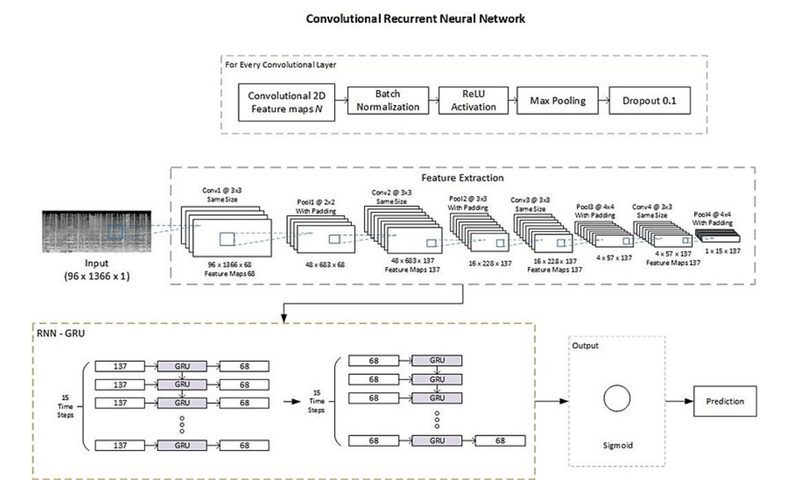

As noted previously, both CNNs and CRNNs were used to model the data. The advantage of CRNNs is that they are able to model time sequence patterns in addition to frequency features from the spectrogram, allowing for greater identification of important features. Furthermore, feature vectors produced before the classification stage could be used to improve accuracy. | As noted previously, both CNNs and CRNNs were used to model the data. The advantage of CRNNs is that they are able to model time sequence patterns in addition to frequency features from the spectrogram, allowing for greater identification of important features. Furthermore, feature vectors produced before the classification stage could be used to improve accuracy. | ||

In implementing the neural networks, the Mel-spectrogram data was split up into training, validation, and test sets at a ratio of 8:1:1 respectively and labelled via one-hot encoding. This made it possible for the categorical data to be labelled correctly for binary classification. As opposed to classical stochastic gradient descent, the authors opted to use | In implementing the neural networks, the Mel-spectrogram data was split up into training, validation, and test sets at a ratio of 8:1:1 respectively and labelled via one-hot encoding. This made it possible for the categorical data to be labelled correctly for binary classification. As opposed to classical stochastic gradient descent, the authors opted to use binary classier and ADAM optimization to update weights in the training phase, and parameters of <math>\alpha = 0.001, \beta_1 = 0.9, \beta_2 = 0.999</math>. Binary cross-entropy was used as the loss function. | ||

Input spectrogram image are 96x1366. In both the CNN and CRNN models, the data was trained over 100 epochs with a batch size of 50 (limited computing power) and using binary cross-entropy as the loss function. Notable model specific details are below: | |||

'''CNN''' | |||

* Five convolutional layers with 3x3 kernel, stride 1, padding, batch normalization, and ReLU activation | |||

* Max pooling layers | |||

* The sigmoid function was used as the output layer | |||

'''CRNN''' | |||

* Four convolutional layers with 3x3 kernel (which construct a 2D temporal pattern - two layers of RNNs with Gated Recurrent Units), stride 1, padding, batch normalization, ReLU activation, and dropout rate 0.1 | |||

* Feature maps are N x1x15 (N = number of features maps, 68 feature maps in this case) is used for RNNs. | |||

* 4 Max pooling layers for four convolutional layers with kernel ((2x2)-(3x3)-(4x4)-(4x4)) and same stride | |||

* The sigmoid function was used as the output layer | |||

The CNN and CRNN architecture is also given in the charts below. | |||

[[File:CNN441.png|thumb|800px|none|Implementation of CNN Model]] | [[File:CNN441.png|thumb|800px|none|Implementation of CNN Model]] | ||

| Line 65: | Line 88: | ||

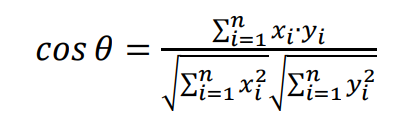

=== Music Recommendation System === | === Music Recommendation System === | ||

The recommendation system | The recommendation system utilizes cosine similarity of the extracted features from the neural network to compute similarity. Each genre will have a song act as a centre point for each class. The final inputs of the trained neural networks will be the feature variables. The feature variables will be used in the cosine similarity to find the best recommendations. | ||

The values are between [-1,1], where larger values are songs that have similar features. When the user inputs five songs, those songs become the new inputs in the neural networks and the features are used by the cosine similarity with other music. The largest five cosine similarities are used as recommendations. | The values are between [-1,1], where larger values are songs that have similar features. When the user inputs five songs, those songs become the new inputs in the neural networks and the features are used by the cosine similarity with other music. The largest five cosine similarities are used as recommendations. | ||

| Line 105: | Line 128: | ||

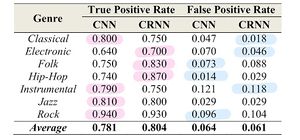

[[File:TruePositiveChart.jpg|thumb|none|True Positive / False Positive Chart]] | [[File:TruePositiveChart.jpg|thumb|none|True Positive / False Positive Chart]] | ||

On average, CRNN outperforms CNN in true positive and false positive cases. | On average, CRNN outperforms CNN in true positive and false positive cases. In addition, it is very apparent that false positive rate is much more higher for songs in the Instrumental genre, perhaps indicating that more pre-processing needs to be done for songs in this genre or that it should be excluded from the analysis completely since most music incorporates instrumental components. | ||

| Line 116: | Line 139: | ||

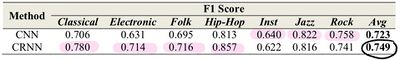

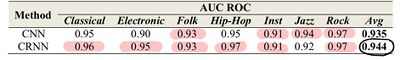

CRNN models that consider the frequency features and time sequence patterns of songs have a better classification performance through metrics such as F1 score and AUC | CRNN models that consider the frequency features and time sequence patterns of songs have a better classification performance through metrics such as F1 score and AUC compared to the CNN classifier. | ||

=== Evaluation of Music Recommendation System: === | === Evaluation of Music Recommendation System: === | ||

* A listening experiment was performed with 30 participants to | * A listening experiment was performed with 30 participants to assess user responses to given music recommendations. | ||

* Participants choose 5 | * Participants choose 5 pieces of music they enjoy and the recommender system generates 5 new recommendations. The participants then evaluate the recommendation by recording whether they liked or disliked the music recommendation | ||

* The recommendation system takes two approaches to the recommendation: | * The recommendation system takes two approaches to the recommendation: | ||

** Method one uses only the value of cosine similarity. | ** Method one uses only the value of cosine similarity. | ||

| Line 128: | Line 151: | ||

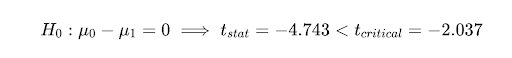

[[File:H0441.png|frame|100px|none|Hypothesis test between method 1 and method 2]] | [[File:H0441.png|frame|100px|none|Hypothesis test between method 1 and method 2]] | ||

Comparing the two methods, <math> H_0: u_1 - u_2 = 0</math>, we have <math> t_{stat} = -4.743 < -2.037 </math> which demonstrates that the increase in average user likes with the addition of music genre information is statistically significant. | Comparing the two methods, <math> H_0: u_1 - u_2 = 0</math>, we have <math> t_{stat} = -4.743 < -2.037 </math>, which demonstrates that the increase in average user likes with the addition of music genre information is statistically significant. | ||

== Conclusion: == | == Conclusion: == | ||

The two two main conclusions obtained from this paper: | |||

* The music genre should be a key feature to increase the predictive capabilities of the music recommendation system. | |||

* To extract the song genre from a song’s audio signals and get overall better performance, CRNN’s are superior to CNN’s as they consider frequency in features and time sequence patterns of audio signals. | |||

According to | According to the paper, the authors suggested adding other music features like tempo gram for capturing local tempo as a way to improve the accuracy of the recommender system. | ||

== Critiques/ Insights: == | == Critiques/ Insights: == | ||

# | # It would be helpful if authors bench-mark their novel approach with other recommendation algorithms such as collaborative filtering to see if there is a lift in predictive capabilities. | ||

# The listening experiment used to evaluate the recommendation system only includes songs that are outputted by the model. Users may be biased if they believe all songs have come from a recommendation system. To remove bias, we suggest having 15 songs where 5 songs are recommended and 10 songs are set. With this in the user’s mind, it may remove some bias in response and give more accurate predictive capabilities. | # The listening experiment used to evaluate the recommendation system only includes songs that are outputted by the model. Users may be biased if they believe all songs have come from a recommendation system. To remove bias, we suggest having 15 songs where 5 songs are recommended and 10 songs are set. With this in the user’s mind, it may remove some bias in response and give more accurate predictive capabilities. | ||

# | # It would be better if they go into more details about how CRNN makes it perform better than CNN, in terms of attributes of each network. Also, it might be helpful to include the motivations why CNN and CRNN are useful in this specific problem. | ||

# The methodology introduced in this paper is probably also suitable for movie recommendations. As music is presented as spectrograms (images) in a time sequence, and it is very similar to a movie. | # The methodology introduced in this paper is probably also suitable for movie recommendations. As music is presented as spectrograms (images) in a time sequence, and it is very similar to a movie. | ||

# The way of evaluation is a very interesting approach. Since it's usually not easy to evaluate the testing result when it's subjective. By listing all these evaluations' performance, the result would be more comprehensive. | # The way of evaluation is a very interesting approach. Since it's usually not easy to evaluate the testing result when it's subjective. By listing all these evaluations' performance, the result would be more comprehensive. A practice that might reduce bias is by coming back to the participants after a couple of days and asking whether they liked the music that was recommended. Often times music "grows" on people and their opinion of a new song may change after some time has passed. | ||

# The paper lacks the comparison between the proposed algorithm and the music recommendation algorithms being used now. It will be clearer to show the superiority of this algorithm. | # The paper lacks the comparison between the proposed algorithm and the music recommendation algorithms being used now. It will be clearer to show the superiority of this algorithm. | ||

# The GAN neural network has been proposed to enhance the performance of the neural network, so an improved result may appear after considering using GAN. | # The GAN neural network has been proposed to enhance the performance of the neural network, so an improved result may appear after considering using GAN. | ||

# The limitation of CNN and CRNN could be that they are only able to process the spectrograms with single labels rather than multiple labels. This is far from enough for the music recommender systems in today's music industry since the edges between various genres are blurred. | # The limitation of CNN and CRNN could be that they are only able to process the spectrograms with single labels rather than multiple labels. This is far from enough for the music recommender systems in today's music industry since the edges between various genres are blurred. | ||

# | # Is it possible for CNN and CRNN to identify different songs? The model would be harder to train, based on my experience, the efficiency of CNN in R is not very high, which can be improved for future work. | ||

# According to the author, the recommender system is done by calculating the cosine similarity of extraction features from one music to another music. Is possible to represent it by Euclidean distance or p-norm distances? | |||

# In real-life application, most of the music software will have the ability to recommend music to the listener and ask do they like the music that was recommended. It would be a nice application by involving some new information from the listener. | # In real-life application, most of the music software will have the ability to recommend music to the listener and ask do they like the music that was recommended. It would be a nice application by involving some new information from the listener. | ||

# Actual music listeners do not listen to one genre of music, and in fact listening to the same track or the same genre would be somewhat unusual. Could this method be used to make recommendations not on genre, but based on other categories? (Such as the theme of the lyrics, the pitch of the singer, or the date published). Would this model be able to differentiate between tracks of varying "lyric vocabulation difficulty"? Or would NLP algorithms be needed to consider lyrics? | |||

# Actual music listeners do not listen to one genre of music, and in fact listening to the same track or the same genre would be somewhat unusual. Could this method be used to make recommendations not on genre, but based on other | # This model can be applied to many other fields such as recommending the news in the news app, recommending things to buy in the amazon, recommending videos to watch in YOUTUBE and so on based on the user information. | ||

# Looks like for the most genres, CRNN outperforms CNN, but CNN did do better on a few genres (like Jazz), so it might be better to mix them together or might use CNN for some genres and CRNN for the rest. | |||

# Cosine similarity is used to find songs with similar patterns as the input ones from users. That is, feature variables are extracted from the trained neural network model before the classification layer, and used as the basis to find similar songs. One potential problem of this approach is that if the neural network classifies an input song incorrectly, the extracted feature vector will not be a good representation of the input song. Thus, a song that is in fact really similar to the input song may have a small cosine similarity value, i.e. not be recommended. In conclusion, if the first classification is wrong, future inferences based on that is going to make it deviate further from the true answer. A possible future improvement will be how to offset this inference error. | |||

# In the tables when comparing performance and accuracies of the CNN and CRNN models on different genres of music, the researchers claimed that CRNN had superior performance to CNN models. This seemed intuitive, especially in the cases when the differences in accuracies were large. However, maybe the researchers should consider including some hypothesis testing statistics in such tables, which would support such claims in a more rigorous manner. | |||

# A music recommender system that doesn't use the song's meta data such as artist and genre and rather tries to classify genre itself seems unproductive. I also believe that the specific artist matters much more than the genre since within a genre you have many different styles. It just seems like the authors hamstring their recommender system by excluding other relevant data. | |||

# The genres that are posed in the paper are very broad and may not be specific enough to distinguish a listeners actual tastes (ie, I like rock and roll, but not punk rock, which could both be in the "rock" category). It would be interesting to run similar experiments with more concrete and specific genres to study the possibility of improving accuracy in the model. | |||

# This summary is well organized with detailed explanation to the music recommendation algorithm. However, since the data used in this paper is cleaned to buffer the efficiency of the recommendation, there should be a section evaluating the impact of noise on the performance this algorithm and how to minimize the impact. | |||

# This method will be better if the user choose some certain music genres that they like while doing the sign-up process. This is similar to recommending articles on twitter. | |||

# I have some feedback for the "Evaluation of Music Recommendation System" section. Firstly, there can be a brief mention of the participants' background information. Secondly, the summary mentions that "participants choose 5 pieces of music they enjoyed". Are they free to choose any music they like, or are they choosing from a pool of selections? What are the lengths of these music pieces? Lastly, method one and method two are compared against each other. It's intuitive that method two will outperform method one, since method two makes use of both cosine similarity and information on music genre, whereas method one only makes use of cosine similarity. Thus, saying method two outperforms method one is not necessarily surprising. I would like to see more explanation on why these methods are chosen, and why comparing them directly is considered to be fair. | |||

# It would be better to have more comparison with other existing music recommender system. | |||

# In the Collecting Music Data section, the author has indicated that for maintaining the balance of data for each genre that they are choosing to omit some genres and a portion of the dataset. However, how this was done was not explained explicitly which can be a concern for results replication. It would be better to describe the steps and measures taken to ensure the actions taken by the teams are reproducible. | |||

# For cleaning data, for training purposes, the team is choosing to omit the ones with lower music quality. While this is a sound option, it can be adjusted that the ratings for the music are deducted to adjust the balance. This could be important since a poor music quality could mean either equipment failure or corrupt server storage or it was a recording of a live performance that often does not have a perfect studio quality yet it would be loved by many real-life users. This omission is not entirely justified and feels like a deliberate adjustment for later results. | |||

# It would be more convincing if the author could provide more comparison between CRNN and CNN. | |||

# How is the result used to recommend songs within genres? It looks like it only predicts what genre the user likes to listen and recommends one of the songs from that genre. How can this recommender system be used to recommend songs within the same genre? | |||

# This [https://arxiv.org/pdf/2006.15795.pdf paper] implements CRNN differently; the CNN and RNN are separate and their resulting matrices and combined later. Would using this version of the CRNN potentially improve the accuracy? | |||

# This kind of approach can be used in implementing other recommender systems for, like movies, articles, news, websites etc. It would be helpful if the author could explain and generalize the implementation on other forms of recommender systems. | |||

# The accuracy of the genre classifier seemed really low, considering how distinct the genres sound to humans. The authors recommend adding features to the data but these could likely be extracted from the audio signal. Extra preprocessing would likely go a long way to improve the accuracy. | |||

# Since it was mentioned that different genres were used, it would be interesting to know if the model can classify different languages and how it performs with songs in different languages. | |||

# It is possible to extend this application to classifying baroque, classical, and romantic genre music. This can be beneficial for students (and frankly, people of all ages) who are learning about music. What's even more interesting to see is if this algorithm can distinguish music pieces written by classical musicians such as Beethoven, Haydn, and Mozart. Of course, it would take more effort in distinguishing features across the music pieces of these three artists, but it's an area worth exploring. | |||

# In contrast to the mel spectrogram method, the popular streaming app Spotify allows you to view data they collect that includes features about the nature of the of song such as acousticness, danceability, loudness, tempo, etc. | |||

# The authors introduced a good recommendation system. It might be helpful to evaluate just based on how similar users are. Meanwhile, there might be some bias need to be considered in the dataset. PCA might be a good way to analyze the existing pattern. | |||

# This models needs a way to introduce variance into its recommendations. The kind of music you wnat to listen to is largely dependent on non quantifiable factors like mood, but there is evidence to support that it depends on current events/activities. A way to introduce those factors into the recommendation system need to be looked at. | |||

# The music recommender is based on big data of what the users listen to. It would be better if the summary can explain more about how this problem is very similar to image detection problem, and how they are related to each other. This way it is better for the audiences to make connection. | |||

== References: == | |||

Nilashi, M., et.al. ''Collaborative Filtering Recommender Systems''. Research Journal of Applied Sciences, Engineering and Technology 5(16):4168-4182, 2013. | |||

Adiyansjah, Alexander A S Gunawan, Derwin Suhartono, Music Recommender System Based on Genre using Convolutional Recurrent Neural Networks, Procedia Computer Science, https://doi.org/10.1016/j.procs.2019.08.146. | |||

Latest revision as of 04:38, 16 December 2020

Introduction and Objective:

In the digital era of music streaming, companies, such as Spotify and Pandora, are faced with the following challenge: can they provide users with relevant and personalized music recommendations amidst the ever-growing abundance of music and user data?

The objective of this paper is to implement a personalized music recommender system that takes user listening history as input and continually finds new music that captures individual user preferences.

This paper argues that a music recommendation system should vary from the general recommendation system used in practice since it should combine music feature recognition and audio processing technologies to extract music features, and combine them with data on user preferences.

The authors of this paper took a content-based music approach to build the recommendation system - specifically, comparing the similarity of features based on the audio signal.

The following two-method approach for building the recommendation system was followed:

- Make recommendations including genre information extracted from classification algorithms.

- Make recommendations without genre information.

The authors used convolutional recurrent neural networks (CRNN), which is a combination of 2D convolutional neural networks (CNN) and recurrent neural network(RNN), as their main classification model.

Methods and Techniques:

Generally, a music recommender can be divided into three main parts: (i) users, (ii) items, and (iii) user-item matching algorithms. Firstly, a model for a user's music taste is generated based on their profiles. Secondly, item profiling based on editorial, cultural, and acoustic metadata is exploited to increase listener satisfaction. Thirdly, a matching algorithm is employed to recommend personalized music to the listener. Two main approaches are currently available;

1. Collaborative filtering

It is based on users' historical listening data and depends on user ratings. Nearest neighbour is the standard method used for collaborative filtering and can be broken into two classes of methods: (i) user-based neighbourhood methods and (ii) item-based neighbourhood methods.

User-based neighbourhood methods calculate the similarity between the target user and other users, and selects the k most similar. A weighted average of the most similar users' song ratings is then computed to predict how the target user would rate those songs. Songs that have a high predicted rating are then recommended to the user. In contrast, methods that use item-based neighbourhoods calculate similarities between songs that the target user has rated well and songs they have not listened to in order to recommend songs.

That being said, collaborative filtering faces many challenges. For example, given that each user sees only a small portion of all music libraries, sparsity and scalability become an issue. However, this can be dealt with using matrix factorization. A more difficult challenge to overcome is the fact that users often don't rate songs when they are listening to music.

2. Content-based filtering

Content based recommendation systems base their recommendations on the similarity of an items features and features that the user has enjoyed. It has two-steps; (i) Extract audio content features and (ii) predict user preferences.

However content-based filtering has to overcome the challenge of only being able to predict based on users' existing interests. The model is unable to effectively scale to a user's ever changing music taste.

In this work, the authors take a content-based approach, as they compare the similarity of audio signal features to make recommendations. To classify music, the original music’s audio signal is converted into a spectrogram image. Using the image and the Short Time Fourier Transform (STFT), we convert the data into the Mel scale which is used in the CNN and CRNN models.

Mel Scale:

The scale of pitches that are heard by listeners, which translates to equal pitch increments.

Short Time Fourier Transform (STFT):

The transformation that determines the sinusoidal frequency of the audio, with a Hanning smoothing function. In the continuous case this is written as: [math]\displaystyle{ \mathbf{STFT}\{x(t)\}(\tau,\omega) \equiv X(\tau, \omega) = \int_{-\infty}^{\infty} x(t) w(t-\tau) e^{-i \omega t} \, d t }[/math]

where: [math]\displaystyle{ w(\tau) }[/math] is the Hanning smoothing function. The STFT is applied over a specified window length at a certain time allowing the frequency to represented for that given window rather than the entire signal as a typical Fourier Transform would.

Convolutional Neural Network (CNN):

A Convolutional Neural Network is a Neural Network that uses convolution in place of matrix multiplication for some layer calculations. By training the data, weights for inputs are updated to find the most significant data relevant to classification. These convolutional layers gather small groups of data with kernels and try to find patterns that can help find features in the overall data. The features are then used for classification. Padding is another technique used to extend the pixels on the edge of the original image to allow the kernel to more accurately capture the borderline pixels. Padding is also used if one wishes the convolved output image to have a certain size. The image on the left represents the mathematical expression of a convolution operation, while the right image demonstrates an application of a kernel on the data.

Convolutional Recurrent Neural Network (CRNN):

The CRNN is similar to the architecture of a CNN, but with the addition of a Gated Recurrent Unit (GRU), which is a Recurrent Neural Network (RNN). An RNN is used to treat sequential data, by reusing the activation function of previous nodes to update the output. The GRU is used to store more long-term memory and will help train the early hidden layers. GRUs can be thought of as LSTMs but with a forget gate, and has fewer parameters than an LSTM. These gates are used to determine how much information from the past should be passed along onto the future. They are originally aimed to prevent the vanishing gradient problem, since deeper networks will result in smaller and smaller gradients at each layer. The GRU can choose to copy over all the information in the past, thus eliminating the risk of vanishing gradients.

Data Screening:

The authors of this paper used a publicly available music dataset made up of 25,000 30-second songs from the Free Music Archives which contains 16 different genres. The data is cleaned up by removing low audio quality songs, wrongly labelled genres and those that have multiple genres. To ensure a balanced dataset, only 1000 songs each from the genres of classical, electronic, folk, hip-hop, instrumental, jazz and rock were used in the final model.

Implementation:

Modeling Neural Networks

As noted previously, both CNNs and CRNNs were used to model the data. The advantage of CRNNs is that they are able to model time sequence patterns in addition to frequency features from the spectrogram, allowing for greater identification of important features. Furthermore, feature vectors produced before the classification stage could be used to improve accuracy.

In implementing the neural networks, the Mel-spectrogram data was split up into training, validation, and test sets at a ratio of 8:1:1 respectively and labelled via one-hot encoding. This made it possible for the categorical data to be labelled correctly for binary classification. As opposed to classical stochastic gradient descent, the authors opted to use binary classier and ADAM optimization to update weights in the training phase, and parameters of [math]\displaystyle{ \alpha = 0.001, \beta_1 = 0.9, \beta_2 = 0.999 }[/math]. Binary cross-entropy was used as the loss function. Input spectrogram image are 96x1366. In both the CNN and CRNN models, the data was trained over 100 epochs with a batch size of 50 (limited computing power) and using binary cross-entropy as the loss function. Notable model specific details are below:

CNN

- Five convolutional layers with 3x3 kernel, stride 1, padding, batch normalization, and ReLU activation

- Max pooling layers

- The sigmoid function was used as the output layer

CRNN

- Four convolutional layers with 3x3 kernel (which construct a 2D temporal pattern - two layers of RNNs with Gated Recurrent Units), stride 1, padding, batch normalization, ReLU activation, and dropout rate 0.1

- Feature maps are N x1x15 (N = number of features maps, 68 feature maps in this case) is used for RNNs.

- 4 Max pooling layers for four convolutional layers with kernel ((2x2)-(3x3)-(4x4)-(4x4)) and same stride

- The sigmoid function was used as the output layer

The CNN and CRNN architecture is also given in the charts below.

Music Recommendation System

The recommendation system utilizes cosine similarity of the extracted features from the neural network to compute similarity. Each genre will have a song act as a centre point for each class. The final inputs of the trained neural networks will be the feature variables. The feature variables will be used in the cosine similarity to find the best recommendations.

The values are between [-1,1], where larger values are songs that have similar features. When the user inputs five songs, those songs become the new inputs in the neural networks and the features are used by the cosine similarity with other music. The largest five cosine similarities are used as recommendations.

Evaluation Metrics

Precision:

- The proportion of True Positives with respect to the predicted positive cases (true positives and false positives)

- For example, out of all the songs that the classifier predicted as Classical, how many are actually Classical?

- Describes the rate at which the classifier predicts the true genre of songs among those predicted to be of that certain genre

Recall:

- The proportion of True Positives with respect to the actual positive cases (true positives and false negatives)

- For example, out of all the songs that are actually Classical, how many are correctly predicted to be Classical?

- Describes the rate at which the classifier predicts the true genre of songs among the correct instances of that genre

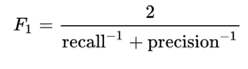

F1-Score:

An accuracy metric that combines the classifier’s precision and recall scores by taking the harmonic mean between the two metrics:

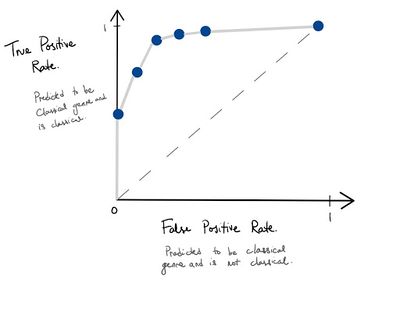

Receiver operating characteristics (ROC):

- A graphical metric that is used to assess a classification model at different classification thresholds

- In the case of a classification threshold of 0.5, this means that if [math]\displaystyle{ P(Y = k | X = x) \gt 0.5 }[/math] then we classify this instance as class k

- Plots the true positive rate versus false positive rate as the classification threshold is varied

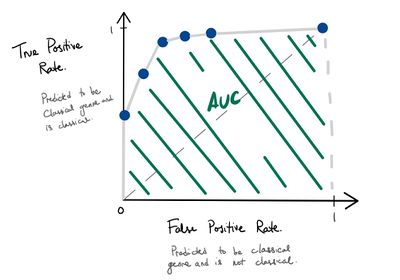

Area Under the Curve (AUC)

AUC is the area under the ROC in doing so, the ROC provides an aggregate measure across all possible classification thresholds.

In the context of the paper: When scoring all songs as [math]\displaystyle{ Prob(Classical | X=x) }[/math], it is the probability that the model ranks a random Classical song at a higher probability than a random non-Classical song.

Results

Accuracy Metrics

The table below is the accuracy metrics with the classification threshold of 0.5.

On average, CRNN outperforms CNN in true positive and false positive cases. In addition, it is very apparent that false positive rate is much more higher for songs in the Instrumental genre, perhaps indicating that more pre-processing needs to be done for songs in this genre or that it should be excluded from the analysis completely since most music incorporates instrumental components.

On average, CRNN outperforms CNN in F1-score.

On average, CRNN also outperforms CNN in AUC metric.

CRNN models that consider the frequency features and time sequence patterns of songs have a better classification performance through metrics such as F1 score and AUC compared to the CNN classifier.

Evaluation of Music Recommendation System:

- A listening experiment was performed with 30 participants to assess user responses to given music recommendations.

- Participants choose 5 pieces of music they enjoy and the recommender system generates 5 new recommendations. The participants then evaluate the recommendation by recording whether they liked or disliked the music recommendation

- The recommendation system takes two approaches to the recommendation:

- Method one uses only the value of cosine similarity.

- Method two uses the value of cosine similarity and information on music genre.

- Perform test of significance of differences in average user likes between the two methods using a t-statistic:

Comparing the two methods, [math]\displaystyle{ H_0: u_1 - u_2 = 0 }[/math], we have [math]\displaystyle{ t_{stat} = -4.743 \lt -2.037 }[/math], which demonstrates that the increase in average user likes with the addition of music genre information is statistically significant.

Conclusion:

The two two main conclusions obtained from this paper:

- The music genre should be a key feature to increase the predictive capabilities of the music recommendation system.

- To extract the song genre from a song’s audio signals and get overall better performance, CRNN’s are superior to CNN’s as they consider frequency in features and time sequence patterns of audio signals.

According to the paper, the authors suggested adding other music features like tempo gram for capturing local tempo as a way to improve the accuracy of the recommender system.

Critiques/ Insights:

- It would be helpful if authors bench-mark their novel approach with other recommendation algorithms such as collaborative filtering to see if there is a lift in predictive capabilities.

- The listening experiment used to evaluate the recommendation system only includes songs that are outputted by the model. Users may be biased if they believe all songs have come from a recommendation system. To remove bias, we suggest having 15 songs where 5 songs are recommended and 10 songs are set. With this in the user’s mind, it may remove some bias in response and give more accurate predictive capabilities.

- It would be better if they go into more details about how CRNN makes it perform better than CNN, in terms of attributes of each network. Also, it might be helpful to include the motivations why CNN and CRNN are useful in this specific problem.

- The methodology introduced in this paper is probably also suitable for movie recommendations. As music is presented as spectrograms (images) in a time sequence, and it is very similar to a movie.

- The way of evaluation is a very interesting approach. Since it's usually not easy to evaluate the testing result when it's subjective. By listing all these evaluations' performance, the result would be more comprehensive. A practice that might reduce bias is by coming back to the participants after a couple of days and asking whether they liked the music that was recommended. Often times music "grows" on people and their opinion of a new song may change after some time has passed.

- The paper lacks the comparison between the proposed algorithm and the music recommendation algorithms being used now. It will be clearer to show the superiority of this algorithm.

- The GAN neural network has been proposed to enhance the performance of the neural network, so an improved result may appear after considering using GAN.

- The limitation of CNN and CRNN could be that they are only able to process the spectrograms with single labels rather than multiple labels. This is far from enough for the music recommender systems in today's music industry since the edges between various genres are blurred.

- Is it possible for CNN and CRNN to identify different songs? The model would be harder to train, based on my experience, the efficiency of CNN in R is not very high, which can be improved for future work.

- According to the author, the recommender system is done by calculating the cosine similarity of extraction features from one music to another music. Is possible to represent it by Euclidean distance or p-norm distances?

- In real-life application, most of the music software will have the ability to recommend music to the listener and ask do they like the music that was recommended. It would be a nice application by involving some new information from the listener.

- Actual music listeners do not listen to one genre of music, and in fact listening to the same track or the same genre would be somewhat unusual. Could this method be used to make recommendations not on genre, but based on other categories? (Such as the theme of the lyrics, the pitch of the singer, or the date published). Would this model be able to differentiate between tracks of varying "lyric vocabulation difficulty"? Or would NLP algorithms be needed to consider lyrics?

- This model can be applied to many other fields such as recommending the news in the news app, recommending things to buy in the amazon, recommending videos to watch in YOUTUBE and so on based on the user information.

- Looks like for the most genres, CRNN outperforms CNN, but CNN did do better on a few genres (like Jazz), so it might be better to mix them together or might use CNN for some genres and CRNN for the rest.

- Cosine similarity is used to find songs with similar patterns as the input ones from users. That is, feature variables are extracted from the trained neural network model before the classification layer, and used as the basis to find similar songs. One potential problem of this approach is that if the neural network classifies an input song incorrectly, the extracted feature vector will not be a good representation of the input song. Thus, a song that is in fact really similar to the input song may have a small cosine similarity value, i.e. not be recommended. In conclusion, if the first classification is wrong, future inferences based on that is going to make it deviate further from the true answer. A possible future improvement will be how to offset this inference error.

- In the tables when comparing performance and accuracies of the CNN and CRNN models on different genres of music, the researchers claimed that CRNN had superior performance to CNN models. This seemed intuitive, especially in the cases when the differences in accuracies were large. However, maybe the researchers should consider including some hypothesis testing statistics in such tables, which would support such claims in a more rigorous manner.

- A music recommender system that doesn't use the song's meta data such as artist and genre and rather tries to classify genre itself seems unproductive. I also believe that the specific artist matters much more than the genre since within a genre you have many different styles. It just seems like the authors hamstring their recommender system by excluding other relevant data.

- The genres that are posed in the paper are very broad and may not be specific enough to distinguish a listeners actual tastes (ie, I like rock and roll, but not punk rock, which could both be in the "rock" category). It would be interesting to run similar experiments with more concrete and specific genres to study the possibility of improving accuracy in the model.

- This summary is well organized with detailed explanation to the music recommendation algorithm. However, since the data used in this paper is cleaned to buffer the efficiency of the recommendation, there should be a section evaluating the impact of noise on the performance this algorithm and how to minimize the impact.

- This method will be better if the user choose some certain music genres that they like while doing the sign-up process. This is similar to recommending articles on twitter.

- I have some feedback for the "Evaluation of Music Recommendation System" section. Firstly, there can be a brief mention of the participants' background information. Secondly, the summary mentions that "participants choose 5 pieces of music they enjoyed". Are they free to choose any music they like, or are they choosing from a pool of selections? What are the lengths of these music pieces? Lastly, method one and method two are compared against each other. It's intuitive that method two will outperform method one, since method two makes use of both cosine similarity and information on music genre, whereas method one only makes use of cosine similarity. Thus, saying method two outperforms method one is not necessarily surprising. I would like to see more explanation on why these methods are chosen, and why comparing them directly is considered to be fair.

- It would be better to have more comparison with other existing music recommender system.

- In the Collecting Music Data section, the author has indicated that for maintaining the balance of data for each genre that they are choosing to omit some genres and a portion of the dataset. However, how this was done was not explained explicitly which can be a concern for results replication. It would be better to describe the steps and measures taken to ensure the actions taken by the teams are reproducible.

- For cleaning data, for training purposes, the team is choosing to omit the ones with lower music quality. While this is a sound option, it can be adjusted that the ratings for the music are deducted to adjust the balance. This could be important since a poor music quality could mean either equipment failure or corrupt server storage or it was a recording of a live performance that often does not have a perfect studio quality yet it would be loved by many real-life users. This omission is not entirely justified and feels like a deliberate adjustment for later results.

- It would be more convincing if the author could provide more comparison between CRNN and CNN.

- How is the result used to recommend songs within genres? It looks like it only predicts what genre the user likes to listen and recommends one of the songs from that genre. How can this recommender system be used to recommend songs within the same genre?

- This paper implements CRNN differently; the CNN and RNN are separate and their resulting matrices and combined later. Would using this version of the CRNN potentially improve the accuracy?

- This kind of approach can be used in implementing other recommender systems for, like movies, articles, news, websites etc. It would be helpful if the author could explain and generalize the implementation on other forms of recommender systems.

- The accuracy of the genre classifier seemed really low, considering how distinct the genres sound to humans. The authors recommend adding features to the data but these could likely be extracted from the audio signal. Extra preprocessing would likely go a long way to improve the accuracy.

- Since it was mentioned that different genres were used, it would be interesting to know if the model can classify different languages and how it performs with songs in different languages.

- It is possible to extend this application to classifying baroque, classical, and romantic genre music. This can be beneficial for students (and frankly, people of all ages) who are learning about music. What's even more interesting to see is if this algorithm can distinguish music pieces written by classical musicians such as Beethoven, Haydn, and Mozart. Of course, it would take more effort in distinguishing features across the music pieces of these three artists, but it's an area worth exploring.

- In contrast to the mel spectrogram method, the popular streaming app Spotify allows you to view data they collect that includes features about the nature of the of song such as acousticness, danceability, loudness, tempo, etc.

- The authors introduced a good recommendation system. It might be helpful to evaluate just based on how similar users are. Meanwhile, there might be some bias need to be considered in the dataset. PCA might be a good way to analyze the existing pattern.

- This models needs a way to introduce variance into its recommendations. The kind of music you wnat to listen to is largely dependent on non quantifiable factors like mood, but there is evidence to support that it depends on current events/activities. A way to introduce those factors into the recommendation system need to be looked at.

- The music recommender is based on big data of what the users listen to. It would be better if the summary can explain more about how this problem is very similar to image detection problem, and how they are related to each other. This way it is better for the audiences to make connection.

References:

Nilashi, M., et.al. Collaborative Filtering Recommender Systems. Research Journal of Applied Sciences, Engineering and Technology 5(16):4168-4182, 2013. Adiyansjah, Alexander A S Gunawan, Derwin Suhartono, Music Recommender System Based on Genre using Convolutional Recurrent Neural Networks, Procedia Computer Science, https://doi.org/10.1016/j.procs.2019.08.146.