Superhuman AI for Multiplayer Poker: Difference between revisions

| (33 intermediate revisions by 18 users not shown) | |||

| Line 4: | Line 4: | ||

== Introduction == | == Introduction == | ||

A | A super-intelligence is a hypothetical agent that possesses intelligence surpassing that of the brightest and most gifted human minds. In the past two decades, most superhuman AI systems have only been able to beat human players in two-player zero-sum games, where the sum of the gains and losses always equals zero. Over these twenty years they have come to dominate most board games. The best-known examples are the chess AI Deep Blue and the Go AI AlphaGo. The most common strategy these AI systems use to win such games is to find a Nash equilibrium. In a two-player game, a Nash equilibrium is a pair of strategies such that neither player can obtain a higher payout by unilaterally switching to any ''other'' strategy (while the other player's strategy remains unchanged). Intuitively this is similar to a locally optimal strategy for the players, but (i) an equilibrium in pure strategies is not guaranteed to exist and (ii) it may not be the truly optimal strategy. An example of this is the Prisoner's dilemma, where two individuals each have the option to testify against the other or to remain silent. Although the best joint outcome is for both to remain silent, each individual has an incentive to act in their own self-interest, which results in a less than optimal outcome. | ||

More specifically, in the game of poker, we only have AI models that can beat human players in two-player settings | More specifically, in the game of poker, we only have AI models that can beat human players in two-player settings. Developing a superhuman AI for multiplayer poker is therefore the remaining great milestone in this field. It is a hard one, because there is no known polynomial-time algorithm that can find a Nash equilibrium in two-player non-zero-sum games, let alone in games with more players. The discovery of such an algorithm would have surprising and profound implications in computational complexity theory. | ||

In this paper, the AI, called Pluribus, is capable of defeating professional human players in six-player no-limit Texas hold'em poker, the most commonly played poker format in the world. The algorithm that is used is not guaranteed to converge to a Nash equilibrium outside of two-player zero-sum games. However, it produces a strong strategy that is capable of consistently defeating elite human professionals. This shows that, despite the lack of strong theoretical guarantees on performance, such algorithms can produce superhuman strategies in a wider class of games. | In this paper, the AI, called Pluribus, is capable of defeating professional human players in six-player no-limit Texas hold'em poker, the most commonly played poker format in the world. The algorithm that is used is not guaranteed to converge to a Nash equilibrium outside of two-player zero-sum games. However, it produces a strong strategy that is capable of consistently defeating elite human professionals. This shows that, despite the lack of strong theoretical guarantees on performance, such algorithms can produce superhuman strategies in a wider class of games. | ||

Knowing the basics of Texas hold'em poker may help to understand how the AI plays. Texas hold'em is played using a standard deck of 52 cards. For each hand, players are each dealt two cards, which only they can see. Once players receive their two cards, and before any other cards are turned, players (in clockwise order) have the option to call, raise, fold, or check. Call means to match the highest bet so far, raise means to increase the bet, fold means the player wishes to leave the current hand, and check means to continue without betting any further. A player can only check if no one has bet yet this round or if they have already matched the highest bet. Once this round of betting is over, three cards, called the Flop, are turned over, and betting proceeds clockwise again. This is followed by revealing another card, the Turn, and then another round of betting. Finally, the last card, the River, is turned. There is one more round of betting before the players reveal their cards and determine the winner. The player with the best five-card hand (out of the 7 cards available to them) wins the hand and the money in the pot. The following is a list of the Texas hold'em hands from best to worst: | |||

* Royal Flush (A,K,Q,J,10 all of the same suit) | |||

* Straight Flush (5 in a row from the same suit) | |||

* 4 of a kind | |||

* Full house (3 of a kind + a pair) | |||

* Flush (5 cards of the same suit) | |||

* Straight (5 cards in a row) | |||

* 3 of a kind | |||

* 2 different pairs | |||

* A pair | |||

* Highest card | |||
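The ranking above can be sketched in code. This is a minimal illustration, not anything from the paper: the card encoding (rank 2 to 14 with 14 as the ace, plus a suit letter) and the function names `classify` and `best_category` are assumptions made for the example, and treating A-2-3-4-5 as a straight is a standard poker rule that the list above does not spell out. Ties within a category (kickers) are not resolved.

```python
from collections import Counter
from itertools import combinations

# Hand categories from worst to best, mirroring the list above.
CATEGORIES = [
    "High card", "Pair", "Two pair", "Three of a kind", "Straight",
    "Flush", "Full house", "Four of a kind", "Straight flush", "Royal flush",
]


def classify(hand):
    """Return the category of five (rank, suit) cards; rank is 2..14, 14 = ace."""
    ranks = sorted(rank for rank, _ in hand)
    suits = {suit for _, suit in hand}
    counts = sorted(Counter(ranks).values(), reverse=True)
    flush = len(suits) == 1
    distinct = len(set(ranks)) == 5
    # Five consecutive ranks, or the ace-low straight A-2-3-4-5.
    straight = distinct and (ranks[4] - ranks[0] == 4 or ranks == [2, 3, 4, 5, 14])
    if straight and flush:
        return "Royal flush" if ranks == [10, 11, 12, 13, 14] else "Straight flush"
    if counts[0] == 4:
        return "Four of a kind"
    if counts == [3, 2]:
        return "Full house"
    if flush:
        return "Flush"
    if straight:
        return "Straight"
    if counts[0] == 3:
        return "Three of a kind"
    if counts == [2, 2, 1]:
        return "Two pair"
    if counts[0] == 2:
        return "Pair"
    return "High card"


def best_category(seven_cards):
    """Best category over every five-card subset of the seven available cards."""
    return max(
        (classify(five) for five in combinations(seven_cards, 5)),
        key=CATEGORIES.index,
    )
```

For example, with hole cards `(14, "h"), (14, "d")` and a board containing another ace and a pair of kings, `best_category` returns the full house rather than the weaker three of a kind or two pair that some five-card subsets would give.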

== Nash Equilibrium in Multiplayer Games == | == Nash Equilibrium in Multiplayer Games == | ||

Many AI systems have reached superhuman performance in games like checkers, chess, two-player limit poker, Go, and two-player no-limit poker. A Nash equilibrium has been proven to exist in all finite games and numerous infinite games | Many AI systems have reached superhuman performance in games like checkers, chess, two-player limit poker, Go, and two-player no-limit poker. A Nash equilibrium has been proven to exist in all finite games and numerous infinite games, but the challenge is to find the equilibrium. In two-player zero-sum games it is the best possible strategy and is unbeatable, since it is guaranteed not to lose in expectation regardless of what the opponent is doing. | ||

To have a deeper understanding of Nash Equilibria we must first define some basic game theory concepts. The first one being a strategic game, in game theory a strategic game consists of a set of players, for each player a set of actions and for each player preferences (or payoffs) over the set of action profiles (set of combination of actions). With these three elements, we can model a wide variety of situations. Now a Nash Equilibrium is an action profile, with the property that no player can do better by changing their action, given that all other players' actions remain the same. A common illustration of Nash equilibria is the Prisoner's Dilemma. We also have mixed strategies and mixed strategy Nash equilibria. A mixed strategy is when instead of a player choosing an action they apply a probability distribution to their set of actions and pick randomly. Note that with mixed strategies we must look at the expected payoff of the player given the other players' strategies. Therefore a mixed strategy Nash Equilibria involves at least one player playing with a mixed strategy where no player can increase their expected payoff by changing their action, given that all other players' actions remain the same. Then we can define a pure Nash Equilibria to where no one is playing a mixed strategy. We also must be aware that a single game can have multiple pure Nash equilibria and mixed Nash equilibria. Also, Nash Equilibria are purely theoretical and depend on players acting optimally and being rational, this is not always the case with humans and we can act very irrationally. Therefore empirically we will see that games can have very unexpected outcomes and you may be able to get a better payoff if you move away from a strictly theoretical strategy and take advantage of your opponent's irrational behavior. | To have a deeper understanding of Nash Equilibria, we must first define some basic game theory concepts. 
The first is the strategic game. In game theory, a strategic game consists of a set of players, a set of actions for each player, and, for each player, preferences (or payoffs) over the set of action profiles (the set of combinations of actions). With these three elements, we can model a wide variety of situations. A Nash equilibrium is an action profile with the property that no player can do better by changing their action, given that all other players' actions remain the same. A common illustration of Nash equilibria is the Prisoner's Dilemma. We also have mixed strategies and mixed-strategy Nash equilibria. A mixed strategy is one in which a player, instead of choosing a single action, applies a probability distribution to their set of actions and picks randomly. Note that with mixed strategies we must look at the expected payoff of the player given the other players' strategies. A mixed-strategy Nash equilibrium therefore involves at least one player playing a mixed strategy, where no player can increase their expected payoff by changing their strategy, given that all other players' strategies remain the same. A pure Nash equilibrium is then one in which no one is playing a mixed strategy. A single game can have multiple pure and mixed Nash equilibria. Nash equilibria are also purely theoretical and depend on players acting optimally and rationally, which is not always the case with humans, who can act very irrationally. Empirically, therefore, games can have very unexpected outcomes, and a player may be able to get a better payoff by moving away from a strictly theoretical strategy and taking advantage of an opponent's irrational behavior. | ||
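The definition of a pure Nash equilibrium can be checked mechanically on a small game. The sketch below enumerates every action profile of a Prisoner's Dilemma and keeps those from which no player can gain by deviating alone. The payoff numbers (negative years in prison) and the names `PAYOFFS`, `is_nash` and `pure_nash_equilibria` are hypothetical, chosen only for illustration.

```python
from itertools import product

# Actions: 0 = remain silent, 1 = testify.
ACTIONS = (0, 1)

# Hypothetical payoffs (negative years in prison), indexed by action profile.
# Each value is (payoff to player 0, payoff to player 1).
PAYOFFS = {
    (0, 0): (-1, -1),
    (0, 1): (-3, 0),
    (1, 0): (0, -3),
    (1, 1): (-2, -2),
}


def is_nash(profile, payoffs, actions):
    """True if no single player can do strictly better by changing only their action."""
    for player in range(len(profile)):
        current = payoffs[profile][player]
        for alternative in actions:
            deviated = list(profile)
            deviated[player] = alternative
            if payoffs[tuple(deviated)][player] > current:
                return False
    return True


def pure_nash_equilibria(payoffs, actions, n_players=2):
    """Enumerate all action profiles and keep the pure Nash equilibria."""
    return [
        profile
        for profile in product(actions, repeat=n_players)
        if is_nash(profile, payoffs, actions)
    ]
```

With these payoffs the only profile that survives is `(1, 1)`, both players testifying, even though `(0, 0)` would leave both better off. Brute-force enumeration like this only works for tiny games; the number of profiles grows exponentially with the number of players.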

The insufficiency with current AI systems is that they only try to achieve Nash equilibriums instead of trying to actively detect and exploit weaknesses in opponents. At the Nash equilibrium, there is no incentive for any player to change their initial strategy, so it is a stable state of the system. For example, let's consider the game of Rock-Paper-Scissors, the Nash equilibrium is to randomly pick any option with equal probability. However, we can see that this means the best strategy that the opponent can have will result in a tie. Therefore, in this example, our player cannot win in expectation. Now let's try to combine the Nash equilibrium strategy and opponent exploitation. We can initially use the Nash equilibrium strategy and then change our strategy | The insufficiency with current AI systems is that they only try to achieve Nash equilibriums instead of trying to actively detect and exploit weaknesses in opponents. At the Nash equilibrium, there is no incentive for any player to change their initial strategy, so it is a stable state of the system. For example, let's consider the game of Rock-Paper-Scissors, the Nash equilibrium is to randomly pick any option with equal probability. However, we can see that this means the best strategy that the opponent can have will result in a tie. Therefore, in this example, our player cannot win in expectation. Now let's try to combine the Nash equilibrium strategy and opponent exploitation. We can initially use the Nash equilibrium strategy and then change our strategy over time to exploit the observed weaknesses of our opponent. For example, we switch to always play Rock against our opponent who always plays Scissors. However, shifting away from the Nash equilibrium strategy opens up the possibility for our opponent to use our strategy against ourselves. For example, they notice we always play Rock and thus they will now always play Paper. | ||
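The Rock-Paper-Scissors argument can be made concrete by computing expected payoffs. The following is a small sketch in which the payoff convention (+1 for a win, -1 for a loss, 0 for a tie) and the name `expected_payoff` are assumptions for the example.

```python
# Payoff to "us" for each (our action, their action); order is Rock, Paper, Scissors.
PAYOFF = [
    [0, -1, 1],   # Rock ties Rock, loses to Paper, beats Scissors
    [1, 0, -1],   # Paper beats Rock, ties Paper, loses to Scissors
    [-1, 1, 0],   # Scissors loses to Rock, beats Paper, ties Scissors
]


def expected_payoff(ours, theirs):
    """Expected payoff to us when both sides play the given mixed strategies."""
    return sum(
        ours[i] * theirs[j] * PAYOFF[i][j]
        for i in range(3)
        for j in range(3)
    )


uniform = [1 / 3, 1 / 3, 1 / 3]   # the Nash equilibrium strategy
always_rock = [1, 0, 0]
always_paper = [0, 1, 0]
always_scissors = [0, 0, 1]

# The equilibrium strategy cannot be beaten, but it also cannot win in expectation.
safe = expected_payoff(uniform, always_scissors)
# Exploiting an opponent who always plays Scissors wins every round...
exploit = expected_payoff(always_rock, always_scissors)
# ...but loses every round once the opponent adapts and always plays Paper.
counter_exploited = expected_payoff(always_rock, always_paper)
```

Here `safe` is 0, `exploit` is 1 and `counter_exploited` is -1, which is the trade-off described above: deviating from the equilibrium can gain more, at the cost of becoming exploitable.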

Trying to approximate a Nash equilibrium is hard in theory, and in games with more than two players, even the best algorithms can only handle games with a handful of possible strategies per player. Currently, existing techniques for exploiting an opponent require far too many samples and are not competitive outside of small games. Finding a Nash equilibrium in games with three or more players is a great challenge. Even if we could efficiently compute a Nash equilibrium in games with more than two players, it is still highly questionable whether playing the Nash equilibrium strategy is a good choice. Additionally, if each player tries to find their own version of a Nash equilibrium, there could be infinitely many strategies, and the combination of each player’s version might not even be a Nash equilibrium. | Trying to approximate a Nash equilibrium is hard in theory, and in games with more than two players, even the best algorithms can only handle games with a handful of possible strategies per player. Currently, existing techniques for exploiting an opponent require far too many samples and are not competitive outside of small games. Finding a Nash equilibrium in games with three or more players is a great challenge. Even if we could efficiently compute a Nash equilibrium in games with more than two players, it is still highly questionable whether playing the Nash equilibrium strategy is a good choice. Additionally, if each player tries to find their own version of a Nash equilibrium, there could be infinitely many strategies, and the combination of each player’s version might not even be a Nash equilibrium. | ||

| Line 26: | Line 38: | ||

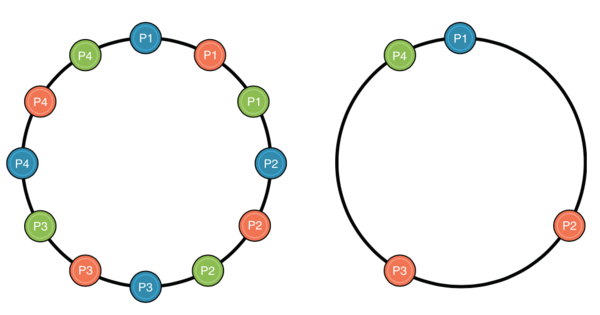

<div align="center">Figure 1: Lemonade Stand Example</div> | <div align="center">Figure 1: Lemonade Stand Example</div> | ||

From the right circle in Figure 1, we can see that when each player tries to calculate their own Nash equilibria, | From the right circle in Figure 1, we can see that when each player tries to calculate their own Nash equilibrium independently, the joint strategy is unlikely to place the players equally spaced along the ring, so the joint strategy is not a Nash equilibrium. This suggests that attempting to find a Nash equilibrium is not the best strategy outside of two-player zero-sum games, and our goal should not be focused on finding a specific game-theoretic solution. Instead, we need to focus on observations and empirical results that consistently defeat human opponents. | ||

== Theoretical Analysis == | == Theoretical Analysis == | ||

Pluribus uses forms of abstraction to make computations scalable. To reduce the complexity caused by too many decision points, some actions are eliminated from consideration and similar decision points are grouped together and treated as identical. This process is called abstraction. Pluribus uses two kinds of abstraction: action abstraction and information abstraction. Action abstraction reduces the number of different actions the AI needs to consider. For instance, it does not consider all bet sizes (the exact number of bet sizes it considers varies between 1 and 14 depending on the situation). Information abstraction groups together decision points that reveal similar information, for instance the player’s cards and the revealed board cards. This is only used to reason about situations on future betting rounds, never the current betting round. | Pluribus uses forms of abstraction to make computations scalable. To reduce the complexity caused by too many decision points, some actions are eliminated from consideration and similar decision points are grouped together and treated as identical. This process is called abstraction. Pluribus uses two kinds of abstraction: '''action abstraction and information abstraction'''. Action abstraction reduces the number of different actions the AI needs to consider. For instance, it does not consider all bet sizes (the exact number of bet sizes it considers varies between 1 and 14 depending on the situation). Information abstraction groups together decision points that reveal similar information, for instance the player’s cards and the revealed board cards. This is only used to reason about situations on future betting rounds, never the current betting round. | ||
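The idea behind action abstraction can be illustrated with a toy example that restricts bets to a few sizes expressed as fractions of the pot. The fractions below, the function name `abstract_bet`, and the rule of rounding an arbitrary bet to the nearest allowed size are all assumptions made for illustration; the summary does not say which sizes Pluribus considers or how bets outside the abstraction are handled.

```python
def abstract_bet(bet, pot, fractions=(0.5, 1.0, 2.0)):
    """Map an arbitrary bet to the nearest size in a small abstract action set.

    `fractions` lists the allowed bet sizes as multiples of the pot; the
    default values are hypothetical and chosen only for this example.
    """
    candidates = [fraction * pot for fraction in fractions]
    # Nearest-size rounding: an illustrative simplification, not Pluribus's method.
    return min(candidates, key=lambda size: abs(size - bet))
```

With a pot of 100, a bet of 70 is treated as the half-pot bet of 50 and a bet of 180 as the double-pot bet of 200, so the game tree only needs three bet branches at this decision point instead of one per possible chip amount.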

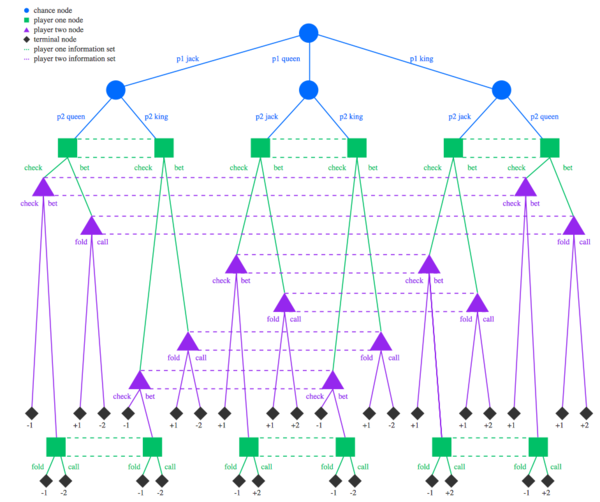

Pluribus uses a precomputed strategy, the “blueprint strategy”, which it gradually improves by searching in real time in the situations it finds itself in during the course of the game. Pluribus plays according to the blueprint strategy in the first betting round, where the number of decision points is small. The blueprint strategy is computed using the Monte Carlo Counterfactual Regret Minimization (MCCFR) algorithm. CFR is commonly used in AI for imperfect-information games; the AI is trained by repeatedly playing against copies of itself, without any data from human or prior AI play used as input. For ease of computation of CFR in this context, poker is represented as a game tree. A game tree is a tree structure where each node represents either a player’s decision, a chance event, or a terminal outcome, and edges represent actions taken. | Pluribus uses a precomputed strategy, the '''“blueprint strategy”''', which it gradually improves by searching in real time in the situations it finds itself in during the course of the game. Pluribus plays according to the blueprint strategy in the first betting round, where the number of decision points is small. The blueprint strategy is computed using the Monte Carlo Counterfactual Regret Minimization (MCCFR) algorithm. CFR is commonly used in AI for imperfect-information games; the AI is trained by repeatedly playing against copies of itself, without any data from human or prior AI play used as input. For ease of computation of CFR in this context, poker is represented as a game tree. A game tree is a tree structure where each node represents either a player’s decision, a chance event, or a terminal outcome, and edges represent actions taken. | ||

[[File:Screen_Shot_2020-11-17_at_11.57.00_PM.png| 600px |center ]] | [[File:Screen_Shot_2020-11-17_at_11.57.00_PM.png| 600px |center ]] | ||

| Line 41: | Line 53: | ||

The value of counterfactual regret for a decision is adjusted over the iterations as more scenarios or decision points are encountered. At the end of each iteration, the traverser’s strategy is updated so that actions with higher counterfactual regret are chosen with higher probability. CFR minimizes regret over many iterations, and in two-player zero-sum games the average strategy over all iterations converges to an approximate Nash equilibrium. CFR guarantees in all finite games that all counterfactual regrets grow sublinearly in the number of iterations. Pluribus uses Linear CFR in early iterations to reduce the influence of initial bad iterations, i.e. it assigns a weight of T to regret contributions at iteration T. This causes the influence of the first iteration to decay at a rate of <math>\frac{1}{\sum_{t=1}^Tt} = \frac{2}{T(T+1)}</math>, compared to a rate of <math>\frac{1}{T}</math> in the original CFR algorithm. This leads to the strategy improving more quickly in practice. | The value of counterfactual regret for a decision is adjusted over the iterations as more scenarios or decision points are encountered. At the end of each iteration, the traverser’s strategy is updated so that actions with higher counterfactual regret are chosen with higher probability. CFR minimizes regret over many iterations, and in two-player zero-sum games the average strategy over all iterations converges to an approximate Nash equilibrium. CFR guarantees in all finite games that all counterfactual regrets grow sublinearly in the number of iterations. Pluribus uses Linear CFR in early iterations to reduce the influence of initial bad iterations, i.e. it assigns a weight of T to regret contributions at iteration T. This causes the influence of the first iteration to decay at a rate of <math>\frac{1}{\sum_{t=1}^Tt} = \frac{2}{T(T+1)}</math>, compared to a rate of <math>\frac{1}{T}</math> in the original CFR algorithm. This leads to the strategy improving more quickly in practice. | ||
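The update rule described here, choosing actions in proportion to their positive regret, is known as regret matching, and it is the building block that CFR applies at each decision point. The sketch below runs regret matching in self-play on Rock-Paper-Scissors, a game with a single decision point, so it is not MCCFR and not Pluribus's implementation. The function names, the deliberately biased initial regrets and the iteration count are assumptions for illustration. A second helper reproduces the Linear CFR weighting formula from the text.

```python
# Payoff to a player for (own action, opponent action); Rock, Paper, Scissors.
# The game is symmetric, so the same table serves both players.
PAYOFF = [
    [0, -1, 1],
    [1, 0, -1],
    [-1, 1, 0],
]


def regret_matching(regrets):
    """Play each action in proportion to its positive regret (uniform if none)."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    return [1.0 / len(regrets)] * len(regrets)


def self_play(iterations, initial_regrets=(1.0, 0.0, 0.0)):
    """Run regret matching for both players and return their average strategies."""
    regrets = [list(initial_regrets), list(initial_regrets)]
    strategy_sum = [[0.0] * 3, [0.0] * 3]
    for _ in range(iterations):
        strategies = [regret_matching(r) for r in regrets]
        for player in (0, 1):
            opponent = strategies[1 - player]
            # Expected payoff of each pure action against the opponent's current mix.
            action_values = [
                sum(PAYOFF[a][b] * opponent[b] for b in range(3)) for a in range(3)
            ]
            expected = sum(strategies[player][a] * action_values[a] for a in range(3))
            for a in range(3):
                # Regret: how much better action a would have done than the mix played.
                regrets[player][a] += action_values[a] - expected
                strategy_sum[player][a] += strategies[player][a]
    return [[s / iterations for s in sums] for sums in strategy_sum]


def first_iteration_share(T, linear):
    """Share of the total weight held by iteration 1 after T iterations."""
    weights = [t if linear else 1 for t in range(1, T + 1)]
    return weights[0] / sum(weights)


average_strategies = self_play(20000)
```

Although both players start out biased towards Rock, the average strategies approach the uniform equilibrium as the number of iterations grows, while the strategy played at any single iteration can still be far from uniform. `first_iteration_share(10, linear=True)` gives 2/(10·11), against 1/10 for the unweighted case, matching the decay rates quoted above.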

An additional feature of Pluribus is that in the | An additional feature of Pluribus is that in the subgames, instead of assuming that all players play according to a single strategy, Pluribus considers that each player may choose between k different strategies specialized to each player when a decision point is reached. This results in the searcher choosing a more balanced strategy. For instance, if a player bet only when holding the best possible hand, the opponents would learn that fact and always fold in response to a bet. A balanced strategy that sometimes bets with weaker hands is harder to exploit in this way. | ||

Therefore, the blueprint strategy is produced offline for the entire game and it is gradually improved while making real-time decisions during the game. | Therefore, the blueprint strategy is produced offline for the entire game and it is gradually improved while making real-time decisions during the game. | ||

| Line 47: | Line 59: | ||

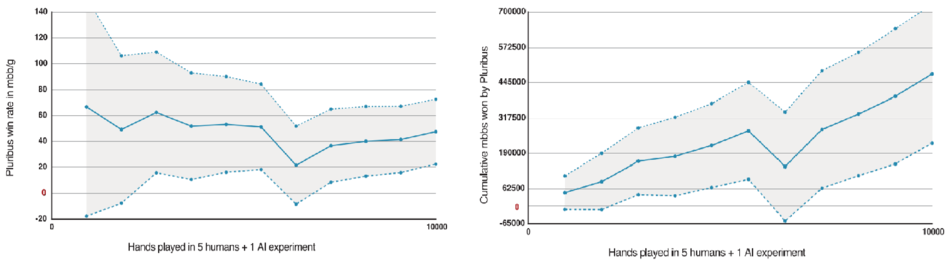

To test how well Pluribus functions, it was tested against human players in 2 formats. The first format included 5 human players and one copy of Pluribus (5H+1AI). The 13 human participants were poker players who have won more than $1M playing professionally and were provided with cash incentives to play their best. 10,000 hands of poker were played over 12 days with the 5H+1AI format. Players were anonymized with aliases that remained consistent throughout all their games. The aliases helped the players keep track of the tendencies and types of games played by each player over the 10,000 hands. | To test how well Pluribus functions, it was tested against human players in 2 formats. The first format included 5 human players and one copy of Pluribus (5H+1AI). The 13 human participants were poker players who have won more than $1M playing professionally and were provided with cash incentives to play their best. 10,000 hands of poker were played over 12 days with the 5H+1AI format. Players were anonymized with aliases that remained consistent throughout all their games. The aliases helped the players keep track of the tendencies and types of games played by each player over the 10,000 hands. | ||

The second format | The second format included one human player and 5 copies of Pluribus (1H+5AI). There were 2 more professional players who split another 10,000 hands of poker by playing 5000 hands each and followed the same aliasing process as the first format. | ||

The performance was measured in milli big blinds per game (mbb/game), i.e. thousandths of a big blind, where the big blind is the initial amount of money the second player has to put in the pot; this is the standard measure in the AI field. Additionally, AIVAT was used as the variance reduction technique to control for luck in the games. One-tailed t-tests at a 95% confidence level were run to check whether Pluribus was profitable. | The performance was measured in milli big blinds per game (mbb/game), i.e. thousandths of a big blind, where the big blind is the initial amount of money the second player has to put in the pot; this is the standard measure in the AI field. Additionally, AIVAT was used as the variance reduction technique to control for luck in the games. One-tailed t-tests at a 95% confidence level were run to check whether Pluribus was profitable. | ||
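To make the measurement concrete, the sketch below converts per-hand chip results into mbb/game and computes the t statistic for a one-tailed test of whether the mean result is above zero. The function names and the sample numbers are hypothetical and are not data from the experiment, and AIVAT variance reduction is not implemented here.

```python
import math
import statistics


def mbb_per_game(chips_won, big_blind):
    """Average winnings per hand, in thousandths of a big blind."""
    return 1000.0 * statistics.mean(chips_won) / big_blind


def t_statistic(samples):
    """t statistic for the null hypothesis that the true mean is zero."""
    standard_error = statistics.stdev(samples) / math.sqrt(len(samples))
    return statistics.mean(samples) / standard_error


# Hypothetical per-hand results in chips, with a big blind of 100 chips.
results = [150, -100, 50, 0, -50, 100]
win_rate = mbb_per_game(results, big_blind=100)
t_value = t_statistic(results)
```

For a one-tailed test, the profit is considered significant when `t_value` exceeds the critical value of the t distribution with `len(results) - 1` degrees of freedom at the chosen level. With only six hypothetical hands the statistic here is far too small for that, which illustrates why thousands of hands and variance reduction are needed in poker.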

| Line 63: | Line 75: | ||

<div align="center">"Figure 3. Performance of Pluribus in the 5 humans + 1 AI experiment. The dots show Pluribus's performance at the end of each day of play. (Top) The lines show the win rate (solid line) plus or minus the standard error (dashed lines). (Bottom) The lines show the cumulative number of mbbs won (solid line) plus or minus the standard error (dashed lines). The relatively steady performance of Pluribus over the course of the 10,000-hand experiment also suggests that the humans were unable to find exploitable weaknesses in the bot."</div> | <div align="center"> | ||

"Figure 3. Performance of Pluribus in the 5 humans + 1 AI experiment. The dots show Pluribus's performance at the end of each day of play. (Top) The lines show the win rate (solid line) plus or minus the standard error (dashed lines). (Bottom) The lines show the cumulative number of mbbs won (solid line) plus or minus the standard error (dashed lines). The relatively steady performance of Pluribus over the course of the 10,000-hand experiment also suggests that the humans were unable to find exploitable weaknesses in the bot."</div> | |||

Optimal play in Pluribus looks different from well-known poker conventions: A standard convention of “limping” in poker (calling the 'big blind' rather than folding or raising) is confirmed to be not optimal by Pluribus since it initially experimented with it but eliminated this from its strategy over its games of self-play. On the other hand, another convention of “donk betting” (starting a round by betting when someone else ended the previous round with a call) that is dismissed by players was adopted by Pluribus much more often than played by humans and is proven to be profitable. | Optimal play in Pluribus looks different from well-known poker conventions: A standard convention of “limping” in poker (calling the 'big blind' rather than folding or raising) is confirmed to be not optimal by Pluribus since it initially experimented with it but eliminated this from its strategy over its games of self-play. On the other hand, another convention of “donk betting” (starting a round by betting when someone else ended the previous round with a call) that is dismissed by players was adopted by Pluribus much more often than played by humans and is proven to be profitable. | ||

| Line 74: | Line 88: | ||

Extending this idea beyond two-player zero-sum games will have many applications in real life. For example, the emergence of strategies used by the superhuman AI that were seen as "non-optimal" to humans, poses interesting questions about applying such an AI to fields such as business, economics and financial markets. | Extending this idea beyond two-player zero-sum games will have many applications in real life. For example, the emergence of strategies used by the superhuman AI that were seen as "non-optimal" to humans, poses interesting questions about applying such an AI to fields such as business, economics and financial markets. | ||

It should be noted that, interestingly, even if a player knows all possible outcomes of a game at a Nash equilibrium, he/she would not benefit from changing strategies, which means that the player will still choose to keep his/her original strategy. | |||

The summary for Superhuman AI for Multiplayer Poker is very well written, with a detailed explanation of the concept, steps, and results, combined with visual images. However, it seems that the experiment in the study is not well designed. For example, sample selection is not strict and well defined, which could introduce selection bias into the results and make them not generalizable. | The summary for Superhuman AI for Multiplayer Poker is very well written, with a detailed explanation of the concept, steps, and results, combined with visual images. However, it seems that the experiment in the study is not well designed. For example, sample selection is not strict and well defined, which could introduce selection bias into the results and make them not generalizable. | ||

| Line 90: | Line 106: | ||

Similar to Pluribus, another [https://science.sciencemag.org/content/356/6337/508 paper] discussed a different AI program, called DeepStack, which has also defeated professional poker players at a 2-player Texas hold'em variant. However, instead of finding the Nash Equilibria, DeepStack uses recursive reasoning, decomposition, and a form of intuition that is automatically learned from self-play. | Similar to Pluribus, another [https://science.sciencemag.org/content/356/6337/508 paper] discussed a different AI program, called DeepStack, which has also defeated professional poker players at a 2-player Texas hold'em variant. However, instead of finding the Nash Equilibria, DeepStack uses recursive reasoning, decomposition, and a form of intuition that is automatically learned from self-play. | ||

AI can calculate the exact odds of a specific card or set of cards dropped on the flop. The difficulty is that in poker, the player cannot see the opponent's hand. This kind of hidden information also brings other complications (such as fraud), which is more challenging for AI at the game. At the same time, using AI to train each other is also an interesting topic. | |||

Inspired by the critique about fraud, could this AI be used to detect cheating, such as illicitly obtaining information about an opponent's hand, in games between human players? For instance, one could compare the decisions of the humans with those of the AI given the same information. Or are the strategies of human players and this AI too different to draw conclusions about suspicious human plays? | |||

I think AI can possibly calculate the probability of winning each round based on the hand it gets, and modify that probability while replicating the experiments. This may help build the model more accurately and efficiently. | |||

The summary is well-defined overall, but the authors should explain the algorithm in more detail, specifying how the model performs at each step to reach the optimal solution. There is also an assumption that must hold before applying this model: the human players need to follow the "rules". If the model plays against a human who does not follow the "rules", how will it learn from that? This is a question to contemplate. | |||

This is an interesting topic discussing AI players in games. It would be attractive if the summary could provide a brief comparison of the advantages and shortcomings of today's AI players. | |||

It is interesting to see how the AI develops strategies for playing against people in Texas hold'em poker. However, the game also relies on how people behave, including their body language and the way they talk. This paper focuses on the theoretical side, which may not fully carry over to real life. | |||

There are still some aspects of poker strategy that are not explicitly present in this type of AI system. However, it does appear to be far more advanced than most contemporary AI in most online poker systems. | |||

This is an interesting topic. It would be interesting to know how this can be applied to applications other than games like poker. | |||

It would be better if the introduction could be trimmed. It would be interesting to know how this superhuman AI deals with simple non-zero-sum games and how the algorithm would adjust. | |||

This is a very interesting topic, and as mentioned above, this topic can expand far beyond the topic of multiplayer poker. In the end, the paper mentions that some common strategies by human players are dismissed and some strategies that are considered weak are being adopted by the AI. It would be interesting to see how one can embed this into the process of human decision making in the future, so that we can re-evaluate our decisions with the assistance from trained AI agents. | |||

This is an interesting topic that can be applied to areas outside of just playing games, such as the field of economics. | |||

== Conclusion == | == Conclusion == | ||

As Pluribus’s strategy was not developed with any human data and was trained only by self-play, it is an unbiased and a different perspective on how optimal play can be attained. | As Pluribus’s strategy was not developed with any human data and was trained only by self-play, it is an unbiased and a different perspective on how optimal play can be attained. | ||

Developing a superhuman AI for multiplayer poker is a widely recognized | Developing a superhuman AI for multiplayer poker is a widely recognized milestone in this area and the major remaining milestone in computer poker. | ||

Pluribus’s success shows that despite the lack of known strong theoretical guarantees on performance in multiplayer games, there are large-scale, complex multiplayer imperfect information settings in which a carefully constructed self-play-with-search algorithm can produce superhuman strategies. | Pluribus’s success shows that despite the lack of known strong theoretical guarantees on performance in multiplayer games, there are large-scale, complex multiplayer imperfect information settings in which a carefully constructed self-play-with-search algorithm can produce superhuman strategies. | ||

Latest revision as of 00:06, 8 December 2020

Presented by

Hansa Halim, Sanjana Rajendra Naik, Samka Marfua, Shawrupa Proshasty

Introduction

A superintelligence is a hypothetical agent whose intelligence surpasses that of the brightest and most gifted human minds. Over the past two decades, most superhuman AI systems have only been able to beat human players in two-player zero-sum games, where one player's gains always equal the other player's losses. Within that setting they have come to dominate most board games; the best-known examples are the chess AI Deep Blue and the Go AI AlphaGo. The most common approach in these games is to approximate a Nash equilibrium. A Nash equilibrium is a set of strategies, one per player, such that no single player can obtain a higher payoff by switching to any other strategy while the other players' strategies remain unchanged. Intuitively this is similar to a locally optimal strategy for the players, but (i) an equilibrium in pure strategies is not guaranteed to exist and (ii) an equilibrium may not give the best joint outcome. An example of this is the Prisoner's Dilemma, where two individuals each have the option to testify against the other or to remain silent. Although both would be better off if both remained silent, each individual has an incentive to act in their own self-interest, which results in a worse outcome for both.

More specifically, in poker, existing AI models have only been able to beat human players in two-player settings. Developing a superhuman AI for multiplayer poker is therefore the remaining great milestone in this field. It is difficult in part because no polynomial-time algorithm is known for finding a Nash equilibrium in two-player non-zero-sum games, and the discovery of such an algorithm would have surprising and profound implications in computational complexity theory.

In this paper, the authors present an AI called Pluribus that is capable of defeating professional human players in six-player no-limit Texas hold'em, the most commonly played poker format in the world. The algorithm it uses is not guaranteed to converge to a Nash equilibrium outside of two-player zero-sum games. Nevertheless, it produces a strong strategy that consistently defeats elite human professionals. This shows that, despite the lack of strong theoretical guarantees on performance, such algorithms can produce superhuman strategies in a wider class of games.

Knowing the basics of Texas hold'em poker may help in understanding how the AI plays. Texas hold'em is played using a standard deck of 52 cards. For each hand, players are each dealt two cards, which only they can see. Once players receive their two cards, and prior to turning any other cards, players (in clockwise order) have the option to call, raise, fold, or check. Call means to match the highest bet so far, raise means to increase the bet, fold means the player wishes to leave the current hand, and check means to continue without betting any further. A player can only check if no one has bet yet this round or if they have already matched the highest bet. Once this round of betting is over, three cards, called the Flop, are turned over, and betting proceeds in clockwise order again. This is followed by revealing another card, the Turn, and then another round of betting. Finally, the last card, the River, is turned. There is one more round of betting before the players reveal their cards and determine the winner. The player with the best five-card hand (out of the 7 cards available to them: their two private cards and the five shared cards) wins the hand and the money in the pot. The following is a list of the Texas hold'em hands from best to worst:

- Royal Flush (A,K,Q,J,10 all of the same suit)

- Straight Flush (5 in a row from the same suit)

- 4 of a kind

- Full house (3 of a kind + a pair)

- Flush (5 cards of the same suit)

- Straight (5 cards in a row)

- 3 of a kind

- 2 different pairs

- A pair

- Highest card
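The ranking above can be sketched as a small classifier. This is only an illustration and not part of Pluribus: the card encoding (`"AS"` for the ace of spades, `"TH"` for the ten of hearts) and the names `HandRank`, `classify`, and `best_category` are assumptions made here. It returns only the category of a hand, does not break ties within a category, and treats A-2-3-4-5 as a straight, which is standard in poker but not stated in the list.

```python
from collections import Counter
from enum import IntEnum
from itertools import combinations


class HandRank(IntEnum):
    # Larger value means a stronger category, mirroring the list above.
    HIGH_CARD = 1
    PAIR = 2
    TWO_PAIR = 3
    THREE_OF_A_KIND = 4
    STRAIGHT = 5
    FLUSH = 6
    FULL_HOUSE = 7
    FOUR_OF_A_KIND = 8
    STRAIGHT_FLUSH = 9
    ROYAL_FLUSH = 10


RANKS = "23456789TJQKA"  # hypothetical encoding: index 0 is a two, index 12 an ace


def classify(hand):
    """Return the category of exactly five cards, e.g. ["AS", "KS", "QS", "JS", "TS"]."""
    values = sorted(RANKS.index(card[0]) for card in hand)
    counts = sorted(Counter(values).values(), reverse=True)
    is_flush = len({card[1] for card in hand}) == 1
    # Five distinct values that are consecutive, or the ace-low straight A-2-3-4-5.
    is_straight = counts == [1, 1, 1, 1, 1] and (
        values[4] - values[0] == 4 or values == [0, 1, 2, 3, 12]
    )
    if is_straight and is_flush:
        if values[0] == RANKS.index("T"):
            return HandRank.ROYAL_FLUSH
        return HandRank.STRAIGHT_FLUSH
    if counts[0] == 4:
        return HandRank.FOUR_OF_A_KIND
    if counts == [3, 2]:
        return HandRank.FULL_HOUSE
    if is_flush:
        return HandRank.FLUSH
    if is_straight:
        return HandRank.STRAIGHT
    if counts[0] == 3:
        return HandRank.THREE_OF_A_KIND
    if counts == [2, 2, 1]:
        return HandRank.TWO_PAIR
    if counts[0] == 2:
        return HandRank.PAIR
    return HandRank.HIGH_CARD


def best_category(seven_cards):
    """Best category over every five-card subset of a player's seven cards."""
    return max(classify(five) for five in combinations(seven_cards, 5))
```

For example, `best_category(["AS", "AD", "KC", "2H", "7S", "AH", "KD"])` picks the three aces and two kings and returns `HandRank.FULL_HOUSE`.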

Nash Equilibrium in Multiplayer Games

Many AI systems have reached superhuman performance in games like checkers, chess, two-player limit poker, Go, and two-player no-limit poker. A Nash equilibrium has been proven to exist in all finite games and in numerous infinite games, but the challenge is to find it. In two-player zero-sum games it is the best possible strategy and is unbeatable, since it is guaranteed not to lose in expectation regardless of what the opponent does.

To have a deeper understanding of Nash equilibria, we must first define some basic game theory concepts. The first is the strategic game. In game theory, a strategic game consists of a set of players and, for each player, a set of actions and preferences (or payoffs) over the set of action profiles (the combinations of actions, one per player). With these three elements, we can model a wide variety of situations. A Nash equilibrium is an action profile with the property that no player can do better by changing their action, given that all other players' actions remain the same. A common illustration of Nash equilibria is the Prisoner's Dilemma. There are also mixed strategies and mixed-strategy Nash equilibria. A mixed strategy is one in which, instead of choosing a single action, a player places a probability distribution over their set of actions and picks randomly according to it. With mixed strategies we must look at the expected payoff of each player given the other players' strategies. A mixed-strategy Nash equilibrium therefore involves at least one player playing a mixed strategy, and no player can increase their expected payoff by changing their strategy, given that all other players' strategies remain the same. A pure Nash equilibrium is one in which no one plays a mixed strategy. A single game can have multiple pure and mixed Nash equilibria. Nash equilibria are also purely theoretical and depend on players acting optimally and rationally, which is not always the case with humans, who can act very irrationally. Empirically, therefore, games can have very unexpected outcomes, and a player may be able to get a better payoff by moving away from a strictly theoretical strategy and taking advantage of an opponent's irrational behavior.
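The definition of a pure Nash equilibrium can be checked mechanically on the Prisoner's Dilemma. The sketch below is a minimal illustration: the payoff numbers (years in prison, written as negative payoffs) are assumed values chosen here, not taken from the paper, and the name `is_pure_nash` is hypothetical.

```python
from itertools import product

ACTIONS = ("silent", "testify")

# PAYOFFS[(a1, a2)] = (payoff to player 1, payoff to player 2).
# Assumed illustrative values: negative numbers are years in prison.
PAYOFFS = {
    ("silent", "silent"): (-1, -1),
    ("silent", "testify"): (-3, 0),
    ("testify", "silent"): (0, -3),
    ("testify", "testify"): (-2, -2),
}


def is_pure_nash(profile):
    """True if no single player gains by deviating while the other stays put."""
    for player in (0, 1):
        current = PAYOFFS[profile][player]
        for alternative in ACTIONS:
            deviated = list(profile)
            deviated[player] = alternative
            if PAYOFFS[tuple(deviated)][player] > current:
                return False
    return True


pure_equilibria = [p for p in product(ACTIONS, repeat=2) if is_pure_nash(p)]
print(pure_equilibria)
```

With these payoffs the only profile that survives the check is both players testifying, even though both would be better off if both stayed silent.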

The insufficiency with current AI systems is that they only try to achieve Nash equilibria instead of actively detecting and exploiting weaknesses in opponents. At a Nash equilibrium, there is no incentive for any player to change their strategy, so it is a stable state of the system. For example, consider the game of Rock-Paper-Scissors, where the Nash equilibrium is to pick each option at random with equal probability. However, this means that whatever the opponent does, the result is a tie in expectation. Therefore, in this example, our player cannot win in expectation. Now let's try to combine the Nash equilibrium strategy and opponent exploitation. We can initially use the Nash equilibrium strategy and then change our strategy over time to exploit the observed weaknesses of our opponent. For example, we switch to always playing Rock against an opponent who always plays Scissors. However, shifting away from the Nash equilibrium strategy opens up the possibility for our opponent to use our strategy against us. For example, they notice that we always play Rock and so they start to always play Paper.
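The Rock-Paper-Scissors argument can be made concrete by computing expected payoffs. This is a minimal sketch; the helper names `expected_payoff` and `always` are assumptions introduced for the illustration, and a win, tie, and loss are scored as +1, 0, and -1.

```python
ACTIONS = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def payoff(mine, theirs):
    """+1 if my action wins, -1 if it loses, 0 on a tie."""
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def expected_payoff(my_strategy, their_strategy):
    """Expected payoff when both players draw actions from their mixed strategies."""
    return sum(
        my_strategy[a] * their_strategy[b] * payoff(a, b)
        for a in ACTIONS
        for b in ACTIONS
    )


def always(action):
    """Pure strategy that plays one action with probability 1."""
    return {a: 1.0 if a == action else 0.0 for a in ACTIONS}


uniform = {a: 1 / 3 for a in ACTIONS}

tie_vs_scissors = expected_payoff(uniform, always("scissors"))        # equilibrium play only ties
exploit_scissors = expected_payoff(always("rock"), always("scissors"))  # exploiting wins every time
punished_by_paper = expected_payoff(always("rock"), always("paper"))    # but can itself be exploited
```

The uniform strategy earns zero in expectation against any opponent, always playing Rock earns +1 against an always-Scissors opponent, and the same exploitative strategy earns -1 once the opponent switches to Paper.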

Even approximating a Nash equilibrium is hard in theory, and in games with more than two players the best complete algorithms can only handle games with a handful of possible strategies per player. Existing techniques for exploiting an opponent require far too many samples and are not competitive outside of small games. Finding a Nash equilibrium in games with three or more players is a great challenge. Even if we could efficiently compute a Nash equilibrium in games with more than two players, it is still highly questionable whether playing that equilibrium strategy would be a good choice. Additionally, if each player independently computes their own Nash equilibrium, there may be infinitely many equilibria to choose from, and the combination of the players' individual choices might not be a Nash equilibrium at all.

Consider the Lemonade Stand example from Figure 1 below. We have 4 players, and the goal for each player is to find a spot on the ring that is as far away as possible from every other player. This way, each lemonade stand can cover as much selling region as possible and generate maximum revenue. In the left circle, we have three different Nash equilibria, distinguished by different colors, each of which would benefit everyone. The right circle is an illustration of what would happen if each player decided to calculate their own Nash equilibrium.

From the right circle in Figure 1, we can see that when each player calculates their own Nash equilibrium independently, the resulting joint strategy is unlikely to place the players equally spaced along the ring, and so it is unlikely to be a Nash equilibrium. This shows that attempting to find a Nash equilibrium is not the best strategy outside of two-player zero-sum games, and our goal should not be focused on finding a specific game-theoretic solution. Instead, we need to focus on observations and empirical results that consistently defeat human opponents.

Theoretical Analysis

Pluribus uses forms of abstraction to make computations scalable. To reduce the complexity caused by too many decision points, some actions are eliminated from consideration and similar decision points are grouped together and treated as identical. This process is called abstraction. Pluribus uses two kinds of abstraction: action abstraction and information abstraction. Action abstraction reduces the number of different actions the AI needs to consider. For instance, it does not consider all bet sizes (the exact number of bet sizes it considers varies between 1 and 14 depending on the situation). Information abstraction groups together decision points that reveal similar information, for instance similar combinations of the player’s cards and the revealed board cards. Information abstraction is only used to reason about situations on future betting rounds, never the current betting round.
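Both kinds of abstraction amount to mapping a large space onto a small one. The sketch below illustrates the idea only and does not reproduce Pluribus's actual abstraction: the pot fractions, the bucket count, the notion of a single hand-strength number in [0, 1], and the names `abstract_bet` and `bucket` are all assumptions made for this example.

```python
def abstract_bet(bet, pot, fractions=(0.5, 1.0, 2.0)):
    """Action abstraction: map any bet to the nearest allowed size.

    The allowed sizes are hypothetical fractions of the current pot.
    """
    allowed = [fraction * pot for fraction in fractions]
    return min(allowed, key=lambda size: abs(size - bet))


def bucket(hand_strength, n_buckets=10):
    """Information abstraction: group hands of similar estimated strength.

    `hand_strength` is an assumed estimate in [0, 1]; hands that land in the
    same bucket are treated as identical decision points.
    """
    return min(int(hand_strength * n_buckets), n_buckets - 1)


# A bet of 130 into a pot of 100 is treated as a pot-sized bet (100.0).
treated_as = abstract_bet(130, pot=100)

# Two slightly different hands fall into the same bucket and share a strategy.
same_bucket = bucket(0.71) == bucket(0.73)
```

The design trade-off is that coarser abstractions shrink the game tree further but discard more detail about the real situation.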

Pluribus uses a precomputed strategy, the “blueprint strategy”, which it improves by searching in real time for the situations it finds itself in during the course of the game. In the first betting round, where the number of decision points is small, Pluribus uses the initial blueprint strategy. The blueprint strategy is computed using the Monte Carlo Counterfactual Regret Minimization (MCCFR) algorithm. CFR is commonly used for AI in imperfect-information games; the AI is trained by repeatedly playing against copies of itself, without any data from human or prior AI play used as input. For ease of computation of CFR in this context, poker is represented as a game tree. A game tree is a tree structure where each node represents either a player’s decision, a chance event, or a terminal outcome, and edges represent actions taken.

At the start of each iteration, MCCFR simulates a hand of poker at random (a hand here being the cards held by each player at a given time) and designates one player as the traverser of the game tree. Once the hand is completed, the AI reviews each decision made by the traverser and investigates whether it was profitable. It compares the decision with the other actions available to the traverser at that point, and also with the hypothetical future decisions that would have followed those other actions. To evaluate a decision, counterfactual regret is used. This is the difference between what the traverser would have expected to receive for choosing an action and what the traverser actually expected to receive on the iteration. Regret is thus a numeric value: a positive regret indicates you regret your decision, a negative regret indicates you are happy with your decision, and zero regret indicates that you are indifferent.

The counterfactual regret for a decision is adjusted over the iterations as more scenarios or decision points are encountered. At the end of each iteration, the traverser’s strategy is updated so that actions with higher counterfactual regret are chosen with higher probability. CFR minimizes regret over many iterations, and in two-player zero-sum games the average strategy over all iterations converges to an approximate Nash equilibrium. CFR guarantees in all finite games that all counterfactual regrets grow sublinearly in the number of iterations. Pluribus uses Linear CFR in early iterations to reduce the influence of initial bad iterations, i.e., it assigns a weight of T to the regret contributions at iteration T. This causes the influence of the first iteration to decay at a rate of [math]\displaystyle{ \frac{1}{\sum_{t=1}^Tt} = \frac{2}{T(T+1)} }[/math], compared to a rate of [math]\displaystyle{ \frac{1}{T} }[/math] in the original CFR algorithm. This leads to the strategy improving more quickly in practice.
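The update rule and the effect of linear weighting can be seen in a toy setting. The sketch below is not MCCFR and has no game tree: it runs plain regret matching for a single player in Rock-Paper-Scissors against a fixed, scissors-heavy opponent (an assumed strategy chosen for the example), using exact fractions so the arithmetic can be checked by hand. The function names and the choice to apply the weight t to both the regrets and the average strategy are assumptions of this illustration.

```python
from fractions import Fraction

ACTIONS = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def payoff(mine, theirs):
    if mine == theirs:
        return 0
    return 1 if BEATS[mine] == theirs else -1


def regret_matching(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, Fraction(0)) for r in regrets]
    total = sum(positive)
    if total > 0:
        return [p / total for p in positive]
    return [Fraction(1, len(regrets))] * len(regrets)  # no regret yet: uniform


def train(opponent, iterations, linear):
    """Return the (weighted) average strategy after regret-matching updates."""
    # Expected payoff of each of our actions against the fixed opponent.
    values = [sum(p * payoff(a, b) for b, p in zip(ACTIONS, opponent)) for a in ACTIONS]
    regrets = [Fraction(0)] * len(ACTIONS)
    strategy_sum = [Fraction(0)] * len(ACTIONS)
    for t in range(1, iterations + 1):
        weight = t if linear else 1  # linear weighting: iteration t counts t times
        strategy = regret_matching(regrets)
        expected = sum(s * v for s, v in zip(strategy, values))
        for i in range(len(ACTIONS)):
            regrets[i] += weight * (values[i] - expected)
            strategy_sum[i] += weight * strategy[i]
    total = sum(strategy_sum)
    return [s / total for s in strategy_sum]


# Assumed opponent: rock 1/5, paper 1/5, scissors 3/5.
opponent = [Fraction(1, 5), Fraction(1, 5), Fraction(3, 5)]
T = 100
average_linear = train(opponent, T, linear=True)
average_plain = train(opponent, T, linear=False)
```

In this setting the first iteration plays uniformly and every later iteration plays Rock, so the only "bad" iteration is the first. Its leftover weight in the average strategy is proportional to 2/(T(T+1)) with linear weighting and to 1/T without it, matching the decay rates quoted above.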

An additional feature of Pluribus is that in subgames, instead of assuming that all players play according to a single strategy, Pluribus considers that each player may choose between k different strategies, specialized to each player, when a decision point is reached. This results in the searcher choosing a more balanced strategy. For instance, if a player never bluffed and bet only when holding the best possible hand, the opponents would learn that fact and always fold in that scenario, so a more balanced strategy sometimes bets with weaker hands as well. In summary, the blueprint strategy is produced offline for the entire game and is then gradually improved through real-time search while decisions are made during the game.

Experimental Results

Pluribus was tested against human players in two formats. The first format included 5 human players and one copy of Pluribus (5H+1AI). The 13 human participants were poker players who had each won more than $1M playing professionally, and they were provided with cash incentives to play their best. 10,000 hands of poker were played over 12 days in the 5H+1AI format. Players were anonymized with aliases that remained consistent throughout all their games. The aliases helped the players keep track of the tendencies and playing styles of each player over the 10,000 hands.

The second format included one human player and 5 copies of Pluribus (1H+5AI). Two more professional players split another 10,000 hands of poker by playing 5,000 hands each, and the same aliasing process was followed as in the first format. Performance was measured in milli big blinds per game (mbb/game), the standard measure in the AI field: the average number of thousandths of a big blind won per hand, where the big blind is the initial amount of money the second player has to put in the pot. Additionally, AIVAT was used as a variance reduction technique to control for luck in the games. One-tailed t-tests at the 95% confidence level were run to check whether Pluribus was profitable.

With AIVAT applied, the results were as follows:

| Format | Average mbb/game | Standard error (mbb/game) | P-value of being profitable |
|---|---|---|---|
| 5H+1AI | 48 | 25 | 0.028 |
| 1H+5AI | 32 | 15 | 0.014 |
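As a rough sanity check on the table, a one-tailed p-value can be approximated from the mean and standard error alone. The sketch below uses a normal approximation, which is an assumption made here: the study used t-tests on the underlying data, and the table values are rounded, so this will not reproduce the reported p-values exactly. The function name `one_tailed_p` is hypothetical.

```python
from statistics import NormalDist


def one_tailed_p(mean, standard_error):
    """Approximate P(observing a mean this large | true mean is 0), normal approximation."""
    z = mean / standard_error
    return 1.0 - NormalDist().cdf(z)


# Rounded values from the table above (mbb/game).
p_5h_1ai = one_tailed_p(48, 25)
p_1h_5ai = one_tailed_p(32, 15)

# Both approximations fall below 0.05, in line with the table's conclusion
# that Pluribus was profitable in both formats.
profitable_at_5_percent = p_5h_1ai < 0.05 and p_1h_5ai < 0.05
```

The approximation agrees with the table on which side of the 5% threshold each result falls, which is the claim the experiment is making.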

Optimal play in Pluribus looks different from some well-known poker conventions. “Limping” (calling the 'big blind' rather than folding or raising) is confirmed by Pluribus to be suboptimal: it initially experimented with limping but eliminated it from its strategy over the course of self-play. On the other hand, “donk betting” (starting a round by betting when one ended the previous round with a call), which is dismissed by human players, was adopted by Pluribus much more often than it is played by humans and was found to be profitable.

Discussion and Critiques

Pluribus' blueprint strategy and abstraction methods effectively reduce the computational power required. The blueprint was computed in 8 days, required less than 512 GB of memory, and cost about $144 to produce. This is in sharp contrast to all the other recent superhuman AI milestones for games. The researchers have effectively condensed the problem to fit current computational capabilities.

Pluribus shows that observational data and empirical results can be used to construct a superhuman AI without requiring theoretical guarantees. This can serve as a baseline for future AI inventions and help AI research. It would be interesting to apply Pluribus's non-theoretical approach to more real-life problems such as autonomous driving or stock market trading.

Extending this idea beyond two-player zero-sum games will have many applications in real life. For example, the emergence of strategies used by the superhuman AI that humans saw as "non-optimal" poses interesting questions about applying such an AI to fields such as business, economics and financial markets.

It should be noted that, in a Nash equilibrium, even a player who knows all possible outcomes would not benefit from changing strategies, which means that the player will still choose to keep his/her original strategy.

The summary for Superhuman AI for Multiplayer Poker is very well written, with a detailed explanation of the concept, steps, and results, combined with visual images. However, it seems that the experiment in the study is not well designed. For example, sample selection is not strict or well defined, which could introduce selection bias into the results and make them less generalizable.

Superhuman AI, while sounding superior, is actually not uncommon. There have been many endeavours on mastering poker such as the Recursive Belief-based Learning (ReBeL) by Facebook Research. They pursued a method of reinforcement learning on a partially observable Markov decision process which was inspired by the recent successes of AlphaZero. For Pluribus to demonstrate how effective it is compared to the state-of-the-art, it should run some experiments against ReBeL.

This is a very interesting topic, and this summary is clear enough for readers to understand. This approach may apply not only to poker but also to other areas, and it would be worth considering more such applications. There are many famous AI systems that are really changing our lives. For example, AlphaGo and AlphaStar, which were developed by Google DeepMind, defeated professional players. Discussing this further would be interesting.

One of the biggest issues when applying AI to games against humans (when not all information is known, i.e., opponents' cards) is that it is generally assumed that the human players are rational and follow a certain set of "rules" based on the information that they know. This could be an issue given that Pluribus trained by playing against itself instead of humans. While the results clearly show that Pluribus has found some kind of 'optimal' method to play, it would be interesting to see if it could actually maximize its profits by learning the trends of its human opponents over time (learning on the fly with information gained each hand while it's playing). In addition, the paper could discuss how human behavior changes when people play against a superhuman AI. Playing card games requires various strategies, and different people can take different actions in the same game and in the same situation.

One interesting software called Piosolver leverages a similar tree-based algorithm presented in the paper to recommend the move that is deemed game theory optimal (GTO). In the poker world, GTO is a play-style that is based on mathematics and is considered a "defensive" strategy. Following the rock, paper, scissors analogy from the paper, a GTO play-style is synonymous with choosing randomly between the three options, whereas an exploitative strategy involves reading a human player's tendencies and adjusting the strategy accordingly. Piosolver is used by many professional poker players to enhance their game and gain intuition on what the best move is in certain situations.

Another way to train the proposed model can be a poker game with two or more AI players. That method was used by AlphaGo to train a better model.

Games with various AI players would be an interesting topic, and by comparing different AIs, their shortcomings could be observed and improved. More discussion on this would be of interest.

Similar to Pluribus, another paper discussed a different AI program, called DeepStack, which has also defeated professional poker players at a 2-player Texas hold'em variant. However, instead of finding a Nash equilibrium, DeepStack uses recursive reasoning, decomposition, and a form of intuition that is automatically learned from self-play.

AI can calculate the exact odds of a specific card or set of cards dropped on the flop. The difficulty is that in poker, the player cannot see the opponent's hand. This kind of hidden information also brings other complications (such as fraud), which is more challenging for AI at the game. At the same time, using AI to train each other is also an interesting topic.

Inspired by the critique about fraud, could this AI be used to detect cheating, such as illicitly obtaining information about an opponent's hand, in games between human players? For instance, by comparing the decisions of the humans with those of the AI given the same information. Or are the strategies of human players and this AI too different to draw conclusions about suspicious human plays?

I think AI can possibly calculate the probability of winning each round based on the hand it gets, and modify that probability while replicating the experiments. This may help build the model more accurately and efficiently.

The summary is overall well-defined, but the authors should explain the algorithm in more detail, specifying how the model performs at each step to reach the optimal solution. Also, there is an assumption that must hold before applying this model: humans need to follow the "rules". If the model plays against a human who does not follow the "rules", how will it learn from that? This is a question to contemplate.

This is an interesting topic discussing AI players in games. It would be helpful if the summary could provide a brief comparison of the advantages and shortcomings of today's AI players.

It is interesting to see how AI uses strategy when playing against people in Texas hold'em. However, this game also relies on people's body language and the way they talk. This paper focuses more on the theoretical side, which may not fully hold in real life.

There are still some aspects of poker strategy that aren't explicitly present in this type of AI system. However, this does appear to show something that is far more advanced than most contemporary AI in most online poker systems.

This is an interesting topic. It would be interesting to know how this can be applied to applications other than games like poker.

It would be better if the introduction could be trimmed. It would be interesting to know how this superhuman AI deals with simple non-zero-sum games and how the algorithm would adjust.

This is a very interesting topic, and as mentioned above, this topic can expand far beyond the topic of multiplayer poker. In the end, the paper mentions that some common strategies by human players are dismissed and some strategies that are considered weak are being adopted by the AI. It would be interesting to see how one can embed this into the process of human decision making in the future, so that we can re-evaluate our decisions with the assistance from trained AI agents.

This is an interesting topic that can be applied to areas outside of just playing games, such as the field of economics.

Conclusion

As Pluribus’s strategy was not developed with any human data and was trained only by self-play, it offers an unbiased and different perspective on how optimal play can be attained. Developing a superhuman AI for multiplayer poker is a widely recognized milestone in this area and the major remaining milestone in computer poker. Pluribus’s success shows that despite the lack of known strong theoretical guarantees on performance in multiplayer games, there are large-scale, complex multiplayer imperfect information settings in which a carefully constructed self-play-with-search algorithm can produce superhuman strategies.

References

Noam Brown and Tuomas Sandholm (July 11, 2019). Superhuman AI for multiplayer poker. Science 365.

Osborne, Martin J.; Rubinstein, Ariel (July 12, 1994). A Course in Game Theory. Cambridge, MA: MIT. p. 14.

Justin Sermeno. (November 17, 2020). Vanilla Counterfactual Regret Minimization for Engineers. https://justinsermeno.com/posts/cfr/#:~:text=Counterfactual%20regret%20minimization%20%28CFR%29%20is%20an%20algorithm%20that,decision.%20It%20can%20be%20positive%2C%20negative%2C%20or%20zero

Brown, N., Bakhtin, A., Lerer, A., & Gong, Q. (2020). Combining deep reinforcement learning and search for imperfect-information games. Advances in Neural Information Processing Systems, 33.