Loss Function Search for Face Recognition


== Introduction ==

Face recognition is a technology that maps a face to a specific identity. The field involves two tasks: 1. identifying and classifying a face as a certain identity, and 2. verifying whether two face images belong to the same identity. Loss functions play an important role in evaluating how well the predictions model the given data. In face recognition, they are used to train convolutional neural networks (CNNs) to learn discriminative features. A discriminative feature is one that successfully separates the labelled data, and is typically a result of feature engineering/selection. However, the traditional softmax loss lacks the power of feature discrimination. To address this problem, center loss was developed to learn a center for each identity and enhance intra-class compactness. Building on this, the paper uses a loss function with a scale parameter that produces higher gradients for well-separated samples, which can reduce the softmax probability.

Margin-based softmax loss functions (with angular, additive, or additive angular margins) are important for learning discriminative features in face recognition. Previously developed hand-crafted methods, such as A-Softmax, V-Softmax, AM-Softmax, and Arc-Softmax, require considerable effort to design. Li et al. proposed AM-LFS, an AutoML method for loss function search that approaches the problem from a hyper-parameter optimization perspective [2]. It uses reinforcement learning to search for loss functions during the training process, but its drawback is a complex and unstable search space.

'''Softmax'''

Softmax probability is the probability assigned to each class: the softmax function turns the network's outputs into a vector of values between 0 and 1 that sum to 1. Cross-entropy loss is the negative sum of the target values multiplied by the log of the predicted probabilities. Combining softmax probability with cross-entropy loss at the last fully connected layer of the CNN yields the softmax loss function:

<center><math>L_1=-\log\frac{e^{w^T_yx}}{e^{w^T_yx} + \sum_{k\neq y}^K{e^{w^T_kx}}}</math> [1] </center>
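As a minimal NumPy sketch of <math>L_1</math> for a single sample, assume the last-layer weights are stored as a <code>(K, d)</code> array whose k-th row is <math>w_k</math>. The function name <code>softmax_loss</code>, this layout, and the toy numbers are illustrative assumptions, not taken from the paper:

```python
import numpy as np

def softmax_loss(W, x, y):
    """Softmax loss L_1 for one sample.

    W: (K, d) array whose k-th row is the class weight w_k (illustrative layout).
    x: (d,) feature vector from the CNN.
    y: index of the ground-truth class.
    """
    logits = W @ x                    # w_k^T x for every class k
    logits = logits - logits.max()    # shift for numerical stability; the loss is unchanged
    return -(logits[y] - np.log(np.exp(logits).sum()))

W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
x = np.array([2.0, 0.5])
print(softmax_loss(W, x, y=0), softmax_loss(W, x, y=2))
```

The loss is small when the target class has the largest logit (<code>y=0</code> here) and large when it has the smallest (<code>y=2</code>).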

Specifically for face recognition, <math>L_1</math> is modified so that the weights <math>w_k</math> and the feature <math>x</math> are normalized, which turns <math>w^T_kx</math> into a cosine similarity, and a scale parameter <math>s</math> is used as the magnitude of the logits:

<center><math>L_2=-\log\frac{e^{s \cos{(\theta_{{w_y},x})}}}{e^{s \cos{(\theta_{{w_y},x})}} + \sum_{k\neq y}^K{e^{s \cos{(\theta_{{w_k},x})}}}}</math> [1] </center>

where <math>\cos{(\theta_{{w_k},x})} = w^T_kx</math> is the cosine similarity and <math>\theta_{{w_k},x}</math> is the angle between <math>w_k</math> and <math>x</math>. Features learned with this softmax loss tend to be separable, as desired, but not necessarily discriminative enough, which motivates adding a margin.
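A minimal NumPy sketch of the normalized loss <math>L_2</math> for one sample follows. The function name <code>normalized_softmax_loss</code>, the <code>(K, d)</code> weight layout, and the default <math>s = 30</math> are illustrative assumptions, not values taken from this paper:

```python
import numpy as np

def normalized_softmax_loss(W, x, y, s=30.0):
    """Normalized softmax loss L_2 for one sample.

    Both the class weights w_k (rows of W) and the feature x are L2-normalized,
    so each logit is s * cos(theta_{w_k, x}); s = 30 is an illustrative default.
    """
    W_unit = W / np.linalg.norm(W, axis=1, keepdims=True)
    x_unit = x / np.linalg.norm(x)
    cos = W_unit @ x_unit             # cos(theta_{w_k, x}) = w_k^T x after normalization
    logits = s * cos
    logits = logits - logits.max()    # numerical stability
    return -(logits[y] - np.log(np.exp(logits).sum()))

W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
x = np.array([2.0, 0.5])
print(normalized_softmax_loss(W, x, 0), normalized_softmax_loss(W, 10 * x, 0))
```

Both printed values agree up to rounding: only the angle between <math>w_k</math> and <math>x</math> enters the logits, because the feature magnitude has been replaced by <math>s</math>.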

'''Margin-based Softmax'''

Margin-based softmax is crucial in face recognition because it enhances feature discrimination. Its different variants are all built on the same structure as the equation above.

The margin-based softmax loss is:

<center><math>L_3=-\log\frac{e^{s f{(m,\theta_{{w_y},x})}}}{e^{s f{(m,\theta_{{w_y},x})}} + \sum_{k\neq y}^K{e^{s \cos{(\theta_{{w_k},x})}}}} </math> </center>

Here, <math>f{(m,\theta_{{w_y},x})} \leq \cos (\theta_{w_y,x})</math> is a carefully chosen margin function.

Common choices of the margin function include:

'''A-Softmax Loss: ''' <math>f{(m_1,\theta_{{w_y},x})} = \cos (m_1\theta_{w_y,x})</math>, where <math>m_1 \geq 1</math> is an integer.

'''Arc-Softmax Loss: ''' <math>f{(m_2,\theta_{{w_y},x})} = \cos (\theta_{w_y,x} + m_2)</math>, where <math>m_2 > 0</math>.

'''AM-Softmax Loss: ''' <math>f{(m_3,\theta_{{w_y},x})} = \cos (\theta_{w_y,x}) - m_3</math>, where <math>m_3 > 0</math>.

These margins can also be combined into <math>f{(m,\theta_{{w_y},x})} = \cos (m_1\theta_{w_y,x} + m_2) - m_3</math>, where <math>m_1 \geq 1</math> is an integer and <math>m_2, m_3 > 0</math>.
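The margin functions listed above can be compared with a short NumPy sketch of <math>L_3</math>. It implements the multiplicative angular margin <math>\cos(m_1\theta)</math>, the additive angular margin <math>\cos(\theta + m_2)</math>, and the additive cosine margin <math>\cos\theta - m_3</math> (the special case <math>m_1 = 1, m_2 = 0</math> of the combined form). The margin values <math>m_1 = 4</math>, <math>m_2 = 0.5</math>, <math>m_3 = 0.35</math>, the scale <math>s = 30</math>, and all function names are illustrative assumptions, not taken from this paper. Note that the plain <math>\cos(m_1\theta)</math> only satisfies <math>f \leq \cos\theta</math> for <math>\theta \leq \pi/m_1</math>:

```python
import numpy as np

def a_softmax(theta, m1=4):
    """A-Softmax margin: f = cos(m1 * theta); only <= cos(theta) for theta <= pi / m1."""
    return np.cos(m1 * theta)

def arc_softmax(theta, m2=0.5):
    """Arc-Softmax margin: f = cos(theta + m2), an additive angular margin."""
    return np.cos(theta + m2)

def am_softmax(theta, m3=0.35):
    """AM-Softmax-style margin: f = cos(theta) - m3, an additive cosine margin."""
    return np.cos(theta) - m3

def margin_softmax_loss(cos, y, f, s=30.0):
    """Margin-based softmax loss L_3 from the cosines cos(theta_{w_k, x}) of all K classes.

    Only the target logit becomes s * f(m, theta_y); the other logits stay s * cos(theta_k).
    """
    theta_y = np.arccos(np.clip(cos[y], -1.0, 1.0))
    logits = s * np.asarray(cos, dtype=float)    # new array, so cos is not modified
    logits[y] = s * f(theta_y)
    logits = logits - logits.max()               # numerical stability
    return -(logits[y] - np.log(np.exp(logits).sum()))

cos = np.array([0.8, 0.1, -0.3])                 # target class y = 0
print("no margin", margin_softmax_loss(cos, 0, np.cos))
for name, f in [("A-Softmax", a_softmax), ("Arc-Softmax", arc_softmax), ("AM-Softmax", am_softmax)]:
    print(name, margin_softmax_loss(cos, 0, f))
```

Each margin lowers the target logit, so for the same cosines every margin-based loss is larger than the no-margin loss; to drive it down, training has to shrink the angle <math>\theta_{w_y,x}</math> further.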

In this paper, the authors first identify reducing the softmax probability as a key contribution to feature discrimination, and then design a new search space together with two search strategies (random and reward-guided). They evaluate the resulting Random-Softmax and Search-Softmax approaches by comparing them against other face recognition algorithms on nine popular face recognition benchmarks.

== Motivation ==

Previous face recognition algorithms frequently rely on CNNs trained with metric learning loss functions such as contrastive loss or triplet loss. Without careful sample-mining strategies, the computational cost of these functions is high. This drawback motivated redesigning the classical softmax loss, which lacks discriminative power. Multiple softmax loss functions have since been developed, including margin-based formulations, which often require fine-tuning of parameters and are susceptible to instability. As a result, researchers must put considerable effort into designing a method within a large design space. AM-LFS takes an optimization approach to selecting hyperparameters for margin-based softmax functions, but the drawbacks mentioned above stem from its lack of direction in designing the search space.

To solve the issues associated with hand-tuned softmax loss functions and AM-LFS, the authors aim to reduce the softmax probability in order to improve feature discrimination with margin-based softmax loss functions. They determined that the best option was a margin-based softmax loss that requires only one parameter, combined with an improved search space explored by a reward-guided method.

== Problem Formulation ==


<center><math>p_m=\frac{1}{ap+(1-a)}\cdot p</math></center>

<center> where <math>a=1-e^{s\,(\cos{(\theta_{w_y,x})}-f{(m,\theta_{w_y,x})})}</math> and <math>a\leq 0</math></center>

<math>a</math> is a modulating factor and <math>h{(a,p)}=\frac{1}{ap+(1-a)} \in (0,1]</math> is a modulating function [1]. Because <math>f \leq \cos</math> implies <math>a \leq 0</math>, every margin-based softmax loss, whatever its margin function <math>f</math>, reduces the softmax probability; the authors identify this reduction as the key to enhancing feature discrimination.

Compared with AM-LFS, this method involves only one parameter (<math>a</math>), which is also constrained, whereas AM-LFS has 2M unconstrained parameters that specify the piecewise linear functions it requires. In addition, the piecewise linear functions of AM-LFS (<math>p_m={a_i}p+b_i</math>) may not be discriminative because <math>p_m</math> could be larger than the softmax probability <math>p</math>, whereas here <math>p_m=h(a, p)\cdot p \leq p</math> always holds.
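The identity <math>p_m = h(a,p)\cdot p</math> can be checked numerically. The sketch below assumes that <math>p</math> is the target-class probability of the normalized softmax (as in <math>L_2</math>) and <math>p_m</math> the target-class probability of the margin-based softmax (as in <math>L_3</math>); the cosines, the scale <math>s = 30</math>, the margin <math>f = \cos\theta - 0.35</math>, and the helper names are hypothetical choices for illustration:

```python
import numpy as np

def target_prob(target_logit, other_logits):
    """Softmax probability of the target class given its logit and the K-1 other logits."""
    logits = np.concatenate(([target_logit], other_logits))
    logits = logits - logits.max()
    e = np.exp(logits)
    return e[0] / e.sum()

def modulating_factor(s, cos_y, f_y):
    """a = 1 - exp(s * (cos(theta_y) - f(m, theta_y))); a <= 0 whenever f <= cos."""
    return 1.0 - np.exp(s * (cos_y - f_y))

def h(a, p):
    """Modulating function h(a, p) = 1 / (a p + (1 - a)), which lies in (0, 1] for a <= 0."""
    return 1.0 / (a * p + (1.0 - a))

s = 30.0
cos_y, cos_others = 0.7, np.array([0.2, -0.1, 0.4])
f_y = cos_y - 0.35                                 # additive cosine margin as an example f

p = target_prob(s * cos_y, s * cos_others)         # softmax probability without margin
p_m = target_prob(s * f_y, s * cos_others)         # margin-based softmax probability
a = modulating_factor(s, cos_y, f_y)
print(p, p_m, h(a, p) * p)
```

The last two printed values coincide and are smaller than <math>p</math>: since <math>a \leq 0</math>, the denominator <math>ap + (1-a) = 1 - a(1-p)</math> is at least 1, so <math>h(a,p) \leq 1</math>.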

=== Random Search ===


=== Reward-Guided Search ===

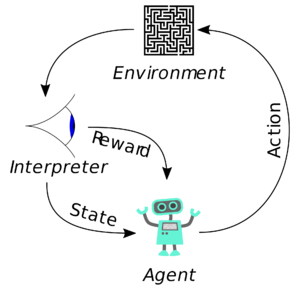

Random search provides no guidance during training, so the authors use reinforcement learning instead. Unlike supervised learning, reinforcement learning (RL) is a behavioral learning model: it does not need labelled input/output pairs, and sub-optimal actions do not need to be explicitly corrected. Instead, the algorithm learns from feedback to achieve the best outcome: an agent guides the process by taking actions that maximize the cumulative reward [3]. The RL process is shown in Figure 1. The cumulative reward is defined as:

<center><math>G_t \overset{\Delta}{=} R_t+R_{t+1}+R_{t+2}+\cdots+R_T</math></center>


where <math>G_t</math> is the cumulative reward, <math>R_t</math> is the immediate reward at time <math>t</math>, and <math>T</math> is the final time step of the episode, so <math>R_T</math> is the last reward.

<math>G_t</math> is the sum of immediate rewards from an arbitrary time <math>t</math> until the end of the episode. It is a random variable because it depends on the immediate rewards, which in turn depend on the agent's actions and the environment's reactions to them.
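The definition of <math>G_t</math> can be illustrated with a short NumPy sketch that computes the undiscounted return for every time step of one episode; the function name <code>returns</code> and the reward values are hypothetical:

```python
import numpy as np

def returns(rewards):
    """Undiscounted return G_t = R_t + R_{t+1} + ... + R_T for every time step t."""
    rewards = np.asarray(rewards, dtype=float)
    # Reverse cumulative sum: G_T = R_T and G_t = R_t + G_{t+1}
    return np.cumsum(rewards[::-1])[::-1]

print(returns([1.0, 0.0, 2.0, 3.0]))   # -> [6. 5. 5. 3.]
```

Because each <math>R_t</math> depends on the agent's actions and the environment's reactions, rerunning an episode generally yields a different sequence and thus different returns.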

<center>[[Image:G25_Figure1.png|300px |link=https://en.wikipedia.org/wiki/Reinforcement_learning#/media/File:Reinforcement_learning_diagram.svg |alt=Alt text|Title text]]</center>


The reward function guides the agent in a certain direction. As mentioned above, the system learns from feedback to achieve the best outcome: when a task is completed, the agent receives a reward and adjusts its behaviour based on that feedback [5].

In this paper, RL is used to learn the distribution of the hyper-parameter <math>a</math> in the softmax loss through the reward function. At each epoch, <math>B</math> hyper-parameters <math>\{a_1, a_2, ..., a_B\}</math> are sampled as <math>a \sim \mathcal{N}(\mu, \sigma)</math>, which yields <math>B</math> candidate models with rewards <math>R(a_i), i \in [1, B]</math>. The mean <math>\mu</math> is then updated after each epoch using these rewards:

<center><math>\mu_{e+1}=\mu_e + \eta \frac{1}{B} \sum_{i=1}^B R{(a_i)}\,\nabla_\mu \log{(g(a_i;\mu,\sigma))}</math></center>

where <math>g(a_i; \mu, \sigma)</math> is the PDF of the Gaussian distribution <math>\mathcal{N}(\mu, \sigma)</math> and <math>\eta</math> is the learning rate. In this way, the distribution of <math>a</math> is updated, and the best model among the <math>B</math> candidates is selected for the next epoch.
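This update is a REINFORCE (score-function) gradient step on <math>\mu</math>. For a Gaussian with standard deviation <math>\sigma</math>, the gradient of <math>\log g(a; \mu, \sigma)</math> with respect to <math>\mu</math> is <math>(a - \mu)/\sigma^2</math>. The toy sketch below is not the authors' implementation: <code>toy_reward</code> is a hypothetical stand-in for the validation reward <math>R(a_i)</math> that peaks at <math>a = -0.5</math>, <math>\sigma</math> is held fixed and treated as a standard deviation, and <math>B = 8</math>, <math>\eta = 0.05</math> and the clipping of sampled values to <math>a \leq 0</math> are illustrative assumptions:

```python
import numpy as np

def grad_mu_log_gaussian(a, mu, sigma):
    """Gradient of log g(a; mu, sigma) with respect to mu, for a Gaussian with std sigma."""
    return (a - mu) / sigma**2

def toy_reward(a, best_a=-0.5):
    """Hypothetical stand-in for the validation reward R(a); it peaks at a = best_a."""
    return -(a - best_a) ** 2

def reward_guided_search(mu=0.0, sigma=0.2, B=8, eta=0.05, epochs=300, seed=0):
    rng = np.random.default_rng(seed)
    for _ in range(epochs):
        a = rng.normal(mu, sigma, size=B)        # B candidates a_i ~ N(mu, sigma)
        R = toy_reward(np.minimum(a, 0.0))       # score candidates with a clipped to a <= 0
        # mu_{e+1} = mu_e + eta * (1/B) * sum_i R(a_i) * grad_mu log g(a_i; mu_e, sigma)
        mu = mu + eta * np.mean(R * grad_mu_log_gaussian(a, mu, sigma))
    return mu

print(reward_guided_search())
```

With these settings <math>\mu</math> drifts from 0 toward the reward peak near <math>-0.5</math>. No baseline is subtracted from the rewards, so the gradient estimate is unbiased but noisy, which is why a small learning rate is used here.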

=== Optimization ===

Calculating the reward involves a standard bi-level optimization problem: a hierarchy of two optimization tasks, an upper-level (leader) problem and a lower-level (follower) problem. The lower level trains the network weights <math>w</math> by minimizing the training loss for a given hyper-parameter <math>a</math>, while the upper level chooses the hyper-parameters ({<math>a_1,a_2,…,a_B</math>}) to maximize the reward:

<center><math>\max_a R(a)=r(M_{w^*(a)},S_v)</math></center>

where <math>M_{w^*(a)}</math> is the model with weights <math>w^*(a)</math> obtained by training with hyper-parameter <math>a</math>, <math>S_v</math> is the validation set, and <math>r</math> measures the model's performance on it.
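To make the two levels concrete, here is a toy bi-level search in NumPy. It deliberately swaps in ridge regression for the face recognition CNN and a regularization strength <code>lam</code> for the hyper-parameter <math>a</math>, so that the lower-level minimization has a closed form; negative validation error stands in for the reward <math>r</math>, and the data, candidate values, and function names are all hypothetical:

```python
import numpy as np

def lower_level(X_train, y_train, lam):
    """Lower level: w*(lam) = argmin_w ||X w - y||^2 + lam * ||w||^2 (closed-form ridge regression)."""
    d = X_train.shape[1]
    return np.linalg.solve(X_train.T @ X_train + lam * np.eye(d), X_train.T @ y_train)

def upper_level_reward(w, X_val, y_val):
    """Upper level: reward r(M_w, S_v), here the negative mean squared error on the validation set."""
    return -np.mean((X_val @ w - y_val) ** 2)

rng = np.random.default_rng(0)
w_true = rng.normal(size=5)
X_train = rng.normal(size=(20, 5))
y_train = X_train @ w_true + rng.normal(size=20)
X_val = rng.normal(size=(200, 5))
y_val = X_val @ w_true + rng.normal(size=200)

# Train each candidate at the lower level, score it at the upper level,
# and keep the hyper-parameter with the highest validation reward.
candidates = [0.01, 0.1, 1.0, 10.0, 100.0]
rewards = {lam: upper_level_reward(lower_level(X_train, y_train, lam), X_val, y_val)
           for lam in candidates}
best_lam = max(rewards, key=rewards.get)
print(best_lam, rewards[best_lam])
```

In the paper the lower level is full CNN training, so it cannot be solved in closed form; that cost is why the reward-guided search samples only <math>B</math> candidates per epoch instead of enumerating a grid.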


== Results and Discussion ==

=== Data Preprocessing ===

The training datasets consisted of cleaned versions of CASIA-WebFace and MS-Celeb-1M-v1c, which removes the impact of noisy labels in the original sets.

Furthermore, face recognition should be evaluated in an open-set setting, meaning there must be no overlapping identities between the training and testing sets. As a result, a total of 15,414 overlapping identities were removed, giving the refined training sets CASIA-WebFace-R and MS-Celeb-1M-v1c-R. For a fair comparison, all summarized results are based on these refined datasets.

=== Results on LFW, SLLFW, CALFW, CPLFW, AgeDB, CFP ===

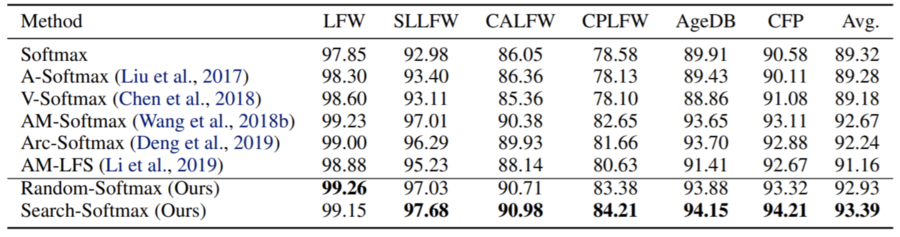

On LFW, there is no noticeable difference between the algorithms proposed in this paper and the other algorithms; AM-Softmax scored higher than Search-Softmax, while Random-Softmax achieved the highest result, by 0.03%.

Random-Softmax outperforms the baseline softmax and is comparable to most margin-based softmax losses. Search-Softmax further boosts performance and beats most methods; specifically, when trained on CASIA-WebFace-R, it achieves a 0.72% average improvement over AM-Softmax. The authors attribute this to their optimization strategy, which helps boost discrimination power, and to the fact that candidates sampled from the proposed search space approximate the margin-based loss functions well. The improvements on these test sets are small because the sets are relatively simple and the performance of all methods on them is near saturation, so the methods need further testing on more complicated protocols. The following table summarizes the performance of each model.

<center>Table 1. Verification performance (%) of different methods on the test sets LFW, SLLFW, CALFW, CPLFW, AgeDB and CFP. The training set is '''CASIA-WebFace-R''' [1].</center>


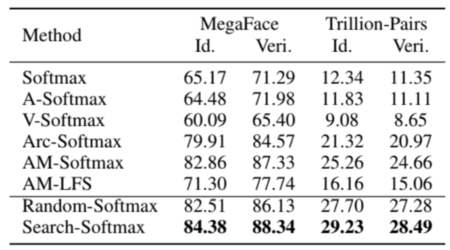

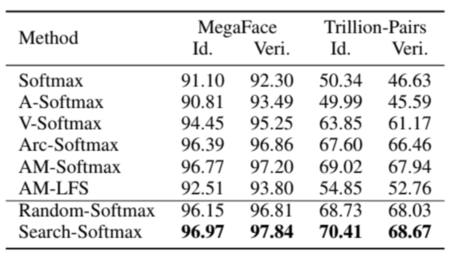

=== Results on MegaFace and Trillion-Pairs ===

The different loss functions are then tested with more complicated protocols. Tables 4 and 5 report the identification (Id.) Rank-1 accuracy and the verification (Veri.) true positive rate (TPR) at a low false acceptance rate (FAR) of <math>10^{-3}</math> on MegaFace, as well as the identification TPR@FAR = <math>10^{-6}</math> and the verification TPR@FAR = <math>10^{-9}</math> on Trillion-Pairs.

On the test sets MegaFace and Trillion-Pairs, Search-Softmax achieves the best performance of all the alternative methods. On MegaFace, Search-Softmax beats the best competitor, AM-Softmax, by a large margin. It also outperforms AM-LFS, which the authors attribute to the newly designed search space.

<center>Table 4. Performance (%) of different loss functions on the test sets MegaFace and Trillion-Pairs. The training set is '''CASIA-WebFace-R''' [1].</center>


<center>[[Image:G25_Table5.png|450px |alt=Alt text|Title text]]</center>

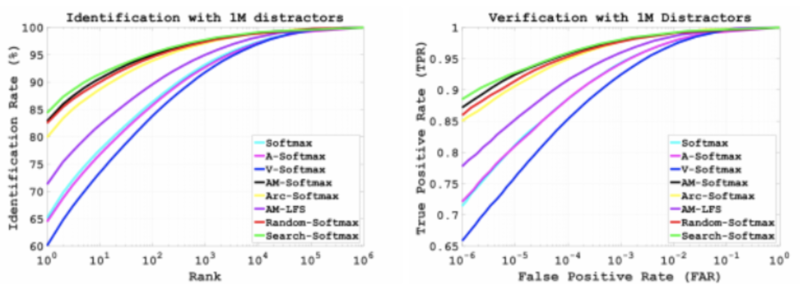

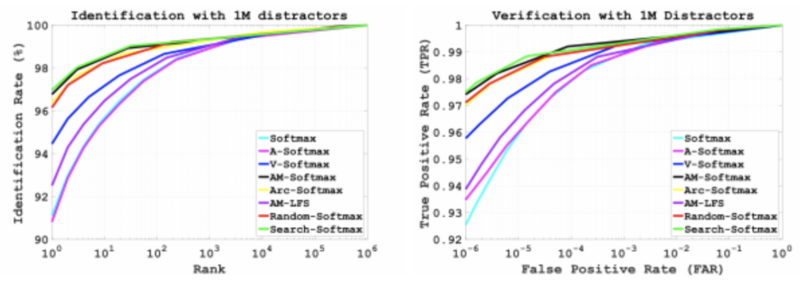

The CMC and ROC curves in Figure 2 show similar trends for other measures. Trillion-Pairs shows a similar trend: Search-Softmax loss is superior, with 4% improvements when trained on CASIA-WebFace-R and 1% improvements when trained on MS-Celeb-1M-v1c-R, for both identification and verification. Based on these experiments, Search-Softmax loss performs well, especially at low false positive rates, and shows strong generalization ability for face recognition.

<center>[[Image:G25_Figure2_left.png|800px |alt=Alt text|Title text]] [[Image:G25_Figure2_right.png|800px |alt=Alt text|Title text]]</center>

<center>Figure 2. From left to right: CMC curves and ROC curves on MegaFace Set with training set CASIA-WebFace-R, CMC curves and ROC curves on MegaFace Set with training set MS-Celeb-1M-v1c-R [1].</center>

== Conclusion ==

The paper argues that reducing the softmax probability is crucial for enhancing feature discrimination in face recognition. To achieve this goal, the authors design a unified formulation for margin-based softmax losses and define a new search space that ensures feature discrimination. They develop two search methods, one random and one reward-guided, and validate them as effective against six other methods on nine different test sets. Although both methods are generally more accurate than previous methods, there is very little difference between the two; Search-Softmax performs slightly better than Random-Softmax most of the time.

== Critiques ==


* Some of the test sets used to evaluate Search-Softmax and Random-Softmax are simple, and other methods already saturate on them, so the proposed methods show few advantages there because all methods produce very similar results. More complicated datasets need to be tested to prove the method's reliability.

* Another paper, Large-Margin Softmax Loss for Convolutional Neural Networks [https://arxiv.org/pdf/1612.02295.pdf], provides a more detailed explanation of how to reduce the margin-based softmax loss.

* The reported test accuracy is questionable, as the authors trained only on the cleaned versions of CASIA-WebFace and MS-Celeb-1M-v1c instead of the original training sets with noisy labels.

* A similar [https://arxiv.org/pdf/1905.09773.pdf?utm_source=thenewstack&utm_medium=website&utm_campaign=platform paper] by Tae-Hyun Oh et al. also discusses an optimal loss function for face recognition. However, because that paper works on face recognition from voice audio, its loss function is slightly different from the ones discussed in this paper.

* This model has many applications, such as helping police identify disguised prisoners. However, good data preprocessing is needed; otherwise the predictions may be poor. The authors do not describe the data preprocessing, which is a key part of this model. It might be done during feature extraction or feature selection, but it is crucial to state how it was done.

* It would be better to know what kinds of noise were removed in the cleaned version. Also, simply removing the overlapping data is wasteful; it would be better to put each overlapping identity into either the training set or the testing set.

* The paper indicates that the new search method and loss function yield more effective face recognition results than six other methods. However, it does not mention any increase or decrease in computational efficiency, which matters because there is very little difference between the methods and real-time evaluation is often required in face recognition applications.

* Some loss functions receive more than two inputs. For example, the ''triplet loss'' function, developed by Google, takes three inputs: a positive input, a negative input and an anchor input. This makes sense for face recognition, because we want the model to learn not only what it is supposed to predict but also what it is not supposed to predict. Typically, triplet loss handles false positives much better. This paper could extend its scope to such loss functions that take more than two inputs.

* It would be good to also know the training time of the method, specifically of the "Reward-Guided Search", which uses RL. Also, the authors mention some data preprocessing; was the same preprocessing also applied to the methods they compared against?

* The sections on data processing and results could be improved. Regarding the datasets, it is unclear why they are divided in the current fashion. "CASIA-WebFace and MS-Celeb-1M-v1c" are used as training datasets, but the comparison of algorithms is divided into three groups: MegaFace and Trillion-Pairs, RFW, and a group of other datasets. In general, when comparing algorithms, we want a holistic view of how each algorithm performs, so dividing the results into three sections raises some concerns, and more explanation could be provided. It also seems that Random-Softmax and Search-Softmax outperform all other algorithms across all datasets, so it would make even more sense to have one big table including all the results. Regarding data preprocessing, more information about which noisy data were removed would be helpful.

* Despite the thorough comparison of each method against the proposed method, the paper does not explain why the proposed method was better or worse in each case. The explanation need not be mathematical; an intuitive one would show how the results can be replicated and whether they require certain conditions.

* Although there is a graph of the training loss of Random-Softmax and Search-Softmax against the number of epochs, from which the number of epochs used in later graphs may be deduced, one of the main claimed features is that "Meanwhile, our optimization strategy enables that the dynamic loss can guide the model training of different epochs, which helps further boost the discrimination power." It is therefore imperative that the results be comparable on the same scale (for example, train for 20 epochs and then take the average of the losses).

* Does the paper address why the average model performs worse on African faces? Could it be due to a lack of data points?

* The results summary is overwhelming with numbers, and the presentation of the results is lacking; it would be great if the results were explained. The introduction of the model and its components is also brief and could be expanded.

* It would be better if the paper contained some face recognition visualizations, e.g., actual face recognition examples showing the improvement.

* The introduction of the data and the analysis of data processing are important because there might be some limitations. It would also be better to give a theoretical analysis of the effects of reducing the softmax probability and of the number of sampled models, explaining how the parameter updates lead to better performance.

* It would be better to include time performance in the evaluation section.

* The paper is missing details on datasets. It would be better to know if the datasets were balanced or unbalanced and how this would affect the accuracy. Also, computational comparisons between the new loss function and traditional methods would be interesting to know.

* The paper included a dataset that measures racial bias; however, it is widely known that the majority of face recognition models are themselves trained on biased and imbalanced datasets. For example, an AI system may be biased toward classifying a black person as a prisoner because the prisoners in its training set are predominantly black. A question that remains unanswered is how training a model with the proposed loss function helps combat racial bias in machine learning, and how these results in particular improved (or worsened) with its use.

* There is too much data in the conclusion. A brief conclusion of a few sentences should be enough to present the ideas.

* The authors could report the time efficiency of face recognition in the results to compare the models with other current facial recognition models, since many applications that use face recognition today rely on fast recognition (e.g., unlocking a phone with Face ID).

* It is interesting to see how the loss function impacts face recognition. It would be better to see evaluations on different datasets. Efficiency is significant as well as accuracy.

* It is important for facial recognition models to handle some level of noise, since in most uses, conditions such as lighting and camera quality vary drastically. For more practical use, datasets with a certain level of noise should be introduced and tested.

* The paper omits the details of why normalization is necessary within the model; the original [https://arxiv.org/abs/1801.05599 paper] describing AM-Softmax for face verification stressed the importance of normalization. Does the lack of detail mean these new softmax loss functions do not require layer normalization?

* The paper presents the Search-Softmax algorithm clearly in pseudocode. It would be more helpful if that pseudocode were included in this summary, since it is clearer than a description in words. It is also important to describe the experimental setting before discussing results. The original paper clearly states the conditions under which the results were obtained, including the method used for data processing (in this case, a 6-layer CNN) and how the method was implemented. Another important element is the ablation study, which examines the effect of reducing the softmax probability, the number of sampled models, and the convergence of the method. These are important for later analysis because they are crucial to model optimization and usability: if the proposed Random-Softmax and Search-Softmax losses did not converge to a value (0, to be specific), they would be useless.

== References ==

Latest revision as of 09:51, 15 December 2020

Presented by

Jan Lau, Anas Mahdi, Will Thibault, Jiwon Yang

Introduction

Face recognition is a technology that can label a face to a specific identity. The field of study involves two tasks: 1. Identifying and classifying a face to a certain identity and 2. Verifying if this face image and another face image map to the same identity. Loss functions play an important role in evaluating how well the prediction models the given data. In the application of face recognition, they are used for training convolutional neural networks (CNNs) with discriminative features. A discriminative feature is one that is able to successfully discriminate the labeled data, and is typically a result of feature engineering/selection. However, traditional softmax loss lacks the power of feature discrimination. To solve this problem, a center loss was developed to learn centers for each identity to enhance the intra-class compactness. Hence, the paper introduced a new loss function using a scale parameter to produce higher gradients to well-separated samples which can reduce the softmax probability.

Margin-based (angular, additive, additive angular margins) soft-max loss functions are important in learning discriminative features in face recognition. There have been hand-crafted methods previously developed that require much efforts such as A-softmax, V-softmax, AM-Softmax, and Arc-softmax. Li et al. proposed an AutoML for loss function search method also known as AM-LFS from a hyper-parameter optimization perspective [2]. It automatically determines the search space by leveraging reinforcement learning to the search loss functions during the training process, though the drawback is the complex and unstable search space.

Soft Max

Softmax probability is the probability for each class. It contains a vector of values that add up to 1 while ranging between 0 and 1. Cross-entropy loss is the negative value of target values times the log of the probabilities. When softmax probability is combined with cross-entropy loss in the last fully connected layer of the CNN, it yields the softmax loss function:

Specifically for face recognition, [math]\displaystyle{ L_1 }[/math] is modified such that [math]\displaystyle{ w^T_yx }[/math] is normalized and [math]\displaystyle{ s }[/math] represents the magnitude of [math]\displaystyle{ w^T_yx }[/math]:

Where [math]\displaystyle{ \cos{(\theta_{{w_k},x})} = w^T_y }[/math] is cosine similarity and [math]\displaystyle{ \theta_{{w_k},x} }[/math] is angle between [math]\displaystyle{ w_k }[/math] and x. The learnt features with this soft max loss are prone to be separable (as desired).

Margin-based Softmax

Margin-based softmax is crucial in face recognition because it enhances feature discrimination. Although there are several variations, they all share the structure of the normalized softmax loss above and differ only in how the target logit is modified.

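The margin-based softmax loss is:

[math]\displaystyle{ L_3 = -\log \frac{e^{s f(m,\theta_{w_y,x})}}{e^{s f(m,\theta_{w_y,x})} + \sum_{k \neq y}^{K} e^{s \cos(\theta_{w_k,x})}} }[/math]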

Here, [math]\displaystyle{ f{(m,\theta_{{w_y},x})} \leq \cos (\theta_{w_y,x}) }[/math] is a carefully chosen margin function.

Common choices of the margin function include:

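A-Softmax Loss: [math]\displaystyle{ f{(m_1,\theta_{{w_y},x})} = \cos (m_1\theta_{w_y,x}) }[/math], where [math]\displaystyle{ m_1 \geq 1 }[/math] is an integer.

Arc-Softmax Loss: [math]\displaystyle{ f{(m_2,\theta_{{w_y},x})} = \cos (\theta_{w_y,x} + m_2) }[/math], where [math]\displaystyle{ m_2 \gt 0 }[/math].

AM-Softmax Loss: [math]\displaystyle{ f{(m_3,\theta_{{w_y},x})} = \cos (\theta_{w_y,x}) - m_3 }[/math], where [math]\displaystyle{ m_3 \gt 0 }[/math].

Combined margin: [math]\displaystyle{ f{(m,\theta_{{w_y},x})} = \cos (m_1\theta_{w_y,x} + m_2) - m_3 }[/math], where [math]\displaystyle{ m_1 \geq 1 }[/math] is an integer and [math]\displaystyle{ m_2, m_3 \gt 0 }[/math].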
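The margin functions listed above can be compared directly in code. The pure-Python sketch below implements each f as a function of the target angle (in radians) and plugs it into the margin-based softmax loss; passing math.cos as f recovers the normalized softmax loss. The default margins and angles are illustrative assumptions. Note that cos(m_1θ) ≤ cos θ and cos(θ + m_2) ≤ cos θ only hold on the usual angle ranges (for example θ ∈ [0, π/m_1] for A-Softmax); the original A-Softmax work uses a piecewise extension to cover [0, π], which this sketch omits.

```python
import math

# Margin functions f(m, theta) for the target angle theta (radians).
# Default margins are illustrative values only.
def a_softmax(theta, m1=4):
    return math.cos(m1 * theta)            # multiplicative angular margin

def arc_softmax(theta, m2=0.5):
    return math.cos(theta + m2)            # additive angular margin

def am_softmax(theta, m3=0.35):
    return math.cos(theta) - m3            # additive cosine margin

def combined_margin(theta, m1=1, m2=0.3, m3=0.2):
    return math.cos(m1 * theta + m2) - m3  # all three margins together

def margin_softmax_loss(thetas, y, f, s=30.0):
    """Margin-based softmax loss: the target logit s*cos(theta_y) becomes s*f(theta_y)."""
    logits = [s * (f(t) if k == y else math.cos(t)) for k, t in enumerate(thetas)]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[y]             # equals -log(softmax(logits)[y])

thetas = [0.5, 1.3, 1.6]                   # hypothetical angles to each class weight
for name, f in [("normalized", math.cos), ("A", a_softmax), ("Arc", arc_softmax),
                ("AM", am_softmax), ("combined", combined_margin)]:
    print(name, round(margin_softmax_loss(thetas, 0, f), 4))
```

Every margin lowers the target logit, so each margin-based loss is larger than the normalized loss on the same angles; this is the extra pressure that pushes features of the same identity closer together.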

In this paper, the authors first identify that reducing the softmax probability is key to feature discrimination, and then design a new search space together with two search strategies (random and reward-guided). They evaluate the resulting Random-Softmax and Search-Softmax approaches by comparing them against other face recognition algorithms on nine popular face recognition benchmarks.

Motivation

Previous face recognition algorithms frequently rely on CNNs trained with metric learning loss functions such as contrastive loss or triplet loss. Without careful sample mining strategies, these losses are computationally expensive. This drawback prompted a redesign of the classical softmax loss, which on its own cannot learn sufficiently discriminative features. Multiple softmax loss functions have since been developed, including margin-based formulations, which often require careful tuning of their parameters and are susceptible to instability. As a result, researchers need to spend considerable effort designing their methods within a large design space. AM-LFS takes an optimization approach to selecting the hyperparameters of margin-based softmax functions, but the drawbacks mentioned above stem from the lack of direction in designing its search space.

To solve the issues associated with hand-tuned softmax loss functions and AM-LFS, the authors aim to reduce the softmax probability in order to improve feature discrimination when using margin-based softmax loss functions. They determined that the best option was a margin-based softmax loss with only one required parameter, combined with an improved search space explored by a reward-guided method.

Problem Formulation

Analysis of Margin-based Softmax Loss

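Let [math]\displaystyle{ p = \frac{e^{s \cos(\theta_{w_y,x})}}{e^{s \cos(\theta_{w_y,x})} + \sum_{k \neq y}^{K} e^{s \cos(\theta_{w_k,x})}} }[/math] be the normalized softmax probability of the target class and [math]\displaystyle{ p_m }[/math] the corresponding margin-based softmax probability. Rewriting [math]\displaystyle{ p_m }[/math] in terms of [math]\displaystyle{ p }[/math] gives the following relation [1]:

[math]\displaystyle{ p_m = \frac{e^{s f(m,\theta_{w_y,x})}}{e^{s f(m,\theta_{w_y,x})} + \sum_{k \neq y}^{K} e^{s \cos(\theta_{w_k,x})}} = \frac{p}{p + e^{s(\cos(\theta_{w_y,x}) - f(m,\theta_{w_y,x}))}(1-p)} = \frac{p}{ap + (1-a)} = h(a,p)\,p }[/math]

where [math]\displaystyle{ a = 1 - e^{s(\cos(\theta_{w_y,x}) - f(m,\theta_{w_y,x}))} \leq 0 }[/math]. Hence every margin-based softmax loss can be written as [math]\displaystyle{ -\log(h(a,p)\,p) }[/math].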

[math]\displaystyle{ a }[/math] is called the modulating factor and [math]\displaystyle{ h{(a,p)}=\frac{1}{ap+(1-a)} \in (0,1] }[/math] (for [math]\displaystyle{ a \leq 0 }[/math]) is the modulating function [1]. Therefore, regardless of the margin function [math]\displaystyle{ f }[/math], every margin-based softmax loss reduces the target softmax probability ([math]\displaystyle{ p_m = h(a,p)\,p \leq p }[/math]), which the authors identify as the key to its success.

Compared to AM-LFS, this method involves only one parameter ([math]\displaystyle{ a }[/math]), which is also constrained ([math]\displaystyle{ a \leq 0 }[/math]); AM-LFS, in contrast, requires 2M unconstrained parameters to specify its piecewise linear functions. Moreover, the piecewise linear functions of AM-LFS ([math]\displaystyle{ p_m={a_i}p+b_i }[/math]) may not be discriminative, because [math]\displaystyle{ p_m }[/math] could be larger than the softmax probability [math]\displaystyle{ p }[/math], whereas [math]\displaystyle{ p_m=h(a, p)\,p \leq p }[/math] always holds for the proposed formulation.
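The identity [math]\displaystyle{ p_m = h(a,p)\,p }[/math] can be checked numerically. The sketch below picks hypothetical angles, uses the AM-Softmax margin as an example f, computes the target probabilities [math]\displaystyle{ p }[/math] and [math]\displaystyle{ p_m }[/math] directly, and compares [math]\displaystyle{ p_m }[/math] with [math]\displaystyle{ h(a,p)\,p }[/math] using the modulating factor [math]\displaystyle{ a = 1 - e^{s(\cos\theta_{w_y,x} - f(m,\theta_{w_y,x}))} }[/math], which is what rewriting the margin-based probability in terms of p yields. The angles, the margin 0.35, and s = 30 are illustrative assumptions.

```python
import math

def target_prob(target_logit, other_logits):
    """Softmax probability of the target class from its logit and the other logits."""
    m = max([target_logit] + other_logits)
    num = math.exp(target_logit - m)
    return num / (num + sum(math.exp(z - m) for z in other_logits))

def h(a, p):
    """Modulating function h(a, p) = 1 / (a*p + (1 - a))."""
    return 1.0 / (a * p + (1.0 - a))

s = 30.0                                      # illustrative scale
theta_y, other_thetas = 0.6, [1.2, 1.5, 1.4]  # hypothetical angles (radians)
cos_y = math.cos(theta_y)
f_y = cos_y - 0.35                            # AM-Softmax margin, m_3 = 0.35 (illustrative)
others = [s * math.cos(t) for t in other_thetas]

p = target_prob(s * cos_y, others)            # normalized softmax probability
p_m = target_prob(s * f_y, others)            # margin-based softmax probability
a = 1.0 - math.exp(s * (cos_y - f_y))         # modulating factor, a <= 0 since f <= cos

print(p, p_m, h(a, p) * p)                    # the last two values agree
```

Because f ≤ cos θ, the exponent is non-negative, so a ≤ 0 and h(a,p) lies in (0, 1]; the margin-based probability therefore never exceeds p.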

Random Search

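The unified formulation [math]\displaystyle{ L_5 }[/math] is obtained by inserting the simple modulating function [math]\displaystyle{ h{(a,p)}=\frac{1}{ap+(1-a)} }[/math] into the original softmax loss. It can be written as follows [1]:

[math]\displaystyle{ L_5 = -\log\left(h(a,p)\,p\right) = -\log \frac{p}{ap + (1-a)}, \quad a \leq 0 }[/math]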

This formulation encourages a margin between different classes and therefore has the capability of feature discrimination. The search space is thus defined as the choice of [math]\displaystyle{ h{(a,p)} }[/math], whose effect on training is decided entirely by the modulating factor [math]\displaystyle{ a }[/math]. To validate the unified formulation, the authors randomly set the modulating factor at each training epoch; they call this Random-Softmax.
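A minimal sketch of Random-Softmax under stated assumptions: the unified loss is evaluated on the target probability p, and a new modulating factor is drawn uniformly at the start of each epoch. The sampling range [-10, 0] (A_MIN) is a hypothetical choice for illustration only, and the actual training step is left as a comment.

```python
import math
import random

def unified_loss(p, a):
    """L_5 = -log(h(a, p) * p), with h(a, p) = 1 / (a*p + (1 - a)) and a <= 0."""
    if a > 0:
        raise ValueError("the modulating factor a must satisfy a <= 0")
    return -math.log(p / (a * p + (1.0 - a)))

# Hypothetical sampling range for the modulating factor (not from the paper).
A_MIN = -10.0

rng = random.Random(0)
for epoch in range(3):
    a = rng.uniform(A_MIN, 0.0)        # Random-Softmax: a new factor every epoch
    # ... one training epoch using unified_loss(p, a) for each sample ...
    print(f"epoch {epoch}: a = {a:.3f}, loss at p = 0.9: {unified_loss(0.9, a):.4f}")
```

With a = 0 the loss reduces to the ordinary -log p; more negative values of a shrink h(a,p)p and so enlarge the loss for the same p.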

Reward-Guided Search

Random search provides no guidance for training. To address this, the authors use reinforcement learning. Unlike supervised learning, reinforcement learning (RL) is a behavioral learning model: it does not require labelled input/output pairs, and sub-optimal actions do not need to be explicitly corrected. Instead, the algorithm learns from feedback to achieve the best outcome. The system has an agent that guides the process by taking actions that maximize the cumulative reward [3]. The process of RL is shown in figure 1. The cumulative reward is:

[math]\displaystyle{ G_t = R_{t+1} + R_{t+2} + \cdots + R_T }[/math]

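where [math]\displaystyle{ G_t }[/math] is the cumulative reward, [math]\displaystyle{ R_{t+k} }[/math] is the immediate reward received [math]\displaystyle{ k }[/math] steps after time [math]\displaystyle{ t }[/math], and [math]\displaystyle{ T }[/math] is the final time step of the episode.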

[math]\displaystyle{ G_t }[/math] is the sum of the immediate rewards received after an arbitrary time [math]\displaystyle{ t }[/math]. It is a random variable because each immediate reward depends on the agent's action and on the environment's reaction to that action.
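The cumulative reward is simply a tail sum of immediate rewards. A tiny Python illustration, using a hypothetical four-step episode and no discounting (matching the plain sum described here):

```python
def cumulative_reward(rewards, t):
    """Return G_t = R_{t+1} + ... + R_T.

    rewards[k] holds R_{k+1}, the immediate reward received after the action
    taken at time step k, so the episode ends after len(rewards) steps (T).
    """
    return sum(rewards[t:])

episode = [0.0, 1.0, -0.5, 2.0]          # hypothetical rewards R_1 .. R_4 (T = 4)
print(cumulative_reward(episode, 0))     # 2.5 = R_1 + R_2 + R_3 + R_4
print(cumulative_reward(episode, 2))     # 1.5 = R_3 + R_4
```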

The reward function is what steers the agent in a certain direction. As mentioned above, the system learns from feedback to achieve the best outcome: the agent's behaviour is updated according to the reward it receives when a task is completed [5].

In this paper, RL is used to learn the distribution of the modulating factor [math]\displaystyle{ a }[/math], a Gaussian with mean [math]\displaystyle{ \mu }[/math] and standard deviation [math]\displaystyle{ \sigma }[/math], by means of the reward function. At each epoch, [math]\displaystyle{ B }[/math] hyper-parameters [math]\displaystyle{ \{a_1, a_2, \ldots, a_B\} }[/math] are sampled as [math]\displaystyle{ a \sim \mathcal{N}(\mu, \sigma) }[/math], and [math]\displaystyle{ B }[/math] models are trained, yielding rewards [math]\displaystyle{ R(a_i), i \in [1, B] }[/math]. After each epoch, [math]\displaystyle{ \mu }[/math] is updated from these rewards with learning rate [math]\displaystyle{ \eta }[/math]:

[math]\displaystyle{ \mu_{e+1} = \mu_e + \eta \frac{1}{B} \sum_{i=1}^{B} R(a_i) \nabla_{\mu} \log\left(g(a_i; \mu, \sigma)\right) }[/math]

Where [math]\displaystyle{ g(a_i; \mu, \sigma) }[/math] is the probability density function of a Gaussian distribution. The distribution of [math]\displaystyle{ a }[/math] is updated, and the best model among the [math]\displaystyle{ B }[/math] candidates is selected for the next epoch.
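The update of [math]\displaystyle{ \mu }[/math] is a REINFORCE-style policy-gradient step. For a Gaussian, the derivative of [math]\displaystyle{ \log g(a;\mu,\sigma) }[/math] with respect to [math]\displaystyle{ \mu }[/math] is [math]\displaystyle{ (a-\mu)/\sigma^2 }[/math], so one update can be sketched as below. The function names, the learning rate lr, and the example numbers are illustrative assumptions, and no reward baseline is subtracted.

```python
def grad_log_gaussian_mu(a, mu, sigma):
    """Derivative of log N(a; mu, sigma^2) with respect to mu."""
    return (a - mu) / sigma ** 2

def update_mu(mu, sigma, samples, rewards, lr):
    """mu + lr * (1/B) * sum_i R(a_i) * d/dmu log g(a_i; mu, sigma)."""
    grads = [r * grad_log_gaussian_mu(a, mu, sigma) for a, r in zip(samples, rewards)]
    return mu + lr * sum(grads) / len(grads)

# Hypothetical epoch with B = 2: the more negative factor earned the higher reward,
# so the mean of the sampling distribution moves toward negative values.
new_mu = update_mu(mu=0.0, sigma=1.0, samples=[-1.0, 1.0], rewards=[1.0, 0.0], lr=0.1)
print(new_mu)  # -0.05
```

Samples that earn higher rewards pull the mean toward themselves, so over many epochs the distribution of a concentrates where the validation reward is highest.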

Optimization

Calculating the reward involves a standard bi-level optimization problem: a hierarchy of two optimization tasks, an upper-level (leader) problem and a lower-level (follower) problem. The hyperparameters ({[math]\displaystyle{ a_1,a_2,\ldots,a_B }[/math]}) define the loss that the lower level minimizes, while the upper level maximizes a reward, so one objective function is minimized while another is maximized:

[math]\displaystyle{ \max_{a} R(a) = r(M_{w^*(a)}, S_v) \quad \text{s.t.} \quad w^*(a) = \arg\min_{w} \sum_{(x,y) \in S_t} L^{a}(M_w(x), y) }[/math]

In this case, the loss function is evaluated on the training set [math]\displaystyle{ S_t }[/math] and the reward function on the validation set [math]\displaystyle{ S_v }[/math]. The weights [math]\displaystyle{ w }[/math] are trained to minimize the loss, while the modulating factor is searched to maximize the reward. The reward calculated for each model ({[math]\displaystyle{ M_{w_e^1},M_{w_e^2},\ldots,M_{w_e^B} }[/math]}) yields its score, and the algorithm selects the model with the highest score. That model is used in the next epoch, and the process repeats until training converges. In the end, the algorithm takes the model with the highest score without retraining.
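The bi-level procedure can be summarized as a search loop. The sketch below is a simplified reading of the description above, not the authors' implementation: train_one_epoch and reward are hypothetical callables supplied by the caller (standing in for one epoch of training with [math]\displaystyle{ L_5 }[/math] on S_t and for the validation score on S_v), clipping sampled factors to a ≤ 0 is an assumption of this sketch, and the toy "model" is a single number.

```python
import copy
import random

def search_softmax(init_model, train_one_epoch, reward, epochs, B,
                   mu=0.0, sigma=1.0, lr=0.02, seed=0):
    """Reward-guided search loop (hypothetical sketch, not the authors' code)."""
    rng = random.Random(seed)
    best_model = init_model
    for _ in range(epochs):
        # Sample B modulating factors a_i ~ N(mu, sigma).
        samples = [rng.gauss(mu, sigma) for _ in range(B)]
        # Lower level: train B copies of the current model, one per a_i.
        # Clipping to satisfy a <= 0 is an assumption of this sketch.
        candidates = [train_one_epoch(copy.deepcopy(best_model), min(a, 0.0))
                      for a in samples]
        # Upper level: score every candidate on the validation set.
        rewards = [reward(m) for m in candidates]
        # REINFORCE update of mu: d/dmu log g(a; mu, sigma) = (a - mu) / sigma^2.
        grad_sum = sum(r * (a - mu) / sigma ** 2 for a, r in zip(samples, rewards))
        mu = mu + lr * grad_sum / B
        # Keep the highest-scoring candidate for the next epoch.
        best_model = candidates[max(range(B), key=lambda i: rewards[i])]
    # The final model is the best candidate of the last epoch, with no retraining.
    return best_model, mu

def toy_train(model, a):
    # Hypothetical stand-in for one training epoch: the weight drifts toward a.
    model["w"] += 0.5 * (a - model["w"])
    return model

def toy_reward(model):
    # Hypothetical stand-in for validation accuracy, highest when w == -3.
    return -(model["w"] + 3.0) ** 2

best, mu = search_softmax({"w": 0.0}, toy_train, toy_reward, epochs=200, B=8)
print(best, mu)
```

In a real setting each candidate would be a full CNN, so training B copies per epoch dominates the cost; the toy stand-ins only show the control flow of sampling, training, scoring, updating, and selecting.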

Results and Discussion

Data Preprocessing

The training datasets are cleaned versions of CASIA-WebFace and MS-Celeb-1M-v1c, used to remove the impact of noisy labels in the original sets. Furthermore, it is important to perform open-set evaluation for face recognition problems; that is, there should be no overlapping identities between the training and testing sets. As a result, a total of 15,414 identities were removed from the testing sets. For a fair comparison, all summarized results are based on the refined datasets.
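Open-set evaluation can be checked with a simple set intersection over identity labels. The helper names and sample data below are hypothetical; they only illustrate how overlapping identities could be found and filtered out of whichever split is being refined.

```python
def overlapping_identities(train_ids, test_ids):
    """Identities present in both splits; open-set evaluation needs this set to be empty."""
    return set(train_ids) & set(test_ids)

def drop_identities(samples, banned):
    """Keep only (image, identity) pairs whose identity is not in `banned`."""
    return [(image, identity) for image, identity in samples if identity not in banned]

# Hypothetical identity labels and samples.
train_ids = ["id_001", "id_002", "id_003"]
test_ids = ["id_003", "id_104"]
overlap = overlapping_identities(train_ids, test_ids)
split = [("img_a.jpg", "id_003"), ("img_b.jpg", "id_104")]
print(overlap)                          # {'id_003'}
print(drop_identities(split, overlap))  # [('img_b.jpg', 'id_104')]
```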

Results on LFW, SLLFW, CALFW, CPLFW, AgeDB, CFP

On LFW, there is no noticeable difference between the algorithms proposed in this paper and the other algorithms; in fact, AM-Softmax achieved higher results than Search-Softmax. Random-Softmax achieved the highest result, by a margin of 0.03%.

Random-Softmax outperforms the baseline softmax and is comparable to most margin-based softmax losses. Search-Softmax further boosts performance and beats most methods; in particular, when trained on CASIA-WebFace-R, it achieves an average improvement of 0.72% over AM-Softmax. According to the authors, the proposed model performs better because its optimization strategy helps boost discrimination power, and because the candidates sampled from the proposed search space approximate the margin-based loss functions well. The improvements on these test sets are small because they are relatively simple and the performance of all methods on them is near saturation; more complicated protocols are needed to test performance further. The following table summarizes the performance of each model.

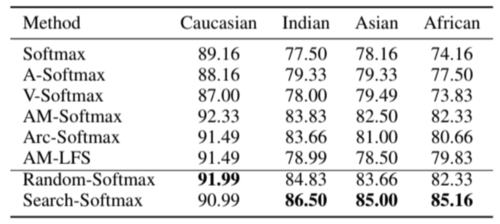

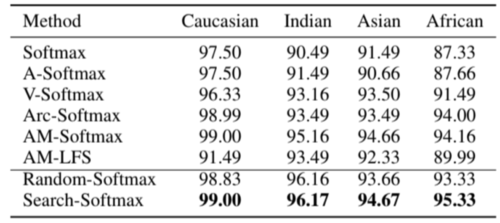

Results on RFW

The RFW dataset, which is designed to measure racial bias, consists of four subsets: Caucasian, Indian, Asian, and African. On this test set, Random-Softmax and Search-Softmax performed better than the other methods. Random-Softmax outperforms the baseline softmax by a large margin, which indicates that reducing the softmax probability enhances feature discrimination for face recognition. The reward-guided Search-Softmax is also observed to further enhance discriminative feature learning, resulting in higher performance, as shown in Table 2 and Table 3.

Results on MegaFace and Trillion-Pairs

The different loss functions are then tested with more complicated protocols. Tables 4 and 5 report the Rank-1 identification accuracy (Id.) and the verification (Veri.) true positive rate (TPR) at a low false acceptance rate (FAR) of [math]\displaystyle{ 10^{-3} }[/math] on MegaFace, as well as the identification TPR@FAR = [math]\displaystyle{ 10^{-6} }[/math] and the verification TPR@FAR = [math]\displaystyle{ 10^{-9} }[/math] on Trillion-Pairs.
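TPR@FAR can be computed from similarity scores of genuine pairs (same identity) and impostor pairs (different identities): choose the threshold that accepts at most a FAR fraction of impostor pairs, then measure the fraction of genuine pairs above it. This is a simplified sketch with made-up scores; the official MegaFace and Trillion-Pairs evaluation tools define their own protocols.

```python
def tpr_at_far(genuine, impostor, far):
    """True positive rate at a target false acceptance rate (simplified).

    Scores are similarities: a pair is accepted when its score exceeds the threshold.
    The threshold lets at most far * len(impostor) impostor pairs through.
    """
    ranked = sorted(impostor, reverse=True)
    allowed = int(far * len(ranked))       # impostor pairs allowed to pass
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    accepted = sum(1 for score in genuine if score > threshold)
    return accepted / len(genuine)

genuine = [0.9, 0.8, 0.6, 0.4]             # made-up same-identity scores
impostor = [0.1, 0.2, 0.3, 0.5, 0.7, 0.35, 0.15, 0.05, 0.25, 0.45]
print(tpr_at_far(genuine, impostor, far=0.1))  # 0.75
print(tpr_at_far(genuine, impostor, far=0.0))  # 0.5
```

Lowering the allowed FAR raises the threshold, so TPR can only stay the same or drop; this is why results at very low FAR values are the hardest to improve.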

On MegaFace and Trillion-Pairs, Search-Softmax achieves the best performance of all methods. On MegaFace, Search-Softmax beats the best competitor, AM-Softmax, by a large margin. It also outperforms AM-LFS thanks to its newly designed search space.

The CMC and ROC curves in Figure 2 show similar trends on other measures. Trillion-Pairs shows the same pattern: Search-Softmax loss is superior, with improvements of 4% when trained on CASIA-WebFace-R and 1% when trained on MS-Celeb-1M-v1c-R, for both identification and verification. Based on these experiments, Search-Softmax loss performs well, especially at low false positive rates, and shows a strong generalization ability for face recognition.

Conclusion

The paper argues that reducing the softmax probability is crucial for enhancing feature discrimination in face recognition. To achieve this goal, the authors design a unified formulation of the margin-based softmax losses and define a new search space that guarantees feature discrimination. Two search methods, a random one and a reward-guided one, are developed and validated as more effective than six other methods on nine different test datasets. Although both methods are generally more accurate than previous methods, there is very little difference between the two; Search-Softmax performs slightly better than Random-Softmax most of the time.

Critiques

- Thorough experimentation and comparison of results to state-of-the-art provided a convincing argument.

- The datasets required some preprocessing, which may have inflated the results beyond what the method alone would achieve.

- AM-LFS was re-implemented by the authors for experimentation because its code was not made public, so the comparison may not be accurate.

- Some of the test sets used to evaluate Search-Softmax and Random-Softmax are simple, and other methods already saturate on them. As a result, the proposed methods show few advantages there, since all methods produce very similar results. More complicated datasets need to be tested to prove the method's reliability.

- Another paper, Large-Margin Softmax Loss for Convolutional Neural Networks, provides a more detailed explanation of margin-based softmax loss.

- The reported test accuracy is questionable, as the authors trained only on the cleaned versions of CASIA-WebFace and MS-Celeb-1M-v1c rather than on these two training sets with their noisy labels.

- In a related paper, Tae-Hyun Oh et al. also discuss an optimal loss function for face recognition. However, since that paper performs face recognition from voice audio, the loss function used is slightly different from the ones discussed in this paper.

- This model has many applications, such as helping police identify disguised prisoners. However, good data preprocessing is needed; otherwise, the predictions may be poor. The authors did not discuss the data preprocessing, even though it is a key part of this model. It might be done during either feature extraction or feature selection, but it is crucial to indicate how it was done.

- It would be helpful to know what kind of noise was removed in the cleaned versions. Also, simply removing the overlapping data is wasteful; it would be better to assign it to either the training or the test set.

- The paper indicates that the new search method and loss function produce more effective face recognition results than six other methods. However, it does not mention any increase or decrease in computational efficiency, which matters because the accuracy differences between these methods are small and real-time evaluation is often required in face recognition applications.

- Some loss functions receive more than two inputs. For example, the triplet loss function, developed by Google, takes three inputs: a positive input, a negative input and an anchor input. This makes sense for face recognition, because we want the model to learn not only what it is supposed to predict but also what it is not supposed to predict. Typically, triplet loss handles false positives much better. This paper could extend its scope to such loss functions that take more than two inputs.

- It would be good to also know the training time of the method, specifically of the "Reward-Guided Search", which uses RL. The authors also mention some data preprocessing; was the same preprocessing performed for the methods they compared against?

- The sections on data processing and results could be improved. About the datasets, I have some questions about why they are divided in the current fashion. CASIA-WebFace and MS-Celeb-1M-v1c are used as training datasets, but the comparison of algorithms is divided into three groups: MegaFace and Trillion-Pairs, RFW, and a group of other datasets. In general, when comparing algorithms, we want a holistic view of how each algorithm performs, so I have some concerns about dividing the results into three sections; more explanation could be provided. It also seems that Random-Softmax and Search-Softmax outperform all other algorithms across all datasets, so a single large table including all the results would make even more sense. Regarding data preprocessing, more information about which noisy data were removed would be helpful.

- Despite the thorough comparison of each method against the proposed method, the paper does not explain why the proposed method was better or worse in each case. The explanation need not be mathematical; an intuitive one would show how the results can be replicated and whether they require certain conditions.

- Although a graph shows the training loss of Random-Softmax and Search-Softmax against the number of epochs, from which we may deduce the number of epochs used in later graphs, one of the main claimed features is that "Meanwhile, our optimization strategy enables that the dynamic loss can guide the model training of different epochs, which helps further boost the discrimination power." It is therefore imperative that the results are compared on the same scale (for example, train for 20 epochs, then take the average of the losses).

- Did the paper address why the average model performs worse on African faces? Could it be due to a lack of data points?

- The result summary is overwhelming with numbers, and the presentation of the results is lacking. It would be great if the results were explained. The introduction of the model and its components is also lacking and could be expanded.

- It would be better if the paper contained some face recognition visualizations, i.e. actual face recognition examples that show the improvement.

- The introduction of the data and the analysis of data processing are important because there might be some limitations. Also, it would be better to give a theoretical analysis of the effects of reducing the softmax probability and of the number of sampled models, which would explain how the parameter updates lead to better performance.

- It would be better to include time performance in the evaluation section.

- The paper is missing details on datasets. It would be better to know if the datasets were balanced or unbalanced and how this would affect the accuracy. Also, computational comparisons between the new loss function versus traditional method would be interesting to know.

- The paper included a dataset that measures racial bias; however, it is widely known that the majority of face recognition models are trained on biased and imbalanced datasets themselves. For example, an AI may be biased towards classifying a black person as a prisoner because the training set of prisoners is predominantly black. A question that remains unanswered is how training a model with the proposed loss function helps to combat racial bias in machine learning, and how these results in particular improved (or worsened) with its use.

- There is too much data in the conclusion. A brief conclusion of a few sentences should be enough to present the ideas.

- The authors could add the time efficiency of face recognition to the results to compare the models with other current facial recognition models, since many applications that use face recognition nowadays rely on fast recognition (e.g. unlocking a phone with Face ID).

- It is interesting to see how the loss function impacts face recognition. It would be better to see evaluations on different datasets. Not only accuracy but also efficiency is important.

- It is important for facial recognition models to handle some level of noise, since in most uses, conditions such as lighting and camera quality vary drastically. For more practical use, datasets with a certain level of noise should be introduced and tested.

- The paper omits the details of why normalization is necessary within the model, whereas the original paper describing A-Softmax for face verification stressed the importance of normalization. Does the lack of detail mean that these new softmax loss functions do not require normalization?

- The paper presents the Search-Softmax algorithm clearly in pseudocode. It would be more helpful if that pseudocode were included in the summary, since it is clearer than a description in words. It is also important to describe the experimental setting before discussing the results. The original paper clearly states the conditions under which the results were obtained, including which method was used for data processing (in this case a 6-layer CNN), how the method was implemented, etc. Another important concept it mentions is the ablation study, which examines the effect of reducing the softmax probability, the number of sampled models, and the convergence of the method. These are important for later analysis because they are crucial to model optimization and usability: if the proposed Random-Softmax and Search-Softmax losses did not converge to a value (0, to be specific), they would be useless.

References

[1] X. Wang, S. Wang, C. Chi, S. Zhang and T. Mei, "Loss Function Search for Face Recognition", in International Conference on Machine Learning, 2020, pp. 1-10.

[2] C. Li, X. Yuan, C. Lin, M. Guo, W. Wu, J. Yan and W. Ouyang, "AM-LFS: AutoML for Loss Function Search", in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 8410–8419.

[3] SmartLab AI, “Reinforcement Learning algorithms - an intuitive overview,” Medium, 18-Feb-2019. [Online]. Available: https://medium.com/@SmartLabAI/reinforcement-learning-algorithms-an-intuitive-overview-904e2dff5bbc. [Accessed: 25-Nov-2020].

[4] “Reinforcement learning,” Wikipedia, 17-Nov-2020. [Online]. Available: https://en.wikipedia.org/wiki/Reinforcement_learning. [Accessed: 24-Nov-2020].

[5] B. Osiński, “What is reinforcement learning? The complete guide,” deepsense.ai, 23-Jul-2020. [Online]. Available: https://deepsense.ai/what-is-reinforcement-learning-the-complete-guide/. [Accessed: 25-Nov-2020].