Describtion of Text Mining: Difference between revisions

| (93 intermediate revisions by 47 users not shown) | |||

| Line 3: | Line 3: | ||

== Introduction == | == Introduction == | ||

This paper focuses on the different text mining | This paper focuses on the different text mining techniques and the applications of text mining in the healthcare and biomedical domain. The text mining field has been popular as a result of the amount of text data that is available in different forms by devising patterns and trends by means such as statistical pattern learning. The text data is bound to grow even more in 2020, indicating a 50 times growth since 2010. Text is unstructured information, which is easy for humans to construct and understand but difficult for machines. Hence, there is a need to design algorithms to effectively process this avalanche of text. To further explore the text mining field, the related text mining approaches can be considered. The different text mining approaches relate to two main methods: knowledge delivery and traditional data mining methods. | ||

The authors note that knowledge delivery methods involve the application of different steps to a specific data set to create specific patterns. Research in knowledge delivery methods has evolved over the years due to advances in hardware and software technology. On the other hand, data mining has experienced substantial development through the intersection of three fields: databases, machine learning, and statistics. As brought out by the authors, text mining approaches focus on the exploration of information from a specific text. The information explored is in the form of structured, semi-structured, and unstructured text. It is important to note that text mining covers different sets of algorithms and topics that include information retrieval. The topics and algorithms are used for analyzing different text forms. | The authors note that knowledge delivery methods involve the application of different steps to a specific data set to create specific patterns. Research in knowledge delivery methods has evolved over the years due to advances in hardware and software technology. On the other hand, data mining has experienced substantial development through the intersection of three fields: databases, machine learning, and statistics. As brought out by the authors, text mining approaches focus on the exploration of information from a specific text. The information explored is in the form of structured, semi-structured, and unstructured text. It is important to note that text mining covers different sets of algorithms and topics that include information retrieval. The topics and algorithms are used for analyzing different text forms. | ||

== Text Representation and Encoding == | ==Text Representation and Encoding == | ||

In this section of the paper, the authors explore the different ways in which the text can be represented on a large collection of documents. One common way of representing the documents is in the form of a bag of words. The bag of words considers the occurrences of different terms. In different text mining applications, documents are ranked and represented as vectors so as to display the significance of any word. The authors note that the three basic models used are vector space, inference network, and the probabilistic models. The vector space model is used to represent documents by converting them into vectors. In the model, a variable is used to represent each model to indicate the importance of the word in the document. The words are weighted using the TF-IDF scheme computed as | The authors review multiple methods of preprocessing text, including 4 methods to preprocess and recognize influence and frequency of individual group of words in a document. In many text mining algorithms, one of the key components is preprocessing. Preprocessing consists of different tasks that include filtering, tokenization, stemming, and lemmatization. The first step is tokenization, where a character sequence is broken down into different words or phrases. After the breakdown, filtering is carried out to remove some words. The various word inflected forms are grouped together through lemmatization, and later, the derived roots of the derived words are obtained through stemming. | ||

'''1. Tokenization''' | |||

This process splits text (e.g. a sentence) into individual units, known as tokens, while removing unnecessary characters. Tokenization relies on identifying word boundaries, that is, the end of one word and the beginning of the next, which are usually separated by a space. Characters such as punctuation are removed and the text is split at space characters. An example of this would be converting the string "This is my string" to "This", "is", "my", "string". | |||
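The tokenization step described above can be sketched in a few lines of Python. This is only an illustration, assuming that word boundaries are whitespace and punctuation; the function name <code>tokenize</code> is hypothetical and not taken from the paper.

```python
import re

def tokenize(text):
    """Split text into word tokens, dropping punctuation."""
    # \w+ matches maximal runs of letters, digits, and underscores,
    # so whitespace and punctuation both act as token boundaries.
    return re.findall(r"\w+", text)

print(tokenize("This is my string"))  # ['This', 'is', 'my', 'string']
```

Real tokenizers handle contractions, hyphenation, and languages without spaces, which this sketch ignores.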

'''2. Filtering''' | |||

Filtering is a process by which unnecessary words or characters are removed. Often these include punctuation, prepositions, and conjunctions. The resulting corpus then contains the words that matter most for distinguishing between classes. Filtering can also remove words that occur very rarely, which are unlikely to be relevant, as well as words with very high frequency, which carry little information for classification. | |||
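A minimal sketch of filtering, assuming a tiny hand-written stop-word list and a corpus-level frequency threshold. Both <code>STOP_WORDS</code> and <code>min_count</code> are illustrative assumptions, not values from the paper.

```python
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"the", "a", "of", "and", "in", "is"}

def filter_tokens(docs, min_count=2):
    """Drop stop words and tokens seen fewer than min_count times in the corpus."""
    counts = Counter(tok.lower() for doc in docs for tok in doc)
    return [
        [tok for tok in doc
         if tok.lower() not in STOP_WORDS and counts[tok.lower()] >= min_count]
        for doc in docs
    ]

docs = [["the", "gene", "is", "expressed"], ["a", "gene", "mutation"]]
print(filter_tokens(docs))  # [['gene'], ['gene']]
```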

'''3. Lemmatization''' | |||

Lemmatization is the task that considers the morphological analysis of the words, i.e. the various inflected forms of a word are converted to a single form. However, unlike in stemming (see below), we must specify the part of speech (POS) of each word, i.e. its intended meaning in the given sentence or document, which can be prone to human error. For example, "geese" and "goose" have the same lemma "goose", as they have the same meaning. | |||

'''4. Stemming''' | |||

Stemming extracts the roots of words. It is a language-dependent process. The goal of both stemming and lemmatization is to reduce inflectional and related (definition-wise) forms of a word to a common base form. An example of this would be changing "am", "are", or "is" to "be". | |||

'''Vector Space Model''' | |||

In this section of the paper, the authors explore the different ways in which the text can be represented on a large collection of documents. One common way of representing the documents is in the form of a bag of words. The bag of words considers the occurrences of different terms. | |||

In different text mining applications, documents are ranked and represented as vectors so as to display the significance of any word. | |||

The authors note that the three basic models used are the vector space, inference network, and probabilistic models. The vector space model is used to represent documents by converting them into vectors. In the model, a variable is used to represent each word to indicate the importance of that word in the document. | |||

Two main models are used to assign the weights, the Boolean model and the TF-IDF model: | |||

'''Boolean model''' | |||

Terms are assigned a positive weight <math>w_{ij}</math> if the term appears in the document; otherwise, the weight is 0. | |||

'''Term Frequency - inverse document frequency (TF-IDF)''' | |||

The words are weighted using the TF-IDF scheme computed as | |||

$$ | $$ | ||

q(w)=f_d(w)*log{\frac{|D|}{f_D(w)}} | q(w)=f_d(w)*\log{\frac{|D|}{f_D(w)}} | ||

$$ | $$ | ||

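Here <math>f_d(w)</math> is the frequency of word <math>w</math> in document <math>d</math>, <math>|D|</math> is the number of documents in the collection, and <math>f_D(w)</math> is the number of documents that contain <math>w</math>. The frequency of each term is scaled by the inverse document frequency, which helps distinctive words with low frequency to be recognized as important. Each document is represented by a vector of term weights, <math>\omega(d) = (\omega(d, w_1), \omega(d,w_2),...,\omega(d,w_v))</math>. The similarity between two documents <math>d_1, d_2</math> is commonly measured by cosine similarity: | |||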

$$ | |||

S(d_1,d_2) = \cos(\theta) = \frac{d_1\cdot d_2}{\sqrt{\sum_{i=1}^v w^2_{1i}}\cdot\sqrt{\sum_{i=1}^v w^2_{2i}}} | |||

$$ | |||
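The TF-IDF weighting and cosine similarity can be sketched together as follows. This is a minimal illustration, assuming raw counts for the term frequency and the standard cosine definition with Euclidean norms in the denominator; the function names are hypothetical.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return the sorted vocabulary and one TF-IDF vector per tokenized document."""
    vocab = sorted({w for doc in docs for w in doc})
    n_docs = len(docs)
    # f_D(w): number of documents that contain w
    doc_freq = Counter(w for doc in docs for w in set(doc))
    vectors = []
    for doc in docs:
        tf = Counter(doc)  # f_d(w): count of w in this document
        vectors.append([tf[w] * math.log(n_docs / doc_freq[w]) for w in vocab])
    return vocab, vectors

def cosine_similarity(u, v):
    """Cosine of the angle between two weight vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

docs = [["gene", "protein", "gene"], ["gene", "cell"], ["cell", "membrane"]]
vocab, vecs = tfidf_vectors(docs)
print(vocab)
print(cosine_similarity(vecs[0], vecs[1]))
```

Note that a word occurring in every document receives weight 0 under this formula, since the logarithm of 1 is 0.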

== Classification == | == Classification == | ||

Classification in Text Mining aims to | Classification in Text Mining aims to assign predefined classes to text documents. For a set <math>\mathcal{D} = \{d_1, d_2, \ldots, d_n\}</math> of documents, each <math>d_i</math> is mapped to a label <math>l_i</math> from the set <math>\mathcal{L} = \{l_1, l_2, \ldots, l_k\}</math>. The goal is to find a classification model <math>f</math> such that: | ||

$$ | $$ | ||

f: \mathcal{D} \rightarrow \mathcal{L} | f: \mathcal{D} \rightarrow \mathcal{L} \quad \quad \quad f(d) = l | ||

$$ | $$ | ||

The author illustrates 4 different classifiers that are commonly used in text mining. | The author illustrates 4 different classifiers that are commonly used in text mining. | ||

| Line 26: | Line 57: | ||

'''1. Naive Bayes Classifier''' | '''1. Naive Bayes Classifier''' | ||

Bayes rule is used to classify new examples and select the class that | Bayes rule is used to classify new examples and select the class that is most likely to have generated the example. | ||

Naive Bayes Classifier models the distribution of documents in each class using a probabilistic model assuming that the distribution | Naive Bayes Classifier models the distribution of documents in each class using a probabilistic model assuming that the distribution | ||

of different terms is independent of each other. The models commonly used in this classifier tried to find the posterior probability of a class based on the distribution and assumes that the documents generated are based on a mixture model parameterized by <math>\theta</math> and compute the likelihood of a document using the sum of probabilities over all mixture component. | of different terms is independent of each other. The models commonly used in this classifier try to find the posterior probability of a class based on this distribution. They assume that the documents are generated by a mixture model parameterized by <math>\theta</math> and compute the likelihood of a document as the sum of probabilities over all mixture components. In addition, the Naive Bayes Classifier can help get around the curse of dimensionality, which may arise with high-dimensional data, such as text. | ||
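As an illustration of the independence assumption, the following is a minimal sketch of a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The smoothing choice, the function names, and the toy documents are assumptions made for this example and are not taken from the paper.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs, labels):
    """Collect class counts, per-class word counts, and the vocabulary."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc)
    vocab = {w for doc in docs for w in doc}
    return class_counts, word_counts, vocab

def predict(doc, model):
    """Return the class with the highest log posterior for a tokenized document."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in class_counts.items():
        total_words = sum(word_counts[label].values())
        score = math.log(n / total_docs)  # log prior
        for w in doc:
            if w in vocab:  # words never seen in training are ignored
                # Terms are treated as independent, so log likelihoods add up.
                score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

docs = [["tumor", "gene"], ["gene", "mutation"], ["stock", "market"], ["market", "price"]]
labels = ["bio", "bio", "fin", "fin"]
model = train_naive_bayes(docs, labels)
print(predict(["gene", "tumor"], model))  # bio
```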

'''2. Nearest Neighbour Classifier''' | '''2. Nearest Neighbour Classifier''' | ||

Nearest Neighbour Classifier uses distance-based measures to perform the classification. The documents which belong to the same class are more likely "similar" or close to each other based on the similarity measure. The classification of the test documents is inferred from the class labels of similar documents in the training set. | Nearest Neighbour Classifier uses distance-based measures to perform the classification. The documents which belong to the same class are more likely "similar" or close to each other based on the similarity measure. The classification of the test documents is inferred from the class labels of similar documents in the training set. K-Nearest Neighbor classification is well known to suffer from the "curse of dimensionality", as the proportional volume of each $d$-sphere surrounding each datapoint compared to the volume of the sample space shrinks exponentially in $d$. | ||
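A minimal sketch of nearest-neighbour classification, assuming bag-of-words count vectors and cosine similarity as the similarity measure. The function names and the toy training set are hypothetical.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two sparse count vectors stored as Counters."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def knn_classify(doc, train_docs, train_labels, k=3):
    """Label a tokenized document by majority vote among its k most similar neighbours."""
    query = Counter(doc)
    scored = sorted(
        ((cosine(query, Counter(d)), label) for d, label in zip(train_docs, train_labels)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    top_labels = [label for _, label in scored[:k]]
    return Counter(top_labels).most_common(1)[0][0]

train_docs = [["tumor", "gene"], ["gene", "mutation"], ["stock", "market"], ["market", "price"]]
train_labels = ["bio", "bio", "fin", "fin"]
print(knn_classify(["gene", "expression"], train_docs, train_labels, k=3))  # bio
```

Every prediction compares the query against all training documents, which is one reason the method becomes expensive on large, high-dimensional corpora.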

'''3. Decision Tree Classifier''' | '''3. Decision Tree Classifier''' | ||

| Line 40: | Line 71: | ||

'''4. Support Vector Machines''' | '''4. Support Vector Machines''' | ||

SVM is a form of Linear Classifiers which are models that makes a classification decision based on the value of the linear combinations of the documents features. The output of a linear predictor is defined to the <math> y=\vec{a} \cdot \vec{x} + b</math> where <math>\vec{x}</math> is the normalized document word frequency vector, <math>\vec{a}</math> is a vector of | SVM is a form of linear classifier, that is, a model that makes a classification decision based on the value of a linear combination of the document's features. The output of a linear predictor is defined as <math> y=\vec{a} \cdot \vec{x} + b</math>, where <math>\vec{x}</math> is the normalized document word frequency vector, <math>\vec{a}</math> is a vector of coefficients and <math>b</math> is a scalar. Support Vector Machines attempt to find linear separators between the various classes. An advantage of the SVM method is that it is robust to high dimensionality. | ||
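The linear predictor can be illustrated with a short sketch. The coefficients below are hypothetical; in an actual SVM they would be learned from training data, and the decision threshold at zero is an assumption for this example.

```python
def linear_predict(a, x, b):
    """Return the score y = a . x + b and the predicted class (+1 or -1)."""
    y = sum(ai * xi for ai, xi in zip(a, x)) + b
    return y, (1 if y >= 0 else -1)

# Hypothetical coefficients and bias, chosen only for illustration.
a = [2.0, -1.0, 0.5]
b = -0.25
x = [0.5, 0.25, 0.25]  # normalized word frequencies of one document

score, label = linear_predict(a, x, b)
print(score, label)  # 0.625 1
```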

== Clustering == | == Clustering == | ||

Clustering | Clustering has been extensively studied in the context of the text as it has a wide range of applications such as visualization and document organization. | ||

Clustering algorithms are used to group similar documents and thus aid in information retrieval. Text clustering can be performed at different levels of granularity, where clusters can be documents, paragraphs, sentences, or terms. Since text data has numerous distinct characteristics that demand the design of text-specific algorithms for the task, using a binary vector to represent the text document is simply not enough. Here are some unique properties of text representation: | |||

1. Text representation has a large dimensionality, in which the size of the vocabulary from which the documents are drawn is massive, but a document might only contain a small number of words. | |||

2. The words in the documents are usually correlated with each other. This correlation needs to be taken into consideration when designing algorithms. | |||

3. The number of words differs from one document to another. Thus the documents need to be normalized before the clustering process. | |||

Three most commonly used text clustering algorithms are presented below. | |||

'''1. Hierarchical Clustering algorithms''' | |||

Hierarchical Clustering algorithms build a group of clusters that can be depicted as a hierarchy of clusters. The hierarchy can be constructed top-down (divisive) or bottom-up (agglomerative). Hierarchical clustering algorithms are distance-based clustering algorithms, i.e., they use a similarity function to measure the closeness between text documents. | |||

In the top-down approach, the algorithm begins with one cluster which includes all the documents. We recursively split this cluster into sub-clusters. | |||

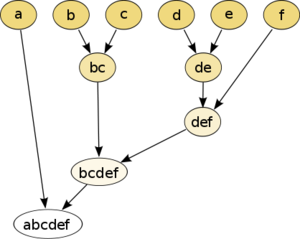

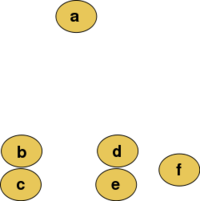

Here is an example of a bottom-up Hierarchical Clustering algorithm in which the data is clustered by Euclidean distance. This method builds the hierarchy from the individual elements by progressively merging clusters. In our example, we have six elements {a} {b} {c} {d} {e} and {f}. The first step determines which elements to merge in a cluster by taking the two closest elements, according to the chosen distance. | |||

[[File:418px-Hierarchical clustering simple diagram.svg.png| 300px | center]] | |||

<div align="center">Figure 1: Hierarchical Clustering Raw Data</div> | |||

[[File:250px-Clusters.svg (1).png| 200px | center]] | |||

<div align="center">Figure 2: Hierarchical Clustering Clustered Data</div> | |||

A main advantage of hierarchical clustering is that the algorithm only needs to be run once for any number of clusters (i.e. if an individual wishes to use a different number of clusters than originally intended, they do not need to repeat the algorithm). | |||
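The bottom-up procedure can be sketched as follows. This is a minimal illustration, assuming single linkage (the distance between two clusters is the distance between their closest members) and hypothetical coordinates for the six elements a to f; neither choice comes from the paper.

```python
import math

def agglomerative(points, n_clusters=1):
    """Single-linkage agglomerative clustering.

    Returns the final clusters (lists of point indices) and the merges performed.
    """
    clusters = [[i] for i in range(len(points))]
    merges = []

    def linkage(c1, c2):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[i], points[j]) for i in c1 for j in c2)

    while len(clusters) > n_clusters:
        # Find the pair of clusters with the smallest linkage distance.
        _, i, j = min(
            ((linkage(clusters[i], clusters[j]), i, j)
             for i in range(len(clusters))
             for j in range(i + 1, len(clusters))),
            key=lambda t: t[0],
        )
        merges.append((clusters[i], clusters[j]))
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters, merges

# Hypothetical coordinates for elements a, b, c, d, e, f (indices 0 to 5).
points = [(0.0, 0.0), (4.0, 0.0), (4.4, 0.0), (9.0, 0.0), (9.5, 0.0), (10.5, 0.0)]
clusters, merges = agglomerative(points, n_clusters=3)
print(clusters)  # [[0], [1, 2], [3, 4, 5]]
```

Because the list of merges records the whole hierarchy, a different number of clusters can be read off by replaying fewer or more merges, without recomputing distances from scratch.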

'''2. k-means Clustering''' | |||

k-means clustering is a partitioning algorithm that partitions n documents in the context of text data into k clusters. | |||

Input: Document set D, similarity measure S, number k of clusters | |||

Output: Set of k clusters | |||

Select randomly ''k'' datapoints as starting centroids | |||

While ''not converged'' do | |||

Assign documents to the centroids based on the closest similarity | |||

Calculate the cluster centroids for all clusters | |||

return ''k clusters'' | |||
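The pseudocode above can be turned into a short runnable sketch. It assumes documents are already represented as numeric vectors and uses squared Euclidean distance in place of a general similarity measure S; the <code>seed</code> and <code>max_iter</code> parameters are additions for reproducibility and termination.

```python
import random

def kmeans(points, k, max_iter=100, seed=0):
    """Cluster numeric vectors into k groups; returns assignments and centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random data points as starting centroids
    assignment = None
    for _ in range(max_iter):
        # Assign each point to its closest centroid.
        new_assignment = [
            min(range(k),
                key=lambda c: sum((p - q) ** 2 for p, q in zip(point, centroids[c])))
            for point in points
        ]
        if new_assignment == assignment:  # converged: no assignment changed
            break
        assignment = new_assignment
        # Recompute each centroid as the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignment, centroids

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
assignment, centroids = kmeans(points, k=2)
print(assignment)
```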

The main disadvantage of k-means clustering is that it is very sensitive to the initial choice of k. Also, since the algorithm runs until the clusters converge, k-means clustering tends to take longer to perform than hierarchical clustering. On the other hand, the advantages of k-means clustering are that it is simple to implement, the algorithm scales well to large datasets, and the results are easily interpretable. | |||

'''3. Probabilistic Clustering and Topic Models''' | |||

Topic modeling is one of the most popular probabilistic clustering algorithms in recent studies. The main idea is to create a ''probabilistic generative model'' for the corpus of text documents. In topic models, documents are a mixture of topics, where each topic represents a probability distribution over words. | |||

There are two main topic models: | |||

* Probabilistic Latent Semantic Analysis (pLSA) | |||

* Latent Dirichlet Allocation (LDA) | |||

The paper covers LDA in more detail. LDA is a state-of-the-art unsupervised algorithm for extracting topics from a collection of documents. | |||

Given <math>\mathcal{D} = \{d_1, d_2, \cdots, d_{|\mathcal{D}|}\}</math> is the corpus and <math>\mathcal{V} = \{w_1, w_2, \cdots, w_{|\mathcal{V}|}\}</math> is the vocabulary of the corpus. | |||

A topic <math>z_j</math>, <math>1 \leq j \leq K</math>, is a multinomial probability distribution over the <math>|\mathcal{V}|</math> words. | |||

The distribution of words in a given document is: | |||

<math>p(w_i|d) = \sum_{j=1}^K p(w_i|z_j)p(z_j|d)</math> | |||
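The mixture formula can be checked numerically with a small sketch. The probability tables below are hypothetical values for two topics over a three-word vocabulary.

```python
def word_given_doc(p_w_given_z, p_z_given_d):
    """Compute p(w_i | d) = sum_j p(w_i | z_j) * p(z_j | d) for every word i."""
    n_words = len(p_w_given_z[0])
    n_topics = len(p_z_given_d)
    return [
        sum(p_w_given_z[j][i] * p_z_given_d[j] for j in range(n_topics))
        for i in range(n_words)
    ]

# Hypothetical distributions: each row is one topic's distribution over 3 words.
p_w_given_z = [[0.5, 0.5, 0.0],
               [0.0, 0.25, 0.75]]
p_z_given_d = [0.5, 0.5]  # the document is an even mixture of both topics

print(word_given_doc(p_w_given_z, p_z_given_d))  # [0.25, 0.375, 0.375]
```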

LDA assumes the following generative process for the corpus <math>\mathcal{D}</math>: | |||

* For each topic <math>k\in \{1,2,\cdots, K\}</math>, sample a word distribution <math>\phi_k \sim Dir(\beta)</math> | |||

* For each document <math>d \in \{1,2,\cdots,D\}</math> | |||

** Sample a topic distribution <math>\theta_d \sim Dir(\alpha)</math> | |||

** For each word <math>w_n, n \in \{1,2,\cdots,N\}</math> in document <math>d</math> | |||

*** Sample a topic <math>z_n \sim Mult(\theta_d)</math> | |||

*** Sample a word <math>w_n \sim Mult(\phi_{z_n})</math> | |||
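The generative process listed above can be simulated directly. The sketch below assumes symmetric Dirichlet priors, a fixed document length, and a toy vocabulary; the helper names and default values are illustrative only. Dirichlet samples are drawn by normalizing independent Gamma draws, which is a standard construction.

```python
import random

def sample_dirichlet(rng, concentration, size):
    """Draw from a symmetric Dirichlet by normalizing Gamma(concentration, 1) samples."""
    draws = [rng.gammavariate(concentration, 1.0) for _ in range(size)]
    total = sum(draws)
    return [x / total for x in draws]

def generate_corpus(n_docs, n_topics, vocab, doc_len, alpha=0.5, beta=0.5, seed=0):
    """Generate documents by following the LDA generative process."""
    rng = random.Random(seed)
    # One word distribution phi_k per topic.
    phi = [sample_dirichlet(rng, beta, len(vocab)) for _ in range(n_topics)]
    corpus = []
    for _ in range(n_docs):
        theta = sample_dirichlet(rng, alpha, n_topics)  # topic mixture of this document
        words = []
        for _ in range(doc_len):
            z = rng.choices(range(n_topics), weights=theta)[0]  # topic for this position
            words.append(rng.choices(vocab, weights=phi[z])[0])  # word drawn from that topic
        corpus.append(words)
    return corpus, phi

vocab = ["gene", "protein", "cell", "market", "price"]
corpus, phi = generate_corpus(n_docs=3, n_topics=2, vocab=vocab, doc_len=6)
for doc in corpus:
    print(doc)
```

Inference runs this process in reverse: given only the words, it estimates the topic mixtures and word distributions that most plausibly generated them.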

Inference and Parameter estimation can be done by computing the posterior of the hidden variables. | |||

$$P(\phi_{1:K}, \theta_{1:D}, z_{1:D}|w_{1:D})= \dfrac{P(\phi_{1:K}, \theta_{1:D}, z_{1:D}, w_{1:D})}{P(w_{1:D})}$$ | |||

A Gibbs sampler may be used to approximate the posterior over the topic assignments for every word. | |||

$$P(z_i = k| w_i=w, z_{-i}, w_{-i}, \alpha, \beta) = \frac{n_{k, -i}^{(d)}+\alpha}{\sum_{k'=1}^K n_{k', -i}^{(d)}+K\alpha} \times \frac{n_{w, -i}^{(k)} + \beta}{\sum_{w'=1}^W n_{w', -i}^{(k)}+W\beta}$$ | |||

In practice, LDA is often used as a module in more complicated models and has already been applied to a wide variety of domains. In addition, many variations of LDA have been created, including supervised LDA (sLDA) and hierarchical LDA (hLDA). | |||

== Information Extraction == | == Information Extraction == | ||

Information Extraction (IE) is the process of extracting useful, structured information from unstructured or semi-structured text. It automatically extracts based on our command. | Information Extraction (IE) is the process of extracting useful, structured information from unstructured or semi-structured text. It automatically extracts based on our command. | ||

For example, | For example, from the sentence “XYZ company was founded by Peter in the year of 1950”, we can identify the two following relations: | ||

Founderof(Peter | 1. Founderof(Peter, XYZ) | ||

2. Foundedin(1950, XYZ) | |||
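For the example sentence above, the two relations can be extracted with a hand-written pattern. This is a deliberately narrow sketch: the regular expression is a hypothetical rule that only matches sentences of exactly this shape, whereas practical IE systems rely on learned models.

```python
import re

# Hypothetical pattern tailored to the example sentence only.
PATTERN = re.compile(
    r"(?P<org>[\w ]+?) company was founded by (?P<founder>\w+) "
    r"in the year of (?P<year>\d{4})"
)

def extract_relations(sentence):
    """Return (relation, argument, organization) triples found in the sentence."""
    m = PATTERN.search(sentence)
    if not m:
        return []
    return [
        ("Founderof", m["founder"], m["org"]),
        ("Foundedin", m["year"], m["org"]),
    ]

print(extract_relations("XYZ company was founded by Peter in the year of 1950"))
# [('Founderof', 'Peter', 'XYZ'), ('Foundedin', '1950', 'XYZ')]
```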

IE is a crucial step in data mining and has a broad variety of applications, such as web mining and natural language processing. Among all the IE tasks, two have become increasingly important: named entity recognition and relation extraction. | |||

The author mentioned 4 parts that are important for Information Extraction | The author mentioned 4 parts that are important for Information Extraction | ||

'''1. | '''1. Named Entity Recognition(NER)''' | ||

This is the process of identifying real-world entities in free text, such as "Apple Inc.", "Donald Trump", "PlayStation 5" etc. Moreover, the task is to identify the category of each entity: for example, "Apple Inc." is in the category of companies, "Donald Trump" is in the category of USA presidents, and "PlayStation 5" is in the category of entertainment systems. | |||

'''2. Hidden Markov Model''' | '''2. Hidden Markov Model''' | ||

Since traditional probabilistic classification does not consider the predicted labels of neighboring words, we use the Hidden Markov Model when doing Information Extraction. This model is different because it assumes that the label of one word depends on the label of the preceding word. Given a sequence of labels <math> Y= (y_1, y_2, \cdots, y_n) </math> and a sequence of observations <math> X= (x_1, x_2, \cdots, x_n) </math>, the Hidden Markov Model assumes | |||

<center> | |||

<math> | |||

y_i \sim p(y_i|y_{i-1}) \qquad x_i \sim p(x_i|y_i) | |||

</math> | |||

</center> | |||
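Given such a model, the most probable label sequence can be found with the Viterbi algorithm. The sketch below assumes the standard HMM factorization in which each label depends on the previous label and each observation depends on its own label. All probabilities, the two labels, and the "Cap"/"lower" word-shape observations are hypothetical toy values.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable label sequence for the observations."""
    # best[i][s]: log-probability of the best path that ends in state s at position i
    best = [{s: math.log(start_p[s]) + math.log(emit_p[s][observations[0]])
             for s in states}]
    back = [{}]
    for obs in observations[1:]:
        scores, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda r: best[-1][r] + math.log(trans_p[r][s]))
            scores[s] = (best[-1][prev] + math.log(trans_p[prev][s])
                         + math.log(emit_p[s][obs]))
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Follow the back-pointers from the best final state.
    path = [max(states, key=lambda s: best[-1][s])]
    for pointers in reversed(back[1:]):
        path.append(pointers[path[-1]])
    return path[::-1]

states = ["NAME", "OTHER"]
start_p = {"NAME": 0.3, "OTHER": 0.7}
trans_p = {"NAME": {"NAME": 0.5, "OTHER": 0.5},
           "OTHER": {"NAME": 0.2, "OTHER": 0.8}}
emit_p = {"NAME": {"Cap": 0.9, "lower": 0.1},
          "OTHER": {"Cap": 0.2, "lower": 0.8}}

# Word shapes for a sentence such as "founder Peter Smith said".
print(viterbi(["lower", "Cap", "Cap", "lower"], states, start_p, trans_p, emit_p))
# ['OTHER', 'NAME', 'NAME', 'OTHER']
```

Working in log space avoids numerical underflow on long sequences; it requires every probability in the tables to be strictly positive.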

'''3. Conditional Random Fields''' | '''3. Conditional Random Fields''' | ||

let G = (V, E) be a graph and Yv stands for the index of the vertices in G. Then (X, Y) is a | This is a technique that is widely used in Information Extraction. The definition of it is related to graph theory. | ||

p( | Let <math>G = (V, E)</math> be a graph and let <math>Y = (Y_v)_{v \in V}</math> be indexed by the vertices of <math>G</math>. Then <math>(X, Y)</math> is a conditional random field when the random variables <math>Y_v</math>, conditioned on <math>X</math>, obey the Markov property with respect to the graph: | ||

<math>p(Y_v | X, Y_w, w \neq v) = p(Y_v | X, Y_w, w \sim v)</math>, where <math>w \sim v</math> means that <math>w</math> and <math>v</math> are neighbors in <math>G</math>. | |||

'''4. Relation Extraction''' | '''4. Relation Extraction''' | ||

This is the task of finding semantic relationships between word entities in text documents, for example in a sentence such as "Seth Curry is the brother of Stephen Curry". If a document includes these two names, the task is to identify the relationship between the two entities. There are currently numerous techniques to perform relation extraction, but the most common is to treat it as a classification problem: given two entities that occur in a sentence, classify their relation into one of a fixed set of relation types. | |||

== Biomedical Application == | == Biomedical Application == | ||

| Line 74: | Line 199: | ||

Text mining has several applications in the domain of biomedical sciences. The explosion of academic literature in the field has made it quite hard for scientists to keep up with novel research. This is why text mining techniques are ever so important in making the knowledge digestible. | Text mining has several applications in the domain of biomedical sciences. The explosion of academic literature in the field has made it quite hard for scientists to keep up with novel research. This is why text mining techniques are ever so important in making the knowledge digestible. | ||

The text mining techniques are able to extract meaningful information from large data by making use of biomedical ontology, which is a compilation of common set of terms used in an area of knowledge. The Unified Medical Language System (UMLS) is the most comprehensive such resource, consisting of definitions of biomedical jargon. Several information extraction algorithms rely on the ontology to perform tasks such as Named Entity Recognition (NER) and Relation Extraction. | The text mining techniques are able to extract meaningful information from large data by making use of biomedical ontology, which is a compilation of a common set of terms used in an area of knowledge. The Unified Medical Language System (UMLS) is the most comprehensive such resource, consisting of definitions of biomedical jargon. Several information extraction algorithms rely on the ontology to perform tasks such as Named Entity Recognition (NER) and Relation Extraction. | ||

NER involves locating and classifying biomedical entities into meaningful categories and assigning semantic representation to those entities. The NER methods can be broadly grouped into Dictionary-based, Rule-based and Statistical approaches. Relation extraction, on the other hand, is the process of determining relationships between the entities. This is accomplished mainly by identifying correlation between entities through analyzing frequency of terms, as well as rules defined by domain experts. Moreover, modern algorithms are also able to summarize large documents and answer natural language questions posed by humans. | NER involves locating and classifying biomedical entities into meaningful categories and assigning semantic representation to those entities. The NER methods can be broadly grouped into Dictionary-based, Rule-based, and Statistical approaches. NER tasks are challenging in the biomedical domain due to three key reasons: (1) There is a continuously growing volume of semantically related entities in the biomedical domain due to continuous scientific progress, so NER systems depend on dictionaries of terms which can never be complete; (2) There are often numerous names for the same concept in the biomedical domain, such as "heart attack" and "myocardial infarction"; and (3) Acronyms and abbreviations are frequently used which makes it complicated to identify the concepts these terms express. Note that Dictionary-based approaches are therefore reserved for the most advanced NER methods. | ||

Relation extraction, on the other hand, is the process of determining relationships between the entities. This is accomplished mainly by identifying the correlation between entities through analyzing the frequency of terms, as well as rules defined by domain experts. Moreover, modern algorithms are also able to summarize large documents and answer natural language questions posed by humans. | |||

Summarization is a common biomedical text mining task that largely utilizes information extraction tasks. The idea is to automatically identify significant aspects of documents and represent them in a coherent fashion. However, evaluating summarization methods is very difficult since deciding whether a summary is "good" is often subjective, although there are some automatic evaluation techniques for summaries such as ROUGE (Recall-Oriented Understudy for Gisting Evaluation), which compares automatically generated summaries with those created by humans. | |||

== Conclusion == | == Conclusion == | ||

This paper gave a holistic overview of the methods and applications of text mining, particularly its relevance in the biomedical domain. It highlights several popular algorithms along with their limitations. Because of ever-growing data, the interest in this field is massive and is bound to grow in the future. | This paper gave a holistic overview of the methods and applications of text mining, particularly its relevance in the biomedical domain. It highlights several popular algorithms and summarizes them along with their advantages, limitations and some potential situations where they could be used. Because of ever-growing data, for example, the very high volume of scientific literature being produced every year, the interest in this field is massive and is bound to grow in the future. | ||

== Critiques== | |||

This is a very detailed approach to introduce some different algorithms on text mining. Since many algorithms are given, it might be a good idea to compare their performances on text mining by training them on some text data and compare them to the former baselines, to see if there exists any improvement. | |||

It is a detailed summary of the techniques used in text mining. It would be more helpful if some datasets could be included for training and testing. The algorithms are grouped by topic, so different datasets and measurements would be required for each group. | |||

It would be better for the paper to include accuracy on the training and testing sets to support the claim that text mining is more efficient and effective than other techniques. Moreover, this paper mentions that the text mining approach can be used to extract high-quality information from videos. It is reasonable to believe that extracting from videos is much more difficult than from images and text. How is it possible to keep test accuracy at a good level for videos? | |||

Text mining can not only impact organizational processes, but also the ability to be competitive. Some common examples of its applications are risk management, cybercrime prevention, customer care service, and contextual advertising. | |||

Preprocessing is an important step in analyzing text, so it might be better to have more details about it. For example, what types of words are usually removed, and should we record the relative position of each word in the sentence? If one closely related passage is split into two sentences, how can we capture the relation between them? | |||

The authors could give more details on the applications of text mining in the healthcare and biomedical domain. For example, how could preprocessing, classification, clustering, and information extraction be applied to this domain? Beyond introducing existing algorithms (e.g. NER), the authors could provide more information about how they perform (with a sample dataset), what their limitations are, and how the different algorithms compare. | |||

In the preprocessing section, it seems like the authors incorrectly describe what stemming is - stemming just removes the last few letters of a word (ex. studying -> study, studies -> studi). What the authors actually describe is lemmatization which is much more informative than stemming. The down side of lemmatization is that it takes more effort to build a lemmatizer than a stemmer and even once it is built it is slow in comparison with a stemmer. | |||

One of the challenges of text mining in the biomedical field is that a lot of patient data are still in the form of paper documents. Text mining can speed up the digitization of patient data and allow for the development of disease diagnosis algorithms. It'll be interesting to see how text mining can be integrated with healthcare AI such as the doppelganger algorithm to enhance question answering accuracy. (Cresswell et al, 2018) | |||

It might be helpful if the authors discussed the accuracy of some text mining techniques in more depth, especially in the healthcare and biomedical domain, given the focus. It would be interesting if more information were provided about the level of accuracy needed in order to produce reliable and actionable information in such fields. Also, in these domains, sometimes a false negative could be more harmful than a false positive, such as a clinical misdiagnosis. It might be helpful to discuss a bit more about how to combat such issues in text mining. | |||

This is a survey paper that talks about many general aspects about text mining, without going into any specific one in detail. Overall it's interesting. My first feedback is on the "Information Retrieval" section of the paper. Hidden markov model is mentioned as one of the algorithms used for IR. Yet, hidden markov makes the strong assumption that given the current state, next state is independent of all the previous states. This is a very strong assumption to make in IR, as words in a sentence usually have a very strong connection to each other. This limitation should be discussed more extensively in the paper. Also, the overall structure of the paper seems to be a bit imbalanced. It solely talks about IR's application in biomedical sciences. Yet, IR has application in many different areas and subjects. | |||

This paper surveys through multiple methods and algorithms on test mining, more specifically, information extraction, test classification, and clustering. In the Information Extraction section, four possible methods are mentioned to deal with different examples of semantic texts. In the latest studies of machine learning, it is ubiquitous to see multiple methods or algorithms are combined together to achieve better performances. For a survey paper, it will be more interesting to see some connections between the four methods, and some insights such as how we can boost the accuracy of extracting precise information by combining 2 of the 4 methods together. | |||

It would be better discuss more applications and SoTA algorithms on each tasks. It just give an application in biomedical with NER, it is too simple. | |||

The summary is well-organized and gives enough information to first-time readers about text mining and different algorithms to model the data and predict using different classifiers. However, it would be better to add comparison between each classifier since the performance is important to know. | |||

This is a great informational summary, I don't have much critiques to give. But, I wanted to point out that many modern techniques ignore so many of these interesting data transformations and preprocessing steps, since the text in its raw form provides the most information for deep models to extract features from. Specifically, we can look at ULM-Fit (https://arxiv.org/abs/1801.06146) and BERT (https://arxiv.org/abs/1810.04805) and observe very little text preprocessing outside of tokenization, and simply allowing the model to learn the necessary features from a huge corpus. | |||

It might be better to explain more about Knowledge Discovery and Data Mining in the Introduction part, such as giving the definition and the comparison between them, so that the audience can understand text mining clearer. | |||

The paper and corresponding summary seems to be more breadth-focused and extremely high-level. I think this paper could've been taken a step further by including applications of the various algorithms. For example, the task of topic modelling which is highly customizable, and preprocessing which is dependent on the domain, can be accomplished using many approaches: transforming the processed texts to feature vectors using BERT or pre-trained Word2Vec; and then applying unsupervised learning methods such as LDA and clustering. Within the biomedical application mentioned, it might be of interest to look into BioBERT, which is trained on more domain-specific texts (https://koreauniv.pure.elsevier.com/en/publications/biobert-a-pre-trained-biomedical-language-representation-model-fo). | |||

The paper summary is great as it describes a very important topic in today's world and how technology must adapt to the vast increase in data creation. In particular, it is really cool to see how machine learning is used in a multi-disciplinary manner.

Text mining is described in this paper as a very compute-intensive process. Given "a 50 times growth since 2010" to 2020, it would be nice to have a method of scaling the data in this domain to be more prepared for an even bigger growth of data in the next decade. Thus it would have been nice if the researchers had included performance metrics (both computation and classification performance) of the system with the different classifiers described in this paper. Lastly, it would be nice to see comparisons of the ROUGE metrics for summarization that the researchers were able to achieve using the text mining techniques they introduced.

The topic is detailed. Many factors could affect the results of text mining, since writing habits, in terms of vocabulary and the way a person constructs sentences, can differ considerably. Also, in the Internet era new words are constantly being invented online, and some of them may be recorded in dictionaries as casual vocabulary. A good machine learning model should be able to keep learning new words as humans do and to correctly interpret text that uses literary devices such as metaphor, slang, and folklore.

This is a very detailed survey paper. It describes many classical models related to text mining. However, it might be good to at least mention some newer models as further reading, to give direction to readers who are new to this area.

The paper does a great job of introducing new models and classification techniques. Aside from performance metrics, what is even more interesting is how well each technique scales, since data mining is very often done on large, unfiltered data. The paper makes very little mention of how well these algorithms scale, and no mention of their performance on large-scale data. Perhaps a performance metric detailing exactly that is needed.

The paper introduces many common, entry-level machine learning algorithms used for text mining and goes only a shallow depth into the mathematics behind each. However, it does not show any concrete tests or examples to demonstrate the fitness or effectiveness of each algorithm, which leaves it a rather incomplete introduction.

The paper was well organized, keeping everything with similar intuition together within one section; however, visualizations of simple examples would have been helpful. For example, for SVM, a 2D illustration of margins and the decision boundary would have worked far better than explaining the projection of vectors onto hyperplanes to find the maximum distance/margin.

The paper is very math heavy, so it would not hurt to include some graphs to explain the models a little more. For instance, in the Probabilistic Clustering and Topic Models section, the LDA method was introduced. In the original paper, an LDA graphical model diagram was given. It vividly shows the whole structure of the model and how the components interact with one another.

== References ==

[1] Allahyari, M., Pouriyeh, S., Assefi, M., Safaei, S., Trippe, E. D., Gutierrez, J. B., & Kochut, K. (2017). A brief survey of text mining: Classification, clustering, and extraction techniques. arXiv preprint arXiv:1707.02919.

[2] Cresswell, K., Cunningham-Burley, S., & Sheikh, A. (2018). Healthcare robotics - a qualitative exploration of key challenges and future directions (Preprint). Journal of Medical Internet Research, 20. doi:10.2196/10410.

Latest revision as of 05:11, 15 December 2020

Presented by

Yawen Wang, Danmeng Cui, Zijie Jiang, Mingkang Jiang, Haotian Ren, Haris Bin Zahid

Introduction

This paper focuses on the different text mining techniques and the applications of text mining in the healthcare and biomedical domain. Text mining has become popular because of the large amount of text data available in different forms, from which patterns and trends can be derived by means such as statistical pattern learning. The volume of text data is bound to grow even more in 2020, indicating a 50 times growth since 2010. Text is unstructured information, which is easy for humans to construct and understand but difficult for machines. Hence, there is a need to design algorithms to effectively process this avalanche of text. To further explore the text mining field, the related text mining approaches can be considered. The different text mining approaches relate to two main methods: knowledge discovery and traditional data mining methods.

The authors note that knowledge discovery methods involve the application of different steps to a specific data set to extract specific patterns. Research in knowledge discovery methods has evolved over the years due to advances in hardware and software technology. Data mining, on the other hand, has experienced substantial development through the intersection of three fields: databases, machine learning, and statistics. As brought out by the authors, text mining approaches focus on extracting information from a specific text. The text explored may be structured, semi-structured, or unstructured. It is important to note that text mining covers different sets of algorithms and topics, including information retrieval, which are used for analyzing these different forms of text.

Text Representation and Encoding

The authors review multiple methods of preprocessing text, including four methods that prepare the text and help recognize the influence and frequency of individual words or groups of words in a document. Preprocessing is a key component of many text mining algorithms. It consists of different tasks that include tokenization, filtering, lemmatization, and stemming. The first step is tokenization, where a character sequence is broken down into words or phrases. After the breakdown, filtering is carried out to remove some words. The various inflected forms of a word are then grouped together through lemmatization, and finally the roots of derived words are obtained through stemming.

1. Tokenization

This process splits text (e.g. a sentence) into individual units, known as tokens, while removing unnecessary characters. Tokenization relies on identifying word boundaries, that is, the end of one word and the beginning of the next, which are usually separated by a space. Characters such as punctuation are removed and the text is split at space characters. An example of this would be converting the string "This is my string" to "This", "is", "my", "string".
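As a minimal sketch of this step, the snippet below tokenizes with a regular expression. The function name `tokenize` and the choice of `\w+` as the token pattern are assumptions made for illustration; real tokenizers handle hyphens, apostrophes, and language-specific rules more carefully.

```python
import re

def tokenize(text):
    """Split text into word tokens, dropping punctuation and whitespace."""
    # \w+ matches runs of letters, digits and underscores, so punctuation
    # and spaces act as token boundaries and are discarded.
    return re.findall(r"\w+", text)

tokens = tokenize("This is my string")
print(tokens)
```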

2. Filtering

Filtering is a process by which unnecessary words or characters are removed. Often these include punctuation, prepositions, and conjunctions. The resulting corpus then contains the words that are most important for distinguishing between classes. Filtering also includes removing words that occur very rarely and are possibly of no significant relevance, as well as words with relatively high frequency, which may carry little information for classification.
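A rough sketch of filtering on already tokenized documents is shown below. The stop-word list `STOP_WORDS`, the function name `filter_tokens`, and the `min_count` threshold are all hypothetical choices for illustration, not values taken from the paper.

```python
from collections import Counter

# Hypothetical stop-word list for illustration; real lists are much longer.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "in", "on", "to", "is"}

def filter_tokens(docs, min_count=2):
    """Drop stop words and words seen fewer than min_count times in the corpus."""
    counts = Counter(tok for doc in docs for tok in doc)
    return [
        [tok for tok in doc if tok not in STOP_WORDS and counts[tok] >= min_count]
        for doc in docs
    ]

docs = [
    ["the", "patient", "has", "a", "fever"],
    ["the", "fever", "of", "the", "patient", "persists"],
]
filtered = filter_tokens(docs)
print(filtered)
```

Only "patient" and "fever" survive here: the stop words are removed by the list, and the remaining words occur only once in this tiny corpus.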

3. Lemmatization

Lemmatization is the task that considers the morphological analysis of words, i.e. the various inflected forms of a word are converted to a single form. However, unlike in stemming (see below), we must specify the part of speech (POS) of each word, i.e. its role in the given sentence or document, which can be prone to human error. For example, "geese" and "goose" have the same lemma "goose", as they have the same meaning.

4. Stemming

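Stemming extracts the roots of words, typically by stripping suffixes. It is a language-dependent process. The goal of both stemming and lemmatization is to reduce inflectional and related (definition-wise) forms of a word to a common base form. An example of stemming would be reducing "connected", "connecting", and "connection" to "connect". Changing "am", "are", or "is" to "be", by contrast, cannot be done by stripping suffixes and requires lemmatization.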
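To contrast the two steps, here is a toy sketch of a suffix-stripping stemmer next to a dictionary-based lemmatizer. The suffix list `SUFFIXES` and the lookup table `LEMMAS` are hypothetical and far from complete; this is not the Porter stemmer or any published algorithm, only an illustration of the difference in mechanism.

```python
# Toy suffix list and lemma dictionary, both hypothetical and far from complete.
SUFFIXES = ("ing", "ion", "ed", "s")
LEMMAS = {"am": "be", "are": "be", "is": "be", "geese": "goose"}

def stem(word):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def lemmatize(word):
    """Look the word up in the lemma dictionary, falling back to the word itself."""
    return LEMMAS.get(word, word)

print([stem(w) for w in ("connected", "connecting", "connection")])
print(stem("geese"), lemmatize("geese"))
```

The stemmer leaves "geese" unchanged because no suffix rule applies, while the lemmatizer maps it to "goose" through its dictionary. This is the sense in which lemmatization needs more linguistic knowledge than stemming.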

Vector Space Model

In this section of the paper, the authors explore the different ways in which text can be represented across a large collection of documents. One common way of representing documents is as a bag of words, which considers only the occurrences of the different terms. In many text mining applications, documents are ranked and represented as vectors so as to capture the significance of each word. The authors note that the three basic models used are the vector space, inference network, and probabilistic models. The vector space model represents documents by converting them into vectors. In this model, a numeric weight is assigned to each word to indicate the importance of the word in the document.

Two main schemes are used for the weights, the Boolean model and the TF-IDF model. In the Boolean model, term [math]\displaystyle{ i }[/math] is assigned a positive weight [math]\displaystyle{ w_{ij} }[/math] if it appears in document [math]\displaystyle{ j }[/math]; otherwise, it is assigned a weight of 0.

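Term Frequency - Inverse Document Frequency (TF-IDF): the words are weighted using the TF-IDF scheme, computed as

$$ q(w)=f_d(w)*\log{\frac{|D|}{f_D(w)}} $$

where [math]\displaystyle{ f_d(w) }[/math] is the frequency of word [math]\displaystyle{ w }[/math] in document [math]\displaystyle{ d }[/math], [math]\displaystyle{ |D| }[/math] is the number of documents in the collection, and [math]\displaystyle{ f_D(w) }[/math] is the number of documents that contain [math]\displaystyle{ w }[/math].

The term frequency is thus scaled by the logarithm of the inverse document frequency, so that distinctive words that appear in only a few documents are recognized as important, while words that appear in most documents receive a low weight. Each document is represented by a vector of term weights, [math]\displaystyle{ \omega(d) = (\omega(d, w_1), \omega(d,w_2),...,\omega(d,w_v)) }[/math]. The similarity between two documents [math]\displaystyle{ d_1, d_2 }[/math] is commonly measured by cosine similarity: $$ S(d_1,d_2) = \cos(\theta) = \frac{d_1\cdot d_2}{\sqrt{\sum_{i=1}^vw^2_{1i}}\cdot\sqrt{\sum_{i=1}^vw^2_{2i}}} $$ where [math]\displaystyle{ w_{1i} = \omega(d_1, w_i) }[/math] and [math]\displaystyle{ w_{2i} = \omega(d_2, w_i) }[/math].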
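The weighting and similarity computations can be sketched in a few lines. This is a minimal illustration, assuming raw term counts for the term frequency, the natural logarithm, and the standard cosine with Euclidean norms in the denominator; the function names `tfidf_vectors` and `cosine_similarity` and the toy documents are made up for the example.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return the vocabulary and one TF-IDF weight vector per tokenized document."""
    vocab = sorted({tok for doc in docs for tok in doc})
    n_docs = len(docs)
    # f_D(w): number of documents that contain w.
    doc_freq = Counter(tok for doc in docs for tok in set(doc))
    vectors = []
    for doc in docs:
        term_freq = Counter(doc)  # f_d(w): occurrences of w in this document
        vectors.append(
            [term_freq[w] * math.log(n_docs / doc_freq[w]) for w in vocab]
        )
    return vocab, vectors

def cosine_similarity(u, v):
    """Dot product divided by the product of the Euclidean norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

docs = [
    ["heart", "attack", "risk"],
    ["heart", "attack", "treatment"],
    ["text", "mining", "survey"],
]
vocab, vectors = tfidf_vectors(docs)
print(cosine_similarity(vectors[0], vectors[1]))
print(cosine_similarity(vectors[0], vectors[2]))
```

The first two documents share two terms and get a positive similarity, while the first and third share none and get zero.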

Classification

Classification in text mining aims to assign predefined classes to text documents. For a set [math]\displaystyle{ \mathcal{D} = \{d_1, d_2, ... d_n\} }[/math] of documents, each [math]\displaystyle{ d_i }[/math] is mapped to a label [math]\displaystyle{ l_i }[/math] from the set [math]\displaystyle{ \mathcal{L} = \{l_1, l_2, ... l_k\} }[/math]. The goal is to find a classification model [math]\displaystyle{ f }[/math] such that: $$ f: \mathcal{D} \rightarrow \mathcal{L} \quad \quad \quad f(d) = l $$ The authors illustrate four different classifiers that are commonly used in text mining.

1. Naive Bayes Classifier

Bayes' rule is used to classify new examples by selecting the class that is most likely to have generated the example. The Naive Bayes Classifier models the distribution of documents in each class using a probabilistic model which assumes that the distributions of different terms are independent of each other. The models commonly used in this classifier try to find the posterior probability of a class based on this distribution. They assume that the documents are generated from a mixture model parameterized by [math]\displaystyle{ \theta }[/math] and compute the likelihood of a document as the sum of probabilities over all mixture components. In addition, the Naive Bayes Classifier can help get around the curse of dimensionality, which may arise with high-dimensional data such as text.
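As an illustrative sketch, the code below implements a multinomial Naive Bayes classifier with add-one smoothing on tokenized documents. The multinomial variant, the smoothing, the function names, and the toy labels "infection" and "injury" are assumptions chosen for the example; the paper discusses the model family in more general terms.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs, labels):
    """Estimate class counts and per-class word counts from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc)
    vocab = {tok for doc in docs for tok in doc}
    return class_counts, word_counts, vocab

def predict_naive_bayes(model, doc):
    """Return the class with the highest log posterior (up to a constant)."""
    class_counts, word_counts, vocab = model
    n_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        total = sum(word_counts[label].values())
        score = math.log(count / n_docs)  # log prior
        for tok in doc:
            if tok not in vocab:
                continue  # ignore words never seen in training
            # Add-one (Laplace) smoothing avoids zero probabilities.
            score += math.log((word_counts[label][tok] + 1) / (total + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

train_docs = [["fever", "cough"], ["cough", "flu"], ["fracture", "bone"], ["bone", "pain"]]
train_labels = ["infection", "infection", "injury", "injury"]
model = train_naive_bayes(train_docs, train_labels)
print(predict_naive_bayes(model, ["flu", "fever"]))
```

The independence assumption shows up in the inner loop: the score of a document is just the sum of per-word log probabilities.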

2. Nearest Neighbour Classifier

The Nearest Neighbour Classifier uses distance-based measures to perform the classification. Documents that belong to the same class are more likely to be "similar", or close to each other, under the similarity measure. The classification of a test document is inferred from the class labels of similar documents in the training set. K-Nearest Neighbor classification is well known to suffer from the "curse of dimensionality", as the proportional volume of each $d$-sphere surrounding each datapoint compared to the volume of the sample space shrinks exponentially in $d$.
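A small sketch of k-nearest-neighbour classification on bag-of-words counts follows. Cosine similarity, majority voting, the default `k=3`, and the names `cosine` and `knn_predict` are assumptions made for this illustration.

```python
import math
from collections import Counter

def cosine(c1, c2):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(c1[w] * c2[w] for w in c1)
    n1 = math.sqrt(sum(v * v for v in c1.values()))
    n2 = math.sqrt(sum(v * v for v in c2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0

def knn_predict(train_docs, train_labels, doc, k=3):
    """Label a document by majority vote among its k most similar training documents."""
    query = Counter(doc)
    scored = sorted(
        ((cosine(query, Counter(d)), label) for d, label in zip(train_docs, train_labels)),
        key=lambda pair: pair[0],
        reverse=True,
    )
    votes = Counter(label for _, label in scored[:k])
    return votes.most_common(1)[0][0]

train_docs = [
    ["fever", "cough"],
    ["cough", "flu"],
    ["flu", "fever", "chills"],
    ["fracture", "bone"],
    ["bone", "pain"],
]
train_labels = ["infection", "infection", "infection", "injury", "injury"]
print(knn_predict(train_docs, train_labels, ["fever", "flu"]))
```

Note that every prediction scans the whole training set, which is one practical cost of this classifier on large corpora.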

3. Decision Tree Classifier

A decision tree is a hierarchical tree of the training instances, in which a condition on the attribute value is used to divide the data hierarchically. The decision tree recursively partitions the training data set into smaller subdivisions based on a set of tests defined at each node or branch. Each node of the tree is a test of some attribute of the training instance, and each branch descending from the node corresponds to one of the values of this attribute. The conditions on the nodes are commonly defined by the terms in the text documents.

4. Support Vector Machines

SVM is a form of linear classifier, i.e. a model that makes a classification decision based on the value of a linear combination of the document's features. The output of a linear predictor is defined to be [math]\displaystyle{ y=\vec{a} \cdot \vec{x} + b }[/math], where [math]\displaystyle{ \vec{x} }[/math] is the normalized document word frequency vector, [math]\displaystyle{ \vec{a} }[/math] is a vector of coefficients, and [math]\displaystyle{ b }[/math] is a scalar. Support Vector Machines attempt to find linear separators between the various classes. An advantage of the SVM method is that it is robust to high dimensionality.
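The linear predictor itself is easy to sketch. The snippet below only evaluates the decision function for given coefficients; it does not train an SVM, and the weights, bias, and feature vectors are hypothetical numbers chosen for illustration.

```python
def linear_predict(weights, bias, features):
    """Return +1 or -1 from the sign of y = a . x + b."""
    y = sum(a * x for a, x in zip(weights, features)) + bias
    return 1 if y >= 0 else -1

# Hypothetical coefficients for a two-term vocabulary.
weights = [0.8, -0.5]
bias = -0.1
print(linear_predict(weights, bias, [1.0, 0.2]))
print(linear_predict(weights, bias, [0.1, 0.9]))
```

Training an SVM amounts to choosing the weights and bias so that the separating hyperplane has the largest margin between the classes.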

Clustering

Clustering has been extensively studied in the context of the text as it has a wide range of applications such as visualization and document organization.

Clustering algorithms are used to group similar documents and thus aid in information retrieval. Text clustering can be performed at different levels of granularity, where the items being clustered can be documents, paragraphs, sentences, or terms. Text data has numerous distinct characteristics that demand the design of text-specific algorithms for the task, so using a binary vector to represent a text document is simply not enough. Here are some unique properties of text representation:

1. Text representation has a large dimensionality, in which the size of the vocabulary from which the documents are drawn is massive, but a document might only contain a small number of words.

2. The words in the documents are usually correlated with each other, and this correlation needs to be taken into consideration when designing algorithms.

3. The number of words differs from one document to another. Thus the documents need to be normalized before the clustering process.

The three most commonly used text clustering algorithms are presented below.

1. Hierarchical Clustering algorithms

Hierarchical clustering algorithms build a group of clusters that can be depicted as a hierarchy of clusters. The hierarchy can be constructed top-down (divisive) or bottom-up (agglomerative). Hierarchical clustering algorithms are distance-based clustering algorithms, i.e., they use a similarity function to measure the closeness between text documents.

In the top-down approach, the algorithm begins with one cluster that includes all the documents and recursively splits this cluster into sub-clusters. The bottom-up approach instead builds the hierarchy from the individual elements by progressively merging clusters. As an example of bottom-up hierarchical clustering, suppose the data is to be clustered by Euclidean distance and we have six elements {a} {b} {c} {d} {e} and {f}. The first step determines which elements to merge into a cluster by taking the two closest elements, according to the chosen distance.

A main advantage of hierarchical clustering is that the algorithm only needs to be run once for any number of clusters (i.e., if an individual wishes to use a different number of clusters than originally intended, they do not need to repeat the algorithm).
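The bottom-up procedure on six elements can be sketched as follows. The coordinates assigned to a through f, the single-linkage rule for the distance between clusters, and the function name `agglomerative` are assumptions for this illustration; the summary does not specify them. For simplicity this sketch stops at a requested number of clusters instead of storing the full hierarchy.

```python
import math

def agglomerative(points, n_clusters):
    """Single-linkage bottom-up clustering of named points until n_clusters remain."""
    clusters = [[name] for name in points]

    def distance(c1, c2):
        # Single linkage: distance between the two closest members.
        return min(math.dist(points[p], points[q]) for p in c1 for q in c2)

    while len(clusters) > n_clusters:
        # Find the closest pair of clusters and merge it.
        i, j = min(
            ((i, j) for i in range(len(clusters)) for j in range(i + 1, len(clusters))),
            key=lambda ij: distance(clusters[ij[0]], clusters[ij[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return [sorted(c) for c in clusters]

# Hypothetical 2D coordinates for the six elements.
points = {
    "a": (0, 0), "b": (0, 1),
    "c": (5, 5), "d": (5, 6),
    "e": (10, 0), "f": (10, 1.5),
}
print(agglomerative(points, 3))
```

The first merges join the closest pairs, here a with b and c with d, exactly as in the first step described above.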

2. k-means Clustering

k-means clustering is a partitioning algorithm that partitions n documents in the context of text data into k clusters.

Input: Document set D, similarity measure S, number k of clusters

Output: Set of k clusters

Select k datapoints at random as starting centroids

While not converged do

    Assign each document to the centroid with the closest similarity

    Recalculate the cluster centroids for all clusters

return k clusters

The main disadvantage of k-means clustering is that it is very sensitive to the initial choice of the number of clusters k. Also, since the algorithm is run until the clusters converge, k-means clustering can take longer to perform than hierarchical clustering. On the other hand, the advantages of k-means clustering are that it is simple to implement, the algorithm scales well to large datasets, and the results are easily interpretable.
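The pseudocode above can be turned into a short runnable sketch. Note the swap: this version uses Euclidean distance on numeric tuples in place of a general similarity measure S, and the function name `kmeans`, the `max_iter` cap, and the fixed `seed` are assumptions for illustration. In a text setting the points would be document vectors such as TF-IDF weights.

```python
import math
import random

def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm with Euclidean distance on a list of numeric tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random starting centroids
    assignment = None
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for every point.
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break  # converged: no point changed cluster
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(xs) / len(members) for xs in zip(*members))
    return assignment, centroids

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
assignment, centroids = kmeans(points, k=2)
print(assignment)
```

Because the starting centroids are random, different seeds can give different cluster indices, and on less clearly separated data they can give different partitions as well.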

3. Probabilistic Clustering and Topic Models

Topic modeling is one of the most popular probabilistic clustering algorithms in recent studies. The main idea is to create a *probabilistic generative model* for the corpus of text documents. In topic models, documents are a mixture of topics, where each topic represents a probability distribution over words.

There are two main topic models:

- Probabilistic Latent Semantic Analysis (pLSA)

- Latent Dirichlet Allocation (LDA)

The paper covers LDA in more detail. LDA is a state-of-the-art unsupervised algorithm for extracting topics from a collection of documents.

Let [math]\displaystyle{ \mathcal{D} = \{d_1, d_2, \cdots, d_{|\mathcal{D}|}\} }[/math] be the corpus and [math]\displaystyle{ \mathcal{V} = \{w_1, w_2, \cdots, w_{|\mathcal{V}|}\} }[/math] be the vocabulary of the corpus.

A topic [math]\displaystyle{ z_j, 1 \leq j \leq K }[/math], is a multinomial probability distribution over the [math]\displaystyle{ |\mathcal{V}| }[/math] words.

The distribution of words in a given document is:

[math]\displaystyle{ p(w_i|d) = \sum_{j=1}^K p(w_i|z_j)p(z_j|d) }[/math]

The LDA assumes the following generative process for the corpus of [math]\displaystyle{ \mathcal{D} }[/math]

- For each topic [math]\displaystyle{ k\in \{1,2,\cdots, K\} }[/math], sample a word distribution [math]\displaystyle{ \phi_k \sim Dir(\beta) }[/math]

- For each document [math]\displaystyle{ d \in \{1,2,\cdots,D\} }[/math]

  - Sample a topic distribution [math]\displaystyle{ \theta_d \sim Dir(\alpha) }[/math]

  - For each word [math]\displaystyle{ w_n, n \in \{1,2,\cdots,N\} }[/math] in document [math]\displaystyle{ d }[/math]

    - Sample a topic [math]\displaystyle{ z_n \sim Mult(\theta_d) }[/math]

    - Sample a word [math]\displaystyle{ w_n \sim Mult(\phi_{z_n}) }[/math]

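Inference and parameter estimation can be done by computing the posterior of the hidden variables given the observed words:

$$P(\phi_{1:K}, \theta_{1:D}, z_{1:D}|w_{1:D})= \dfrac{P(\phi_{1:K}, \theta_{1:D}, z_{1:D}, w_{1:D})}{P(w_{1:D})}$$

A Gibbs sampler may be used to approximate the posterior over the topic assignments for every word:

$$P(z_i = k| w_i=w, z_{-i}, w_{-i}, \alpha, \beta) \propto \frac{n_{k, -i}^{(d)}+\alpha}{\sum_{k'=1}^K n_{k', -i}^{(d)}+K\alpha} \times \frac{n_{w, -i}^{(k)} + \beta}{\sum_{w'=1}^W n_{w', -i}^{(k)}+W\beta}$$

Here [math]\displaystyle{ n_{k, -i}^{(d)} }[/math] is the number of words in document [math]\displaystyle{ d }[/math] assigned to topic [math]\displaystyle{ k }[/math], and [math]\displaystyle{ n_{w, -i}^{(k)} }[/math] is the number of times word [math]\displaystyle{ w }[/math] is assigned to topic [math]\displaystyle{ k }[/math], both counted without the current word [math]\displaystyle{ i }[/math]; [math]\displaystyle{ W }[/math] is the size of the vocabulary.

In practice, LDA is often used as a module in more complicated models and has already been applied to a wide variety of domains. In addition, many variations of LDA have been created, including supervised LDA (sLDA) and hierarchical LDA (hLDA).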
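The generative process that LDA assumes can be simulated directly, which may help make the roles of the topic-word and document-topic distributions concrete. This is a sketch of sampling from the model, not of inference; the function names, the symmetric Dirichlet parameters `alpha=0.5` and `beta=0.5`, the fixed document length, and the toy vocabulary are all assumptions for illustration.

```python
import random

def sample_dirichlet(rng, alpha, size):
    """Draw from a symmetric Dirichlet by normalizing Gamma(alpha, 1) samples."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]

def generate_corpus(vocab, n_topics, n_docs, doc_len, alpha=0.5, beta=0.5, seed=0):
    """Sample a synthetic corpus by following the LDA generative process."""
    rng = random.Random(seed)
    # One word distribution phi_k per topic.
    phi = [sample_dirichlet(rng, beta, len(vocab)) for _ in range(n_topics)]
    corpus = []
    for _ in range(n_docs):
        theta = sample_dirichlet(rng, alpha, n_topics)  # topic mixture of this document
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(n_topics), weights=theta)[0]  # topic of this word
            w = rng.choices(vocab, weights=phi[z])[0]  # word drawn from that topic
            doc.append(w)
        corpus.append(doc)
    return corpus

vocab = ["gene", "protein", "cell", "patient", "dose", "trial"]
corpus = generate_corpus(vocab, n_topics=2, n_docs=3, doc_len=8)
for doc in corpus:
    print(doc)
```

Inference runs this process in reverse: given only the words, it estimates which topic mixtures and topic-word distributions are likely to have produced them.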

Information Extraction

Information Extraction (IE) is the process of extracting useful, structured information from unstructured or semi-structured text. The extraction is automatic and is driven by what we ask for, i.e. the kinds of entities or relations we specify.

For example, from the sentence “XYZ company was founded by Peter in the year of 1950”, we can identify the two following relations:

1. Founderof(Peter, XYZ)

2. Foundedin(1950, XYZ)
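For this one sentence shape, the two relations can be pulled out with a hand-written pattern. The regular expression `PATTERN` and the function `extract_relations` are hypothetical and only cover sentences phrased like the example; practical systems learn such extractors or use far richer rule sets.

```python
import re

# Hypothetical pattern that only covers sentences shaped like the example.
PATTERN = re.compile(
    r"(?P<org>\w+) company was founded by (?P<founder>\w+) in the year of (?P<year>\d{4})"
)

def extract_relations(sentence):
    """Return (relation, argument, organization) triples found in the sentence."""
    match = PATTERN.search(sentence)
    if match is None:
        return []
    return [
        ("Founderof", match["founder"], match["org"]),
        ("Foundedin", match["year"], match["org"]),
    ]

relations = extract_relations("XYZ company was founded by Peter in the year of 1950")
print(relations)
```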

IE is a crucial step in data mining and has a broad variety of applications, such as web mining and natural language processing. Among all the IE tasks, two have become increasingly important: named entity recognition and relation extraction.

The authors discuss four topics that are important for information extraction:

1. Named Entity Recognition(NER)

This is the process of identifying real-world entities in free text, such as "Apple Inc.", "Donald Trump", "PlayStation 5" etc. Moreover, the task is to identify the category of each entity: for example, "Apple Inc." is in the category of companies, "Donald Trump" is in the category of USA presidents, and "PlayStation 5" is in the category of entertainment systems.

2. Hidden Markov Model

Since traditional probabilistic classification does not consider the predicted labels of neighboring words, the Hidden Markov Model is used for information extraction. This model is different because it assumes that the label of one word depends on the label of the previous word, and that each observed word depends on its own label. Given a sequence of labels [math]\displaystyle{ Y= (y_1, y_2, \cdots, y_n) }[/math] and a sequence of observations [math]\displaystyle{ X= (x_1, x_2, \cdots, x_n) }[/math], the Hidden Markov Model gives

[math]\displaystyle{ y_i \sim p(y_i|y_{i-1}) \qquad x_i \sim p(x_i|y_i) }[/math]
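To show how such a model is used for labeling, here is a sketch of Viterbi decoding, assuming the standard HMM factorization in which each label depends on the previous label and each observed word depends on its own label. The label set ("PER", "O") and all probabilities are made-up numbers for illustration, not values from the paper.

```python
import math

def viterbi(observations, states, start_p, trans_p, emit_p):
    """Most likely label sequence under an HMM, computed in log space."""
    def log(p):
        return math.log(p) if p > 0 else -math.inf

    # best[i][s]: log probability of the best path ending in state s at position i.
    best = [{s: log(start_p[s]) + log(emit_p[s].get(observations[0], 0)) for s in states}]
    back = []
    for obs in observations[1:]:
        scores, pointers = {}, {}
        for s in states:
            prev = max(states, key=lambda r: best[-1][r] + log(trans_p[r][s]))
            scores[s] = best[-1][prev] + log(trans_p[prev][s]) + log(emit_p[s].get(obs, 0))
            pointers[s] = prev
        best.append(scores)
        back.append(pointers)
    # Follow the back-pointers from the best final state.
    state = max(states, key=lambda s: best[-1][s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    return path[::-1]

# Hypothetical parameters: PER marks a person name, O marks any other word.
states = ("PER", "O")
start_p = {"PER": 0.3, "O": 0.7}
trans_p = {"PER": {"PER": 0.5, "O": 0.5}, "O": {"PER": 0.2, "O": 0.8}}
emit_p = {
    "PER": {"Peter": 0.8, "founded": 0.05, "XYZ": 0.15},
    "O": {"Peter": 0.05, "founded": 0.7, "XYZ": 0.25},
}
labels = viterbi(["Peter", "founded", "XYZ"], states, start_p, trans_p, emit_p)
print(labels)
```

With these numbers, "Peter" is labeled PER and the other two words are labeled O, because the transition probabilities make staying in O more likely than returning to PER.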

3. Conditional Random Fields

This is a technique that is widely used in information extraction. Its definition is related to graph theory. Let G = (V, E) be a graph and let [math]\displaystyle{ Y = (Y_v)_{v \in V} }[/math] be indexed by the vertices of G. Then (X, Y) is a conditional random field when the random variables [math]\displaystyle{ Y_v }[/math], conditioned on X, obey the Markov property with respect to the graph: [math]\displaystyle{ p(Y_v |X, Y_w ,w \neq v) = p(Y_v |X, Y_w ,w \sim v) }[/math], where [math]\displaystyle{ w \sim v }[/math] means w and v are neighbors in G.

4. Relation Extraction

This is the task of finding semantic relationships between word entities in text documents, for example in a sentence such as "Seth Curry is the brother of Stephen Curry". If a document includes these two names, the task is to identify the relationship between the two entities. There are currently numerous techniques for relation extraction, but the most common is to treat it as a classification problem: given two entities that occur in a sentence, classify their relation into one of a fixed set of relation types.

Biomedical Application

Text mining has several applications in the domain of biomedical sciences. The explosion of academic literature in the field has made it quite hard for scientists to keep up with novel research. This is why text mining techniques are ever so important in making the knowledge digestible.

The text mining techniques are able to extract meaningful information from large data by making use of biomedical ontology, which is a compilation of a common set of terms used in an area of knowledge. The Unified Medical Language System (UMLS) is the most comprehensive such resource, consisting of definitions of biomedical jargon. Several information extraction algorithms rely on the ontology to perform tasks such as Named Entity Recognition (NER) and Relation Extraction.

NER involves locating and classifying biomedical entities into meaningful categories and assigning semantic representation to those entities. The NER methods can be broadly grouped into Dictionary-based, Rule-based, and Statistical approaches. NER tasks are challenging in the biomedical domain due to three key reasons: (1) There is a continuously growing volume of semantically related entities in the biomedical domain due to continuous scientific progress, so NER systems depend on dictionaries of terms which can never be complete; (2) There are often numerous names for the same concept in the biomedical domain, such as "heart attack" and "myocardial infarction"; and (3) Acronyms and abbreviations are frequently used which makes it complicated to identify the concepts these terms express. Note that Dictionary-based approaches are therefore reserved for the most advanced NER methods.
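A dictionary-based recognizer, the simplest of the three approaches, can be sketched as a lookup of known terms. The mini-dictionary `DICTIONARY`, the concept identifiers, and the function `dictionary_ner` are hypothetical; a real system would draw its terms from a resource such as UMLS and handle overlapping and repeated matches.

```python
# Hypothetical mini-dictionary; real systems draw on resources such as UMLS.
DICTIONARY = {
    "heart attack": "MYOCARDIAL_INFARCTION",
    "myocardial infarction": "MYOCARDIAL_INFARCTION",
    "aspirin": "ASPIRIN",
}

def dictionary_ner(text):
    """Return (surface form, concept) pairs for dictionary terms found in the text."""
    lowered = text.lower()
    found = []
    for term, concept in DICTIONARY.items():
        start = lowered.find(term)  # only the first occurrence of each term
        if start != -1:
            found.append((start, text[start:start + len(term)], concept))
    # Report matches in the order they occur in the text.
    return [(surface, concept) for _, surface, concept in sorted(found)]

print(dictionary_ner("Aspirin after a heart attack"))
print(dictionary_ner("History of myocardial infarction"))
```

Both synonyms map to the same concept, which addresses reason (2) above, but any term missing from the dictionary is silently skipped, which is the incompleteness described in reason (1).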

Relation extraction, on the other hand, is the process of determining relationships between the entities. This is accomplished mainly by identifying the correlation between entities through analyzing the frequency of terms, as well as rules defined by domain experts. Moreover, modern algorithms are also able to summarize large documents and answer natural language questions posed by humans.

Summarization is a common biomedical text mining task that largely utilizes information extraction tasks. The idea is to automatically identify significant aspects of documents and represent them in a coherent fashion. However, evaluating summarization methods is very difficult, since deciding whether a summary is "good" is often subjective. There are nonetheless some automatic evaluation techniques for summaries, such as ROUGE (Recall-Oriented Understudy for Gisting Evaluation), which compares automatically generated summaries with those created by humans.
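As a rough illustration of the idea behind ROUGE, the snippet below computes unigram recall (often called ROUGE-1 recall) between a generated summary and a human reference. Whitespace tokenization, lowercasing, and the function name `rouge1_recall` are simplifying assumptions; the official ROUGE toolkit includes further variants and preprocessing options.

```python
from collections import Counter

def rouge1_recall(candidate, reference):
    """Fraction of reference unigrams that also appear in the candidate (clipped counts)."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    overlap = sum(min(count, cand_counts[w]) for w, count in ref_counts.items())
    total = sum(ref_counts.values())
    return overlap / total if total else 0.0

reference = "the patient had a heart attack"
candidate = "patient had heart attack"
score = rouge1_recall(candidate, reference)
print(score)
```

Four of the six reference words are recovered here, so the recall is 4/6.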

Conclusion

This paper gave a holistic overview of the methods and applications of text mining, particularly its relevance in the biomedical domain. It highlights several popular algorithms and summarizes them along with their advantages, limitations and some potential situations where they could be used. Because of ever-growing data, for example, the very high volume of scientific literature being produced every year, the interest in this field is massive and is bound to grow in the future.

Critiques

This is a very detailed approach to introduce some different algorithms on text mining. Since many algorithms are given, it might be a good idea to compare their performances on text mining by training them on some text data and compare them to the former baselines, to see if there exists any improvement.

It is a detailed summary of the techniques used in text mining. It would be more helpful if some datasets could be included for training and testing. The algorithms are grouped by topic, so different datasets and measurements would be required for each group.

It would be better for the paper to include accuracy on the training and test sets to support the claim that text mining is more efficient and effective than other techniques. Moreover, this paper mentioned that the text mining approach can be used to extract high-quality information from videos. Extracting information from videos is believed to be much more difficult than from images and text. How is it possible to keep test accuracy at a good level for videos?

Text mining can not only impact organizational processes, but also the ability to be competitive. Some common examples of its applications are risk management, cybercrime prevention, customer care service, and contextual advertising.

Preprocessing is an important step in analyzing text, so it might be better to have more details about it. For example, what types of words are usually removed, and should we record the relative position of each word in the sentence? If one closely related sentence were split into two sentences, how can we capture their relation?

The authors could give more details on the applications of text mining in the healthcare and biomedical domain. For example, how could the preprocessing, classification, clustering, and information extraction processes be applied to this domain? Other than introducing existing algorithms (e.g. NER), the authors could provide more information about how they perform (with a sample dataset), what their limitations are, and comparisons among different algorithms.

In the preprocessing section, it seems like the authors incorrectly describe what stemming is: stemming just removes the last few letters of a word (e.g. studying -> study, studies -> studi). What the authors actually describe is lemmatization, which is much more informative than stemming. The downside of lemmatization is that it takes more effort to build a lemmatizer than a stemmer, and even once it is built it is slow in comparison with a stemmer.

One of the challenges of text mining in the biomedical field is that a lot of patient data are still in the form of paper documents. Text mining can speed up the digitization of patient data and allow for the development of disease diagnosis algorithms. It'll be interesting to see how text mining can be integrated with healthcare AI such as the doppelganger algorithm to enhance question answering accuracy. (Cresswell et al, 2018)

It might be helpful if the authors discuss more about the accuracy-wise performances of some text mining techniques, especially in the healthcare and biomedical domain, given the focus. It would be interesting if more information were provided about the level of accuracy needed in order to produce reliable and actionable information in such fields. Also, in these domains, sometimes a false negative could be more harmful than a false positive, such as a clinical misdiagnosis. It might be helpful to discuss a bit more about how to combats such issues in text mining.

This is a survey paper that talks about many general aspects about text mining, without going into any specific one in detail. Overall it's interesting. My first feedback is on the "Information Retrieval" section of the paper. Hidden markov model is mentioned as one of the algorithms used for IR. Yet, hidden markov makes the strong assumption that given the current state, next state is independent of all the previous states. This is a very strong assumption to make in IR, as words in a sentence usually have a very strong connection to each other. This limitation should be discussed more extensively in the paper. Also, the overall structure of the paper seems to be a bit imbalanced. It solely talks about IR's application in biomedical sciences. Yet, IR has application in many different areas and subjects.

This paper surveys multiple methods and algorithms in text mining, more specifically information extraction, text classification, and clustering. In the Information Extraction section, four possible methods are mentioned for dealing with different examples of semantic texts. In recent machine learning studies, it is very common to see multiple methods or algorithms combined to achieve better performance. For a survey paper, it would be more interesting to see some connections drawn between the four methods, along with some insights, such as how the accuracy of extracting precise information could be boosted by combining two of the four methods.

It would be better to discuss more applications and SoTA algorithms for each task. The paper gives only one application, NER in the biomedical domain, which is too simple.

The summary is well-organized and gives first-time readers enough information about text mining and the different algorithms used to model the data and make predictions with different classifiers. However, it would be better to add a comparison between the classifiers, since their relative performance is important to know.

This is a great informational summary, and I don't have many critiques to give. However, I wanted to point out that many modern techniques skip most of these interesting data transformations and preprocessing steps, since text in its raw form provides the most information for deep models to extract features from. Specifically, we can look at ULMFiT (https://arxiv.org/abs/1801.06146) and BERT (https://arxiv.org/abs/1810.04805) and observe very little text preprocessing outside of tokenization; the model is simply allowed to learn the necessary features from a huge corpus.

It might be better to explain more about Knowledge Discovery and Data Mining in the Introduction, for example by giving their definitions and a comparison between them, so that the audience can understand text mining more clearly.

The paper and the corresponding summary seem to be breadth-focused and extremely high-level. I think this paper could have been taken a step further by including applications of the various algorithms. For example, topic modelling, which is highly customizable, and preprocessing, which depends on the domain, can be accomplished using many approaches: transforming the processed texts to feature vectors using BERT or pre-trained Word2Vec, and then applying unsupervised learning methods such as LDA and clustering. Within the biomedical application mentioned, it might be of interest to look into BioBERT, which is trained on more domain-specific texts (https://koreauniv.pure.elsevier.com/en/publications/biobert-a-pre-trained-biomedical-language-representation-model-fo).

The paper summary is great, as it describes a very important topic in today's world and how technology must adapt to the vast increase in data creation. In particular, it is really cool to see how machine learning is used in a multi-disciplinary manner.

Text mining is described in this paper as a very compute-intensive process. Given "a 50 times growth since 2010" up to 2020, it would be nice to have a method of scaling to the data in this domain, to be better prepared for even bigger growth in the next decade. It would therefore have been nice if the researchers had included performance metrics (both computational and classification performance) for the system with the different classifiers described in this paper. Lastly, it would be nice to see a comparison of the ROUGE scores for summarization that the researchers were able to achieve using the text mining techniques they describe.
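As a reminder of what such a comparison would report, here is a minimal sketch of ROUGE-1, the unigram-overlap variant of ROUGE. It assumes simple lowercased whitespace tokenization and is not a replacement for an established ROUGE implementation; the example sentences are invented.

```python
from collections import Counter


def rouge_1(candidate: str, reference: str):
    """Return (precision, recall, F1) of unigram overlap.

    Overlap counts are clipped: a word is matched at most as many
    times as it appears in the reference.
    """
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand_counts & ref_counts).values())
    cand_total = sum(cand_counts.values())
    ref_total = sum(ref_counts.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return precision, recall, f1


# Invented example: five of the six candidate words appear in the reference.
print(rouge_1("the cat lay on the mat", "the cat sat on the mat"))
```

Reporting scores of this kind per summarization method, alongside running time, would address both the quality and the scalability questions raised above.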

The topic is covered in detail. Many factors could affect the results of text mining, since writing habits, in terms of vocabulary and the way a person constructs sentences, can differ considerably. Also, in the Internet era, new words are constantly being coined online, and some of them may end up recorded in dictionaries as informal vocabulary. A good machine learning model should be able to keep learning new words as humans do, and to correctly interpret text that uses literary devices and informal language such as metaphors, slang, and folklore.

This is a very detailed survey paper. It describes many classical models related to text mining. However, it might be good to at least mention some newer models as further reading, to give direction to readers who are new to this area.

The paper does a great job of introducing new models and classification techniques. Aside from performance metrics, what is even more interesting is how well each technique scales, since data mining is very often applied to large, unfiltered data. The paper makes very little mention of how well these algorithms scale, and no mention of their performance on large-scale data. Perhaps a performance metric detailing exactly that is needed.

The paper introduces many common, entry-level machine learning algorithms used for text mining and goes only briefly into the mathematics behind each one. However, it does not show any concrete tests or examples to demonstrate the fitness or effectiveness of each algorithm, which leaves it a rather incomplete introduction.

The paper is well-organized, keeping everything with a similar intuition together within one section; however, visualizations of simple examples would have been helpful. For example, for SVM, a 2D illustration of the margins and decision boundary would have worked far better than explaining the projection of vectors onto hyperplanes to find the maximum distance (margin).
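Even without a figure, a small numeric example in 2D conveys the same geometry. The sketch below assumes a linear SVM with decision boundary w·x + b = 0 and margin boundaries w·x + b = ±1; the weight vector, bias, and points are made up for illustration and do not come from the paper.

```python
import math


def signed_distance(w, b, x):
    """Signed Euclidean distance from 2D point x to the line w.x + b = 0."""
    norm = math.hypot(w[0], w[1])
    return (w[0] * x[0] + w[1] * x[1] + b) / norm


def margin_width(w):
    """Distance between the two margin lines w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.hypot(w[0], w[1])


# Hypothetical separator and two hypothetical points.
w, b = (3.0, 4.0), -5.0
print("margin width:", margin_width(w))
print("distance of (3, 4):", signed_distance(w, b, (3.0, 4.0)))
print("distance of (0, 0):", signed_distance(w, b, (0.0, 0.0)))
```

The sign of the distance gives the predicted side of the boundary, and maximizing the margin amounts to making the norm of w as small as the constraints allow. That is the relationship a 2D plot would show at a glance.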

The paper is very math-heavy, so it wouldn't hurt to include some diagrams to explain the models a little more. For instance, the LDA method is introduced in the Probabilistic Clustering and Topic Models section. The original paper gives a graphical model diagram for LDA, which vividly shows the whole structure of the model and how the components interact with one another.

References

[1] Allahyari, M., Pouriyeh, S., Assefi, M., Safaei, S., Trippe, E. D., Gutierrez, J. B., & Kochut, K. (2017). A brief survey of text mining: Classification, clustering, and extraction techniques. arXiv preprint arXiv:1707.02919.

[2] Cresswell, K., Cunningham-Burley, S., & Sheikh, A. (2018). Healthcare robotics - a qualitative exploration of key challenges and future directions (Preprint). Journal of Medical Internet Research, 20. doi:10.2196/10410.