Influenza Forecasting Framework based on Gaussian Processes: Difference between revisions

| (56 intermediate revisions by 20 users not shown) | |||

| Line 2: | Line 2: | ||

== Abstract == | == Abstract == | ||

This paper presents a novel framework for seasonal epidemic forecasting using Gaussian process regression. | This paper presents a novel framework for seasonal epidemic forecasting using Gaussian process regression. Compared with other state-of-the-art models, the new novel framework introduced in this paper shows accurate retrospective forecasts through training a subset of the CDC influenza-like-illness (ILI) dataset using the official CDC scoring rule (log-score). | ||

== Background == | == Background == | ||

Each year, the seasonal influenza epidemic affects public health | Each year, the seasonal influenza epidemic affects public health systems across the globe on a massive scale. In 2019-2020 alone, the United States reported 38 million cases, 400 000 hospitalizations, and 22 000 deaths [1]. A direct result of this is the need for reliable forecasts of future influenza development because they allow for improved public health policies and informed resource development and allocation. Many statistical methods have been developed to use data from the CDC and other real-time data sources, such as Google Trends to forecast influenza activities. | ||

Given the process of data collection and surveillance lag, accurate statistics for influenza warning systems are often delayed by some margin of time, making early prediction imperative. However, there are challenges in long-term epidemic forecasting. First, the temporal dependency is hard to capture with short-term input data. Without manually added seasonal trends, most statistical models fail to provide high accuracy. Second, the influence from other locations has not been exhaustively explored with limited data input. Spatio-temporal effects would therefore require adequate data sources to achieve good performance. | Given the process of data collection and surveillance lag, accurate statistics for influenza warning systems are often delayed by some margin of time, making early prediction imperative. However, there are challenges in long-term epidemic forecasting. First, the temporal dependency is hard to capture with short-term input data. Without manually added seasonal trends, most statistical models fail to provide high accuracy. Second, the influence from other locations has not been exhaustively explored with limited data input. Spatio-temporal effects would therefore require adequate data sources to achieve good performance. | ||

| Line 12: | Line 12: | ||

== Related Work == | == Related Work == | ||

Given the value of epidemic forecasts, the CDC regularly publishes ILI data and has funded a seasonal ILI forecasting challenge. This challenge has | Given the value of epidemic forecasts, the CDC regularly publishes ILI data and has funded a seasonal ILI forecasting challenge. This challenge has led to four state-of-the-art models in the field: Historical averages, a method using the counts of past seasons to build a kernel density estimate; MSS, a physical susceptible-infected-recovered model with assumed linear noise [5]; SARIMA, a framework based on seasonal auto-regressive moving average models [2]; and LinEns, an ensemble of three linear regression models. | ||

One disadvantage of forecasting is that the publication of CDC official ILI data is usually delayed by 1-3 weeks. Therefore, several attempts to use indirect web-based data to gain more timely estimates of CDC’s ILI, which is also known as nowcasting. The forecasting framework introduced in this paper can use those nowcasting approaches to receive a more timely data stream. | |||

== Motivation == | == Motivation == | ||

| Line 23: | Line 25: | ||

\[\mu(x) = k(x, X)^T(K_n+\sigma^2I)^{-1}Y\] | \[\mu(x) = k(x, X)^T(K_n+\sigma^2I)^{-1}Y\] | ||

\[\sigma(x) = k^{**}(x,x)-k(x,X)^T(K_n+\sigma^2I)^{-1}k(x,X)\] | \[\sigma(x) = k^{**}(x,x)-k(x,X)^T(K_n+\sigma^2I)^{-1}k(x,X)\] | ||

where | where <math>K_n</math> is the covariance matrix and for the kernel, it is being specified that it will use the Gaussian kernel. | ||

Consider the following set up: let <math>X = [\mathbf{x}_1,\ldots,\mathbf{x}_n]</math> <math>(d\times n)</math> be your training data, <math>\mathbf{y} = [y_1,y_2,\ldots,y_n]^T</math> be your noisy observations where <math>y_i = f(\mathbf{x}_i) + \epsilon_i</math>, <math>(\epsilon_i:i = 1,\ldots,n)</math> i.i.d. <math>\sim \mathcal{N}(0,{\sigma}^2)</math>, and <math>f</math> is the trend we are trying to model (by <math>\hat{f}</math>). Let <math>\mathbf{x}^*</math> <math>(d\times 1)</math> be your test data point, and <math>\hat{y} = \hat{f}(\mathbf{x}^*)</math> be your predicted outcome. | Consider the following set up: let <math>X = [\mathbf{x}_1,\ldots,\mathbf{x}_n]</math> <math>(d\times n)</math> be your training data, <math>\mathbf{y} = [y_1,y_2,\ldots,y_n]^T</math> be your noisy observations where <math>y_i = f(\mathbf{x}_i) + \epsilon_i</math>, <math>(\epsilon_i:i = 1,\ldots,n)</math> i.i.d. <math>\sim \mathcal{N}(0,{\sigma}^2)</math>, and <math>f</math> is the trend we are trying to model (by <math>\hat{f}</math>). Let <math>\mathbf{x}^*</math> <math>(d\times 1)</math> be your test data point, and <math>\hat{y} = \hat{f}(\mathbf{x}^*)</math> be your predicted outcome. | ||

| Line 37: | Line 39: | ||

$$ | $$ | ||

k(\mathbf{x}_i,\mathbf{x}_j) = \sigma^2\exp(-\frac{1}{2}(\mathbf{x}_i-\mathbf{x} | k(\mathbf{x}_i,\mathbf{x}_j) = \sigma^2\exp(-\frac{1}{2}(\mathbf{x}_i-\mathbf{x}_j)^T\Sigma(\mathbf{x}_i-\mathbf{x}_j)). | ||

$$ | $$ | ||

| Line 51: | Line 53: | ||

<div style="text-align:center;"> [[File:GPRegression.png|500px]] </div> | <div style="text-align:center;"> [[File:GPRegression.png|500px]] </div> | ||

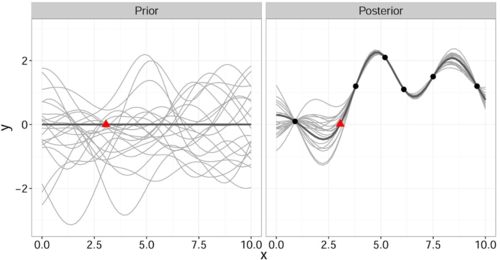

<div align="center">'''Figure 1. Gaussian process regression''': Select the functions from your joint prior distribution (left, grey curves) with mean <math>0</math> (left, bold line) that agree with the observed data points (right, black bullets). These form your posterior distribution (right, grey curves) with mean <math>\mu(\mathbf{x})</math> (right, bold line). Red triangle helps compare the two images (location marker) [ | <div align="center">'''Figure 1. Gaussian process regression''': | ||

<div align="center"> | |||

Select the functions from your joint prior distribution (left, grey curves) with mean <math>0</math> (left, bold line) that agree with the observed data points (right, black bullets). </div> | |||

<div align="center">These form your posterior distribution (right, grey curves) with mean <math>\mu(\mathbf{x})</math> (right, bold line). Red triangle helps compare the two images (location marker) [4]. </div> | |||

</div> | |||

== Data-set == | == Data-set == | ||

| Line 61: | Line 67: | ||

== Proposed Framework == | == Proposed Framework == | ||

To compute <math>\hat{y}</math>, the following algorithm is executed | To compute <math>\hat{y}</math>, the following algorithm is executed: | ||

<ol> | <ol> | ||

| Line 67: | Line 73: | ||

<li> Let <math>J \subseteq \{j^*-4 \leq j \leq j^*\}</math> (subset of possible weeks). | <li> Let <math>J \subseteq \{j^*-4 \leq j \leq j^*\}</math> (subset of possible weeks). | ||

<li> Assemble the Training Set <math>\{X_J, \mathbf{y}_{T,J}\}</math> | <li> Assemble the Training Set <math>\{X_J, \mathbf{y}_{T,J}\}</math> . | ||

<li> Train the Gaussian process | <li> Train the Gaussian process. | ||

<li> Calculate the '''distribution''' of <math>\hat{y}_{T,J}</math> using <math>p(\hat{y}_{T,J} | \mathbf{x}^*, X_J, \mathbf{y}_{T,J}) \sim \mathcal{N}(\mu(\mathbf{x}^*,X,\mathbf{y}_{T,J}),\sigma(\mathbf{x}^*,X_J))</math> | <li> Calculate the '''distribution''' of <math>\hat{y}_{T,J}</math> using <math>p(\hat{y}_{T,J} | \mathbf{x}^*, X_J, \mathbf{y}_{T,J}) \sim \mathcal{N}(\mu(\mathbf{x}^*,X,\mathbf{y}_{T,J}),\sigma(\mathbf{x}^*,X_J))</math>. | ||

<li> Set <math>\hat{y}_{T,J} =\mu(x^*,X_J,\mathbf{y}_{T,J})</math> | <li> Set <math>\hat{y}_{T,J} =\mu(x^*,X_J,\mathbf{y}_{T,J})</math>. | ||

<li> Repeat steps 2-5 for all sets of weeks <math>J</math> | <li> Repeat steps 2-5 for all sets of weeks <math>J</math>. | ||

<li> Determine the best 3 performing sets J (on the 2010/11 and 2011/12 validation sets) | <li> Determine the best 3 performing sets J (on the 2010/11 and 2011/12 validation sets). | ||

<li> Calculate the ensemble forecast by averaging the 3 best performing predictive distribution densities i.e. <math>\hat{y}_T = \frac{1}{3}\sum_{k=1}^3 \hat{y}_{T,J_{best}}</math> | <li> Calculate the ensemble forecast by averaging the 3 best performing predictive distribution densities i.e. <math>\hat{y}_T = \frac{1}{3}\sum_{k=1}^3 \hat{y}_{T,J_{best}}</math>. | ||

</ol> | </ol> | ||

| Line 85: | Line 91: | ||

== Results == | == Results == | ||

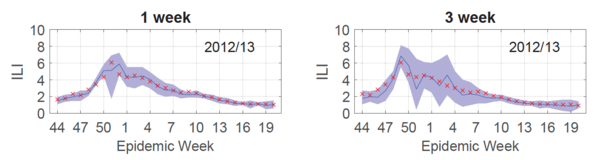

To demonstrate the accuracy of their results, retrospective forecasting was done on the ILI data-set. In other words, the Gaussian process model was trained to assume a previous season (2012/13) was the current season. In this fashion, the forecast could be compared to the already observed true outcome. | To demonstrate the accuracy of their results, retrospective forecasting was done on the ILI data-set. Retrospective forecasting involves using information from a specific time period in the past for example a certain week of the previous year and tries to forecast future values, for example, the next week's cases. Then, the forecasting accuracy can be evaluated on the actual observed value. In other words, the Gaussian process model was trained to assume a previous season (2012/13) was the current season. In this fashion, the forecast could be compared to the already observed true outcome. | ||

To produce a forecast for the entire 2012/13 season, 30 Gaussian processes were trained (each influenza season has 30 test points <math>\mathbf{x^*}</math>) and a curve connecting the predicted outputs <math>y_T = \hat{f}(\mathbf{x^*)}</math> was plotted ('''Fig.2''', blue line). As shown in '''Fig.2''', this forecast (blue line) was reliable for both 1 (left) and 3 (right) week targets, given that the 95% prediction interval ('''Fig.2''', purple shaded) contained the true values ('''Fig.2''', red x's) 95% of the time. | To produce a forecast for the entire 2012/13 season, 30 Gaussian processes were trained (each influenza season has 30 test points <math>\mathbf{x^*}</math>) and a curve connecting the predicted outputs <math>y_T = \hat{f}(\mathbf{x^*)}</math> was plotted ('''Fig.2''', blue line). As shown in '''Fig.2''', this forecast (blue line) was reliable for both 1 (left) and 3 (right) week targets, given that the 95% prediction interval ('''Fig.2''', purple shaded) contained the true values ('''Fig.2''', red x's) 95% of the time. | ||

| Line 91: | Line 97: | ||

<div style="text-align:center;"> [[File:ResultsOne.png|600px]] </div> | <div style="text-align:center;"> [[File:ResultsOne.png|600px]] </div> | ||

<div align="center">'''Figure 2. Retrospective forecasts and their uncertainty''': One week retrospective influenza forecasting for two targets (T = 1, 3). Red x’s are the true observed values, and blue lines and purple shaded areas represent point forecasts and 95% prediction intervals, respectively. </div> | <div align="center">'''Figure 2. Retrospective forecasts and their uncertainty''': | ||

<div align="center">One week retrospective influenza forecasting for two targets (T = 1, 3). Red x’s are the true observed values, and blue lines and purple shaded areas represent point forecasts and 95% prediction intervals, respectively. </div> | |||

</div> | |||

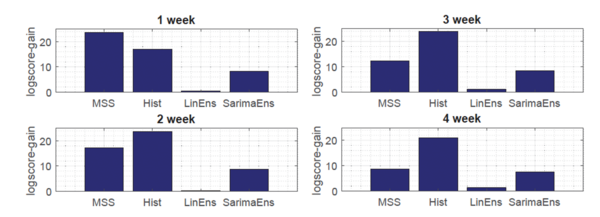

Moreover, as shown in '''Fig.3''', the novel Gaussian process regression framework outperformed all state-of-the-art models, included LinEns, for four different targets <math>(T = 1,\ldots, 4)</math>, when compared using the official CDC scoring criterion ''log-score''. Log-score describes the logarithmic probability of the forecast being within an interval around the true value. | Moreover, as shown in '''Fig.3''', the novel Gaussian process regression framework outperformed all state-of-the-art models, included LinEns, for four different targets <math>(T = 1,\ldots, 4)</math>, when compared using the official CDC scoring criterion ''log-score''. Log-score describes the logarithmic probability of the forecast being within an interval around the true value. | ||

| Line 98: | Line 105: | ||

<div style="text-align:center;"> [[File:ComparisonNew.png|600px]] </div> | <div style="text-align:center;"> [[File:ComparisonNew.png|600px]] </div> | ||

<div align="center">'''Figure 3. Average log-score gain of proposed framework''': Each bar shows the mean seasonal log-score gain of the proposed framework vs. the given state-of-the-art model, and each panel corresponds to a different target week <math> T = 1,...4 </math>. </div> | <div align="center">'''Figure 3. Average log-score gain of proposed framework''': | ||

<div align="center"> Each bar shows the mean seasonal log-score gain of the proposed framework vs. the given state-of-the-art model, and each panel corresponds to a different target week <math> T = 1,...4 </math>. </div> | |||

</div> | |||

== Conclusion == | == Conclusion == | ||

This paper presented a novel framework for forecasting seasonal epidemics using Gaussian process regression that outperformed multiple state-of-the-art forecasting methods on the CDC's ILI data-set. Hence, this work may play a key role in future influenza forecasting and as result, the improvement of public health policies and resource allocation. | This paper presented a novel framework for forecasting seasonal epidemics using Gaussian process regression that outperformed multiple state-of-the-art forecasting methods on the CDC's ILI data-set. We predict seasonal influenza and show that it can produce accurate point forecasts and accurate uncertainty quantification. Since influenza data has only been collected from 2003, therefore as more data is obtained: the training models can be further improved upon with more robust model optimizations. Hence, this work may play a key role in future influenza forecasting and as result, the improvement of public health policies and resource allocation. | ||

== Critique == | == Critique == | ||

The proposed framework provides a computationally efficient method to forecast any seasonal epidemic count data that is easily extendable to multiple target types. In particular | The proposed framework provides a computationally efficient method to forecast any seasonal epidemic count data that is easily extendable to multiple target types. In particular, one can compute key parameters such as the peak infection incidence <math>\left(\hat{y} = \text{max}_{0 \leq j \leq 52} d^i_j \right)</math>, the timing of the peak infection incidence <math>\left(\hat{y} = \text{argmax}_{0 \leq j \leq 52} d^i_j\right)</math> and the final epidemic size of a season <math>\left(\hat{y} = \sum_{j=1}^{52} d^i_j\right)</math>. However, given it is not a physical model, it cannot provide insights on parameters describing the disease spread. Moreover, the framework requires training data and hence, is not applicable for non-seasonal epidemics. | ||

Consider the Gaussian Process Regression, we can apply it on the COVID-19. Hence, we can add the data obtained from COVID-19 to the data-set part. Then apply the algorithm that was outlined from the project. We can predict the high wave period to better protect ourselves. But the thing is how can we obtain the training data from COVID-19 since there will be many missing values since many people may not know whether they have been affected or not. Thus, it will be a big problem to obtain the best training data. | |||

There is one Gaussian property which makes most of its models extremely tractable. A linear combination of two or more Gaussian distributions is still a Gaussian distribution. It's very easy to determine the mean and variance of the newly constructed Gaussian distribution. But there's a constraint of applying this property to several Gaussian distributions. All Gaussian distributions should be linearly independent of others, otherwise, the linear combination distribution might not be Gaussian anymore. | |||

This paper provides a state of the art approach for forecasting epidemics. It would have been interesting to see other types of kernels being used, such as a periodic kernel | This paper provides a state of the art approach for forecasting epidemics. It would have been interesting to see other types of kernels being used, such as a periodic kernel <math>k(x, x') = \sigma^2 \exp\left({-\frac{2 \sin^2 (\pi|x-x'|)/p}{l^2}}\right) </math>, as intuitively epidemics are known to have waves within seasons. This may have resulted in better-calibrated uncertainty estimates as well. | ||

It is mentioned that the the framework might not be good for non-seasonal epidemics because it requires training data, given that the COVID-19 pandemic comes in multiple waves and we have enough data from the first wave and second wave, we might be able to use this framework to predict the third wave and possibly the fourth one as well. It'd be interesting to see this forecasting framework being trained using the data from the first and second wave of COVID-19. | It is mentioned that the the framework might not be good for non-seasonal epidemics because it requires training data, given that the COVID-19 pandemic comes in multiple waves and we have enough data from the first wave and second wave, we might be able to use this framework to predict the third wave and possibly the fourth one as well. It'd be interesting to see this forecasting framework being trained using the data from the first and second wave of COVID-19. | ||

Gaussian Process Regression (GPR) can be extended to COVID-19 outbreak forecast [3]. A Multi-Task Gaussian Process Regression (MTGP) model is a special case of GPR that yields multiple outputs. It was first proposed back in 2008, and its recent application was in the battery capacity prediction by Richardson et. al. The proposed MTGP model was applied on Worldwide, China, India, Italy, and USA data that covers COVID-19 statistics from Dec 31 2019 to June 25 2020. Results show that MTGP outperforms linear regression, support vector regression, random forest regression, and long short-term memory model when it comes to forecasting accuracy. | |||

A critique for GPR is that it does not take into account the physicality of the epidemic process. So it would be interesting to investigate if we can incorporate elements of physical processes in this such as using Ordinary Differential Equations (ODE) based modeling and seeing if that improves prediction accuracy or helps us explain phenomena. | |||

It would be better to have a future work section to discuss the current shortage and explore the possible improvement and applications in the future. | |||

It will be interesting to provide a comparison of methods described in this paper with optimal control theory for modelling infectious disease. | |||

This is a very nice summary and everything was discussed clearly. I think it will be interesting that apply GPR by using the data in COVID-19, maybe this will be helpful to give a sense that how likely that 3rd wave of COVID-19 will happen. Maybe it will be better to discuss more that a training data set from a location will not train a good model for other countries. (p.s. I think <math> \sigma </math> is the standard deviation, sometimes saying variance function with the notation <math> \sigma </math> confused me.). | |||

This is a very interesting paper of using GPR to forecast influenza, and that is definitely something applicable to the situation we have right now. From the benchmarking section, it seems like GPR outperforms LinEns by a narrow margin, so it would be interesting to spend some time discussing the performance difference between GPR and LinEns to see if the benefit outweighs the cost. | |||

== References == | == References == | ||

| Line 118: | Line 143: | ||

[2] Ray, E. L., Sakrejda, K., Lauer, S. A., Johansson, M. A.,and Reich, N. G. (2017).Infectious disease prediction with kernel conditional densityestimation.Statistics in Medicine, 36(30):4908–4929. | [2] Ray, E. L., Sakrejda, K., Lauer, S. A., Johansson, M. A.,and Reich, N. G. (2017).Infectious disease prediction with kernel conditional densityestimation.Statistics in Medicine, 36(30):4908–4929. | ||

[3] Schulz, E., Speekenbrink, M., and Krause, A. (2017).A tutorial on gaussian process regression with a focus onexploration-exploitation scenarios.bioRxiv. | [3] Ketu, S., Mishra, P.K. Enhanced Gaussian process regression-based forecasting model for COVID-19 outbreak and significance of IoT for its detection. Appl Intell (2020). https://doi.org/10.1007/s10489-020-01889-9 | ||

[4] Schulz, E., Speekenbrink, M., and Krause, A. (2017).A tutorial on gaussian process regression with a focus onexploration-exploitation scenarios.bioRxiv. | |||

[5] Zimmer, C., Leuba, S. I., Cohen, T., and Yaesoubi, R.(2019).Accurate quantification of uncertainty in epidemicparameter estimates and predictions using stochasticcompartmental models.Statistical Methods in Medical Research,28(12):3591–3608.PMID: 30428780. | |||

[ | [6] Bussell E. H., Dangerfield C. E., Gilligan C. A. and Cunniffe N. J. 2019Applying optimal control theory to complex epidemiological models to inform real-world disease managementPhil. Trans. R. Soc. B37420180284 http://doi.org/10.1098/rstb.2018.0284 | ||

Latest revision as of 14:13, 7 December 2020

Abstract

This paper presents a novel framework for seasonal epidemic forecasting using Gaussian process regression. Compared with other state-of-the-art models, the new novel framework introduced in this paper shows accurate retrospective forecasts through training a subset of the CDC influenza-like-illness (ILI) dataset using the official CDC scoring rule (log-score).

Background

Each year, the seasonal influenza epidemic affects public health systems across the globe on a massive scale. In 2019-2020 alone, the United States reported 38 million cases, 400 000 hospitalizations, and 22 000 deaths [1]. A direct result of this is the need for reliable forecasts of future influenza development because they allow for improved public health policies and informed resource development and allocation. Many statistical methods have been developed to use data from the CDC and other real-time data sources, such as Google Trends to forecast influenza activities.

Given the process of data collection and surveillance lag, accurate statistics for influenza warning systems are often delayed by some margin of time, making early prediction imperative. However, there are challenges in long-term epidemic forecasting. First, the temporal dependency is hard to capture with short-term input data. Without manually added seasonal trends, most statistical models fail to provide high accuracy. Second, the influence from other locations has not been exhaustively explored with limited data input. Spatio-temporal effects would therefore require adequate data sources to achieve good performance.

Related Work

Given the value of epidemic forecasts, the CDC regularly publishes ILI data and has funded a seasonal ILI forecasting challenge. This challenge has led to four state-of-the-art models in the field: Historical averages, a method using the counts of past seasons to build a kernel density estimate; MSS, a physical susceptible-infected-recovered model with assumed linear noise [5]; SARIMA, a framework based on seasonal auto-regressive moving average models [2]; and LinEns, an ensemble of three linear regression models.

One disadvantage of forecasting is that the publication of CDC official ILI data is usually delayed by 1-3 weeks. Therefore, several attempts to use indirect web-based data to gain more timely estimates of CDC’s ILI, which is also known as nowcasting. The forecasting framework introduced in this paper can use those nowcasting approaches to receive a more timely data stream.

Motivation

It has been shown that LinEns forecasts outperform the other frameworks on the ILI data-set. However, this framework assumes a deterministic relationship between the epidemic week and its case count, which does not reflect the stochastic nature of the trend. Therefore, it is natural to ask whether a similar framework that assumes a stochastic relationship between these variables would provide better performance. This motivated the development of the proposed Gaussian process regression framework and the subsequent performance comparison to the benchmark models.

Gaussian Process Regression

A Gaussian process is specified with a mean function and a kernel function. Besides, the mean and the variance function of the Gaussian process is defined by: \[\mu(x) = k(x, X)^T(K_n+\sigma^2I)^{-1}Y\] \[\sigma(x) = k^{**}(x,x)-k(x,X)^T(K_n+\sigma^2I)^{-1}k(x,X)\] where [math]\displaystyle{ K_n }[/math] is the covariance matrix and for the kernel, it is being specified that it will use the Gaussian kernel.

Consider the following set up: let [math]\displaystyle{ X = [\mathbf{x}_1,\ldots,\mathbf{x}_n] }[/math] [math]\displaystyle{ (d\times n) }[/math] be your training data, [math]\displaystyle{ \mathbf{y} = [y_1,y_2,\ldots,y_n]^T }[/math] be your noisy observations where [math]\displaystyle{ y_i = f(\mathbf{x}_i) + \epsilon_i }[/math], [math]\displaystyle{ (\epsilon_i:i = 1,\ldots,n) }[/math] i.i.d. [math]\displaystyle{ \sim \mathcal{N}(0,{\sigma}^2) }[/math], and [math]\displaystyle{ f }[/math] is the trend we are trying to model (by [math]\displaystyle{ \hat{f} }[/math]). Let [math]\displaystyle{ \mathbf{x}^* }[/math] [math]\displaystyle{ (d\times 1) }[/math] be your test data point, and [math]\displaystyle{ \hat{y} = \hat{f}(\mathbf{x}^*) }[/math] be your predicted outcome.

Instead of assuming a deterministic form of [math]\displaystyle{ f }[/math], and thus of [math]\displaystyle{ \mathbf{y} }[/math] and [math]\displaystyle{ \hat{y} }[/math] (as classical linear regression would, for example), Gaussian process regression assumes [math]\displaystyle{ f }[/math] is stochastic. More precisely, [math]\displaystyle{ \mathbf{y} }[/math] and [math]\displaystyle{ \hat{y} }[/math] are assumed to have a joint prior distribution. Indeed, we have

$$ (\mathbf{y},\hat{y}) \sim \mathcal{N}(0,\Sigma(X,\mathbf{x}^*)) $$

where [math]\displaystyle{ \Sigma(X,\mathbf{x}^*) }[/math] is a matrix of covariances dependent on some kernel function [math]\displaystyle{ k }[/math]. In this paper, the kernel function is assumed to be Gaussian and takes the form

$$ k(\mathbf{x}_i,\mathbf{x}_j) = \sigma^2\exp(-\frac{1}{2}(\mathbf{x}_i-\mathbf{x}_j)^T\Sigma(\mathbf{x}_i-\mathbf{x}_j)). $$

It is important to note that this gives a joint prior distribution of functions (Fig. 1 left, grey curves).

By restricting this distribution to contain only those functions (Fig. 1 right, grey curves) that agree with the observed data points [math]\displaystyle{ \mathbf{x} }[/math] (Fig. 1 right, solid black) we obtain the posterior distribution for [math]\displaystyle{ \hat{y} }[/math] which has the form

$$ p(\hat{y} | \mathbf{x}^*, X, \mathbf{y}) \sim \mathcal{N}(\mu(\mathbf{x}^*,X,\mathbf{y}),\sigma(\mathbf{x}^*,X)) $$

Data-set

Let [math]\displaystyle{ d_j^i }[/math] denote the number of epidemic cases recorded in week [math]\displaystyle{ j }[/math] of season [math]\displaystyle{ i }[/math], and let [math]\displaystyle{ j^* }[/math] and [math]\displaystyle{ i^* }[/math] denote the current week and season, respectively. The ILI data-set contains [math]\displaystyle{ d_j^i }[/math] for all previous weeks and seasons, up to the current season with a 1-3 week publishing delay. Note that a season refers to the time of year when the epidemic is prevalent (e.g. an influenza season lasts 30 weeks and contains the last 10 weeks of year k, and the first 20 weeks of year k+1). The goal is to predict [math]\displaystyle{ \hat{y}_T = \hat{f}_T(x^*) = d^{i^*}_{j* + T} }[/math] where [math]\displaystyle{ T, \;(T = 1,\ldots,K) }[/math] is the target week (how many weeks into the future that you want to predict).

To do this, a design matrix [math]\displaystyle{ X }[/math] is constructed where each element [math]\displaystyle{ X_{ji} = d_j^i }[/math] corresponds to the number of cases in week (row) j of season (column) i. The training outcomes [math]\displaystyle{ y_{i,T}, i = 1,\ldots,n }[/math] correspond to the number of cases that were observed in target week [math]\displaystyle{ T,\; (T = 1,\ldots,K) }[/math] of season [math]\displaystyle{ i, (i = 1,\ldots,n) }[/math].

Proposed Framework

To compute [math]\displaystyle{ \hat{y} }[/math], the following algorithm is executed:

- Let [math]\displaystyle{ J \subseteq \{j^*-4 \leq j \leq j^*\} }[/math] (subset of possible weeks).

- Assemble the Training Set [math]\displaystyle{ \{X_J, \mathbf{y}_{T,J}\} }[/math] .

- Train the Gaussian process.

- Calculate the distribution of [math]\displaystyle{ \hat{y}_{T,J} }[/math] using [math]\displaystyle{ p(\hat{y}_{T,J} | \mathbf{x}^*, X_J, \mathbf{y}_{T,J}) \sim \mathcal{N}(\mu(\mathbf{x}^*,X,\mathbf{y}_{T,J}),\sigma(\mathbf{x}^*,X_J)) }[/math].

- Set [math]\displaystyle{ \hat{y}_{T,J} =\mu(x^*,X_J,\mathbf{y}_{T,J}) }[/math].

- Repeat steps 2-5 for all sets of weeks [math]\displaystyle{ J }[/math].

- Determine the best 3 performing sets J (on the 2010/11 and 2011/12 validation sets).

- Calculate the ensemble forecast by averaging the 3 best performing predictive distribution densities i.e. [math]\displaystyle{ \hat{y}_T = \frac{1}{3}\sum_{k=1}^3 \hat{y}_{T,J_{best}} }[/math].

Results

To demonstrate the accuracy of their results, retrospective forecasting was done on the ILI data-set. Retrospective forecasting involves using information from a specific time period in the past for example a certain week of the previous year and tries to forecast future values, for example, the next week's cases. Then, the forecasting accuracy can be evaluated on the actual observed value. In other words, the Gaussian process model was trained to assume a previous season (2012/13) was the current season. In this fashion, the forecast could be compared to the already observed true outcome.

To produce a forecast for the entire 2012/13 season, 30 Gaussian processes were trained (each influenza season has 30 test points [math]\displaystyle{ \mathbf{x^*} }[/math]) and a curve connecting the predicted outputs [math]\displaystyle{ y_T = \hat{f}(\mathbf{x^*)} }[/math] was plotted (Fig.2, blue line). As shown in Fig.2, this forecast (blue line) was reliable for both 1 (left) and 3 (right) week targets, given that the 95% prediction interval (Fig.2, purple shaded) contained the true values (Fig.2, red x's) 95% of the time.

Moreover, as shown in Fig.3, the novel Gaussian process regression framework outperformed all state-of-the-art models, included LinEns, for four different targets [math]\displaystyle{ (T = 1,\ldots, 4) }[/math], when compared using the official CDC scoring criterion log-score. Log-score describes the logarithmic probability of the forecast being within an interval around the true value.

Conclusion

This paper presented a novel framework for forecasting seasonal epidemics using Gaussian process regression that outperformed multiple state-of-the-art forecasting methods on the CDC's ILI data-set. We predict seasonal influenza and show that it can produce accurate point forecasts and accurate uncertainty quantification. Since influenza data has only been collected from 2003, therefore as more data is obtained: the training models can be further improved upon with more robust model optimizations. Hence, this work may play a key role in future influenza forecasting and as result, the improvement of public health policies and resource allocation.

Critique

The proposed framework provides a computationally efficient method to forecast any seasonal epidemic count data that is easily extendable to multiple target types. In particular, one can compute key parameters such as the peak infection incidence [math]\displaystyle{ \left(\hat{y} = \text{max}_{0 \leq j \leq 52} d^i_j \right) }[/math], the timing of the peak infection incidence [math]\displaystyle{ \left(\hat{y} = \text{argmax}_{0 \leq j \leq 52} d^i_j\right) }[/math] and the final epidemic size of a season [math]\displaystyle{ \left(\hat{y} = \sum_{j=1}^{52} d^i_j\right) }[/math]. However, given it is not a physical model, it cannot provide insights on parameters describing the disease spread. Moreover, the framework requires training data and hence, is not applicable for non-seasonal epidemics.

Consider the Gaussian Process Regression, we can apply it on the COVID-19. Hence, we can add the data obtained from COVID-19 to the data-set part. Then apply the algorithm that was outlined from the project. We can predict the high wave period to better protect ourselves. But the thing is how can we obtain the training data from COVID-19 since there will be many missing values since many people may not know whether they have been affected or not. Thus, it will be a big problem to obtain the best training data.

There is one Gaussian property which makes most of its models extremely tractable. A linear combination of two or more Gaussian distributions is still a Gaussian distribution. It's very easy to determine the mean and variance of the newly constructed Gaussian distribution. But there's a constraint of applying this property to several Gaussian distributions. All Gaussian distributions should be linearly independent of others, otherwise, the linear combination distribution might not be Gaussian anymore.

This paper provides a state of the art approach for forecasting epidemics. It would have been interesting to see other types of kernels being used, such as a periodic kernel [math]\displaystyle{ k(x, x') = \sigma^2 \exp\left({-\frac{2 \sin^2 (\pi|x-x'|)/p}{l^2}}\right) }[/math], as intuitively epidemics are known to have waves within seasons. This may have resulted in better-calibrated uncertainty estimates as well.

It is mentioned that the the framework might not be good for non-seasonal epidemics because it requires training data, given that the COVID-19 pandemic comes in multiple waves and we have enough data from the first wave and second wave, we might be able to use this framework to predict the third wave and possibly the fourth one as well. It'd be interesting to see this forecasting framework being trained using the data from the first and second wave of COVID-19.

Gaussian Process Regression (GPR) can be extended to COVID-19 outbreak forecast [3]. A Multi-Task Gaussian Process Regression (MTGP) model is a special case of GPR that yields multiple outputs. It was first proposed back in 2008, and its recent application was in the battery capacity prediction by Richardson et. al. The proposed MTGP model was applied on Worldwide, China, India, Italy, and USA data that covers COVID-19 statistics from Dec 31 2019 to June 25 2020. Results show that MTGP outperforms linear regression, support vector regression, random forest regression, and long short-term memory model when it comes to forecasting accuracy.

A critique for GPR is that it does not take into account the physicality of the epidemic process. So it would be interesting to investigate if we can incorporate elements of physical processes in this such as using Ordinary Differential Equations (ODE) based modeling and seeing if that improves prediction accuracy or helps us explain phenomena.

It would be better to have a future work section to discuss the current shortage and explore the possible improvement and applications in the future.

It will be interesting to provide a comparison of methods described in this paper with optimal control theory for modelling infectious disease.

This is a very nice summary and everything was discussed clearly. I think it will be interesting that apply GPR by using the data in COVID-19, maybe this will be helpful to give a sense that how likely that 3rd wave of COVID-19 will happen. Maybe it will be better to discuss more that a training data set from a location will not train a good model for other countries. (p.s. I think [math]\displaystyle{ \sigma }[/math] is the standard deviation, sometimes saying variance function with the notation [math]\displaystyle{ \sigma }[/math] confused me.).

This is a very interesting paper of using GPR to forecast influenza, and that is definitely something applicable to the situation we have right now. From the benchmarking section, it seems like GPR outperforms LinEns by a narrow margin, so it would be interesting to spend some time discussing the performance difference between GPR and LinEns to see if the benefit outweighs the cost.

References

[1] Estimated Influenza Illnesses, Medical visits, Hospitalizations, and Deaths in the United States - 2019–2020 Influenza Season. (2020). Retrieved November 16, 2020, from https://www.cdc.gov/flu/about/burden/2019-2020.html

[2] Ray, E. L., Sakrejda, K., Lauer, S. A., Johansson, M. A.,and Reich, N. G. (2017).Infectious disease prediction with kernel conditional densityestimation.Statistics in Medicine, 36(30):4908–4929.

[3] Ketu, S., Mishra, P.K. Enhanced Gaussian process regression-based forecasting model for COVID-19 outbreak and significance of IoT for its detection. Appl Intell (2020). https://doi.org/10.1007/s10489-020-01889-9

[4] Schulz, E., Speekenbrink, M., and Krause, A. (2017).A tutorial on gaussian process regression with a focus onexploration-exploitation scenarios.bioRxiv.

[5] Zimmer, C., Leuba, S. I., Cohen, T., and Yaesoubi, R.(2019).Accurate quantification of uncertainty in epidemicparameter estimates and predictions using stochasticcompartmental models.Statistical Methods in Medical Research,28(12):3591–3608.PMID: 30428780.

[6] Bussell E. H., Dangerfield C. E., Gilligan C. A. and Cunniffe N. J. 2019Applying optimal control theory to complex epidemiological models to inform real-world disease managementPhil. Trans. R. Soc. B37420180284 http://doi.org/10.1098/rstb.2018.0284