The Curious Case of Degeneration

No edit summary |

|||

| (48 intermediate revisions by 11 users not shown) | |||

| Line 2: | Line 2: | ||

Donya Hamzeian | Donya Hamzeian | ||

== Introduction == | == Introduction == | ||

Text generation is the act of automatically generating natural language texts like summarization, neural machine translation, fake news generation and etc. Degeneration happens when the output text is incoherent or produces repetitive results. For example in the figure below, the GPT2 model tries to generate the continuation text given the context. On the left side, the beam-search was used as the decoding strategy which has obviously stuck in a repetitive loop. On the right side, however, you can see how the pure sampling decoding strategy has generated incoherent results. | Text generation is the act of automatically generating natural language texts like summarization, neural machine translation, fake news generation and etc. Degeneration happens when the output text is incoherent or produces repetitive results. This paper exposes the difference between human text and machine text and also highlights the decoding strategies impact machine text. the author For example in the figure below, the GPT2 model tries to generate the continuation text given the context. On the left side, the beam-search was used as the decoding strategy which has obviously stuck in a repetitive loop. On the right side, however, you can see how the pure sampling decoding strategy has generated incoherent results. | ||

[[File: GPT2_example.png |caption=Example text|center |800px|caption position=bottom]] | [[File: GPT2_example.png |caption=Example text|center |800px|caption position=bottom]] | ||

<div align="center">Figure 1: Text generation examples</div> | |||

As a quick recap, the beam search is a best-first search algorithm. At each step, it selects the K most-probable predictions, where K is the beam width parameter set by humans. If K is 1, the beam search algorithm becomes the greedy search algorithm, where only the best prediction is picked. In beam search, the system only explores K paths, which reduces the memory requirements. | As a quick recap, the beam search is a best-first search algorithm. At each step, it selects the K most-probable predictions, where K is the beam width parameter set by humans. If K is 1, the beam search algorithm becomes the greedy search algorithm, where only the best prediction is picked. In beam search, the system only explores K paths, which reduces the memory requirements. | ||

| Line 9: | Line 11: | ||

The authors argue that decoding strategies that are based on maximization like beam search lead to degeneration even with powerful models like GPT-2. Even though there are some utility functions that encourage diversity, they are not enough and the text generated by maximization, beam-search, or top-k sampling is too probable which indicates the lack of diversity (variance) compared to human-generated texts | The authors argue that decoding strategies that are based on maximization like beam search lead to degeneration even with powerful models like GPT-2. Even though there are some utility functions that encourage diversity, they are not enough and the text generated by maximization, beam-search, or top-k sampling is too probable which indicates the lack of diversity (variance) compared to human-generated texts | ||

Others have questioned whether a problem with beam search is that by expanding on only the top k tokens in each step of the generation, in later steps it may miss possible sequence that would have resulted in a more probable overall phrase. The authors argue that this isn't an issue for generating natural language as it has a lower per-token probability on average and people usually optimize against saying the obvious. | |||

The authors blame the long, unreliable tail in the probability distribution of tokens that the model samples from i.e. vocabularies with low probability frequently appear in the output text. So, top-k sampling with high values of k may produce texts closer to human texts, yet they have high variance in likelihood leading to incoherency issues. | The authors blame the long, unreliable tail in the probability distribution of tokens that the model samples from i.e. vocabularies with low probability frequently appear in the output text. So, top-k sampling with high values of k may produce texts closer to human texts, yet they have a high variance in likelihood leading to incoherency issues. | ||

Therefore, instead of fixed k, it is good to dynamically increase or decrease the number of candidate tokens. Nucleus Sampling which is the contribution of this paper does this expansion and contraction of the candidate pool. | Therefore, instead of fixed k, it is good to dynamically increase or decrease the number of candidate tokens. Nucleus Sampling which is the contribution of this paper does this expansion and contraction of the candidate pool. | ||

===The problem with a fixed k=== | |||

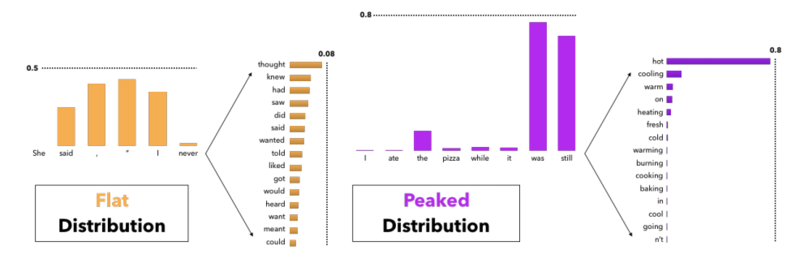

In the figure below, it can be seen why having a fixed k in the top-k sampling decoding strategy can lead to degenerative results, more specifically, incoherent and low diversity texts. For instance, in the left figure, the distribution of the next token is flat i.e. there are many tokens with nearly equal probability to be the next token. In this case, if we choose a small k, like 5, some tokens like "meant" and "want" may not appear in the generated text which makes it less diverse. On the other hand, in the right figure, the distribution of the next token is peaked, i.e there are very few words with very high probability. In this case, if we choose k to be large, like 10, we may end up choosing tokens like "going" and "n't" which makes the generated text incoherent. Therefore, it seems that having a fixed-k may lead to degeneration | |||

[[File: fixed-k.png |caption=Example text|center |800px|caption position=bottom]] | |||

<div align="center">Figure 2: Flat versus peaked distribution of tokens</div> | |||

==Language Model Decoding== | ==Language Model Decoding== | ||

There are two types of generation tasks. | There are two types of generation tasks. | ||

1. Directed generation tasks: In these tasks, there are pairs of (input, output), where the model tries to generate the output text which is tightly scoped by the input text. | 1. Directed generation tasks: In these tasks, there are pairs of (input, output), where the model tries to generate the output text which is tightly scoped by the input text. Due to this constraint, these tasks suffer less from the degeneration. Summarization, neural machine translation, and input-to-text generation are some examples of these tasks. | ||

2. Open-ended generation tasks like conditional story generation or like the tasks in the above figure have high degrees of freedom. As a result, degeneration is more frequent in these tasks and the focus of this paper. | |||

The goal of the open-ended tasks is to generate the next n continuation tokens given a context sequence with m tokens. That is to maximize the following probability. | |||

\begin{align} | |||

P(x_{1:m+n})=\prod_{i=1}^{m+n}P(x_i|x_1 \ldots x_{i-1}) | |||

\end{align} | |||

====Nucleus Sampling==== | ====Nucleus Sampling==== | ||

The authors propose Nucleus Sampling as a stochastic decoding method where the shape of the probability distribution determines the set of vocabulary tokens to be sampled. | |||

In this they first find the smallest vocabulary set <math>V^{(p)}</math> which satisfies <math>\Sigma_{x \in V^{(p)}} P(x|x_{1:i-1}) \ge p</math>. They then normalise the subset <math>V^{(p)}</math> into a probability distribution by dividing its elements by <math>p'=\Sigma_{x \in V^{(p)}} P(x|x_{1:i-1}) \ge p</math>. These normalized probabilities will then be used for the generation of word samples. This entire process can be viewed as a re-scaling to the original probability distribution in to a new distbition <math>P'</math>. Where: | |||

P'(x|x_{1:i-1}) = \begin{cases} | |||

\begin{align} | |||

P'(x|x_{1:i-1}) = \begin{cases}\frac{P(x|x_{1:i-1})}{p'}, & x \in V^{(p)} \\ 0, & otherwise \end{cases} | |||

\end{ | \end{align} | ||

This decoding strategy is beneficial as it can truncate possible long tails of the original probability distribution. Thus is can then help avoid the associated problem of incoherent samples for phrases generated by long-tailed distributions as previously discussed. | |||

====Top-k Sampling==== | ====Top-k Sampling==== | ||

Top-k sampling also relies on truncating the distribution. In this decoding strategy, we need to first find a set of tokens with size k <math>V^{(k)} </math> which maximizes <math>\Sigma_{x \in V^{(k)}} P(x|x_{1:i-1})</math> and set <math>p' = \Sigma_{x \in V^{(k)}} P(x|x_{1:i-1})</math>. Finally, rescale the probability distribution similar to the Nucleus sampling. | Top-k sampling also relies on truncating the distribution. In this decoding strategy, we need to first find a set of tokens with size <math>k</math>, <math>V^{(k)} </math>, which maximizes <math>\Sigma_{x \in V^{(k)}} P(x|x_{1:i-1})</math> and set <math>p' = \Sigma_{x \in V^{(k)}} P(x|x_{1:i-1})</math>. Finally, rescale the probability distribution similar to the Nucleus sampling. | ||

Intuitively, the difference between Top-k sampling and Nucleus sampling is how they set a threshold of truncation - the former one defines a threshold at which the tail of the probability distribution gets truncated, whereas the latter puts a cap on the number of tokens in the vocabulary set. It is noteworthy that thresholding the number of tokens can cause <math>p'</math> to fluctuate greatly at different time steps. | |||

====Sampling with Temperature==== | ====Sampling with Temperature==== | ||

In this method, which was proposed in [1], the probability of tokens are calculated according to the equation below where <math>t \in (0,1)</math> is the temperature and <math>u_{1:|V|} </math> are logits. | |||

<math> | <math> | ||

P(x= V_l|x_{1:i-1}) = \frac{\exp(\frac{u_l}{t})}{\Sigma_{l'}\exp(\frac{u'_l}{t})} | P(x= V_l|x_{1:i-1}) = \frac{\exp(\frac{u_l}{t})}{\Sigma_{l'}\exp(\frac{u'_l}{t})} | ||

</math> | </math> | ||

Recent studies have shown that lowering <math>t</math> improves the quality of the generated texts while it decreases diversity. Note that the temperature <math>t</math> controls how conservative the model is, and this analogy comes from thermodynamics, where lower temperature means lower energy states are unlikely to be encountered. Hence, the lower the temperature, the less likely the model is to sample tokens with lower probability. | |||

==Likelihood Evaluation== | ==Likelihood Evaluation== | ||

| Line 53: | Line 75: | ||

[[File: Perplexity.png |caption=Example text|center |800px|caption position=bottom]] | [[File: Perplexity.png |caption=Example text|center |800px|caption position=bottom]] | ||

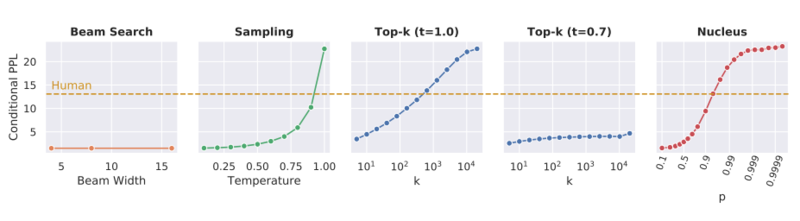

<div align="center">Figure 3: Comparison of perplexity across decoding strategies</div> | |||

====What is Perplexity?==== | |||

Perplexity as previously mentioned is a score that comes from information theory [3]. It is a measure of how well a probabilistic model or distribution predicts a sample. This intuitively leads to it is useful for comparing how competition models explain the same sample or dataset. Perplexity has close ties to information entropy as can be seen in the following discrete formulation of perplexity for a probability distribution. | |||

:<math>PP(p) := 2^{H(p)}=2^{-\sum_x p(x)\log_2 p(x)}</math> | |||

Here <math>H(p)</math> is the entropy in bits and <math>p(x)</math> is the probability of observing <math>x</math> from the distribution. | |||

Perplexity in the context of probability models also has close ties to information entropy. The idea here is a model <math>f(x)</math> is fit to data from an unknown probability distribution <math>p(x)</math>. When the model is given test samples which were not used during its construction; the model will assign these samples some probability <math>f(x_i)</math>. Here <math>x_i</math> comes from a test set where <math>i = 1,...,N</math>. The perplexity will be lowest for a model which has high probabilities for the test samples. This can be seen in the following equation: | |||

:<math>PPL = b^{- \frac{1}{N} \sum_{i=1}^N \log_b q(x_i)}</math> | |||

Here <math>b</math> is the base and can be any number though commonly 2 is used to represent bits. | |||

==Distributional Statistical Evaluation== | ==Distributional Statistical Evaluation== | ||

====Zipf Distribution Analysis==== | ====Zipf Distribution Analysis==== | ||

| Line 59: | Line 96: | ||

[[File: Zipf.png |caption=Example text|center |800px|caption position=bottom]] | [[File: Zipf.png |caption=Example text|center |800px|caption position=bottom]] | ||

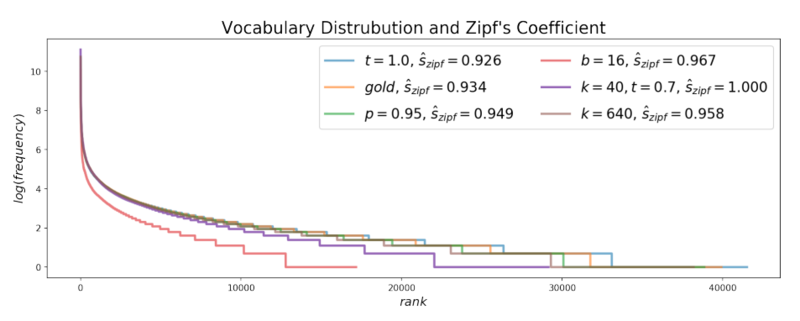

<div align="center">Figure 4: Zipf Distribution Analysis</div> | |||

====Self BLEU==== | ====Self BLEU==== | ||

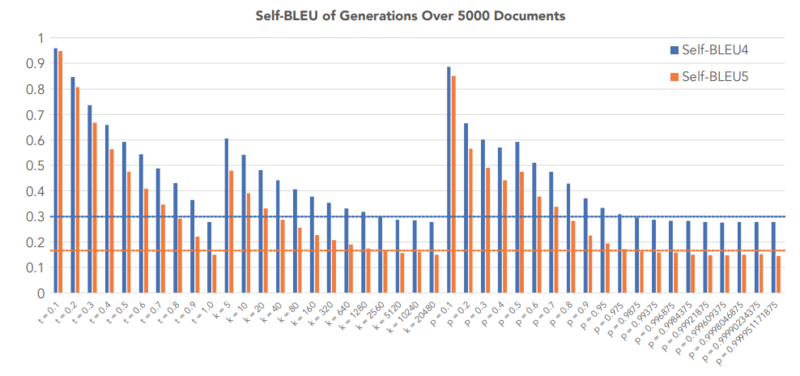

The Self-BLEU score[ | The Self-BLEU score[2] is used to compare the diversity of each decoding strategy and was computed for each generated text using all other generations in the evaluation set as references. In the figure below, the self-BLEU score of three decoding strategies- Top-K sampling, Sampling with Temperature, and Nucleus sampling- were compared against the Self-BLEU of human-generated texts. By looking at the figure below, we see that high values of parameters that generate the self-BLEU close to that of the human texts result in incoherent, low perplexity, in Top-K sampling and Temperature Sampling, while this is not the case for Nucleus sampling. | ||

[[File: BLEU.png |caption=Example text|center |800px|caption position=bottom]] | [[File: BLEU.png |caption=Example text|center |800px|caption position=bottom]] | ||

<div align="center">Figure 5: Comparison of Self-BLEU for decoding strategies</div> | |||

==Conclusion== | ==Conclusion== | ||

In this paper, different decoding strategies were analyzed on open-ended generation tasks. | In this paper, different decoding strategies were analyzed on open-ended generation tasks. Their results show that (1) likelihood maximization decoding causes degeneration, (2) the probability distributions of the best current language models have an unreliable tail which needs to be truncated during generation, and (3) Nucleus Sampling is currently the best available decoding strategy for generating long-form text that is both high-quality — as measured by human evaluation — and as diverse as human-written text. | ||

However, for many practical real-life problems, we need to consider a large value of k in Top-k sampling. Thus, we can say that nucleus sampling offers a subjective advantage over the Top-k sampling method. Also, we can observe a similar issue in nucleus sampling for choosing the value of p as in Top-k sampling. | |||

== Critiques== | |||

The only problem that I can observe from the nucleus sampling that it is only restricted to words from the p-th percentile of the distribution during generation. The probabilities of words for which the cumulative sum exceeds the percentile are rescaled and the sequence is sampled from this subset. I think a probing methodology will make it generate more varied and semantically richer responses. | |||

The comparison of perplexity scores across varying decoding strategies is interesting in the sense that we see that the Top-k method with k = 10^3 results in a score roughly similar to the Nucleus method with p = 0.95. Which method would be more computationally efficient? It seems that finding the set <math> V^{(p)}</math> is more computationally intensive compared to choosing the top-k outputs each time. | |||

== Repository == | |||

The official repository for this paper is available at <span class="plainlinks">[https://github.com/ari-holtzman/degen "official repository"]</span> | |||

== Tutorial on beam search == | |||

1- [https://youtu.be/Er2ucMxjdHE Greedy Search] | |||

2- [https://youtu.be/RLWuzLLSIgw?list=PLCSzVeDv57Z1y0uWZXYX2kq5UUpqA0Mk2 Beam Search] | |||

== References == | == References == | ||

[1]: Yaoming Zhu, Sidi Lu, Lei Zheng, Jiaxian Guo, Weinan Zhang, Jun Wang, and Yong Yu. Texygen: A benchmarking platform for text generation models. SIGIR, 2018 | [1]: David H Ackley, Geoffrey E Hinton, and Terrence J Sejnowski. A learning algorithm for boltzmann machines. Cognitive science, 9(1):147–169, 1985. | ||

[2]: Yaoming Zhu, Sidi Lu, Lei Zheng, Jiaxian Guo, Weinan Zhang, Jun Wang, and Yong Yu. Texygen: A benchmarking platform for text generation models. SIGIR, 2018 | |||

[3]: Perplexity: https://en.wikipedia.org/wiki/Perplexity | |||

Latest revision as of 05:41, 10 December 2020

Presented by

Donya Hamzeian

Introduction

Text generation is the task of automatically producing natural language text, for example in summarization, neural machine translation, and fake news generation. Degeneration happens when the output text is incoherent or gets stuck in repetitive loops. This paper exposes the differences between human text and machine text and highlights how the choice of decoding strategy affects machine text. For example, in the figure below, the GPT-2 model tries to generate a continuation of the given context. On the left side, beam search was used as the decoding strategy, and it has obviously become stuck in a repetitive loop. On the right side, however, pure sampling has generated incoherent results.

Figure 1: Text generation examples

As a quick recap, beam search is a best-first search algorithm. At each step, it keeps the K most probable partial sequences, where K is the beam width, a parameter set by the user. If K is 1, beam search reduces to greedy search, where only the single best prediction is kept. Because the system only explores K paths, its memory requirements are reduced.
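The recap above can be illustrated with a minimal sketch. The callback `next_log_probs` and the toy bigram table are assumptions made up for this example, not part of the paper or of any library. The sketch shows that a beam of width 1 behaves greedily and can miss a sequence that a wider beam finds.

```python
import math

def beam_search(next_log_probs, start, steps, beam_width):
    """Keep the `beam_width` highest-scoring partial sequences at each step.

    `next_log_probs(seq)` is a hypothetical callback that returns a dict
    {token: log-probability} for the token following `seq`.
    """
    beams = [(0.0, [start])]  # (cumulative log-probability, sequence)
    for _ in range(steps):
        candidates = []
        for score, seq in beams:
            for token, logp in next_log_probs(seq).items():
                candidates.append((score + logp, seq + [token]))
        # Prune: only the K best partial sequences survive this step.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]
    return beams

def toy_model(seq):
    # Toy bigram probabilities, invented for illustration only.
    table = {
        "a": {"a": 0.55, "b": 0.45},
        "b": {"a": 0.05, "b": 0.95},
    }
    return {tok: math.log(p) for tok, p in table[seq[-1]].items()}

greedy = beam_search(toy_model, "a", steps=2, beam_width=1)
wide = beam_search(toy_model, "a", steps=2, beam_width=2)
print(greedy[0][1], math.exp(greedy[0][0]))
print(wide[0][1], math.exp(wide[0][0]))
```

With this toy table, the greedy run commits to the locally best token first, while the width-2 beam keeps the second candidate alive long enough to find a more probable full sequence.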

The authors argue that decoding strategies based on maximization, like beam search, lead to degeneration even with powerful models like GPT-2. Even though there are some utility functions that encourage diversity, they are not enough, and the text generated by maximization, beam search, or top-k sampling is too probable, which indicates a lack of diversity (variance) compared to human-generated texts.

Others have questioned whether the problem with beam search is that, by expanding only the top k tokens at each step of the generation, it may miss a sequence that would have resulted in a more probable overall phrase. The authors argue that this is not the issue for natural language generation, since human text has a lower per-token probability on average and people usually optimize against saying the obvious.

The authors blame the long, unreliable tail of the probability distribution that the model samples from, i.e. tokens with low probability appear too frequently in the output text. So, top-k sampling with high values of k may produce texts closer to human texts, yet these texts have a high variance in likelihood, which leads to incoherency issues. Therefore, instead of using a fixed k, it is better to dynamically increase or decrease the number of candidate tokens. Nucleus Sampling, which is the contribution of this paper, performs this expansion and contraction of the candidate pool.

The problem with a fixed k

The figure below shows why a fixed k in the top-k sampling decoding strategy can lead to degenerate results, more specifically to incoherent or low-diversity texts. In the left panel, the distribution of the next token is flat, i.e. there are many tokens with nearly equal probability of being the next token. In this case, if we choose a small k, like 5, some tokens like "meant" and "want" may never appear in the generated text, which makes it less diverse. In the right panel, on the other hand, the distribution of the next token is peaked, i.e. there are very few words with very high probability. In this case, if we choose a large k, like 10, we may end up choosing tokens like "going" and "n't", which makes the generated text incoherent. Therefore, a fixed k may lead to degeneration.

Figure 2: Flat versus peaked distribution of tokens
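To make the flat-versus-peaked argument concrete, here is a small sketch that measures how much probability mass the same k captures under two different distributions. The two distributions and the helper name `top_k_mass` are invented for illustration and are not taken from the paper's figure.

```python
def top_k_mass(probs, k):
    """Total probability of the k most likely tokens."""
    return sum(sorted(probs, reverse=True)[:k])

# Made-up distributions over a 20-token vocabulary (not from the paper).
flat = [0.05] * 20
peaked = [0.90, 0.05] + [0.05 / 18] * 18

flat_mass = top_k_mass(flat, 5)      # only a quarter of the mass is kept
peaked_mass = top_k_mass(peaked, 5)  # almost all of the mass is kept
print(flat_mass, peaked_mass)
```

The same k = 5 discards most of the plausible candidates in the flat case, while in the peaked case it already reaches into the low-probability tail.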

Language Model Decoding

There are two types of generation tasks.

1. Directed generation tasks: In these tasks, there are pairs of (input, output), where the model tries to generate the output text, which is tightly scoped by the input text. Due to this constraint, these tasks suffer less from degeneration. Summarization, neural machine translation, and input-to-text generation are some examples of these tasks.

2. Open-ended generation tasks, such as conditional story generation or the task in the figure above, have high degrees of freedom. As a result, degeneration is more frequent in these tasks, which are the focus of this paper.

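The goal of the open-ended tasks is to generate the next n continuation tokens given a context sequence of m tokens. The model assigns a probability to the completed sequence using the left-to-right factorization below, and tokens are generated one at a time from these conditional distributions according to a decoding strategy.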

\begin{align} P(x_{1:m+n})=\prod_{i=1}^{m+n}P(x_i|x_1 \ldots x_{i-1}) \end{align}
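As a small worked example of this factorization, the sketch below multiplies per-token conditional probabilities in log space. The probability values and the helper name `sequence_log_prob` are hypothetical.

```python
import math

def sequence_log_prob(conditional_probs):
    """Sum of log P(x_i | x_1..x_{i-1}), which equals log P(x_{1:m+n})."""
    return sum(math.log(p) for p in conditional_probs)

# Hypothetical per-token conditional probabilities for a 4-token sequence.
conditionals = [0.5, 0.25, 0.8, 0.1]
log_p = sequence_log_prob(conditionals)
p = math.exp(log_p)
print(p)  # the product of the four conditionals
```

Working in log space avoids numerical underflow when the sequence is long, which is why decoding code usually accumulates log-probabilities rather than raw products.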

Nucleus Sampling

The authors propose Nucleus Sampling as a stochastic decoding method in which the shape of the probability distribution determines the set of vocabulary tokens to be sampled from. They first find the smallest vocabulary set [math]\displaystyle{ V^{(p)} }[/math] which satisfies [math]\displaystyle{ \Sigma_{x \in V^{(p)}} P(x|x_{1:i-1}) \ge p }[/math]. They then normalise the subset [math]\displaystyle{ V^{(p)} }[/math] into a probability distribution by dividing the probabilities of its elements by [math]\displaystyle{ p'=\Sigma_{x \in V^{(p)}} P(x|x_{1:i-1}) }[/math]. These normalized probabilities are then used to sample the next word. The entire process can be viewed as a re-scaling of the original probability distribution into a new distribution [math]\displaystyle{ P' }[/math], where:

\begin{align} P'(x|x_{1:i-1}) = \begin{cases}\frac{P(x|x_{1:i-1})}{p'}, & x \in V^{(p)} \\ 0, & \text{otherwise} \end{cases} \end{align}

This decoding strategy is beneficial because it truncates the possibly long tail of the original probability distribution. It can thus help avoid the associated problem of incoherent samples generated from long-tailed distributions, as previously discussed.
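The procedure described above can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: the function names `nucleus_filter` and `sample` and the four-token distribution are made up for the example.

```python
import random

def nucleus_filter(probs, p):
    """Return {token: rescaled probability} for the smallest set of
    top-ranked tokens whose cumulative probability is at least p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    nucleus, mass = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        mass += prob
        if mass >= p:
            break
    # Divide by p' (the mass actually kept) so the result sums to 1.
    return {token: prob / mass for token, prob in nucleus}

def sample(dist, rng=random):
    """Draw one token from a {token: probability} distribution."""
    tokens = list(dist)
    weights = [dist[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Made-up next-token distribution.
probs = {"the": 0.5, "a": 0.3, "cat": 0.15, "zebra": 0.05}
filtered = nucleus_filter(probs, p=0.9)
token = sample(filtered, random.Random(0))
print(filtered, token)
```

With p = 0.9 the three most probable tokens form the nucleus and the tail token is never sampled. The size of the nucleus changes from step to step depending on how peaked the distribution is.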

Top-k Sampling

Top-k sampling also relies on truncating the distribution. In this decoding strategy, we first find the set of tokens of size [math]\displaystyle{ k }[/math], [math]\displaystyle{ V^{(k)} }[/math], which maximizes [math]\displaystyle{ \Sigma_{x \in V^{(k)}} P(x|x_{1:i-1}) }[/math], and set [math]\displaystyle{ p' = \Sigma_{x \in V^{(k)}} P(x|x_{1:i-1}) }[/math]. Finally, the probability distribution is rescaled in the same way as in Nucleus Sampling.

Intuitively, the difference between Top-k sampling and Nucleus Sampling is how they set the truncation threshold: the former puts a cap on the number of tokens in the vocabulary set, whereas the latter defines a threshold on the cumulative probability mass at which the tail of the distribution gets truncated. It is noteworthy that thresholding the number of tokens can cause [math]\displaystyle{ p' }[/math] to fluctuate greatly across time steps.
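A minimal sketch of top-k truncation shows how the retained mass p' can swing between steps when the number of tokens is capped at k. The helper `top_k_filter` and both distributions are assumptions invented for this example.

```python
import heapq

def top_k_filter(probs, k):
    """Keep the k most probable tokens and rescale them to sum to 1.

    Returns the rescaled distribution and p', the mass that was kept.
    """
    top = heapq.nlargest(k, probs.items(), key=lambda kv: kv[1])
    kept_mass = sum(prob for _, prob in top)
    rescaled = {token: prob / kept_mass for token, prob in top}
    return rescaled, kept_mass

# Two made-up next-token distributions over a 10-token vocabulary.
flat = {f"w{i}": 0.1 for i in range(10)}
peaked = {"w0": 0.91, **{f"w{i}": 0.01 for i in range(1, 10)}}

_, flat_mass = top_k_filter(flat, k=3)
_, peaked_mass = top_k_filter(peaked, k=3)
print(flat_mass, peaked_mass)
```

With the same k = 3, the kept mass is about 0.3 for the flat distribution and about 0.93 for the peaked one, whereas a cumulative-probability threshold would hold the kept mass roughly constant and let the number of tokens vary instead.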

Sampling with Temperature

In this method, which was proposed in [1], the probabilities of tokens are calculated according to the equation below, where [math]\displaystyle{ t \in (0,1) }[/math] is the temperature and [math]\displaystyle{ u_{1:|V|} }[/math] are the logits.

[math]\displaystyle{ P(x= V_l|x_{1:i-1}) = \frac{\exp(\frac{u_l}{t})}{\Sigma_{l'}\exp(\frac{u_{l'}}{t})} }[/math]

Recent studies have shown that lowering [math]\displaystyle{ t }[/math] improves the quality of the generated text while decreasing its diversity. The temperature [math]\displaystyle{ t }[/math] controls how conservative the model is. The analogy comes from thermodynamics, where at a lower temperature high-energy states are less likely to be encountered. Hence, the lower the temperature, the less likely the model is to sample tokens with lower probability.
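The effect of the temperature can be checked numerically with a short sketch. The logits are made up, and subtracting the maximum before exponentiating is a standard numerical-stability step that does not change the result.

```python
import math

def softmax_with_temperature(logits, t):
    """Softmax over logits divided by the temperature t (t > 0)."""
    scaled = [u / t for u in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.0]  # hypothetical logits for three tokens
base = softmax_with_temperature(logits, 1.0)
sharp = softmax_with_temperature(logits, 0.5)
print(base)
print(sharp)
```

Lowering the temperature from 1.0 to 0.5 moves probability mass toward the highest-logit token and away from the lowest one, which is the conservative behaviour described above.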

Likelihood Evaluation

To see the results of the nucleus decoding strategy, they used GPT2-large that was trained on WebText to generate 5000 text documents conditioned on initial paragraphs with 1-40 tokens.

Perplexity

This score was used to compare the coherence of different decoding strategies. As the graphs below show, Sampling, Top-k sampling, and Nucleus Sampling can each be tuned to achieve a perplexity close to that of human-generated text; however, with the parameters that are best according to perplexity, the first two strategies generate low-diversity texts.

Figure 3: Comparison of perplexity across decoding strategies

What is Perplexity?

Perplexity, as previously mentioned, is a score that comes from information theory [3]. It measures how well a probabilistic model or distribution predicts a sample, which makes it useful for comparing how competing models explain the same sample or dataset. Perplexity has close ties to information entropy, as can be seen in the following discrete formulation of perplexity for a probability distribution.

- [math]\displaystyle{ PP(p) := 2^{H(p)}=2^{-\sum_x p(x)\log_2 p(x)} }[/math]

Here [math]\displaystyle{ H(p) }[/math] is the entropy in bits and [math]\displaystyle{ p(x) }[/math] is the probability of observing [math]\displaystyle{ x }[/math] from the distribution.

Perplexity in the context of probability models also has close ties to information entropy. The idea is that a model [math]\displaystyle{ q(x) }[/math] is fit to data from an unknown probability distribution [math]\displaystyle{ p(x) }[/math]. When the model is given test samples that were not used during its construction, it assigns each sample some probability [math]\displaystyle{ q(x_i) }[/math], where [math]\displaystyle{ x_i }[/math] comes from a test set with [math]\displaystyle{ i = 1,...,N }[/math]. The perplexity is lowest for a model that assigns high probabilities to the test samples. This can be seen in the following equation:

- [math]\displaystyle{ PPL = b^{- \frac{1}{N} \sum_{i=1}^N \log_b q(x_i)} }[/math]

Here [math]\displaystyle{ b }[/math] is the base and can be any number though commonly 2 is used to represent bits.
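The model perplexity formula above translates directly into code. In this sketch the probabilities assigned to the test samples are invented, and `perplexity` is a hypothetical helper name.

```python
import math

def perplexity(probs, base=2.0):
    """b ** (-(1/N) * sum(log_b q(x_i))) for model probabilities q(x_i)."""
    n = len(probs)
    avg_log = sum(math.log(q, base) for q in probs) / n
    return base ** (-avg_log)

# A model that assigns 1/4 to every test sample is as uncertain as a
# uniform choice among four outcomes.
uniform = perplexity([0.25] * 4)

# Made-up probabilities from a confident and an unconfident model.
confident = perplexity([0.9, 0.8, 0.95])
unsure = perplexity([0.2, 0.1, 0.3])
print(uniform, confident, unsure)
```

The result does not depend on the base b, since changing the base rescales the logarithm and the exponentiation in opposite directions.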

Distributional Statistical Evaluation

Zipf Distribution Analysis

Zipf's law says that the frequency of any word is inversely proportional to its rank in the frequency table, so it suggests a power-law relationship between the rank of each word and its frequency in the text. The graph below shows that the Zipf distribution of the texts generated with Nucleus Sampling is very close to that of the human-generated (gold) texts, while the distribution for beam search is very different from both.

Figure 4: Zipf Distribution Analysis
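A rank-frequency table of the kind used in a Zipf analysis can be built with a counter. The sample sentence and the helper name `rank_frequency` are made up for illustration; a real analysis would use a large corpus.

```python
from collections import Counter

def rank_frequency(tokens):
    """Return (rank, frequency) pairs, most frequent token first."""
    counts = Counter(tokens)
    return [(rank, freq)
            for rank, (_, freq) in enumerate(counts.most_common(), start=1)]

# Tiny made-up sample.
text = "the cat sat on the mat and the dog sat".split()
table = rank_frequency(text)
print(table)
```

Under Zipf's law, the product of rank and frequency stays roughly constant, so plotting these pairs on log-log axes gives an approximately straight line.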

Self BLEU

The Self-BLEU score [2] is used to compare the diversity of each decoding strategy. It was computed for each generated text using all other generations in the evaluation set as references, so a lower Self-BLEU indicates higher diversity. In the figure below, the Self-BLEU scores of three decoding strategies (Top-k sampling, Sampling with Temperature, and Nucleus Sampling) are compared against the Self-BLEU of human-generated texts. The figure shows that, for Top-k sampling and Temperature Sampling, the high parameter values needed to bring Self-BLEU close to that of human texts result in incoherent text, while this is not the case for Nucleus Sampling.

Figure 5: Comparison of Self-BLEU for decoding strategies
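The idea behind Self-BLEU can be sketched with a deliberately simplified BLEU. This is an assumption-laden illustration, not the metric used in the paper or in Texygen: it uses only clipped n-gram precisions up to bigrams, with no brevity penalty and no smoothing, and the documents are invented.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def simple_bleu(hypothesis, references, max_n=2):
    """Geometric mean of clipped n-gram precisions (simplified BLEU)."""
    log_sum = 0.0
    for n in range(1, max_n + 1):
        hyp = ngrams(hypothesis, n)
        if not hyp:
            return 0.0
        # For each n-gram, the largest count seen in any single reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in hyp.items())
        if clipped == 0:
            return 0.0
        log_sum += math.log(clipped / sum(hyp.values()))
    return math.exp(log_sum / max_n)

def self_bleu(documents, max_n=2):
    """Average BLEU of each document against all the other documents."""
    scores = []
    for i, doc in enumerate(documents):
        others = documents[:i] + documents[i + 1:]
        scores.append(simple_bleu(doc, others, max_n))
    return sum(scores) / len(scores)

repetitive = [["the", "cat", "sat"]] * 3
diverse = [["the", "cat", "sat"], ["a", "dog", "ran"], ["birds", "fly", "south"]]
print(self_bleu(repetitive), self_bleu(diverse))
```

A set of identical generations scores the maximum, while generations that share no n-grams score zero, which matches the reading that high Self-BLEU means low diversity.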

Conclusion

In this paper, different decoding strategies were analyzed on open-ended generation tasks. The results show that (1) likelihood maximization decoding causes degeneration, (2) the probability distributions of the best current language models have an unreliable tail which needs to be truncated during generation, and (3) Nucleus Sampling is currently the best available decoding strategy for generating long-form text that is both high-quality, as measured by human evaluation, and as diverse as human-written text. However, for many practical real-life problems, we need to consider a large value of k in Top-k sampling. Thus, we can say that Nucleus Sampling offers a subjective advantage over the Top-k sampling method. We can also observe that choosing the value of p in Nucleus Sampling raises an issue similar to choosing k in Top-k sampling.

Critiques

The only problem that I can observe with Nucleus Sampling is that it is restricted to words from the p-th percentile of the distribution during generation. The probabilities of the words whose cumulative sum reaches the threshold are rescaled, and the sequence is sampled from this subset. I think a probing methodology would make it generate more varied and semantically richer responses.

The comparison of perplexity scores across varying decoding strategies is interesting in the sense that we see that the Top-k method with k = 10^3 results in a score roughly similar to the Nucleus method with p = 0.95. Which method would be more computationally efficient? It seems that finding the set [math]\displaystyle{ V^{(p)} }[/math] is more computationally intensive compared to choosing the top-k outputs each time.

Repository

The official repository for this paper is available at https://github.com/ari-holtzman/degen

Tutorial on beam search

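1- Greedy Search: https://youtu.be/Er2ucMxjdHE

2- Beam Search: https://youtu.be/RLWuzLLSIgw?list=PLCSzVeDv57Z1y0uWZXYX2kq5UUpqA0Mk2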

References

[1]: David H Ackley, Geoffrey E Hinton, and Terrence J Sejnowski. A learning algorithm for boltzmann machines. Cognitive science, 9(1):147–169, 1985.

[2]: Yaoming Zhu, Sidi Lu, Lei Zheng, Jiaxian Guo, Weinan Zhang, Jun Wang, and Yong Yu. Texygen: A benchmarking platform for text generation models. SIGIR, 2018

[3]: Perplexity: https://en.wikipedia.org/wiki/Perplexity