CapsuleNets: Difference between revisions



The paper "Dynamic Routing Between Capsules" was written by three researchers at Google Brain: Sara Sabour, Nicholas Frosst, and Geoffrey E. Hinton. It was presented at the 31st Conference on Neural Information Processing Systems (NIPS 2017) in Long Beach, California. The same three researchers subsequently published a closely related paper, "[https://openreview.net/pdf?id=HJWLfGWRb Matrix Capsules with EM Routing]", at ICLR 2018. A TensorFlow implementation can be found at [https://github.com/naturomics/CapsNet-Tensorflow].

=Motivation= | =Motivation= | ||

Ever since AlexNet eclipsed the performance of competing architectures in the 2012 ImageNet challenge, convolutional neural networks have maintained their dominance in computer vision applications. Despite the recent successes and innovations brought about by convolutional neural networks, some assumptions made in these networks are perhaps unwarranted and deficient. Using a novel neural network architecture, the authors create CapsuleNets, a network that they claim is able to learn image representations in a more robust, human-like manner. With only a 3 layer capsule network, they achieved near state-of-the-art results on MNIST. | Ever since AlexNet eclipsed the performance of competing architectures in the 2012 ImageNet challenge, convolutional neural networks have maintained their dominance in computer vision applications. Despite the recent successes and innovations brought about by convolutional neural networks, some assumptions made in these networks are perhaps unwarranted and deficient. Using a novel neural network architecture, the authors create CapsuleNets, a network that they claim is able to learn image representations in a more robust, human-like manner. With only a 3 layer capsule network, they achieved near state-of-the-art results on MNIST. | ||

The activities of the neurons within an active capsule represent the various properties of a particular entity that is present in the image. These properties can include many different types of instantiation parameter such as pose (position, size, orientation), deformation, velocity, albedo, hue, texture, etc. One very special property is the existence of the instantiated entity in the image. An obvious way to represent existence is by using a separate logistic unit whose output is the probability that the entity exists. This paper explores an interesting alternative which is to use the overall length of the vector of instantiation parameters to represent the existence of the entity and to force the orientation of the vector to represent the properties of the entity. The length of the vector output of a capsule cannot exceed 1 because of an application of a non-linearity that leaves the orientation of the vector unchanged but scales down its magnitude. | |||

The fact that the output of a capsule is a vector makes it possible to use a powerful dynamic routing mechanism to ensure that the output of the capsule gets sent to an appropriate parent in the layer above. Initially, the output is routed to all possible parents but is scaled down by coupling coefficients that sum to 1. For each possible parent, the capsule computes a “prediction vector” by multiplying its own output by a weight matrix. If this prediction vector has a large scalar product with the output of a possible parent, there is top-down feedback which increases the coupling coefficient for that parent and decreases it for other parents. This increases the contribution that the capsule makes to that parent, thus further increasing the scalar product of the capsule’s prediction with the parent’s output. This type of “routing-by-agreement” should be far more effective than the very primitive form of routing implemented by max-pooling, which allows neurons in one layer to ignore all but the most active feature detector in a local pool in the layer below. The authors demonstrate that their dynamic routing mechanism is an effective way to implement the “explaining away” that is needed for segmenting highly overlapping objects.

==Adversarial Examples== | ==Adversarial Examples== | ||

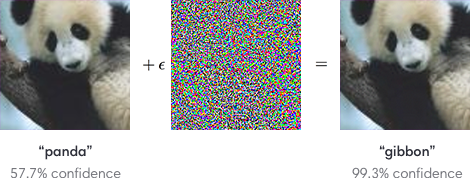

First discussed by Christian Szegedy et al. in late 2013, adversarial examples have been heavily discussed by the deep learning community as a potential security threat to machine learning systems. Adversarial examples are inputs that an attacker intentionally crafts to fool a machine learning model. An example is shown below:

[[File:adversarial_img_1.png |center]] | [[File:adversarial_img_1.png |center]] | ||


To human eyes, the image appears to be a panda both before and after noise is injected into the image, whereas the trained ConvNet model discerns the noisy image as a Gibbon with almost 100% certainty. The fact that the network is unable to classify the above image as a panda after the epsilon perturbation leads to many potential security risks in AI dependent systems such as self-driving vehicles. Although various methods have been suggested to combat adversarial examples, robust defenses are hard to construct due to the inherent difficulties in constructing theoretical models for the adversarial example crafting process. However, beyond the fact that these examples may serve as a security threat, it emphasizes that these convolutional neural networks do not learn image classification/object detection patterns the same way that a human would. Rather than identifying the core features of a panda such as its eyes, mouth, nose, and the gradient changes in its black/white fur, the convolutional neural network seems to be learning image representations in a completely different manner. Deep learning researchers often attempt to model neural networks after human learning, and it is clear that further steps must be taken to robustify ConvNets against targeted noise perturbations. | |||

==Drawbacks of CNNs== | ==Drawbacks of CNNs== | ||

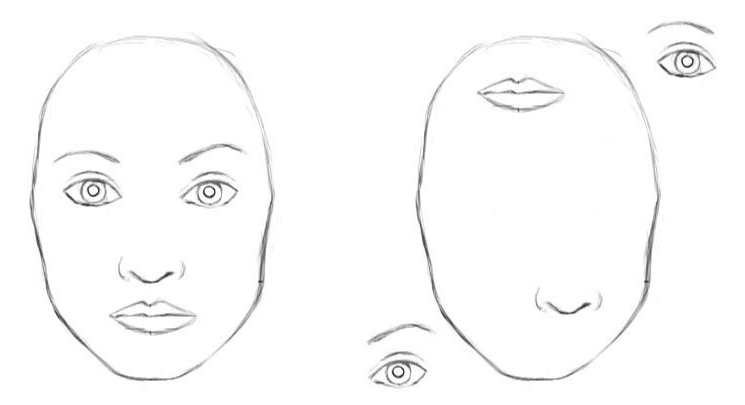

Hinton claims that the key fault with traditional CNNs lies within the pooling function. Although pooling builds translational invariance into the network, it fails to preserve spatial relationships between objects. When we pool, we effectively reduce a <math>k \times k</math> window of convolved cells into a single scalar. This yields the desired local invariance without inhibiting the network's ability to detect features, but it causes valuable spatial information to be lost.
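The loss of spatial information under pooling can be illustrated with a small sketch. The 2x2 non-overlapping max-pooling function and the two toy feature maps below are illustrative assumptions, not taken from the paper; they only show that two different spatial arrangements can pool to the same output.

```python
def max_pool_2x2(grid):
    """Non-overlapping 2x2 max pooling over a list-of-lists grid with even side lengths."""
    pooled = []
    for r in range(0, len(grid), 2):
        row = []
        for c in range(0, len(grid[0]), 2):
            # Keep only the largest activation in each 2x2 window.
            row.append(max(grid[r][c], grid[r][c + 1],
                           grid[r + 1][c], grid[r + 1][c + 1]))
        pooled.append(row)
    return pooled


# Two toy feature maps with the same activations at different positions.
map_a = [[9, 0, 0, 0],
         [0, 0, 0, 5],
         [0, 0, 0, 0],
         [0, 3, 0, 0]]
map_b = [[0, 0, 5, 0],
         [0, 9, 0, 0],
         [3, 0, 0, 0],
         [0, 0, 0, 0]]

pooled_a = max_pool_2x2(map_a)
pooled_b = max_pool_2x2(map_b)
print(pooled_a == pooled_b)  # the two inputs become indistinguishable
```

After pooling, the exact position of each feature inside its window can no longer be recovered, which is the information capsules try to retain.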

Also, in CNNs, higher-level features combine lower-level features as a weighted sum: activations of a previous layer multiplied by the current layer's weight, then passed to another activation function. In this process, pose relationship between simpler features is not part of the higher-level feature. | |||

In the example below, the network is able to detect similar features (eyes, mouth, nose, etc.) within both images, but fails to recognize that one image is a human face while the other is a Picasso-esque rearrangement, because the CNN cannot encode spatial relationships after multiple pooling layers.

In deep learning, the activation level of a neuron is often interpreted as the likelihood of detecting a specific feature. CNNs are good at detecting features but less effective at exploring the spatial relationships among features (perspective, size, orientation). | |||

[[File:Equivariance Face.png |center]] | |||

Here, the CNN could wrongly activate the neuron for the face detection. Without realizing the mismatch in spatial orientation and size, the activation for the face detection will be too high. | |||

Conversely, we hope that a CNN can recognize that both of the following pictures contain a kitten. Unfortunately, when we feed the two images into a ResNet50 architecture, only the first image is correctly classified, while the second image is predicted to be a guinea pig. | Conversely, we hope that a CNN can recognize that both of the following pictures contain a kitten. Unfortunately, when we feed the two images into a ResNet50 architecture, only the first image is correctly classified, while the second image is predicted to be a guinea pig. | ||


[[File:kitten-rotated-180.jpg |center]] | [[File:kitten-rotated-180.jpg |center]] | ||

For a more in-depth discussion of the problems with ConvNets, please listen to Geoffrey Hinton's talk "What is wrong with convolutional neural nets?" given at MIT during the Brain & Cognitive Sciences - Fall Colloquium Series (December 4, 2014).

==Intuition for Capsules== | ==Intuition for Capsules== | ||

Human vision ignores irrelevant details by using a carefully determined sequence of fixation points to ensure that only a tiny fraction of the optic array is ever processed at the highest resolution. Hinton argues that our brains reason visual information by deconstructing it into a hierarchical representation which we then match to familiar patterns and relationships from memory. The key difference between this understanding and the functionality of CNNs is that recognition of an object should not depend on the angle from which it is viewed. | Human vision ignores irrelevant details by using a carefully determined sequence of fixation points to ensure that only a tiny fraction of the optic array is ever processed at the highest resolution. Hinton argues that our brains reason visual information by deconstructing it into a hierarchical representation which we then match to familiar patterns and relationships from memory. The key difference between this understanding and the functionality of CNNs is that recognition of an object should not depend on the angle from which it is viewed. | ||

To enforce rotational and translational equivariance, Capsule Networks store and preserve hierarchical pose relationships between objects. The core idea behind capsule theory is the explicit numerical representations of relative relationships between different objects within an image. Building these relationships into the Capsule Networks model, the network is able to recognize newly seen objects as a rotated view of a previously seen object. For example, the below image shows the Statue of Liberty under five different angles. If a person had only seen the Statue of Liberty from one angle, they would be able to ascertain that all five pictures below contain the same object (just from a different angle). | To enforce rotational and translational equivariance, Capsule Networks store and preserve hierarchical pose relationships between objects. The core idea behind capsule theory is the explicit numerical representations of relative relationships between different objects within an image. Building these relationships into the Capsule Networks model, the network is able to recognize newly seen objects as a rotated view of a previously seen object. For example, the below image shows the Statue of Liberty under five different angles. If a person had only seen the Statue of Liberty from one angle, they would be able to ascertain that all five pictures below contain the same object (just from a different angle). However, the underlying concept of rotation invariant recognition using only one point of view might be, in fact, contrary to what most humans actually do. In reality, even though the human observer is able to correctly tag all the five images as representations of the same object, that human observer has accumulated a vast number of learning experiences in terms of discerning colors, shades, shapes, among other factors that most likely improve his or her conclusion and that are quite difficult to embed in an image classification algorithm. | ||

[[File:Rotational Invariance.jpeg |center]] | [[File:Rotational Invariance.jpeg |center]] | ||

Building on this idea of hierarchical representation of spatial relationships between key entities within an image, the authors introduce Capsule Networks. Unlike traditional CNNs, Capsule Networks are better equipped to classify correctly when objects are rotated. Furthermore, the authors managed to achieve state-of-the-art results on MNIST using a fraction of the training samples that alternative state-of-the-art networks require.

=Background, Notation, and Definitions= | =Background, Notation, and Definitions= | ||

==What is a Capsule== | ==What is a Capsule== | ||

"Each capsule learns to recognize an implicitly defined visual entity over a limited domain of viewing conditions and deformations and it outputs both the probability that the entity is present within its limited domain and a set of “instantiation parameters” that may include the precise pose, lighting and deformation of the visual entity relative to an implicitly defined canonical version of that entity. When the capsule is working properly, the probability of the visual entity being present is locally invariant — it does not change as the entity moves over the manifold of possible appearances within the limited domain covered by the capsule. The instantiation parameters, however, are “equivariant” — as the viewing conditions change and the entity moves over the appearance manifold, the instantiation parameters change by a corresponding amount because they are representing the intrinsic coordinates of the entity on the appearance manifold." | "Each capsule learns to recognize an implicitly defined visual entity over a limited domain of viewing conditions and deformations and it outputs both the probability that the entity is present within its limited domain and a set of “instantiation parameters” that may include the precise pose, lighting, and deformation of the visual entity relative to an implicitly defined canonical version of that entity. When the capsule is working properly, the probability of the visual entity being present is locally invariant — it does not change as the entity moves over the manifold of possible appearances within the limited domain covered by the capsule. The instantiation parameters, however, are “equivariant” — as the viewing conditions change and the entity moves over the appearance manifold, the instantiation parameters change by a corresponding amount because they are representing the intrinsic coordinates of the entity on the appearance manifold." | ||

In essence, capsules store object properties in a vector form; probability of detection is encoded as the vector's length, while spatial properties are encoded as the individual vector components. Thus, when a feature is present but the image captures it under a different angle, the probability of detection remains unchanged. | In essence, capsules store object properties in a vector form; probability of detection is encoded as the vector's length, while spatial properties are encoded as the individual vector components. Thus, when a feature is present but the image captures it under a different angle, the probability of detection remains unchanged. | ||

A brief overview of capsules can be found in another paper by the same authors. To quote from [https://openreview.net/pdf?id=HJWLfGWRb this paper]:

<blockquote>
A capsule network consists of several layers of capsules. The set of capsules in layer <math>L</math> is denoted as <math>\Omega_L</math>. Each capsule has a 4x4 pose matrix, <math>M</math>, and an activation probability, <math>a</math>. These are like the activities in a standard neural net: they depend on the current input and are not stored. In between each capsule <math>i</math> in layer <math>L</math> and each capsule <math>j</math> in layer <math>L + 1</math> is a 4x4 trainable transformation matrix, <math>W_{ij}</math>. These <math>W_{ij}</math>'s (and two learned biases per capsule) are the only stored parameters and they are learned discriminatively. The pose matrix of capsule <math>i</math> is transformed by <math>W_{ij}</math> to cast a vote <math>V_{ij} = M_iW_{ij}</math> for the pose matrix of capsule <math>j</math>. The poses and activations of all the capsules in layer <math>L + 1</math> are calculated by using a non-linear routing procedure which gets as input <math>V_{ij}</math> and <math>a_i</math> for all <math>i \in \Omega_L, j \in \Omega_{L+1}</math>.
</blockquote>

==Notation== | ==Notation== | ||


We want the length of the output vector of a capsule to represent the probability that the entity represented by the capsule is present in the current input. The paper performs a non-linear squashing operation to ensure that vector length falls between 0 and 1, with shorter vectors (less likely to exist entities) being shrunk towards 0. | We want the length of the output vector of a capsule to represent the probability that the entity represented by the capsule is present in the current input. The paper performs a non-linear squashing operation to ensure that vector length falls between 0 and 1, with shorter vectors (less likely to exist entities) being shrunk towards 0. | ||

\begin{align} \mathbf{v}_j &= \frac{||\mathbf{s}_j||^2}{1+ ||\mathbf{s}_j||^2} \frac{\mathbf{s}_j}{||\mathbf{s}_j||} \end{align}

where <math>\mathbf{v}_j</math> is the vector output of capsule <math>j</math> and <math>s_j</math> is its total input. | where <math>\mathbf{v}_j</math> is the vector output of capsule <math>j</math> and <math>s_j</math> is its total input. | ||
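The squashing non-linearity can be sketched in a few lines of plain Python. This is a minimal illustration of the formula above, not the authors' implementation; the helper names `squash` and `length` and the zero-vector guard are assumptions made for the example.

```python
import math


def length(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(x * x for x in v))


def squash(s):
    """Rescale s to length |s|^2 / (1 + |s|^2) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        # Assumption: map the zero vector to itself to avoid dividing by zero.
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


long_vec = squash([3.0, 4.0])     # input length 5
short_vec = squash([0.03, 0.04])  # input length 0.05
print(length(long_vec), length(short_vec))
```

A long input ends up with a length just below 1, while a short input is shrunk almost to 0, which is what lets the length act as an existence probability.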

For all but the first layer of capsules, the total input to a capsule <math>\mathbf{s}_j</math> is a weighted sum over all “prediction vectors” <math>\hat{\mathbf{u}}_{j|i}</math> from the capsules in the layer below. Each prediction vector is produced by multiplying the output <math>\mathbf{u}_i</math> of a capsule in the layer below by a weight matrix <math>\mathbf{W}_{ij}</math>:

\begin{align}
\mathbf{s}_j = \sum_i c_{ij}\hat{\mathbf{u}}_{j|i}, \hspace{0.5em} \hat{\mathbf{u}}_{j|i}= \mathbf{W}_{ij}\mathbf{u}_i
\end{align}

where the <math>c_{ij}</math> are coupling coefficients that are determined by the iterative dynamic routing process. | where the <math>c_{ij}</math> are coupling coefficients that are determined by the iterative dynamic routing process. | ||
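As a rough sketch of the two formulas above, the snippet below computes the prediction vectors and their weighted sum for a single parent capsule <math>j</math>. The function names and the toy matrices are hypothetical, and the coupling coefficients are simply given rather than produced by routing.

```python
def matvec(W, u):
    """Multiply matrix W (list of rows) by vector u."""
    return [sum(w * x for w, x in zip(row, u)) for row in W]


def total_input(W_j, u, c_j):
    """Compute s_j = sum_i c_ij * (W_ij u_i) for one parent capsule j.

    W_j[i] is the matrix W_ij, u[i] is the output of child capsule i,
    and c_j[i] is the coupling coefficient c_ij.
    """
    predictions = [matvec(W_ij, u_i) for W_ij, u_i in zip(W_j, u)]
    dim = len(predictions[0])
    return [sum(c * p[d] for c, p in zip(c_j, predictions)) for d in range(dim)]


# Two 2-D child capsules feeding one 3-D parent capsule (toy values).
W_j = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]],
]
u = [[1.0, 2.0], [3.0, 4.0]]
c_j = [0.25, 0.75]

s_j = total_input(W_j, u, c_j)
print(s_j)
```

Here the prediction vectors are [1, 2, 3] and [6, 8, 0], so the second child dominates the parent's total input because of its larger coupling coefficient.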


\begin{align}
c_{ij} = \frac{\exp(b_{ij})}{\sum_k \exp(b_{ik})}
\end{align}
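The softmax above is taken over all parents <math>k</math> of a single lower-level capsule <math>i</math>. A minimal sketch follows; the function name and the max-subtraction trick for numerical stability are assumptions of this example rather than details from the paper.

```python
import math


def coupling_coefficients(b_i):
    """Softmax over the logits b_ik of one lower-level capsule i across all parents k."""
    shift = max(b_i)  # subtracting the max leaves the result unchanged but avoids overflow
    exps = [math.exp(b - shift) for b in b_i]
    total = sum(exps)
    return [e / total for e in exps]


uniform = coupling_coefficients([0.0, 0.0, 0.0])
skewed = coupling_coefficients([0.0, 1.0, 2.0])
print(uniform, skewed)
```

With all logits at zero the capsule splits its output evenly among its parents; raising one logit shifts coupling toward that parent while the coefficients still sum to 1.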

=Network Training and Dynamic Routing= | =Network Training and Dynamic Routing= | ||


[[File:CapsuleNets.jpeg|center|800px]] | [[File:CapsuleNets.jpeg|center|800px]] | ||

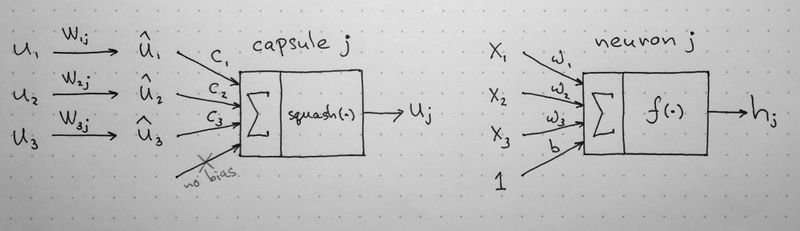

The above image illustrates the key mathematical operations happening within a capsule (and compares them to the structure of a neuron). Although the operations are rather straightforward, it is crucial to note that the capsule applies an affine transformation to each input vector. The length of each input vector <math>\mathbf{u}_{i}</math> represents the probability that entity <math>i</math> exists at the lower level. This vector is then reoriented with an affine transform using the <math>\mathbf{W}_{ij}</math> matrices, which encode spatial relationships between entity <math>\mathbf{u}_{i}</math> and other lower-level features.

We illustrate the intuition behind vector-to-vector matrix multiplication within capsules using the following example: if vectors <math>\mathbf{u}_{1}</math>, <math>\mathbf{u}_{2}</math>, and <math>\mathbf{u}_{3}</math> represent detection of eyes, nose, and mouth respectively, then after multiplication with trained weight matrices <math>\mathbf{W}_{ij}</math> (where j denotes existence of a face), we should get a general idea of the general location of the higher level feature (face), similar to the image below. | |||

[[File:Predictions.jpeg |center]] | [[File:Predictions.jpeg |center]] | ||

==Dynamic Routing== | ==Dynamic Routing== | ||

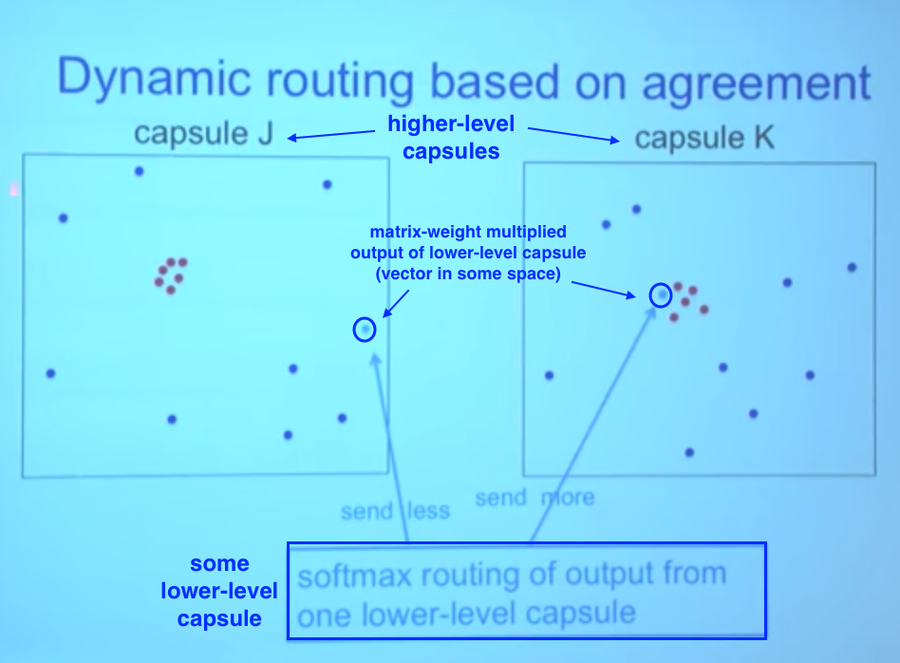

A capsule <math>i</math> in a lower-level layer needs to decide how to send its output vector to higher-level capsules <math>j</math>. This decision is made with probability proportional to <math>c_{ij}</math>. If there are <math>M</math> capsules in the level that capsule <math>i</math> routes to, then we know the following properties about <math>c_{ij}</math>: <math>\sum_{j=1}^M c_{ij} = 1, c_{ij} \geq 0</math>

In essence, the <math>\{c_{ij}\}_{j=1}^M</math> denotes a discrete probability distribution with respect to capsule <math>i</math>'s output location. Lower level capsules decide which higher level capsules to send vectors into by adjusting the corresponding routing weights <math>\{c_{ij}\}_{j=1}^M</math>. After a few iterations in training, numerous vectors will have already been sent to all higher level capsules. Based on the similarity between the current vector being routed and all vectors already sent into the higher level capsules, we decide which capsule to send the current vector into. | |||

[[File:Dynamic Routing.png|center|900px]] | |||

From the image above, we notice that a cluster of points similar to the current vector has already been routed into capsule K, while most points in capsule J are highly dissimilar. It thus makes more sense to route the current observations into capsule K; we adjust the corresponding weights upward during training. | |||

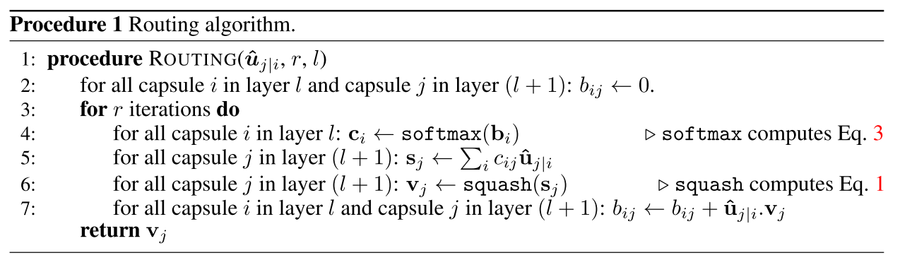

These weights are determined through the dynamic routing procedure: | |||

[[File:Routing Algo.png|900px]] | |||

Note that the convergence of this routing procedure has been questioned. Although it is empirically shown that this procedure converges, the convergence has not been proven. | |||
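The routing-by-agreement loop can be sketched in plain Python as follows. This is an illustrative reading of the procedure (logits start at zero, coupling coefficients come from a softmax, and each logit is increased by the scalar product of a prediction vector with the parent's output), not the authors' code. The function names, the data layout `u_hat[i][j]`, and the toy predictions are assumptions of the example.

```python
import math


def softmax(xs):
    shift = max(xs)
    exps = [math.exp(x - shift) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def squash(s):
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def route(u_hat, iterations=3):
    """u_hat[i][j] is the prediction vector from child capsule i for parent j."""
    n_child, n_parent = len(u_hat), len(u_hat[0])
    dim = len(u_hat[0][0])
    b = [[0.0] * n_parent for _ in range(n_child)]  # routing logits b_ij
    for _ in range(iterations):
        c = [softmax(b_i) for b_i in b]  # coupling coefficients per child
        v = []
        for j in range(n_parent):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_child))
                   for d in range(dim)]
            v.append(squash(s_j))
        for i in range(n_child):
            for j in range(n_parent):
                # Agreement: scalar product of the prediction with the parent's output.
                b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c


# Both children agree about parent 0 but make opposite predictions for parent 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
]
v, c = route(u_hat)
print(c[0], v[0], v[1])
```

In this toy case the conflicting predictions for parent 1 cancel out, so both children shift their coupling toward parent 0, where their predictions agree.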

Although dynamic routing is not the only manner in which we can encode relationships between capsules, the premise of the paper is to demonstrate the capabilities of capsules under a simple implementation. Since the paper was released in 2017, numerous alternative routing implementations have been released including an EM matrix routing algorithm by the same authors (ICLR 2018). | |||

=Architecture= | =Architecture= | ||

The capsule network architecture given by the authors has 11.36 million trainable parameters. The paper itself is not very detailed on the exact implementation of each architectural layer, which leaves some ambiguity when coding various aspects of the original network. The capsule network has six layers overall: the first three form the encoder, and the last three form the decoder.

==Loss Function== | |||

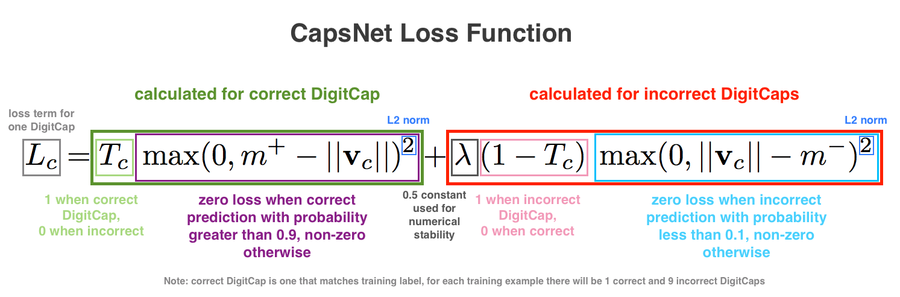

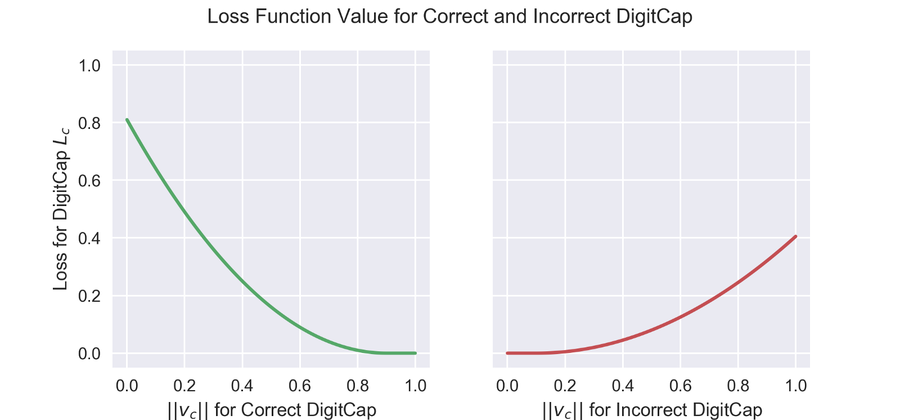

[[File:Loss Function.png|900px]] | |||

The cost function looks complicated, but it can be broken down into intuitive components. Recall that the length of a capsule's output vector denotes the probability that the corresponding object exists. The first term of the equation is active only for the capsule of the class that is actually present; it is zero for every other capsule. It penalizes that capsule when its vector norm falls below a fixed margin <math>m^+ := 0.9</math>, using the difference between <math>m^+</math> and the norm. The second term is active only for capsules of absent classes and penalizes the network's confidence in those incorrect labels: it is computed by subtracting <math>m^- := 0.1</math> from the vector norm.
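A minimal sketch of this margin loss for a single example is given below. The squared hinge terms and the down-weighting factor `lam = 0.5` for absent classes follow the original paper's formula and are not stated in the text here, so treat them as assumptions of the example; the function name is hypothetical.

```python
def margin_loss(lengths, target, m_plus=0.9, m_minus=0.1, lam=0.5):
    """Margin loss for one example.

    lengths[k] is the norm of the output vector of class capsule k,
    and target is the index of the class that is actually present.
    """
    total = 0.0
    for k, norm in enumerate(lengths):
        if k == target:
            # Present class: penalize norms below m_plus.
            total += max(0.0, m_plus - norm) ** 2
        else:
            # Absent class: penalize norms above m_minus, down-weighted by lam.
            total += lam * max(0.0, norm - m_minus) ** 2
    return total


confident = margin_loss([0.95, 0.05, 0.05], target=0)
confused = margin_loss([0.5, 0.6], target=0)
print(confident, confused)
```

The loss is zero once the correct capsule is longer than 0.9 and every other capsule is shorter than 0.1, so the network is not pushed to make its vectors any more extreme than that.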

A graphical representation of loss function values under varying vector norms is given below. | |||

[[File:Loss function chart.png|900px]] | |||

==Encoder Layers== | |||

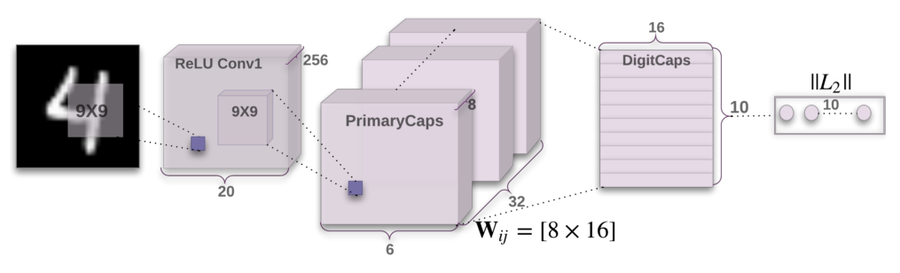

All experiments within this paper were conducted on the MNIST dataset, and thus the architecture is built to classify the corresponding dataset. For more complex datasets, the experiments were less promising. | |||

[[File:Architecture.png|center|900px]] | |||

The encoder layer takes in a 28x28 MNIST image and learns a 16 dimensional representation of instantiation parameters. | |||

'''Layer 1: Convolution''': | |||

This layer is a standard convolution layer. Using kernels with size 9x9x1, a stride of 1, and a ReLU activation function, we detect the 2D features within the network. | |||

'''Layer 2: PrimaryCaps''': | |||

We represent the low-level features detected during convolution as 32 primary capsules. Each capsule applies eight convolutional kernels with stride 2 to the output of the convolution layer and feeds the corresponding transformed tensors into the DigitCaps layer.

'''Layer 3: DigitCaps''':

This layer contains 10 digit capsules, one for each digit. As explained in the dynamic routing procedure, each input vector from the PrimaryCaps layer has its own corresponding weight matrix <math>W_{ij}</math>. Using the routing coefficients <math>c_{ij}</math> and temporary coefficients <math>b_{ij}</math>, we train the DigitCaps layer to output ten 16-dimensional vectors. The length of the <math>i^{th}</math> vector in this layer corresponds to the probability of detection of digit <math>i</math>.
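The tensor sizes implied by the three encoder layers can be checked with some quick arithmetic. The sketch below assumes unpadded convolutions and a 9x9 kernel in the PrimaryCaps layer; the latter comes from the original paper rather than from the description above. The variable names are illustrative.

```python
def conv_output_size(size, kernel, stride):
    """Spatial output size of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1


# Layer 1: 28x28 input, 9x9 kernels, stride 1.
conv1_size = conv_output_size(28, 9, 1)

# Layer 2: PrimaryCaps, assumed 9x9 kernels with stride 2.
primary_size = conv_output_size(conv1_size, 9, 2)

# 32 capsule channels, each producing one 8-D capsule per spatial location.
num_primary_capsules = 32 * primary_size * primary_size

# Layer 3: one 8x16 matrix W_ij per (primary capsule, digit capsule) pair.
routing_weight_count = num_primary_capsules * 10 * 8 * 16

print(conv1_size, primary_size, num_primary_capsules, routing_weight_count)
```

Under these assumptions the DigitCaps layer receives 1152 eight-dimensional input vectors, each with its own weight matrix per digit capsule.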

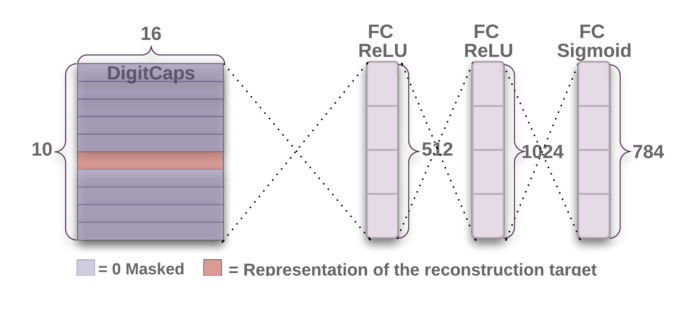

==Decoder Layers== | |||

The decoder layer aims to train the capsules to extract meaningful features for image detection/classification. During training, it takes the 16-dimensional instantiation vector of the correct (not predicted) DigitCaps capsule and attempts to recreate the 28x28 MNIST image as closely as possible. Setting the loss function as reconstruction error (Euclidean distance between the reconstructed image and original image), we tune the capsules to encode features that are meaningful within the actual image.

[[File:Decoder.png|center|900px]] | |||

The layer consists of three fully connected layers, and transforms a 16x1 vector from the encoder layer into a 28x28 image. | |||

In addition to the DigitCaps margin loss, we add reconstruction error as a form of regularization. During training, everything but the activity vector of the correct digit capsule is masked, and this activity vector is then used to reconstruct the input image. We minimize the squared Euclidean distance between the outputs of the logistic units (the reconstructed image) and the pixel intensities of the original image. We scale down this reconstruction loss by 0.0005 so that it does not dominate the margin loss during training. As illustrated below, reconstructions from the 16D output of the CapsNet are robust while keeping only important details.
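The masking step and the combined objective can be sketched as follows. The decoder itself is omitted; `reconstructed` stands in for its output, and all function names are hypothetical. The reconstruction term is taken here as the sum of squared pixel differences, which is one reading of the description above.

```python
def mask_all_but(capsule_outputs, target):
    """Zero every capsule's activity vector except the one for the target class."""
    return [v if k == target else [0.0] * len(v)
            for k, v in enumerate(capsule_outputs)]


def reconstruction_loss(original, reconstructed):
    """Sum of squared differences between flattened pixel lists."""
    return sum((o - r) ** 2 for o, r in zip(original, reconstructed))


def total_loss(margin, original, reconstructed, weight=0.0005):
    """Margin loss plus the down-weighted reconstruction loss."""
    return margin + weight * reconstruction_loss(original, reconstructed)


masked = mask_all_but([[1.0, 2.0], [3.0, 4.0]], target=1)

# Worst case for a 28x28 image: every pixel is off by 1.
worst = total_loss(0.1, [1.0] * 784, [0.0] * 784)
print(masked, worst)
```

Even when every one of the 784 pixels is wrong by the maximum amount, the reconstruction term contributes only 0.392, which shows how the 0.0005 weight keeps it from swamping the margin loss.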

[[File:Reconstruction.png|center|900px]] | |||

=MNIST Experimental Results= | |||

==Accuracy== | |||

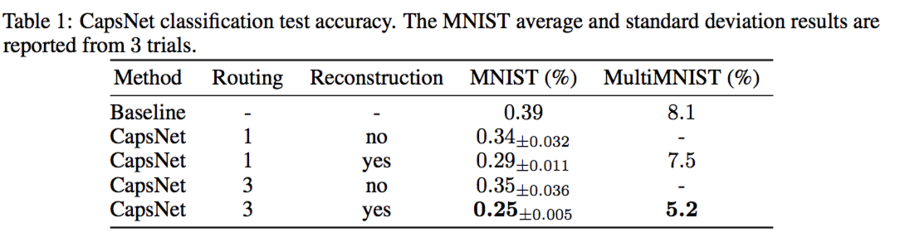

The paper tests on the MNIST dataset with 60K training examples, and 10K testing. Wan et al. [2013] achieves 0.21% test error with ensembling and augmenting the data with rotation and scaling. They achieve 0.39% without them. As shown in Table 1, the authors manage to achieve 0.25% test error with only a 3 layer network; the previous state of the art only beat this number with very deep networks. This example shows the importance of routing and reconstruction regularizer, which boosts the performance. On the other hand, while the accuracies are very high, the number of parameters is much smaller compared to the baseline model. | |||

[[File:Accuracies.png|center|900px]] | |||

==What Capsules Represent for MNIST== | |||

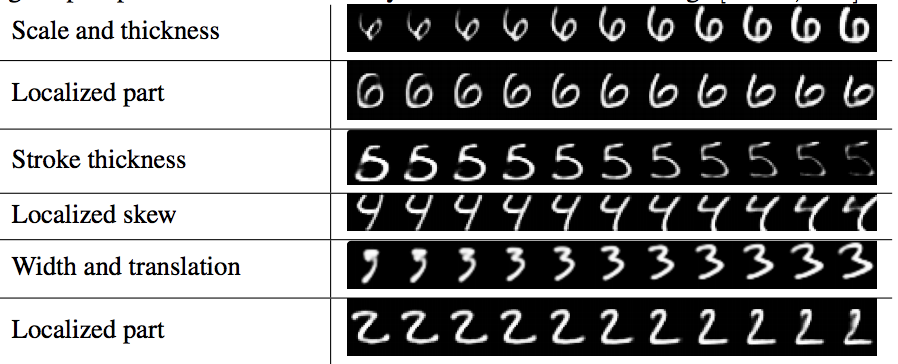

The following figure shows the digit representation under capsules. Each row shows the reconstruction when one of the 16 dimensions in the DigitCaps representation is tweaked by intervals of 0.05 in the range [−0.25, 0.25]. By tweaking the values, we notice how the reconstruction changes, and thus get a sense of what each dimension represents. The authors found that some dimensions represent global properties of the digits, while others represent localized properties.

[[File:CapsuleReps.png|center|900px]] | |||

One example the authors provide is: different dimensions are used for the length of the ascender of a 6 and the size of the loop. The variations include stroke thickness, skew and width, as well as digit-specific variations. The authors are able to show dimension representations using a decoder network by feeding a perturbed vector. | |||

==Robustness of CapsNet== | |||

The authors conclude that DigitCaps capsules learn more robust representations for each digit class than traditional CNNs. The trained CapsNet becomes moderately robust to small affine transformations in the test data. | |||

To compare the robustness of CapsNet to affine transformations against traditional CNNs, both models (CapsNet and a traditional CNN with MaxPooling and DropOut) were trained on a padded and translated MNIST training set, in which each example is an MNIST digit placed randomly on a black background of 40 × 40 pixels. The networks were then tested on the [http://www.cs.toronto.edu/~tijmen/affNIST/ affNIST] dataset (MNIST digits with random affine transformation). An under-trained CapsNet which achieved 99.23% accuracy on the MNIST test set achieved a corresponding 79% accuracy on the affNIST test set. A traditional CNN achieved similar accuracy (99.22%) on the MNIST test set, but only 66% on the affNIST test set.

=MultiMNIST & Other Experiments=

==MultiMNIST==

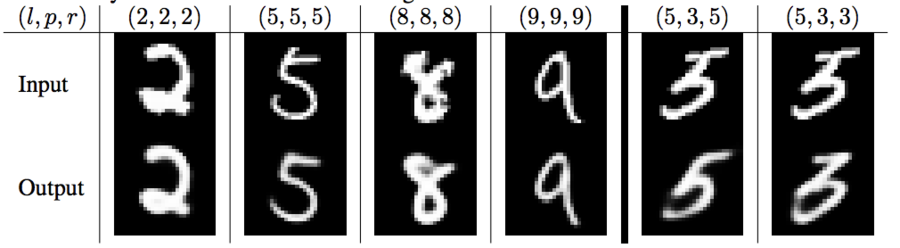

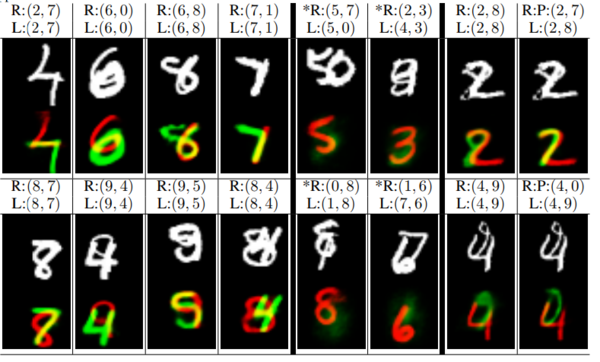

To evaluate the performance of the model on highly overlapping digits, the authors generate a 'MultiMNIST' dataset. In MultiMNIST, each image consists of two overlaid MNIST digits from the same set (train or test) but of different classes. The results indicate a classification error rate of 5%. Additionally, CapsNet can be used to segment the image into the two digits that compose it. Moreover, the model is able to deal with the overlaps and reconstruct digits correctly since each digit capsule can learn the style from the votes of the PrimaryCapsules layer (Figure 5).

There are some additional steps to generating the MultiMNIST dataset.

1. Both images are shifted by up to 4 pixels in each direction, resulting in a 36 × 36 image. The bounding boxes of the two digits overlap by approximately 80%, so the shift helps keep both digits identifiable (since there is no RGB difference the network could learn to separate the digits).

2. The label becomes a vector of two numbers, representing the original digit and the randomly generated (and overlaid) digit.
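The generation steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, images are lists of rows with intensities in [0, 1], each 28 × 28 digit is placed around the centre of the 36 × 36 canvas, and overlapping pixel values are clipped at 1 (as noted in the Figure 5 caption).

```python
import random

def place_shifted(digit, canvas_size=36, max_shift=4, rng=random):
    """Place a square digit image on a larger canvas, shifted by up to max_shift pixels."""
    size = len(digit)
    base = (canvas_size - size) // 2                 # centred offset: (36 - 28) // 2 = 4
    dy = base + rng.randint(-max_shift, max_shift)   # final offset lies in 0..8
    dx = base + rng.randint(-max_shift, max_shift)
    canvas = [[0.0] * canvas_size for _ in range(canvas_size)]
    for y in range(size):
        for x in range(size):
            canvas[y + dy][x + dx] = digit[y][x]
    return canvas

def overlay(a, b):
    """Add two canvases pixel-wise and clip intensities at 1."""
    return [[min(1.0, pa + pb) for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]

def make_multimnist_example(digit_a, label_a, digit_b, label_b, rng=random):
    """Overlay two shifted digits of different classes; the label is the pair of classes."""
    if label_a == label_b:
        raise ValueError("the two digits must come from different classes")
    image = overlay(place_shifted(digit_a, rng=rng), place_shifted(digit_b, rng=rng))
    return image, (label_a, label_b)

# Toy stand-ins for two MNIST digits (constant-intensity squares, not real data).
digit_a = [[0.8] * 28 for _ in range(28)]
digit_b = [[0.6] * 28 for _ in range(28)]
image, labels = make_multimnist_example(digit_a, 3, digit_b, 7, rng=random.Random(0))
print(len(image), len(image[0]), labels)
```

Passing an explicit <code>random.Random</code> instance keeps the example reproducible; a real pipeline would draw the second digit at random from the same split.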

[[File:CapsuleNets MultiMNIST.PNG|600px|thumb|center|Figure 5: Sample reconstructions of a CapsNet with 3 routing iterations on the MultiMNIST test dataset. The two reconstructed digits are overlaid in green and red as the lower image. The upper image shows the input image. L:(l1; l2) represents the label for the two digits in the image and R:(r1; r2) represents the two digits used for reconstruction. The two rightmost columns show two examples with wrong classification reconstructed from the label and from the prediction (P). In the (2; 8) example the model confuses 8 with a 7 and in (4; 9) it confuses 9 with 0. The other columns have correct classifications and show that the model accounts for all the pixels while being able to assign one pixel to two digits in extremely difficult scenarios (columns 1-4). Note that in dataset generation the pixel values are clipped at 1. The two columns with the (*) mark show reconstructions from a digit that is neither the label nor the prediction. These columns suggest that the model is not just finding the best fit for all the digits in the image including the ones that do not exist. Therefore in the case of (5; 0) it cannot reconstruct a 7 because it knows that there is a 5 and 0 that fit best and account for all the pixels. Also, in the case of (8; 1) the loop of 8 has not triggered 0 because it is already accounted for by 8. Therefore it will not assign one pixel to two digits if one of them does not have any other support.]]

==Other datasets==

The authors also tested the proposed capsule model on the CIFAR10 dataset and achieved an error rate of 10.6%. The model tested was an ensemble of 7 models. Each of the models in the ensemble had the same architecture as the model used for MNIST (apart from the 3 color channels of the input and 64 different types of primary capsules being used). These 7 models were trained on 24x24 patches of the training images with 3 routing iterations. During experimentation, the authors also found that adding a none-of-the-above category helped improve the overall performance. The error rate achieved is comparable to that of a standard CNN model. According to the authors, one of the reasons for the low performance is that the backgrounds in CIFAR-10 images are too varied to be adequately modeled by a reasonably sized capsule net.

The proposed model was also evaluated on a small subset of the SVHN dataset. The network was much smaller and was trained using only 73257 training images. It still achieved an error rate of 4.3% on the test set.

=Critique=

Although the network performs very well in the authors' experiments, it has a long way to go on more complex datasets. On CIFAR-10, the network achieved subpar results, and performance appears to degrade as the problem becomes more complex. This is to be expected, since these networks are still at an early stage; further innovations may come in the following years. It would also be wise to evaluate the model on larger datasets to make its claims more convincing. MNIST has simple patterns, and if the model had to be showcased on a single dataset, MNIST was a poor choice, particularly since the focus is on human-like vision and digits in real-life settings are rarely this regular.

Hinton talks about CapsuleNets revolutionizing areas such as self-driving, but such groundbreaking innovations are far away from CIFAR-10, and even further from MNIST. Only time can tell if CapsNets will live up to their hype.

Moreover, the paper provides no underlying intuition for its main point, namely that the proposed architecture preserves relations between extracted features. An explanation of the intuition behind this idea would go a long way in arguing against CNNs.

Capsules inherently segment images and learn a lower dimensional embedding in a new manner, which makes them likely to perform well on segmentation and computer vision tasks once further research is done.

Additionally, these networks are more interpretable than CNNs, and have strong theoretical reasoning for why they could work. Naturally, it would be hard for a new architecture to beat the heavily researched/modified CNNs.

* ([https://openreview.net/forum?id=HJWLfGWRb]) It is not fully clear how efficiently the approach can be run or how well it scales. Evaluation is performed on a small dataset for shape recognition. The approach will need to be tested on larger, more challenging datasets.

=Future Work=

The same authors (Hinton, Sabour, and Frosst) presented another paper, "Matrix Capsules with EM Routing", at ICLR 2018, which achieved better results than the work presented in this paper. They presented a new multi-layered capsule network architecture, implemented an EM routing procedure, and introduced "Coordinate Addition". While dynamic routing involves capsules voting on the pose matrix of many different capsules in the layer above, EM routing iteratively updates coefficients for each image using the Expectation-Maximization algorithm such that the output of each capsule is routed to a capsule in the layer above that receives a cluster of similar votes. This new type of capsule reduced the number of errors by 45%, and performed better than a standard CNN on white box adversarial attacks. Capsule architectures are gaining interest because of their ability to achieve equivariance of parts, and because they employ a new form of pooling called "routing" (as opposed to max pooling), which groups parts that make similar predictions of the whole to which they belong, rather than relying on spatial co-locality.

Moreover, the authors hint at changing the curvature and sensitivities to various factors by introducing a new form of loss function. This may improve the performance of the model on more complicated datasets, which is one of the model's drawbacks.

Moreover, as mentioned in the critique, a good direction for future work would be to make the model more robust across datasets and to achieve acceptable performance on datasets with images more typical of real-life settings.

=References=

#Geoffrey E. Hinton, Sara Sabour, and Nicholas Frosst. Matrix capsules with EM routing. In International Conference on Learning Representations, 2018.
#S. Sabour, N. Frosst, and G. E. Hinton. Dynamic routing between capsules. arXiv preprint arXiv:1710.09829v2, 2017.
#G. E. Hinton, A. Krizhevsky, and S. D. Wang. Transforming auto-encoders. 2011.
#Geoffrey Hinton's talk: What is wrong with convolutional neural nets? Talk given at MIT, Brain & Cognitive Sciences, Fall Colloquium Series. [https://www.youtube.com/watch?v=rTawFwUvnLE]
#Understanding Hinton’s Capsule Networks, Max Pechyonkin's series. [https://medium.com/ai%C2%B3-theory-practice-business/understanding-hintons-capsule-networks-part-i-intuition-b4b559d1159b]
#Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S. Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, et al. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467, 2016.
#Jimmy Ba, Volodymyr Mnih, and Koray Kavukcuoglu. Multiple object recognition with visual attention. arXiv preprint arXiv:1412.7755, 2014.
#Jia-Ren Chang and Yong-Sheng Chen. Batch-normalized maxout network in network. arXiv preprint arXiv:1511.02583, 2015.
#Dan C. Cireşan, Ueli Meier, Jonathan Masci, Luca M. Gambardella, and Jürgen Schmidhuber. High-performance neural networks for visual object classification. arXiv preprint arXiv:1102.0183, 2011.
#Ian J. Goodfellow, Yaroslav Bulatov, Julian Ibarz, Sacha Arnoud, and Vinay Shet. Multi-digit number recognition from street view imagery using deep convolutional neural networks. arXiv preprint arXiv:1312.6082, 2013.

Latest revision as of 00:36, 17 December 2018

The paper "Dynamic Routing Between Capsules" was written by three researchers at Google Brain: Sara Sabour, Nicholas Frosst, and Geoffrey E. Hinton. This paper was published and presented at the 31st Conference on Neural Information Processing Systems (NIPS 2017) in Long Beach, California. The same three researchers recently published a highly related paper "Matrix Capsules with EM Routing" for ICLR 2018. Source code in tensorflow can be found here [1]

Motivation

Ever since AlexNet eclipsed the performance of competing architectures in the 2012 ImageNet challenge, convolutional neural networks have maintained their dominance in computer vision applications. Despite the recent successes and innovations brought about by convolutional neural networks, some assumptions made in these networks are perhaps unwarranted and deficient. Using a novel neural network architecture, the authors create CapsuleNets, a network that they claim is able to learn image representations in a more robust, human-like manner. With only a 3 layer capsule network, they achieved near state-of-the-art results on MNIST.

The activities of the neurons within an active capsule represent the various properties of a particular entity that is present in the image. These properties can include many different types of instantiation parameter such as pose (position, size, orientation), deformation, velocity, albedo, hue, texture, etc. One very special property is the existence of the instantiated entity in the image. An obvious way to represent existence is by using a separate logistic unit whose output is the probability that the entity exists. This paper explores an interesting alternative which is to use the overall length of the vector of instantiation parameters to represent the existence of the entity and to force the orientation of the vector to represent the properties of the entity. The length of the vector output of a capsule cannot exceed 1 because of an application of a non-linearity that leaves the orientation of the vector unchanged but scales down its magnitude.

The fact that the output of a capsule is a vector makes it possible to use a powerful dynamic routing mechanism to ensure that the output of the capsule gets sent to an appropriate parent in the layer above. Initially, the output is routed to all possible parents but is scaled down by coupling coefficients that sum to 1. For each possible parent, the capsule computes a “prediction vector” by multiplying its own output by a weight matrix. If this prediction vector has a large scalar product with the output of a possible parent, there is top-down feedback which increases the coupling coefficient for that parent and decreases it for other parents. This increases the contribution that the capsule makes to that parent, thus further increasing the scalar product of the capsule’s prediction with the parent’s output. This type of “routing-by-agreement” should be far more effective than the very primitive form of routing implemented by max-pooling, which allows neurons in one layer to ignore all but the most active feature detector in a local pool in the layer below. The authors demonstrate that their dynamic routing mechanism is an effective way to implement the “explaining away” that is needed for segmenting highly overlapping objects.

Adversarial Examples

First discussed by Christian Szegedy et al. in late 2013, adversarial examples have been heavily discussed by the deep learning community as a potential security threat to AI learning. Adversarial examples are defined as inputs that an attacker creates intentionally to fool a machine learning model. An example of an adversarial example is shown below:

To human eyes, the image appears to be a panda both before and after noise is injected into the image, whereas the trained ConvNet model classifies the noisy image as a gibbon with almost 100% certainty. The fact that the network is unable to classify the above image as a panda after the epsilon perturbation leads to many potential security risks in AI-dependent systems such as self-driving vehicles. Although various methods have been suggested to combat adversarial examples, robust defenses are hard to construct due to the inherent difficulties in constructing theoretical models for the adversarial example crafting process. However, beyond the fact that these examples may serve as a security threat, they emphasize that these convolutional neural networks do not learn image classification/object detection patterns the same way that a human would. Rather than identifying the core features of a panda such as its eyes, mouth, nose, and the gradient changes in its black/white fur, the convolutional neural network seems to be learning image representations in a completely different manner. Deep learning researchers often attempt to model neural networks after human learning, and it is clear that further steps must be taken to make ConvNets robust against targeted noise perturbations.

Drawbacks of CNNs

Hinton claims that the key fault with traditional CNNs lies within the pooling function. Although pooling builds translational invariance into the network, it fails to preserve spatial relationships between objects. When we pool, we effectively reduce a [math]\displaystyle{ k \times k }[/math] window of convolved cells to a single scalar. This results in a desired local invariance without inhibiting the network's ability to detect features, but causes valuable spatial information to be lost.
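A small worked example may make the loss of spatial information concrete. The sketch below assumes non-overlapping max pooling on a tiny hand-written feature map (both the helper <code>max_pool</code> and the two maps are made up for illustration); two maps with the feature firing at different positions inside each window pool to the same output.

```python
def max_pool(feature_map, k):
    """Non-overlapping k x k max pooling on a 2D list (sides must be divisible by k)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [max(feature_map[r + dr][c + dc] for dr in range(k) for dc in range(k))
         for c in range(0, cols, k)]
        for r in range(0, rows, k)
    ]

# The same feature fires at different positions inside each 2x2 window.
a = [[1, 0, 0, 0],
     [0, 0, 0, 1],
     [0, 0, 1, 0],
     [0, 1, 0, 0]]
b = [[0, 0, 1, 0],
     [0, 1, 0, 0],
     [1, 0, 0, 0],
     [0, 0, 0, 1]]

pooled_a = max_pool(a, 2)
pooled_b = max_pool(b, 2)
print(pooled_a == pooled_b)   # the layers above cannot tell the two arrangements apart
```

After pooling, the exact position of each activation within its window is no longer available to later layers, which is the information capsules try to keep.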

Also, in CNNs, higher-level features combine lower-level features as a weighted sum: activations of a previous layer multiplied by the current layer's weight, then passed to another activation function. In this process, pose relationship between simpler features is not part of the higher-level feature.

In the example below, the network is able to detect similar features (eyes, mouth, nose, etc.) within both images, but fails to recognize that one image is a human face while the other is a Picasso-esque rearrangement of the same features, due to the CNN's inability to encode spatial relationships after multiple pooling layers. In deep learning, the activation level of a neuron is often interpreted as the likelihood of detecting a specific feature. CNNs are good at detecting features but less effective at exploring the spatial relationships among features (perspective, size, orientation).

Here, the CNN could wrongly activate the neuron for the face detection. Without realizing the mismatch in spatial orientation and size, the activation for the face detection will be too high.

Conversely, we hope that a CNN can recognize that both of the following pictures contain a kitten. Unfortunately, when we feed the two images into a ResNet50 architecture, only the first image is correctly classified, while the second image is predicted to be a guinea pig.

For a more in-depth discussion on the problems with ConvNets, please listen to Geoffrey Hinton's talk "What is wrong with convolutional neural nets?" given at MIT during the Brain & Cognitive Sciences - Fall Colloquium Series (December 4, 2014).

Intuition for Capsules

Human vision ignores irrelevant details by using a carefully determined sequence of fixation points to ensure that only a tiny fraction of the optic array is ever processed at the highest resolution. Hinton argues that our brains reason about visual information by deconstructing it into a hierarchical representation which we then match to familiar patterns and relationships from memory. The key difference between this understanding and the functionality of CNNs is that recognition of an object should not depend on the angle from which it is viewed.

To enforce rotational and translational equivariance, Capsule Networks store and preserve hierarchical pose relationships between objects. The core idea behind capsule theory is the explicit numerical representations of relative relationships between different objects within an image. Building these relationships into the Capsule Networks model, the network is able to recognize newly seen objects as a rotated view of a previously seen object. For example, the below image shows the Statue of Liberty under five different angles. If a person had only seen the Statue of Liberty from one angle, they would be able to ascertain that all five pictures below contain the same object (just from a different angle). However, the underlying concept of rotation invariant recognition using only one point of view might be, in fact, contrary to what most humans actually do. In reality, even though the human observer is able to correctly tag all the five images as representations of the same object, that human observer has accumulated a vast number of learning experiences in terms of discerning colors, shades, shapes, among other factors that most likely improve his or her conclusion and that are quite difficult to embed in an image classification algorithm.

Building on this idea of hierarchical representation of spatial relationships between key entities within an image, the authors introduce Capsule Networks. Unlike traditional CNNs, Capsule Networks are better equipped to classify objects correctly when they are viewed under rotation. Furthermore, the authors managed to achieve state of the art results on MNIST using a fraction of the training samples that alternative state of the art networks require.

Background, Notation, and Definitions

What is a Capsule

"Each capsule learns to recognize an implicitly defined visual entity over a limited domain of viewing conditions and deformations and it outputs both the probability that the entity is present within its limited domain and a set of “instantiation parameters” that may include the precise pose, lighting, and deformation of the visual entity relative to an implicitly defined canonical version of that entity. When the capsule is working properly, the probability of the visual entity being present is locally invariant — it does not change as the entity moves over the manifold of possible appearances within the limited domain covered by the capsule. The instantiation parameters, however, are “equivariant” — as the viewing conditions change and the entity moves over the appearance manifold, the instantiation parameters change by a corresponding amount because they are representing the intrinsic coordinates of the entity on the appearance manifold."

In essence, capsules store object properties in a vector form; probability of detection is encoded as the vector's length, while spatial properties are encoded as the individual vector components. Thus, when a feature is present but the image captures it under a different angle, the probability of detection remains unchanged.

A brief overview of capsules can also be found in other papers by the authors. To quote from "Matrix Capsules with EM Routing":

A capsule network consists of several layers of capsules. The set of capsules in layer [math]\displaystyle{ L }[/math] is denoted as [math]\displaystyle{ \Omega_L }[/math]. Each capsule has a 4x4 pose matrix, [math]\displaystyle{ M }[/math], and an activation probability, [math]\displaystyle{ a }[/math]. These are like the activities in a standard neural net: they depend on the current input and are not stored. In between each capsule [math]\displaystyle{ i }[/math] in layer [math]\displaystyle{ L }[/math] and each capsule [math]\displaystyle{ j }[/math] in layer [math]\displaystyle{ L + 1 }[/math] is a 4x4 trainable transformation matrix, [math]\displaystyle{ W_{ij} }[/math] . These [math]\displaystyle{ W_{ij} }[/math]'s (and two learned biases per capsule) are the only stored parameters and they are learned discriminatively. The pose matrix of capsule [math]\displaystyle{ i }[/math] is transformed by [math]\displaystyle{ W_{ij} }[/math] to cast a vote [math]\displaystyle{ V_{ij} = M_iW_{ij} }[/math] for the pose matrix of capsule [math]\displaystyle{ j }[/math]. The poses and activations of all the capsules in layer [math]\displaystyle{ L + 1 }[/math] are calculated by using a non-linear routing procedure which gets as input [math]\displaystyle{ V_{ij} }[/math] and [math]\displaystyle{ a_i }[/math] for all [math]\displaystyle{ i \in \Omega_L, j \in \Omega_{L+1} }[/math]


Notation

We want the length of the output vector of a capsule to represent the probability that the entity represented by the capsule is present in the current input. The paper performs a non-linear squashing operation to ensure that vector length falls between 0 and 1, with shorter vectors (less likely to exist entities) being shrunk towards 0.

\begin{align} \mathbf{v}_j &= \frac{||\mathbf{s}_j||^2}{1+ ||\mathbf{s}_j||^2} \frac{\mathbf{s}_j}{||\mathbf{s}_j||} \end{align}

where [math]\displaystyle{ \mathbf{v}_j }[/math] is the vector output of capsule [math]\displaystyle{ j }[/math] and [math]\displaystyle{ \mathbf{s}_j }[/math] is its total input.
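The squashing non-linearity can be checked numerically with a few lines of plain Python. This is a minimal sketch of the formula above; the small <code>eps</code> term is an added assumption to avoid division by zero for an all-zero input and is not part of the equation.

```python
import math

def squash(s, eps=1e-9):
    """Scale vector s to length ||s||^2 / (1 + ||s||^2) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    norm = math.sqrt(norm_sq)
    scale = norm_sq / (1.0 + norm_sq) / (norm + eps)   # eps guards against a zero vector
    return [scale * x for x in s]

def length(v):
    return math.sqrt(sum(x * x for x in v))

short = squash([0.1, 0.0])     # short input: output length shrinks toward 0
long_ = squash([30.0, 40.0])   # long input: output length approaches, but stays below, 1
print(length(short), length(long_))
```

The ratio between the components of <code>long_</code> is the same as in the input, which reflects that only the magnitude, not the orientation, is changed.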

For all but the first layer of capsules, the total input to a capsule [math]\displaystyle{ \mathbf{s}_j }[/math] is a weighted sum over all “prediction vectors” [math]\displaystyle{ \hat{\mathbf{u}}_{j|i} }[/math] from the capsules in the layer below. Each prediction vector is produced by multiplying the output [math]\displaystyle{ \mathbf{u}_i }[/math] of a capsule in the layer below by a weight matrix [math]\displaystyle{ \mathbf{W}_{ij} }[/math]:

\begin{align} \mathbf{s}_j = \sum_i c_{ij}\hat{\mathbf{u}}_{j|i}, ~\hspace{0.5em} \hat{\mathbf{u}}_{j|i}= \mathbf{W}_{ij}\mathbf{u}_i \end{align} where the [math]\displaystyle{ c_{ij} }[/math] are coupling coefficients that are determined by the iterative dynamic routing process.

The coupling coefficients between capsule [math]\displaystyle{ i }[/math] and all the capsules in the layer above sum to 1 and are determined by a “routing softmax” whose initial logits [math]\displaystyle{ b_{ij} }[/math] are the log prior probabilities that capsule [math]\displaystyle{ i }[/math] should be coupled to capsule [math]\displaystyle{ j }[/math].

\begin{align} c_{ij} = \frac{\exp(b_{ij})}{\sum_k \exp(b_{ik})} \end{align}
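As a quick numerical illustration of the routing softmax, the sketch below computes the coupling coefficients of a single lower-level capsule from its logits. The example logit values are made up; starting from all-zero logits is an assumption here, corresponding to equal prior coupling to every parent.

```python
import math

def routing_softmax(b_i):
    """Coupling coefficients c_ij for one lower-level capsule i, given its logits b_ij."""
    m = max(b_i)                                  # subtract the max for numerical stability
    exps = [math.exp(b - m) for b in b_i]
    total = sum(exps)
    return [e / total for e in exps]

uniform = routing_softmax([0.0, 0.0, 0.0, 0.0])   # zero logits give equal coupling
skewed = routing_softmax([2.0, 0.0, 0.0, 0.0])    # a larger logit attracts more of the output
print(uniform, skewed)
```

In both cases the coefficients are non-negative and sum to 1, so raising the logit for one parent necessarily lowers the share sent to the others.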

Network Training and Dynamic Routing

Understanding Capsules

The notation can get somewhat confusing, so I will provide intuition behind the computational steps within a capsule. The following image is taken from naturomics' talk on Capsule Networks.

The above image illustrates the key mathematical operations happening within a capsule (and compares them to the structure of a neuron). Although the operations are rather straightforward, it's crucial to note that the capsule applies an affine transformation to each input vector. The length of each input vector [math]\displaystyle{ \mathbf{u}_{i} }[/math] represents the probability of entity [math]\displaystyle{ i }[/math] existing in a lower level. This vector is then reoriented with an affine transform using [math]\displaystyle{ \mathbf{W}_{ij} }[/math] matrices that encode spatial relationships between the lower level entity [math]\displaystyle{ \mathbf{u}_{i} }[/math] and the higher level feature [math]\displaystyle{ j }[/math].

We illustrate the intuition behind vector-to-vector matrix multiplication within capsules using the following example: if vectors [math]\displaystyle{ \mathbf{u}_{1} }[/math], [math]\displaystyle{ \mathbf{u}_{2} }[/math], and [math]\displaystyle{ \mathbf{u}_{3} }[/math] represent detection of eyes, nose, and mouth respectively, then after multiplication with trained weight matrices [math]\displaystyle{ \mathbf{W}_{ij} }[/math] (where j denotes existence of a face), we should get a rough idea of the location of the higher level feature (face), similar to the image below.

Dynamic Routing

A capsule [math]\displaystyle{ i }[/math] in a lower-level layer needs to decide how to send its output vector to higher-level capsules [math]\displaystyle{ j }[/math]. This decision is made with probability proportional to [math]\displaystyle{ c_{ij} }[/math]. If there are [math]\displaystyle{ M }[/math] capsules in the level that capsule [math]\displaystyle{ i }[/math] routes to, then we know the following properties about [math]\displaystyle{ c_{ij} }[/math]: [math]\displaystyle{ \sum_{j=1}^M c_{ij} = 1, c_{ij} \geq 0 }[/math]

In essence, the [math]\displaystyle{ \{c_{ij}\}_{j=1}^M }[/math] denotes a discrete probability distribution with respect to capsule [math]\displaystyle{ i }[/math]'s output location. Lower level capsules decide which higher level capsules to send vectors into by adjusting the corresponding routing weights [math]\displaystyle{ \{c_{ij}\}_{j=1}^M }[/math]. After a few iterations in training, numerous vectors will have already been sent to all higher level capsules. Based on the similarity between the current vector being routed and all vectors already sent into the higher level capsules, we decide which capsule to send the current vector into.

From the image above, we notice that a cluster of points similar to the current vector has already been routed into capsule K, while most points in capsule J are highly dissimilar. It thus makes more sense to route the current observations into capsule K; we adjust the corresponding weights upward during training.

These weights are determined through the dynamic routing procedure:
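As a rough illustration of routing-by-agreement, the following pure-Python sketch combines the squashing function, the routing softmax, and the agreement update (scalar product of prediction vector and parent output) described earlier. It is a simplified sketch rather than the authors' implementation: the logits are assumed to start at zero, three iterations are used, and the toy prediction vectors are made up.

```python
import math

def squash(s, eps=1e-9):
    norm_sq = sum(x * x for x in s)
    scale = norm_sq / (1.0 + norm_sq) / (math.sqrt(norm_sq) + eps)
    return [scale * x for x in s]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def dynamic_routing(u_hat, num_iterations=3):
    """u_hat[i][j] is the prediction vector of lower capsule i for parent capsule j."""
    n_lower, n_upper, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_upper for _ in range(n_lower)]    # routing logits b_ij (assumed zero start)
    for _ in range(num_iterations):
        c = [softmax(row) for row in b]              # c_ij: softmax over the parents j
        v = []
        for j in range(n_upper):
            # s_j = sum_i c_ij * u_hat_{j|i}, then squash to get the parent output v_j
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_lower)) for d in range(dim)]
            v.append(squash(s_j))
        for i in range(n_lower):
            for j in range(n_upper):
                # agreement: scalar product between the prediction and the parent output
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v, c

# Three lower capsules agree on parent 0 but make conflicting predictions for parent 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
v, c = dynamic_routing(u_hat)
print(norm(v[0]), norm(v[1]), c[0])
```

In this toy case the coupling coefficients drift toward parent 0, whose output vector also ends up longer, because that is where the predictions cluster.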

Note that the convergence of this routing procedure has been questioned. Although it is empirically shown that this procedure converges, the convergence has not been proven.

Although dynamic routing is not the only manner in which we can encode relationships between capsules, the premise of the paper is to demonstrate the capabilities of capsules under a simple implementation. Since the paper was released in 2017, numerous alternative routing implementations have been released including an EM matrix routing algorithm by the same authors (ICLR 2018).

Architecture

The capsule network architecture given by the authors has 11.36 million trainable parameters. The paper itself is not very detailed on exact implementation of each architectural layer, and hence it leaves some degree of ambiguity on coding various aspects of the original network. The capsule network has 6 overall layers, with the first three layers denoting components of the encoder, and the last 3 denoting components of the decoder.

Loss Function

The cost function looks complicated, but can be broken down into intuitive components. Before diving into the equation, remember that the length of a capsule's output vector denotes the probability that the corresponding object exists. The loss is computed separately for each digit capsule and then summed. The first term applies to the capsule of the class that is actually present and is zero for all other capsules: it penalizes the capsule when its vector norm falls below a fixed quantity [math]\displaystyle{ m^+ := 0.9 }[/math], based on the difference between [math]\displaystyle{ m^+ }[/math] and the norm. The second term applies to the capsules of classes that are absent: it penalizes the network's confidence in an incorrect label, based on the amount by which the vector norm exceeds [math]\displaystyle{ m^- := 0.1 }[/math].
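For reference, the margin loss in the original paper is, per digit capsule [math]\displaystyle{ k }[/math], [math]\displaystyle{ L_k = T_k \max(0, m^+ - ||\mathbf{v}_k||)^2 + \lambda (1 - T_k) \max(0, ||\mathbf{v}_k|| - m^-)^2 }[/math], where [math]\displaystyle{ T_k = 1 }[/math] if and only if class [math]\displaystyle{ k }[/math] is present and [math]\displaystyle{ \lambda = 0.5 }[/math] down-weights the loss for absent classes. A minimal single-label sketch (the function name and example lengths are made up for illustration):

```python
def margin_loss(lengths, target, m_plus=0.9, m_minus=0.1, lam=0.5):
    """lengths[k] is ||v_k|| for each class capsule; target is the index of the true class."""
    total = 0.0
    for k, norm_k in enumerate(lengths):
        if k == target:
            total += max(0.0, m_plus - norm_k) ** 2          # present class: want ||v_k|| >= m+
        else:
            total += lam * max(0.0, norm_k - m_minus) ** 2   # absent class: want ||v_k|| <= m-
    return total

confident_right = margin_loss([0.95, 0.05, 0.05], target=0)
confident_wrong = margin_loss([0.05, 0.95, 0.05], target=0)
print(confident_right, confident_wrong)
```

A confident correct prediction incurs no loss, while a confident wrong one is penalized both for the short vector of the true class and for the long vector of the wrong class.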

A graphical representation of loss function values under varying vector norms is given below.

Encoder Layers

The architecture described in this section was built for the MNIST dataset and is designed to classify its digits. On more complex datasets, the experiments were less promising.

The encoder layer takes in a 28x28 MNIST image and learns a 16 dimensional representation of instantiation parameters.

Layer 1: Convolution: This layer is a standard convolution layer. Using kernels with size 9x9x1, a stride of 1, and a ReLU activation function, it detects basic 2D features in the image.

Layer 2: PrimaryCaps: We represent the low level features detected during convolution as 32 primary capsules. Each capsule applies eight convolutional kernels with stride 2 to the output of the convolution layer and feeds the corresponding transformed tensors into the DigitCaps layer.

Layer 3: DigitCaps: This layer contains 10 digit capsules, one for each digit. As explained in the dynamic routing procedure, each input vector from the PrimaryCaps layer has its own corresponding weight matrix [math]\displaystyle{ W_{ij} }[/math]. Using the routing coefficients [math]\displaystyle{ c_{ij} }[/math] and temporary coefficients [math]\displaystyle{ b_{ij} }[/math], we train the DigitCaps layer to output ten 16 dimensional vectors. The length of the [math]\displaystyle{ i^{th} }[/math] vector in this layer corresponds to the probability of detection of digit [math]\displaystyle{ i }[/math].
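The tensor shapes implied by the three encoder layers can be worked out with simple arithmetic. The sketch below rests on assumptions taken from the original paper rather than from the description above: convolutions use no padding, the PrimaryCaps layer also uses 9x9 kernels, and each [math]\displaystyle{ W_{ij} }[/math] maps an 8D primary capsule to a 16D digit capsule.

```python
def conv_output_size(input_size, kernel_size, stride):
    """Spatial output size of a convolution without padding."""
    return (input_size - kernel_size) // stride + 1

conv1 = conv_output_size(28, kernel_size=9, stride=1)        # 28x28 image -> 20x20 feature maps
primary = conv_output_size(conv1, kernel_size=9, stride=2)   # 20x20 -> 6x6 grid (9x9 kernel assumed)
num_primary_capsules = primary * primary * 32                # 32 capsule types at each grid position
digit_caps_values = 10 * 16                                  # ten 16-dimensional digit capsules
routing_weights = num_primary_capsules * 10 * 8 * 16         # one 8x16 matrix W_ij per (i, j) pair

print(conv1, primary, num_primary_capsules, digit_caps_values, routing_weights)
```

Under these assumptions, every one of the 1152 primary capsules sends a prediction to each of the 10 digit capsules, which is why the routing step operates on 1152 x 10 coupling coefficients.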

Decoder Layers

The decoder aims to train the capsules to extract meaningful features for image detection/classification. During training, it takes the 16-dimensional instantiation vector of the correct (not predicted) capsule in the DigitCaps layer, and attempts to recreate the 28x28 MNIST image as closely as possible. Setting the loss function as reconstruction error (Euclidean distance between the reconstructed image and original image), we tune the capsules to encode features that are meaningful within the actual image.

The layer consists of three fully connected layers, and transforms a 16x1 vector from the encoder layer into a 28x28 image.

In addition to the DigitCaps loss function, we add reconstruction error as a form of regularization. During training, everything but the activity vector of the correct digit capsule is masked, and then this activity vector is used to reconstruct the input image. We minimize the Euclidean distance between the outputs of the decoder's logistic units (the reconstructed image) and the pixel intensities of the original image. We scale down this reconstruction loss by 0.0005 so that it does not dominate the margin loss during training. As illustrated below, reconstructions from the 16D output of the CapsNet are robust while keeping only important details.
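The masking and loss-scaling steps can be sketched as follows. This is an illustrative sketch only: the helper names are hypothetical, the decoder network itself is omitted, the images are flattened toy lists, and the reconstruction error is taken to be the sum of squared pixel differences (squared Euclidean distance), which is an assumption about the exact form.

```python
def mask_digit_caps(digit_caps, target):
    """Zero every capsule's activity vector except the one for the target class."""
    return [vec if k == target else [0.0] * len(vec) for k, vec in enumerate(digit_caps)]

def reconstruction_loss(reconstruction, image):
    """Sum of squared differences between reconstructed and original pixel intensities."""
    return sum((r - x) ** 2 for r, x in zip(reconstruction, image))

def total_loss(margin, reconstruction, image, scale=0.0005):
    """Margin loss plus the scaled-down reconstruction loss."""
    return margin + scale * reconstruction_loss(reconstruction, image)

digit_caps = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]   # toy capsules (3 classes, 2D each)
masked = mask_digit_caps(digit_caps, target=1)       # only class 1 reaches the decoder

image = [0.0, 1.0, 1.0, 0.0]                         # toy flattened "image"
reconstruction = [0.0, 0.5, 1.0, 0.5]                # toy decoder output
print(masked, total_loss(0.2, reconstruction, image))
```

With the small scale factor, even a poor reconstruction changes the total only slightly, so the margin loss remains the dominant training signal.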

MNIST Experimental Results

Accuracy

The paper tests on the MNIST dataset with 60K training examples, and 10K testing. Wan et al. [2013] achieves 0.21% test error with ensembling and augmenting the data with rotation and scaling. They achieve 0.39% without them. As shown in Table 1, the authors manage to achieve 0.25% test error with only a 3 layer network; the previous state of the art only beat this number with very deep networks. This example shows the importance of routing and reconstruction regularizer, which boosts the performance. On the other hand, while the accuracies are very high, the number of parameters is much smaller compared to the baseline model.

What Capsules Represent for MNIST

The following figure shows the digit representation under capsules. Each row shows the reconstruction when one of the 16 dimensions in the DigitCaps representation is tweaked by intervals of 0.05 in the range [−0.25, 0.25]. By tweaking the values, we notice how the reconstruction changes, and thus get a sense for what each dimension is representing. The authors found that some dimensions represent global properties of the digits, while other represent localized properties.

One example the authors provide is that different dimensions are used for the length of the ascender of a 6 and the size of its loop. The variations captured include stroke thickness, skew, and width, as well as digit-specific variations. The authors visualize what each dimension represents by feeding a perturbed vector to the decoder network.
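The perturbation sweep described above can be sketched as follows: for a chosen dimension, build one copy of the capsule vector per offset in [−0.25, 0.25] with step 0.05, leaving the other 15 dimensions untouched. This is an illustrative sketch with hypothetical function names; each resulting vector would then be passed to the decoder.

```python
def perturbation_values(step=0.05, limit=0.25):
    """Offsets -0.25, -0.20, ..., 0.25, built from integers to avoid float drift."""
    n = round(limit / step)
    return [round(i * step, 10) for i in range(-n, n + 1)]


def perturb_dimension(capsule_vector, dim, offsets):
    """Return one perturbed copy of the vector per offset, changing only `dim`."""
    variants = []
    for delta in offsets:
        v = list(capsule_vector)  # copy so the original vector is left intact
        v[dim] += delta
        variants.append(v)
    return variants
```

With the default arguments the sweep yields 11 vectors per dimension, so a full figure over all 16 dimensions corresponds to 16 rows of 11 reconstructions.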

Robustness of CapsNet

The authors conclude that DigitCaps capsules learn more robust representations for each digit class than traditional CNNs. The trained CapsNet becomes moderately robust to small affine transformations in the test data.

To compare the robustness of CapsNet to affine transformations against that of traditional CNNs, both models (CapsNet and a traditional CNN with MaxPooling and DropOut) were trained on a padded and translated MNIST training set, in which each example is an MNIST digit placed randomly on a black background of 40 × 40 pixels. The networks were then tested on the affNIST dataset (MNIST digits with random affine transformations). An under-trained CapsNet that achieved 99.23% accuracy on the MNIST test set achieved 79% accuracy on the affNIST test set. A traditional CNN achieved similar accuracy (99.22%) on the MNIST test set, but only 66% on the affNIST test set.
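The padded and translated training examples can be produced with a small helper like the one below. It is a sketch in plain Python under the assumption that the digit position is drawn uniformly over all placements that fit on the canvas; the function name and return format are hypothetical.

```python
import random


def place_on_canvas(digit, canvas_size=40, rng=random):
    """Place a square digit image at a random position on a black canvas."""
    size = len(digit)
    top = rng.randint(0, canvas_size - size)   # randint is inclusive on both ends
    left = rng.randint(0, canvas_size - size)
    canvas = [[0.0] * canvas_size for _ in range(canvas_size)]
    for r in range(size):
        for c in range(size):
            canvas[top + r][left + c] = digit[r][c]
    return canvas, (top, left)
```

For a 28 × 28 digit on a 40 × 40 canvas this gives 13 possible offsets along each axis, so the network sees translations during training but no rotation, scaling, or shear, which is what affNIST then tests.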

MultiMNIST & Other Experiments

MultiMNIST

To evaluate the performance of the model on highly overlapping digits, the authors generate a 'MultiMNIST' dataset. In MultiMNIST, each image consists of two overlaid MNIST digits from the same set (train or test) but from different classes. The results indicate a classification error rate of 5%. Additionally, CapsNet can be used to segment the image into the two digits that compose it. Moreover, the model is able to deal with the overlaps and reconstruct the digits correctly, since each digit capsule can pick up the style from the votes of the PrimaryCapsules layer (Figure 5).

There are some additional steps to generating the MultiMNIST dataset.

1. Both images are shifted by up to 4 pixels in each direction, resulting in a 36 × 36 image. After shifting, the bounding boxes of the two digits still overlap by approximately 80% on average; the shift is what keeps both digits identifiable, since there is no RGB difference the network could learn to separate them.

2. The label becomes a vector of two numbers, representing the original digit and the randomly generated (and overlaid) digit.
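The two steps above can be sketched as follows. The sketch assumes that the shifts are drawn uniformly from −4 to 4 pixels and that the two shifted digits are merged by a pixel-wise maximum; the text only says the digits are overlaid, so the merge rule and the function names are assumptions.

```python
import random


def shift_into_canvas(digit, dy, dx, canvas_size=36):
    """Centre a digit in a larger black canvas, then shift it by (dy, dx) pixels."""
    size = len(digit)
    margin = (canvas_size - size) // 2  # 4 pixels for a 28x28 digit in 36x36
    canvas = [[0.0] * canvas_size for _ in range(canvas_size)]
    for r in range(size):
        for c in range(size):
            canvas[margin + dy + r][margin + dx + c] = digit[r][c]
    return canvas


def make_multimnist(digit_a, label_a, digit_b, label_b, max_shift=4, rng=random):
    """Overlay two shifted digits of different classes; return (image, labels)."""
    if label_a == label_b:
        raise ValueError("the two digits must come from different classes")
    shifted = [
        shift_into_canvas(d,
                          rng.randint(-max_shift, max_shift),
                          rng.randint(-max_shift, max_shift))
        for d in (digit_a, digit_b)
    ]
    # Assumption: merge by pixel-wise maximum so overlapping strokes stay in range.
    merged = [[max(p, q) for p, q in zip(row_a, row_b)]
              for row_a, row_b in zip(*shifted)]
    return merged, (label_a, label_b)
```

The label is returned as the pair of digit classes, matching step 2; a training pipeline might instead encode it as a 10-dimensional vector with two active entries.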

Other datasets

The authors also tested the proposed capsule model on the CIFAR10 dataset and achieved an error rate of 10.6%. The model tested was an ensemble of 7 models, each with the same architecture as the model used for MNIST (apart from the 3 color channels of the input and the use of 64 different types of primary capsules). Each of the 7 models was trained on 24x24 patches of the training images with 3 routing iterations. During experimentation, the authors also found that adding a none-of-the-above category helped improve overall performance. The error rate is comparable to what standard CNNs achieved when they were first applied to CIFAR10. According to the authors, one reason for the low performance is that the backgrounds in CIFAR-10 images are too varied to be adequately modeled by a reasonably sized capsule net.
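Two ingredients of this setup, cutting 24x24 patches and combining an ensemble, can be sketched in plain Python. The text does not say how the 7 models' outputs were combined or how patches were chosen; averaging per-class scores and sampling patch positions uniformly are assumptions of this sketch, and the function names are hypothetical.

```python
import random


def random_patch(image, patch=24, rng=random):
    """Cut a random patch x patch window out of a 2-D image (a list of rows)."""
    top = rng.randint(0, len(image) - patch)
    left = rng.randint(0, len(image[0]) - patch)
    return [row[left:left + patch] for row in image[top:top + patch]]


def ensemble_predict(per_model_scores):
    """Average class scores over models; return (predicted class, mean scores)."""
    n_models = len(per_model_scores)
    mean = [sum(col) / n_models for col in zip(*per_model_scores)]
    return max(range(len(mean)), key=mean.__getitem__), mean
```

Each pixel entry can be any value, for example an (R, G, B) tuple, since `random_patch` only slices rows and columns.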

The proposed model was also evaluated on a small subset of the SVHN dataset. The network was much smaller and was trained on only 73257 training images, yet it still achieved an error rate of 4.3% on the test set.

Critique

Although the network performs very favourably in the authors' experiments, it has a long way to go on more complex datasets. On CIFAR-10 the network achieved subpar results, and the experimental results seem to get worse as the problem becomes more complex. This is to be expected, since these networks are still at an early stage; further innovations may come in the coming years or decades. It would also be wise to apply the model to other, larger datasets to make the case for it more convincing. MNIST has simple patterns, and even if the model were to be demonstrated on only one dataset, MNIST would not be the best choice: the focus here is on human-like recognition, and digits are not representative of what is commonly seen in real life.

Hinton talks about CapsuleNets revolutionizing areas such as self-driving, but such groundbreaking innovations are far away from CIFAR-10, and even further from MNIST. Only time can tell if CapsNets will live up to their hype.

Moreover, no underlying intuition is provided for the main point of the paper, namely that the proposed architecture preserves the relations between extracted features. An explanation of the intuition behind this idea would go a long way in making the case against CNNs.

Capsules inherently segment images and learn a lower dimensional embedding in a new manner, which makes them likely to perform well on segmentation and computer vision tasks once further research is done.

Additionally, these networks are more interpretable than CNNs, and have strong theoretical reasoning for why they could work. Naturally, it would be hard for a new architecture to beat the heavily researched/modified CNNs.

- ([2]) It is not fully clear how effectively the approach can be performed or how scalable it is. Evaluation is performed on a small dataset for shape recognition, so the approach will need to be tested on larger, more challenging datasets.

Future Work

The same authors (Geoffrey E. Hinton, Sara Sabour, and Nicholas Frosst) presented another paper, "Matrix Capsules with EM Routing", at ICLR 2018, which achieved better results than the work presented in this paper. They presented a new multi-layered capsule network architecture, implemented an EM routing procedure, and introduced "Coordinate Addition". While dynamic routing involves capsules voting on the pose matrix of many different capsules in the layer above, EM routing iteratively updates the coefficients for each image using the Expectation-Maximization algorithm, such that the output of each capsule is routed to a capsule in the layer above that receives a cluster of similar votes. On the smallNORB benchmark, this new type of capsule network reduced the number of test errors by 45% compared to the state of the art, and it was more resistant than a standard CNN to white-box adversarial attacks. Capsule architectures are gaining interest because of their ability to achieve equivariance of parts and because they employ a new form of pooling called "routing" (as opposed to max pooling), which groups parts that make similar predictions of the whole to which they belong rather than relying on spatial co-locality. Moreover, the authors hint at changing the curvature and the sensitivities to various factors by introducing a new form of loss function. This may improve the model's performance on more complicated datasets, which is currently one of its drawbacks.

Moreover, as mentioned in the critique, worthwhile future work for this group would be to make the model robust across datasets and to achieve acceptable performance on datasets of images more commonly seen in real life.

References

- Geoffrey E. Hinton, Sara Sabour, and Nicholas Frosst. Matrix capsules with EM routing. In International Conference on Learning Representations, 2018.

- S. Sabour, N. Frosst, and G. E. Hinton, “Dynamic routing between capsules,” arXiv preprint arXiv:1710.09829v2, 2017

- Hinton, G. E., Krizhevsky, A. and Wang, S. D. (2011), Transforming Auto-encoders

- Geoffrey Hinton's talk: What is wrong with convolutional neural nets? - Talk given at MIT. Brain & Cognitive Sciences - Fall Colloquium Series. [3]

- Understanding Hinton’s Capsule Networks - Max Pechyonkin's series [4]

- Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467, 2016.

- Jimmy Ba, Volodymyr Mnih, and Koray Kavukcuoglu. Multiple object recognition with visual attention. arXiv preprint arXiv:1412.7755, 2014.

- Jia-Ren Chang and Yong-Sheng Chen. Batch-normalized maxout network in network. arXiv preprint arXiv:1511.02583, 2015.

- Dan C Cireşan, Ueli Meier, Jonathan Masci, Luca M Gambardella, and Jürgen Schmidhuber. High-performance neural networks for visual object classification. arXiv preprint arXiv:1102.0183, 2011.

- Ian J Goodfellow, Yaroslav Bulatov, Julian Ibarz, Sacha Arnoud, and Vinay Shet. Multi-digit number recognition from street view imagery using deep convolutional neural networks. arXiv preprint arXiv:1312.6082, 2013.