maximum-Margin Matrix Factorization: Difference between revisions

m (Conversion script moved page Maximum-Margin Matrix Factorization to maximum-Margin Matrix Factorization: Converting page titles to lowercase) |

|||

| (28 intermediate revisions by 3 users not shown) | |||

| Line 7: | Line 7: | ||

== Hinge Loss Function == | == Hinge Loss Function == | ||

If Y has been fully observed and there is no unknown, our goal is to find X as a simple (low-rank) representation of Y. By choosing the sum-squared error loss function, one can easily use SVD technique to minimize the loss and find X. Despite the fact that this solution is suitable for lowering the rank, it does not work when we have unknowns in Y (due to several local minimas problem). In addition one may think about other loss functions instead of SSE (although SSE is ''convex'' and has a nice behavior in the optimization problems). For example, in this problem '''Hinge loss''' works fine, specially considering the fact that values in Y are restricted to <math>\pm1</math>. Here hinge loss is defined as: | If Y has been fully observed and there is no unknown, our goal is to find X as a simple (low-rank) representation of Y. By choosing the sum-squared error loss function, one can easily use SVD technique to minimize the loss and find X. Despite the fact that this solution is suitable for lowering the rank, it does not work when we have unknowns in Y (due to several local minimas problem). In addition, one may think about other loss functions instead of SSE (although SSE is ''convex'' and has a nice behavior in the optimization problems). For example, in this problem, '''Hinge loss''' works fine, specially considering the fact that values in Y are restricted to <math>\pm1</math>. Here, hinge loss is defined as: | ||

<math>\textrm{Hinge}(X | Y) = \displaystyle \sum_{(i,j) \in S} \max (0, 1 - x_{ij} \cdot y_{ij})</math> | <math>\textrm{Hinge}(X | Y) = \displaystyle \sum_{(i,j) \in S} \max (0, 1 - x_{ij} \cdot y_{ij})</math> | ||

| Line 15: | Line 15: | ||

Another way to tackle this problem is to use '''Matrix Factorization'''. In matrix factorization one tries to find a prediction <math>X = UV^T</math> such that the rank of each factor (U and V) is low. In addition to this U and V are meaningful in this problem; the i<sub>th</sub> row of U is an indicator of the importance (weights) of the features for i<sub>th</sub> user, therefore i<sub>th</sub> row of V is the characterization of the i<sub>th</sub> movie based on its features. Here, lowering the rank of X is equal to lowering the rank of its factors, but unfortunately the main problem in this case is that, rank optimization problem is not ''convex''. | Another way to tackle this problem is to use '''Matrix Factorization'''. In matrix factorization one tries to find a prediction <math>X = UV^T</math> such that the rank of each factor (U and V) is low. In addition to this U and V are meaningful in this problem; the i<sub>th</sub> row of U is an indicator of the importance (weights) of the features for i<sub>th</sub> user, therefore i<sub>th</sub> row of V is the characterization of the i<sub>th</sub> movie based on its features. Here, lowering the rank of X is equal to lowering the rank of its factors, but unfortunately the main problem in this case is that, rank optimization problem is not ''convex''. | ||

=== A short discussion on Loss Function for classification=== | |||

Collaborative prediction, as described in the problem definition, involves classifying each entry of a matrix into either 1 or -1. Consider the notation in the last paragraph and suppose that we are to predict the j<sub>th</sub> row of the matrix X. Suppose further that the matrix U is fixed, then the classification problem boils down to finding the j<sub>th</sub> row of V which gives the ''optimal prediction''. Denoting the j<sub>th</sub> row of V by vector <math>v</math>, the matrix entry <math>X_{ij}</math> by real number <math>x</math>, and the i<sub>th</sub> row of U by vector <math>u</math>, then the classification problem can be rephrased as finding the weight vector <math>v</math> and predict the value of <math>x</math> (which is either 1 or -1) by the dot production function <math>f(v)=vu^T</math>. Usually, we specify a threshold value (for example, take the threshold as 0) and classify <math>x</math> into 1 or -1 as follows: if <math>f(v)</math> is greater than the threshold, then classify <math>x</math> as 1; otherwise classify <math>x</math> as -1. | |||

There are many ways to measure how well the above classification scheme performs(in the training stage). The most natural way is to calculate the number of incorrect classification: when the true value is 1 but the classification scheme gives -1; or vice versa. However, this very natural performance measure gives rise to a very intractable optimization problem. To make the optimization more tractable, several popular ''proxy loss functions'' are used to replace the above measurement in measuring the performance of the classification scheme. These proxy loss functions include the log loss function, the squared loss function and the hinge loss function. | |||

A comparison of these three proxy loss functions is available at http://hunch.net/?p=547. This article suggests not using log loss since the optimisation of log los can be unstable. | |||

== Frobenius Norm == | == Frobenius Norm == | ||

| Line 41: | Line 49: | ||

where <math>\|X\|_{T}</math> is the '''Trace Norm''' of X and is defined as: <br /> | where <math>\|X\|_{T}</math> is the '''Trace Norm''' of X and is defined as: <br /> | ||

<math> \|X\|_{T} = \sum |\lambda_i| = Tr(\sqrt{XX^T})</math> <br /> | <math> \|X\|_{T} = \sum |\lambda_i| = Tr(\sqrt{XX^T})</math> <br /> | ||

In addition, by using SVD of <math>X = A \Sigma B^T</math>, one can see <math>U = A \sqrt{\Sigma}</math> and | In addition, by using SVD of <math>X = A \Sigma B^T</math>, one can see that both <math>U = A \sqrt{\Sigma}</math> and | ||

<math>V = B\sqrt{\Sigma}</math> satisfy this lemma. | <math>V = B\sqrt{\Sigma}</math> satisfy this lemma. | ||

| Line 56: | Line 64: | ||

''Spectral Norm'' of a matrix is the absolute value of its largest eigenvalue. | ''Spectral Norm'' of a matrix is the absolute value of its largest eigenvalue. | ||

In addition it can be easily shown the relation between the norm, trace norm and rank of a matrix: | In addition it can be easily be shown that the relation between the norm, trace norm and rank of a matrix is as follows: | ||

<math>\forall X: \|X\|_{F} \leq \|X\|_{T} \leq \sqrt{Rank(X)} \cdot \|X\|_{F}</math> | <math>\forall X: \|X\|_{F} \leq \|X\|_{T} \leq \sqrt{Rank(X)} \cdot \|X\|_{F}</math> | ||

| Line 64: | Line 72: | ||

<math>\{X| \|X\|_{F} \leq \alpha\} = conv \{uv^T| u \in \Re^n , v \in \Re^m , |u| = |v| = \alpha\}</math> | <math>\{X| \|X\|_{F} \leq \alpha\} = conv \{uv^T| u \in \Re^n , v \in \Re^m , |u| = |v| = \alpha\}</math> | ||

which shows the set of matrices with a bounded trace norm is Convex | which shows that the set of matrices with a bounded trace norm is Convex; also it seems that this set has the lowest rank matrices on its boundary. Thus, this optimization problem is in fact the process of searching in a convex set to optimize a convex function. | ||

== Soft Margin Optimization == | == Soft Margin Optimization == | ||

| Line 88: | Line 96: | ||

which has a dual form with a simple structure. Also the prediction can be done using a solution to the dual problem directly. | which has a dual form with a simple structure. Also the prediction can be done using a solution to the dual problem directly. | ||

== Experiments == | |||

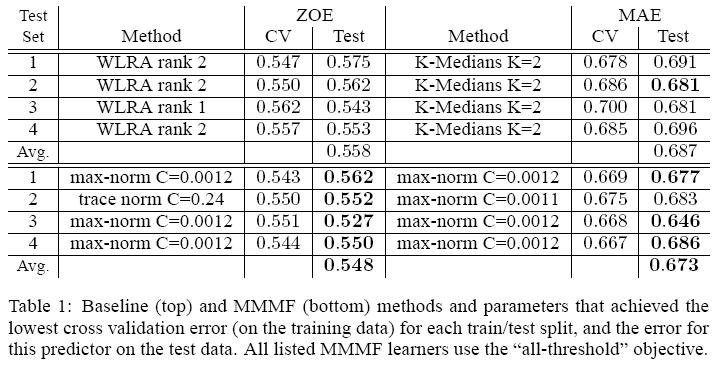

Preliminary experiments was performed on a subset of the 100K MovieLens Dataset, consisting of the 100 users and 100 movies with the most ratings. To do this, we used CSDP to solve the resulting SDPs. The ratings are on a discrete scale of one through five, and we experimented with both generalizations of the hinge loss above, allowing per-user thresholds. As the "base line" learners, we used WLRA and K-Medians. | |||

The the data was split into four sets. For each of the these four test sets, we used the remaining sets to calculate a 3-fold cross-validation (CV) error for each method (WLRA, K-medians, trace norm and max-norm MMMF with immediate-threshold and allthreshold | |||

hinge loss) using a range of parameters (rank for WLRA, number of centers for K-medians, slack cost for MMMF). For each of the four splits, we selected the two MMMF learners with lowest CV ZOE andMAE and the two Baseline learners with lowest CV ZOE and MAE, and measured their error on the held-out test data. | |||

[[File:Paer4-Table1.JPG]] | |||

Table 1 lists these CV and test errors, and the average test error across all four test sets. On average and on three of the four test sets, MMMF achieves lower MAE than the Baseline learners; on all four of the test sets, MMMF achieves lower ZOE than the Baseline learners. | |||

== Limitation == | |||

It is unrealistic that observed entries are assumed to be uniformly sampled. For example, Users tend to rate items they like. In fact, allowing an uncontrolled sampling distribution would guarantee low error on items the user likes, but not on items he would really like based on our prediction. | |||

Latest revision as of 09:45, 30 August 2017

Problem Definition

Assume Y is an [math]\displaystyle{ n \times m }[/math] matrix containing n user preferences about m movies, such that [math]\displaystyle{ y_{ij} = +1 }[/math] if user i likes movie j, and [math]\displaystyle{ y_{ij} = -1 }[/math] if he/she dislikes it. Due to the lack of knowledge about the user's opinions, Y is partially observable: it has some [math]\displaystyle{ \pm1 }[/math] while some other cells are unknown. The main goal is to find matrix X such than it preserves the knowledge in Y, and predicts the value of its unknown cells.

Predicting the unknown values in this problem is possible because the rows and columns of Y are assumed to be related to each other. One can translate this relation by the rank of X; In other words, the rank of X indicates the number of features affecting the values in Y. Therefore, minimizing the number of the features or the rank of X is equivalent to finding a simple relation in the given knowledge of Y. In addition, keeping the number of features low is one way to avoid the problem of over-fitting in the prediction process. If the rank of X is equal to the rank of Y, we will have the trivial solution of X = Y.

Hinge Loss Function

If Y has been fully observed and there is no unknown, our goal is to find X as a simple (low-rank) representation of Y. By choosing the sum-squared error loss function, one can easily use SVD technique to minimize the loss and find X. Despite the fact that this solution is suitable for lowering the rank, it does not work when we have unknowns in Y (due to several local minimas problem). In addition, one may think about other loss functions instead of SSE (although SSE is convex and has a nice behavior in the optimization problems). For example, in this problem, Hinge loss works fine, specially considering the fact that values in Y are restricted to [math]\displaystyle{ \pm1 }[/math]. Here, hinge loss is defined as:

[math]\displaystyle{ \textrm{Hinge}(X | Y) = \displaystyle \sum_{(i,j) \in S} \max (0, 1 - x_{ij} \cdot y_{ij}) }[/math] (where [math]\displaystyle{ \, S = \{(i, j) | y_{ij} }[/math] is known[math]\displaystyle{ \} }[/math])

Prediction Using Matrix Factorization

Another way to tackle this problem is to use Matrix Factorization. In matrix factorization one tries to find a prediction [math]\displaystyle{ X = UV^T }[/math] such that the rank of each factor (U and V) is low. In addition to this U and V are meaningful in this problem; the ith row of U is an indicator of the importance (weights) of the features for ith user, therefore ith row of V is the characterization of the ith movie based on its features. Here, lowering the rank of X is equal to lowering the rank of its factors, but unfortunately the main problem in this case is that, rank optimization problem is not convex.

A short discussion on Loss Function for classification

Collaborative prediction, as described in the problem definition, involves classifying each entry of a matrix into either 1 or -1. Consider the notation in the last paragraph and suppose that we are to predict the jth row of the matrix X. Suppose further that the matrix U is fixed, then the classification problem boils down to finding the jth row of V which gives the optimal prediction. Denoting the jth row of V by vector [math]\displaystyle{ v }[/math], the matrix entry [math]\displaystyle{ X_{ij} }[/math] by real number [math]\displaystyle{ x }[/math], and the ith row of U by vector [math]\displaystyle{ u }[/math], then the classification problem can be rephrased as finding the weight vector [math]\displaystyle{ v }[/math] and predict the value of [math]\displaystyle{ x }[/math] (which is either 1 or -1) by the dot production function [math]\displaystyle{ f(v)=vu^T }[/math]. Usually, we specify a threshold value (for example, take the threshold as 0) and classify [math]\displaystyle{ x }[/math] into 1 or -1 as follows: if [math]\displaystyle{ f(v) }[/math] is greater than the threshold, then classify [math]\displaystyle{ x }[/math] as 1; otherwise classify [math]\displaystyle{ x }[/math] as -1.

There are many ways to measure how well the above classification scheme performs(in the training stage). The most natural way is to calculate the number of incorrect classification: when the true value is 1 but the classification scheme gives -1; or vice versa. However, this very natural performance measure gives rise to a very intractable optimization problem. To make the optimization more tractable, several popular proxy loss functions are used to replace the above measurement in measuring the performance of the classification scheme. These proxy loss functions include the log loss function, the squared loss function and the hinge loss function.

A comparison of these three proxy loss functions is available at http://hunch.net/?p=547. This article suggests not using log loss since the optimisation of log los can be unstable.

Frobenius Norm

Instead of using the rank of each factor, we can use Frobenius Norm of each factor to address this problem; in fact, this norm is closely related to the rank of matrix. Moreover, in this way the optimization problem will be convex, and one may get the benefit of using common optimization techniques. The Frobenius norm is defined as:

[math]\displaystyle{ \|X\|_{F}^2 = \sum x_{ij}^2 = Tr(XX^T) = \sum \lambda_i^2 }[/math] (where [math]\displaystyle{ Tr() }[/math] is the Trace Function and [math]\displaystyle{ \lambda }[/math]s are singular values of X)

Notion of Margin in Collaborative Filtering

Assume one of the factors is fixed and the goal is to predict the other factor. This is the famous problem of linear prediction or SVM. Assume U is fixed, so predicting each column of X is in fact, solving a SVM for U to find a row in V. Recall that in SVM, to maximize the margin the norm of the linear separator [math]\displaystyle{ \|\beta\|^2 }[/math] should be minimized. Therefore, predicting Y with the maximum margin is equivalent to minimizing the norm of the factor V or [math]\displaystyle{ \|V\|_{F}^2 }[/math]. However, the problem here is to predict both U and V together (which is called collaborative filtering), but at each step, one factor can assumed to be fixed, so minimizing the norm of the other factor gives the maximum margin for this prediction.

The Optimization Problem and Trace Norm

So far it has been shown that the optimization problem is to find the factors with minimum norm such that the prediction has a low loss:

[math]\displaystyle{ \displaystyle \min_{X = UV^T} (\|U\|_{F}^2 + \|V\|_{F}^2) + c \cdot \textrm{Hinge}(X|Y) }[/math]

This optimization problem is difficult because both objective function and constraints are not linear. The following lemma helps to change the shape of the optimization problem in a way that it becomes easier to solve:

Lemma 1:

[math]\displaystyle{ \displaystyle \min_{X = UV^T} \frac{1}{2}(\|U\|_{F}^2 + \|V\|_{F}^2) =

\displaystyle \min_{X = UV^T} (\|U\|_{F} \cdot \|V\|_{F}) = \|X\|_{T} }[/math]

where [math]\displaystyle{ \|X\|_{T} }[/math] is the Trace Norm of X and is defined as:

[math]\displaystyle{ \|X\|_{T} = \sum |\lambda_i| = Tr(\sqrt{XX^T}) }[/math]

In addition, by using SVD of [math]\displaystyle{ X = A \Sigma B^T }[/math], one can see that both [math]\displaystyle{ U = A \sqrt{\Sigma} }[/math] and

[math]\displaystyle{ V = B\sqrt{\Sigma} }[/math] satisfy this lemma.

Based on the lemma 1 the optimization problem can be reformulated as:

[math]\displaystyle{ \displaystyle \min_X \|X\|_{T} + c \cdot \textrm{Hinge}(X|Y) }[/math]

Relation Between Rank And Trace Norm

In the literature, the notion of trace norm has been widely used instead of dealing with the rank of matrices. The next theorem explains the relation between the rank and the trace norm:

Theorem 1:

The convex envelope (smallest convex bounding function) of the rank function, on matrices with unit spectral norm, is the trace norm function.

Spectral Norm of a matrix is the absolute value of its largest eigenvalue.

In addition it can be easily be shown that the relation between the norm, trace norm and rank of a matrix is as follows:

[math]\displaystyle{ \forall X: \|X\|_{F} \leq \|X\|_{T} \leq \sqrt{Rank(X)} \cdot \|X\|_{F} }[/math]

Based on this relation, and the fact that the trace norm is a convex function, it can be shown:

[math]\displaystyle{ \{X| \|X\|_{F} \leq \alpha\} = conv \{uv^T| u \in \Re^n , v \in \Re^m , |u| = |v| = \alpha\} }[/math]

which shows that the set of matrices with a bounded trace norm is Convex; also it seems that this set has the lowest rank matrices on its boundary. Thus, this optimization problem is in fact the process of searching in a convex set to optimize a convex function.

Soft Margin Optimization

As it is possible in SVM that no linear separator can be found to satisfy the constraints (classes are not linearly separable), there exists some Y for which no factorization preserves all the knowledge from Y. So the same solution as soft-margin SVM can be used here, and one can add slack variables to the loss function:

[math]\displaystyle{ \displaystyle \min_X \|X\|_{T} + c \cdot \displaystyle \sum_{(i,j) \in S} \xi_{ij} }[/math]

s.t. [math]\displaystyle{ \forall (i,j) \in S: \xi_{ij} \geq 0 }[/math] , [math]\displaystyle{ x_{ij} \cdot y_{ij} \geq 1 - \xi_{ij} }[/math]

Using Semi-Definite Programming

Now this optimization problem is easy to understand, and also it is convex with linear constrains. To solve, it should be reformulated to one of the known convex optimization problems. Next lemma shows how Semi-Definite Programming can be used to solve this optimization:

Lemma 2:

[math]\displaystyle{ \forall X \in \Re^{n \cdot m} }[/math] and [math]\displaystyle{ t \in \Re : \|X\|_{T} \leq t \Longleftrightarrow \exists A, B }[/math] s.t.

[math]\displaystyle{ M = \left( \begin{array}{cc} A & X \\ X^T & B \\ \end{array} \right) \succeq 0 }[/math] and [math]\displaystyle{ Tr(M) \leq 2t }[/math]

Therefore the last optimization problem can be formulated as a linear, convex SDP optimization problem:

[math]\displaystyle{ \displaystyle \min_{M \succeq 0} Tr(M) + c \cdot \displaystyle \sum_{(i,j) \in S} \xi_{ij} }[/math]

s.t. [math]\displaystyle{ M = \left( \begin{array}{cc} A & X \\ X^T & B \\ \end{array} \right)\succeq 0 }[/math]

and [math]\displaystyle{ \forall (i,j) \in S: \xi_{ij} \geq 0 }[/math] , [math]\displaystyle{ x_{ij} \cdot y_{ij} \geq 1 - \xi_{ij} }[/math]

which has a dual form with a simple structure. Also the prediction can be done using a solution to the dual problem directly.

Experiments

Preliminary experiments was performed on a subset of the 100K MovieLens Dataset, consisting of the 100 users and 100 movies with the most ratings. To do this, we used CSDP to solve the resulting SDPs. The ratings are on a discrete scale of one through five, and we experimented with both generalizations of the hinge loss above, allowing per-user thresholds. As the "base line" learners, we used WLRA and K-Medians.

The the data was split into four sets. For each of the these four test sets, we used the remaining sets to calculate a 3-fold cross-validation (CV) error for each method (WLRA, K-medians, trace norm and max-norm MMMF with immediate-threshold and allthreshold

hinge loss) using a range of parameters (rank for WLRA, number of centers for K-medians, slack cost for MMMF). For each of the four splits, we selected the two MMMF learners with lowest CV ZOE andMAE and the two Baseline learners with lowest CV ZOE and MAE, and measured their error on the held-out test data.

Table 1 lists these CV and test errors, and the average test error across all four test sets. On average and on three of the four test sets, MMMF achieves lower MAE than the Baseline learners; on all four of the test sets, MMMF achieves lower ZOE than the Baseline learners.

Limitation

It is unrealistic that observed entries are assumed to be uniformly sampled. For example, Users tend to rate items they like. In fact, allowing an uncontrolled sampling distribution would guarantee low error on items the user likes, but not on items he would really like based on our prediction.